- •QoS Overview

- •“Do I Know This Already?” Quiz

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss Questions

- •Foundation Topics

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss

- •Bandwidth

- •The clock rate Command Versus the bandwidth Command

- •QoS Tools That Affect Bandwidth

- •Delay

- •Serialization Delay

- •Propagation Delay

- •Queuing Delay

- •Forwarding Delay

- •Shaping Delay

- •Network Delay

- •Delay Summary

- •QoS Tools That Affect Delay

- •Jitter

- •QoS Tools That Affect Jitter

- •Loss

- •QoS Tools That Affect Loss

- •Summary: QoS Characteristics: Bandwidth, Delay, Jitter, and Loss

- •Voice Basics

- •Voice Bandwidth Considerations

- •Voice Delay Considerations

- •Voice Jitter Considerations

- •Voice Loss Considerations

- •Video Basics

- •Video Bandwidth Considerations

- •Video Delay Considerations

- •Video Jitter Considerations

- •Video Loss Considerations

- •Comparing Voice and Video: Summary

- •IP Data Basics

- •Data Bandwidth Considerations

- •Data Delay Considerations

- •Data Jitter Considerations

- •Data Loss Considerations

- •Comparing Voice, Video, and Data: Summary

- •Foundation Summary

- •QoS Tools and Architectures

- •“Do I Know This Already?” Quiz

- •QoS Tools Questions

- •Differentiated Services Questions

- •Integrated Services Questions

- •Foundation Topics

- •Introduction to IOS QoS Tools

- •Queuing

- •Queuing Tools

- •Shaping and Policing

- •Shaping and Policing Tools

- •Congestion Avoidance

- •Congestion-Avoidance Tools

- •Call Admission Control and RSVP

- •CAC Tools

- •Management Tools

- •Summary

- •The Good-Old Common Sense QoS Model

- •GOCS Flow-Based QoS

- •GOCS Class-Based QoS

- •The Differentiated Services QoS Model

- •DiffServ Per-Hop Behaviors

- •The Class Selector PHB and DSCP Values

- •The Assured Forwarding PHB and DSCP Values

- •The Expedited Forwarding PHB and DSCP Values

- •The Integrated Services QoS Model

- •Foundation Summary

- •“Do I Know This Already?” Quiz Questions

- •CAR, PBR, and CB Marking Questions

- •Foundation Topics

- •Marking

- •IP Header QoS Fields: Precedence and DSCP

- •LAN Class of Service (CoS)

- •Other Marking Fields

- •Summary of Marking Fields

- •Class-Based Marking (CB Marking)

- •Network-Based Application Recognition (NBAR)

- •CB Marking show Commands

- •CB Marking Summary

- •Committed Access Rate (CAR)

- •CAR Marking Summary

- •Policy-Based Routing (PBR)

- •PBR Marking Summary

- •VoIP Dial Peer

- •VoIP Dial-Peer Summary

- •Foundation Summary

- •Congestion Management

- •“Do I Know This Already?” Quiz

- •Queuing Concepts Questions

- •WFQ and IP RTP Priority Questions

- •CBWFQ and LLQ Questions

- •Comparing Queuing Options Questions

- •Foundation Topics

- •Queuing Concepts

- •Output Queues, TX Rings, and TX Queues

- •Queuing on Interfaces Versus Subinterfaces and Virtual Circuits (VCs)

- •Summary of Queuing Concepts

- •Queuing Tools

- •FIFO Queuing

- •Priority Queuing

- •Custom Queuing

- •Weighted Fair Queuing (WFQ)

- •WFQ Scheduler: The Net Effect

- •WFQ Scheduling: The Process

- •WFQ Drop Policy, Number of Queues, and Queue Lengths

- •WFQ Summary

- •Class-Based WFQ (CBWFQ)

- •CBWFQ Summary

- •Low Latency Queuing (LLQ)

- •LLQ with More Than One Priority Queue

- •IP RTP Priority

- •Summary of Queuing Tool Features

- •Foundation Summary

- •Conceptual Questions

- •Priority Queuing and Custom Queuing

- •CBWFQ, LLQ, IP RTP Priority

- •Comparing Queuing Tool Options

- •“Do I Know This Already?” Quiz

- •Shaping and Policing Concepts Questions

- •Policing with CAR and CB Policer Questions

- •Shaping with FRTS, GTS, DTS, and CB Shaping

- •Foundation Topics

- •When and Where to Use Shaping and Policing

- •How Shaping Works

- •Where to Shape: Interfaces, Subinterfaces, and VCs

- •How Policing Works

- •CAR Internals

- •CB Policing Internals

- •Policing, but Not Discarding

- •Foundation Summary

- •Shaping and Policing Concepts

- •“Do I Know This Already?” Quiz

- •Congestion-Avoidance Concepts and RED Questions

- •WRED Questions

- •FRED Questions

- •Foundation Topics

- •TCP and UDP Reactions to Packet Loss

- •Tail Drop, Global Synchronization, and TCP Starvation

- •Random Early Detection (RED)

- •Weighted RED (WRED)

- •How WRED Weights Packets

- •WRED and Queuing

- •WRED Summary

- •Flow-Based WRED (FRED)

- •Foundation Summary

- •Congestion-Avoidance Concepts and Random Early Detection (RED)

- •Weighted RED (WRED)

- •Flow-Based WRED (FRED)

- •“Do I Know This Already?” Quiz

- •Compression Questions

- •Link Fragmentation and Interleave Questions

- •Foundation Topics

- •Payload and Header Compression

- •Payload Compression

- •Header Compression

- •Link Fragmentation and Interleaving

- •Multilink PPP LFI

- •Maximum Serialization Delay and Optimum Fragment Sizes

- •Frame Relay LFI Using FRF.12

- •Choosing Fragment Sizes for Frame Relay

- •Fragmentation with More Than One VC on a Single Access Link

- •FRF.11-C and FRF.12 Comparison

- •Foundation Summary

- •Compression Tools

- •LFI Tools

- •“Do I Know This Already?” Quiz

- •Foundation Topics

- •Call Admission Control Overview

- •Call Rerouting Alternatives

- •Bandwidth Engineering

- •CAC Mechanisms

- •CAC Mechanism Evaluation Criteria

- •Local Voice CAC

- •Physical DS0 Limitation

- •Max-Connections

- •Voice over Frame Relay—Voice Bandwidth

- •Trunk Conditioning

- •Local Voice Busyout

- •Measurement-Based Voice CAC

- •Service Assurance Agents

- •SAA Probes Versus Pings

- •SAA Service

- •Calculated Planning Impairment Factor

- •Advanced Voice Busyout

- •PSTN Fallback

- •SAA Probes Used for PSTN Fallback

- •IP Destination Caching

- •SAA Probe Format

- •PSTN Fallback Scalability

- •PSTN Fallback Summary

- •Resource-Based CAC

- •Resource Availability Indication

- •Gateway Calculation of Resources

- •RAI in Service Provider Networks

- •RAI in Enterprise Networks

- •RAI Operation

- •RAI Platform Support

- •Cisco CallManager Resource-Based CAC

- •Location-Based CAC Operation

- •Locations and Regions

- •Calculation of Resources

- •Automatic Alternate Routing

- •Location-Based CAC Summary

- •Gatekeeper Zone Bandwidth

- •Gatekeeper Zone Bandwidth Operation

- •Single-Zone Topology

- •Multizone Topology

- •Zone-per-Gateway Design

- •Gatekeeper in CallManager Networks

- •Zone Bandwidth Calculation

- •Gatekeeper Zone Bandwidth Summary

- •Integrated Services / Resource Reservation Protocol

- •RSVP Levels of Service

- •RSVP Operation

- •RSVP/H.323 Synchronization

- •Bandwidth per Codec

- •Subnet Bandwidth Management

- •Monitoring and Troubleshooting RSVP

- •RSVP CAC Summary

- •Foundation Summary

- •Call Admission Control Concepts

- •Local-Based CAC

- •Measurement-Based CAC

- •Resources-Based CAC

- •“Do I Know This Already?” Quiz

- •QoS Management Tools Questions

- •QoS Design Questions

- •Foundation Topics

- •QoS Management Tools

- •QoS Device Manager

- •QoS Policy Manager

- •Service Assurance Agent

- •Internetwork Performance Monitor

- •Service Management Solution

- •QoS Management Tool Summary

- •QoS Design for the Cisco QoS Exams

- •Four-Step QoS Design Process

- •Step 1: Determine Customer Priorities/QoS Policy

- •Step 2: Characterize the Network

- •Step 3: Implement the Policy

- •Step 4: Monitor the Network

- •QoS Design Guidelines for Voice and Video

- •Voice and Video: Bandwidth, Delay, Jitter, and Loss Requirements

- •Voice and Video QoS Design Recommendations

- •Foundation Summary

- •QoS Management

- •QoS Design

- •“Do I Know This Already?” Quiz

- •Foundation Topics

- •The Need for QoS on the LAN

- •Layer 2 Queues

- •Drop Thresholds

- •Trust Boundaries

- •Cisco Catalyst Switch QoS Features

- •Catalyst 6500 QoS Features

- •Supervisor and Switching Engine

- •Policy Feature Card

- •Ethernet Interfaces

- •QoS Flow on the Catalyst 6500

- •Ingress Queue Scheduling

- •Layer 2 Switching Engine QoS Frame Flow

- •Layer 3 Switching Engine QoS Packet Flow

- •Egress Queue Scheduling

- •Catalyst 6500 QoS Summary

- •Cisco Catalyst 4500/4000 QoS Features

- •Supervisor Engine I and II

- •Supervisor Engine III and IV

- •Cisco Catalyst 3550 QoS Features

- •Cisco Catalyst 3524 QoS Features

- •CoS-to-Egress Queue Mapping for the Catalyst OS Switch

- •Layer-2-to-Layer 3 Mapping

- •Connecting a Catalyst OS Switch to WAN Segments

- •Displaying QoS Settings for the Catalyst OS Switch

- •Enabling QoS for the Catalyst IOS Switch

- •Enabling Priority Queuing for the Catalyst IOS Switch

- •CoS-to-Egress Queue Mapping for the Catalyst IOS Switch

- •Layer 2-to-Layer 3 Mapping

- •Connecting a Catalyst IOS Switch to Distribution Switches or WAN Segments

- •Displaying QoS Settings for the Catalyst IOS Switch

- •Foundation Summary

- •LAN QoS Concepts

- •Catalyst 6500 Series of Switches

- •Catalyst 4500/4000 Series of Switches

- •Catalyst 3550/3524 Series of Switches

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss

- •QoS Tools

- •Differentiated Services

- •Integrated Services

- •CAR, PBR, and CB Marking

- •Queuing Concepts

- •WFQ and IP RTP Priority

- •CBWFQ and LLQ

- •Comparing Queuing Options

- •Conceptual Questions

- •Priority Queuing and Custom Queuing

- •CBWFQ, LLQ, IP RTP Priority

- •Comparing Queuing Tool Options

- •Shaping and Policing Concepts

- •Policing with CAR and CB Policer

- •Shaping with FRTS, GTS, DTS, and CB Shaping

- •Shaping and Policing Concepts

- •Congestion-Avoidance Concepts and RED

- •WRED

- •FRED

- •Congestion-Avoidance Concepts and Random Early Detection (RED)

- •Weighted RED (WRED)

- •Flow-Based WRED (FRED)

- •Compression

- •Link Fragmentation and Interleave

- •Compression Tools

- •LFI Tools

- •Call Admission Control Concepts

- •Local-Based CAC

- •Measurement-Based CAC

- •Resources-Based CAC

- •QoS Management Tools

- •QoS Design

- •QoS Management

- •QoS Design

- •LAN QoS Concepts

- •Catalyst 6500 Series of Switches

- •Catalyst 4500/4000 Series of Switches

- •Catalyst 3550/3524 Series of Switches

- •Foundation Topics

- •QPPB Route Marking: Step 1

- •QPPB Per-Packet Marking: Step 2

- •QPPB: The Hidden Details

- •QPPB Summary

- •Flow-Based dWFQ

- •ToS-Based dWFQ

- •Distributed QoS Group–Based WFQ

- •Summary: dWFQ Options

432 Chapter 6: Congestion Avoidance Through Drop Policies

Tail Drop, Global Synchronization, and TCP Starvation

Tail drop occurs when a packet needs to be added to a queue, but the queue is full. Yes, tail drop is indeed that simple. However, tail drop results in some interesting behavior in real networks, particularly when most traffic is TCP based, but with some UDP traffic. Of course, the Internet today delivers mostly TCP traffic, because web traffic uses HTTP, and HTTP uses TCP.

The preceding section described the behavior of a single TCP connection after a single packet loss. Now imagine an Internet router, with 100,000 or more TCP connections running their traffic out of a high-speed interface. The amount of traffic in the combined TCP connections finally exceeds the output line rate, causing the output queue on the interface to fill, which in turn causes tail drop.

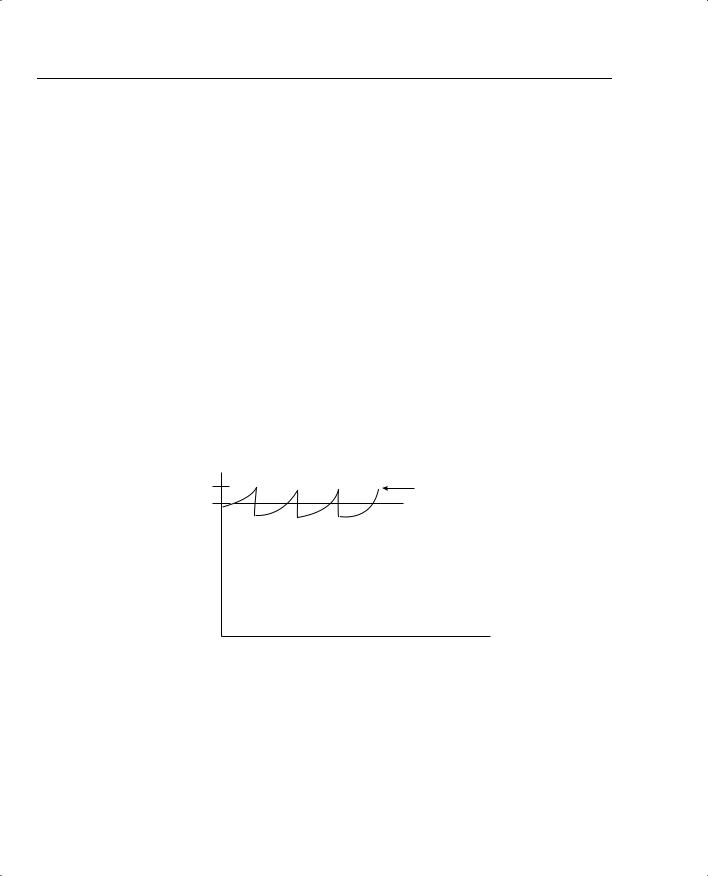

What happens to those 100,000 TCP connections after many of them have at least one packet dropped? The TCP connections reduce their CWND; the congestion in the queue abates; the various CWND values increase with slow start, and then with congestion avoidance. Eventually, however, as the CWND values of the collective TCP connections approach the previous CWND value, the congestion occurs again, and the process is repeated. When a large number of TCP connections experience near-simultaneous packet loss, their CWND values shrink and grow at about the same time, which causes the TCP connections to synchronize. The result is called global synchronization. The graph in Figure 6-3 shows this behavior.

Figure 6-3 Graph of Global Synchronization (plot of bit rate over time, showing the line rate, the actual bit rate, and the average rate)

The graph shows the results of global synchronization. The router never fully utilizes the bandwidth on the link because the offered rate keeps dropping as a result of synchronization. Note that the overall rate does not drop to almost nothing because not all TCP connections happen to have packets drop when tail drop occurs, and some traffic uses UDP, which does not slow down in reaction to lost packets.
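The sawtooth pattern described above can be illustrated with a toy model. The following Python sketch is only an illustration under simplifying assumptions, not a model of real TCP: every flow grows its rate by a fixed, hypothetical increment each round, and when the combined offered rate exceeds the line rate, all flows halve their rate at the same moment. The function name, the flow count, and the rates are invented for the example.

```python
LINE_RATE = 1000.0  # hypothetical line rate, in arbitrary units


def simulate_tail_drop(num_flows=100, line_rate=LINE_RATE, rounds=200):
    """Toy model of global synchronization under tail drop.

    Each flow grows additively every round. When the combined offered rate
    exceeds the line rate, every flow halves its rate in the same round,
    a crude stand-in for many TCP senders lowering CWND together.
    """
    rates = [line_rate / num_flows / 2] * num_flows  # start at half the fair share
    increment = line_rate / num_flows / 50           # assumed growth per round
    history = []
    for _ in range(rounds):
        offered = sum(rates)
        # The link can never carry more than the line rate.
        history.append(min(offered, line_rate))
        if offered > line_rate:
            rates = [r / 2 for r in rates]           # synchronized back-off
        else:
            rates = [r + increment for r in rates]   # synchronized growth
    return history


history = simulate_tail_drop()
average_utilization = sum(history) / len(history) / LINE_RATE
print(f"average utilization: {average_utilization:.2f}")
```

Because all flows back off together in this model, the carried rate repeatedly falls to about half the line rate and climbs back, so the average stays well below the line rate.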

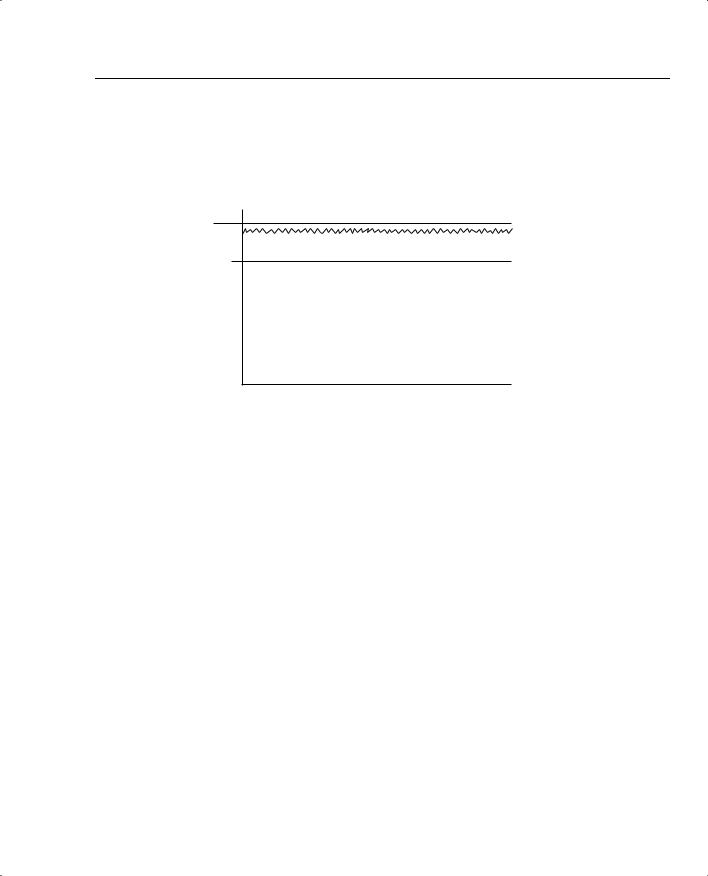

Weighted RED (WRED), when applied to the interface that was tail dropping packets, significantly reduces global synchronization. WRED allows the average output rates to approach line rate, with even more significant throughput improvements, because avoiding congestion and tail drops decreases the overall number of lost packets. Figure 6-4 shows an example graph of the same interface, after WRED was applied.

Figure 6-4 Graph of Traffic Rates After the Application of WRED (plot showing the line rate, the actual rate, the actual rate with WRED, and the average rate before WRED)

Another problem can occur if UDP traffic competes with TCP for bandwidth and queue space. Although UDP traffic consumes a much lower percentage of Internet bandwidth than TCP does, UDP can get a disproportionate amount of bandwidth as a result of TCP’s reaction to packet loss. Imagine that on the same Internet router, 20 percent of the offered packets were UDP, and 80 percent TCP. Tail drop causes some TCP and UDP packets to be dropped; however, because the TCP senders slow down, and the UDP senders do not, additional UDP streams from the UDP senders can consume more and more bandwidth during congestion.

Taking the same concept a little deeper, imagine that several people crank up some UDP-based audio or video streaming applications, and that traffic also happens to need to exit this same congested interface. The interface output queue on this Internet router could fill with UDP packets. If a few high-bandwidth UDP applications fill the queue, a larger percentage of TCP packets might get tail dropped—resulting in further reduction of TCP windows, and less TCP traffic relative to the amount of UDP traffic.

The term “TCP starvation” describes the phenomenon of the output queue being filled with large volumes of UDP traffic, causing TCP connections to have packets tail dropped. Tail drop does not distinguish between packets in any way, including whether they are TCP or UDP, or whether the flow uses a lot of bandwidth or just a little bandwidth. TCP connections can be starved for bandwidth because the UDP flows behave poorly in terms of congestion control. Flow-Based WRED (FRED), which is also based on RED, specifically addresses the issues related to TCP starvation, as discussed later in the chapter.
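A rough way to see TCP starvation is to compare the share of the link that TCP ends up with as the UDP load grows. The Python sketch below is a toy model under stated assumptions: one aggregate TCP rate that grows by a hypothetical fixed step and halves after any round with drops, a constant UDP rate that never reacts, and tail drop that removes the excess in proportion to what each protocol offered. None of the names or numbers come from IOS.

```python
def tcp_share(udp_rate, line_rate=1000.0, rounds=500):
    """Return the fraction of carried traffic that is TCP in a toy model.

    TCP backs off (halves) whenever the link is oversubscribed;
    UDP keeps sending at the same rate no matter what is dropped.
    """
    tcp_rate = line_rate / 2  # assumed starting point for the TCP aggregate
    carried_tcp = 0.0
    carried_total = 0.0
    for _ in range(rounds):
        offered = tcp_rate + udp_rate
        # Tail drop does not distinguish TCP from UDP, so both lose
        # the same proportion of their offered traffic.
        scale = min(1.0, line_rate / offered)
        carried_tcp += tcp_rate * scale
        carried_total += offered * scale
        if offered > line_rate:
            tcp_rate /= 2      # TCP senders slow down after loss
        else:
            tcp_rate += 10.0   # assumed additive growth per round
    return carried_tcp / carried_total


for udp in (200.0, 500.0, 800.0):
    print(f"UDP offered {udp:.0f}: TCP share {tcp_share(udp):.2f}")
```

In this model the TCP share shrinks as the constant UDP load rises, because only the TCP side ever reduces its sending rate.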


Random Early Detection (RED)

Random Early Detection (RED) reduces the congestion in queues by dropping packets so that some of the TCP connections temporarily send fewer packets into the network. Instead of waiting until a queue fills, causing a large number of tail drops, RED purposefully drops a percentage of packets before a queue fills. This action attempts to make the computers sending the traffic reduce the offered load that is sent into the network.

The name “Random Early Detection” itself describes the overall operation of the algorithm. RED randomly picks the packets that are dropped after the decision to drop some packets has been made. RED detects queue congestion early, before the queue actually fills, thereby avoiding tail drops and synchronization. In short, RED discards some randomly picked packets early, before congestion gets really bad and the queue fills.

NOTE IOS supports two RED-based tools: Weighted RED (WRED) and Flow-Based WRED (FRED). RED itself is not supported in IOS.

RED logic contains two main parts. RED must first detect when congestion occurs; in other words, RED must choose under what conditions it should discard packets. When RED decides to discard packets, it must decide how many to discard.

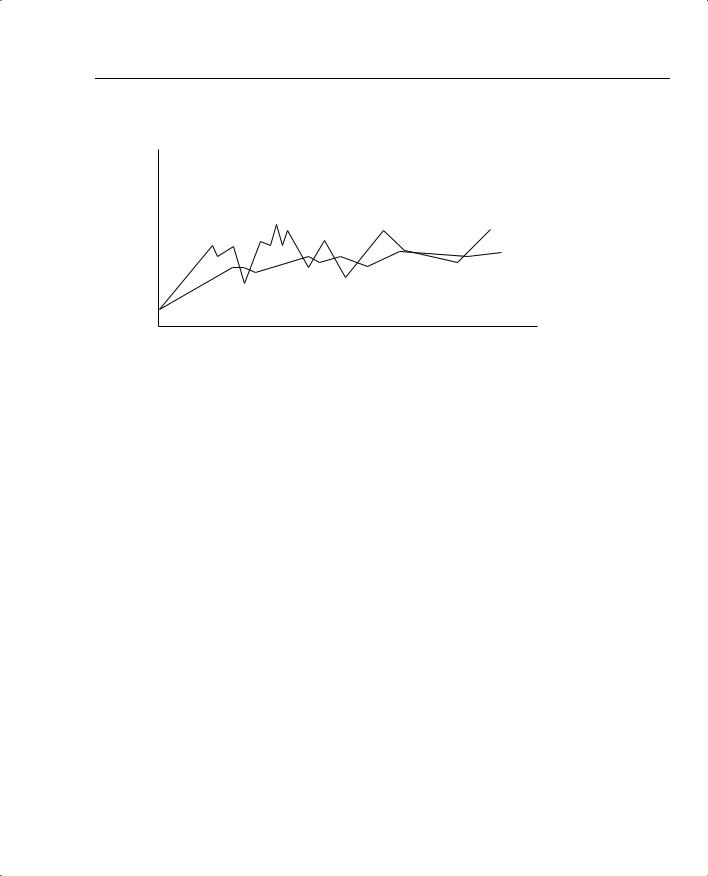

First, RED measures the average queue depth of the queue in question. RED calculates the average depth, and then decides whether congestion is occurring based on the average depth. RED uses the average depth, and not the actual queue depth, because the actual queue depth will most likely change much more quickly than the average depth. Because RED wants to avoid the effects of synchronization, it needs to act in a balanced fashion, not a jerky, sporadic fashion. Figure 6-5 shows a graph of the actual queue depth for a particular queue, compared with the average queue depth.

As seen in the graph, the calculated average queue depth changes more slowly than does the actual queue depth. RED uses the following algorithm when calculating the average queue depth:

$$\text{New average} = \bigl(\text{Old\_average} \times (1 - 2^{-n})\bigr) + \bigl(\text{Current\_Q\_depth} \times 2^{-n}\bigr)$$

For you test takers out there, do not worry about memorizing the formula, but focus on the idea. WRED uses this algorithm, with a default for n of 9. This makes the equation read as follows:

$$\text{New average} = (\text{Old\_average} \times 0.998) + (\text{Current\_Q\_depth} \times 0.002)$$


Figure 6-5 Graph of Actual Queue Depth Versus Average Queue Depth (plot of the actual queue depth and the average queue depth over time)

In other words, the current queue depth accounts for only about 0.2 percent of the new average each time it is calculated. Therefore, the average changes slowly, which helps RED prevent overreaction to changes in the queue depth. When configuring WRED and FRED, you can change the value of n in this formula by setting the exponential weighting constant parameter. By making the exponential weighting constant smaller, you make the average change more quickly; by making it larger, the average changes more slowly.
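The effect of the exponential weighting constant can be checked with a few lines of Python. This is a minimal sketch of the averaging formula only; the function names, the 40-packet burst, and the choice of n = 5 for comparison are assumptions made for illustration.

```python
def new_average(old_average, current_q_depth, n=9):
    """Apply the averaging formula once, using a weight of 2 to the power -n."""
    weight = 2 ** -n  # about 0.002 when n is 9
    return old_average * (1 - weight) + current_q_depth * weight


def track(depths, n=9, start=0.0):
    """Feed a series of actual queue depths through the formula."""
    average = start
    averages = []
    for depth in depths:
        average = new_average(average, depth, n)
        averages.append(average)
    return averages


# Hypothetical burst: the actual queue depth jumps from 0 to 40 packets
# and stays there for 100 consecutive calculations.
burst = [40] * 100
slow = track(burst, n=9)[-1]  # default weighting constant
fast = track(burst, n=5)[-1]  # smaller constant, so the average reacts sooner
print(f"n=9: {slow:.1f} packets, n=5: {fast:.1f} packets")
```

With n = 9 the average has covered only a small part of the jump after 100 calculations, while with n = 5 it is already close to the actual depth, which matches the statement that a smaller constant makes the average change more quickly.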

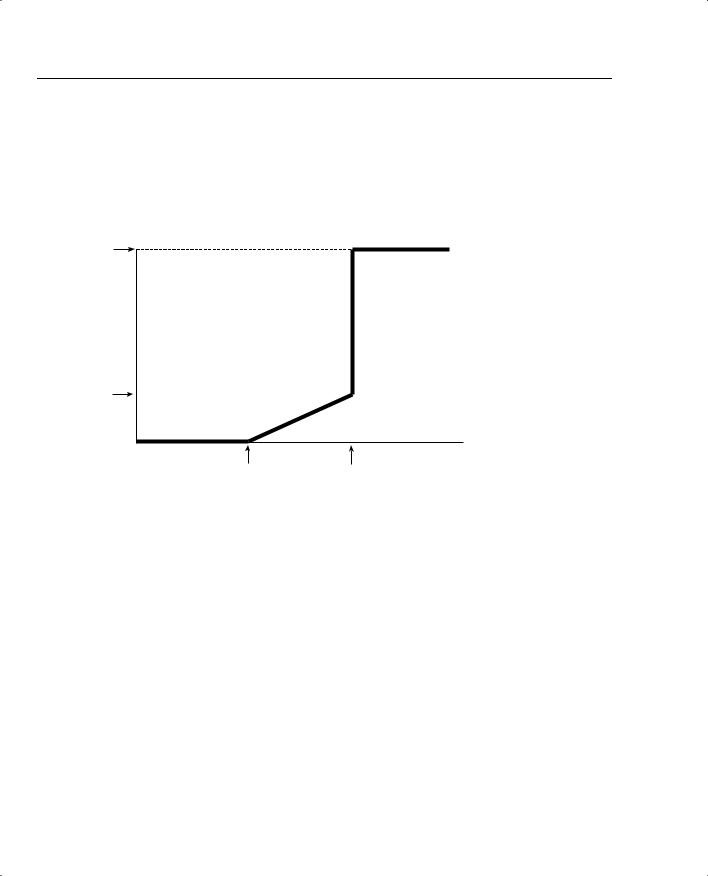

RED decides whether to discard packets by comparing the average queue depth to two thresholds, called the minimum threshold and maximum threshold. Table 6-2 describes the overall logic of when RED discards packets, as illustrated in Figure 6-6.

Table 6-2 Three Categories of When RED Will Discard Packets and How Many

| Average Queue Depth Versus Thresholds | Action |
|---|---|
| Average < minimum threshold | No packets dropped. |
| Minimum threshold < average depth < maximum threshold | A percentage of packets dropped. Drop percentage increases from 0 to a maximum percent as the average depth moves from the minimum threshold to the maximum. |
| Average depth > maximum threshold | All new packets discarded, similar to tail dropping. |

As seen in Table 6-2 and Figure 6-6, RED does not discard packets when the average queue depth falls below the minimum threshold. When the average depth rises above the maximum threshold, RED discards all packets. In between the two thresholds, however, RED discards a percentage of packets, with the percentage growing linearly as the average queue depth grows. The core concept behind RED becomes more obvious if you notice that the maximum percentage of packets discarded is still much less than discarding all packets. Once again, RED wants to discard some packets, but not all packets. As congestion increases, RED discards a higher percentage of packets. Eventually, the congestion can increase to the point that RED discards all packets.

Figure 6-6 RED Discarding Logic Using Average Depth, Minimum Threshold, and Maximum Threshold (plot of discard percentage against average queue depth, marking the minimum threshold, the maximum threshold, the maximum discard percentage, and 100%)

You can set the maximum percentage of packets discarded by WRED by setting the mark probability denominator (MPD) setting in IOS. IOS calculates the maximum percentage using the formula 1/MPD. For instance, an MPD of 10 yields a calculated value of 1/10, meaning the maximum discard rate is 10 percent.
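The discard logic and the 1/MPD calculation can be put together in a short Python sketch. This is an illustration of the logic as described here, not IOS code: the function names and the threshold values of 20 and 40 packets are hypothetical, and the behavior when the average depth exactly equals a threshold is an assumption, because the text covers only the strictly-less-than and strictly-greater-than cases.

```python
import random


def discard_probability(avg_depth, min_threshold, max_threshold, mpd):
    """Return the fraction of packets discarded for a given average depth."""
    if avg_depth < min_threshold:
        return 0.0                      # no packets dropped
    if avg_depth > max_threshold:
        return 1.0                      # all new packets discarded
    max_percent = 1.0 / mpd             # for example, an MPD of 10 gives 10 percent
    # Linear growth from 0 at the minimum threshold to 1/MPD at the maximum.
    # Treating depths equal to a threshold as "in between" is an assumption.
    return max_percent * (avg_depth - min_threshold) / (max_threshold - min_threshold)


def should_discard(avg_depth, min_threshold, max_threshold, mpd, rng=random):
    """Randomly pick whether this packet is discarded."""
    return rng.random() < discard_probability(avg_depth, min_threshold, max_threshold, mpd)


# Hypothetical thresholds of 20 and 40 packets with an MPD of 10.
for depth in (10, 30, 40, 50):
    print(depth, discard_probability(depth, 20, 40, 10))
```

Note the jump in this sketch from 1/MPD at the maximum threshold to 100 percent just above it, which corresponds to the vertical step in Figure 6-6.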

Table 6-3 summarizes some of the key terms related to RED.

Table 6-3 RED Terminology

| Term | Meaning |
|---|---|
| Actual queue depth | The actual number of packets in a queue at a particular point in time. |
| Average queue depth | Calculated measurement based on the actual queue depth and the previous average. Designed to adjust slowly to the rapid changes of the actual queue depth. |
| Minimum threshold | RED compares this setting to the average queue depth to decide whether packets should be discarded. No packets are discarded if the average queue depth falls below this minimum threshold. |
| Maximum threshold | RED compares this setting to the average queue depth to decide whether packets should be discarded. All packets are discarded if the average queue depth rises above this maximum threshold. |