- •QoS Overview

- •“Do I Know This Already?” Quiz

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss Questions

- •Foundation Topics

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss

- •Bandwidth

- •The clock rate Command Versus the bandwidth Command

- •QoS Tools That Affect Bandwidth

- •Delay

- •Serialization Delay

- •Propagation Delay

- •Queuing Delay

- •Forwarding Delay

- •Shaping Delay

- •Network Delay

- •Delay Summary

- •QoS Tools That Affect Delay

- •Jitter

- •QoS Tools That Affect Jitter

- •Loss

- •QoS Tools That Affect Loss

- •Summary: QoS Characteristics: Bandwidth, Delay, Jitter, and Loss

- •Voice Basics

- •Voice Bandwidth Considerations

- •Voice Delay Considerations

- •Voice Jitter Considerations

- •Voice Loss Considerations

- •Video Basics

- •Video Bandwidth Considerations

- •Video Delay Considerations

- •Video Jitter Considerations

- •Video Loss Considerations

- •Comparing Voice and Video: Summary

- •IP Data Basics

- •Data Bandwidth Considerations

- •Data Delay Considerations

- •Data Jitter Considerations

- •Data Loss Considerations

- •Comparing Voice, Video, and Data: Summary

- •Foundation Summary

- •QoS Tools and Architectures

- •“Do I Know This Already?” Quiz

- •QoS Tools Questions

- •Differentiated Services Questions

- •Integrated Services Questions

- •Foundation Topics

- •Introduction to IOS QoS Tools

- •Queuing

- •Queuing Tools

- •Shaping and Policing

- •Shaping and Policing Tools

- •Congestion Avoidance

- •Congestion-Avoidance Tools

- •Call Admission Control and RSVP

- •CAC Tools

- •Management Tools

- •Summary

- •The Good-Old Common Sense QoS Model

- •GOCS Flow-Based QoS

- •GOCS Class-Based QoS

- •The Differentiated Services QoS Model

- •DiffServ Per-Hop Behaviors

- •The Class Selector PHB and DSCP Values

- •The Assured Forwarding PHB and DSCP Values

- •The Expedited Forwarding PHB and DSCP Values

- •The Integrated Services QoS Model

- •Foundation Summary

- •“Do I Know This Already?” Quiz Questions

- •CAR, PBR, and CB Marking Questions

- •Foundation Topics

- •Marking

- •IP Header QoS Fields: Precedence and DSCP

- •LAN Class of Service (CoS)

- •Other Marking Fields

- •Summary of Marking Fields

- •Class-Based Marking (CB Marking)

- •Network-Based Application Recognition (NBAR)

- •CB Marking show Commands

- •CB Marking Summary

- •Committed Access Rate (CAR)

- •CAR Marking Summary

- •Policy-Based Routing (PBR)

- •PBR Marking Summary

- •VoIP Dial Peer

- •VoIP Dial-Peer Summary

- •Foundation Summary

- •Congestion Management

- •“Do I Know This Already?” Quiz

- •Queuing Concepts Questions

- •WFQ and IP RTP Priority Questions

- •CBWFQ and LLQ Questions

- •Comparing Queuing Options Questions

- •Foundation Topics

- •Queuing Concepts

- •Output Queues, TX Rings, and TX Queues

- •Queuing on Interfaces Versus Subinterfaces and Virtual Circuits (VCs)

- •Summary of Queuing Concepts

- •Queuing Tools

- •FIFO Queuing

- •Priority Queuing

- •Custom Queuing

- •Weighted Fair Queuing (WFQ)

- •WFQ Scheduler: The Net Effect

- •WFQ Scheduling: The Process

- •WFQ Drop Policy, Number of Queues, and Queue Lengths

- •WFQ Summary

- •Class-Based WFQ (CBWFQ)

- •CBWFQ Summary

- •Low Latency Queuing (LLQ)

- •LLQ with More Than One Priority Queue

- •IP RTP Priority

- •Summary of Queuing Tool Features

- •Foundation Summary

- •Conceptual Questions

- •Priority Queuing and Custom Queuing

- •CBWFQ, LLQ, IP RTP Priority

- •Comparing Queuing Tool Options

- •“Do I Know This Already?” Quiz

- •Shaping and Policing Concepts Questions

- •Policing with CAR and CB Policer Questions

- •Shaping with FRTS, GTS, DTS, and CB Shaping

- •Foundation Topics

- •When and Where to Use Shaping and Policing

- •How Shaping Works

- •Where to Shape: Interfaces, Subinterfaces, and VCs

- •How Policing Works

- •CAR Internals

- •CB Policing Internals

- •Policing, but Not Discarding

- •Foundation Summary

- •Shaping and Policing Concepts

- •“Do I Know This Already?” Quiz

- •Congestion-Avoidance Concepts and RED Questions

- •WRED Questions

- •FRED Questions

- •Foundation Topics

- •TCP and UDP Reactions to Packet Loss

- •Tail Drop, Global Synchronization, and TCP Starvation

- •Random Early Detection (RED)

- •Weighted RED (WRED)

- •How WRED Weights Packets

- •WRED and Queuing

- •WRED Summary

- •Flow-Based WRED (FRED)

- •Foundation Summary

- •Congestion-Avoidance Concepts and Random Early Detection (RED)

- •Weighted RED (WRED)

- •Flow-Based WRED (FRED)

- •“Do I Know This Already?” Quiz

- •Compression Questions

- •Link Fragmentation and Interleave Questions

- •Foundation Topics

- •Payload and Header Compression

- •Payload Compression

- •Header Compression

- •Link Fragmentation and Interleaving

- •Multilink PPP LFI

- •Maximum Serialization Delay and Optimum Fragment Sizes

- •Frame Relay LFI Using FRF.12

- •Choosing Fragment Sizes for Frame Relay

- •Fragmentation with More Than One VC on a Single Access Link

- •FRF.11-C and FRF.12 Comparison

- •Foundation Summary

- •Compression Tools

- •LFI Tools

- •“Do I Know This Already?” Quiz

- •Foundation Topics

- •Call Admission Control Overview

- •Call Rerouting Alternatives

- •Bandwidth Engineering

- •CAC Mechanisms

- •CAC Mechanism Evaluation Criteria

- •Local Voice CAC

- •Physical DS0 Limitation

- •Max-Connections

- •Voice over Frame Relay—Voice Bandwidth

- •Trunk Conditioning

- •Local Voice Busyout

- •Measurement-Based Voice CAC

- •Service Assurance Agents

- •SAA Probes Versus Pings

- •SAA Service

- •Calculated Planning Impairment Factor

- •Advanced Voice Busyout

- •PSTN Fallback

- •SAA Probes Used for PSTN Fallback

- •IP Destination Caching

- •SAA Probe Format

- •PSTN Fallback Scalability

- •PSTN Fallback Summary

- •Resource-Based CAC

- •Resource Availability Indication

- •Gateway Calculation of Resources

- •RAI in Service Provider Networks

- •RAI in Enterprise Networks

- •RAI Operation

- •RAI Platform Support

- •Cisco CallManager Resource-Based CAC

- •Location-Based CAC Operation

- •Locations and Regions

- •Calculation of Resources

- •Automatic Alternate Routing

- •Location-Based CAC Summary

- •Gatekeeper Zone Bandwidth

- •Gatekeeper Zone Bandwidth Operation

- •Single-Zone Topology

- •Multizone Topology

- •Zone-per-Gateway Design

- •Gatekeeper in CallManager Networks

- •Zone Bandwidth Calculation

- •Gatekeeper Zone Bandwidth Summary

- •Integrated Services / Resource Reservation Protocol

- •RSVP Levels of Service

- •RSVP Operation

- •RSVP/H.323 Synchronization

- •Bandwidth per Codec

- •Subnet Bandwidth Management

- •Monitoring and Troubleshooting RSVP

- •RSVP CAC Summary

- •Foundation Summary

- •Call Admission Control Concepts

- •Local-Based CAC

- •Measurement-Based CAC

- •Resources-Based CAC

- •“Do I Know This Already?” Quiz

- •QoS Management Tools Questions

- •QoS Design Questions

- •Foundation Topics

- •QoS Management Tools

- •QoS Device Manager

- •QoS Policy Manager

- •Service Assurance Agent

- •Internetwork Performance Monitor

- •Service Management Solution

- •QoS Management Tool Summary

- •QoS Design for the Cisco QoS Exams

- •Four-Step QoS Design Process

- •Step 1: Determine Customer Priorities/QoS Policy

- •Step 2: Characterize the Network

- •Step 3: Implement the Policy

- •Step 4: Monitor the Network

- •QoS Design Guidelines for Voice and Video

- •Voice and Video: Bandwidth, Delay, Jitter, and Loss Requirements

- •Voice and Video QoS Design Recommendations

- •Foundation Summary

- •QoS Management

- •QoS Design

- •“Do I Know This Already?” Quiz

- •Foundation Topics

- •The Need for QoS on the LAN

- •Layer 2 Queues

- •Drop Thresholds

- •Trust Boundaries

- •Cisco Catalyst Switch QoS Features

- •Catalyst 6500 QoS Features

- •Supervisor and Switching Engine

- •Policy Feature Card

- •Ethernet Interfaces

- •QoS Flow on the Catalyst 6500

- •Ingress Queue Scheduling

- •Layer 2 Switching Engine QoS Frame Flow

- •Layer 3 Switching Engine QoS Packet Flow

- •Egress Queue Scheduling

- •Catalyst 6500 QoS Summary

- •Cisco Catalyst 4500/4000 QoS Features

- •Supervisor Engine I and II

- •Supervisor Engine III and IV

- •Cisco Catalyst 3550 QoS Features

- •Cisco Catalyst 3524 QoS Features

- •CoS-to-Egress Queue Mapping for the Catalyst OS Switch

- •Layer 2-to-Layer 3 Mapping

- •Connecting a Catalyst OS Switch to WAN Segments

- •Displaying QoS Settings for the Catalyst OS Switch

- •Enabling QoS for the Catalyst IOS Switch

- •Enabling Priority Queuing for the Catalyst IOS Switch

- •CoS-to-Egress Queue Mapping for the Catalyst IOS Switch

- •Layer 2-to-Layer 3 Mapping

- •Connecting a Catalyst IOS Switch to Distribution Switches or WAN Segments

- •Displaying QoS Settings for the Catalyst IOS Switch

- •Foundation Summary

- •LAN QoS Concepts

- •Catalyst 6500 Series of Switches

- •Catalyst 4500/4000 Series of Switches

- •Catalyst 3550/3524 Series of Switches

- •QoS: Tuning Bandwidth, Delay, Jitter, and Loss

- •QoS Tools

- •Differentiated Services

- •Integrated Services

- •CAR, PBR, and CB Marking

- •Queuing Concepts

- •WFQ and IP RTP Priority

- •CBWFQ and LLQ

- •Comparing Queuing Options

- •Conceptual Questions

- •Priority Queuing and Custom Queuing

- •CBWFQ, LLQ, IP RTP Priority

- •Comparing Queuing Tool Options

- •Shaping and Policing Concepts

- •Policing with CAR and CB Policer

- •Shaping with FRTS, GTS, DTS, and CB Shaping

- •Shaping and Policing Concepts

- •Congestion-Avoidance Concepts and RED

- •WRED

- •FRED

- •Congestion-Avoidance Concepts and Random Early Detection (RED)

- •Weighted RED (WRED)

- •Flow-Based WRED (FRED)

- •Compression

- •Link Fragmentation and Interleave

- •Compression Tools

- •LFI Tools

- •Call Admission Control Concepts

- •Local-Based CAC

- •Measurement-Based CAC

- •Resources-Based CAC

- •QoS Management Tools

- •QoS Design

- •QoS Management

- •QoS Design

- •LAN QoS Concepts

- •Catalyst 6500 Series of Switches

- •Catalyst 4500/4000 Series of Switches

- •Catalyst 3550/3524 Series of Switches

- •Foundation Topics

- •QPPB Route Marking: Step 1

- •QPPB Per-Packet Marking: Step 2

- •QPPB: The Hidden Details

- •QPPB Summary

- •Flow-Based dWFQ

- •ToS-Based dWFQ

- •Distributed QoS Group–Based WFQ

- •Summary: dWFQ Options

428 Chapter 6: Congestion Avoidance Through Drop Policies

Foundation Topics

The term “congestion avoidance” describes a small set of IOS tools that help queues avoid congestion. Queues fill when the cumulative offered load from the various senders of packets exceeds the line rate of the interface or the shaping rate if shaping is enabled. When more traffic needs to exit the interface than the interface can support, queues form. Queuing tools help us manage the queues; congestion-avoidance tools help us reduce the level of congestion in the queues by selectively dropping packets.
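As a rough illustration of why queues form, the sketch below computes how much traffic backs up when the offered load exceeds the line rate. The function name, the constant rates, the unbounded queue, and the numbers are all assumptions made for the example, not values from the text.

```python
def backlog_after(offered_bps: float, line_rate_bps: float, seconds: float) -> float:
    """Bits waiting in the queue after `seconds`.

    Assumes constant rates and an unbounded queue (simplifications).
    """
    excess_bps = max(0.0, offered_bps - line_rate_bps)
    return excess_bps * seconds


# Hypothetical numbers: senders offer 1.2 Mbps to a 1.0 Mbps link for 2 seconds.
backlog_bits = backlog_after(1_200_000, 1_000_000, 2.0)

# Time a newly arriving packet would wait for that backlog to drain at line rate.
queuing_delay_s = backlog_bits / 1_000_000

print(backlog_bits, queuing_delay_s)
```

In a real router the queue has a finite length, so once it fills, the excess is dropped rather than delayed further. That is the situation congestion-avoidance tools try to prevent.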

Once again, a QoS tool gives you the opportunity to make tradeoffs between QoS characteristics: in this case, packet loss versus delay and jitter. However, the tradeoff is not so simple in this case. It turns out that by selectively discarding some packets before the queues get completely full, cumulative packet loss can be reduced, and queuing delay and queuing jitter can also be reduced! When you prune a plant, you kill some of the branches, but the plant gets healthier and more beautiful through the process. Similarly, congestion-avoidance tools discard some packets, but in doing so achieve the overall effect of a healthier network.

This chapter begins by explaining the core concepts that create the need for congestion-avoidance tools. Following this discussion, the underlying algorithms, which are based on RED, are covered. Finally, the chapter includes coverage of configuration and monitoring for the two IOS congestion-avoidance tools, WRED and FRED.

Congestion-Avoidance Concepts and Random Early Detection (RED)

Congestion-avoidance tools rely on the behavior of TCP to reduce congestion. A large percentage of Internet traffic consists of TCP traffic, and TCP senders reduce the rate at which they send packets after packet loss. By purposefully discarding a percentage of packets, congestion-avoidance tools cause some TCP connections to slow down, which reduces congestion.

This section begins with a discussion of User Datagram Protocol (UDP) and TCP behavior when packets are lost. By understanding TCP behavior in particular, you can appreciate what happens as a result of tail drop, which is covered next in this section. Finally, to close this section, RED is covered. The two IOS congestion-avoidance tools both use the underlying concepts of RED.

TCP and UDP Reactions to Packet Loss

UDP and TCP behave very differently when packets are lost. UDP, by itself, does not react to packet loss, because UDP does not include any mechanism with which to know whether a packet was lost. TCP senders, however, slow down the rate at which they send after recognizing that a packet was lost. Unlike UDP, TCP includes a field in the TCP header to number each TCP segment (sequence number), and another field used by the receiver to confirm receipt of the packets (acknowledgment number). When a TCP receiver signals that a packet was not received, or if an acknowledgment is not received at all, the TCP sender assumes the packet was lost, and resends the packet. More importantly, the sender also slows down sending data into the network.

TCP uses two separate windows that together determine the maximum amount of data that can be sent before the sender must stop and wait for an acknowledgment. The first of the two windowing features uses the Window field in the TCP header; this window is also called the receiver window or the advertised window. The receiver grants the sender the right to send x bytes of data before requiring an acknowledgment, by setting the value x into the Window field of the TCP header. The receiver grants larger and larger windows as time goes on, reaching the point at which the TCP sender never stops sending, with acknowledgments arriving just before a complete window of traffic has been sent.

The second window used by TCP is called the congestion window, or CWND, as defined by RFC 2581. Unlike the advertised window, the congestion window is not communicated between the receiver and sender using fields in the TCP header. Instead, the TCP sender calculates CWND. CWND varies in size much more quickly than does the advertised window, because it was designed to react to congestion in networks.

The TCP sender always uses the lower of the two windows to determine how much data it can send before receiving an acknowledgment. The receiver window is designed to let the receiver prevent the sender from sending data faster than the receiver can process the data. The CWND is designed to let the sender react to network congestion by slowing down its sending rate. It is the variation in the CWND, in reaction to lost packets, that RED relies upon.
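The "lower of the two windows" rule can be sketched in a few lines. The function and parameter names below are hypothetical, and the byte values are illustrative only.

```python
def sendable_bytes(receiver_window: int, cwnd: int, bytes_in_flight: int) -> int:
    """Bytes the sender may still transmit before it must wait for an ACK.

    The effective window is the smaller of the advertised (receiver) window
    and the sender-calculated congestion window.
    """
    effective_window = min(receiver_window, cwnd)
    return max(0, effective_window - bytes_in_flight)


# CWND is the limit here: 3 segments of 1460 bytes, one already in flight.
print(sendable_bytes(receiver_window=65_535, cwnd=4_380, bytes_in_flight=1_460))

# The receiver window is the limit here, and nothing is in flight.
print(sendable_bytes(receiver_window=65_535, cwnd=100_000, bytes_in_flight=0))
```

Because CWND is usually the smaller value right after a loss, lowering CWND is what actually throttles the sender.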

To appreciate how RED works, you need to understand the processes by which a TCP sender lowers and increases the CWND. CWND is lowered in response to lost segments. CWND is raised based on the logic defined as the TCP slow start and TCP congestion-avoidance algorithms. In fact, most people use the term “slow start” to describe both features together, in part because they work closely together. The process works like this:

- A TCP sender fails to receive an acknowledgment in time, signifying a possible lost packet.

- The TCP sender sets CWND to the size of a single segment.

- Another variable, called slow start threshold (SSTHRESH), is set to 50 percent of the CWND value before the lost segment.

- After CWND has been lowered, slow start governs how fast the CWND grows up until the CWND has been increased to the value of SSTHRESH.

- After the slow start phase is complete, congestion avoidance governs how fast CWND grows after CWND > SSTHRESH.
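The steps above can be sketched as a small state model. This is a simplified illustration, not a TCP implementation: windows are counted in whole segments, slow start is modeled as doubling once per round trip (capped at SSTHRESH), and congestion avoidance as one extra segment per round trip. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SenderWindow:
    cwnd: int                       # congestion window, in segments
    ssthresh: Optional[int] = None  # slow start threshold; unset until a loss

    def on_timeout(self) -> None:
        """No acknowledgment arrived in time: assume a lost packet."""
        self.ssthresh = self.cwnd // 2  # 50 percent of CWND before the loss
        self.cwnd = 1                   # "slam" CWND to a single segment

    def on_round_trip(self) -> None:
        """Advance one round trip in which every segment was acknowledged."""
        if self.ssthresh is None or self.cwnd < self.ssthresh:
            # Slow start: modeled as doubling, capped at SSTHRESH if set.
            doubled = self.cwnd * 2
            self.cwnd = doubled if self.ssthresh is None else min(doubled, self.ssthresh)
        else:
            # Congestion avoidance: roughly one more segment per round trip.
            self.cwnd += 1


def trace_after_timeout(cwnd_before_loss: int, round_trips: int) -> List[int]:
    """CWND at the start of each round trip following a timeout."""
    window = SenderWindow(cwnd=cwnd_before_loss)
    window.on_timeout()
    history = []
    for _ in range(round_trips):
        history.append(window.cwnd)
        window.on_round_trip()
    return history


print(trace_after_timeout(16, 7))  # [1, 2, 4, 8, 9, 10, 11]
```

With a CWND of 16 segments before the loss, SSTHRESH becomes 8. The window climbs quickly to 8 and then creeps upward one segment at a time.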


Therefore, when a TCP sender fails to receive an acknowledgment, it reduces the CWND to a very low value (one segment size of window). This process is sometimes called slamming the window or slamming the window shut. The sender progressively increases CWND based first on slow start, and then on congestion avoidance. As you go through this text, remember that the TCP windows use a unit of bytes in reality. To make the discussion a little easier, I have listed the windows as a number of segments, which makes the actual numbers more obvious.

Slow start increases CWND by the maximum segment size for every packet for which it receives an acknowledgment. Because TCP receivers may, and typically do, acknowledge segments well before the full window has been sent by the sender, CWND grows at an exponential rate during slow start—a seemingly contradictory concept. Slow start gets its name from the fact that CWND has been set to a very low value at the beginning of the process, meaning it starts slowly, but slow start does cause CWND to grow quickly.
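To see why a per-acknowledgment increase produces exponential growth, the following sketch counts acknowledgments round trip by round trip. It assumes one acknowledgment per full-sized segment and a maximum segment size of 1460 bytes; both are illustrative assumptions, and the function name is hypothetical.

```python
from typing import List


def slow_start_rounds(round_trips: int, mss: int = 1460) -> List[int]:
    """CWND in bytes at the start of each round trip during slow start."""
    cwnd = mss                      # CWND starts at one segment
    sizes = []
    for _ in range(round_trips):
        sizes.append(cwnd)
        acks = cwnd // mss          # assumption: one ACK per segment sent
        for _ in range(acks):
            cwnd += mss             # slow start: +1 MSS per acknowledgment
    return sizes


print(slow_start_rounds(5))                        # [1460, 2920, 5840, 11680, 23360]
print([c // 1460 for c in slow_start_rounds(5)])   # [1, 2, 4, 8, 16] segments
```

Each round trip delivers as many acknowledgments as there were segments in the window, so adding one segment per acknowledgment doubles the window every round trip.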

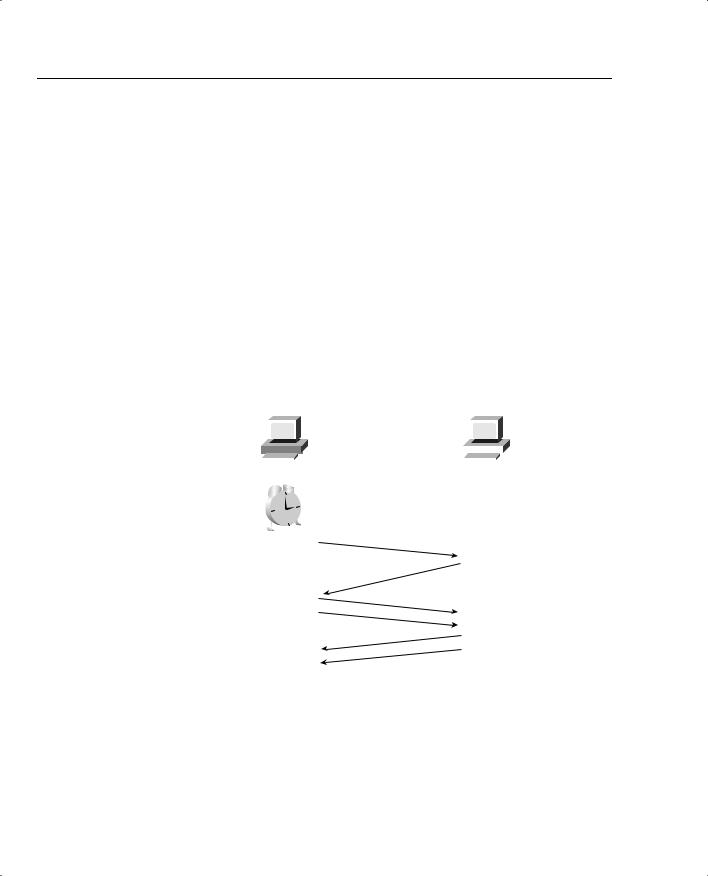

Figure 6-1 outlines the process of no acknowledgment received, slamming CWND to the size of a single segment, and slow start.

Figure 6-1 Slamming the CWND Shut and the Slow Start Process After No Acknowledgment Received

Figure 6-1 labels: Sender, Receiver; Timeout!; CWND = 1, CWND = 2, CWND = 3, CWND = 4; SN = 1, ACK = 2; SN = 2, SN = 3; ACK = 3, ACK = 4. Note: CWND units are segment size in this example.

Because CWND is increased each time an acknowledgment is received, CWND actually increases at an exponential rate. So, slow start might be better called “slow start but fast recovery.”

Congestion avoidance is the second mechanism that dictates how quickly CWND increases after being lowered. As CWND grows, it begins to approach the original CWND value. If the original packet loss was a result of queue congestion, letting this TCP connection increase back to the original CWND may then induce the same congestion that caused the CWND to be lowered in the first place. Congestion avoidance just reduces the rate of increase for CWND as it approaches the previous CWND value. Once slow start has increased CWND to the value of SSTHRESH, which was set to 50 percent of the original CWND, congestion-avoidance logic replaces the slow start logic for increasing CWND. Congestion avoidance uses a formula that allows CWND to grow more slowly, essentially at a linear rate.
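The text does not spell out the formula. RFC 2581, cited earlier, describes a commonly used one: for each acknowledgment, CWND grows by MSS × MSS / CWND bytes. The sketch below applies that increment for one round trip, assuming one acknowledgment per full-sized segment and a hypothetical MSS of 1460 bytes; the function name is also an assumption.

```python
def congestion_avoidance_round(cwnd: float, mss: int = 1460) -> float:
    """CWND in bytes after one round trip of congestion avoidance."""
    acks = int(cwnd // mss)           # assumption: one ACK per segment sent
    for _ in range(acks):
        cwnd += mss * mss / cwnd      # per-ACK increment (RFC 2581 style)
    return cwnd


start = 8 * 1460                      # CWND has just reached an SSTHRESH of 8 segments
after = congestion_avoidance_round(start)
growth_in_segments = (after - start) / 1460

# Slow start would have added 8 segments in the same round trip;
# congestion avoidance adds a little under 1.
print(round(growth_in_segments, 2))
```

Because each acknowledgment adds only a fraction of a segment, a full window of acknowledgments adds about one segment per round trip, which is the linear growth described above.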

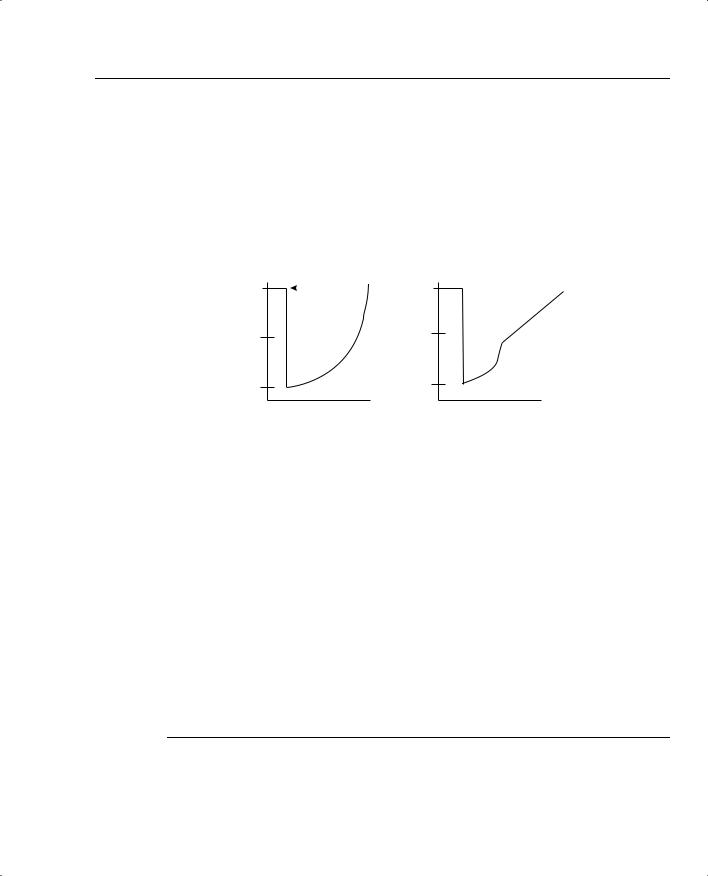

Figure 6-2 shows a graph of CWND with just slow start, and with slow start and congestion avoidance, after the sender times out waiting for an acknowledgment.

Figure 6-2 Graphs of CWND with Slow Start and Congestion Avoidance

Figure 6-2 panels: “Slow Start Only” and “Slow Start and Congestion Avoidance.” Both plot CWND against Time. Labels: CWND Before Congestion, Lost ACK, 1 Segment Size, and (second panel) CWND>SSthresh.

Many people do not realize that the slow start process consists of a combination of the slow start algorithm and the congestion-avoidance algorithm. With slow start, CWND is lowered, but it grows quickly. With congestion avoidance, the CWND value grows more slowly as it approaches the previous CWND value.

In summary, UDP and TCP react to packet loss in the following ways:

- UDP senders do not reduce or increase sending rates as a result of lost packets.

- TCP senders do reduce their sending rates as a result of lost packets.

- TCP senders decide to use either the receiver window or the CWND, based on whichever is smaller at the time.

- TCP slow start and congestion avoidance dictate how fast the CWND rises after the window was lowered due to packet loss.

NOTE Depending on the circumstances, TCP sometimes halves CWND in reaction to lost packets, and in some cases it lowers CWND to one segment size, as was described in this first section. The more severe reaction of reducing the window to one segment size was shown in this section for a more complete description of slow start and congestion avoidance.
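The note does not say which circumstances lead to which reaction. One common arrangement, described in RFC 2581, is that a retransmission timeout lowers CWND to one segment, while a loss detected through duplicate acknowledgments (fast retransmit and fast recovery) leaves CWND at about half its previous value. The sketch below is a simplified illustration of that distinction in segment units. The function name and the string labels are hypothetical, and the temporary window inflation used during fast recovery is omitted.

```python
from typing import Tuple


def react_to_loss(cwnd: int, detected_by: str) -> Tuple[int, int]:
    """Return (new_cwnd, new_ssthresh) in segments after a loss."""
    # Half the previous window, with a floor of two segments (as in RFC 2581).
    ssthresh = max(cwnd // 2, 2)
    if detected_by == "timeout":
        return 1, ssthresh            # severe reaction: one segment, then slow start
    if detected_by == "duplicate_acks":
        return ssthresh, ssthresh     # milder reaction: CWND is halved
    raise ValueError("detected_by must be 'timeout' or 'duplicate_acks'")


print(react_to_loss(16, "timeout"))         # (1, 8)
print(react_to_loss(16, "duplicate_acks"))  # (8, 8)
```

Either way, the sender ends up offering less traffic after a loss, which is the behavior RED depends on.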