
ARIMA forecasting models

[Plot: Johnson & Johnson Forecasts. x-axis: Time (1960 to 1980); y-axis: Quarterly Earnings (Dollars), 0 to 25.]

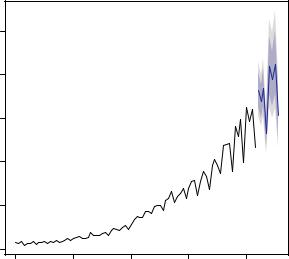

Figure 15.10 Multiplicative exponential smoothing forecast with trend and seasonal components. The forecasts are a dashed line, and the 80% and 95% confidence intervals are provided in light and dark gray, respectively.

Because no model is specified, the software performs a search over a wide array of models to find one that minimizes the fit criterion (log-likelihood by default). The selected model is one that has multiplicative trend, seasonal, and error components. The plot, along with forecasts for the next eight quarters (the default in this case), is given in figure 15.10. The flty parameter sets the line type for the forecast line (dashed in this case).

As stated earlier, exponential time-series modeling is popular because it can give good short-term forecasts in many situations. A second approach that is also popular is the Box-Jenkins methodology, commonly referred to as ARIMA models. These are described in the next section.

15.4 ARIMA forecasting models

In the autoregressive integrated moving average (ARIMA) approach to forecasting, predicted values are a linear function of recent actual values and recent errors of prediction (residuals). ARIMA is a complex approach to forecasting. In this section, we’ll limit discussion to ARIMA models for non-seasonal time series.

Before describing ARIMA models, a number of terms need to be defined, including lags, autocorrelation, partial autocorrelation, differencing, and stationarity. Each is considered in the next section.

15.4.1 Prerequisite concepts

When you lag a time series, you shift it back by a given number of observations. Consider the first few observations from the Nile time series, displayed in table 15.5. Lag 0 is the unshifted time series. Lag 1 is the time series shifted one position to the left. Lag 2 shifts the time series two positions to the left, and so on. Time series can be lagged using the function lag(ts, k), where ts is the time series and k is the number of lags.

Table 15.5 The Nile time series at various lags

| Lag | 1869 | 1870 | 1871 | 1872 | 1873 | 1874 | 1875 | … |
|-----|------|------|------|------|------|------|------|---|
| 0   |      |      | 1120 | 1160 | 963  | 1210 | 1160 | … |
| 1   |      | 1120 | 1160 | 963  | 1210 | 1160 | 1160 | … |
| 2   | 1120 | 1160 | 963  | 1210 | 1160 | 1160 | 813  | … |
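To see the shifts in table 15.5 for yourself, here is a minimal sketch that uses only base R. The object names (lag0, lag1, lag2, lags) are illustrative and aren't part of the original listings. Binding the lagged series together aligns them on a common time axis, so a series shows NA in the years where it has no value.

```r
# Nile ships with base R (datasets package). stats::lag() is called explicitly
# because other packages (dplyr, for example) define their own lag().
lag0 <- Nile                  # unshifted series, starts in 1871
lag1 <- stats::lag(Nile, 1)   # shifted one position to the left, starts in 1870
lag2 <- stats::lag(Nile, 2)   # shifted two positions to the left, starts in 1869

# cbind() on ts objects aligns them by time and pads the gaps with NA
lags <- cbind(lag0, lag1, lag2)
window(lags, end = 1875)      # rows for 1869 through 1875
```

Each column of the result corresponds to one row of the table.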

Autocorrelation measures the way observations in a time series relate to each other. ACk is the correlation between a set of observations (Yt) and observations k periods earlier (Yt-k). So AC1 is the correlation between the Lag 1 and Lag 0 time series, AC2 is the correlation between the Lag 2 and Lag 0 time series, and so on. Plotting these correlations (AC1, AC2, …, ACk) produces an autocorrelation function (ACF) plot. The ACF plot is used to select appropriate parameters for the ARIMA model and to assess the fit of the final model.

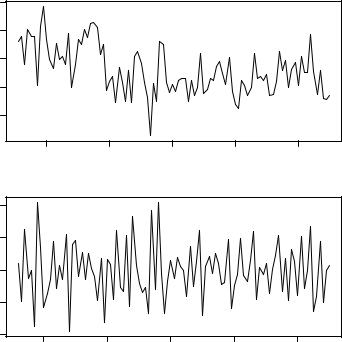

An ACF plot can be produced with the acf() function in the stats package or the Acf() function in the forecast package. Here, the Acf() function is used because it produces a plot that is somewhat easier to read. The format is Acf(ts), where ts is the original time series. The ACF plot for the Nile time series, with k=1 to 18, is provided a little later, in the top half of figure 15.12.
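As a rough check on the definition, the lag 1 autocorrelation can be approximated by hand and compared with the value that acf() reports. This sketch uses the base acf() function rather than Acf() so that it runs without extra packages; the variable names are illustrative. The two numbers agree only approximately, because acf() centers both series on the overall mean and divides by the full series length.

```r
y <- as.numeric(Nile)
n <- length(y)

# Plain Pearson correlation between Y_t and Y_(t-1)
ac1_by_hand <- cor(y[-1], y[-n])

# acf() from stats: element 1 of $acf is lag 0 (always 1), element 2 is lag 1
ac <- acf(Nile, lag.max = 18, plot = FALSE)
ac1_acf <- ac$acf[2]

round(c(by_hand = ac1_by_hand, acf = ac1_acf), 3)
```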

A partial autocorrelation is the correlation between Yt and Yt-k with the effects of all Y values between the two (Yt-1, Yt-2, …, Yt-k+1) removed. Partial autocorrelations can also be plotted for multiple values of k. The PACF plot can be generated with either the pacf() function in the stats package or the Pacf() function in the forecast package. Again, the Pacf() function is preferred due to its formatting. The function call is Pacf(ts), where ts is the time series to be assessed. The PACF plot is also used to determine the most appropriate parameters for the ARIMA model. The results for the Nile time series are given in the bottom half of figure 15.12.
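One property is easy to verify: at lag 1 there are no intermediate values to remove, so the partial autocorrelation should equal the ordinary autocorrelation. The sketch below checks this with the base pacf() and acf() functions (the text's Pacf() and Acf() should behave the same way, although that isn't verified here).

```r
ac <- acf(Nile, lag.max = 18, plot = FALSE)
pc <- pacf(Nile, lag.max = 18, plot = FALSE)

# pacf() output starts at lag 1, whereas acf() output starts at lag 0
isTRUE(all.equal(pc$acf[1], ac$acf[2]))
```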

ARIMA models are designed to fit stationary time series (or time series that can be made stationary). In a stationary time series, the statistical properties of the series don’t change over time. For example, the mean and variance of Yt are constant. Additionally, the autocorrelations for any lag k don’t change with time.

It may be necessary to transform the values of a time series in order to achieve constant variance before proceeding to fitting an ARIMA model. The log transformation is often useful here, as you saw in section 15.1.3. Other transformations, such as the Box-Cox transformation described in section 8.5.2, may also be helpful.
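The effect of a log transformation on variance can be sketched with the AirPassengers series that ships with R (chosen here purely for illustration). The comparison of the first and last calendar years is an informal device, not a formal test: if the ratio of the two standard deviations moves closer to 1 after taking logs, the variance has become more stable.

```r
ap <- AirPassengers   # monthly totals, 1949 to 1960

# Spread of the first and last calendar years on the original scale
sd_first  <- sd(window(ap, end = c(1949, 12)))
sd_last   <- sd(window(ap, start = c(1960, 1)))
ratio_raw <- sd_last / sd_first

# The same comparison after a log transformation
lap       <- log(ap)
ratio_log <- sd(window(lap, start = c(1960, 1))) /
             sd(window(lap, end = c(1949, 12)))

round(c(raw = ratio_raw, log = ratio_log), 2)
```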

Because stationary time series are assumed to have constant means, they can’t have a trend component. Many non-stationary time series can be made stationary through differencing. In differencing, each value of a time series Yt is replaced with Yt – Yt-1. Differencing a time series once removes a linear trend. Differencing it a second time removes a quadratic trend. A third time removes a cubic trend. It’s rarely necessary to difference more than twice.

You can difference a time series with the diff() function. The format is diff(ts, differences=d), where d indicates the number of times the time series ts is differenced. The default is d=1. The ndiffs() function in the forecast package can be used to help determine the best value of d. The format is ndiffs(ts).
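The following sketch shows what diff() computes and why repeated differencing removes polynomial trends. The linear and quadratic series are made up for illustration. Note that diff() subtracts the previous value from the current one.

```r
y <- as.numeric(Nile)
n <- length(y)

d1      <- diff(Nile)        # differences = 1 by default
by_hand <- y[-1] - y[-n]     # each value minus its predecessor
isTRUE(all.equal(as.numeric(d1), by_hand))

# Illustrative (made-up) series with exact linear and quadratic trends
linear    <- ts(3 + 2 * (1:10))
quadratic <- ts((1:10)^2)

diff(linear)                       # constant, so the linear trend is gone
diff(quadratic, differences = 2)   # constant after two rounds of differencing
```

Differencing shortens the series by one observation each time it's applied.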

Stationarity is often evaluated with a visual inspection of a time-series plot. If the variance isn’t constant, the data are transformed. If there are trends, the data are differenced. You can also use a statistical procedure called the Augmented Dickey-Fuller (ADF) test to evaluate the assumption of stationarity. In R, the function adf.test() in the tseries package performs the test. The format is adf.test(ts), where ts is the time series to be evaluated. A significant result suggests stationarity.

To summarize, ACF and PACF plots are used to determine the parameters of ARIMA models. Stationarity is an important assumption, and transformations and differencing are used to help achieve stationarity. With these concepts in hand, we can now turn to fitting models with an autoregressive (AR) component, a moving average (MA) component, or both components (ARMA). Finally, we’ll examine ARIMA models that include ARMA components and differencing to achieve stationarity (Integration).

15.4.2 ARMA and ARIMA models

In an autoregressive model of order p, each value in a time series is predicted from a linear combination of the previous p values

$$\mathrm{AR}(p):\quad Y_t = \mu + \beta_1 Y_{t-1} + \beta_2 Y_{t-2} + \dots + \beta_p Y_{t-p} + \varepsilon_t$$

where $Y_t$ is a given value of the series, $\mu$ is the mean of the series, the $\beta$s are the weights, and $\varepsilon_t$ is the irregular component. In a moving average model of order q, each value in the time series is predicted from a linear combination of q previous errors. In this case

$$\mathrm{MA}(q):\quad Y_t = \mu - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \dots - \theta_q \varepsilon_{t-q} + \varepsilon_t$$

where the $\varepsilon$s are the errors of prediction and the $\theta$s are the weights. (It’s important to note that the moving averages described here aren’t the simple moving averages described in section 15.2.1.)

Combining the two approaches yields an ARMA(p, q) model of the form

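$$Y_t = \mu + \beta_1 Y_{t-1} + \beta_2 Y_{t-2} + \dots + \beta_p Y_{t-p} - \theta_1 \varepsilon_{t-1} - \theta_2 \varepsilon_{t-2} - \dots - \theta_q \varepsilon_{t-q} + \varepsilon_t$$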

that predicts each value of the time series from the past p values and q residuals.
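To make the ARMA equation concrete, the sketch below simulates a series from a known ARMA(1, 1) process and then recovers the weights by fitting the same model. The seed, the series length, and the coefficient values are arbitrary choices for illustration. One caveat: R's arima() and arima.sim() write the moving average terms with plus signs, so the MA coefficients they report have the opposite sign to the θ weights in the equations above.

```r
set.seed(1234)   # arbitrary seed so the sketch is reproducible

# Simulate 2,000 points from an ARMA(1, 1) process with an AR weight of 0.6
# and an MA coefficient of 0.3 (in R's sign convention)
sim <- arima.sim(model = list(ar = 0.6, ma = 0.3), n = 2000)

# Fit ARMA(1, 1); no differencing is needed, so d = 0
fit_sim <- arima(sim, order = c(1, 0, 1))
round(coef(fit_sim), 2)   # ar1 and ma1 should be near 0.6 and 0.3
```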

An ARIMA(p, d, q) model is a model in which the time series has been differenced d times, and the resulting values are predicted from the previous p actual values and q previous errors. The predictions are “un-differenced” or integrated to achieve the final prediction.
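The "un-differencing" step can be illustrated on its own. In this sketch, which assumes a single round of differencing, the base R function diffinv() cumulatively sums the differences starting from a known first value and so rebuilds the original series.

```r
dNile <- diff(Nile)

# diffinv() cumulatively sums the differences, starting from the value in xi
rebuilt <- diffinv(dNile, xi = Nile[1])

isTRUE(all.equal(as.numeric(rebuilt), as.numeric(Nile)))
```

When you fit an ARIMA model with arima(), this integration is handled for you.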

The steps in ARIMA modeling are as follows:

1. Ensure that the time series is stationary.
2. Identify a reasonable model or models (possible values of p and q).
3. Fit the model.
4. Evaluate the model’s fit, including statistical assumptions and predictive accuracy.
5. Make forecasts.

Let’s apply each step in turn to fit an ARIMA model to the Nile time series.

ENSURING THAT THE TIME SERIES IS STATIONARY

First you plot the time series and assess its stationarity (see listing 15.7 and the top half of figure 15.11). The variance appears to be stable across the years observed, so there’s no need for a transformation. There may be a trend, which is supported by the results of the ndiffs() function.

[Plots: top panel, Nile (y-axis 600 to 1400); bottom panel, diff(Nile) (y-axis −400 to 400). x-axis for both: Time, 1880 to 1960.]

Figure 15.11 Time series displaying the annual flow of the river Nile at Aswan from 1871 to 1970 (top) along with the time series differenced once (bottom). The differencing removes the decreasing trend evident in the original plot.


Listing 15.7 Transforming the time series and assessing stationarity

    > library(forecast)
    > library(tseries)
    > plot(Nile)
    > ndiffs(Nile)
    [1] 1
    > dNile <- diff(Nile)
    > plot(dNile)
    > adf.test(dNile)

            Augmented Dickey-Fuller Test

    data:  dNile
    Dickey-Fuller = -6.5924, Lag order = 4, p-value = 0.01
    alternative hypothesis: stationary

The series is differenced once (lag=1 is the default) and saved as dNile. The differenced time series is plotted in the bottom half of figure 15.11 and certainly looks more stationary. Applying the ADF test to the differenced series suggests that it’s now stationary, so you can proceed to the next step.

IDENTIFYING ONE OR MORE REASONABLE MODELS

Possible models are selected based on the ACF and PACF plots:

Acf(dNile)

Pacf(dNile)

The resulting plots are given in figure 15.12.

Figure 15.12 Autocorrelation and partial autocorrelation plots for the differenced Nile time series

[Plots: top panel, ACF; bottom panel, Partial ACF. x-axis for both: Lag, 1 to 18; y-axis from −0.4 to 0.2.]

The goal is to identify the parameters p, d, and q. You already know that d=1 from the previous section. You get p and q by comparing the ACF and PACF plots with the guidelines given in table 15.6.

Table 15.6 Guidelines for selecting an ARIMA model

| Model          | ACF                | PACF               |
|----------------|--------------------|--------------------|
| ARIMA(p, d, 0) | Trails off to zero | Zero after lag p   |
| ARIMA(0, d, q) | Zero after lag q   | Trails off to zero |
| ARIMA(p, d, q) | Trails off to zero | Trails off to zero |

The results in table 15.6 are theoretical, and the actual ACF and PACF may not match this exactly. But they can be used to give a rough guide of reasonable models to try. For the Nile time series in figure 15.12, there appears to be one large autocorrelation at lag 1, and the partial autocorrelations trail off to zero as the lags get bigger. This suggests trying an ARIMA(0, 1, 1) model.
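If you'd rather read numbers than plots, the autocorrelations can be compared against the approximate 95% bound for white noise, which is about 1.96 divided by the square root of the series length. This is the usual position of the dashed lines on ACF plots produced by base R. The sketch uses the base acf() and pacf() functions, and the variable names are illustrative.

```r
dNile <- diff(Nile)
ac <- acf(dNile, lag.max = 18, plot = FALSE)
pc <- pacf(dNile, lag.max = 18, plot = FALSE)

# Approximate 95% bound for a white-noise series of this length
bound <- qnorm(0.975) / sqrt(length(dNile))

acf_vals  <- ac$acf[-1]   # drop lag 0
pacf_vals <- pc$acf       # pacf() output already starts at lag 1

which(abs(acf_vals) > bound)    # lags with autocorrelations outside the bound
which(abs(pacf_vals) > bound)   # lags with partial autocorrelations outside it
```

Treat the bound as a screening aid. With 18 lags, an occasional value just outside it can occur by chance.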

FITTING THE MODEL(S)

The ARIMA model is fit with the arima() function. The format is arima(ts, order=c(p, d, q)). The result of fitting an ARIMA(0, 1, 1) model to the Nile time series is given in the following listing.

Listing 15.8 Fitting an ARIMA model

    > library(forecast)
    > fit <- arima(Nile, order=c(0,1,1))
    > fit
    Series: Nile
    ARIMA(0,1,1)

    Coefficients:
              ma1
          -0.7329
    s.e.   0.1143

    sigma^2 estimated as 20600:  log likelihood=-632.55
    AIC=1269.09   AICc=1269.22   BIC=1274.28

    > accuracy(fit)
                     ME  RMSE   MAE    MPE  MAPE   MASE
    Training set -11.94 142.8 112.2 -3.575 12.94 0.8089

Note that you apply the model to the original time series. By specifying d=1, it calculates first differences for you. The coefficient for the moving average (-0.73) is provided along with the AIC. If you fit other models, the AIC can help you choose which one is most reasonable. Smaller AIC values suggest better models. The accuracy measures can help you determine whether the model fits with sufficient accuracy. Here the mean absolute percent error is 13% of the river level.
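As a sketch of how such a comparison might look, the code below fits the ARIMA(0, 1, 1) model alongside two alternative orders and collects their AIC values. The alternative orders are arbitrary choices for illustration. It then computes two of the accuracy measures directly from the residuals; these should land close to the RMSE and MAPE values reported by accuracy() in listing 15.8. Only base R functions are used.

```r
# Candidate models for the Nile series (the first is the one fit in the text)
fit011 <- arima(Nile, order = c(0, 1, 1))
fit110 <- arima(Nile, order = c(1, 1, 0))
fit111 <- arima(Nile, order = c(1, 1, 1))

# Smaller AIC values suggest better models
aics <- c("ARIMA(0,1,1)" = AIC(fit011),
          "ARIMA(1,1,0)" = AIC(fit110),
          "ARIMA(1,1,1)" = AIC(fit111))
round(aics, 2)

# Accuracy measures computed by hand from the residuals
res  <- as.numeric(fit011$residuals)
rmse <- sqrt(mean(res^2))                          # root mean squared error
mape <- mean(abs(res / as.numeric(Nile))) * 100    # mean absolute percent error
round(c(RMSE = rmse, MAPE = mape), 2)
```

AIC differences of a point or two are small, so it's worth weighing them against model simplicity and the diagnostic checks.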

EVALUATING MODEL FIT

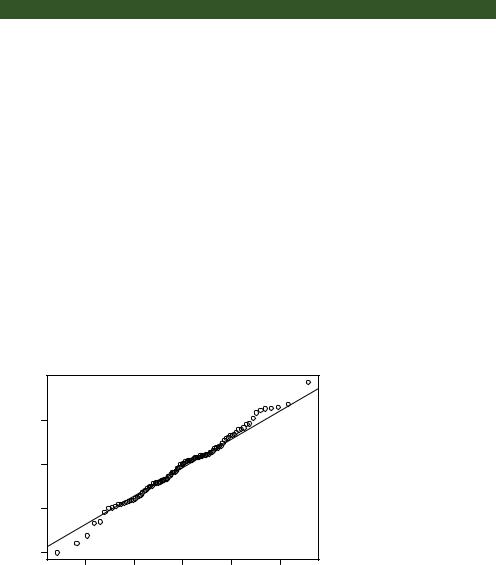

If the model is appropriate, the residuals should be normally distributed with mean zero, and the autocorrelations should be zero for every possible lag. In other words, the residuals should be normally and independently distributed (no relationship between them). The assumptions can be evaluated with the following code.

Listing 15.9 Evaluating the model fit

    > qqnorm(fit$residuals)
    > qqline(fit$residuals)
    > Box.test(fit$residuals, type="Ljung-Box")

            Box-Ljung test

    data:  fit$residuals
    X-squared = 1.3711, df = 1, p-value = 0.2416

The qqnorm() and qqline() functions produce the plot in figure 15.13. Normally distributed data should fall along the line. In this case, the results look good.

The Box.test() function provides a test that the autocorrelations are all zero. The results aren’t significant, suggesting that the autocorrelations don’t differ from zero. This ARIMA model appears to fit the data well.

MAKING FORECASTS

If the model hadn’t met the assumptions of normal residuals and zero autocorrelations, it would have been necessary to alter the model, add parameters, or try a different approach. Once a final model has been chosen, it can be used to make predictions of future values. In the next listing, the forecast() function from the forecast package is used to predict three years ahead.

[Plot: Normal Q−Q Plot. x-axis: Theoretical Quantiles (−2 to 2); y-axis: Sample Quantiles (−400 to 200).]

Figure 15.13 Normal Q-Q plot for determining the normality of the time-series residuals
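For the forecasting step described above, a base R alternative to forecast() is the predict() method for fitted arima objects. The sketch below is an assumption-laden stand-in, not the text's listing: it refits the ARIMA(0, 1, 1) model, predicts three years ahead, and builds approximate 95% limits under the assumption of normally distributed forecast errors.

```r
fit <- arima(Nile, order = c(0, 1, 1))

# Point forecasts and their standard errors for the next three years
fc <- predict(fit, n.ahead = 3)

# Approximate 95% limits, assuming normally distributed forecast errors
lower <- fc$pred - qnorm(0.975) * fc$se
upper <- fc$pred + qnorm(0.975) * fc$se
cbind(forecast = fc$pred, lower, upper)
```

For an ARIMA(0, 1, 1) model the point forecasts are the same at every horizon, while the limits widen as the horizon grows.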