- •Contents

- •Preface

- •Introduction to Computers, the Internet and the Web

- •1.3 Computer Organization

- •Languages

- •1.9 Java Class Libraries

- •1.12 The Internet and the World Wide Web

- •1.14 General Notes about Java and This Book

- •Sections

- •Introduction to Java Applications

- •2.4 Displaying Text in a Dialog Box

- •2.5 Another Java Application: Adding Integers

- •2.8 Decision Making: Equality and Relational Operators

- •Introduction to Java Applets

- •3.2 Sample Applets from the Java 2 Software Development Kit

- •3.3 A Simple Java Applet: Drawing a String

- •3.4 Two More Simple Applets: Drawing Strings and Lines

- •3.6 Viewing Applets in a Web Browser

- •3.7 Java Applet Internet and World Wide Web Resources

- •Repetition)

- •Class Attributes

- •5.8 Labeled break and continue Statements

- •5.9 Logical Operators

- •Methods

- •6.2 Program Modules in Java

- •6.7 Java API Packages

- •6.13 Example Using Recursion: The Fibonacci Series

- •6.16 Methods of Class JApplet

- •Class Operations

- •Arrays

- •7.6 Passing Arrays to Methods

- •7.8 Searching Arrays: Linear Search and Binary Search

- •Collaboration Among Objects

- •8.2 Implementing a Time Abstract Data Type with a Class

- •8.3 Class Scope

- •8.4 Controlling Access to Members

- •8.5 Creating Packages

- •8.7 Using Overloaded Constructors

- •8.9 Software Reusability

- •8.10 Final Instance Variables

- •Classes

- •8.16 Data Abstraction and Encapsulation

- •9.2 Superclasses and Subclasses

- •9.5 Constructors and Finalizers in Subclasses

- •Conversion

- •9.11 Type Fields and switch Statements

- •9.14 Abstract Superclasses and Concrete Classes

- •9.17 New Classes and Dynamic Binding

- •9.18 Case Study: Inheriting Interface and Implementation

- •9.19 Case Study: Creating and Using Interfaces

- •9.21 Notes on Inner Class Definitions

- •Strings and Characters

- •10.2 Fundamentals of Characters and Strings

- •10.21 Card Shuffling and Dealing Simulation

- •Handling

- •Graphics and Java2D

- •11.2 Graphics Contexts and Graphics Objects

- •11.5 Drawing Lines, Rectangles and Ovals

- •11.9 Java2D Shapes

- •12.12 Adapter Classes

- •Cases

- •13.3 Creating a Customized Subclass of JPanel

- •Applications

- •Controller

- •Exception Handling

- •14.6 Throwing an Exception

- •14.7 Catching an Exception

- •Multithreading

- •15.3 Thread States: Life Cycle of a Thread

- •15.4 Thread Priorities and Thread Scheduling

- •15.5 Thread Synchronization

- •15.9 Daemon Threads

- •Multithreading

- •Design Patterns

- •Files and Streams

- •16.2 Data Hierarchy

- •16.3 Files and Streams

- •Networking

- •17.2 Manipulating URIs

- •17.3 Reading a File on a Web Server

- •17.4 Establishing a Simple Server Using Stream Sockets

- •17.5 Establishing a Simple Client Using Stream Sockets

- •17.9 Security and the Network

- •18.2 Loading, Displaying and Scaling Images

- •18.3 Animating a Series of Images

- •18.5 Image Maps

- •18.6 Loading and Playing Audio Clips

- •18.7 Internet and World Wide Web Resources

- •Data Structures

- •19.4 Linked Lists

- •20.8 Bit Manipulation and the Bitwise Operators

- •Collections

- •21.8 Maps

- •21.9 Synchronization Wrappers

- •21.10 Unmodifiable Wrappers

- •22.2 Playing Media

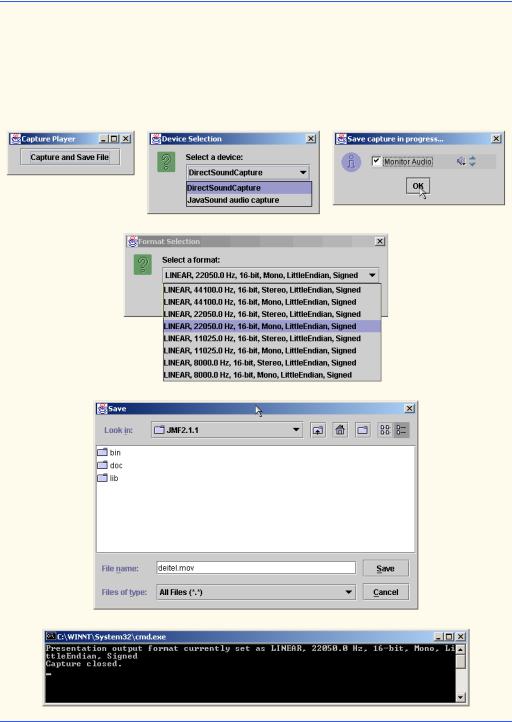

- •22.3 Formatting and Saving Captured Media

- •22.5 Java Sound

- •22.8 Internet and World Wide Web Resources

- •Hexadecimal Numbers

Chapter 22 |

Java Media Framework and Java Sound (on CD) |

1249 |

Performance Tip 22.1

Starting the Player takes less time if the Player has already prefetched the media before invoking start.

When the media clip ends, the Player generates a ControllerEvent of type EndOfMediaEvent. Most media players “rewind” the media clip after reaching the end so users can see or hear it again from the beginning. Method endOfMedia (lines 249–253) handles the EndOfMediaEvent and resets the media clip to its beginning position by invoking Player method setMediaTime with a new Time (package javax.media) of 0 (line 251). Method setMediaTime sets the position of the media to a specific time location in the media, and is useful for “jumping” to a different part of the media. Line 252 invokes Player method stop, which ends media processing and places the Player in state Stopped. Invoking method start on a Stopped Player that has not been closed resumes media playback.

Often, it is desirable to configure the media before presentation. In the next section, we discuss interface Processor, which has more configuration capabilities than interface Player. Processors enable a program to format media and to save it to a file.

22.3 Formatting and Saving Captured Media

The Java Media Framework supports playing and saving media from capture devices such as microphones and video cameras. This type of media is known as captured media. Capture devices convert analog media into digitized media. For example, a program that captures an analog voice from a microphone attached to a computer can create a digitized file from that recording.

The JMF can access video capture devices that use Video for Windows drivers. Also, JMF supports audio capture devices that use the Windows Direct Sound Interface or the Java Sound Interface. The Video for Windows driver provides interfaces that enable Windows applications to access and process media from video devices. Similarly, Direct Sound and Java Sound are interfaces that enable applications to access sound devices such as hardware sound cards. The Solaris Performance Pack provides support for Java Sound and Sun Video capture devices on the Solaris platform. For a complete list of devices supported by JMF, visit JMF’s official Web site.

The SimplePlayer application presented in Fig. 22.1 allowed users to play media from a capture device. A locator string specifies the location of a capture device that the SimplePlayer demo accesses. For example, to test the SimplePlayer’s capturing capabilities, plug a microphone into a sound card’s microphone input jack. Typing the locator string javasound:// in the Open Location input dialog specifies that media should be input from the Java Sound-enabled capture device. The locator string initializes the MediaLocator that the Player needs for the audio material captured from the microphone.

Although SimplePlayer provides access to capture devices, it does not format the media or save captured data. Figure 22.2 presents a program that accomplishes these two new tasks. Class CapturePlayer provides more control over media properties via class DataSource (package javax.media.protocol). Class DataSource provides the connection to the media source, then abstracts that connection to allow users to manipulate it. This program uses a DataSource to format the input and output media. The DataSource passes the formatted output media to a Controller, which will format it further so that it can be saved to a file. The Controller that handles media is a Processor, which extends interface Player. Finally, an object that implements interface DataSink saves the captured, formatted media. The Processor object handles the flow of data from the DataSource to the DataSink object.

JMF and Java Sound use media sources extensively, so programmers must understand the arrangement of data in the media. The header on a media source specifies the media format and other essential information needed to play the media. The media content usually consists of tracks of data, similar to tracks of music on a CD. Media sources may have one or more tracks that contain a variety of data. For example, a movie clip may contain one track for video, one track for audio, and a third track for closed-captioning data for the hearing-impaired.

    1    // Fig. 22.2: CapturePlayer.java
    2    // Presents and saves captured media
    3
    4    // Java core packages
    5    import java.awt.*;
    6    import java.awt.event.*;
    7    import java.io.*;
    8    import java.util.*;
    9
    10   // Java extension packages
    11   import javax.swing.*;
    12   import javax.swing.event.*;
    13   import javax.media.*;
    14   import javax.media.protocol.*;
    15   import javax.media.format.*;
    16   import javax.media.control.*;
    17   import javax.media.datasink.*;
    18
    19   public class CapturePlayer extends JFrame {
    20
    21      // capture and save button
    22      private JButton captureButton;
    23
    24      // component for save capture GUI
    25      private Component saveProgress;
    26
    27      // formats of device's media, user-chosen format
    28      private Format formats[], selectedFormat;
    29
    30      // controls of device's media formats
    31      private FormatControl formatControls[];
    32
    33      // specification information of device
    34      private CaptureDeviceInfo deviceInfo;
    35
    36      // vector containing all devices' information
    37      private Vector deviceList;
    38

Fig. 22.2 Formatting and saving media from capture devices (part 1 of 9).


    39      // input and output data sources
    40      private DataSource inSource, outSource;
    41
    42      // file writer for captured media
    43      private DataSink dataSink;
    44
    45      // processor to render and save captured media
    46      private Processor processor;
    47
    48      // constructor for CapturePlayer
    49      public CapturePlayer()
    50      {
    51         super( "Capture Player" );
    52
    53         // panel containing buttons
    54         JPanel buttonPanel = new JPanel();
    55         getContentPane().add( buttonPanel );
    56
    57         // button for accessing and initializing capture devices
    58         captureButton = new JButton( "Capture and Save File" );
    59         buttonPanel.add( captureButton, BorderLayout.CENTER );
    60
    61         // register an ActionListener for captureButton events
    62         captureButton.addActionListener( new CaptureHandler() );
    63
    64         // turn on light rendering to enable compatibility
    65         // with lightweight GUI components
    66         Manager.setHint( Manager.LIGHTWEIGHT_RENDERER,
    67            Boolean.TRUE );
    68
    69         // register a WindowListener to frame events
    70         addWindowListener(
    71
    72            // anonymous inner class to handle WindowEvents
    73            new WindowAdapter() {
    74
    75               // dispose processor
    76               public void windowClosing(
    77                  WindowEvent windowEvent )
    78               {
    79                  if ( processor != null )
    80                     processor.close();
    81               }
    82
    83            }  // end WindowAdapter
    84
    85         ); // end call to method addWindowListener
    86
    87      }  // end constructor
    88
    89      // action handler class for setting up device
    90      private class CaptureHandler implements ActionListener {

Fig. 22.2 Formatting and saving media from capture devices (part 2 of 9).


    91
    92         // initialize and configure capture device
    93         public void actionPerformed( ActionEvent actionEvent )
    94         {
    95            // put available devices' information into vector
    96            deviceList =
    97               CaptureDeviceManager.getDeviceList( null );
    98
    99            // if no devices found, display error message
    100           if ( ( deviceList == null ) ||
    101              ( deviceList.size() == 0 ) ) {
    102
    103              showErrorMessage( "No capture devices found!" );
    104
    105              return;
    106           }
    107
    108           // array of device names
    109           String deviceNames[] = new String[ deviceList.size() ];
    110
    111           // store all device names into array of
    112           // string for display purposes
    113           for ( int i = 0; i < deviceList.size(); i++ ){
    114
    115              deviceInfo =
    116                 ( CaptureDeviceInfo ) deviceList.elementAt( i );
    117
    118              deviceNames[ i ] = deviceInfo.getName();
    119           }
    120
    121           // get vector index of selected device
    122           int selectDeviceIndex =
    123              getSelectedDeviceIndex( deviceNames );
    124
    125           if ( selectDeviceIndex == -1 )
    126              return;
    127
    128           // get device information of selected device
    129           deviceInfo = ( CaptureDeviceInfo )
    130              deviceList.elementAt( selectDeviceIndex );
    131
    132           formats = deviceInfo.getFormats();
    133
    134           // if previous capture device opened, disconnect it
    135           if ( inSource != null )
    136              inSource.disconnect();
    137
    138           // obtain device and set its format
    139           try {
    140
    141              // create data source from MediaLocator of device
    142              inSource = Manager.createDataSource(
    143                 deviceInfo.getLocator() );
    144

Fig. 22.2 Formatting and saving media from capture devices (part 3 of 9).


    145              // get format setting controls for device
    146              formatControls = ( ( CaptureDevice )
    147                 inSource ).getFormatControls();
    148
    149              // get user's desired device format setting
    150              selectedFormat = getSelectedFormat( formats );
    151
    152              if ( selectedFormat == null )
    153                 return;
    154
    155              setDeviceFormat( selectedFormat );
    156
    157              captureSaveFile();
    158
    159           }  // end try
    160
    161           // unable to find DataSource from MediaLocator
    162           catch ( NoDataSourceException noDataException ) {
    163              noDataException.printStackTrace();
    164           }
    165
    166           // device connection error
    167           catch ( IOException ioException ) {
    168              ioException.printStackTrace();
    169           }
    170
    171        }  // end method actionPerformed
    172
    173     }  // end inner class CaptureHandler
    174
    175     // set output format of device-captured media
    176     public void setDeviceFormat( Format currentFormat )
    177     {
    178        // set desired format through all format controls
    179        for ( int i = 0; i < formatControls.length; i++ ) {
    180
    181           // make sure format control is configurable
    182           if ( formatControls[ i ].isEnabled() ) {
    183
    184              formatControls[ i ].setFormat( currentFormat );
    185
    186              System.out.println (
    187                 "Presentation output format currently set as " +
    188                 formatControls[ i ].getFormat() );
    189           }
    190
    191        }  // end for loop
    192     }
    193
    194     // get selected device vector index
    195     public int getSelectedDeviceIndex( String[] names )
    196     {

Fig. 22.2 Formatting and saving media from capture devices (part 4 of 9).


    197        // get device name from dialog box of device choices
    198        String name = ( String ) JOptionPane.showInputDialog(
    199           this, "Select a device:", "Device Selection",
    200           JOptionPane.QUESTION_MESSAGE,
    201           null, names, names[ 0 ] );
    202
    203        // if format selected, get index of name in array names
    204        if ( name != null )
    205           return Arrays.binarySearch( names, name );
    206
    207        // else return bad selection value
    208        else
    209           return -1;
    210     }
    211
    212     // return user-selected format for device
    213     public Format getSelectedFormat( Format[] showFormats )
    214     {
    215        return ( Format ) JOptionPane.showInputDialog( this,
    216           "Select a format: ", "Format Selection",
    217           JOptionPane.QUESTION_MESSAGE,
    218           null, showFormats, null );
    219     }
    220
    221     // pop up error messages
    222     public void showErrorMessage( String error )
    223     {
    224        JOptionPane.showMessageDialog( this, error, "Error",
    225           JOptionPane.ERROR_MESSAGE );
    226     }
    227
    228     // get desired file for saved captured media
    229     public File getSaveFile()
    230     {
    231        JFileChooser fileChooser = new JFileChooser();
    232
    233        fileChooser.setFileSelectionMode(
    234           JFileChooser.FILES_ONLY );
    235        int result = fileChooser.showSaveDialog( this );
    236
    237        if ( result == JFileChooser.CANCEL_OPTION )
    238           return null;
    239
    240        else
    241           return fileChooser.getSelectedFile();
    242     }
    243
    244     // show saving monitor of captured media
    245     public void showSaveMonitor()
    246     {

Fig. 22.2 Formatting and saving media from capture devices (part 5 of 9).


    247        // show saving monitor dialog
    248        int result = JOptionPane.showConfirmDialog( this,
    249           saveProgress, "Save capture in progress...",
    250           JOptionPane.DEFAULT_OPTION,
    251           JOptionPane.INFORMATION_MESSAGE );
    252
    253        // terminate saving if user presses "OK" or closes dialog
    254        if ( ( result == JOptionPane.OK_OPTION ) ||
    255           ( result == JOptionPane.CLOSED_OPTION ) ) {
    256
    257           processor.stop();
    258           processor.close();
    259
    260           System.out.println ( "Capture closed." );
    261        }
    262     }
    263
    264     // process captured media and save to file
    265     public void captureSaveFile()
    266     {
    267        // array of desired saving formats supported by tracks
    268        Format outFormats[] = new Format[ 1 ];
    269
    270        outFormats[ 0 ] = selectedFormat;
    271
    272        // file output format
    273        FileTypeDescriptor outFileType =
    274           new FileTypeDescriptor( FileTypeDescriptor.QUICKTIME );
    275
    276        // set up and start processor and monitor capture
    277        try {
    278
    279           // create processor from processor model
    280           // of specific data source, track output formats,
    281           // and file output format
    282           processor = Manager.createRealizedProcessor(
    283              new ProcessorModel( inSource, outFormats,
    284                 outFileType ) );
    285
    286           // try to make a data writer for media output
    287           if ( !makeDataWriter() )
    288              return;
    289
    290           // call start on processor to start captured feed
    291           processor.start();
    292
    293           // get monitor control for capturing and encoding
    294           MonitorControl monitorControl =
    295              ( MonitorControl ) processor.getControl(
    296              "javax.media.control.MonitorControl" );
    297
    298           // get GUI component of monitoring control
    299           saveProgress = monitorControl.getControlComponent();

Fig. 22.2 Formatting and saving media from capture devices (part 6 of 9).


    300
    301           showSaveMonitor();
    302
    303        }  // end try
    304
    305        // no processor could be found for specific
    306        // data source
    307        catch ( NoProcessorException processorException ) {
    308           processorException.printStackTrace();
    309        }
    310
    311        // unable to realize through
    312        // createRealizedProcessor method
    313        catch ( CannotRealizeException realizeException ) {
    314           realizeException.printStackTrace();
    315        }
    316
    317        // device connection error
    318        catch ( IOException ioException ) {
    319           ioException.printStackTrace();
    320        }
    321
    322     }  // end method captureSaveFile
    323
    324     // method initializing media file writer
    325     public boolean makeDataWriter()
    326     {
    327        File saveFile = getSaveFile();
    328
    329        if ( saveFile == null )
    330           return false;
    331
    332        // get output data source from processor
    333        outSource = processor.getDataOutput();
    334
    335        if ( outSource == null ) {
    336           showErrorMessage( "No output from processor!" );
    337           return false;
    338        }
    339
    340        // start data writing process
    341        try {
    342
    343           // create new MediaLocator from saveFile URL
    344           MediaLocator saveLocator =
    345              new MediaLocator ( saveFile.toURL() );
    346
    347           // create DataSink from output data source
    348           // and user-specified save destination file
    349           dataSink = Manager.createDataSink(
    350              outSource, saveLocator );
    351

Fig. 22.2 Formatting and saving media from capture devices (part 7 of 9).


    352           // register a DataSinkListener for DataSinkEvents
    353           dataSink.addDataSinkListener(
    354
    355              // anonymous inner class to handle DataSinkEvents
    356              new DataSinkListener () {
    357
    358                 // if end of media, close data writer
    359                 public void dataSinkUpdate(
    360                    DataSinkEvent dataEvent )
    361                 {
    362                    // if capturing stopped, close DataSink
    363                    if ( dataEvent instanceof EndOfStreamEvent )
    364                       dataSink.close();
    365                 }
    366
    367              }  // end DataSinkListener
    368
    369           ); // end call to method addDataSinkListener
    370
    371           // start saving
    372           dataSink.open();
    373           dataSink.start();
    374
    375        }  // end try
    376
    377        // DataSink could not be found for specific
    378        // save file and data source
    379        catch ( NoDataSinkException noDataSinkException ) {
    380           noDataSinkException.printStackTrace();
    381           return false;
    382        }
    383
    384        // violation while accessing MediaLocator
    385        // destination
    386        catch ( SecurityException securityException ) {
    387           securityException.printStackTrace();
    388           return false;
    389        }
    390
    391        // problem opening and starting DataSink
    392        catch ( IOException ioException ) {
    393           ioException.printStackTrace();
    394           return false;
    395        }
    396
    397        return true;
    398
    399     }  // end method makeDataWriter
    400
    401     // main method
    402     public static void main( String args[] )
    403     {
    404        CapturePlayer testPlayer = new CapturePlayer();

Fig. 22.2 Formatting and saving media from capture devices (part 8 of 9).


    405
    406        testPlayer.setSize( 200, 70 );
    407        testPlayer.setLocation( 300, 300 );
    408        testPlayer.setDefaultCloseOperation( EXIT_ON_CLOSE );
    409        testPlayer.setVisible( true );
    410     }
    411
    412  }  // end class CapturePlayer

Fig. 22.2 Formatting and saving media from capture devices (part 9 of 9).


Class CapturePlayer (Fig. 22.2) illustrates capturing media, setting media formats and saving media from capture devices supported by JMF. The simplest test of the program uses a microphone for audio input. Initially, the GUI has only one button, which users click to begin the configuration process. Users then select a capture device from a pull-down menu in a dialog. The next dialog box has options for the format of the capture device and file output. The third dialog box asks users to save the media to a specific file. The final dialog box provides a volume control and the option of monitoring the data. Monitoring allows users to hear or see the media as it is captured and saved, without modifying it in any way. Many media capture technologies offer monitoring capabilities. For example, many video recorders have a screen attachment that lets users see what the camera is capturing without looking through the viewfinder. In a recording studio, producers in another room can listen through headphones to live music as it is recorded. Monitoring data differs from playing data in that it does not change the format of the media or affect the data being sent to the Processor.

In the CapturePlayer program, lines 14–16 import the JMF Java extension packages javax.media.protocol, javax.media.format and javax.media.control, which contain classes and interfaces for media control and device formatting. Line 17 imports the JMF package javax.media.datasink, which contains classes for outputting formatted media. The program uses the classes and interfaces provided by these packages to obtain the desired capture device information, set the format of the capture device, create a Processor to handle the captured media data, create a DataSink to write the media data to a file and monitor the saving process.

CapturePlayer is capable of setting the media format. JMF provides class Format to describe the attributes of a media format, such as the sampling rate (which controls the quality of the sound) or whether the media should be in stereo or mono format. Each media format is encoded differently and can be played only with a media handler that supports its particular format. Line 28 declares an array of the Formats that the capture device supports and a Format reference for the user-selected format (selectedFormat).
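As a rough illustration of the attributes that a format object describes, the sketch below uses class AudioFormat from Java Sound (package javax.sound.sampled) rather than JMF's Format, so it runs without the JMF extension installed. The class name FormatSketch and the particular values (44.1 kHz, 16-bit samples) are assumptions chosen for the example; they are not settings taken from CapturePlayer.

```java
import javax.sound.sampled.AudioFormat;

class FormatSketch {

    // 44.1 kHz, 16-bit samples, two channels (stereo), signed, little-endian
    static AudioFormat cdStereo() {
        return new AudioFormat(44100.0f, 16, 2, true, false);
    }

    // same sampling rate and sample size, but one channel (mono)
    static AudioFormat cdMono() {
        return new AudioFormat(44100.0f, 16, 1, true, false);
    }

    public static void main(String[] args) {
        AudioFormat stereo = cdStereo();
        AudioFormat mono = cdMono();

        // print the attributes that each format object describes
        System.out.println(stereo.getSampleRate() + " Hz, "
            + stereo.getChannels() + " channels, "
            + stereo.getFrameSize() + " bytes per frame");
        System.out.println(mono.getSampleRate() + " Hz, "
            + mono.getChannels() + " channel, "
            + mono.getFrameSize() + " bytes per frame");
    }
}
```

The two formats differ only in channel count, which is why a stereo frame occupies twice as many bytes as a mono frame.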

After obtaining the Format objects, the program needs access to the formatting controls of the capture device. Line 31 declares an array to hold the FormatControls, through which class CapturePlayer sets the desired Format for the media source. The CaptureDeviceInfo reference deviceInfo (line 34) stores the information for a single capture device; the program obtains it from deviceList (line 37), a Vector that holds the information for all available devices.

Class DataSource connects programs to media data sources, such as capture devices. The SimplePlayer of Figure 22.1 accessed a DataSource object by invoking Manager method createPlayer, passing in a MediaLocator. However, class CapturePlayer accesses the DataSource directly. Line 40 declares two DataSources—inSource connects to the capture device’s media and outSource connects to the output data source to which the captured media will be saved.

An object that implements interface Processor provides the primary function that controls and processes the flow of media data in class CapturePlayer (line 46). The class also creates an object that implements interface DataSink to write the captured data to a file (line 43).

Clicking the Capture and Save File button configures the capture device by invoking method actionPerformed (lines 93–171) in private inner class CaptureHandler (lines 90–173). An instance of inner class CaptureHandler is registered to listen for ActionEvents from captureButton (line 62). The program provides users with a list of available capture devices when lines 96–97 invoke CaptureDeviceManager static method getDeviceList. Method getDeviceList obtains all of the computer’s available devices that support the specified Format. Specifying null as the Format parameter returns a complete list of available devices. Class CaptureDeviceManager enables a program to access this list of devices.
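Java Sound offers a comparable way to enumerate devices. The following minimal sketch collects the names of the installed mixers with AudioSystem.getMixerInfo, much as CapturePlayer collects capture-device names for display. It is an analogy only: it does not use CaptureDeviceManager, and the class name MixerNames and method name list are hypothetical.

```java
import javax.sound.sampled.AudioSystem;
import javax.sound.sampled.Mixer;

class MixerNames {

    // collect the name of every mixer that Java Sound reports;
    // the array may be empty on a machine with no audio hardware
    static String[] list() {
        Mixer.Info[] infos = AudioSystem.getMixerInfo();
        String[] names = new String[infos.length];

        for (int i = 0; i < infos.length; i++)
            names[i] = infos[i].getName();

        return names;
    }

    public static void main(String[] args) {
        for (String name : list())
            System.out.println(name);
    }
}
```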

Lines 109–119 copy the names of all capture devices into a String array (deviceNames) for display purposes. Lines 122–123 invoke CapturePlayer method getSelectedDeviceIndex (lines 195–210) to show a selector dialog with a list of all the device names stored in array deviceNames. The method call to showInputDialog (lines 198–201) has a different parameter list than earlier examples. The first four parameters are the dialog’s parent component, message, title and message type, as in earlier chapters. The final three, which are new, specify the icon (in this case, null), the list of values presented to the user (deviceNames) and the default selection (the first element of deviceNames). Once users select a device, the dialog returns the selected name as a String, and the method returns the integer index of that name in deviceNames (line 205). This index identifies the parallel element in deviceList. That element, an instance of CaptureDeviceInfo, provides the information needed to create and configure the device from which the desired media can be recorded.
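One caveat about line 205: Arrays.binarySearch assumes that its array argument is sorted, and its result is undefined otherwise. Nothing in the listing sorts the array of device names, so the returned index is reliable only if the devices happen to be listed in ascending name order. A minimal sketch of an order-independent alternative follows; the class DeviceNameLookup, method indexOf and the sample device names are hypothetical and are not part of Fig. 22.2.

```java
class DeviceNameLookup {

    // linear search: returns the index of name in names, or -1 if absent;
    // unlike binary search, it does not require names to be sorted
    static int indexOf(String[] names, String name) {
        for (int i = 0; i < names.length; i++)
            if (names[i].equals(name))
                return i;

        return -1;
    }

    public static void main(String[] args) {
        // hypothetical device names, deliberately not in sorted order
        String[] names =
            { "vfw:0", "JavaSound audio capture", "DirectSoundCapture" };

        System.out.println(indexOf(names, "DirectSoundCapture"));
        System.out.println(indexOf(names, "no such device"));
    }
}
```

A linear search costs more comparisons than a binary search, but a list of capture devices is short, so correctness on unsorted input matters more here than speed.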

A CaptureDeviceInfo object encapsulates the information that the program needs to access and configure a capture device, such as location and format preferences. Methods getLocator (line 143) and getFormats (line 132) provide access to these pieces of information. Lines 129–130 retrieve from deviceList the CaptureDeviceInfo for the device that the user selected. Next, lines 135–136 call inSource’s disconnect method to disengage any previously opened capture device before connecting the new device.

Lines 142–143 invoke Manager method createDataSource to obtain the DataSource object that connects to the capture device’s media source, passing in the capture device’s MediaLocator object as an argument. CaptureDeviceInfo method getLocator returns the capture device’s MediaLocator. Method createDataSource in turn invokes DataSource method connect which establishes a connection with the capture device. Method createDataSource throws a NoDataSourceException if it cannot locate a DataSource for the capture device. An IOException occurs if there is an error opening the device.

Before capturing the media, the program needs to format the DataSource as specified by the user in the Format Selection dialog. Lines 146–147 use CaptureDevice method getFormatControls to obtain an array of FormatControls for DataSource inSource. An object that implements interface FormatControl specifies the format of the DataSource. DataSource objects can represent media sources other than capture devices, so for this example the cast operator in line 146 manipulates object inSource as a CaptureDevice and accesses capture device methods such as getFormatControls. Line 150 invokes method getSelectedFormat (lines 213–219) to display an input dialog from which users can select one of the available formats. Line 155 calls method setDeviceFormat (lines 176–192) to set the media output format for the device to the user-selected Format. Each capture device can have several FormatControls, so setDeviceFormat uses FormatControl method setFormat to specify the format for each enabled FormatControl object.


Formatting the DataSource completes the configuration of the capture device. A Processor (object processor) converts the data to the file format in which it will be saved. The Processor works as a connector between inSource and outSource, because DataSource inSource does not play or save the media; it serves only to configure the capture device. Line 157 invokes method captureSaveFile (lines 265–322) to perform the steps needed to save the captured media in a recognizable file format.

To create a Processor, this program first creates a ProcessorModel, a template for the Processor. The ProcessorModel determines the attributes of a Processor through a collection of information that includes a DataSource or MediaLocator, the desired formats for the media tracks that the Processor will handle, and a ContentDescriptor indicating the output content type. Line 268 creates a new Format array (outFormats) that represents the desired formats for the tracks in the media. Line 270 stores the user-selected format (selectedFormat) in the first element of the array. To save the captured output to a file, the Processor must first convert the data it receives to a file-enabled format. Lines 273–274 create a new QuickTime FileTypeDescriptor (package javax.media.protocol) that describes the content type of the Processor’s output and assign it to outFileType. Lines 283–284 use the DataSource inSource, the array outFormats and the file type outFileType to instantiate a new ProcessorModel.

Generally, Processors need to be configured before they can process media, but the one in this application does not since lines 282–284 invoke Manager method createRealizedProcessor. This method creates a configured, realized Processor based on the ProcessorModel object passed in as an argument. Method createRealizedProcessor throws a NoProcessorException if the program cannot locate a Processor for the media or if JMF does not support the media type. The method throws a CannotRealizeException if the Processor cannot be realized. This may occur if another program is already using the media, thus blocking communication with the media source.

Common Programming Error 22.1

Be careful when specifying track formats. Incompatible formats for specific output file types prevent the program from realizing the Processor.

Software Engineering Observation 22.2

Recall that the Processor transitions through several states before being realized. Manager method createProcessor allows a program to provide more customized configuration before a Processor is realized.

Performance Tip 22.2

When method createRealizedProcessor configures the Processor, the method blocks until the Processor is realized. This may prevent other parts of the program from executing. In some cases, using a ControllerListener to respond to ControllerEvents may enable a program to operate more efficiently. When the Processor is realized, the listener is notified so the program can begin processing the media.
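The difference between blocking until realization completes and being notified when it completes can be sketched with the JDK alone. The following is a minimal model, not JMF code: class SlowResource and its methods are hypothetical names standing in for a Processor that takes time to realize, and a CompletableFuture callback plays the role of the ControllerListener.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for a media processor that takes time to realize.
final class SlowResource {

   // Blocking style: the caller waits until realization finishes.
   static String realizeBlocking() {
      try {
         TimeUnit.MILLISECONDS.sleep( 50 ); // simulated setup work
      }
      catch ( InterruptedException interrupted ) {
         Thread.currentThread().interrupt();
      }

      return "Realized";
   }

   // Listener style: realization runs on another thread and the
   // returned future completes when the work is done.
   static CompletableFuture< String > realizeAsync() {
      return CompletableFuture.supplyAsync( SlowResource::realizeBlocking );
   }

   public static void main( String[] args ) {
      CompletableFuture< String > pending = realizeAsync();

      // the calling thread is free to do other work here
      System.out.println( "Setting up GUI while realizing..." );

      // the callback plays the role of method realizeComplete
      pending.thenAccept( state ->
         System.out.println( "Notified: " + state ) ).join();
   }
}
```

In this sketch the calling thread continues immediately after realizeAsync returns, which is the behavior a ControllerListener is meant to provide.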

Having obtained media data in a file format from the Processor, the program can make a “data writer” to write the media output to a file. An object that implements interface DataSink enables media data to be output to a specific location—most commonly a file.

1262 |

Java Media Framework and Java Sound (on CD) |

Chapter 22 |

Line 287 invokes method makeDataWriter (lines 325–399) to create a DataSink object that can save the file. Manager method createDataSink requires the DataSource of the Processor and the MediaLocator for the new file as arguments. Within makeDataWriter, the program invokes method getSaveFile (lines 229–242) to prompt users to specify the name and location of the file to which the media should be saved. The File object saveFile stores the information. Processor method getDataOutput (line 333) returns the DataSource through which the Processor outputs the media. Lines 344–345 create a new MediaLocator for saveFile. Using this MediaLocator and the DataSource, lines 349–350 create a DataSink object that writes the output media from the DataSource to the file specified by the MediaLocator. Method createDataSink throws a NoDataSinkException if it cannot create a DataSink that can read data from the DataSource and output the data to the location specified by the MediaLocator. This failure may occur as a result of invalid media or an invalid MediaLocator.

The program needs to know when to stop outputting data, so lines 353–369 register a DataSinkListener to listen for DataSinkEvents. DataSinkListener method dataSinkUpdate (lines 359–365) is called when each DataSinkEvent occurs. If the DataSinkEvent is an EndOfStreamEvent, indicating that the Processor has been closed because the capture stream connection has closed, line 364 closes the DataSink. Invoking DataSink method close stops the data transfer. A closed DataSink cannot be used again.
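The close-on-end-of-stream pattern described above can be modeled in a few lines. This is a simplified sketch under stated assumptions: class ModelSink, its Listener interface and the END_OF_STREAM constant are hypothetical and do not reproduce the JMF DataSink interface.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a sink that reports events to listeners;
// it is not the JMF DataSink interface.
final class ModelSink {

   interface Listener {
      void sinkUpdate( String event );
   }

   static final String END_OF_STREAM = "EndOfStream";

   private final List< Listener > listeners = new ArrayList<>();
   private final StringBuilder written = new StringBuilder();
   private boolean closed = false;

   void addListener( Listener listener ) { listeners.add( listener ); }

   // accept data until the sink is closed
   void write( String chunk ) {
      if ( closed )
         throw new IllegalStateException( "sink is closed" );

      written.append( chunk );
   }

   // signal that the source has no more data
   void endOfStream() {
      for ( Listener listener : new ArrayList<>( listeners ) )
         listener.sinkUpdate( END_OF_STREAM );
   }

   void close() { closed = true; }

   boolean isClosed() { return closed; }

   String contents() { return written.toString(); }

   public static void main( String[] args ) {
      ModelSink sink = new ModelSink();

      // close the sink as soon as the end of the stream is reported
      sink.addListener( event -> {
         if ( END_OF_STREAM.equals( event ) )
            sink.close();
      } );

      sink.write( "frame1 " );
      sink.write( "frame2" );
      sink.endOfStream();

      System.out.println( sink.contents() + " closed=" + sink.isClosed() );
   }
}
```

As in the text, a closed sink in this model rejects further writes, so the listener is the single place that decides when output ends.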

Common Programming Error 22.2

The media file output with a DataSink will be corrupted if the DataSink is not closed properly.
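The same concern applies to ordinary file output in Java. As a general illustration (plain java.nio, not a JMF DataSink), the sketch below uses a try-with-resources statement so that the output stream is closed even if a write fails. Class SafeWriter and its methods are hypothetical names chosen for this example.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper showing guaranteed closing of an output file.
final class SafeWriter {

   // write all chunks to target and return the resulting file size
   static long writeChunks( Path target, byte[][] chunks ) {
      try {
         try ( OutputStream out = Files.newOutputStream( target ) ) {
            for ( byte[] chunk : chunks )
               out.write( chunk );
         } // out is closed here, even if a write throws

         return Files.size( target );
      }
      catch ( IOException ioException ) {
         throw new UncheckedIOException( ioException );
      }
   }

   // write three bytes to a temporary file and report its size
   static long demo() {
      try {
         Path target = Files.createTempFile( "capture", ".bin" );
         long size = writeChunks( target, new byte[][] { { 1, 2 }, { 3 } } );
         Files.delete( target );
         return size;
      }
      catch ( IOException ioException ) {
         throw new UncheckedIOException( ioException );
      }
   }

   public static void main( String[] args ) {
      System.out.println( "Bytes written: " + demo() );
   }
}
```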

Software Engineering Observation 22.3

Captured media may not yield an EndOfMediaEvent if the media’s endpoint cannot be determined.

After setting up the DataSink and registering its listener, line 372 calls DataSink method open to connect the DataSink to the destination that the MediaLocator specifies. Method open throws a SecurityException if the DataSink attempts to write to a destination for which the program does not have write permission, such as a read-only file.

Line 373 calls DataSink method start to initiate data transfer. At this point, the program returns from method makeDataWriter back to method captureSaveFile (lines 265–322). Although the DataSink prepares itself to receive the transfer and indicates that it is ready by calling start, the transfer does not actually take place until the Processor’s start method is called. The invocation of Processor method start begins the flow of data from the capture device, formats the data and transfers that data to the DataSink. The DataSink writes the media to a file, which completes the process performed by class CapturePlayer.

While Processor encodes the data and the DataSink saves it to a file, CapturePlayer monitors the process. Monitoring provides a method of overseeing data as the capture device collects it. Lines 294–296 obtain an object that implements interface MonitorControl (package javax.media.control) from the Processor by calling method getControl. Line 299 calls MonitorControl method getControlComponent to obtain the GUI component that displays the monitored media. MonitorControls typically have a checkbox to enable or disable displaying media. Also, audio devices have a volume control and video devices have a control for setting the preview frame rate. Line 301 invokes method showSaveMonitor (lines 245–261) to display the monitoring GUI in a dialog box. To terminate capturing, users can press the OK button or close the dialog box (lines 254–261). For some video capture devices, the Processor needs to be in a Stopped state to enable monitoring of the saving and capturing process.

Thus far, we have discussed JMF’s capabilities to access, present and save media content. Our final JMF example demonstrates how to send media between computers using JMF’s streaming capabilities.

22.4 RTP Streaming

Streaming media refers to media that is transferred from a server to a client in a continuous stream of bytes. The client can begin playing the media while still downloading the media from the server. Audio and video media files are often many megabytes in size. Live events, such as concerts or football game broadcasts, may have indeterminable sizes. Users could wait until a recording of a concert or game is posted, then download the entire recording. However, at today’s Internet connection speeds, downloading such a broadcast could take days and typically users prefer to listen to live broadcasts as they occur. Streaming media enables client applications to play media over the Internet or over a network without downloading the entire media file at once.

In a streaming media application, the client typically connects to a server that sends a stream of bytes containing the media back to the client. The client application buffers (i.e., stores locally) a portion of the media and begins playing once a certain portion has been received. The client continually buffers additional media, providing users with an uninterrupted clip, as long as network traffic does not prevent the client application from buffering additional bytes. With buffering, users experience the media within seconds of when the streaming begins, even though all of the material has not been received.
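The buffering behavior just described can be sketched as a small queue with a start threshold. This is an illustrative model only: class StreamBuffer, its methods and the threshold parameter are hypothetical, and real players use more elaborate policies.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical client-side buffer: playback starts once a threshold
// of chunks has arrived and pauses if the buffer runs dry.
final class StreamBuffer {

   private final Deque< byte[] > chunks = new ArrayDeque<>();
   private final int startThreshold;
   private boolean playing = false;

   StreamBuffer( int startThreshold ) {
      this.startThreshold = startThreshold;
   }

   // called when a chunk arrives from the network
   void receive( byte[] chunk ) {
      chunks.addLast( chunk );

      if ( !playing && chunks.size() >= startThreshold )
         playing = true;
   }

   // called by the player; returns null while still buffering
   byte[] nextChunkToPlay() {
      if ( !playing )
         return null;

      byte[] next = chunks.pollFirst();

      if ( chunks.isEmpty() )
         playing = false; // underrun: rebuffer before continuing

      return next;
   }

   boolean isPlaying() { return playing; }

   public static void main( String[] args ) {
      StreamBuffer buffer = new StreamBuffer( 2 );

      buffer.receive( new byte[] { 1 } );
      System.out.println( "Playing after 1 chunk: " + buffer.isPlaying() );

      buffer.receive( new byte[] { 2 } );
      System.out.println( "Playing after 2 chunks: " + buffer.isPlaying() );
   }
}
```

A larger threshold delays the start of playback but makes interruptions less likely when network traffic slows the arrival of new chunks.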

Performance Tip 22.3

Streaming media to a client enables the client to experience the media faster than if the client must wait for an entire media file to download.

Demand for real-time, robust multimedia is rising dramatically as the speed of Internet connections increases. Broadband Internet access, which provides high-speed network connections to home users, is becoming more popular, though the number of broadband users remains relatively small compared to the total number of Internet users. With faster connections, streaming media can provide a better multimedia experience. Users with slower connections can still experience the multimedia, though with lesser quality. The range of applications that use streaming media is growing. Applications that stream video clips to clients have expanded to provide real-time broadcast feeds. Thousands of radio stations stream music continuously over the Internet. Client applications such as RealPlayer have focused on streaming media content with live radio broadcasts. Applications are not limited to audio and video server-to-client streaming. For example, teleconferencing and video conferencing applications increase efficiency in everyday business by reducing the need for business people to travel great distances to attend meetings.


JMF provides a streaming media package that enables Java applications to send and receive streams of media in some of the formats discussed earlier in this chapter. For a complete list of formats, see the official JMF Web site:

java.sun.com/products/java-media/jmf/2.1.1/formats.html

JMF uses the industry-standard Real-Time Transport Protocol (RTP) to control media transmission. RTP is designed specifically to transmit real-time media data.

The two mechanisms for streaming RTP-supported media are passing it through a DataSink and buffering it. The easier mechanism to use is a DataSink, which writes the contents of a stream to a host destination (i.e., a client computer), via the same techniques shown in Fig. 22.2 to save captured media to a file. In this case, however, the destination MediaLocator’s URL would be specified in the following format:

rtp://host:port/contentType

where host is the IP address or host name of the server, port is the port number on which the server is streaming the media and contentType is either audio or video.
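The locator format can be checked with the standard java.net.URI class before it is handed to JMF. The following sketch assumes only the format given above. Class RtpLocator is a hypothetical helper, not part of JMF.

```java
import java.net.URI;

// Splits an RTP locator of the form rtp://host:port/contentType
// into its parts. RtpLocator is a hypothetical helper, not a JMF class.
final class RtpLocator {

   final String host;
   final int port;
   final String contentType;

   RtpLocator( String locator ) {
      URI uri = URI.create( locator );

      if ( !"rtp".equalsIgnoreCase( uri.getScheme() ) )
         throw new IllegalArgumentException(
            "not an RTP locator: " + locator );

      if ( uri.getHost() == null || uri.getPort() < 0 )
         throw new IllegalArgumentException(
            "host and port are required" );

      String path = uri.getPath(); // e.g., "/audio"
      String type = path.startsWith( "/" ) ? path.substring( 1 ) : path;

      if ( !type.equals( "audio" ) && !type.equals( "video" ) )
         throw new IllegalArgumentException(
            "contentType must be audio or video" );

      host = uri.getHost();
      port = uri.getPort();
      contentType = type;
   }

   public static void main( String[] args ) {
      RtpLocator locator = new RtpLocator( "rtp://127.0.0.1:4000/audio" );

      System.out.println(
         locator.host + " " + locator.port + " " + locator.contentType );
   }
}
```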

Using a DataSink as specified allows only one stream to be sent at a time. To send multiple streams (e.g., the separate video and audio tracks of a karaoke video) to multiple hosts, a server application must use RTP session managers. An RTPManager (package javax.media.rtp) provides more control over the streaming process, permitting specification of buffer sizes, error checking and streaming reports on the propagation delay (the time it takes for the data to reach its destination).

The program in Fig. 22.3 and Fig. 22.4 demonstrates streaming using the RTP session manager. This example supports sending multiple streams in parallel, so separate clients must be opened for each stream. This example does not show a client that can receive the RTP stream. The program in Fig. 22.1 (SimplePlayer) can test the RTP server by specifying an RTP session address

rtp://127.0.0.1:4000/audio

as the location SimplePlayer should open to begin playing audio. To execute the streaming media server on a different computer, replace 127.0.0.1 with either the IP address or host name of the server computer.

Fig. 22.3 Serving streaming media with RTP session managers.

  1  // Fig. 22.3: RTPServer.java
  2  // Provides configuration and sending capabilities
  3  // for RTP-supported media files
  4
  5  // Java core packages
  6  import java.io.*;
  7  import java.net.*;
  8
  9  // Java extension packages
 10  import javax.media.*;
 11  import javax.media.protocol.*;
 12  import javax.media.control.*;
 13  import javax.media.rtp.*;
 14  import javax.media.format.*;

 15
 16  public class RTPServer {
 17
 18     // IP address, file or medialocator name, port number
 19     private String ipAddress, fileName;
 20     private int port;
 21
 22     // processor controlling data flow
 23     private Processor processor;
 24
 25     // data output from processor to be sent
 26     private DataSource outSource;
 27
 28     // media tracks' configurable controls
 29     private TrackControl tracks[];
 30
 31     // RTP session manager
 32     private RTPManager rtpManager[];
 33
 34     // constructor for RTPServer
 35     public RTPServer( String locator, String ip, int portNumber )
 36     {
 37        fileName = locator;
 38        port = portNumber;
 39        ipAddress = ip;
 40     }
 41
 42     // initialize and set up processor
 43     // return true if successful, false if not
 44     public boolean beginSession()
 45     {
 46        // get MediaLocator from specific location
 47        MediaLocator mediaLocator = new MediaLocator( fileName );
 48
 49        if ( mediaLocator == null ) {
 50           System.err.println(
 51              "No MediaLocator found for " + fileName );
 52
 53           return false;
 54        }
 55
 56        // create processor from MediaLocator
 57        try {
 58           processor = Manager.createProcessor( mediaLocator );
 59
 60           // register a ControllerListener for processor
 61           // to listen for state and transition events
 62           processor.addControllerListener(
 63              new ProcessorEventHandler() );
 64
 65           System.out.println( "Processor configuring..." );
 66

 67           // configure processor before setting it up
 68           processor.configure();
 69        }
 70
 71        // source connection error
 72        catch ( IOException ioException ) {
 73           ioException.printStackTrace();
 74           return false;
 75        }
 76
 77        // exception thrown when no processor could
 78        // be found for specific data source
 79        catch ( NoProcessorException noProcessorException ) {
 80           noProcessorException.printStackTrace();
 81           return false;
 82        }
 83
 84        return true;
 85
 86     } // end method beginSession
 87
 88     // ControllerListener handler for processor
 89     private class ProcessorEventHandler
 90        extends ControllerAdapter {
 91
 92        // set output format and realize
 93        // configured processor
 94        public void configureComplete(
 95           ConfigureCompleteEvent configureCompleteEvent )
 96        {
 97           System.out.println( "\nProcessor configured." );
 98
 99           setOutputFormat();
100
101           System.out.println( "\nRealizing Processor...\n" );
102
103           processor.realize();
104        }
105
106        // start sending when processor is realized
107        public void realizeComplete(
108           RealizeCompleteEvent realizeCompleteEvent )
109        {
110           System.out.println(
111              "\nInitialization successful for " + fileName );
112
113           if ( transmitMedia() == true )
114              System.out.println( "\nTransmission setup OK" );
115
116           else
117              System.out.println( "\nTransmission failed." );
118        }
119

120        // stop RTP session when there is no media to send
121        public void endOfMedia( EndOfMediaEvent mediaEndEvent )
122        {
123           stopTransmission();
124           System.out.println ( "Transmission completed." );
125        }
126
127     } // end inner class ProcessorEventHandler
128
129     // set output format of all tracks in media
130     public void setOutputFormat()
131     {
132        // set output content type to RTP capable format
133        processor.setContentDescriptor(
134           new ContentDescriptor( ContentDescriptor.RAW_RTP ) );
135
136        // get all track controls of processor
137        tracks = processor.getTrackControls();
138
139        // supported RTP formats of a track
140        Format rtpFormats[];
141
142        // set each track to first supported RTP format
143        // found in that track
144        for ( int i = 0; i < tracks.length; i++ ) {
145
146           System.out.println( "\nTrack #" +
147              ( i + 1 ) + " supports " );
148
149           if ( tracks[ i ].isEnabled() ) {
150
151              rtpFormats = tracks[ i ].getSupportedFormats();
152
153              // if supported formats of track exist,
154              // display all supported RTP formats and set
155              // track format to be first supported format
156              if ( rtpFormats.length > 0 ) {
157
158                 for ( int j = 0; j < rtpFormats.length; j++ )
159                    System.out.println( rtpFormats[ j ] );
160
161                 tracks[ i ].setFormat( rtpFormats[ 0 ] );
162
163                 System.out.println ( "Track format set to " +
164                    tracks[ i ].getFormat() );
165              }
166
167              else
168                 System.err.println (
169                    "No supported RTP formats for track!" );
170
171           } // end if
172

173        } // end for loop
174
175     } // end method setOutputFormat
176
177     // send media with boolean success value
178     public boolean transmitMedia()
179     {
180        outSource = processor.getDataOutput();
181
182        if ( outSource == null ) {
183           System.out.println ( "No data source from media!" );
184
185           return false;
186        }
187
188        // rtp stream managers for each track
189        rtpManager = new RTPManager[ tracks.length ];
190
191        // destination and local RTP session addresses
192        SessionAddress localAddress, remoteAddress;
193
194        // RTP stream being sent
195        SendStream sendStream;
196
197        // IP address
198        InetAddress ip;
199
200        // initialize transmission addresses and send out media
201        try {
202
203           // transmit every track in media
204           for ( int i = 0; i < tracks.length; i++ ) {
205
206              // instantiate a RTPManager
207              rtpManager[ i ] = RTPManager.newInstance();
208
209              // add 2 to specify next control port number;
210              // (RTP Session Manager uses 2 ports)
211              port += ( 2 * i );
212
213              // get IP address of host from ipAddress string
214              ip = InetAddress.getByName( ipAddress );
215
216              // encapsulate pair of IP addresses for control and
217              // data with 2 ports into local session address
218              localAddress = new SessionAddress(
219                 ip.getLocalHost(), port );
220
221              // get remoteAddress session address
222              remoteAddress = new SessionAddress( ip, port );
223
224              // initialize the session
225              rtpManager[ i ].initialize( localAddress );

226
227              // open RTP session for destination
228              rtpManager[ i ].addTarget( remoteAddress );
229
230              System.out.println( "\nStarted RTP session: "
231                 + ipAddress + " " + port);
232
233              // create send stream in RTP session
234              sendStream =
235                 rtpManager[ i ].createSendStream( outSource, i );
236
237              // start sending the stream
238              sendStream.start();
239
240              System.out.println( "Transmitting Track #" +
241                 ( i + 1 ) + " ... " );
242
243           } // end for loop
244
245           // start media feed
246           processor.start();
247
248        } // end try
249
250        // unknown local or unresolvable remote address
251        catch ( InvalidSessionAddressException addressError ) {
252           addressError.printStackTrace();
253           return false;
254        }
255
256        // DataSource connection error
257        catch ( IOException ioException ) {
258           ioException.printStackTrace();
259           return false;
260        }
261
262        // format not set or invalid format set on stream source
263        catch ( UnsupportedFormatException formatException ) {
264           formatException.printStackTrace();
265           return false;
266        }
267
268        // transmission initialized successfully
269        return true;
270
271     } // end method transmitMedia
272
273     // stop transmission and close resources
274     public void stopTransmission()
275     {
276        if ( processor != null ) {
277

278           // stop processor
279           processor.stop();
280
281           // dispose processor
282           processor.close();
283
284           if ( rtpManager != null )
285
286              // close destination targets
287              // and dispose RTP managers
288              for ( int i = 0; i < rtpManager.length; i++ ) {
289
290                 // close streams to all destinations
291                 // with a reason for termination
292                 rtpManager[ i ].removeTargets(
293                    "Session stopped." );
294
295                 // release RTP session resources
296                 rtpManager[ i ].dispose();
297              }
298
299        } // end if
300
301        System.out.println ( "Transmission stopped." );
302
303     } // end method stopTransmission
304
305  } // end class RTPServer

Class RTPServer’s purpose is to stream media content. As in previous examples, the RTPServer (Fig. 22.3) sets up the media, processes and formats it, then outputs it. ControllerEvents and the various states of the streaming process drive this process. Processing of the media has three distinct parts—Processor initialization, Format configuration and data transmission. The code in this example contains numerous confirmation messages displayed to the command prompt and an emphasis on error checking. A problem during streaming will most likely end the entire process and it will need to be restarted.

To test RTPServer, class RTPServerTest (Fig. 22.4) creates a new RTPServer object and passes its constructor (lines 35–40) three arguments—a String representing the media’s location, a String representing the IP address of the client and a port number for streaming content. These arguments contain the information class RTPServer needs to obtain the media and set up the streaming process. Following the general approach outlined in SimplePlayer (Fig. 22.1) and CapturePlayer (Fig. 22.2), class RTPServer obtains a media source, configures the source through a type of Controller and outputs the data to a specified destination.

RTPServerTest calls RTPServer method beginSession (lines 44–86) to set up the Processor that controls the data flow. Line 47 creates a MediaLocator and initializes it with the media location stored in fileName. Line 58 creates a Processor for the data specified by that MediaLocator.


Unlike the program in Fig. 22.2, this Processor is not preconfigured by a Manager. Until class RTPServer configures and realizes the Processor, the media cannot be formatted. Lines 62–63 register a ProcessorEventHandler to react to the Processor’s ControllerEvents. The methods of class ProcessorEventHandler (lines 89–127) control the media setup as the Processor changes states. Line 68 invokes Processor method configure to place the Processor in the Configuring state. During configuration, the Processor queries the system and the media for the format information it needs to process the media correctly. A ConfigureCompleteEvent occurs when the Processor completes configuration. The ProcessorEventHandler method configureComplete (lines 94–104) responds to this transition. Method configureComplete calls method setOutputFormat (lines 130–175), then realizes the Processor (line 103). When line 99 invokes method setOutputFormat, it sets each media track to an RTP streaming media format. Lines 133–134 in method setOutputFormat specify the output content type by calling Processor method setContentDescriptor. The method takes as an argument a ContentDescriptor initialized with constant ContentDescriptor.RAW_RTP. The RTP output content type restricts the Processor to supporting only RTP-enabled media track formats. The Processor’s output content type should be set before the media tracks are configured.

Once the Processor is configured, the media track formats need to be set. Line 137 invokes Processor method getTrackControls to obtain an array that contains the corresponding TrackControl object (package javax.media.control) for each media track. For each enabled TrackControl, lines 144–173 obtain an array of all supported RTP media Formats (line 151), then set the first supported RTP format as the preferred RTP-streaming media format for that track (line 161). When method setOutputFormat returns, line 103 in method configureComplete realizes the Processor.
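The selection rule in method setOutputFormat (use the first supported format of each enabled track) can be illustrated without JMF. The sketch below is a simplified model: class FormatChooser, its method and the format names are hypothetical placeholders, and plain Strings stand in for Format objects.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of per-track format selection; Strings stand in
// for the Format objects that a TrackControl would report.
final class FormatChooser {

   // return the chosen format for each track, or null when the track
   // is disabled or reports no supported formats
   static List< String > chooseFormats(
      boolean[] enabled, String[][] supported )
   {
      List< String > chosen = new ArrayList<>();

      for ( int i = 0; i < supported.length; i++ ) {

         if ( enabled[ i ] && supported[ i ].length > 0 )
            chosen.add( supported[ i ][ 0 ] ); // first supported format

         else
            chosen.add( null );
      }

      return chosen;
   }

   public static void main( String[] args ) {
      boolean[] enabled = { true, false, true };
      String[][] supported =
         { { "formatA", "formatB" }, { "formatC" }, {} };

      System.out.println( chooseFormats( enabled, supported ) );
   }
}
```

Taking the first entry is the simplest policy. A program that prefers a particular encoding could search the array for that format instead.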

As with any controller realization, the Processor can output media as soon as it has finished realizing itself. When the Processor enters the Realized state, the ProcessorEventHandler invokes method realizeComplete (lines 107–118). Line 113 invokes method transmitMedia (lines 178–271), which creates the structures needed to transmit the media. This method obtains the DataSource from the Processor (line 180), then creates an array of RTPManagers that are able to start and control an RTP session (line 189). RTPManagers use a pair of SessionAddress objects with identical IP addresses, but different port numbers: one for stream control and one for streaming media data. An RTPManager receives each IP address and port number as a SessionAddress object. Line 192 declares the SessionAddress objects used in the streaming process. An object that implements interface SendStream (line 195) performs the RTP streaming.
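The event-driven setup sequence (configure, set the output format, realize, transmit) can be summarized as a small state machine. This is a minimal model, not JMF code: class SetupSequence is hypothetical, its enum constants mirror the Processor state names used in the text, and the transitions complete immediately instead of asynchronously.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the event-driven setup sequence.
final class SetupSequence {

   enum State { UNREALIZED, CONFIGURING, CONFIGURED, REALIZING, REALIZED }

   private State state = State.UNREALIZED;
   private final List< String > log = new ArrayList<>();

   // models calling configure and receiving the completion event
   void configure() {
      require( State.UNREALIZED );
      state = State.CONFIGURING;
      state = State.CONFIGURED;
      configureComplete();
   }

   // handler: choose output formats first, then realize
   private void configureComplete() {
      log.add( "setOutputFormat" );
      realize();
   }

   // models realization followed by the realizeComplete handler
   private void realize() {
      require( State.CONFIGURED );
      state = State.REALIZING;
      state = State.REALIZED;
      log.add( "transmitMedia" );
   }

   private void require( State expected ) {
      if ( state != expected )
         throw new IllegalStateException(
            "expected " + expected + " but was " + state );
   }

   State state() { return state; }

   List< String > log() { return log; }

   public static void main( String[] args ) {
      SetupSequence sequence = new SetupSequence();
      sequence.configure();
      System.out.println( sequence.state() + " " + sequence.log() );
   }
}
```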

Software Engineering Observation 22.4

For videos that have multiple tracks, each SendStream must have its own RTPManager managing its session. Each Track has its own SendStream.

The try block (lines 201–248) of method transmitMedia sends out each track of the media as an RTP stream. First, managers must be created for the sessions. Line 207 invokes RTPManager method newInstance to instantiate an RTPManager for each track stream. Line 211 assigns the port number to be two more than the previous port number, because each track uses one port number for the stream control and one to actually stream the data. Lines 218–219 instantiate a new local session address where the stream is located (i.e., the RTP address that clients use to obtain the media stream) with the local IP address and a port number as parameters. Line 219 invokes InetAddress method getLocalHost to get the local IP address. Line 222 instantiates the client’s session address, which the RTPManager uses as the stream’s target destination. When line 225 calls RTPManager method initialize, the method directs the local streaming session to use the local session address. Using object remoteAddress as a parameter, line 228 calls RTPManager method addTarget to open the destination session at the specified address. To stream media to multiple clients, call RTPManager method addTarget for each destination address. Method addTarget must be called after the session is initialized and before any of the streams are created on the session.
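The two-ports-per-track rule can be made explicit by computing each track's ports from a fixed base port. The sketch below assumes that the control port is the data port plus one, as is conventional for RTP. Class TrackPorts and its methods are hypothetical names. Note that accumulating port += 2 * i, as line 211 does, yields offsets of 0, 2 and 6 for three tracks, so it matches the description only for the first two tracks. Computing from a base port avoids that drift.

```java
// Hypothetical helper that assigns one port pair per media track:
// a data port and the next port for control traffic.
final class TrackPorts {

   // data port for the given track, counted from a fixed base port
   static int dataPort( int basePort, int trackIndex ) {
      return basePort + 2 * trackIndex;
   }

   // control port that accompanies the data port (assumed data + 1)
   static int controlPort( int basePort, int trackIndex ) {
      return dataPort( basePort, trackIndex ) + 1;
   }

   public static void main( String[] args ) {
      int basePort = 4000;

      for ( int i = 0; i < 3; i++ )
         System.out.println( "Track #" + ( i + 1 ) + ": data " +
            dataPort( basePort, i ) + ", control " +
            controlPort( basePort, i ) );
   }
}
```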

The program can now create the streams on the session and start sending data. The streams are created in the current RTP session with the DataSource outSource (obtained at line 180) and the source stream index (i.e., the media track index) in lines 234–235. Invoking method start on the SendStream (line 238) and on the Processor (line 246) starts transmission of the media streams, which may cause exceptions. An InvalidSessionAddressException occurs when the specified session address is invalid. An UnsupportedFormatException occurs when an unsupported media format is specified or if the DataSource’s Format has not been set. An IOException occurs if the application encounters networking problems. During the streaming process, RTPManagers can be used with related classes in packages javax.media.rtp and javax.media.rtp.event to control the streaming process and send reports to the application.

The program should close connections and stop streaming transmission when it reaches the end of the streaming media or when the program terminates. When the Processor encounters the end of the media, it generates an EndOfMediaEvent. In response, the program calls method endOfMedia (lines 121–125). Line 123 invokes method stopTransmission (lines 274–303) to stop and close the Processor (lines 279–282). After stopTransmission is called, streaming cannot resume, because the method disposes of the Processor and the RTP session resources. Lines 288–297 invoke RTPManager method removeTargets (lines 292–293) to close streaming to all destinations. RTPManager method dispose (line 296) is also invoked, releasing the resources held by the RTP sessions. Class RTPServerTest (Fig. 22.4) explicitly invokes method stopTransmission when the user terminates the server application (line 40).

Fig. 22.4 Application to test class RTPServer from Fig. 22.3.

  1  // Fig. 22.4: RTPServerTest.java
  2  // Test class for RTPServer
  3
  4  // Java core packages
  5  import java.awt.event.*;
  6  import java.io.*;
  7  import java.net.*;
  8

  9  // Java extension packages
 10  import javax.swing.*;
 11
 12  public class RTPServerTest extends JFrame {
 13
 14     // object handling RTP streaming
 15     private RTPServer rtpServer;
 16
 17     // media sources and destination locations
 18     private int port;
 19     private String ip, mediaLocation;
 20     private File mediaFile;
 21
 22     // GUI buttons
 23     private JButton transmitFileButton, transmitUrlButton;
 24
 25     // constructor for RTPServerTest
 26     public RTPServerTest()
 27     {
 28        super( "RTP Server Test" );
 29
 30        // register a WindowListener for frame events
 31        addWindowListener(
 32
 33           // anonymous inner class to handle WindowEvents
 34           new WindowAdapter() {
 35
 36              public void windowClosing(
 37                 WindowEvent windowEvent )
 38              {
 39                 if ( rtpServer != null )
 40                    rtpServer.stopTransmission();
 41              }
 42
 43           } // end WindowAdapter
 44
 45        ); // end call to method addWindowListener
 46
 47        // panel containing button GUI
 48        JPanel buttonPanel = new JPanel();
 49        getContentPane().add( buttonPanel );
 50
 51        // transmit file button GUI
 52        transmitFileButton = new JButton( "Transmit File" );
 53        buttonPanel.add( transmitFileButton );
 54
 55        // register ActionListener for transmitFileButton events
 56        transmitFileButton.addActionListener(
 57           new ButtonHandler() );
 58
 59        // transmit URL button GUI
 60        transmitUrlButton = new JButton( "Transmit Media" );
 61        buttonPanel.add( transmitUrlButton );

 62
 63        // register ActionListener for transmitURLButton events
 64        transmitUrlButton.addActionListener(
 65           new ButtonHandler() );
 66
 67     } // end constructor
 68
 69     // inner class handles transmission button events
 70     private class ButtonHandler implements ActionListener {
 71
 72        // open and try to send file to user-input destination
 73        public void actionPerformed( ActionEvent actionEvent )
 74        {
 75           // if transmitFileButton invoked, get file URL string
 76           if ( actionEvent.getSource() == transmitFileButton ) {
 77
 78              mediaFile = getFile();
 79
 80              if ( mediaFile != null )
 81
 82                 // obtain URL string from file
 83                 try {
 84                    mediaLocation = mediaFile.toURL().toString();
 85                 }
 86
 87                 // file path unresolvable
 88                 catch ( MalformedURLException badURL ) {
 89                    badURL.printStackTrace();
 90                 }
 91
 92              else
 93                 return;
 94
 95           } // end if
 96
 97           // else transmitMediaButton invoked, get location
 98           else
 99              mediaLocation = getMediaLocation();
100
101           if ( mediaLocation == null )
102              return;
103
104           // get IP address
105           ip = getIP();
106
107           if ( ip == null )
108              return;
109
110           // get port number
111           port = getPort();
112


113           // check for valid positive port number and input
114           if ( port <= 0 ) {
115
116              if ( port != -999 )
117                 System.err.println( "Invalid port number!" );
118
119              return;
120           }
121
122           // instantiate new RTP streaming server
123           rtpServer = new RTPServer( mediaLocation, ip, port );
124
125           rtpServer.beginSession();
126
127        } // end method actionPerformed
128
129     } // end inner class ButtonHandler
130
131     // get file from computer
132     public File getFile()
133     {
134        JFileChooser fileChooser = new JFileChooser();
135
136        fileChooser.setFileSelectionMode(
137           JFileChooser.FILES_ONLY );
138
139        int result = fileChooser.showOpenDialog( this );
140
141        if ( result == JFileChooser.CANCEL_OPTION )
142           return null;
143
144        else
145           return fileChooser.getSelectedFile();
146     }
147
148     // get media location from user
149     public String getMediaLocation()
150     {
151        String input = JOptionPane.showInputDialog(
152           this, "Enter MediaLocator" );
153
154        // if user presses OK with no input
155        if ( input != null && input.length() == 0 ) {
156           System.err.println( "No input!" );
157           return null;
158        }
159
160        return input;
161     }
162
163     // method getting IP string from user
164     public String getIP()
165     {

Fig. 22.4 Application to test class RTPServer from Fig. 22.3 (part 4 of 6).


166        String input = JOptionPane.showInputDialog(
167           this, "Enter IP Address: " );
168
169        // if user presses OK with no input
170        if ( input != null && input.length() == 0 ) {
171           System.err.println( "No input!" );
172           return null;
173        }
174
175        return input;
176     }
177
178     // get port number
179     public int getPort()
180     {
181        String input = JOptionPane.showInputDialog(
182           this, "Enter Port Number: " );
183
184        // return flag value if user clicks OK with no input
185        if ( input != null && input.length() == 0 ) {
186           System.err.println( "No input!" );
187           return -999;
188        }
189
190        // return flag value if user clicked CANCEL
191        if ( input == null )
192           return -999;
193
194        // else return input
195        return Integer.parseInt( input );
196
197     } // end method getPort
198
199     // execute application
200     public static void main( String args[] )
201     {
202        RTPServerTest serverTest = new RTPServerTest();
203
204        serverTest.setSize( 250, 70 );
205        serverTest.setLocation( 300, 300 );
206        serverTest.setDefaultCloseOperation( EXIT_ON_CLOSE );
207        serverTest.setVisible( true );
208     }
209
210  } // end class RTPServerTest

Fig. 22.4 Application to test class RTPServer from Fig. 22.3 (part 5 of 6).