- •List of Tables

- •List of Figures

- •Table of Notation

- •Preface

- •Boolean retrieval

- •An example information retrieval problem

- •Processing Boolean queries

- •The extended Boolean model versus ranked retrieval

- •References and further reading

- •The term vocabulary and postings lists

- •Document delineation and character sequence decoding

- •Obtaining the character sequence in a document

- •Choosing a document unit

- •Determining the vocabulary of terms

- •Tokenization

- •Dropping common terms: stop words

- •Normalization (equivalence classing of terms)

- •Stemming and lemmatization

- •Faster postings list intersection via skip pointers

- •Positional postings and phrase queries

- •Biword indexes

- •Positional indexes

- •Combination schemes

- •References and further reading

- •Dictionaries and tolerant retrieval

- •Search structures for dictionaries

- •Wildcard queries

- •General wildcard queries

- •Spelling correction

- •Implementing spelling correction

- •Forms of spelling correction

- •Edit distance

- •Context sensitive spelling correction

- •Phonetic correction

- •References and further reading

- •Index construction

- •Hardware basics

- •Blocked sort-based indexing

- •Single-pass in-memory indexing

- •Distributed indexing

- •Dynamic indexing

- •Other types of indexes

- •References and further reading

- •Index compression

- •Statistical properties of terms in information retrieval

- •Dictionary compression

- •Dictionary as a string

- •Blocked storage

- •Variable byte codes

- •References and further reading

- •Scoring, term weighting and the vector space model

- •Parametric and zone indexes

- •Weighted zone scoring

- •Learning weights

- •The optimal weight g

- •Term frequency and weighting

- •Inverse document frequency

- •The vector space model for scoring

- •Dot products

- •Queries as vectors

- •Computing vector scores

- •Sublinear tf scaling

- •Maximum tf normalization

- •Document and query weighting schemes

- •Pivoted normalized document length

- •References and further reading

- •Computing scores in a complete search system

- •Index elimination

- •Champion lists

- •Static quality scores and ordering

- •Impact ordering

- •Cluster pruning

- •Components of an information retrieval system

- •Tiered indexes

- •Designing parsing and scoring functions

- •Putting it all together

- •Vector space scoring and query operator interaction

- •References and further reading

- •Evaluation in information retrieval

- •Information retrieval system evaluation

- •Standard test collections

- •Evaluation of unranked retrieval sets

- •Evaluation of ranked retrieval results

- •Assessing relevance

- •A broader perspective: System quality and user utility

- •System issues

- •User utility

- •Results snippets

- •References and further reading

- •Relevance feedback and query expansion

- •Relevance feedback and pseudo relevance feedback

- •The Rocchio algorithm for relevance feedback

- •Probabilistic relevance feedback

- •When does relevance feedback work?

- •Relevance feedback on the web

- •Evaluation of relevance feedback strategies

- •Pseudo relevance feedback

- •Indirect relevance feedback

- •Summary

- •Global methods for query reformulation

- •Vocabulary tools for query reformulation

- •Query expansion

- •Automatic thesaurus generation

- •References and further reading

- •XML retrieval

- •Basic XML concepts

- •Challenges in XML retrieval

- •A vector space model for XML retrieval

- •Evaluation of XML retrieval

- •References and further reading

- •Exercises

- •Probabilistic information retrieval

- •Review of basic probability theory

- •The Probability Ranking Principle

- •The 1/0 loss case

- •The PRP with retrieval costs

- •The Binary Independence Model

- •Deriving a ranking function for query terms

- •Probability estimates in theory

- •Probability estimates in practice

- •Probabilistic approaches to relevance feedback

- •An appraisal and some extensions

- •An appraisal of probabilistic models

- •Bayesian network approaches to IR

- •References and further reading

- •Language models for information retrieval

- •Language models

- •Finite automata and language models

- •Types of language models

- •Multinomial distributions over words

- •The query likelihood model

- •Using query likelihood language models in IR

- •Estimating the query generation probability

- •Language modeling versus other approaches in IR

- •Extended language modeling approaches

- •References and further reading

- •Relation to multinomial unigram language model

- •The Bernoulli model

- •Properties of Naive Bayes

- •A variant of the multinomial model

- •Feature selection

- •Mutual information

- •Comparison of feature selection methods

- •References and further reading

- •Document representations and measures of relatedness in vector spaces

- •k nearest neighbor

- •Time complexity and optimality of kNN

- •The bias-variance tradeoff

- •References and further reading

- •Exercises

- •Support vector machines and machine learning on documents

- •Support vector machines: The linearly separable case

- •Extensions to the SVM model

- •Multiclass SVMs

- •Nonlinear SVMs

- •Experimental results

- •Machine learning methods in ad hoc information retrieval

- •Result ranking by machine learning

- •References and further reading

- •Flat clustering

- •Clustering in information retrieval

- •Problem statement

- •Evaluation of clustering

- •Cluster cardinality in K-means

- •Model-based clustering

- •References and further reading

- •Exercises

- •Hierarchical clustering

- •Hierarchical agglomerative clustering

- •Time complexity of HAC

- •Group-average agglomerative clustering

- •Centroid clustering

- •Optimality of HAC

- •Divisive clustering

- •Cluster labeling

- •Implementation notes

- •References and further reading

- •Exercises

- •Matrix decompositions and latent semantic indexing

- •Linear algebra review

- •Matrix decompositions

- •Term-document matrices and singular value decompositions

- •Low-rank approximations

- •Latent semantic indexing

- •References and further reading

- •Web search basics

- •Background and history

- •Web characteristics

- •The web graph

- •Spam

- •Advertising as the economic model

- •The search user experience

- •User query needs

- •Index size and estimation

- •Near-duplicates and shingling

- •References and further reading

- •Web crawling and indexes

- •Overview

- •Crawling

- •Crawler architecture

- •DNS resolution

- •The URL frontier

- •Distributing indexes

- •Connectivity servers

- •References and further reading

- •Link analysis

- •The Web as a graph

- •Anchor text and the web graph

- •PageRank

- •Markov chains

- •The PageRank computation

- •Hubs and Authorities

- •Choosing the subset of the Web

- •References and further reading

- •Bibliography

- •Author Index

210 |

10 XML retrieval |

•We collect the statistics used for computing weight(q, t, c) and weight(d, t, c) from all contexts that have a non-zero resemblance to c (as opposed to just from c as in SIMNOMERGE). For instance, for computing the document frequency of the structural term atl#"recognition", we also count occurrences of recognition in XML contexts fm/atl, article//atl etc.

•We modify Equation (10.2) by merging all structural terms in the document that have a non-zero context resemblance to a given query structural term. For example, the contexts /play/act/scene/title and /play/title in the document will be merged when matching against the query term /play/title#"Macbeth".

•The context resemblance function is further relaxed: Contexts have a nonzero resemblance in many cases where the definition of CR in Equation (10.1) returns 0.

See the references in Section 10.6 for details.

These three changes alleviate the problem of sparse term statistics discussed in Section 10.2 and increase the robustness of the matching function against poorly posed structural queries. The evaluation of SIMNOMERGE and SIMMERGE in the next section shows that the relaxed matching conditions of SIMMERGE increase the effectiveness of XML retrieval.

?Exercise 10.1

Consider computing df for a structural term as the number of times that the structural term occurs under a particular parent node. Assume the following: the structural term hc, ti = author#"Herbert" occurs once as the child of the node squib; there are 10 squib nodes in the collection; hc, ti occurs 1000 times as the child of article; there are

1,000,000 article nodes in the collection. The idf weight of hc, ti then is log2 10/1 ≈ 3.3 when occurring as the child of squib and log2 1,000,000/1000 ≈ 10.0 when occurring as the child of article. (i) Explain why this is not an appropriate weighting for hc, ti.

Why should hc, ti not receive a weight that is three times higher in articles than in squibs? (ii) Suggest a better way of computing idf.

Exercise 10.2

Write down all the structural terms occurring in the XML document in Figure 10.8.

Exercise 10.3

How many structural terms does the document in Figure 10.1 yield?

10.4Evaluation of XML retrieval

INEX The premier venue for research on XML retrieval is the INEX (INitiative for the Evaluation of XML retrieval) program, a collaborative effort that has produced reference collections, sets of queries, and relevance judgments. A yearly INEX meeting is held to present and discuss research results. The

Online edition (c) 2009 Cambridge UP

10.4 Evaluation of XML retrieval |

211 |

|

12,107 |

number of documents |

|

494 MB |

size |

|

1995–2002 |

time of publication of articles |

|

1,532 |

average number of XML nodes per document |

|

6.9average depth of a node

30 |

number of CAS topics |

30number of CO topics

Table 10.2 INEX 2002 collection statistics.

|

|

article |

|

|

|

|

|

body |

|

front matter |

|

section |

||

journal title |

article title |

title |

paragraph |

|

IEEE Transac- |

Activity |

|

This work fo- |

|

tion on Pat- |

Introduction |

|||

recognition |

cuses on . . . |

|||

tern Analysis |

|

|||

|

|

|

||

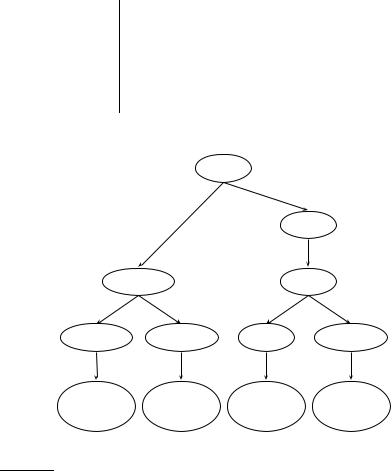

Figure 10.11 Simplified schema of the documents in the INEX collection.

INEX 2002 collection consisted of about 12,000 articles from IEEE journals. We give collection statistics in Table 10.2 and show part of the schema of the collection in Figure 10.11. The IEEE journal collection was expanded in 2005. Since 2006 INEX uses the much larger English Wikipedia as a test collection. The relevance of documents is judged by human assessors using the methodology introduced in Section 8.1 (page 152), appropriately modified for structured documents as we will discuss shortly.

Two types of information needs or topics in INEX are content-only or CO CO TOPICS topics and content-and-structure (CAS) topics. CO topics are regular key- CAS TOPICS word queries as in unstructured information retrieval. CAS topics have structural constraints in addition to keywords. We already encountered an exam-

Online edition (c) 2009 Cambridge UP

212 |

10 XML retrieval |

ple of a CAS topic in Figure 10.3. The keywords in this case are summer and holidays and the structural constraints specify that the keywords occur in a section that in turn is part of an article and that this article has an embedded year attribute with value 2001 or 2002.

Since CAS queries have both structural and content criteria, relevance assessments are more complicated than in unstructured retrieval. INEX 2002 defined component coverage and topical relevance as orthogonal dimen-

COMPONENT sions of relevance. The component coverage dimension evaluates whether the COVERAGE element retrieved is “structurally” correct, i.e., neither too low nor too high

in the tree. We distinguish four cases:

•Exact coverage (E). The information sought is the main topic of the component and the component is a meaningful unit of information.

•Too small (S). The information sought is the main topic of the component, but the component is not a meaningful (self-contained) unit of information.

•Too large (L). The information sought is present in the component, but is not the main topic.

•No coverage (N). The information sought is not a topic of the component.

TOPICAL RELEVANCE The topical relevance dimension also has four levels: highly relevant (3), fairly relevant (2), marginally relevant (1) and nonrelevant (0). Components are judged on both dimensions and the judgments are then combined into a digit-letter code. 2S is a fairly relevant component that is too small and 3E is a highly relevant component that has exact coverage. In theory, there are 16 combinations of coverage and relevance, but many cannot occur. For example, a nonrelevant component cannot have exact coverage, so the combination 3N is not possible.

The relevance-coverage combinations are quantized as follows:

|

1.00 |

if |

(rel, cov) = 3E |

||

|

0.75 |

if |

(rel, cov) |

{ |

} |

|

{ |

2E, 3L |

|||

|

0.50 |

if |

|

} |

|

Q(rel, cov) = |

(rel, cov) {1E, 2L,}2S |

||||

|

0.25 |

if |

(rel, cov) |

|

1S, 1L |

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

0.00 |

if |

(rel, cov) = 0N |

||

|

|||||

This evaluation scheme takes account of the fact that binary relevance judgments, which are standard in unstructured information retrieval (Section 8.5.1, page 166), are not appropriate for XML retrieval. A 2S component provides incomplete information and may be difficult to interpret without more context, but it does answer the query partially. The quantization function Q does not impose a binary choice relevant/nonrelevant and instead allows us to grade the component as partially relevant.

Online edition (c) 2009 Cambridge UP

10.4 Evaluation of XML retrieval |

213 |

||

|

algorithm |

average precision |

|

|

SIMNOMERGE |

0.242 |

|

|

SIMMERGE |

0.271 |

|

Table 10.3 INEX 2002 results of the vector space model in Section 10.3 for content- and-structure (CAS) queries and the quantization function Q.

The number of relevant components in a retrieved set A of components can then be computed as:

#(relevant items retrieved) = ∑ Q(rel(c), cov(c))

c A

As an approximation, the standard definitions of precision, recall and F from Chapter 8 can be applied to this modified definition of relevant items retrieved, with some subtleties because we sum graded as opposed to binary relevance assessments. See the references on focused retrieval in Section 10.6 for further discussion.

One flaw of measuring relevance this way is that overlap is not accounted for. We discussed the concept of marginal relevance in the context of unstructured retrieval in Section 8.5.1 (page 166). This problem is worse in XML retrieval because of the problem of multiple nested elements occurring in a search result as we discussed on page 203. Much of the recent focus at INEX has been on developing algorithms and evaluation measures that return non-redundant results lists and evaluate them properly. See the references in Section 10.6.

Table 10.3 shows two INEX 2002 runs of the vector space system we described in Section 10.3. The better run is the SIMMERGE run, which incorporates few structural constraints and mostly relies on keyword matching. SIMMERGE’s median average precision (where the median is with respect to average precision numbers over topics) is only 0.147. Effectiveness in XML retrieval is often lower than in unstructured retrieval since XML retrieval is harder. Instead of just finding a document, we have to find the subpart of a document that is most relevant to the query. Also, XML retrieval effectiveness – when evaluated as described here – can be lower than unstructured retrieval effectiveness on a standard evaluation because graded judgments lower measured performance. Consider a system that returns a document with graded relevance 0.6 and binary relevance 1 at the top of the retrieved list. Then, interpolated precision at 0.00 recall (cf. page 158) is 1.0 on a binary evaluation, but can be as low as 0.6 on a graded evaluation.

Table 10.3 gives us a sense of the typical performance of XML retrieval, but it does not compare structured with unstructured retrieval. Table 10.4 directly shows the effect of using structure in retrieval. The results are for a

Online edition (c) 2009 Cambridge UP

214 |

|

|

|

10 XML retrieval |

|

|

|

content only |

full structure |

improvement |

|

|

precision at 5 |

0.2000 |

0.3265 |

63.3% |

|

|

precision at 10 |

0.1820 |

0.2531 |

39.1% |

|

|

precision at 20 |

0.1700 |

0.1796 |

5.6% |

|

|

precision at 30 |

0.1527 |

0.1531 |

0.3% |

|

Table 10.4 A comparison of content-only and full-structure search in INEX 2003/2004.

language-model-based system (cf. Chapter 12) that is evaluated on a subset of CAS topics from INEX 2003 and 2004. The evaluation metric is precision at k as defined in Chapter 8 (page 161). The discretization function used for the evaluation maps highly relevant elements (roughly corresponding to the 3E elements defined for Q) to 1 and all other elements to 0. The contentonly system treats queries and documents as unstructured bags of words. The full-structure model ranks elements that satisfy structural constraints higher than elements that do not. For instance, for the query in Figure 10.3 an element that contains the phrase summer holidays in a section will be rated higher than one that contains it in an abstract.

The table shows that structure helps increase precision at the top of the results list. There is a large increase of precision at k = 5 and at k = 10. There is almost no improvement at k = 30. These results demonstrate the benefits of structured retrieval. Structured retrieval imposes additional constraints on what to return and documents that pass the structural filter are more likely to be relevant. Recall may suffer because some relevant documents will be filtered out, but for precision-oriented tasks structured retrieval is superior.

10.5Text-centric vs. data-centric XML retrieval

In the type of structured retrieval we cover in this chapter, XML structure serves as a framework within which we match the text of the query with the text of the XML documents. This exemplifies a system that is optimized for

TEXT-CENTRIC XML text-centric XML. While both text and structure are important, we give higher priority to text. We do this by adapting unstructured retrieval methods to handling additional structural constraints. The premise of our approach is that XML document retrieval is characterized by (i) long text fields (e.g., sections of a document), (ii) inexact matching, and (iii) relevance-ranked results. Relational databases do not deal well with this use case.

DATA-CENTRIC XML In contrast, data-centric XML mainly encodes numerical and non-text attributevalue data. When querying data-centric XML, we want to impose exact match conditions in most cases. This puts the emphasis on the structural aspects of XML documents and queries. An example is:

Online edition (c) 2009 Cambridge UP

10.5 Text-centric vs. data-centric XML retrieval |

215 |

Find employees whose salary is the same this month as it was 12 months ago.

This query requires no ranking. It is purely structural and an exact matching of the salaries in the two time periods is probably sufficient to meet the user’s information need.

Text-centric approaches are appropriate for data that are essentially text documents, marked up as XML to capture document structure. This is becoming a de facto standard for publishing text databases since most text documents have some form of interesting structure – paragraphs, sections, footnotes etc. Examples include assembly manuals, issues of journals, Shakespeare’s collected works and newswire articles.

Data-centric approaches are commonly used for data collections with complex structures that mainly contain non-text data. A text-centric retrieval engine will have a hard time with proteomic data in bioinformatics or with the representation of a city map that (together with street names and other textual descriptions) forms a navigational database.

Two other types of queries that are difficult to handle in a text-centric structured retrieval model are joins and ordering constraints. The query for employees with unchanged salary requires a join. The following query imposes an ordering constraint:

Retrieve the chapter of the book Introduction to algorithms that follows the chapter Binomial heaps.

This query relies on the ordering of elements in XML – in this case the ordering of chapter elements underneath the book node. There are powerful query languages for XML that can handle numerical attributes, joins and ordering constraints. The best known of these is XQuery, a language proposed for standardization by the W3C. It is designed to be broadly applicable in all areas where XML is used. Due to its complexity, it is challenging to implement an XQuery-based ranked retrieval system with the performance characteristics that users have come to expect in information retrieval. This is currently one of the most active areas of research in XML retrieval.

Relational databases are better equipped to handle many structural constraints, particularly joins (but ordering is also difficult in a database framework – the tuples of a relation in the relational calculus are not ordered). For this reason, most data-centric XML retrieval systems are extensions of relational databases (see the references in Section 10.6). If text fields are short, exact matching meets user needs and retrieval results in form of unordered sets are acceptable, then using a relational database for XML retrieval is appropriate.

Online edition (c) 2009 Cambridge UP