- •List of Tables

- •List of Figures

- •Table of Notation

- •Preface

- •Boolean retrieval

- •An example information retrieval problem

- •Processing Boolean queries

- •The extended Boolean model versus ranked retrieval

- •References and further reading

- •The term vocabulary and postings lists

- •Document delineation and character sequence decoding

- •Obtaining the character sequence in a document

- •Choosing a document unit

- •Determining the vocabulary of terms

- •Tokenization

- •Dropping common terms: stop words

- •Normalization (equivalence classing of terms)

- •Stemming and lemmatization

- •Faster postings list intersection via skip pointers

- •Positional postings and phrase queries

- •Biword indexes

- •Positional indexes

- •Combination schemes

- •References and further reading

- •Dictionaries and tolerant retrieval

- •Search structures for dictionaries

- •Wildcard queries

- •General wildcard queries

- •Spelling correction

- •Implementing spelling correction

- •Forms of spelling correction

- •Edit distance

- •Context sensitive spelling correction

- •Phonetic correction

- •References and further reading

- •Index construction

- •Hardware basics

- •Blocked sort-based indexing

- •Single-pass in-memory indexing

- •Distributed indexing

- •Dynamic indexing

- •Other types of indexes

- •References and further reading

- •Index compression

- •Statistical properties of terms in information retrieval

- •Dictionary compression

- •Dictionary as a string

- •Blocked storage

- •Variable byte codes

- •References and further reading

- •Scoring, term weighting and the vector space model

- •Parametric and zone indexes

- •Weighted zone scoring

- •Learning weights

- •The optimal weight g

- •Term frequency and weighting

- •Inverse document frequency

- •The vector space model for scoring

- •Dot products

- •Queries as vectors

- •Computing vector scores

- •Sublinear tf scaling

- •Maximum tf normalization

- •Document and query weighting schemes

- •Pivoted normalized document length

- •References and further reading

- •Computing scores in a complete search system

- •Index elimination

- •Champion lists

- •Static quality scores and ordering

- •Impact ordering

- •Cluster pruning

- •Components of an information retrieval system

- •Tiered indexes

- •Designing parsing and scoring functions

- •Putting it all together

- •Vector space scoring and query operator interaction

- •References and further reading

- •Evaluation in information retrieval

- •Information retrieval system evaluation

- •Standard test collections

- •Evaluation of unranked retrieval sets

- •Evaluation of ranked retrieval results

- •Assessing relevance

- •A broader perspective: System quality and user utility

- •System issues

- •User utility

- •Results snippets

- •References and further reading

- •Relevance feedback and query expansion

- •Relevance feedback and pseudo relevance feedback

- •The Rocchio algorithm for relevance feedback

- •Probabilistic relevance feedback

- •When does relevance feedback work?

- •Relevance feedback on the web

- •Evaluation of relevance feedback strategies

- •Pseudo relevance feedback

- •Indirect relevance feedback

- •Summary

- •Global methods for query reformulation

- •Vocabulary tools for query reformulation

- •Query expansion

- •Automatic thesaurus generation

- •References and further reading

- •XML retrieval

- •Basic XML concepts

- •Challenges in XML retrieval

- •A vector space model for XML retrieval

- •Evaluation of XML retrieval

- •References and further reading

- •Exercises

- •Probabilistic information retrieval

- •Review of basic probability theory

- •The Probability Ranking Principle

- •The 1/0 loss case

- •The PRP with retrieval costs

- •The Binary Independence Model

- •Deriving a ranking function for query terms

- •Probability estimates in theory

- •Probability estimates in practice

- •Probabilistic approaches to relevance feedback

- •An appraisal and some extensions

- •An appraisal of probabilistic models

- •Bayesian network approaches to IR

- •References and further reading

- •Language models for information retrieval

- •Language models

- •Finite automata and language models

- •Types of language models

- •Multinomial distributions over words

- •The query likelihood model

- •Using query likelihood language models in IR

- •Estimating the query generation probability

- •Language modeling versus other approaches in IR

- •Extended language modeling approaches

- •References and further reading

- •Relation to multinomial unigram language model

- •The Bernoulli model

- •Properties of Naive Bayes

- •A variant of the multinomial model

- •Feature selection

- •Mutual information

- •Comparison of feature selection methods

- •References and further reading

- •Document representations and measures of relatedness in vector spaces

- •k nearest neighbor

- •Time complexity and optimality of kNN

- •The bias-variance tradeoff

- •References and further reading

- •Exercises

- •Support vector machines and machine learning on documents

- •Support vector machines: The linearly separable case

- •Extensions to the SVM model

- •Multiclass SVMs

- •Nonlinear SVMs

- •Experimental results

- •Machine learning methods in ad hoc information retrieval

- •Result ranking by machine learning

- •References and further reading

- •Flat clustering

- •Clustering in information retrieval

- •Problem statement

- •Evaluation of clustering

- •Cluster cardinality in K-means

- •Model-based clustering

- •References and further reading

- •Exercises

- •Hierarchical clustering

- •Hierarchical agglomerative clustering

- •Time complexity of HAC

- •Group-average agglomerative clustering

- •Centroid clustering

- •Optimality of HAC

- •Divisive clustering

- •Cluster labeling

- •Implementation notes

- •References and further reading

- •Exercises

- •Matrix decompositions and latent semantic indexing

- •Linear algebra review

- •Matrix decompositions

- •Term-document matrices and singular value decompositions

- •Low-rank approximations

- •Latent semantic indexing

- •References and further reading

- •Web search basics

- •Background and history

- •Web characteristics

- •The web graph

- •Spam

- •Advertising as the economic model

- •The search user experience

- •User query needs

- •Index size and estimation

- •Near-duplicates and shingling

- •References and further reading

- •Web crawling and indexes

- •Overview

- •Crawling

- •Crawler architecture

- •DNS resolution

- •The URL frontier

- •Distributing indexes

- •Connectivity servers

- •References and further reading

- •Link analysis

- •The Web as a graph

- •Anchor text and the web graph

- •PageRank

- •Markov chains

- •The PageRank computation

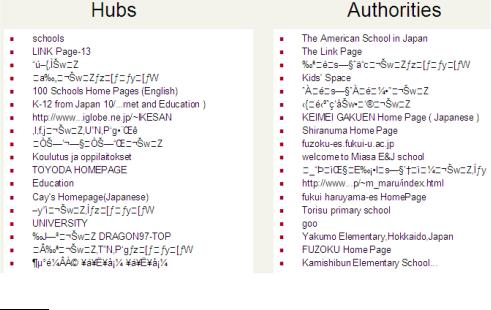

- •Hubs and Authorities

- •Choosing the subset of the Web

- •References and further reading

- •Bibliography

- •Author Index

474 |

21 Link analysis |

Exercise 21.12

Suppose that the web graph is stored on disk as an adjacency list, in such a way that you may only query for the out-neighbors of pages in the order in which they are stored. You cannot load the graph in main memory but you may do multiple reads over the full graph. Write the algorithm for computing the PageRank in this setting.

Exercise 21.13

Recall the sets S and Y introduced near the beginning of Section 21.2.3. How does the set Y relate to S?

Exercise 21.14

Is the set Y always the set of all web pages? Why or why not?

Exercise 21.15 |

[ ] |

Is the sports PageRank of any page in S at least as large as its PageRank? |

|

Exercise 21.16 |

[ ] |

Consider a setting where we have two topic-specific PageRank values for each web page: a sports PageRank ~πs, and a politics PageRank ~π p. Let α be the (common)

teleportation probability used in computing both sets of topic-specific PageRanks.

For q [0, 1], consider a user whose interest profile is divided between a fraction q in sports and a fraction 1 − q in politics. Show that the user’s personalized PageRank is the steady-state distribution of a random walk in which – on a teleport step – the walk teleports to a sports page with probability q and to a politics page with probability 1 − q.

Exercise 21.17

Show that the Markov chain corresponding to the walk in Exercise 21.16 is ergodic and hence the user’s personalized PageRank can be obtained by computing the steadystate distribution of this Markov chain.

Exercise 21.18

Show that in the steady-state distribution of Exercise 21.17, the steady-state probability for any web page i equals qπs(i) + (1 − q)π p(i).

21.3Hubs and Authorities

HUB SCORE AUTHORITY SCORE

We now develop a scheme in which, given a query, every web page is assigned two scores. One is called its hub score and the other its authority score. For any query, we compute two ranked lists of results rather than one. The ranking of one list is induced by the hub scores and that of the other by the authority scores.

This approach stems from a particular insight into the creation of web pages, that there are two primary kinds of web pages useful as results for broad-topic searches. By a broad topic search we mean an informational query such as "I wish to learn about leukemia". There are authoritative sources of information on the topic; in this case, the National Cancer Institute’s page on

Online edition (c) 2009 Cambridge UP

21.3 Hubs and Authorities |

475 |

leukemia would be such a page. We will call such pages authorities; in the computation we are about to describe, they are the pages that will emerge with high authority scores.

On the other hand, there are many pages on the Web that are hand-compiled lists of links to authoritative web pages on a specific topic. These hub pages are not in themselves authoritative sources of topic-specific information, but rather compilations that someone with an interest in the topic has spent time putting together. The approach we will take, then, is to use these hub pages to discover the authority pages. In the computation we now develop, these hub pages are the pages that will emerge with high hub scores.

A good hub page is one that points to many good authorities; a good authority page is one that is pointed to by many good hub pages. We thus appear to have a circular definition of hubs and authorities; we will turn this into an iterative computation. Suppose that we have a subset of the web containing good hub and authority pages, together with the hyperlinks amongst them. We will iteratively compute a hub score and an authority score for every web page in this subset, deferring the discussion of how we pick this subset until Section 21.3.1.

For a web page v in our subset of the web, we use h(v) to denote its hub score and a(v) its authority score. Initially, we set h(v) = a(v) = 1 for all nodes v. We also denote by v 7→y the existence of a hyperlink from v to y. The core of the iterative algorithm is a pair of updates to the hub and authority scores of all pages given by Equation 21.8, which capture the intuitive notions that good hubs point to good authorities and that good authorities are pointed to by good hubs.

(21.8) |

h(v) |

← |

∑ a(y) |

|

|

|

v7→y |

|

a(v) |

← |

∑ h(y). |

|

|

|

y7→v |

Thus, the first line of Equation (21.8) sets the hub score of page v to the sum of the authority scores of the pages it links to. In other words, if v links to pages with high authority scores, its hub score increases. The second line plays the reverse role; if page v is linked to by good hubs, its authority score increases.

What happens as we perform these updates iteratively, recomputing hub scores, then new authority scores based on the recomputed hub scores, and so on? Let us recast the equations Equation (21.8) into matrix-vector form.

Let~ and denote the vectors of all hub and all authority scores respectively, h ~a

for the pages in our subset of the web graph. Let A denote the adjacency matrix of the subset of the web graph that we are dealing with: A is a square matrix with one row and one column for each page in the subset. The entry

Online edition (c) 2009 Cambridge UP

476 |

|

|

|

21 Link analysis |

|

Aij is 1 if there is a hyperlink from page i to page j, and 0 otherwise. Then, |

|||

|

we may write Equation (21.8) |

|

|

|

(21.9) |

~ |

← A~a |

||

h |

||||

|

~a |

← |

A |

T~ |

|

h, |

|||

where AT denotes the transpose of the matrix A. Now the right hand side of each line of Equation (21.9) is a vector that is the left hand side of the other line of Equation (21.9). Substituting these into one another, we may rewrite Equation (21.9) as

(21.10) |

~ |

← |

AA |

T~ |

h |

h |

|||

|

~a |

← |

AT A~a. |

|

Now, Equation (21.10) bears an uncanny resemblance to a pair of eigenvector equations (Section 18.1); indeed, if we replace the ← symbols by = symbols and introduce the (unknown) eigenvalue, the first line of Equation (21.10) becomes the equation for the eigenvectors of AAT, while the second becomes the equation for the eigenvectors of AT A:

|

~ |

= |

(1/λh)AA |

T~ |

|

h |

h |

||

(21.11) |

~a |

= |

(1/λa)AT A~a. |

|

Here we have used λh to denote the eigenvalue of AAT and λa to denote the eigenvalue of AT A.

This leads to some key consequences:

1.The iterative updates in Equation (21.8) (or equivalently, Equation (21.9)), if scaled by the appropriate eigenvalues, are equivalent to the power iteration method for computing the eigenvectors of AAT and AT A. Provided that the principal eigenvalue of AAT is unique, the iteratively computed

entries of~ and settle into unique steady-state values determined by the h ~a

entries of A and hence the link structure of the graph.

2.In computing these eigenvector entries, we are not restricted to using the power iteration method; indeed, we could use any fast method for computing the principal eigenvector of a stochastic matrix.

The resulting computation thus takes the following form:

1.Assemble the target subset of web pages, form the graph induced by their hyperlinks and compute AAT and AT A.

2.Compute the principal eigenvectors of AAT and AT A to form the vector

of hub scores~ and authority scores . h ~a

Online edition (c) 2009 Cambridge UP

21.3 Hubs and Authorities |

477 |

3. Output the top-scoring hubs and the top-scoring authorities.

HITS This method of link analysis is known as HITS, which is an acronym for

Hyperlink-Induced Topic Search.

Example 21.2: Assuming the query jaguar and double-weighting of links whose anchors contain the query word, the matrix A for Figure 21.4 is as follows:

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

0 |

1 |

0 |

1 |

2 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

0 |

1 |

0 |

0 |

0 |

0 |

0 |

1 |

1 |

0 |

0 |

0 |

2 |

1 |

0 |

1 |

The hub and authority vectors are:

~ = (0.03 0.04 0.33 0.18 0.04 0.04 0.35) h

~a = (0.10 0.01 0.12 0.47 0.16 0.01 0.13)

Here, q3 is the main authority – two hubs (q2 and q6) are pointing to it via highly weighted jaguar links.

Since the iterative updates captured the intuition of good hubs and good authorities, the high-scoring pages we output would give us good hubs and authorities from the target subset of web pages. In Section 21.3.1 we describe the remaining detail: how do we gather a target subset of web pages around a topic such as leukemia?

21.3.1Choosing the subset of the Web

In assembling a subset of web pages around a topic such as leukemia, we must cope with the fact that good authority pages may not contain the specific query term leukemia. This is especially true, as we noted in Section 21.1.1, when an authority page uses its web presence to project a certain marketing image. For instance, many pages on the IBM website are authoritative sources of information on computer hardware, even though these pages may not contain the term computer or hardware. However, a hub compiling computer hardware resources is likely to use these terms and also link to the relevant pages on the IBM website.

Building on these observations, the following procedure has been suggested for compiling the subset of the Web for which to compute hub and authority scores.

Online edition (c) 2009 Cambridge UP

478 |

21 Link analysis |

1.Given a query (say leukemia), use a text index to get all pages containing leukemia. Call this the root set of pages.

2.Build the base set of pages, to include the root set as well as any page that either links to a page in the root set, or is linked to by a page in the root set.

We then use the base set for computing hub and authority scores. The base set is constructed in this manner for three reasons:

1.A good authority page may not contain the query text (such as computer hardware).

2.If the text query manages to capture a good hub page vh in the root set, then the inclusion of all pages linked to by any page in the root set will capture all the good authorities linked to by vh in the base set.

3.Conversely, if the text query manages to capture a good authority page

va in the root set, then the inclusion of pages which point to va will bring other good hubs into the base set. In other words, the “expansion” of the root set into the base set enriches the common pool of good hubs and authorities.

Running HITS across a variety of queries reveals some interesting insights about link analysis. Frequently, the documents that emerge as top hubs and authorities include languages other than the language of the query. These pages were presumably drawn into the base set, following the assembly of the root set. Thus, some elements of cross-language retrieval (where a query in one language retrieves documents in another) are evident here; interestingly, this cross-language effect resulted purely from link analysis, with no linguistic translation taking place.

We conclude this section with some notes on implementing this algorithm. The root set consists of all pages matching the text query; in fact, implementations (see the references in Section 21.4) suggest that it suffices to use 200 or so web pages for the root set, rather than all pages matching the text query. Any algorithm for computing eigenvectors may be used for computing the hub/authority score vector. In fact, we need not compute the exact values of these scores; it suffices to know the relative values of the scores so that we may identify the top hubs and authorities. To this end, it is possible that a small number of iterations of the power iteration method yields the relative ordering of the top hubs and authorities. Experiments have suggested that in practice, about five iterations of Equation (21.8) yield fairly good results. Moreover, since the link structure of the web graph is fairly sparse (the average web page links to about ten others), we do not perform these as matrix-vector products but rather as additive updates as in Equation (21.8).

Online edition (c) 2009 Cambridge UP

21.3 Hubs and Authorities |

479 |

Figure 21.6 A sample run of HITS on the query japan elementary schools.

Figure 21.6 shows the results of running HITS on the query japan elementary schools. The figure shows the top hubs and authorities; each row lists the title tag from the corresponding HTML page. Because the resulting string is not necessarily in Latin characters, the resulting print is (in many cases) a string of gibberish. Each of these corresponds to a web page that does not use Latin characters, in this case very likely pages in Japanese. There also appear to be pages in other non-English languages, which seems surprising given that the query string is in English. In fact, this result is emblematic of the functioning of HITS – following the assembly of the root set, the (English) query string is ignored. The base set is likely to contain pages in other languages, for instance if an English-language hub page links to the Japanese-language home pages of Japanese elementary schools. Because the subsequent computation of the top hubs and authorities is entirely linkbased, some of these non-English pages will appear among the top hubs and authorities.

?Exercise 21.19

If all the hub and authority scores are initialized to 1, what is the hub/authority score of a node after one iteration?

Online edition (c) 2009 Cambridge UP