- •List of Tables

- •List of Figures

- •Table of Notation

- •Preface

- •Boolean retrieval

- •An example information retrieval problem

- •Processing Boolean queries

- •The extended Boolean model versus ranked retrieval

- •References and further reading

- •The term vocabulary and postings lists

- •Document delineation and character sequence decoding

- •Obtaining the character sequence in a document

- •Choosing a document unit

- •Determining the vocabulary of terms

- •Tokenization

- •Dropping common terms: stop words

- •Normalization (equivalence classing of terms)

- •Stemming and lemmatization

- •Faster postings list intersection via skip pointers

- •Positional postings and phrase queries

- •Biword indexes

- •Positional indexes

- •Combination schemes

- •References and further reading

- •Dictionaries and tolerant retrieval

- •Search structures for dictionaries

- •Wildcard queries

- •General wildcard queries

- •Spelling correction

- •Implementing spelling correction

- •Forms of spelling correction

- •Edit distance

- •Context sensitive spelling correction

- •Phonetic correction

- •References and further reading

- •Index construction

- •Hardware basics

- •Blocked sort-based indexing

- •Single-pass in-memory indexing

- •Distributed indexing

- •Dynamic indexing

- •Other types of indexes

- •References and further reading

- •Index compression

- •Statistical properties of terms in information retrieval

- •Dictionary compression

- •Dictionary as a string

- •Blocked storage

- •Variable byte codes

- •References and further reading

- •Scoring, term weighting and the vector space model

- •Parametric and zone indexes

- •Weighted zone scoring

- •Learning weights

- •The optimal weight g

- •Term frequency and weighting

- •Inverse document frequency

- •The vector space model for scoring

- •Dot products

- •Queries as vectors

- •Computing vector scores

- •Sublinear tf scaling

- •Maximum tf normalization

- •Document and query weighting schemes

- •Pivoted normalized document length

- •References and further reading

- •Computing scores in a complete search system

- •Index elimination

- •Champion lists

- •Static quality scores and ordering

- •Impact ordering

- •Cluster pruning

- •Components of an information retrieval system

- •Tiered indexes

- •Designing parsing and scoring functions

- •Putting it all together

- •Vector space scoring and query operator interaction

- •References and further reading

- •Evaluation in information retrieval

- •Information retrieval system evaluation

- •Standard test collections

- •Evaluation of unranked retrieval sets

- •Evaluation of ranked retrieval results

- •Assessing relevance

- •A broader perspective: System quality and user utility

- •System issues

- •User utility

- •Results snippets

- •References and further reading

- •Relevance feedback and query expansion

- •Relevance feedback and pseudo relevance feedback

- •The Rocchio algorithm for relevance feedback

- •Probabilistic relevance feedback

- •When does relevance feedback work?

- •Relevance feedback on the web

- •Evaluation of relevance feedback strategies

- •Pseudo relevance feedback

- •Indirect relevance feedback

- •Summary

- •Global methods for query reformulation

- •Vocabulary tools for query reformulation

- •Query expansion

- •Automatic thesaurus generation

- •References and further reading

- •XML retrieval

- •Basic XML concepts

- •Challenges in XML retrieval

- •A vector space model for XML retrieval

- •Evaluation of XML retrieval

- •References and further reading

- •Exercises

- •Probabilistic information retrieval

- •Review of basic probability theory

- •The Probability Ranking Principle

- •The 1/0 loss case

- •The PRP with retrieval costs

- •The Binary Independence Model

- •Deriving a ranking function for query terms

- •Probability estimates in theory

- •Probability estimates in practice

- •Probabilistic approaches to relevance feedback

- •An appraisal and some extensions

- •An appraisal of probabilistic models

- •Bayesian network approaches to IR

- •References and further reading

- •Language models for information retrieval

- •Language models

- •Finite automata and language models

- •Types of language models

- •Multinomial distributions over words

- •The query likelihood model

- •Using query likelihood language models in IR

- •Estimating the query generation probability

- •Language modeling versus other approaches in IR

- •Extended language modeling approaches

- •References and further reading

- •Relation to multinomial unigram language model

- •The Bernoulli model

- •Properties of Naive Bayes

- •A variant of the multinomial model

- •Feature selection

- •Mutual information

- •Comparison of feature selection methods

- •References and further reading

- •Document representations and measures of relatedness in vector spaces

- •k nearest neighbor

- •Time complexity and optimality of kNN

- •The bias-variance tradeoff

- •References and further reading

- •Exercises

- •Support vector machines and machine learning on documents

- •Support vector machines: The linearly separable case

- •Extensions to the SVM model

- •Multiclass SVMs

- •Nonlinear SVMs

- •Experimental results

- •Machine learning methods in ad hoc information retrieval

- •Result ranking by machine learning

- •References and further reading

- •Flat clustering

- •Clustering in information retrieval

- •Problem statement

- •Evaluation of clustering

- •Cluster cardinality in K-means

- •Model-based clustering

- •References and further reading

- •Exercises

- •Hierarchical clustering

- •Hierarchical agglomerative clustering

- •Time complexity of HAC

- •Group-average agglomerative clustering

- •Centroid clustering

- •Optimality of HAC

- •Divisive clustering

- •Cluster labeling

- •Implementation notes

- •References and further reading

- •Exercises

- •Matrix decompositions and latent semantic indexing

- •Linear algebra review

- •Matrix decompositions

- •Term-document matrices and singular value decompositions

- •Low-rank approximations

- •Latent semantic indexing

- •References and further reading

- •Web search basics

- •Background and history

- •Web characteristics

- •The web graph

- •Spam

- •Advertising as the economic model

- •The search user experience

- •User query needs

- •Index size and estimation

- •Near-duplicates and shingling

- •References and further reading

- •Web crawling and indexes

- •Overview

- •Crawling

- •Crawler architecture

- •DNS resolution

- •The URL frontier

- •Distributing indexes

- •Connectivity servers

- •References and further reading

- •Link analysis

- •The Web as a graph

- •Anchor text and the web graph

- •PageRank

- •Markov chains

- •The PageRank computation

- •Hubs and Authorities

- •Choosing the subset of the Web

- •References and further reading

- •Bibliography

- •Author Index

90 |

|

|

|

|

|

5 |

Index compression |

7 |

|

|

|

|

|

|

|

6 |

|

|

|

|

|

|

|

5 |

|

|

|

|

|

|

|

4 |

|

|

|

|

|

|

|

log10 cf |

|

|

|

|

|

|

|

3 |

|

|

|

|

|

|

|

2 |

|

|

|

|

|

|

|

1 |

|

|

|

|

|

|

|

0 |

|

|

|

|

|

|

|

0 |

1 |

2 |

3 |

4 |

5 |

6 |

7 |

|

|

|

log10 rank |

|

|

|

|

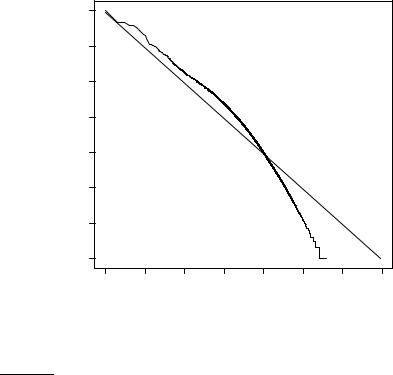

Figure 5.2 Zipf’s law for Reuters-RCV1. Frequency is plotted as a function of frequency rank for the terms in the collection. The line is the distribution predicted by Zipf’s law (weighted least-squares fit; intercept is 6.95).

? |

Exercise 5.1 |

[ ] |

Assuming one machine word per posting, what is the size of the uncompressed (non- |

||

positional) index for different tokenizations based on Table 5.1? How do these num- |

||

|

bers compare with Table 5.6? |

|

5.2 Dictionary compression

This section presents a series of dictionary data structures that achieve increasingly higher compression ratios. The dictionary is small compared with the postings file as suggested by Table 5.1. So why compress it if it is responsible for only a small percentage of the overall space requirements of the IR system?

One of the primary factors in determining the response time of an IR system is the number of disk seeks necessary to process a query. If parts of the dictionary are on disk, then many more disk seeks are necessary in query evaluation. Thus, the main goal of compressing the dictionary is to fit it in main memory, or at least a large portion of it, to support high query through-

Online edition (c) 2009 Cambridge UP

5.2 Dictionary compression |

|

91 |

||

|

|

|

|

|

|

term |

document |

pointer to |

|

|

|

frequency |

postings list |

|

|

a |

656,265 |

−→ |

|

|

aachen |

65 |

−→ |

|

|

. . . |

. . . |

. . . |

|

space needed: |

zulu |

221 |

−→ |

|

20 bytes |

4 bytes |

4 bytes |

|

|

Figure 5.3 Storing the dictionary as an array of fixed-width entries.

put. Although dictionaries of very large collections fit into the memory of a standard desktop machine, this is not true of many other application scenarios. For example, an enterprise search server for a large corporation may have to index a multiterabyte collection with a comparatively large vocabulary because of the presence of documents in many different languages. We also want to be able to design search systems for limited hardware such as mobile phones and onboard computers. Other reasons for wanting to conserve memory are fast startup time and having to share resources with other applications. The search system on your PC must get along with the memory-hogging word processing suite you are using at the same time.

5.2.1Dictionary as a string

The simplest data structure for the dictionary is to sort the vocabulary lexicographically and store it in an array of fixed-width entries as shown in Figure 5.3. We allocate 20 bytes for the term itself (because few terms have more than twenty characters in English), 4 bytes for its document frequency, and 4 bytes for the pointer to its postings list. Four-byte pointers resolve a 4 gigabytes (GB) address space. For large collections like the web, we need to allocate more bytes per pointer. We look up terms in the array by binary search. For Reuters-RCV1, we need M × (20 + 4 + 4) = 400,000 × 28 = 11.2megabytes (MB) for storing the dictionary in this scheme.

Using fixed-width entries for terms is clearly wasteful. The average length of a term in English is about eight characters (Table 4.2, page 70), so on average we are wasting twelve characters in the fixed-width scheme. Also, we have no way of storing terms with more than twenty characters like hydrochlorofluorocarbons and supercalifragilisticexpialidocious. We can overcome these shortcomings by storing the dictionary terms as one long string of characters, as shown in Figure 5.4. The pointer to the next term is also used to demarcate the end of the current term. As before, we locate terms in the data structure by way of binary search in the (now smaller) table. This scheme saves us 60% compared to fixed-width storage – 12 bytes on average of the

Online edition (c) 2009 Cambridge UP

92 5 Index compression

. . . s y s t i l e s y z y g e t i c s y z y g i a l s y z y g y s z a i b e l y i t e s z e c i n s z o n o . . .

freq. |

postings ptr. |

term ptr. |

9 |

→ |

|

92 |

→ |

|

5 |

→ |

|

71 |

→ |

|

12 |

→ |

|

. . . |

. . . |

. . . |

4 bytes |

4 bytes |

3 bytes |

Figure 5.4 Dictionary-as-a-string storage. Pointers mark the end of the preceding term and the beginning of the next. For example, the first three terms in this example

are systile, syzygetic, and syzygial.

20 bytes we allocated for terms before. However, we now also need to store term pointers. The term pointers resolve 400,000 × 8 = 3.2 × 106 positions, so they need to be log2 3.2 × 106 ≈ 22 bits or 3 bytes long.

In this new scheme, we need 400,000 × (4 + 4 + 3 + 8) = 7.6 MB for the Reuters-RCV1 dictionary: 4 bytes each for frequency and postings pointer, 3 bytes for the term pointer, and 8 bytes on average for the term. So we have reduced the space requirements by one third from 11.2 to 7.6 MB.

5.2.2Blocked storage

We can further compress the dictionary by grouping terms in the string into blocks of size k and keeping a term pointer only for the first term of each block (Figure 5.5). We store the length of the term in the string as an additional byte at the beginning of the term. We thus eliminate k − 1 term pointers, but need an additional k bytes for storing the length of each term. For k = 4, we save (k − 1) × 3 = 9 bytes for term pointers, but need an additional k = 4 bytes for term lengths. So the total space requirements for the dictionary of Reuters-RCV1 are reduced by 5 bytes per four-term block, or a total of 400,000 × 1/4 × 5 = 0.5 MB, bringing us down to 7.1 MB.

Online edition (c) 2009 Cambridge UP

5.2 Dictionary compression |

93 |

. . . 7 s y s t i l e 9 s y z y g e t i c 8 s y z y g i a l 6 s y z y g y11 s z a i b e l y i t e 6 s z e c i n . . .

freq. |

postings ptr. |

term ptr. |

9 |

→ |

|

92 |

→ |

|

5 |

→ |

|

71 |

→ |

|

12 |

→ |

|

. . . |

. . . |

. . . |

Figure 5.5 Blocked storage with four terms per block. The first block consists of systile, syzygetic, syzygial, and syzygy with lengths of seven, nine, eight, and six characters, respectively. Each term is preceded by a byte encoding its length that indicates how many bytes to skip to reach subsequent terms.

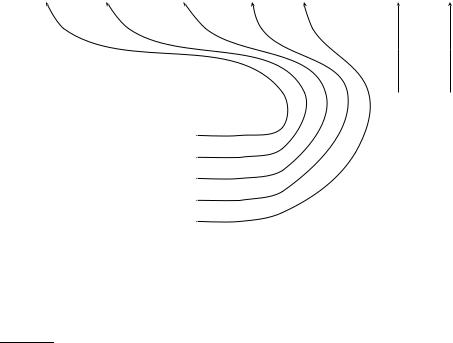

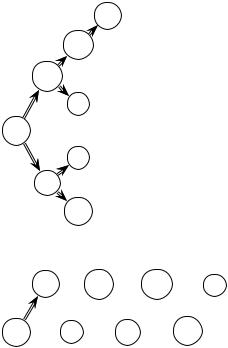

By increasing the block size k, we get better compression. However, there is a tradeoff between compression and the speed of term lookup. For the eight-term dictionary in Figure 5.6, steps in binary search are shown as double lines and steps in list search as simple lines. We search for terms in the uncompressed dictionary by binary search (a). In the compressed dictionary, we first locate the term’s block by binary search and then its position within the list by linear search through the block (b). Searching the uncompressed dictionary in (a) takes on average (0 + 1 + 2 + 3 + 2 + 1 + 2 + 2)/8 ≈ 1.6 steps, assuming each term is equally likely to come up in a query. For example, finding the two terms, aid and box, takes three and two steps, respectively. With blocks of size k = 4 in (b), we need (0 + 1 + 2 + 3 + 4 + 1 + 2 + 3)/8 = 2 steps on average, ≈ 25% more. For example, finding den takes one binary search step and two steps through the block. By increasing k, we can get the size of the compressed dictionary arbitrarily close to the minimum of 400,000 × (4 + 4 + 1 + 8) = 6.8 MB, but term lookup becomes prohibitively slow for large values of k.

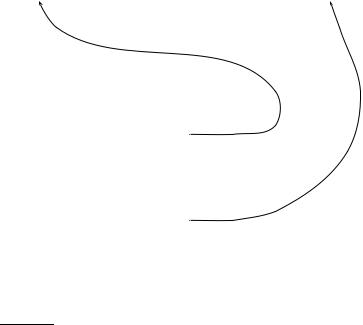

One source of redundancy in the dictionary we have not exploited yet is the fact that consecutive entries in an alphabetically sorted list share common FRONT CODING prefixes. This observation leads to front coding (Figure 5.7). A common prefix

Online edition (c) 2009 Cambridge UP

94 |

5 Index compression |

(a) |

aid |

box

den

ex

job

ox

pit

win

(b)aid  box

box  den

den  ex

ex

job  ox

ox  pit

pit  win

win

Figure 5.6 Search of the uncompressed dictionary (a) and a dictionary compressed by blocking with k = 4 (b).

One block in blocked compression (k = 4) . . .

8automata8automate9au t omatic10automation

. . . further compressed with front coding. 8automat a1 e2 ic3 i on

Figure 5.7 Front coding. A sequence of terms with identical prefix (“automat”) is encoded by marking the end of the prefix with and replacing it with in subsequent terms. As before, the first byte of each entry encodes the number of characters.

Online edition (c) 2009 Cambridge UP

5.3 Postings file compression |

95 |

Table 5.2 Dictionary compression for Reuters-RCV1.

data structure |

size in MB |

dictionary, fixed-width |

11.2 |

dictionary, term pointers into string |

7.6 |

, with blocking, k = 4 |

7.1 |

, with blocking & front coding |

5.9 |

is identified for a subsequence of the term list and then referred to with a special character. In the case of Reuters, front coding saves another 1.2 MB, as we found in an experiment.

Other schemes with even greater compression rely on minimal perfect hashing, that is, a hash function that maps M terms onto [1, . . . , M] without collisions. However, we cannot adapt perfect hashes incrementally because each new term causes a collision and therefore requires the creation of a new perfect hash function. Therefore, they cannot be used in a dynamic environment.

Even with the best compression scheme, it may not be feasible to store the entire dictionary in main memory for very large text collections and for hardware with limited memory. If we have to partition the dictionary onto pages that are stored on disk, then we can index the first term of each page using a B-tree. For processing most queries, the search system has to go to disk anyway to fetch the postings. One additional seek for retrieving the term’s dictionary page from disk is a significant, but tolerable increase in the time it takes to process a query.

Table 5.2 summarizes the compression achieved by the four dictionary data structures.

?Exercise 5.2

Estimate the space usage of the Reuters-RCV1 dictionary with blocks of size k = 8 and k = 16 in blocked dictionary storage.

Exercise 5.3

Estimate the time needed for term lookup in the compressed dictionary of ReutersRCV1 with block sizes of k = 4 (Figure 5.6, b), k = 8, and k = 16. What is the slowdown compared with k = 1 (Figure 5.6, a)?

5.3Postings file compression

Recall from Table 4.2 (page 70) that Reuters-RCV1 has 800,000 documents, 200 tokens per document, six characters per token, and 100,000,000 postings where we define a posting in this chapter as a docID in a postings list, that is, excluding frequency and position information. These numbers

Online edition (c) 2009 Cambridge UP