- •List of Tables

- •List of Figures

- •Table of Notation

- •Preface

- •Boolean retrieval

- •An example information retrieval problem

- •Processing Boolean queries

- •The extended Boolean model versus ranked retrieval

- •References and further reading

- •The term vocabulary and postings lists

- •Document delineation and character sequence decoding

- •Obtaining the character sequence in a document

- •Choosing a document unit

- •Determining the vocabulary of terms

- •Tokenization

- •Dropping common terms: stop words

- •Normalization (equivalence classing of terms)

- •Stemming and lemmatization

- •Faster postings list intersection via skip pointers

- •Positional postings and phrase queries

- •Biword indexes

- •Positional indexes

- •Combination schemes

- •References and further reading

- •Dictionaries and tolerant retrieval

- •Search structures for dictionaries

- •Wildcard queries

- •General wildcard queries

- •Spelling correction

- •Implementing spelling correction

- •Forms of spelling correction

- •Edit distance

- •Context sensitive spelling correction

- •Phonetic correction

- •References and further reading

- •Index construction

- •Hardware basics

- •Blocked sort-based indexing

- •Single-pass in-memory indexing

- •Distributed indexing

- •Dynamic indexing

- •Other types of indexes

- •References and further reading

- •Index compression

- •Statistical properties of terms in information retrieval

- •Dictionary compression

- •Dictionary as a string

- •Blocked storage

- •Variable byte codes

- •References and further reading

- •Scoring, term weighting and the vector space model

- •Parametric and zone indexes

- •Weighted zone scoring

- •Learning weights

- •The optimal weight g

- •Term frequency and weighting

- •Inverse document frequency

- •The vector space model for scoring

- •Dot products

- •Queries as vectors

- •Computing vector scores

- •Sublinear tf scaling

- •Maximum tf normalization

- •Document and query weighting schemes

- •Pivoted normalized document length

- •References and further reading

- •Computing scores in a complete search system

- •Index elimination

- •Champion lists

- •Static quality scores and ordering

- •Impact ordering

- •Cluster pruning

- •Components of an information retrieval system

- •Tiered indexes

- •Designing parsing and scoring functions

- •Putting it all together

- •Vector space scoring and query operator interaction

- •References and further reading

- •Evaluation in information retrieval

- •Information retrieval system evaluation

- •Standard test collections

- •Evaluation of unranked retrieval sets

- •Evaluation of ranked retrieval results

- •Assessing relevance

- •A broader perspective: System quality and user utility

- •System issues

- •User utility

- •Results snippets

- •References and further reading

- •Relevance feedback and query expansion

- •Relevance feedback and pseudo relevance feedback

- •The Rocchio algorithm for relevance feedback

- •Probabilistic relevance feedback

- •When does relevance feedback work?

- •Relevance feedback on the web

- •Evaluation of relevance feedback strategies

- •Pseudo relevance feedback

- •Indirect relevance feedback

- •Summary

- •Global methods for query reformulation

- •Vocabulary tools for query reformulation

- •Query expansion

- •Automatic thesaurus generation

- •References and further reading

- •XML retrieval

- •Basic XML concepts

- •Challenges in XML retrieval

- •A vector space model for XML retrieval

- •Evaluation of XML retrieval

- •References and further reading

- •Exercises

- •Probabilistic information retrieval

- •Review of basic probability theory

- •The Probability Ranking Principle

- •The 1/0 loss case

- •The PRP with retrieval costs

- •The Binary Independence Model

- •Deriving a ranking function for query terms

- •Probability estimates in theory

- •Probability estimates in practice

- •Probabilistic approaches to relevance feedback

- •An appraisal and some extensions

- •An appraisal of probabilistic models

- •Bayesian network approaches to IR

- •References and further reading

- •Language models for information retrieval

- •Language models

- •Finite automata and language models

- •Types of language models

- •Multinomial distributions over words

- •The query likelihood model

- •Using query likelihood language models in IR

- •Estimating the query generation probability

- •Language modeling versus other approaches in IR

- •Extended language modeling approaches

- •References and further reading

- •Relation to multinomial unigram language model

- •The Bernoulli model

- •Properties of Naive Bayes

- •A variant of the multinomial model

- •Feature selection

- •Mutual information

- •Comparison of feature selection methods

- •References and further reading

- •Document representations and measures of relatedness in vector spaces

- •k nearest neighbor

- •Time complexity and optimality of kNN

- •The bias-variance tradeoff

- •References and further reading

- •Exercises

- •Support vector machines and machine learning on documents

- •Support vector machines: The linearly separable case

- •Extensions to the SVM model

- •Multiclass SVMs

- •Nonlinear SVMs

- •Experimental results

- •Machine learning methods in ad hoc information retrieval

- •Result ranking by machine learning

- •References and further reading

- •Flat clustering

- •Clustering in information retrieval

- •Problem statement

- •Evaluation of clustering

- •Cluster cardinality in K-means

- •Model-based clustering

- •References and further reading

- •Exercises

- •Hierarchical clustering

- •Hierarchical agglomerative clustering

- •Time complexity of HAC

- •Group-average agglomerative clustering

- •Centroid clustering

- •Optimality of HAC

- •Divisive clustering

- •Cluster labeling

- •Implementation notes

- •References and further reading

- •Exercises

- •Matrix decompositions and latent semantic indexing

- •Linear algebra review

- •Matrix decompositions

- •Term-document matrices and singular value decompositions

- •Low-rank approximations

- •Latent semantic indexing

- •References and further reading

- •Web search basics

- •Background and history

- •Web characteristics

- •The web graph

- •Spam

- •Advertising as the economic model

- •The search user experience

- •User query needs

- •Index size and estimation

- •Near-duplicates and shingling

- •References and further reading

- •Web crawling and indexes

- •Overview

- •Crawling

- •Crawler architecture

- •DNS resolution

- •The URL frontier

- •Distributing indexes

- •Connectivity servers

- •References and further reading

- •Link analysis

- •The Web as a graph

- •Anchor text and the web graph

- •PageRank

- •Markov chains

- •The PageRank computation

- •Hubs and Authorities

- •Choosing the subset of the Web

- •References and further reading

- •Bibliography

- •Author Index

96 |

5 Index compression |

Table 5.3 Encoding gaps instead of document IDs. For example, we store gaps 107, 5, 43, . . . , instead of docIDs 283154, 283159, 283202, . . . for computer. The first docID is left unchanged (only shown for arachnocentric).

|

|

encoding |

postings list |

|

|

|

|

the |

docIDs |

. . . |

283042 |

283043 |

283044 |

283045 |

|

|

|

gaps |

|

1 |

|

1 |

1 |

computer |

docIDs |

. . . |

283047 |

283154 |

283159 |

283202 |

|

|

|

gaps |

|

107 |

|

5 |

43 |

arachnocentric |

docIDs |

252000 |

500100 |

|

|

|

|

|

|

gaps |

252000 |

248100 |

|

|

|

|

|

|

|

|

|

|

|

correspond to line 3 (“case folding”) in Table 5.1. Document identifiers are log2 800,000 ≈ 20 bits long. Thus, the size of the collection is about 800,000 × 200 × 6 bytes = 960 MB and the size of the uncompressed postings file is 100,000,000 × 20/8 = 250 MB.

To devise a more efficient representation of the postings file, one that uses fewer than 20 bits per document, we observe that the postings for frequent terms are close together. Imagine going through the documents of a collection one by one and looking for a frequent term like computer. We will find a document containing computer, then we skip a few documents that do not contain it, then there is again a document with the term and so on (see Table 5.3). The key idea is that the gaps between postings are short, requiring a lot less space than 20 bits to store. In fact, gaps for the most frequent terms such as the and for are mostly equal to 1. But the gaps for a rare term that occurs only once or twice in a collection (e.g., arachnocentric in Table 5.3) have the same order of magnitude as the docIDs and need 20 bits. For an economical representation of this distribution of gaps, we need a variable encoding method that uses fewer bits for short gaps.

To encode small numbers in less space than large numbers, we look at two types of methods: bytewise compression and bitwise compression. As the names suggest, these methods attempt to encode gaps with the minimum number of bytes and bits, respectively.

5.3.1Variable byte codes

VARIABLE BYTE ENCODING

CONTINUATION BIT

Variable byte (VB) encoding uses an integral number of bytes to encode a gap. The last 7 bits of a byte are “payload” and encode part of the gap. The first bit of the byte is a continuation bit.It is set to 1 for the last byte of the encoded gap and to 0 otherwise. To decode a variable byte code, we read a sequence of bytes with continuation bit 0 terminated by a byte with continuation bit 1. We then extract and concatenate the 7-bit parts. Figure 5.8 gives pseudocode

Online edition (c) 2009 Cambridge UP

5.3 Postings file compression |

97 |

VBENCODENUMBER(n)

1bytes ← hi

2while true

3 do PREPEND(bytes, n mod 128)

4if n < 128

5 then BREAK

6n ← n div 128

7 bytes[LENGTH(bytes)] += 128

8return bytes

VBENCODE(numbers)

1bytestream ← hi

2for each n numbers

3do bytes ← VBENCODENUMBER(n)

4 bytestream ← EXTEND(bytestream, bytes)

5return bytestream

VBDECODE(bytestream)

1 numbers ← hi

2n ← 0

3 for i ← 1 to LENGTH(bytestream)

4do if bytestream[i] < 128

5then n ← 128 × n + bytestream[i]

6else n ← 128 × n + (bytestream[i] − 128)

7APPEND(numbers, n)

8 |

n ← 0 |

9return numbers

Figure 5.8 VB encoding and decoding. The functions div and mod compute integer division and remainder after integer division, respectively. PREPEND adds an element to the beginning of a list, for example, PREPEND(h1, 2i, 3) = h3, 1, 2i. EXTEND extends a list, for example, EXTEND(h1, 2i, h3, 4i) = h1, 2, 3, 4i.

Table 5.4 VB encoding. Gaps are encoded using an integral number of bytes. The first bit, the continuation bit, of each byte indicates whether the code ends with this byte (1) or not (0).

docIDs |

824 |

829 |

215406 |

gaps |

|

5 |

214577 |

VB code |

00000110 10111000 |

10000101 |

00001101 00001100 10110001 |

Online edition (c) 2009 Cambridge UP

98 |

5 Index compression |

Table 5.5 Some examples of unary and γ codes. Unary codes are only shown for the smaller numbers. Commas in γ codes are for readability only and are not part of the actual codes.

number |

unary code |

length |

offset |

γ code |

0 |

0 |

|

|

|

1 |

10 |

0 |

|

0 |

2 |

110 |

10 |

0 |

10,0 |

3 |

1110 |

10 |

1 |

10,1 |

4 |

11110 |

110 |

00 |

110,00 |

9 |

1111111110 |

1110 |

001 |

1110,001 |

13 |

|

1110 |

101 |

1110,101 |

24 |

|

11110 |

1000 |

11110,1000 |

511 |

|

111111110 |

11111111 |

111111110,11111111 |

1025 |

|

11111111110 |

0000000001 |

11111111110,0000000001 |

|

|

|

|

|

for VB encoding and decoding and Table 5.4 an example of a VB-encoded postings list. 1

With VB compression, the size of the compressed index for Reuters-RCV1 is 116 MB as we verified in an experiment. This is a more than 50% reduction of the size of the uncompressed index (see Table 5.6).

The idea of VB encoding can also be applied to larger or smaller units than NIBBLE bytes: 32-bit words, 16-bit words, and 4-bit words or nibbles. Larger words further decrease the amount of bit manipulation necessary at the cost of less effective (or no) compression. Word sizes smaller than bytes get even better compression ratios at the cost of more bit manipulation. In general, bytes offer a good compromise between compression ratio and speed of decom-

pression.

|

|

For most IR systems variable byte codes offer an excellent tradeoff between |

|

|

time and space. They are also simple to implement – most of the alternatives |

|

|

referred to in Section 5.4 are more complex. But if disk space is a scarce |

|

|

resource, we can achieve better compression ratios by using bit-level encod- |

|

|

ings, in particular two closely related encodings: γ codes, which we will turn |

|

|

to next, and δ codes (Exercise 5.9). |

|

5.3.2 |

γ codes |

|

VB codes use an adaptive number of bytes depending on the size of the gap. |

Bit-level codes adapt the length of the code on the finer grained bit level. The

1. Note that the origin is 0 in the table. Because we never need to encode a docID or a gap of 0, in practice the origin is usually 1, so that 10000000 encodes 1, 10000101 encodes 6 (not 5 as in the table), and so on.

Online edition (c) 2009 Cambridge UP

5.3 Postings file compression |

99 |

UNARY CODE simplest bit-level code is unary code. The unary code of n is a string of n 1s followed by a 0 (see the first two columns of Table 5.5). Obviously, this is not a very efficient code, but it will come in handy in a moment.

How efficient can a code be in principle? Assuming the 2n gaps G with 1 ≤ G ≤ 2n are all equally likely, the optimal encoding uses n bits for each G. So some gaps (G = 2n in this case) cannot be encoded with fewer than log2 G bits. Our goal is to get as close to this lower bound as possible.

γ ENCODING A method that is within a factor of optimal is γ encoding. γ codes implement variable-length encoding by splitting the representation of a gap G into a pair of length and offset. Offset is G in binary, but with the leading 1 removed.2 For example, for 13 (binary 1101) offset is 101. Length encodes the length of offset in unary code. For 13, the length of offset is 3 bits, which is 1110 in unary. The γ code of 13 is therefore 1110101, the concatenation of length 1110 and offset 101. The right hand column of Table 5.5 gives additional examples of γ codes.

A γ code is decoded by first reading the unary code up to the 0 that terminates it, for example, the four bits 1110 when decoding 1110101. Now we know how long the offset is: 3 bits. The offset 101 can then be read correctly and the 1 that was chopped off in encoding is prepended: 101 → 1101 = 13.

The length of offset is log2 G bits and the length of length is log2 G + 1 bits, so the length of the entire code is 2 × log2 G + 1 bits. γ codes are always of odd length and they are within a factor of 2 of what we claimed to be the optimal encoding length log2 G. We derived this optimum from the assumption that the 2n gaps between 1 and 2n are equiprobable. But this need not be the case. In general, we do not know the probability distribution over gaps a priori.

The characteristic of a discrete probability distribution3 P that determines ENTROPY its coding properties (including whether a code is optimal) is its entropy H(P),

which is defined as follows:

H(P) = − ∑ P(x) log2 P(x)

x X

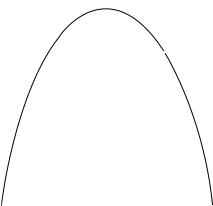

where X is the set of all possible numbers we need to be able to encode (and therefore ∑x X P(x) = 1.0). Entropy is a measure of uncertainty as shown in Figure 5.9 for a probability distribution P over two possible outcomes, namely, X = {x1, x2}. Entropy is maximized (H(P) = 1) for P(x1) = P(x2) = 0.5 when uncertainty about which xi will appear next is largest; and

2.We assume here that G has no leading 0s. If there are any, they are removed before deleting the leading 1.

3.Readers who want to review basic concepts of probability theory may want to consult Rice (2006) or Ross (2006). Note that we are interested in probability distributions over integers (gaps, frequencies, etc.), but that the coding properties of a probability distribution are independent of whether the outcomes are integers or something else.

Online edition (c) 2009 Cambridge UP

100 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

5 Index compression |

|||

|

1.0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

0.8 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

H(P) 0.6 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

0.4 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

0.2 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

0.0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

0.0 |

0.2 |

|

0.4 |

0.6 |

0.8 |

1.0 |

|

|||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

P(x1) |

|

|

|

|

|

|

|

||||

|

Figure 5.9 Entropy H(P) as a function of P(x1) for a sample space with two |

||||||||||||||||||||||||

|

outcomes x1 and x2. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||

|

|

|

|

|

|

||||||||||||||||||||

|

minimized (H(P) = 0) for P(x1) = 1, P(x2) = 0 and for P(x1) = 0, P(x2) = 1 |

||||||||||||||||||||||||

|

when there is absolute certainty. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||

|

It can be shown that the lower bound for the expected length E(L) of a |

||||||||||||||||||||||||

|

code L is H(P) if certain conditions hold (see the references). It can further |

||||||||||||||||||||||||

|

be shown that for 1 < H(P) < ∞, γ encoding is within a factor of 3 of this |

||||||||||||||||||||||||

|

optimal encoding, approaching 2 for large H(P): |

|

|

|

|

|

|

|

|||||||||||||||||

|

|

|

|

|

|

|

E(Lγ ) |

|

≤ 2 + |

|

1 |

|

|

≤ 3. |

|

|

|

|

|

|

|

||||

|

|

|

|

|

|

|

H(P) |

|

H(P) |

|

|

|

|

|

|

|

|||||||||

|

What is remarkable about this result is that it holds for any probability distri- |

||||||||||||||||||||||||

|

bution P. So without knowing anything about the properties of the distribu- |

||||||||||||||||||||||||

|

tion of gaps, we can apply γ codes and be certain that they are within a factor |

||||||||||||||||||||||||

|

of ≈ 2 of the optimal code for distributions of large entropy. A code like γ |

||||||||||||||||||||||||

|

code with the property of being within a factor of optimal for an arbitrary |

||||||||||||||||||||||||

UNIVERSAL CODE |

distribution P is called universal. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||

|

In addition to universality, γ codes have two other properties that are use- |

||||||||||||||||||||||||

PREFIX FREE |

ful for index compression. First, they are prefix free, namely, no γ code is the |

||||||||||||||||||||||||

|

prefix of another. This means that there is always a unique decoding of a |

||||||||||||||||||||||||

|

sequence of γ codes – and we do not need delimiters between them, which |

||||||||||||||||||||||||

|

would decrease the efficiency of the code. The second property is that γ |

||||||||||||||||||||||||

PARAMETER FREE |

codes are parameter free. For many other efficient codes, we have to fit the |

||||||||||||||||||||||||

|

parameters of a model (e.g., the binomial distribution) to the distribution |

||||||||||||||||||||||||

Online edition (c) 2009 Cambridge UP

(5.3)

(5.4)

(5.5)

5.3 Postings file compression |

101 |

of gaps in the index. This complicates the implementation of compression and decompression. For instance, the parameters need to be stored and retrieved. And in dynamic indexing, the distribution of gaps can change, so that the original parameters are no longer appropriate. These problems are avoided with a parameter-free code.

How much compression of the inverted index do γ codes achieve? To answer this question we use Zipf’s law, the term distribution model introduced in Section 5.1.2. According to Zipf’s law, the collection frequency cfi is proportional to the inverse of the rank i, that is, there is a constant c′ such that:

c′ cfi = i .

We can choose a different constant c such that the fractions c/i are relative frequencies and sum to 1 (that is, c/i = cfi/T):

M |

c |

|

M |

1 |

|

||

1 = ∑ |

|

= c |

∑ |

|

= c HM |

||

i |

i |

||||||

i=1 |

|

i=1 |

|

||||

|

|

c = |

1 |

|

|

||

|

|

|

HM |

|

|||

|

|

|

|

|

|||

where M is the number of distinct terms and HM is the Mth harmonic number. 4 Reuters-RCV1 has M = 400,000 distinct terms and HM ≈ ln M, so we

have |

1 |

1 |

1 |

|

1 |

|

||

|

|

. |

||||||

c = |

|

≈ |

|

= |

|

≈ |

|

|

HM |

ln M |

ln 400,000 |

13 |

|||||

Thus the ith term has a relative frequency of roughly 1/(13i), and the expected average number of occurrences of term i in a document of length L is:

|

c |

|

200 × |

1 |

|

|

15 |

L |

≈ |

13 |

|

≈ |

|||

i |

i |

|

i |

||||

where we interpret the relative frequency as a term occurrence probability. Recall that 200 is the average number of tokens per document in ReutersRCV1 (Table 4.2).

Now we have derived term statistics that characterize the distribution of terms in the collection and, by extension, the distribution of gaps in the postings lists. From these statistics, we can calculate the space requirements for an inverted index compressed with γ encoding. We first stratify the vocabulary into blocks of size Lc = 15. On average, term i occurs 15/i times per

4. Note that, unfortunately, the conventional symbol for both entropy and harmonic number is H. Context should make clear which is meant in this chapter.

Online edition (c) 2009 Cambridge UP

102 |

5 Index compression |

N documents

Lc most

frequent N gaps of 1 each terms

Lc next most

frequent N/2 gaps of 2 each terms

Lc next most

frequent N/3 gaps of 3 each terms

. . . . . .

Figure 5.10 Stratification of terms for estimating the size of a γ encoded inverted index.

document. So the average number of occurrences f per document is 1 ≤ f for terms in the first block, corresponding to a total number of N gaps per term.

The average is 12 ≤ f < 1 for terms in the second block, corresponding to

N/2 gaps per term, and 13 ≤ f < 12 for terms in the third block, corresponding to N/3 gaps per term, and so on. (We take the lower bound because it simplifies subsequent calculations. As we will see, the final estimate is too pessimistic, even with this assumption.) We will make the somewhat unrealistic assumption that all gaps for a given term have the same size as shown in Figure 5.10. Assuming such a uniform distribution of gaps, we then have gaps of size 1 in block 1, gaps of size 2 in block 2, and so on.

Encoding the N/j gaps of size j with γ codes, the number of bits needed for the postings list of a term in the jth block (corresponding to one row in

the figure) is: |

|

|

|

|

|

bits-per-row = |

N |

× (2 × log2 j + 1) |

|||

j |

|||||

≈ |

2N log |

2 |

j |

. |

|

|

|

|

|||

|

j |

|

|

||

To encode the entire block, we need (Lc) · (2N log2 j)/j bits. There are M/(Lc) blocks, so the postings file as a whole will take up:

M

Lc 2NLc log2 j (5.6) ∑ .

j=1 j

Online edition (c) 2009 Cambridge UP

5.3 Postings file compression |

103 |

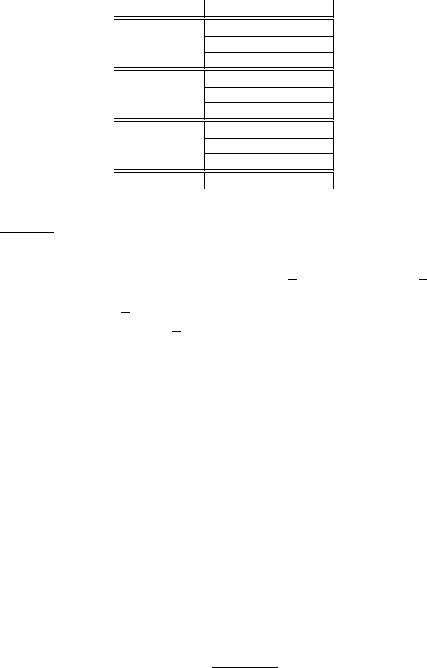

Table 5.6 Index and dictionary compression for Reuters-RCV1. The compression ratio depends on the proportion of actual text in the collection. Reuters-RCV1 contains a large amount of XML markup. Using the two best compression schemes, γ encoding and blocking with front coding, the ratio compressed index to collection size is therefore especially small for Reuters-RCV1: (101 + 5.9)/3600 ≈ 0.03.

|

|

|

data structure |

|

|

|

size in MB |

|

|

|

|

dictionary, fixed-width |

|

|

11.2 |

||

|

|

|

dictionary, term pointers into string |

|

7.6 |

|||

|

|

|

, with blocking, k = 4 |

|

|

7.1 |

||

|

|

|

, with blocking & front coding |

|

|

5.9 |

||

|

|

|

collection (text, xml markup etc) |

|

|

3600.0 |

||

|

|

|

collection (text) |

|

|

|

960.0 |

|

|

|

|

term incidence matrix |

|

|

|

40,000.0 |

|

|

|

|

postings, uncompressed (32-bit words) |

400.0 |

||||

|

|

|

postings, uncompressed (20 bits) |

|

|

250.0 |

||

|

|

|

postings, variable byte encoded |

|

|

116.0 |

||

|

|

|

postings, γ encoded |

|

|

|

101.0 |

|

|

|

|

|

|

||||

|

For Reuters-RCV1, LcM ≈ 400,000/15 ≈ 27,000 and |

|

||||||

(5.7) |

27,000 2 × 106 |

× 15 log2 j |

|

224 MB. |

||||

|

|

∑ |

|

j |

≈ |

|||

|

|

|

j=1 |

|

|

|

|

|

So the postings file of the compressed inverted index for our 960 MB collection has a size of 224 MB, one fourth the size of the original collection.

When we run γ compression on Reuters-RCV1, the actual size of the compressed index is even lower: 101 MB, a bit more than one tenth of the size of the collection. The reason for the discrepancy between predicted and actual value is that (i) Zipf’s law is not a very good approximation of the actual distribution of term frequencies for Reuters-RCV1 and (ii) gaps are not uniform. The Zipf model predicts an index size of 251 MB for the unrounded numbers from Table 4.2. If term frequencies are generated from the Zipf model and a compressed index is created for these artificial terms, then the compressed size is 254 MB. So to the extent that the assumptions about the distribution of term frequencies are accurate, the predictions of the model are correct.

Table 5.6 summarizes the compression techniques covered in this chapter. The term incidence matrix (Figure 1.1, page 4) for Reuters-RCV1 has size 400,000 × 800,000 = 40 × 8 × 109 bits or 40 GB.

γ codes achieve great compression ratios – about 15% better than variable byte codes for Reuters-RCV1. But they are expensive to decode. This is because many bit-level operations – shifts and masks – are necessary to decode a sequence of γ codes as the boundaries between codes will usually be

Online edition (c) 2009 Cambridge UP

104 |

5 |

Index compression |

|

somewhere in the middle of a machine word. As a result, query processing is |

|

|

more expensive for γ codes than for variable byte codes. Whether we choose |

|

|

variable byte or γ encoding depends on the characteristics of an application, |

|

|

for example, on the relative weights we give to conserving disk space versus |

|

|

maximizing query response time. |

|

|

The compression ratio for the index in Table 5.6 is about 25%: 400 MB (un- |

|

|

compressed, each posting stored as a 32-bit word) versus 101 MB (γ) and 116 |

|

|

MB (VB). This shows that both γ and VB codes meet the objectives we stated |

|

|

in the beginning of the chapter. Index compression substantially improves |

|

|

time and space efficiency of indexes by reducing the amount of disk space |

|

|

needed, increasing the amount of information that can be kept in the cache, |

|

|

and speeding up data transfers from disk to memory. |

|

? |

Exercise 5.4 |

[ ] |

Compute variable byte codes for the numbers in Tables 5.3 and 5.5. |

[ ] |

|

|

Exercise 5.5 |

|

Compute variable byte and γ codes for the postings list h777, 17743, 294068, 31251336i. Use gaps instead of docIDs where possible. Write binary codes in 8-bit blocks.

Exercise 5.6

Consider the postings list h4, 10, 11, 12, 15, 62, 63, 265, 268, 270, 400i with a correspond-

ing list of gaps h4, 6, 1, 1, 3, 47, 1, 202, 3, 2, 130i. Assume that the length of the postings list is stored separately, so the system knows when a postings list is complete. Using variable byte encoding: (i) What is the largest gap you can encode in 1 byte? (ii) What is the largest gap you can encode in 2 bytes? (iii) How many bytes will the above postings list require under this encoding? (Count only space for encoding the sequence of numbers.)

Exercise 5.7

A little trick is to notice that a gap cannot be of length 0 and that the stuff left to encode after shifting cannot be 0. Based on these observations: (i) Suggest a modification to variable byte encoding that allows you to encode slightly larger gaps in the same amount of space. (ii) What is the largest gap you can encode in 1 byte? (iii) What is the largest gap you can encode in 2 bytes? (iv) How many bytes will the postings list in Exercise 5.6 require under this encoding? (Count only space for encoding the sequence of numbers.)

Exercise 5.8 |

[ ] |

From the following sequence of γ-coded gaps, reconstruct first the gap sequence and then the postings sequence: 1110001110101011111101101111011.

Exercise 5.9

γcodes are relatively inefficient for large numbers (e.g., 1025 in Table 5.5) as they

δCODES encode the length of the offset in inefficient unary code. δ codes differ from γ codes

in that they encode the first part of the code (length) in γ code instead of unary code. The encoding of offset is the same. For example, the δ code of 7 is 10,0,11 (again, we add commas for readability). 10,0 is the γ code for length (2 in this case) and the encoding of offset (11) is unchanged. (i) Compute the δ codes for the other numbers

Online edition (c) 2009 Cambridge UP