- •List of Tables

- •List of Figures

- •Table of Notation

- •Preface

- •Boolean retrieval

- •An example information retrieval problem

- •Processing Boolean queries

- •The extended Boolean model versus ranked retrieval

- •References and further reading

- •The term vocabulary and postings lists

- •Document delineation and character sequence decoding

- •Obtaining the character sequence in a document

- •Choosing a document unit

- •Determining the vocabulary of terms

- •Tokenization

- •Dropping common terms: stop words

- •Normalization (equivalence classing of terms)

- •Stemming and lemmatization

- •Faster postings list intersection via skip pointers

- •Positional postings and phrase queries

- •Biword indexes

- •Positional indexes

- •Combination schemes

- •References and further reading

- •Dictionaries and tolerant retrieval

- •Search structures for dictionaries

- •Wildcard queries

- •General wildcard queries

- •Spelling correction

- •Implementing spelling correction

- •Forms of spelling correction

- •Edit distance

- •Context sensitive spelling correction

- •Phonetic correction

- •References and further reading

- •Index construction

- •Hardware basics

- •Blocked sort-based indexing

- •Single-pass in-memory indexing

- •Distributed indexing

- •Dynamic indexing

- •Other types of indexes

- •References and further reading

- •Index compression

- •Statistical properties of terms in information retrieval

- •Dictionary compression

- •Dictionary as a string

- •Blocked storage

- •Variable byte codes

- •References and further reading

- •Scoring, term weighting and the vector space model

- •Parametric and zone indexes

- •Weighted zone scoring

- •Learning weights

- •The optimal weight g

- •Term frequency and weighting

- •Inverse document frequency

- •The vector space model for scoring

- •Dot products

- •Queries as vectors

- •Computing vector scores

- •Sublinear tf scaling

- •Maximum tf normalization

- •Document and query weighting schemes

- •Pivoted normalized document length

- •References and further reading

- •Computing scores in a complete search system

- •Index elimination

- •Champion lists

- •Static quality scores and ordering

- •Impact ordering

- •Cluster pruning

- •Components of an information retrieval system

- •Tiered indexes

- •Designing parsing and scoring functions

- •Putting it all together

- •Vector space scoring and query operator interaction

- •References and further reading

- •Evaluation in information retrieval

- •Information retrieval system evaluation

- •Standard test collections

- •Evaluation of unranked retrieval sets

- •Evaluation of ranked retrieval results

- •Assessing relevance

- •A broader perspective: System quality and user utility

- •System issues

- •User utility

- •Results snippets

- •References and further reading

- •Relevance feedback and query expansion

- •Relevance feedback and pseudo relevance feedback

- •The Rocchio algorithm for relevance feedback

- •Probabilistic relevance feedback

- •When does relevance feedback work?

- •Relevance feedback on the web

- •Evaluation of relevance feedback strategies

- •Pseudo relevance feedback

- •Indirect relevance feedback

- •Summary

- •Global methods for query reformulation

- •Vocabulary tools for query reformulation

- •Query expansion

- •Automatic thesaurus generation

- •References and further reading

- •XML retrieval

- •Basic XML concepts

- •Challenges in XML retrieval

- •A vector space model for XML retrieval

- •Evaluation of XML retrieval

- •References and further reading

- •Exercises

- •Probabilistic information retrieval

- •Review of basic probability theory

- •The Probability Ranking Principle

- •The 1/0 loss case

- •The PRP with retrieval costs

- •The Binary Independence Model

- •Deriving a ranking function for query terms

- •Probability estimates in theory

- •Probability estimates in practice

- •Probabilistic approaches to relevance feedback

- •An appraisal and some extensions

- •An appraisal of probabilistic models

- •Bayesian network approaches to IR

- •References and further reading

- •Language models for information retrieval

- •Language models

- •Finite automata and language models

- •Types of language models

- •Multinomial distributions over words

- •The query likelihood model

- •Using query likelihood language models in IR

- •Estimating the query generation probability

- •Language modeling versus other approaches in IR

- •Extended language modeling approaches

- •References and further reading

- •Relation to multinomial unigram language model

- •The Bernoulli model

- •Properties of Naive Bayes

- •A variant of the multinomial model

- •Feature selection

- •Mutual information

- •Comparison of feature selection methods

- •References and further reading

- •Document representations and measures of relatedness in vector spaces

- •k nearest neighbor

- •Time complexity and optimality of kNN

- •The bias-variance tradeoff

- •References and further reading

- •Exercises

- •Support vector machines and machine learning on documents

- •Support vector machines: The linearly separable case

- •Extensions to the SVM model

- •Multiclass SVMs

- •Nonlinear SVMs

- •Experimental results

- •Machine learning methods in ad hoc information retrieval

- •Result ranking by machine learning

- •References and further reading

- •Flat clustering

- •Clustering in information retrieval

- •Problem statement

- •Evaluation of clustering

- •Cluster cardinality in K-means

- •Model-based clustering

- •References and further reading

- •Exercises

- •Hierarchical clustering

- •Hierarchical agglomerative clustering

- •Time complexity of HAC

- •Group-average agglomerative clustering

- •Centroid clustering

- •Optimality of HAC

- •Divisive clustering

- •Cluster labeling

- •Implementation notes

- •References and further reading

- •Exercises

- •Matrix decompositions and latent semantic indexing

- •Linear algebra review

- •Matrix decompositions

- •Term-document matrices and singular value decompositions

- •Low-rank approximations

- •Latent semantic indexing

- •References and further reading

- •Web search basics

- •Background and history

- •Web characteristics

- •The web graph

- •Spam

- •Advertising as the economic model

- •The search user experience

- •User query needs

- •Index size and estimation

- •Near-duplicates and shingling

- •References and further reading

- •Web crawling and indexes

- •Overview

- •Crawling

- •Crawler architecture

- •DNS resolution

- •The URL frontier

- •Distributing indexes

- •Connectivity servers

- •References and further reading

- •Link analysis

- •The Web as a graph

- •Anchor text and the web graph

- •PageRank

- •Markov chains

- •The PageRank computation

- •Hubs and Authorities

- •Choosing the subset of the Web

- •References and further reading

- •Bibliography

- •Author Index

15.4 Machine learning methods in ad hoc information retrieval |

341 |

presumably works because most such sentences are somehow more central to the concerns of the document.

? |

Exercise 15.4 |

|

|

[ ] |

Spam email often makes use of various cloaking techniques to try to get through. One |

||||

method is to pad or substitute characters so as to defeat word-based text classifiers. |

||||

|

For example, you see terms like the following in spam email: |

|||

|

Rep1icaRolex |

bonmus |

Viiiaaaagra |

pi11z |

|

PHARlbdMACY |

[LEV]i[IT]l[RA] |

se xual |

ClAfLlS |

Discuss how you could engineer features that would largely defeat this strategy.

Exercise 15.5 |

[ ] |

Another strategy often used by purveyors of email spam is to follow the message they wish to send (such as buying a cheap stock or whatever) with a paragraph of text from another innocuous source (such as a news article). Why might this strategy be effective? How might it be addressed by a text classifier?

Exercise 15.6 |

[ ] |

What other kinds of features appear as if they would be useful in an email spam classifier?

15.4Machine learning methods in ad hoc information retrieval

Rather than coming up with term and document weighting functions by hand, as we primarily did in Chapter 6, we can view different sources of relevance signal (cosine score, title match, etc.) as features in a learning problem. A classifier that has been fed examples of relevant and nonrelevant documents for each of a set of queries can then figure out the relative weights of these signals. If we configure the problem so that there are pairs of a document and a query which are assigned a relevance judgment of relevant or nonrelevant, then we can think of this problem too as a text classification problem. Taking such a classification approach is not necessarily best, and we present an alternative in Section 15.4.2. Nevertheless, given the material we have covered, the simplest place to start is to approach this problem as a classification problem, by ordering the documents according to the confidence of a two-class classifier in its relevance decision. And this move is not purely pedagogical; exactly this approach is sometimes used in practice.

15.4.1A simple example of machine-learned scoring

In this section we generalize the methodology of Section 6.1.2 (page 113) to machine learning of the scoring function. In Section 6.1.2 we considered a case where we had to combine Boolean indicators of relevance; here we consider more general factors to further develop the notion of machine-learned

Online edition (c) 2009 Cambridge UP

342 |

|

|

15 Support vector machines and machine learning on documents |

||||

|

|

|

|

|

|

|

|

|

Example |

DocID |

Query |

Cosine score |

ω |

|

Judgment |

|

Φ1 |

37 |

linux operating system |

0.032 |

3 |

|

relevant |

|

Φ2 |

37 |

penguin logo |

0.02 |

4 |

|

nonrelevant |

|

Φ3 |

238 |

operating system |

0.043 |

2 |

|

relevant |

|

Φ4 |

238 |

runtime environment |

0.004 |

2 |

|

nonrelevant |

|

Φ5 |

1741 |

kernel layer |

0.022 |

3 |

|

relevant |

|

Φ6 |

2094 |

device driver |

0.03 |

2 |

|

relevant |

|

Φ7 |

3191 |

device driver |

0.027 |

5 |

|

nonrelevant |

|

· · · |

· · · |

· · · |

· · · |

· · · |

|

· · · |

Table 15.3 Training examples for machine-learned scoring.

relevance. In particular, the factors we now consider go beyond Boolean functions of query term presence in document zones, as in Section 6.1.2.

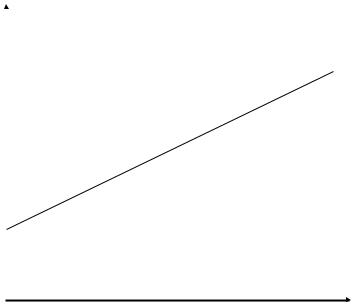

We develop the ideas in a setting where the scoring function is a linear combination of two factors: (1) the vector space cosine similarity between query and document and (2) the minimum window width ω within which the query terms lie. As we noted in Section 7.2.2 (page 144), query term proximity is often very indicative of a document being on topic, especially with longer documents and on the web. Among other things, this quantity gives us an implementation of implicit phrases. Thus we have one factor that depends on the statistics of query terms in the document as a bag of words, and another that depends on proximity weighting. We consider only two features in the development of the ideas because a two-feature exposition remains simple enough to visualize. The technique can be generalized to many more features.

As in Section 6.1.2, we are provided with a set of training examples, each of which is a pair consisting of a query and a document, together with a relevance judgment for that document on that query that is either relevant or nonrelevant. For each such example we can compute the vector space cosine similarity, as well as the window width ω. The result is a training set as shown in Table 15.3, which resembles Figure 6.5 (page 115) from Section 6.1.2.

Here, the two features (cosine score denoted α and window width ω) are real-valued predictors. If we once again quantify the judgment relevant as 1 and nonrelevant as 0, we seek a scoring function that combines the values of the features to generate a value that is (close to) 0 or 1. We wish this function to be in agreement with our set of training examples as far as possible. Without loss of generality, a linear classifier will use a linear combination of features of the form

(15.17) |

Score(d, q) = Score(α, ω) = aα + bω + c, |

with the coefficients a, b, c to be learned from the training data. While it is

Online edition (c) 2009 Cambridge UP

15.4 Machine learning methods in ad hoc information retrieval |

343 |

|

re |

|

0 |

. 0 |

5 |

|

|

|

|

|

|

|

|

R |

||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

co |

|

|

|

|

|

|

|

R |

|

|

R |

R |

|

||

|

i osn |

|

|

|

|

|

R |

|

|

|

N |

N |

||||

|

c |

0 |

. 0 |

2 5 |

|

|

R |

|

|

R |

|

NN |

|

|||

|

|

|

|

|

|

|

|

|

N |

|

|

N |

|

|

|

|

|

Figure 15.7 A collection of training examples. Each R denotes a training example |

|||||||||||||||

labeled relevant0 2 |

, while each3 |

NT ise |

ar m |

trainingp 4 r o x |

examplei m i t y 5 |

|

labeled nonrelevant. |

|||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

possible to formulate this as an error minimization problem as we did in Section 6.1.2, it is instructive to visualize the geometry of Equation (15.17). The examples in Table 15.3 can be plotted on a two-dimensional plane with axes corresponding to the cosine score α and the window width ω. This is depicted in Figure 15.7.

In this setting, the function Score(α, ω) from Equation (15.17) represents a plane “hanging above” Figure 15.7. Ideally this plane (in the direction perpendicular to the page containing Figure 15.7) assumes values close to 1 above the points marked R, and values close to 0 above the points marked N. Since a plane is unlikely to assume only values close to 0 or 1 above the training sample points, we make use of thresholding: given any query and document for which we wish to determine relevance, we pick a value θ and if Score(α, ω) > θ we declare the document to be relevant, else we declare the document to be nonrelevant. As we know from Figure 14.8 (page 301), all points that satisfy Score(α, ω) = θ form a line (shown as a dashed line in Figure 15.7) and we thus have a linear classifier that separates relevant

Online edition (c) 2009 Cambridge UP

344 |

15 Support vector machines and machine learning on documents |

from nonrelevant instances. Geometrically, we can find the separating line as follows. Consider the line passing through the plane Score(α, ω) whose height is θ above the page containing Figure 15.7. Project this line down onto Figure 15.7; this will be the dashed line in Figure 15.7. Then, any subsequent query/document pair that falls below the dashed line in Figure 15.7 is deemed nonrelevant; above the dashed line, relevant.

Thus, the problem of making a binary relevant/nonrelevant judgment given training examples as above turns into one of learning the dashed line in Figure 15.7 separating relevant training examples from the nonrelevant ones. Being in the α-ω plane, this line can be written as a linear equation involving α and ω, with two parameters (slope and intercept). The methods of linear classification that we have already looked at in Chapters 13–15 provide methods for choosing this line. Provided we can build a sufficiently rich collection of training samples, we can thus altogether avoid hand-tuning score functions as in Section 7.2.3 (page 145). The bottleneck of course is the ability to maintain a suitably representative set of training examples, whose relevance assessments must be made by experts.

15.4.2Result ranking by machine learning

The above ideas can be readily generalized to functions of many more than two variables. There are lots of other scores that are indicative of the relevance of a document to a query, including static quality (PageRank-style measures, discussed in Chapter 21), document age, zone contributions, document length, and so on. Providing that these measures can be calculated for a training document collection with relevance judgments, any number of such measures can be used to train a machine learning classifier. For instance, we could train an SVM over binary relevance judgments, and order documents based on their probability of relevance, which is monotonic with the documents’ signed distance from the decision boundary.

However, approaching IR result ranking like this is not necessarily the right way to think about the problem. Statisticians normally first divide

REGRESSION

ORDINAL REGRESSION

problems into classification problems (where a categorical variable is predicted) versus regression problems (where a real number is predicted). In between is the specialized field of ordinal regression where a ranking is predicted. Machine learning for ad hoc retrieval is most properly thought of as an ordinal regression problem, where the goal is to rank a set of documents for a query, given training data of the same sort. This formulation gives some additional power, since documents can be evaluated relative to other candidate documents for the same query, rather than having to be mapped to a global scale of goodness, while also weakening the problem space, since just a ranking is required rather than an absolute measure of relevance. Issues of ranking are especially germane in web search, where the ranking at

Online edition (c) 2009 Cambridge UP

15.4 Machine learning methods in ad hoc information retrieval |

345 |

the very top of the results list is exceedingly important, whereas decisions of relevance of a document to a query may be much less important. Such work can and has been pursued using the structural SVM framework which we mentioned in Section 15.2.2, where the class being predicted is a ranking of results for a query, but here we will present the slightly simpler ranking SVM.

RANKING SVM The construction of a ranking SVM proceeds as follows. We begin with a set of judged queries. For each training query q, we have a set of documents returned in response to the query, which have been totally ordered by a person for relevance to the query. We construct a vector of features ψj = ψ(dj, q) for each document/query pair, using features such as those discussed in Section 15.4.1, and many more. For two documents di and dj, we then form the vector of feature differences:

(15.18) |

Φ(di, dj, q) = ψ(di, q) − ψ(dj, q) |

|

By hypothesis, one of di and dj has been judged more relevant. If di is |

|

judged more relevant than dj, denoted di dj (di should precede dj in the |

results ordering), then we will assign the vector Φ(di, dj, q) the class yijq = +1; otherwise −1. The goal then is to build a classifier which will return

(15.19) w~ TΦ(di, dj, q) > 0 iff di dj

This SVM learning task is formalized in a manner much like the other examples that we saw before:

(15.20) Find w~ , and ξi,j ≥ 0 such that:

• |

21 w~ Tw~ + C ∑i,j ξi,j is minimized |

• |

and for all {Φ(di, dj, q) : di dj}, w~ TΦ(di, dj, q) ≥ 1 − ξi,j |

We can leave out yijq in the statement of the constraint, since we only need to consider the constraint for document pairs ordered in one direction, sinceis antisymmetric. These constraints are then solved, as before, to give a linear classifier which can rank pairs of documents. This approach has been used to build ranking functions which outperform standard hand-built ranking functions in IR evaluations on standard data sets; see the references for papers that present such results.

Both of the methods that we have just looked at use a linear weighting of document features that are indicators of relevance, as has most work in this area. It is therefore perhaps interesting to note that much of traditional IR weighting involves nonlinear scaling of basic measurements (such as logweighting of term frequency, or idf). At the present time, machine learning is very good at producing optimal weights for features in a linear combination

Online edition (c) 2009 Cambridge UP