Handbook_of_statistical_analysis_using_SAS

.pdf

|

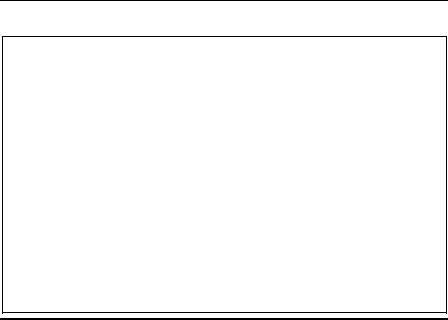

Odds Ratio Estimates |

|

||

|

|

Point |

95% Wald |

|

Effect |

|

Estimate |

Confidence Limits |

|

work Skilled vs Office |

1.357 |

1.030 |

1.788 |

|

work Unskilled vs Office |

0.633 |

0.496 |

0.808 |

|

tenure rent vs own |

0.363 |

0.290 |

0.454 |

|

agegrp 2 |

vs 1 |

0.893 |

0.683 |

1.168 |

agegrp 3 |

vs 1 |

0.646 |

0.491 |

0.851 |

Association of Predicted Probabilities and Observed Responses

Percent Concordant |

62 |

.8 |

Somers' D |

0.327 |

Percent Discordant |

30 |

.1 |

Gamma |

0.352 |

Percent Tied |

7 |

.1 |

Tau-a |

0.159 |

Pairs |

614188 |

c |

0.663 |

|

Display 8.10

Work, tenure, and age group are all selected in the final model; only the type of accommodation is dropped. The estimated conditional odds ratio suggests that skilled workers are more likely to respond “yes” to the question asked than office workers (estimated odds ratio 1.357 with 95% confidence interval 1.030, 1.788). And unskilled workers are less likely than office workers to respond “yes” (0.633, 0.496–0.808). People who rent their home are far less likely to answer “yes” than people who own their home (0.363, 0.290–0.454). Finally, it appears that people in the two younger age groups are more likely to respond “yes” than the oldest respondents.

Exercises

8.1In the text, a main-effects-only logistic regression was fitted to the GHQ data. This assumes that the effect of GHQ on caseness is the same for men and women. Fit a model where this assumption is not made, and assess which model best fits the data.

8.2For the ESR and plasma protein data, fit a logistic model that includes quadratic effects for both fibrinogen and γ -globulin. Does the model fit better than the model selected in the text?

8.3Investigate using logistic regression on the Danish do-it-yourself data allowing for interactions among some factors.

©2002 CRC Press LLC

Chapter 9

Generalised Linear

Models: School

Attendance Amongst

Australian School

Children

9.1 Description of Data

This chapter reanalyses a number of data sets from previous chapters, in particular the data on school attendance in Australian school children used in Chapter 6. The aim of this chapter is to introduce the concept of generalized linear models and to illustrate how they can be applied in SAS using proc genmod.

9.2 Generalised Linear Models

The analysis of variance models considered in Chapters 5 and 6 and the multiple regression model described in Chapter 4 are, essentially, completely equivalent. Both involve a linear combination of a set of explanatory variables (dummy variables in the case of analysis of variance) as

©2002 CRC Press LLC

a model for an observed response variable. And both include residual terms assumed to have a normal distribution. (The equivalence of analysis of variance and multiple regression models is spelt out in more detail in Everitt [2001].)

The logistic regression model encountered in Chapter 8 also has similarities to the analysis of variance and multiple regression models. Again, a linear combination of explanatory variables is involved, although here the binary response variable is not modelled directly (for the reasons outlined in Chapter 8), but via a logistic transformation.

Multiple regression, analysis of variance, and logistic regression models can all, in fact, be included in a more general class of models known as generalised linear models. The essence of this class of models is a linear

predictor of the form: |

|

η = β 0 + β 1x1 + … + β pxp = β ′x |

(9.1) |

where β ′ = [β 0, β 1, …, β p] and x′ = [1, x1, x2, …, xp]. The linear predictor determines the expectation, µ , of the response variable. In linear regres-

sion, where the response is continuous, µ is directly equated with the linear predictor. This is not sensible when the response is dichotomous because in this case the expectation is a probability that must satisfy 0 ≤ µ ≤ 1. Consequently, in logistic regression, the linear predictor is equated with the logistic function of µ , log µ /(1 – µ ).

In the generalised linear model formulation, the linear predictor can be equated with a chosen function of µ , g(µ ), and the model now becomes:

η = g(µ ) |

(9.2) |

The function g is referred to as a link function.

In linear regression (and analysis of variance), the probability distribution of the response variable is assumed to be normal with mean µ . In logistic regression, a binomial distribution is assumed with probability parameter µ . Both distributions, the normal and binomial distributions, come from the same family of distributions, called the exponential family,

and are given by: |

|

f(y; θ , φ ) = exp{(yθ – b(θ ))/a(φ ) + c(y, φ )} |

(9.3) |

For example, for the normal distribution, |

|

©2002 CRC Press LLC

1 |

|

|

|

–( y – µ ) |

2 |

⁄ 2σ |

2 |

|

|

|

|

|

|||

f( y;θ ,φ ) = --------------------- exp{ |

|

|

} |

|

|

|

|

|

|||||||

( 2πσ |

2) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

( yµ |

–µ |

2 |

⁄ 2) ⁄σ |

2 |

– |

1 |

2 |

⁄ σ |

2 |

+ log( 2 |

πσ |

2 |

|

|

= exp |

|

|

--( y |

|

|

) ) (9.4) |

|||||||||

|

|

|

|

|

|

|

|

2 |

|

|

|

|

|

|

|

so that θ = µ , b(θ ) = θ 2/2, φ |

= σ 2 |

and a(φ ) = φ . |

|

|

|

|

|

||||||||

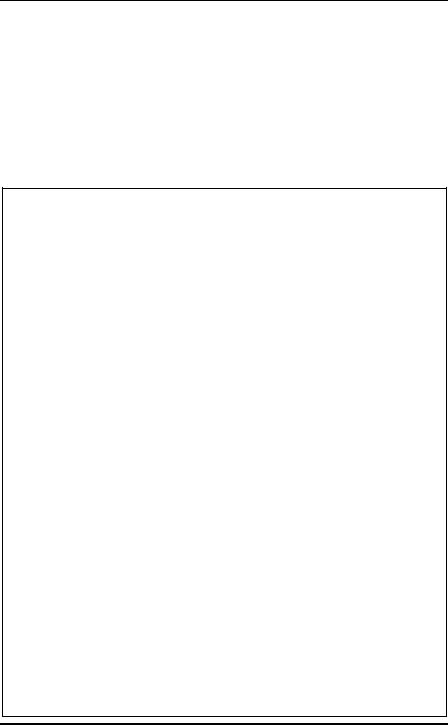

The parameter θ , a function of µ , is called the canonical link. The canonical link is frequently chosen as the link function, although the canonical link is not necessarily more appropriate than any other link. Display 9.1 lists some of the most common distributions and their canonical link functions used in generalised linear models.

|

|

|

Variance |

Dispersion |

Link |

g(µ ) = θ (µ ) |

|

|

|

|

Distribution |

Function |

Parameter |

Function |

|

||

|

|

|

|

|

|

|

|

|

|

|

Normal |

1 |

σ 2 |

Identity |

µ |

|

|

|

|

Binomial |

µ (1 – µ ) |

1 |

Logit |

log(µ /(1 – µ )) |

|

|

|

|

Poisson |

µ |

1 |

Log |

log(µ ) |

|

|

|

|

Gamma |

µ 2 |

v–1 |

Reciprocal |

1/µ |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Display 9.1 |

|

|

|

|

The mean and variance of a random variable Y having the distribution in Eq. (9.3) are given, respectively, by:

E(Y) = b′(0) = µ |

(9.5) |

and |

|

var(Y) = b″ (θ ) a(φ ) = V(µ ) a(φ ) |

(9.6) |

where b′(θ ) and b″ (θ ) denote the first and second derivative of b(θ ) with respect to θ , and the variance function V(µ ) is obtained by expression b″ (θ ) as a function of µ . It can be seen from Eq. (9.4) that the variance for the normal distribution is simply σ 2, regardless of the value of the mean µ , that is, the variance function is 1.

The data on Australian school children will be analysed by assuming a Poisson distribution for the number of days absent from school. The Poisson distribution is the appropriate distribution of the number of events

©2002 CRC Press LLC

observed if these events occur independently in continuous time at a constant instantaneous probability rate (or incidence rate); see, for example, Clayton and Hills (1993). The Poisson distribution is given by:

f(y; µ ) = µ ye– /y!, y = 0, 1, 2, … |

(9.7) |

Taking the logarithm and summing over observations y1, y2, …, yn, the log likelihood is

|

|

l( ; y1, y2, …, yn) =∑ { ( yi lnµ i – µ i) – ln( yi!)} |

(9.8) |

|

|

i |

|

Where µ′ |

= [µ |

1…µ n] gives the expected values of each observation. Here |

|

θ = ln µ |

, b(θ |

) = exp(θ ), φ = 1, and var(y) = exp(θ ) = µ . Therefore, the |

|

variance of the Poisson distribution is not constant, but equal to the mean. Unlike the normal distribution, the Poisson distribution has no separate parameter for the variance and the same is true of the Binomial distribution. Display 9.1 shows the variance functions and dispersion parameters for some commonly used probability distributions.

9.2.1 Model Selection and Measure of Fit

Lack of fit in a generalized linear model can be expressed by the deviance, which is minus twice the difference between the maximized log-likelihood of the model and the maximum likelihood achievable, that is, the maximized likelihood of the full or saturated model. For the normal distribution, the deviance is simply the residual sum of squares. Another measure of lack of fit is the generalized Pearson X2,

X |

2 |

= |

∑ |

ˆ |

i) |

2 |

ˆ |

(9.9) |

|

( yi – µ |

|

⁄ V( µ i) |

i

which, for the Poisson distribution, is just the familiar statistic for twoway cross-tabulations (since V(µˆ) = µˆ). Both the deviance and Pearson X2 have chi-square distributions when the sample size tends to infinity. When the dispersion parameter φ is fixed (not estimated), an analysis of variance can be used for testing nested models in the same way as analysis of variance is used for linear models. The difference in deviance between two models is simply compared with the chi-square distribution, with degrees of freedom equal to the difference in model degrees of freedom.

The Pearson and deviance residuals are defined as the (signed) square roots of the contributions of the individual observations to the Pearson

©2002 CRC Press LLC

X2 and deviance, respectively. These residuals can be used to assess the appropriateness of the link and variance functions.

A relatively common phenomenon with binary and count data is overdispersion, that is, the variance is greater than that of the assumed distribution (binomial and Poisson, respectively). This overdispersion may be due to extra variability in the parameter µ , which has not been completely explained by the covariates. One way of addressing the problem is to allow µ to vary randomly according to some (prior) distribution and to assume that conditional on the parameter having a certain value, the response variable follows the binomial (or Poisson) distribution. Such models are called random effects models (see Pinheiro and Bates [2000] and Chapter 11).

A more pragmatic way of accommodating overdispersion in the model is to assume that the variance is proportional to the variance function, but to estimate the dispersion rather than assuming the value 1 appropriate for the distributions. For the Poisson distribution, the variance is modelled as:

var(Y) = φµ |

(9.10) |

where φ is the estimated from the deviance or Pearson X2. (This is analogous to the estimation of the residual variance in linear regression models from the residual sums of squares.) This parameter is then used to scale the estimated standard errors of the regression coefficients. This approach of assuming a variance function that does not correspond to any probability distribution is an example of quasi-likelihood. See McCullagh and Nelder (1989) for more details on generalised linear models.

9.3 Analysis Using SAS

Within SAS, the genmod procedure uses the framework described in the previous section to fit generalised linear models. The distributions covered include those shown in Display 9.1, plus the inverse Gaussian, negative binomial, and multinomial.

To first illustrate the use of proc genmod, we begin by replicating the analysis of U.S. crime rates presented in Chapter 4 using the subset of explanatory variables selected by stepwise regression. We assume the data have been read into a SAS data set uscrime as described there.

proc genmod data=uscrime;

model R=ex1 x ed age u2 / dist=normal link=identity; run;

©2002 CRC Press LLC

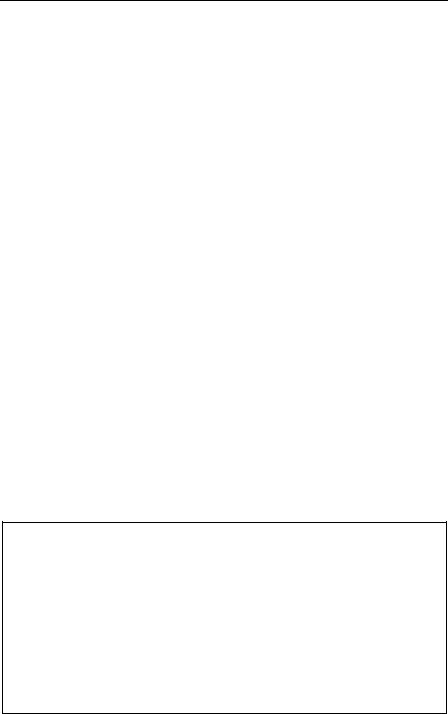

The model statement specifies the regression equation in much the same way as for proc glm described in Chapter 6. For a binomial response, the events/trials syntax described in Chapter 8 for proc logistic can also be used. The distribution and link function are specified as options in the model statement. Normal and identity can be abbreviated to N and id, respectively. The output is shown in Display 9.2. The parameter estimates are equal to those obtained in Chapter 4 using proc reg (see Display 4.5), although the standard errors are not identical. The deviance value of 495.3383 is equal to the error mean square in Display 4.5.

|

|

|

|

The GENMOD Procedure |

|

|

|

|

|

|||||

|

|

|

|

|

Model Information |

|

|

|

|

|

||||

|

|

Data Set |

|

|

|

WORK.USCRIME |

|

|

||||||

|

|

Distribution |

|

|

|

|

|

Normal |

|

|

||||

|

|

Link Function |

|

|

|

|

Identity |

|

|

|||||

|

|

Dependent Variable |

|

|

|

|

|

R |

|

|

||||

|

|

Observations Used |

|

|

|

|

|

47 |

|

|

||||

|

|

Criteria For Assessing Goodness-Of-Fit |

|

|

||||||||||

|

Criterion |

|

|

DF |

|

|

Value |

Value/DF |

|

|||||

|

Deviance |

|

|

41 |

|

20308.8707 |

495.3383 |

|

||||||

|

Scaled Deviance |

|

41 |

|

47.0000 |

|

1.1463 |

|

||||||

|

Pearson Chi-Square |

41 |

|

20308.8707 |

495.3383 |

|

||||||||

|

Scaled Pearson X2 |

41 |

|

47.0000 |

|

1.1463 |

|

|||||||

|

Log Likelihood |

|

|

|

-209.3037 |

|

|

|

|

|||||

Algorithm converged. |

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

Analysis Of Parameter Estimates |

|

|

|

|

|||||||

|

|

|

|

Standard |

Wald 95% Confidence |

Chi- |

|

|||||||

Parameter |

DF |

Estimate |

|

Error |

|

|

Limits |

|

|

Square |

Pr > ChiSq |

|||

Intercept |

1 |

-528.856 |

93 |

.0407 |

-711.212 |

-346.499 |

32 |

.31 |

<.0001 |

|||||

Ex1 |

1 |

1 |

.2973 |

0 |

.1492 |

|

1 |

.0050 |

1 |

.5897 |

75 |

.65 |

<.0001 |

|

X |

1 |

0 |

.6463 |

0 |

.1443 |

|

0 |

.3635 |

0 |

.9292 |

20 |

.06 |

<.0001 |

|

Ed |

1 |

2 |

.0363 |

0 |

.4628 |

|

1 |

.1294 |

2 |

.9433 |

19 |

.36 |

<.0001 |

|

Age |

1 |

1 |

.0184 |

0 |

.3447 |

|

0 |

.3428 |

1 |

.6940 |

8 |

.73 |

0.0031 |

|

U2 |

1 |

0 |

.9901 |

0 |

.4223 |

|

0 |

.1625 |

1 |

.8178 |

5 |

.50 |

0.0190 |

|

Scale |

1 |

20 |

.7871 |

2 |

.1440 |

|

16 |

.9824 |

25 |

.4442 |

|

|

|

|

NOTE: The scale parameter was estimated by maximum likelihood.

Display 9.2

©2002 CRC Press LLC

Now we can move on to a more interesting application of generalized linear models involving the data on Australian children’s school attendance, used previously in Chapter 6 (see Display 6.1). Here, because the response variable — number of days absent — is a count, we will use a Poisson distribution and a log link.

Assuming that the data on Australian school attendance have been read into a SAS data set, ozkids, as described in Chapter 6, we fit a main effects model as follows.

proc genmod data=ozkids; class origin sex grade type;

model days=sex origin type grade / dist=p link=log type1 type3;

run;

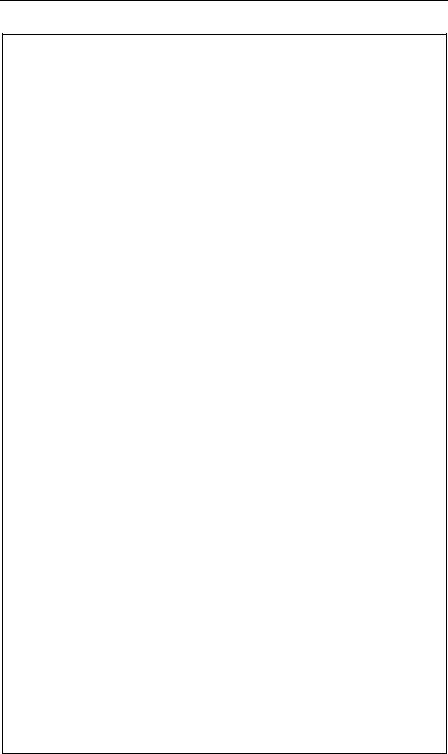

The predictors are all categorical variables and thus must be declared as such with a class statement. The Poisson probability distribution with a log link are requested with Type 1 and Type 3 analyses. These are analogous to Type I and Type III sums of squares discussed in Chapter 6. The results are shown in Display 9.3. Looking first at the LR statistics for each of the main effects, we see that both Type 1 and Type 3 analyses lead to very similar conclusions, namely that each main effect is significant. For the moment, we will ignore the Analysis of Parameter Estimates part of the output and examine instead the criteria for assessing goodness-of- fit. In the absence of overdispersion, the dispersion parameters based on the Pearson X2 of the deviance should be close to 1. The values of 13.6673 and 12.2147 given in Display 9.3 suggest, therefore, that there is overdispersion; and as a consequence, the P-values in this display may be too low.

The GENMOD Procedure

Model Information

Data Set |

WORK.OZKIDS |

Distribution |

Poisson |

Link Function |

Log |

Dependent Variable |

days |

Observations Used |

154 |

©2002 CRC Press LLC

Class Level Information |

|

||

Class |

Levels |

Values |

|

origin |

2 |

A N |

|

sex |

2 |

F M |

|

grade |

4 |

F0 F1 F2 F3 |

|

type |

2 |

AL SL |

|

Criteria For Assessing Goodness-Of-Fit |

|||

Criterion |

DF |

Value |

Value/DF |

Deviance |

147 |

1795.5665 |

12.2147 |

Scaled Deviance |

147 |

1795.5665 |

12.2147 |

Pearson Chi-Square 147 |

2009.0882 |

13.6673 |

|

Scaled Pearson X2 |

147 |

2009.0882 |

13.6673 |

Log Likelihood |

|

4581.1746 |

|

Algorithm converged.

Analysis Of Parameter Estimates

|

|

|

|

|

Standard |

Wald 95% Confidence |

Chi- |

|

||||

Parameter |

|

DF |

Estimate |

Error |

|

Limits |

|

|

Square |

Pr > ChiSq |

||

Intercept |

|

1 |

2 |

.7742 |

0.0628 |

2 |

.6510 |

2 |

.8973 |

1949 |

.78 |

<.0001 |

sex |

F |

1 |

-0.1405 |

0.0416 |

-0.2220 |

-0.0589 |

11 |

.39 |

0.0007 |

|||

sex |

M |

0 |

0 |

.0000 |

0.0000 |

0 |

.0000 |

0 |

.0000 |

|

. |

. |

origin |

A |

1 |

0 |

.4951 |

0.0412 |

0 |

.4143 |

0 |

.5758 |

144 |

.40 |

<.0001 |

origin |

N |

0 |

0 |

.0000 |

0.0000 |

0 |

.0000 |

0 |

.0000 |

|

. |

. |

type |

AL |

1 |

-0.1300 |

0.0442 |

-0.2166 |

-0.0435 |

8 |

.67 |

0.0032 |

|||

type |

SL |

0 |

0 |

.0000 |

0.0000 |

0 |

.0000 |

0 |

.0000 |

|

. |

. |

grade |

F0 |

1 |

-0.2060 |

0.0629 |

-0.3293 |

-0.0826 |

10 |

.71 |

0.0011 |

|||

grade |

F1 |

1 |

-0.4718 |

0.0614 |

-0.5920 |

-0.3515 |

59 |

.12 |

<.0001 |

|||

grade |

F2 |

1 |

0.1108 |

0.0536 |

0.0057 |

0.2158 |

4 |

.27 |

0.0387 |

|||

grade |

F3 |

0 |

0.0000 |

0.0000 |

0.0000 |

0.0000 |

|

. |

. |

|||

Scale |

|

0 |

1.0000 |

0.0000 |

1.0000 |

1.0000 |

|

|

|

|||

NOTE: The scale parameter was held fixed.

LR Statistics For Type 1 Analysis

|

|

|

Chi- |

|

|

Source |

Deviance |

DF |

Square |

Pr > ChiSq |

|

Intercept |

2105.9714 |

|

|

|

|

sex |

2086.9571 |

1 |

19 |

.01 |

<.0001 |

origin |

1920.3673 |

1 |

166 |

.59 |

<.0001 |

type |

1917.0156 |

1 |

3 |

.35 |

0.0671 |

grade |

1795.5665 |

3 |

121 |

.45 |

<.0001 |

©2002 CRC Press LLC

The GENMOD Procedure

LR Statistics For Type 3 Analysis

|

|

Chi- |

|

|

Source |

DF |

Square |

Pr > ChiSq |

|

sex |

1 |

11 |

.38 |

0.0007 |

origin |

1 |

148 |

.20 |

<.0001 |

type |

1 |

8 |

.65 |

0.0033 |

grade |

3 |

121 |

.45 |

<.0001 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Display 9.3

To rerun the analysis allowing for overdispersion, we need an estimate of the dispersion parameter φ . One strategy is to fit a model that contains a sufficient number of parameters so that all systematic variation is removed, estimate φ from this model as the deviance of Pearson X2 divided by its degrees of freedom, and then use this estimate in fitting the required model.

Thus, here we first fit a model with all first-order interactions included, simply to get an estimate of φ . The necessary SAS code is

proc genmod data=ozkids; class origin sex grade type;

model days=sex|origin|type|grade@2 / dist=p link=log scale=d;

run;

The scale=d option in the model statement specifies that the scale parameter is to be estimated from the deviance. The model statement also illustrates a modified use of the bar operator. By appending @2, we limit its expansion to terms involving two effects. This leads to an estimate of φ of 3.1892.

We now fit a main effects model allowing for overdispersion by specifying scale =3.1892 as an option in the model statement.

proc genmod data=ozkids; class origin sex grade type;

model days=sex origin type grade / dist=p link=log type1 type3 scale=3.1892;

output out=genout pred=pr_days stdreschi=resid; run;

©2002 CRC Press LLC