13.7 Performance |


device typically resorts to dropping incoming messages. Consider a network card whose input buffer is full. The network card must simply drop further messages until there is enough buffer space to store incoming messages.
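The drop-when-full behavior can be modeled as a fixed-capacity queue. An arriving message is stored only if a slot is free; otherwise it is discarded. This is a toy Python model: the `DeviceInputBuffer` class and its capacity are hypothetical, and the sketch does not describe how any real network card manages its buffers.

```python
from collections import deque


class DeviceInputBuffer:
    """Toy model of a device's fixed-size input buffer (hypothetical)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.queue: deque = deque()
        self.dropped = 0

    def receive(self, msg) -> bool:
        """Store an arriving message, or drop it if the buffer is full."""
        if len(self.queue) >= self.capacity:
            self.dropped += 1  # no space: the message is simply discarded
            return False
        self.queue.append(msg)
        return True

    def consume(self):
        """The host removes the oldest message, freeing one slot."""
        return self.queue.popleft() if self.queue else None


buf = DeviceInputBuffer(capacity=4)
for i in range(6):      # six arrivals, but only four slots
    buf.receive(i)
first = buf.consume()   # frees one slot
buf.receive(6)          # accepted again now that space exists
print(len(buf.queue), buf.dropped, first)  # 4 2 0
```

Messages 4 and 5 are lost because they arrive while all four slots are occupied; message 6 is accepted only after the host frees a slot.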

The benefit of using STREAMS is that it provides a framework for a modular and incremental approach to writing device drivers and network protocols. Modules may be used by different streams and hence by different devices. For example, a networking module may be used by both an Ethernet network card and an 802.11 wireless network card. Furthermore, rather than treating character-device I/O as an unstructured byte stream, STREAMS supports message boundaries and control information when communicating between modules. Most UNIX variants support STREAMS, and it is the preferred method for writing protocols and device drivers. For example, System V UNIX and Solaris implement the socket mechanism using STREAMS.
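The modular structure can be sketched as a chain of stages, each of which accepts a message, processes it, and passes it to the next stage. This is a simplified Python model, not the actual STREAMS kernel interface. The `Message` and `Module` classes, the `add_header` protocol stage, and the driver names are all hypothetical. The sketch shows the same protocol-module code reused in two streams that end in different drivers, with each message (data or control) kept as a discrete unit rather than as part of an unstructured byte stream.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Message:
    """One discrete message; its boundaries survive the trip through the stack."""
    kind: str        # "data" or "control" (hypothetical message types)
    payload: bytes


class Module:
    """One processing stage in a stream: transform a message, pass it downstream."""

    def __init__(self, name: str, transform: Callable[[Message], Message]) -> None:
        self.name = name
        self.transform = transform
        self.next: Optional["Module"] = None

    def put(self, msg: Message, trace: List[str]) -> None:
        trace.append(self.name)  # record which stage handled the message
        out = self.transform(msg)
        if self.next is not None:
            self.next.put(out, trace)


def build_stream(modules: List[Module]) -> Module:
    """Link stages head-to-tail and return the top of the stack."""
    for upper, lower in zip(modules, modules[1:]):
        upper.next = lower
    return modules[0]


def add_header(msg: Message) -> Message:
    """Hypothetical protocol stage: prefix data messages, pass control unchanged."""
    if msg.kind == "data":
        return Message(msg.kind, b"H" + msg.payload)
    return msg


delivered = []  # what each hypothetical driver stage handed to its device


def driver(name: str) -> Module:
    def to_device(msg: Message) -> Message:
        delivered.append((name, msg.kind, msg.payload))
        return msg
    return Module(name, to_device)


trace: List[str] = []
ethernet = build_stream([Module("proto", add_header), driver("eth-driver")])
wireless = build_stream([Module("proto", add_header), driver("wifi-driver")])
ethernet.put(Message("data", b"hi"), trace)
wireless.put(Message("control", b"set-rate"), trace)
print(delivered)
```

The data message reaches the Ethernet driver with its header attached. The control message passes through the same protocol code unchanged on its way to the wireless driver.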

13.7 Performance

I/O is a major factor in system performance. It places heavy demands on the CPU to execute device-driver code and to schedule processes fairly and efficiently as they block and unblock. The resulting context switches stress the CPU and its hardware caches. I/O also exposes any inefficiencies in the interrupt-handling mechanisms in the kernel. In addition, I/O loads down the memory bus during data copies between controllers and physical memory and again during copies between kernel buffers and application data space. Coping gracefully with all these demands is one of the major concerns of a computer architect.

Although modern computers can handle many thousands of interrupts per second, interrupt handling is a relatively expensive task. Each interrupt causes the system to perform a state change, to execute the interrupt handler, and then to restore state. Programmed I/O can be more efficient than interrupt-driven I/O if the number of cycles spent in busy waiting is not excessive. An I/O completion typically unblocks a process, leading to the full overhead of a context switch.
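A simple cycle-count model makes the trade-off between polling and interrupts concrete. An interrupt-driven completion pays a fixed overhead: state save, handler, state restore, and a context switch to the unblocked process. Polling instead pays for every cycle spent busy waiting. The cycle counts below are hypothetical placeholders chosen only to illustrate the comparison. They are not measurements of any real system.

```python
# Hypothetical per-completion costs, in CPU cycles; real values vary widely by hardware.
IRQ_OVERHEAD = {
    "state_save": 300,
    "handler": 500,
    "state_restore": 300,
    "context_switch": 2000,  # the I/O completion unblocks a process
}
POLL_CHECK = 20  # hypothetical cost of one final status-register read


def interrupt_cost() -> int:
    """Cycles charged to one interrupt-driven completion in this model."""
    return sum(IRQ_OVERHEAD.values())


def polling_cost(device_latency: int) -> int:
    """Cycles burned spinning until the device finishes, plus the final check."""
    return device_latency + POLL_CHECK


def cheaper_method(device_latency: int) -> str:
    """Pick whichever approach costs fewer CPU cycles for this device latency."""
    return "polling" if polling_cost(device_latency) < interrupt_cost() else "interrupt"


for latency in (500, 3000, 50_000):
    print(latency, cheaper_method(latency))
```

With these numbers, the break-even device latency is 3,080 cycles. Shorter waits favor polling. Longer waits favor interrupts, because the CPU can do other work while the device is busy instead of spinning.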

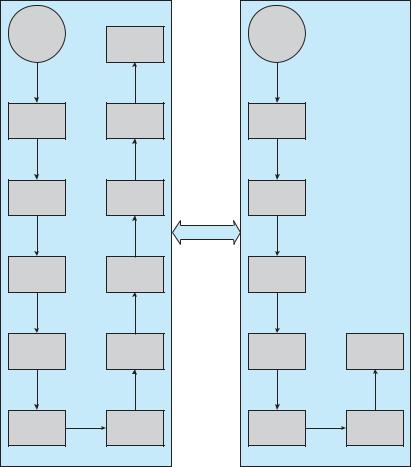

Network traffic can also cause a high context-switch rate. Consider, for instance, a remote login from one machine to another. Each character typed on the local machine must be transported to the remote machine. On the local machine, the character is typed; a keyboard interrupt is generated; and the character is passed through the interrupt handler to the device driver, to the kernel, and then to the user process. The user process issues a network I/O system call to send the character to the remote machine. The character then flows into the local kernel, through the network layers that construct a network packet, and into the network device driver. The network device driver transfers the packet to the network controller, which sends the character and generates an interrupt. The interrupt is passed back up through the kernel to cause the network I/O system call to complete.

Now, the remote system’s network hardware receives the packet, and an interrupt is generated. The character is unpacked from the network protocols and is given to the appropriate network daemon. The network daemon identifies which remote login session is involved and passes the packet to the appropriate subdaemon for that session. Throughout this flow, there are context switches and state switches (Figure 13.15). Usually, the receiver echoes the character back to the sender; that approach doubles the work.

Chapter 13 I/O Systems |

Figure 13.15 Intercomputer communications. (Diagram labels: sending system: character typed, interrupt generated, interrupt handled, state save, context switch, user process, kernel, device driver, network adapter, system call completes; receiving system: network packet received, network adapter, interrupt generated, state save, device driver, kernel, network daemon, network subdaemon, context switch.)

To eliminate the context switches involved in moving each character between daemons and the kernel, the Solaris developers reimplemented the telnet daemon using in-kernel threads. Sun estimated that this improvement increased the maximum number of network logins from a few hundred to a few thousand on a large server.

Other systems use separate front-end processors for terminal I/O to reduce the interrupt burden on the main CPU. For instance, a terminal concentrator can multiplex the traffic from hundreds of remote terminals into one port on a large computer. An I/O channel is a dedicated, special-purpose CPU found in mainframes and in other high-end systems. The job of a channel is to offload I/O work from the main CPU. The idea is that the channels keep the data flowing smoothly, while the main CPU remains free to process the data. A channel plays a role similar to that of the device controllers and DMA controllers found in smaller computers, but it can process more general and sophisticated programs, so channels can be tuned for particular workloads.


We can employ several principles to improve the efficiency of I/O:

•Reduce the number of context switches.

•Reduce the number of times that data must be copied in memory while passing between device and application.

•Reduce the frequency of interrupts by using large transfers, smart controllers, and polling (if busy waiting can be minimized).

•Increase concurrency by using DMA-knowledgeable controllers or channels to offload simple data copying from the CPU.

•Move processing primitives into hardware, to allow their operation in device controllers to be concurrent with CPU and bus operation.

•Balance CPU, memory subsystem, bus, and I/O performance, because an overload in any one area will cause idleness in others.
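Counting system calls illustrates two of these principles: fewer context switches and larger transfers. This sketch assumes a POSIX-like system. A pipe stands in for the network connection of the remote-login example, and the hypothetical `send` helper writes the same message either one byte per `write()` call or in a single larger transfer. Each `os.write` call is a separate trap into the kernel. The sketch counts those calls but does not measure context switches or elapsed time.

```python
import os


def send(fd: int, data: bytes, chunk: int) -> int:
    """Write data in pieces of at most `chunk` bytes; return the number of write() calls."""
    calls = 0
    view = memoryview(data)
    while view:
        n = os.write(fd, view[:chunk])  # one system call per iteration
        view = view[n:]                 # handle short writes by resending the rest
        calls += 1
    return calls


r, w = os.pipe()
msg = b"hello, remote host\n"
per_char = send(w, msg, chunk=1)        # like sending each typed character separately
batched = send(w, msg, chunk=len(msg))  # one larger transfer
received = os.read(r, 2 * len(msg))     # both copies are now waiting in the pipe
os.close(r)
os.close(w)
print(per_char, batched)  # 19 1
```

Both approaches deliver the same bytes. The batched version crosses into the kernel once instead of nineteen times.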

I/O devices vary greatly in complexity. For instance, a mouse is simple. The mouse movements and button clicks are converted into numeric values that are passed from hardware, through the mouse device driver, to the application. By contrast, the functionality provided by the Windows disk device driver is complex. It not only manages individual disks but also implements RAID arrays (Section 10.7). To do so, it converts an application’s read or write request into a coordinated set of disk I/O operations. Moreover, it implements sophisticated error-handling and data-recovery algorithms and takes many steps to optimize disk performance.
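One way to turn a single request into coordinated disk operations is sketched below. The function maps a range of logical blocks onto a RAID 0 (striped) array and merges physically contiguous blocks into one operation per run. RAID 0 is used here only because its mapping is simple. This does not describe how the Windows driver works, and the `split_request` function, stripe size, and disk count are hypothetical.

```python
from typing import Dict, List, Tuple


def split_request(start: int, count: int, disks: int,
                  stripe_blocks: int) -> Dict[int, List[Tuple[int, int]]]:
    """Map logical blocks [start, start + count) onto a RAID 0 array.

    Returns {disk: [(physical_block, length), ...]}, merging contiguous runs
    so that each run becomes one disk I/O operation.
    """
    plan: Dict[int, List[Tuple[int, int]]] = {}
    for lba in range(start, start + count):
        stripe, offset = divmod(lba, stripe_blocks)        # which stripe unit, and where in it
        disk = stripe % disks                              # stripe units rotate across disks
        pblock = (stripe // disks) * stripe_blocks + offset
        runs = plan.setdefault(disk, [])
        if runs and runs[-1][0] + runs[-1][1] == pblock:
            runs[-1] = (runs[-1][0], runs[-1][1] + 1)      # extend the current run
        else:
            runs.append((pblock, 1))                       # start a new run
    return plan


plan = split_request(start=2, count=8, disks=2, stripe_blocks=4)
print(plan)  # {0: [(2, 4)], 1: [(0, 4)]}
```

With two disks and four-block stripe units, an eight-block request starting at logical block 2 becomes two four-block operations, one per disk, which can proceed concurrently.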

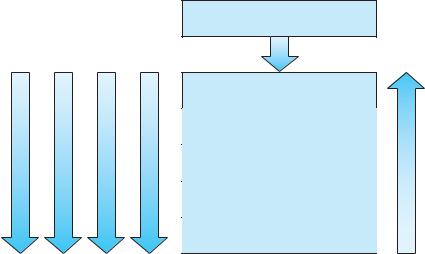

Where should the I/O functionality be implemented: in the device hardware, in the device driver, or in application software? Sometimes we observe the progression depicted in Figure 13.16.

Figure 13.16 Device functionality progression. (Levels shown: new algorithm, application code, kernel code, device-driver code, device-controller code (hardware), device code (hardware); axis labels: increased time (generations), increased efficiency, increased development cost, increased abstraction, increased flexibility.)


•Initially, we implement experimental I/O algorithms at the application level, because application code is flexible and application bugs are unlikely to cause system crashes. Furthermore, by developing code at the application level, we avoid the need to reboot or reload device drivers after every change to the code. An application-level implementation can be inefficient, however, because of the overhead of context switches and because the application cannot take advantage of internal kernel data structures and kernel functionality (such as efficient in-kernel messaging, threading, and locking).

•When an application-level algorithm has demonstrated its worth, we may reimplement it in the kernel. This can improve performance, but the development effort is more challenging, because an operating-system kernel is a large, complex software system. Moreover, an in-kernel implementation must be thoroughly debugged to avoid data corruption and system crashes.

•The highest performance may be obtained through a specialized implementation in hardware, either in the device or in the controller. The disadvantages of a hardware implementation include the difficulty and expense of making further improvements or of fixing bugs, the increased development time (months rather than days), and the decreased flexibility. For instance, a hardware RAID controller may not provide any means for the kernel to influence the order or location of individual block reads and writes, even if the kernel has special information about the workload that would enable it to improve the I/O performance.

13.8 Summary

The basic hardware elements involved in I/O are buses, device controllers, and the devices themselves. The work of moving data between devices and main memory is performed by the CPU as programmed I/O or is offloaded to a DMA controller. The kernel module that controls a device is a device driver. The system-call interface provided to applications is designed to handle several basic categories of hardware, including block devices, character devices, memory-mapped files, network sockets, and programmed interval timers. The system calls usually block the processes that issue them, but nonblocking and asynchronous calls are used by the kernel itself and by applications that must not sleep while waiting for an I/O operation to complete.
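A pipe shows the difference between a blocking and a nonblocking call. This sketch assumes a POSIX-like system, where `os.set_blocking(fd, False)` sets `O_NONBLOCK` on the descriptor. A read on the empty pipe then fails immediately with `BlockingIOError` (the `EAGAIN` case) instead of putting the process to sleep.

```python
import os

r, w = os.pipe()
os.set_blocking(r, False)  # reads on this descriptor no longer sleep

try:
    os.read(r, 100)
    outcome = "data"
except BlockingIOError:
    outcome = "would block"  # a blocking read would have slept here

os.write(w, b"ready")
data = os.read(r, 100)  # data is now available, so the read returns at once
os.close(r)
os.close(w)
print(outcome, data)  # would block b'ready'
```

An application that must not sleep can use this pattern to check for input and do other work while none is available.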

The kernel’s I/O subsystem provides numerous services. Among these are I/O scheduling, buffering, caching, spooling, device reservation, and error handling. Another service, name translation, makes the connections between hardware devices and the symbolic file names used by applications. It involves several levels of mapping that translate from character-string names, to specific device drivers and device addresses, and then to physical addresses of I/O ports or bus controllers. This mapping may occur within the file-system name space, as it does in UNIX, or in a separate device name space, as it does in MS-DOS.

STREAMS is an implementation and methodology that provides a framework for a modular and incremental approach to writing device drivers and