258 Chapter 5 Process Synchronization

Bibliographical Notes

The mutual-exclusion problem was first discussed in a classic paper by [Dijkstra (1965)]. Dekker’s algorithm (Exercise 5.8), the first correct software solution to the two-process mutual-exclusion problem, was developed by the Dutch mathematician T. Dekker. This algorithm was also discussed by [Dijkstra (1965)]. A simpler solution to the two-process mutual-exclusion problem has since been presented by [Peterson (1981)] (Figure 5.2). The semaphore concept was suggested by [Dijkstra (1965)].

The classic process-coordination problems that we have described are paradigms for a large class of concurrency-control problems. The bounded-buffer problem and the dining-philosophers problem were suggested in [Dijkstra (1965)] and [Dijkstra (1971)]. The readers–writers problem was suggested by [Courtois et al. (1971)].

The critical-region concept was suggested by [Hoare (1972)] and by [Brinch-Hansen (1972)]. The monitor concept was developed by [Brinch-Hansen (1973)]. [Hoare (1974)] gave a complete description of the monitor.

Some details of the locking mechanisms used in Solaris were presented in [Mauro and McDougall (2007)]. As noted earlier, the locking mechanisms used by the kernel are implemented for user-level threads as well, so the same types of locks are available inside and outside the kernel. Details of Windows 2000 synchronization can be found in [Solomon and Russinovich (2000)]. [Love (2010)] describes synchronization in the Linux kernel.

Information on Pthreads programming can be found in [Lewis and Berg (1998)] and [Butenhof (1997)]. [Hart (2005)] describes thread synchronization using Windows. [Goetz et al. (2006)] present a detailed discussion of concurrent programming in Java as well as the java.util.concurrent package. [Breshears (2009)] and [Pacheco (2011)] provide detailed coverage of synchronization issues in relation to parallel programming. [Lu et al. (2008)] provide a study of concurrency bugs in real-world applications.

[Adl-Tabatabai et al. (2007)] discuss transactional memory. Details on using OpenMP can be found at http://openmp.org. Functional programming using Erlang and Scala is covered in [Armstrong (2007)] and [Odersky et al. ()], respectively.

Bibliography

[Adl-Tabatabai et al. (2007)] A.-R. Adl-Tabatabai, C. Kozyrakis, and B. Saha, “Unlocking Concurrency”, Queue, Volume 4, Number 10 (2007), pages 24–33.

[Armstrong (2007)] J. Armstrong, Programming Erlang Software for a Concurrent World, The Pragmatic Bookshelf (2007).

[Breshears (2009)] C. Breshears, The Art of Concurrency, O’Reilly & Associates (2009).

[Brinch-Hansen (1972)] P. Brinch-Hansen, “Structured Multiprogramming”, Communications of the ACM, Volume 15, Number 7 (1972), pages 574–578.

[Brinch-Hansen (1973)] P. Brinch-Hansen, Operating System Principles, Prentice Hall (1973).

[Butenhof (1997)] D. Butenhof, Programming with POSIX Threads, Addison-Wesley (1997).

[Courtois et al. (1971)] P. J. Courtois, F. Heymans, and D. L. Parnas, “Concurrent Control with ‘Readers’ and ‘Writers’”, Communications of the ACM, Volume 14, Number 10 (1971), pages 667–668.

[Dijkstra (1965)] E. W. Dijkstra, “Cooperating Sequential Processes”, Technical report, Technological University, Eindhoven, the Netherlands (1965).

[Dijkstra (1971)] E. W. Dijkstra, “Hierarchical Ordering of Sequential Processes”, Acta Informatica, Volume 1, Number 2 (1971), pages 115–138.

[Goetz et al. (2006)] B. Goetz, T. Peierls, J. Bloch, J. Bowbeer, D. Holmes, and D. Lea, Java Concurrency in Practice, Addison-Wesley (2006).

[Hart (2005)] J. M. Hart, Windows System Programming, Third Edition, Addison-Wesley (2005).

[Hoare (1972)] C. A. R. Hoare, “Towards a Theory of Parallel Programming”, in [Hoare and Perrott 1972] (1972), pages 61–71.

[Hoare (1974)] C. A. R. Hoare, “Monitors: An Operating System Structuring Concept”, Communications of the ACM, Volume 17, Number 10 (1974), pages 549–557.

[Kessels (1977)] J. L. W. Kessels, “An Alternative to Event Queues for Synchronization in Monitors”, Communications of the ACM, Volume 20, Number 7 (1977), pages 500–503.

[Lewis and Berg (1998)] B. Lewis and D. Berg, Multithreaded Programming with Pthreads, Sun Microsystems Press (1998).

[Love (2010)] R. Love, Linux Kernel Development, Third Edition, Developer’s Library (2010).

[Lu et al. (2008)] S. Lu, S. Park, E. Seo, and Y. Zhou, “Learning from mistakes: a comprehensive study on real world concurrency bug characteristics”, SIGPLAN Notices, Volume 43, Number 3 (2008), pages 329–339.

[Mauro and McDougall (2007)] J. Mauro and R. McDougall, Solaris Internals: Core Kernel Architecture, Prentice Hall (2007).

[Odersky et al. ()] M. Odersky, V. Cremet, I. Dragos, G. Dubochet, B. Emir, S. Mcdirmid, S. Micheloud, N. Mihaylov, M. Schinz, E. Stenman, L. Spoon, and M. Zenger.

[Pacheco (2011)] P. S. Pacheco, An Introduction to Parallel Programming, Morgan Kaufmann (2011).

[Peterson (1981)] G. L. Peterson, “Myths About the Mutual Exclusion Problem”, Information Processing Letters, Volume 12, Number 3 (1981).

[Solomon and Russinovich (2000)] D. A. Solomon and M. E. Russinovich, Inside Microsoft Windows 2000, Third Edition, Microsoft Press (2000).

CHAPTER 6

CPU Scheduling

CPU scheduling is the basis of multiprogrammed operating systems. By switching the CPU among processes, the operating system can make the computer more productive. In this chapter, we introduce basic CPU-scheduling concepts and present several CPU-scheduling algorithms. We also consider the problem of selecting an algorithm for a particular system.

In Chapter 4, we introduced threads to the process model. On operating systems that support them, it is kernel-level threads, not processes, that are in fact being scheduled by the operating system. However, the terms "process scheduling" and "thread scheduling" are often used interchangeably. In this chapter, we use process scheduling when discussing general scheduling concepts and thread scheduling to refer to thread-specific ideas.

CHAPTER OBJECTIVES

• To introduce CPU scheduling, which is the basis for multiprogrammed operating systems.

• To describe various CPU-scheduling algorithms.

• To discuss evaluation criteria for selecting a CPU-scheduling algorithm for a particular system.

• To examine the scheduling algorithms of several operating systems.

6.1 Basic Concepts

In a single-processor system, only one process can run at a time. Others must wait until the CPU is free and can be rescheduled. The objective of multiprogramming is to have some process running at all times, to maximize CPU utilization. The idea is relatively simple. A process is executed until it must wait, typically for the completion of some I/O request. In a simple computer system, the CPU then just sits idle. All this waiting time is wasted; no useful work is accomplished. With multiprogramming, we try to use this time productively. Several processes are kept in memory at one time. When one process has to wait, the operating system takes the CPU away from that process and gives the CPU to another process. This pattern continues. Every time one process has to wait, another process can take over use of the CPU.

Figure 6.1 Alternating sequence of CPU and I/O bursts. (Sequence shown: CPU burst [load store, add store, read from file]; I/O burst [wait for I/O]; CPU burst [store increment index, write to file]; I/O burst [wait for I/O]; CPU burst [load store, add store, read from file]; I/O burst [wait for I/O]; and so on.)

Scheduling of this kind is a fundamental operating-system function. Almost all computer resources are scheduled before use. The CPU is, of course, one of the primary computer resources. Thus, its scheduling is central to operating-system design.
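The benefit of multiprogramming described above can be illustrated with a small simulation. The following is only a sketch under stated assumptions: the function name `cpu_utilization`, the burst lengths, and the rule that I/O for different processes overlaps freely are all ours, chosen for illustration. Ready processes are served in the order in which they became ready.

```python
def cpu_utilization(processes):
    """Fraction of elapsed time the single CPU is busy.

    Each process is a list of (cpu_ms, io_ms) burst pairs.
    Assumption: I/O of different processes can proceed in parallel.
    """
    ready_at = [0] * len(processes)    # time each process next becomes ready
    next_burst = [0] * len(processes)  # index of each process's next burst pair
    clock = 0                          # time at which the CPU next becomes free
    busy = 0                           # total time spent in CPU bursts
    finish = 0                         # time at which the last I/O burst ends

    while True:
        runnable = [p for p in range(len(processes))
                    if next_burst[p] < len(processes[p])]
        if not runnable:
            break
        # Serve the process that became ready earliest (ties broken by index).
        pid = min(runnable, key=lambda p: (ready_at[p], p))
        cpu_ms, io_ms = processes[pid][next_burst[pid]]
        start = max(clock, ready_at[pid])  # the CPU idles if nobody is ready
        clock = start + cpu_ms
        busy += cpu_ms
        ready_at[pid] = clock + io_ms      # the process now waits for its I/O
        finish = max(finish, ready_at[pid])
        next_burst[pid] += 1

    return busy / finish if finish else 0.0


bursts = [(2, 8)] * 3                      # hypothetical: 2 ms CPU, 8 ms I/O, three times
print(cpu_utilization([bursts]))           # one process in memory
print(cpu_utilization([bursts, bursts]))   # two processes in memory
```

With these made-up burst lengths, a single process keeps the CPU busy 20 percent of the time, whereas two such processes raise utilization to 37.5 percent, because one process can use the CPU while the other waits for I/O.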

6.1.1 CPU–I/O Burst Cycle

The success of CPU scheduling depends on an observed property of processes: process execution consists of a cycle of CPU execution and I/O wait. Processes alternate between these two states. Process execution begins with a CPU burst. That is followed by an I/O burst, which is followed by another CPU burst, then another I/O burst, and so on. Eventually, the final CPU burst ends with a system request to terminate execution (Figure 6.1).

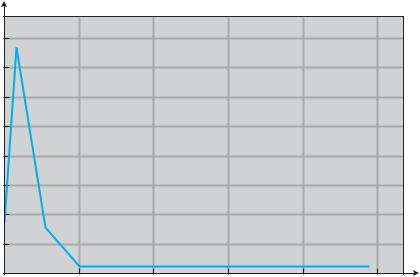

The durations of CPU bursts have been measured extensively. Although they vary greatly from process to process and from computer to computer, they tend to have a frequency curve similar to that shown in Figure 6.2. The curve is generally characterized as exponential or hyperexponential, with a large number of short CPU bursts and a small number of long CPU bursts.

Figure 6.2 Histogram of CPU-burst durations. (Horizontal axis: burst duration in milliseconds, 0 to 40; vertical axis: frequency, 0 to 160.)

An I/O-bound program typically has many short CPU bursts. A CPU-bound program might have a few long CPU bursts. This distribution can be important in the selection of an appropriate CPU-scheduling algorithm.
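To make the shape of this distribution concrete, the sketch below draws synthetic burst lengths from an exponential distribution and counts them in 8-millisecond bins. The mean burst length, the bin width, and the function name `burst_histogram` are assumptions chosen for illustration; they are not measurements from any real system.

```python
import random


def burst_histogram(n_bursts, mean_ms, bin_ms=8, n_bins=5, seed=0):
    """Count synthetic CPU-burst durations in fixed-width bins.

    Durations are drawn from an exponential distribution with the given
    mean (an assumed model). Bursts longer than bin_ms * n_bins are dropped.
    """
    rng = random.Random(seed)          # seeded so that runs are repeatable
    counts = [0] * n_bins
    for _ in range(n_bursts):
        duration = rng.expovariate(1.0 / mean_ms)
        bin_index = int(duration // bin_ms)
        if bin_index < n_bins:
            counts[bin_index] += 1
    return counts


counts = burst_histogram(n_bursts=10_000, mean_ms=8.0)
for i, count in enumerate(counts):
    print(f"{i * 8:2d}-{(i + 1) * 8:2d} ms: {count}")
```

The counts fall off quickly from the first bin to the last: short bursts dominate and long bursts are rare, which is the property that scheduling algorithms favoring short bursts try to exploit.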

6.1.2 CPU Scheduler

Whenever the CPU becomes idle, the operating system must select one of the processes in the ready queue to be executed. The selection process is carried out by the short-term scheduler, or CPU scheduler. The scheduler selects a process from the processes in memory that are ready to execute and allocates the CPU to that process.

Note that the ready queue is not necessarily a first-in, first-out (FIFO) queue. As we shall see when we consider the various scheduling algorithms, a ready queue can be implemented as a FIFO queue, a priority queue, a tree, or simply an unordered linked list. Conceptually, however, all the processes in the ready queue are lined up waiting for a chance to run on the CPU. The records in the queues are generally process control blocks (PCBs) of the processes.
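As a rough illustration of this point, the sketch below gives two interchangeable ready-queue implementations, one FIFO and one ordered by priority. The PCB is reduced to a dictionary with hypothetical `pid` and `priority` fields, and the convention that a lower number means a higher priority is an assumption made only for this example.

```python
import heapq
from collections import deque


class FifoReadyQueue:
    """Ready queue that hands out PCBs in arrival order."""

    def __init__(self):
        self._queue = deque()

    def add(self, pcb):
        self._queue.append(pcb)

    def select(self):
        return self._queue.popleft()


class PriorityReadyQueue:
    """Ready queue that hands out the PCB with the smallest priority number."""

    def __init__(self):
        self._heap = []
        self._arrivals = 0  # tie-breaker: equal priorities leave in arrival order

    def add(self, pcb):
        heapq.heappush(self._heap, (pcb["priority"], self._arrivals, pcb))
        self._arrivals += 1

    def select(self):
        return heapq.heappop(self._heap)[2]


pcbs = [{"pid": 1, "priority": 3},
        {"pid": 2, "priority": 1},
        {"pid": 3, "priority": 2}]

for ready_queue in (FifoReadyQueue(), PriorityReadyQueue()):
    for pcb in pcbs:
        ready_queue.add(pcb)
    order = [ready_queue.select()["pid"] for _ in pcbs]
    print(type(ready_queue).__name__, order)
```

Both classes expose the same `add` and `select` operations, so the scheduler's selection step does not need to know how the queue is organized internally.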

6.1.3 Preemptive Scheduling

CPU-scheduling decisions may take place under the following four circumstances:

1. When a process switches from the running state to the waiting state (for example, as the result of an I/O request or an invocation of wait() for the termination of a child process)

2. When a process switches from the running state to the ready state (for example, when an interrupt occurs)

3. When a process switches from the waiting state to the ready state (for example, at completion of I/O)

4. When a process terminates

For situations 1 and 4, there is no choice in terms of scheduling. A new process (if one exists in the ready queue) must be selected for execution. There is a choice, however, for situations 2 and 3.

When scheduling takes place only under circumstances 1 and 4, we say that the scheduling scheme is nonpreemptive or cooperative. Otherwise, it is preemptive. Under nonpreemptive scheduling, once the CPU has been allocated to a process, the process keeps the CPU until it releases the CPU either by terminating or by switching to the waiting state. This scheduling method was used by Microsoft Windows 3.x. Windows 95 introduced preemptive scheduling, and all subsequent versions of Windows operating systems have used preemptive scheduling. The Mac OS X operating system for the Macintosh also uses preemptive scheduling; previous versions of the Macintosh operating system relied on cooperative scheduling. Cooperative scheduling is the only method that can be used on certain hardware platforms, because it does not require the special hardware (for example, a timer) needed for preemptive scheduling.
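The distinction between the two schemes can be summarized in a few lines of code. This is a minimal sketch: the names `State`, `circumstance`, and `scheduler_runs` are ours, and the model covers only the four state transitions listed above.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    READY = "ready"
    WAITING = "waiting"
    TERMINATED = "terminated"


# The four circumstances under which a scheduling decision may take place.
CIRCUMSTANCES = {
    (State.RUNNING, State.WAITING): 1,     # e.g. an I/O request or wait()
    (State.RUNNING, State.READY): 2,       # e.g. an interrupt occurs
    (State.WAITING, State.READY): 3,       # e.g. completion of I/O
    (State.RUNNING, State.TERMINATED): 4,  # the process terminates
}


def circumstance(old, new):
    """Return the circumstance number for a transition, or None."""
    return CIRCUMSTANCES.get((old, new))


def scheduler_runs(old, new, preemptive):
    """Report whether a scheduling decision is made on this transition."""
    number = circumstance(old, new)
    if number is None:
        return False
    if number in (1, 4):
        return True    # no choice: the CPU has been given up
    return preemptive  # circumstances 2 and 3 apply only to preemptive schemes


print(scheduler_runs(State.WAITING, State.READY, preemptive=False))
print(scheduler_runs(State.WAITING, State.READY, preemptive=True))
```

Under the nonpreemptive setting the function reports a decision only for circumstances 1 and 4, which matches the definition given above.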

Unfortunately, preemptive scheduling can result in race conditions when data are shared among several processes. Consider the case of two processes that share data. While one process is updating the data, it is preempted so that the second process can run. The second process then tries to read the data, which are in an inconsistent state. This issue was explored in detail in Chapter 5.
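The scenario just described can be reproduced deterministically. In the sketch below, a Python generator stands in for the process that updates the data, and its `yield` marks the point at which we assume it is preempted; the account names and amounts are made up for illustration.

```python
def transfer(accounts, amount):
    """Move `amount` from account a to account b in two separate steps."""
    accounts["a"] -= amount
    yield  # assumed preemption point: the update is only half done
    accounts["b"] += amount


def total(accounts):
    """What a second process computes when it reads the shared data."""
    return accounts["a"] + accounts["b"]


def run_with_preemption():
    accounts = {"a": 100, "b": 0}  # invariant: the two balances sum to 100
    writer = transfer(accounts, 50)
    next(writer)                   # the writer runs, then is preempted
    seen_mid_update = total(accounts)
    next(writer, None)             # the writer resumes and finishes
    seen_after = total(accounts)
    return seen_mid_update, seen_after


print(run_with_preemption())
```

The reader that runs in the middle of the update sees a total of 50 rather than 100, even though no single step of either process is wrong. Once the writer finishes, the total is 100 again.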

Preemption also affects the design of the operating-system kernel. During the processing of a system call, the kernel may be busy with an activity on behalf of a process. Such activities may involve changing important kernel data (for instance, I/O queues). What happens if the process is preempted in the middle of these changes and the kernel (or the device driver) needs to read or modify the same structure? Chaos ensues. Certain operating systems, including most versions of UNIX, deal with this problem by waiting either for a system call to complete or for an I/O block to take place before doing a context switch. This scheme ensures that the kernel structure is simple, since the kernel will not preempt a process while the kernel data structures are in an inconsistent state. Unfortunately, this kernel-execution model is a poor one for supporting real-time computing, where tasks must complete execution within a given time frame. In Section 6.6, we explore scheduling demands of real-time systems.

Because interrupts can, by definition, occur at any time, and because they cannot always be ignored by the kernel, the sections of code affected by interrupts must be guarded from simultaneous use. The operating system needs to accept interrupts at almost all times. Otherwise, input might be lost or output overwritten. So that these sections of code are not accessed concurrently by several processes, they disable interrupts at entry and reenable interrupts at exit. It is important to note that sections of code that disable interrupts do not occur very often and typically contain few instructions.
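The pattern of disabling interrupts at entry and reenabling them at exit can be modeled in user-level code. The sketch below is a toy model only: real kernels use hardware instructions for this, and the class `InterruptController`, its method names, and the choice to queue interrupts that arrive while disabled are assumptions made for illustration.

```python
from contextlib import contextmanager


class InterruptController:
    """Toy model of a CPU's interrupt-enable flag."""

    def __init__(self):
        self.enabled = True
        self.pending = []  # interrupts that arrived while disabled
        self.handled = []  # interrupts delivered so far, in order

    def raise_interrupt(self, name):
        if self.enabled:
            self.handled.append(name)
        else:
            self.pending.append(name)  # deferred, not lost

    @contextmanager
    def interrupts_disabled(self):
        previous = self.enabled
        self.enabled = False           # disable interrupts at entry
        try:
            yield
        finally:
            self.enabled = previous    # restore the earlier setting at exit
            if self.enabled:
                while self.pending:
                    self.handled.append(self.pending.pop(0))


controller = InterruptController()
with controller.interrupts_disabled():
    controller.raise_interrupt("disk")  # arrives inside the guarded section
    print(controller.handled)           # nothing has been delivered yet
print(controller.handled)               # delivered once the section exits
```

Keeping the guarded section short matters in this model for the same reason as in a kernel: every interrupt raised inside it is delayed until the section ends.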