Kryuchkov, Fundamentals of Nuclear Materials Physical Protection, 2011


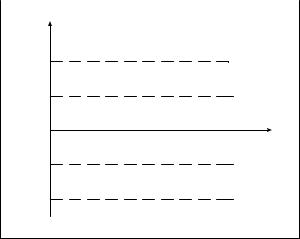

1%. Exceeding these bounds (the alarm limit) is regarded as an anomaly, indicating that its causes must be analyzed in detail. The bounds of this interval are normally used as the threshold for generating an alarm.

[Figure: inventory difference (ID) plotted against time t; warning limits at ±2S, alarm limits at ±3S]

Fig. 6.2. A chart of NM balance control in an MBA (S – one ID sigma)
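The decision rule of the control chart in Fig. 6.2 can be sketched in Python as below. This is a minimal illustration, assuming limits centred at zero (warning beyond ±2S, alarm beyond ±3S, as drawn in the figure) and a value of S that is already known; the function name `classify_id` and the sample values are hypothetical.

```python
def classify_id(id_value: float, s: float) -> str:
    """Classify one inventory difference (ID) against control-chart limits.

    Assumes limits centred at zero: warning beyond +/-2S, alarm beyond +/-3S,
    where S is one ID sigma.
    """
    if s <= 0:
        raise ValueError("S (one ID sigma) must be positive")
    ratio = abs(id_value) / s
    if ratio > 3.0:
        return "alarm"
    if ratio > 2.0:
        return "warning"
    return "normal"


if __name__ == "__main__":
    # Hypothetical ID values (kg) from successive PITs, with S = 0.4 kg
    for id_kg in [0.1, -0.5, 0.9, -1.3]:
        print(id_kg, classify_id(id_kg, 0.4))
```

A "warning" result calls for closer attention, while an "alarm" result triggers the detailed analysis of causes described above.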

Models for determining allowable ID deviations from NM accounting measurement errors

Determining the resultant error of the NM balance, in other words the ID error, requires a model that matches the conditions of the particular physical inventory taking (PIT) in the MBA concerned: the model must “sense” the uncertainties that actually exist.
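One common way to build such a model is to propagate measurement errors through the material balance ID = BI + R − SH − EI (beginning inventory plus receipts minus shipments minus ending inventory). The sketch below assumes random errors that are independent from item to item, plus one systematic error per measurement method that is fully correlated across all items measured by that method. The class and function names, the method label and the numbers are hypothetical; real PIT models usually contain more error components.

```python
import math
from dataclasses import dataclass


@dataclass
class BalanceItem:
    """One measured term of the material balance (hypothetical structure)."""
    mass_kg: float      # measured NM mass
    sign: int           # +1 for BI and receipts, -1 for shipments and EI
    rel_random: float   # relative random error, 1 sigma
    method: str         # measurement method; items sharing it share one systematic error


def inventory_difference(items: list[BalanceItem]) -> float:
    """ID = BI + R - SH - EI, written as a signed sum over balance items."""
    return sum(it.sign * it.mass_kg for it in items)


def id_sigma(items: list[BalanceItem], rel_systematic: dict[str, float]) -> float:
    """One-sigma ID error from random and per-method systematic components."""
    # Random errors are independent, so their variances simply add.
    variance = sum((it.mass_kg * it.rel_random) ** 2 for it in items)
    # Systematic errors are fully correlated within a method, so the signed
    # masses are summed first; BI and EI terms with opposite signs partly cancel.
    net_by_method: dict[str, float] = {}
    for it in items:
        net_by_method[it.method] = net_by_method.get(it.method, 0.0) + it.sign * it.mass_kg
    variance += sum((net * rel_systematic[m]) ** 2 for m, net in net_by_method.items())
    return math.sqrt(variance)


if __name__ == "__main__":
    balance = [
        BalanceItem(100.0, +1, 0.01, "weighing+assay"),  # beginning inventory
        BalanceItem(98.0, -1, 0.01, "weighing+assay"),   # ending inventory
    ]
    print(inventory_difference(balance), id_sigma(balance, {"weighing+assay": 0.005}))
```

Because the same method measures both BI and EI here, the systematic term acts only on the net 2 kg; a model that treated each item's systematic error as independent would overstate the ID sigma.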

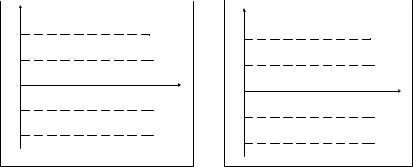

Fig. 6.3 gives examples of limit-calculation models that do not match the actual statistical scatter of the ID.

Fig. 6.3a shows a case where the tolerable ID statistical uncertainty is overestimated, so that the alarm limits are excessively wide. A real NM theft or loss may then fail to trigger an alarm, which makes all the control effort useless.

Fig. 6.3b shows the opposite case, where the tolerable ID statistical uncertainty is underestimated. Underestimated limits produce a high rate of false alarms even when there is no NM shortage or surplus. Every false alarm must be responded to, which is time-consuming and costly, and frequent false alarms may cause a real NM theft or loss to be overlooked.
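Both failure modes can be quantified if the ID is assumed to be normally distributed. The sketch below is a minimal illustration under that assumption and the 3-sigma alarm rule of Fig. 6.2; the function names and the loss size are hypothetical. It computes the probability of an alarm when the model's sigma differs from the true one: a ratio above 1 corresponds to Fig. 6.3a, a ratio below 1 to Fig. 6.3b.

```python
import math


def normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def alarm_probability(true_shift: float, true_sigma: float,
                      model_sigma: float, k_alarm: float = 3.0) -> float:
    """P(|ID| > k_alarm * model_sigma) when ID ~ N(true_shift, true_sigma**2)."""
    limit = k_alarm * model_sigma
    inside = (normal_cdf((limit - true_shift) / true_sigma)
              - normal_cdf((-limit - true_shift) / true_sigma))
    return 1.0 - inside


if __name__ == "__main__":
    true_sigma = 1.0
    loss = 4.0  # hypothetical loss equal to four true sigmas
    for ratio in (0.5, 1.0, 2.0):  # model sigma / true sigma
        model_sigma = ratio * true_sigma
        p_false = alarm_probability(0.0, true_sigma, model_sigma)
        p_detect = alarm_probability(loss, true_sigma, model_sigma)
        print(f"ratio={ratio}: false alarm {p_false:.4f}, detection {p_detect:.4f}")
```

With a correct model the false-alarm probability is about 0.3%; halving the model sigma raises it to roughly 13%, while doubling it leaves a four-sigma loss detected only about 2% of the time.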


[Figure: panels a) and b), each plotting ID against time t with warning limits at ±2S and alarm limits at ±3S]

Fig. 6.3. Examples of inadequate models for calculating ID scatter limits: a – model with the ID uncertainty overestimated; b – model with the ID uncertainty underestimated

Therefore, the model used to set tolerable limits for the statistical scatter of the ID must be reconciled with the actual ID scatter.

Determination of tolerable ID deviations based on historical data

For production facilities with steady, continuing operations, it is also permissible to use ID values from earlier inventories. In this case the limits are set from the recorded scatter of the ID values obtained at earlier physical inventory takings (PITs) in the given MBA, on the assumption that no NM anomalies were detected at those PITs. This approach has several advantages: the limit values are easy to obtain without computer models, and no detailed data on measurement errors is required. Moreover, limits obtained this way reflect the combined effect of all NM accounting and measurement errors.
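A minimal sketch of deriving limits from historical data, assuming the earlier IDs are anomaly-free and that the limits are centred at zero as on the control chart; s is taken as the sample standard deviation of the past IDs. The function name and the sample values are hypothetical. A persistent non-zero mean in the past IDs would itself merit investigation and is not handled here.

```python
import statistics


def historical_limits(past_ids: list[float]) -> dict[str, tuple[float, float]]:
    """Warning (+/-2s) and alarm (+/-3s) limits from earlier PIT ID values.

    Assumes the earlier IDs contain no anomalies and that the limits are
    centred at zero; s is the sample standard deviation.
    """
    if len(past_ids) < 2:
        raise ValueError("at least two earlier ID values are needed")
    s = statistics.stdev(past_ids)
    return {"warning": (-2.0 * s, 2.0 * s), "alarm": (-3.0 * s, 3.0 * s)}


if __name__ == "__main__":
    past = [0.12, -0.30, 0.25, -0.05, 0.18, -0.22]  # hypothetical IDs, kg
    limits = historical_limits(past)
    print(f"s = {statistics.stdev(past):.3f} kg, limits = {limits}")
```

The limits can be recomputed after each PIT, provided the new ID was not itself anomalous.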

The general rules in both Russia and the USA permit the tolerable ID deviation limits to be determined from historical data. In this case, however, it must be proved that the approach is applicable to predicting the ID, which holds only when the structure of ID error formation does not change over time.

Historical data is also useful for reconciling computer models when these are used to calculate the allowable ID limits, since it provides a quality criterion for checking the estimated ID error. Namely, the observed statistical scatter of the ID is expected to match the interval of statistically insignificant ID values, (–2s_ID, +2s_ID).
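This check can be sketched as a comparison between the share of earlier IDs that fall inside (–2s_ID, +2s_ID) of the model and the share expected for a zero-mean normal ID (about 95%). The function names, the model sigma and the sample IDs are hypothetical; with only a few PITs the comparison is rough, and a formal statistical test would be more rigorous.

```python
import math


def fraction_inside(past_ids: list[float], model_sigma: float, k: float = 2.0) -> float:
    """Share of earlier ID values inside (-k*sigma, +k*sigma) of the model."""
    inside = sum(1 for x in past_ids if abs(x) < k * model_sigma)
    return inside / len(past_ids)


def expected_fraction(k: float = 2.0) -> float:
    """Share expected inside +/-k sigma if the ID is normal with zero mean."""
    return math.erf(k / math.sqrt(2.0))


if __name__ == "__main__":
    past = [0.12, -0.30, 0.25, -0.05, 0.18, -0.22, 0.41, -0.10]  # hypothetical, kg
    sigma_from_model = 0.2  # hypothetical model output, kg
    print(fraction_inside(past, sigma_from_model), expected_fraction())
```

An observed share well below the expected one suggests the model underestimates the ID uncertainty (Fig. 6.3b); all values clustering far inside the interval suggests overestimation (Fig. 6.3a).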



CHAPTER 7

COMPUTERIZED NM ACCOUNTING AND CONTROL SYSTEMS

The primary objective of nuclear material (NM) accounting is the early detection of NM shortages or surpluses, loss, theft or unauthorized use, as well as the identification of their causes and sources. Accounting, together with control and physical protection, serves to achieve this goal. Accounting systems are also responsible for supplying state authorities and other authorized official bodies with the data they need to perform their functions.

These goals cannot be fully achieved unless the corresponding data collection and processing are automated.

The requirements that the Federal Information System (FIS) imposes on reports by nuclear industry operators, both in the volume of information and in data submission time, cannot be met without modern data processing technologies in place.

The Federal Information System (FIS) is formed by the centralized computerized data acquisition and processing system together with the computerized (automated) NM accounting and control (A&C) systems established at NM handling and storage sites. The full FIS requirements for reports, data detail and data submission frequency place strict demands on the functionality and software support of operators' computerized NM A&C systems.

7.1. Industry-standard requirements for NM A&C systems

The functions of and requirements for material accounting systems are set out in OST 95 10537-97, an industry standard of the Russian Federation Ministry for Atomic Energy [1]. We will refer to this standard when discussing the components of accounting and control systems and will review its requirements for each section studied. It was the first industry standard to govern the functions, properties, development procedures and requirements for computerized NM A&C systems. Much experience in creating and operating such systems has been gained since the standard was adopted, so a new edition of the standard is now due.

As defined by the standard, accounting and control (A&C) systems are intended to:


∙provide data for the accounting and control of nuclear material that is held at the site, received at or shipped from it, or written off, and that is subject to the operator’s legal responsibility;

∙account for any movement of material between material balance areas and any change in the material type or form;

∙provide timely and reliable data to support accounting and control.

To perform these functions for each MBA and for the site as a whole, the A&C system shall:

∙keep data records of NM quantities and characteristics for all measurements at key points;

∙keep track of any changes in the NM type, form or location, including offsite transactions (shipment/receipt);

∙determine the material quantity in each MBA and at the enterprise as a whole;

∙keep accounting records and reports;

∙oversee the timeliness and correctness of transactions with NM;

∙control and account for personnel and personnel access to data;

∙support inspections and inventory takings;

∙locate items promptly;

∙communicate with the enterprise’s physical protection system;

∙protect information circulating within the system;

∙communicate with A&C systems of other enterprises and the Federal Information System.
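The bookkeeping behind tracking movements between MBAs and determining the quantity in each MBA and at the enterprise as a whole can be sketched as follows. The transaction structure, MBA names and masses are hypothetical; the point illustrated is that internal transfers change MBA balances but not the site total, whereas offsite receipts and shipments change both.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Transaction:
    """A single NM movement; None stands for 'outside the site'."""
    from_mba: Optional[str]   # None for an offsite receipt
    to_mba: Optional[str]     # None for an offsite shipment
    mass_g: float


def book_inventory(opening: dict[str, float],
                   transactions: list[Transaction]) -> dict[str, float]:
    """Book inventory per MBA after applying all transactions in order."""
    balance = defaultdict(float, opening)
    for t in transactions:
        if t.mass_g <= 0:
            raise ValueError("transaction mass must be positive")
        if t.from_mba is not None:
            balance[t.from_mba] -= t.mass_g
        if t.to_mba is not None:
            balance[t.to_mba] += t.mass_g
    return dict(balance)


def site_total(balances: dict[str, float]) -> float:
    """Quantity at the enterprise as a whole."""
    return sum(balances.values())


if __name__ == "__main__":
    opening = {"MBA-1": 5000.0, "MBA-2": 1200.0}
    moves = [
        Transaction(None, "MBA-1", 800.0),     # offsite receipt
        Transaction("MBA-1", "MBA-2", 300.0),  # internal transfer
        Transaction("MBA-2", None, 500.0),     # offsite shipment
    ]
    balances = book_inventory(opening, moves)
    print(balances, site_total(balances))
```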

The standard identifies two functional areas of an A&C system: administration and database management. Administration has three functions:

∙servicing data queries from users and management on the status of the enterprise’s operations;

∙monitoring and control with respect to authorization of access to the NM A&C system data;

∙information support for investigations of contingencies.

The functions of database management are to:

∙receive and process data on all NM movements within, into or out of the site;

∙form site databases;

∙generate reports in the required formats and on the required media;

∙check information for mutual consistency;

∙search for and output data promptly;


∙keep files and create backup copies.

The standard requires NM information to be objective, consistent, timely, complete, coherent and successive. These terms are understood as follows. Objectivity requires every database entry to be confirmed by documentation, with all documentation forming a unified system free of contradictions. Timeliness means that changes in the status of items are updated promptly (in near real time), and completeness means that the data contains no unlogged changes. Coherence requires that data, once entered, is never changed or erased; any correction to the accounts may be made only by a specified procedure and must be recorded. Successiveness means that a newly established computerized system is consistent with the site’s existing NM A&C system.
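The coherence requirement is often met with an append-only journal. The sketch below is one hypothetical way to do this in Python, not a design prescribed by OST 95 10537-97: entries are immutable, no update or delete operation exists, and a correction is a new entry that references the entry it supersedes, so the full history of an item remains visible. The class names and item identifiers are illustrative.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)  # frozen: an entry cannot be altered after creation
class Entry:
    seq: int
    item_id: str
    mass_g: float
    reason: str
    corrects: Optional[int] = None  # seq of the entry this one supersedes


class Journal:
    """Append-only accounting journal: no update or delete operations exist."""

    def __init__(self) -> None:
        self._entries: list[Entry] = []

    def record(self, item_id: str, mass_g: float, reason: str,
               corrects: Optional[int] = None) -> Entry:
        if corrects is not None:
            target = next((e for e in self._entries if e.seq == corrects), None)
            if target is None or target.item_id != item_id:
                raise ValueError("a correction must reference an entry for the same item")
        entry = Entry(len(self._entries) + 1, item_id, mass_g, reason, corrects)
        self._entries.append(entry)
        return entry

    def history(self, item_id: str) -> tuple[Entry, ...]:
        """Every entry for the item, including superseded ones."""
        return tuple(e for e in self._entries if e.item_id == item_id)

    def current_mass(self, item_id: str) -> Optional[float]:
        """Latest value for the item, ignoring entries that were corrected."""
        superseded = {e.corrects for e in self._entries if e.corrects is not None}
        valid = [e for e in self.history(item_id) if e.seq not in superseded]
        return valid[-1].mass_g if valid else None


if __name__ == "__main__":
    j = Journal()
    j.record("CAN-017", 1000.0, "receipt")
    j.record("CAN-017", 1002.0, "data-entry error fixed", corrects=1)
    print(j.current_mass("CAN-017"), len(j.history("CAN-017")))
```

Recording the reason with every correction also serves the requirement to keep each item's history so that the sequence of actions can be traced.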

The information subsystem of the A&C system shall contain complete information on NM, accounting and control documentation, data on planned activities, personnel access authorization data, the facility technical data required for accounting and control, and instrumentation data.

The standard includes a very important requirement that the information subsystem be a normal-operation system, i.e. its failure or emergency shutdown must not harm NM accounting.

In its section on information support, the standard requires the system to produce all types of reports required by operator and federal standards and, where international obligations apply, by IAEA standards. The system shall generate paper reports in the required formats. These requirements are further detailed in the FIS requirements.

Special requirements are imposed on information security, covering both of its aspects: the safety of information when the system fails or breaks down, and the protection of information against unauthorized access. Specifically:

∙backup database copies should be created and updated on a regular basis;

∙the history of each item that has changed hands should be kept so that the sequence of actions can be traced;

∙NM for peaceful and military uses should be accounted for separately;

∙secure communication channels, local networks, and paper and magnetic media should be used.

Information security issues of computerized A&C systems, their components and the requirements for developing such systems are examined more closely in Chapter 8.


7.2. Architecture of computerized NM accounting systems

The history of nuclear material accounting systems goes back to the very dawn of the nuclear era in the 1940s. Since then, NM accounting systems have gone through several stages of evolution.

Initially, in the 1940s and 1950s, nuclear material handling relied on manual data processing. Computers were not yet available for such tasks, and the quantities of material in use allowed inventories to be kept by hand, with an entry made for each item. Such systems made it hard to systematize information on particular items and to search for the required data, since entries in the logs could be arranged only by time of arrival. As operations grew more complex and the number of items increased, far more time was needed to process and search for data. Such systems may still exist today, for example in educational laboratories with just a few items in storage. Industry standards may also oblige operators to keep hard copies of computerized accounting records.

The spread of computers in the 1960s gave rise to batch-processing systems. To operate such a system, one had to prepare batches on punch cards or other data media and update the databases by running these batches. Database theory was practically undeveloped at this stage of IT evolution, and data was stored in so-called flat files. These systems were characterized by primitive databases and extremely slow updates, which made them slow and troublesome to operate. Information was almost always backed up manually.

A major breakthrough in the use of IT for accounting and control systems came with the first mainframes connected to user terminals. By that time (the 1970s), the first database management systems (DBMS) had appeared. Combining fairly powerful computers with the advantages of interactive data input produced high-performance systems, some of which remain in commercial use today. The functionality of such systems is limited, however, because large volumes of information must be sent over the network and all data from all users must be processed on the mainframe. The requirements for the mainframe and network components are therefore rather stringent, which makes the whole system more expensive.

The advent of personal computers transformed information technology as a whole. PCs can be used in accounting systems in several ways. A PC can serve as:


∙a terminal station networked to the mainframe computer;

∙a computer to handle data coming in from the mainframe in file server systems;

∙a database client or server in a client/server system.

Depending on the system architecture, the use of PCs may bring both advantages and limitations. Practically all current systems are PC-based, so we shall look at this more closely.

We shall first define what a “client/server” architecture is [2]. The main purpose of this architecture is to use the computers of a computer system highly efficiently by partitioning tasks into smaller levels and allocating the operations at these levels to different computers. Some tasks at one level require auxiliary operations to be performed at another level. The requesting program is then called the “client”, while the auxiliary task that serves it acts as the “server”. A client/server application can thus be defined as one composed of parts that are executed on different computers. In a well-designed client/server application, each process runs exactly where its specific work is done most efficiently.

Applications in existing computerized accounting systems comprise several levels, which can be grouped into three main logical levels corresponding to three functional areas:

∙presentation logic for user data. This is largely contained in the user-interface software;

∙business logic. In our case, this implements the NM accounting standards and regulations and the system functionality;

∙database access logic. This normally includes mechanisms that support a particular DBMS, handle the storage, updating of and access to data, and maintain data integrity.
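The three logical levels can be illustrated with a minimal Python sketch, assuming an in-memory SQLite database as a stand-in for the site DBMS. The class names, the `movements` table and the rule that an MBA cannot transfer more than its book inventory are hypothetical examples, not requirements quoted from the standard.

```python
import sqlite3
from typing import Optional


class MovementStore:
    """Data access logic: the only layer that issues SQL to the DBMS."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS movements (src TEXT, dst TEXT, mass_g REAL)")

    def insert(self, src: Optional[str], dst: Optional[str], mass_g: float) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute("INSERT INTO movements VALUES (?, ?, ?)", (src, dst, mass_g))

    def balance(self, mba: str) -> float:
        row = self.conn.execute(
            "SELECT COALESCE(SUM(CASE WHEN dst = ? THEN mass_g ELSE 0 END), 0)"
            " - COALESCE(SUM(CASE WHEN src = ? THEN mass_g ELSE 0 END), 0)"
            " FROM movements", (mba, mba)).fetchone()
        return float(row[0])


class AccountingRules:
    """Business logic: accounting rules, independent of storage and display."""

    def __init__(self, store: MovementStore) -> None:
        self.store = store

    def receive(self, mba: str, mass_g: float) -> None:
        self._check_positive(mass_g)
        self.store.insert(None, mba, mass_g)  # NULL source = offsite receipt

    def transfer(self, src: str, dst: str, mass_g: float) -> None:
        self._check_positive(mass_g)
        if src == dst:
            raise ValueError("source and destination MBA must differ")
        if mass_g > self.store.balance(src):
            raise ValueError(f"book inventory of {src} is less than {mass_g} g")
        self.store.insert(src, dst, mass_g)

    @staticmethod
    def _check_positive(mass_g: float) -> None:
        if mass_g <= 0:
            raise ValueError("mass must be positive")


def format_balance(store: MovementStore, mba: str) -> str:
    """Presentation logic: turns data into text for the user."""
    return f"{mba}: {store.balance(mba):.1f} g"


if __name__ == "__main__":
    store = MovementStore(sqlite3.connect(":memory:"))
    rules = AccountingRules(store)
    rules.receive("MBA-1", 800.0)
    rules.transfer("MBA-1", "MBA-2", 300.0)
    print(format_balance(store, "MBA-1"), format_balance(store, "MBA-2"))
```

Keeping the levels separate is what allows them to be placed on different computers: in a "thick" client system the business and presentation levels would run on the workstation, while in a mainframe system all three run centrally.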

Depending on how the applications supporting these logic levels are distributed, various types of client/server systems can be built.

Even when all system levels are implemented on one computer, we are still dealing with a client/server model, because processes are internally divided into client and server processes. Such integration is typical of most mainframe-based systems. The main features of a computation process arranged in this way are as follows:

∙terminals receive only the data to be presented, all other levels being handled on the central computer;


∙where graphics are involved, all graphic data is transmitted over the network, so, depending on how data is processed, network traffic can range from light to heavily congested;

∙a substantial weakness of such systems is the lack of a convenient interface and graphics, and limited reporting capabilities;

∙high-power mainframes are expensive.

A recent trend has been towards cheaper mainframes, while the capacity of workstations has increased dramatically. In parallel, client/server architectures have evolved into systems with a very “thin” client, in which most data processing runs on the mainframe. The architecture of such systems is therefore close to the conventional mainframe architecture.

File server systems represent the opposite extreme: the server is used simply as a data store, and all application logic is implemented on the workstation. The greatest advantage of such a system is simplicity, but its drawbacks are more numerous. The biggest is that all data to be processed must be transferred over the network from the server to the workstation. Such systems are normally PC-based and offer a user-friendly interface with advanced graphics (graphic data is created and processed on the front end).

To implement a client/server architecture, the client and server tasks must be divided so as to optimize the use of the local network’s computing power and network traffic. This division is typical of so-called database server systems, which balance the use of client and server capabilities. Their greatest advantage is a reduced network load, since only the data needed by a particular application is transmitted over the network.
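The difference in network load between a file server and a database server can be made concrete with a small simulation. SQLite runs in-process here, so nothing actually crosses a network; the number of rows returned to the caller is used as a hypothetical proxy for network traffic, and the schema and data are invented for illustration.

```python
import sqlite3


def make_db(n_items: int) -> sqlite3.Connection:
    """In-memory stand-in for the server database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, mba TEXT, mass_g REAL)")
    rows = [(i, f"MBA-{i % 10}", 100.0 + i) for i in range(n_items)]
    conn.executemany("INSERT INTO items VALUES (?, ?, ?)", rows)
    conn.commit()
    return conn


def file_server_total(conn: sqlite3.Connection, mba: str) -> tuple[float, int]:
    """File-server style: every row goes to the client, which filters and sums."""
    rows = conn.execute("SELECT mba, mass_g FROM items").fetchall()
    total = sum(mass for m, mass in rows if m == mba)
    return total, len(rows)  # rows that would cross the network


def db_server_total(conn: sqlite3.Connection, mba: str) -> tuple[float, int]:
    """Database-server style: the query runs on the server; one row comes back."""
    rows = conn.execute("SELECT SUM(mass_g) FROM items WHERE mba = ?", (mba,)).fetchall()
    return rows[0][0], len(rows)


if __name__ == "__main__":
    conn = make_db(1000)
    print("file server:", file_server_total(conn, "MBA-3"))
    print("database server:", db_server_total(conn, "MBA-3"))
```

Both approaches yield the same total, but the file-server approach moves all 1000 rows to the client, while the database server returns a single row.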

Client/server systems are also cost-effective. Mainframe-based systems are very expensive to buy and to maintain, whereas client/server systems use affordable hardware and commercial software and are inexpensive to maintain and upgrade.

The major advantages of client/server architectures are as follows:

∙the workload is intrinsically distributed among more than one computer;

∙users easily share data;

∙data is secured on a centralized basis;

∙better cost efficiency.

Still, such architecture has drawbacks:

∙a heavy workload for application developers;

∙complicated network topology;


∙server software changes apply to all clients.

The client/server computing model is a fundamental paradigm of information technology (IT). It began to develop in the 1980s, when the computer industry started moving from centralized systems to networks of multiple PCs.

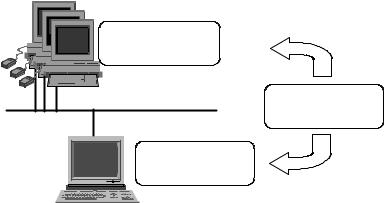

The simplest client/server architecture, implemented in the earliest applications of this kind, is shown in Fig. 7.1. This is a two-tier architecture that links two computers: a server computer and a client computer [3]. It can be implemented in two versions. The first places most of the processing load on the client; this is referred to as a “thick” client with a “thin” server. The server includes the DBMS, which implements the data access logic, and each client computer runs the applications that implement the business and presentation logic.

[Figure: workstations connected to a database server, with levels labelled presentation logic (interface), business logic (accounting rules) and data access logic; two variants: “thick” client with “thin” server, and “thick” server with “thin” client]

Fig. 7.1. A two-tier client/server model

This approach is simple, but the architecture has limitations:

∙it requires a fairly powerful computer with sufficient disk space to serve as the workstation;

∙if a request returns a large data set that the client has to process, the network may be severely loaded;

∙each workstation-server connection requires a considerable amount of server RAM; thus, MS SQL Server 6.5 (by no means the most expensive DBMS
