Radar Array Processing (Springer Series in Information Sciences 25), S. Haykin, J. Litva, T. J. Shepherd (eds.), Springer-Verlag

3. Radar Target Parameter Estimation with Array Antennas

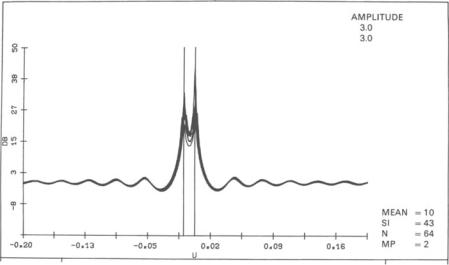

known. Note the similarity of the sidelobe structure to the AR spectrum, Fig. 3.5. However, in Fig. 3.8 no spatial averaging was used (because eigendecomposition, instead of matrix inversion, is required) and, therefore, we have more sidelobes in the plotted angular sector due to the larger effective aperture. There are always two peaks present, but in many cases there is a bias of one grid point (0.09 BW). This indicates higher fluctuations of the angle estimates.

There is a neat relationship between the Maximum-Entropy/Autoregressive (ME/AR), Capon, MUSIC, and KT methods if the covariance matrix is exact, as described in [3.47]. If the covariance matrix is exact and the signal power goes to infinity, then the inverse covariance matrix (scaled by the noise power) approaches a projection matrix. In this case, therefore, the MUSIC spectrum can be interpreted as the Capon spectrum, and the KT spectrum as the ME/AR spectrum. This explains the increased resolution of these methods. Furthermore, the Capon spectrum can be interpreted as the harmonic average of the ME/AR spectra over all possible filter lengths, a fact already found by Burg [3.20]. In the same way, the MUSIC spectrum turns out to be the weighted harmonic average of the KT spectra over all possible filter lengths [3.47]. This explains why the ME/AR and KT spectra fluctuate more than the Capon and MUSIC spectra, respectively; see Figs. 3.4 and 3.5, and Figs. 3.6 and 3.8. Figure 3.9 shows these relationships as a graph.
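The harmonic-averaging relation between the Capon and ME/AR spectra can be checked numerically. The following is a minimal sketch under stated assumptions, not the chapter's own computation. It assumes an equally spaced linear array with $a_k(u) = \exp(j\pi k u)$ and an exact Toeplitz covariance built from two hypothetical sources in unit white noise. The Capon spectrum is normalized as $N/(a^H R^{-1} a)$, and the ME/AR spectrum of order $p$ is formed from the leading $(p+1) \times (p+1)$ block of $R$. The function names are illustrative only.

```python
import numpy as np


def steering(n, u):
    # Assumed ULA convention: a_k(u) = exp(j*pi*k*u), k = 0..n-1
    return np.exp(1j * np.pi * np.arange(n) * u)


def capon_spectrum(R, u):
    # Capon spectrum normalized as N / (a^H R^{-1} a), so that R = I gives 1
    a = steering(R.shape[0], u)
    return R.shape[0] / np.real(a.conj() @ np.linalg.solve(R, a))


def ar_spectrum(R, u, p):
    # ME/AR spectrum of order p from the leading (p+1) x (p+1) block R_p:
    # S = (e1^T R_p^{-1} e1) / |e1^T R_p^{-1} a|^2
    g = np.linalg.solve(R[:p + 1, :p + 1], np.eye(p + 1)[:, 0])  # g = R_p^{-1} e1
    a = steering(p + 1, u)
    return np.real(g[0]) / np.abs(g.conj() @ a) ** 2


def harmonic_mean_of_ar(R, u):
    # Harmonic average of the AR spectra of orders 0, ..., N-1
    N = R.shape[0]
    return N / sum(1.0 / ar_spectrum(R, u, p) for p in range(N))


# Exact Toeplitz covariance: two hypothetical uncorrelated sources plus unit white noise
N = 8
R = np.eye(N, dtype=complex)
for u_src, power in [(0.1, 100.0), (0.3, 10.0)]:
    a_src = steering(N, u_src)
    R += power * np.outer(a_src, a_src.conj())

for u in np.linspace(-1.0, 1.0, 9):
    print(f"u = {u:+.2f}   Capon = {capon_spectrum(R, u):.6f}   harmonic AR = {harmonic_mean_of_ar(R, u):.6f}")
```

With these normalizations the two printed columns agree to rounding error. The identity relies on the Toeplitz structure of the exact covariance, so an unstructured sample covariance does not, in general, satisfy it exactly with forward prediction filters.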

For radar applications, projection methods are only of interest if they can be computed fast enough. Therefore, suboptimum methods such as the HT and YB projections are of special interest. Orthonormalization can be done efficiently by the Gram-Schmidt procedure, which has a convenient recursive structure, or by QR decomposition, for which a systolic array processor structure exists; see McWhirter [3.48]. If, for example, in the HT method the columns of $Z$ (an $N \times K$ matrix) are to be orthonormalized, we decompose $Z = UD$, with $U$ a unitary $N \times N$ matrix and $D$ an upper triangular $N \times K$ matrix; the first $K$ columns of $U$ are then the orthonormalized columns of $Z$. However, $U$ need not be calculated. If we use $(Z, a)$ as the input matrix for the McWhirter systolic array, then the residual

$$
\begin{aligned}
r &= \min_{x \in \mathbb{C}^K} \| Z x - a \|^2 = \| Z (Z^H Z)^{-1} Z^H a - a \|^2 \\
  &= a^H P a = 1 / S_{HT}(u, v), \qquad \text{where } P = I - Z (Z^H Z)^{-1} Z^H ,
\end{aligned}
\tag{3.23}
$$

will finally come out of the array directly; see Chap. 5 for more details. The calculation of eigenvectors is still much more complicated. Recursive updates based on power iteration [3.49, 50], modern rank-one-update methods [3.51, 52], or systolic arrays for singular value decomposition (SVD) of the data matrix [3.53] normally require as much computation as several QR decompositions. Fast eigenvector determination is a field of intense current research.

[Fig. 3.9: diagram; recoverable labels "harmonic averaging", "SNR → ∞" (twice), "weight. harm. averag."]

Fig. 3.9. Graphical representation of the relationship between the Capon, autoregressive, MUSIC, and KT superresolution methods
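The claim that the residual of (3.23) comes out of a QR processor without forming $U$ can be imitated with a library QR factorization. The sketch below makes three assumptions. NumPy's Householder-based `numpy.linalg.qr` stands in for the Givens-rotation systolic array. A random complex matrix takes the place of the HT matrix $Z$. A hypothetical steering vector serves as $a$. The sketch reads the residual off the last diagonal element of the triangular factor and compares it with the explicit projection form.

```python
import numpy as np


def ls_residual_qr(Z, a):
    # r = min_x ||Z x - a||^2 from the QR factorization of (Z, a): the last diagonal
    # element of the triangular factor carries the part of a orthogonal to the
    # columns of Z, so r = |R[K, K]|^2 (the unitary factor is not needed)
    _, Rf = np.linalg.qr(np.column_stack([Z, a]))
    return np.abs(Rf[-1, -1]) ** 2


def ls_residual_projection(Z, a):
    # Same quantity via the explicit projector of (3.23): a^H (I - Z (Z^H Z)^{-1} Z^H) a
    P = np.eye(Z.shape[0]) - Z @ np.linalg.solve(Z.conj().T @ Z, Z.conj().T)
    return np.real(a.conj() @ P @ a)


rng = np.random.default_rng(0)
N, K = 8, 3
Z = rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))  # stand-in for the HT matrix Z
a = np.exp(1j * np.pi * np.arange(N) * 0.2)                          # assumed steering vector a(u)
r = ls_residual_qr(Z, a)
print("residual:", r, "  1/S_HT:", 1.0 / r)
```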

b) Algebraic Methods

Instead of generating a continuous angular spectrum from the estimated signal subspace, one may calculate the desired directions by solving suitable algebraic equations.

Root-Finding Methods. All projection methods (as well as linear prediction methods and Capon methods) can be turned into algebraic methods in the case of a linear equally spaced array with $x_k = k\lambda/2$. In this case, one identifies $a_k(u) = z^k$, with $z = \exp(j\pi u)$. The denominator of the scan pattern $S_P$ (and also of $S_{AR}$ and of the Capon scan pattern) can then be considered as a function of the complex variable $z$ that is real-valued on the unit circle; after multiplication by $z^{N-1}$ it becomes an ordinary polynomial of degree $2N - 2$. The roots $z_{(k)}$ of this polynomial that lie closest to the unit circle, projected onto the unit circle, give estimates of $z$, i.e., of $\pi u$ and hence of the direction ("Root-MUSIC"). If we reduce the dimension of the covariance matrix to the dimension of the signal subspace plus one, this approach is equivalent to the Pisarenko method [3.54]. A very general formulation of the root-finding, eigenvector-based signal subspace approach is given by Cadzow et al. [3.55].
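A minimal Root-MUSIC sketch follows. It assumes $a_k(u) = z^k$ with $z = \exp(j\pi u)$, $k = 0, \dots, N-1$, and an exact covariance of two hypothetical uncorrelated sources in unit white noise. The polynomial coefficients are the diagonal sums of the noise-subspace projector. An exact covariance produces double roots on the unit circle, so the sketch keeps the roots closest to the circle and discards near-duplicates in angle. With an estimated covariance one usually takes the $M$ roots inside the unit circle that lie closest to it. The names `root_music` and `min_sep` are illustrative.

```python
import numpy as np


def steering(n, u):
    # Assumed convention: a_k(u) = z^k with z = exp(j*pi*u), k = 0..n-1
    return np.exp(1j * np.pi * np.arange(n) * u)


def root_music(R, M, min_sep=1e-3):
    N = R.shape[0]
    _, V = np.linalg.eigh(R)             # eigenvalues in ascending order
    En = V[:, :N - M]                     # noise-subspace eigenvectors
    P = En @ En.conj().T                  # projector onto the noise subspace
    # On |z| = 1, a(z)^H P a(z) = sum_d c_d z^d with c_d the sum of the d-th diagonal of P;
    # multiplying by z^(N-1) gives an ordinary polynomial of degree 2N - 2
    coeffs = [np.trace(P, offset=d) for d in range(N - 1, -N, -1)]
    roots = np.roots(coeffs)
    # Walk through the roots from closest to farthest from the unit circle and keep
    # one root per direction (near-duplicates in angle are skipped)
    picked = []
    for z in roots[np.argsort(np.abs(np.abs(roots) - 1.0))]:
        if all(abs(np.angle(z) - np.angle(q)) > min_sep for q in picked):
            picked.append(z)
        if len(picked) == M:
            break
    return np.sort(np.angle(np.array(picked)) / np.pi)


# Exact covariance of two hypothetical uncorrelated sources in unit white noise
N, u_true = 8, [-0.2, 0.3]
R = np.eye(N, dtype=complex)
for u_src, power in zip(u_true, [10.0, 5.0]):
    a_src = steering(N, u_src)
    R += power * np.outer(a_src, a_src.conj())
print(root_music(R, M=2))
```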

ESPRIT Algorithm [3.56]. This method takes a completely different approach from the signal subspace methods mentioned previously. It is applicable to planar arrays and involves two subarrays that differ only by a constant shift. Only this shift vector needs to be known; the actual positions of the array elements are not required. The direction with respect to the shift vector is found by analyzing the eigenstructure of the auto- and cross-covariance matrices of these two subarrays. Two eigendecompositions are required: the first to determine the signal-alone covariance estimate for each subarray, and the second to solve the generalized eigenproblem with the two signal covariance estimates. Simulations have shown superior performance of the ESPRIT method over the MUSIC method at low signal-to-noise ratios [3.57]. However, the required array structure is a basic restriction on applying the ESPRIT method to subarray outputs. With a single shift vector, only one direction coordinate is estimated, even with a two-dimensional array; estimating a second coordinate requires a further shift property of the array element positions. The method is sensitive to the assumed noise covariance matrix and to numerical errors [3.58]. More experience with this method is necessary.
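The sketch below does not implement the generalized-eigenproblem formulation described above. It uses a common least-squares variant of ESPRIT instead, written for an equally spaced linear array in which the two subarrays are the first and the last $N-1$ elements, so the shift vector is one element spacing. It assumes $a_k(u) = \exp(j\pi k u)$ and an exact covariance. With an estimated covariance the returned values would only approximate the true directions.

```python
import numpy as np


def steering(n, u):
    # Assumed ULA convention: a_k(u) = exp(j*pi*k*u), k = 0..n-1
    return np.exp(1j * np.pi * np.arange(n) * u)


def ls_esprit_ula(R, M):
    # Least-squares ESPRIT: subarray 1 = elements 0..N-2, subarray 2 = elements 1..N-1,
    # so subarray 2 is subarray 1 shifted by one element spacing
    N = R.shape[0]
    _, V = np.linalg.eigh(R)                          # ascending eigenvalues
    Es = V[:, N - M:]                                  # M principal eigenvectors: signal subspace
    Es1, Es2 = Es[:-1, :], Es[1:, :]
    Phi = np.linalg.lstsq(Es1, Es2, rcond=None)[0]     # least-squares solution of Es1 @ Phi = Es2
    # The eigenvalues of Phi estimate exp(j*pi*u_i)
    return np.sort(np.angle(np.linalg.eigvals(Phi)) / np.pi)


N, u_true = 8, [-0.2, 0.3]
R = np.eye(N, dtype=complex)
for u_src, power in zip(u_true, [10.0, 5.0]):
    a_src = steering(N, u_src)
    R += power * np.outer(a_src, a_src.conj())
print(ls_esprit_ula(R, M=2))
```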

For all the signal subspace methods described herein, we need to know the dimension of the signal subspace, $M_s$, and the number of targets, $M$. This is a difficult detection problem. For radar applications, the detection must be completely automatic and well-defined. For a more complete discussion of the detection problem, the reader is referred to the previous chapter. We have mentioned some methods to determine $M_s$. However, for real data, the target number $M$ may differ from $M_s$. $M$ is usually found by comparing the peaks of the scan pattern with a threshold. Because the peak heights do not, in general, represent the target power, the presence of a target must be validated by checking its estimated amplitude. Here, the methods for accurate amplitude or power estimation described in Sect. 3.4.3 are useful. The distribution of the sidelobes of the scan pattern is important in setting the threshold for peak detection. The problems arising from cascading these test procedures still need to be investigated.

3.2.6 Parametric Target Model Fitting

For these methods we completely parameterize the signal vector $s$ we expect to receive and fit this target model to the data. Many parameterizations are possible. General descriptions of these methods have been given in [3.5, 59-61]. Although a broader discussion of parametric target model fitting (PTMF) methods has only recently started, PTMF is one of the oldest superresolution methods: the essential ideas were presented in 1968 [3.62, 63], which makes them as old as the maximum-entropy method.

a) Target Models and Optimization Problem

α) General Radar Target Model. Given the geometric dimensions encountered in radar applications, the point target (or plane wave) assumption with unknown complex amplitude is normally the most appropriate:

$$
s = \sum_{i=1}^{M} a(u_i, v_i)\, b_i = A b . \tag{3.24}
$$

The unknown parameters are the directions $(u_i, v_i)$, collected in the complex $N \times M$ matrix $A$ with $A_{k,i} = a_k(u_i, v_i)$ $(k = 1, \dots, N)$, and the complex amplitudes $b_i$ $(i = 1, \dots, M)$, collected in the vector $b \in \mathbb{C}^M$.

For most PTMF methods, the fit of the model to the measured data is based on the maximum likelihood (ML) principle. The general multiple-target ML detection and estimation problem and its possible solutions have been presented in Chap. 2 ('true ML estimation'). There, the corresponding optimum estimators and tests were derived for different assumptions on the target complex amplitude fluctuations and the noise. In this section, we focus on practical radar superresolution methods derived from these general procedures.

Maximum likelihood estimation for the model (3.24), under the assumption of uncorrelated Gaussian noise with unit variance, is completely analogous to the one-target case (3.2): we maximize the density $p$ of the measured data vectors $z_1, \dots, z_K$ over all unknown parameters $\theta$ of the model (3.24):

$$
p(z_1, \dots, z_K; \theta) = \pi^{-NK} \exp\!\Big( -\sum_{k=1}^{K} [z_k - s(\theta)]^H [z_k - s(\theta)] \Big) . \tag{3.25}
$$

Equivalently, we minimize the negative exponent, which is the mean-squared error between the measured data and the assumed model:

$$
\min_{w,\, b_1, \dots, b_K} \sum_{k=1}^{K} \| z_k - s(w, b_k) \|^2 , \tag{3.26}
$$

where $w$ is the vector of directions, $w = (u_1, v_1, \dots, u_M, v_M)$. Here we have assumed that the target complex amplitudes are deterministic, but not necessarily fixed: they may vary from one snapshot to another. This is a very flexible model. The minimization over $b_k \in \mathbb{C}^M$ is a least-squares problem, and for each $w$ the solution is given by

$$
\hat b_k = (A^H A)^{-1} A^H z_k . \tag{3.27}
$$

Then (3.26) reduces to the minimization of a function that depends only on the unknown directions:

$$
Q(w) = \sum_{k=1}^{K} \| z_k - A (A^H A)^{-1} A^H z_k \|^2 . \tag{3.28}
$$

This function also has the following equivalent forms [see also the general formulation in Chap. 2, (2.79)]:

(3.29)

$$
Q(w) = \sum_{k=1}^{K} z_k^H P_{CA} z_k \tag{3.30}
$$

$$
Q(w) = K \, \mathrm{tr}\,[P_{CA} R_{ml}] , \tag{3.31}
$$

where $P_{CA} = I - A (A^H A)^{-1} A^H$ is the projection onto the orthogonal complement of the column space of $A$. The matrix $R_{ml}$ is the ML estimate of the covariance matrix given in (3.8). The form (3.30) shows the relation of this method to the MUSIC method. For MUSIC, given in (3.18), we determine the projection matrix from the data and then measure the signal subspace by its angle with a parameterized signal vector. In the approach described here, on the other hand, we parameterize the projection matrix and consider its average angle with the data. This makes a difference when we consider correlated targets [3.39]. It is shown there that for correlated targets the PTMF method is superior to the MUSIC method; only for uncorrelated targets is PTMF asymptotically equivalent to MUSIC.
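The equivalence of the residual form (3.28), the projector form (3.30), and the trace form $K\,\mathrm{tr}(P_{CA} R_{ml})$ can be verified directly. This is a small sketch that assumes $R_{ml} = \frac{1}{K}\sum_k z_k z_k^H$, a linear array with $A_{k,i} = \exp(j\pi k u_i)$, and arbitrary random test data. The function names are hypothetical.

```python
import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i)
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def complement_projector(A):
    # P_CA = I - A (A^H A)^{-1} A^H
    return np.eye(A.shape[0]) - A @ np.linalg.solve(A.conj().T @ A, A.conj().T)


def Q_residual(Z, A):
    # (3.28) via the least-squares amplitudes (3.27), one column of B per snapshot
    B = np.linalg.lstsq(A, Z, rcond=None)[0]
    return np.linalg.norm(Z - A @ B) ** 2


def Q_projection(Z, A):
    # (3.30): sum_k z_k^H P_CA z_k
    return np.real(np.einsum('ik,ij,jk->', Z.conj(), complement_projector(A), Z))


def Q_trace(Z, A):
    # Trace form K tr(P_CA R_ml), assuming R_ml = (1/K) sum_k z_k z_k^H
    K = Z.shape[1]
    R_ml = Z @ Z.conj().T / K
    return K * np.real(np.trace(complement_projector(A) @ R_ml))


rng = np.random.default_rng(0)
N, K = 8, 5
Z = rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))   # arbitrary test data
A = steering_matrix(N, [-0.1, 0.2])                                  # trial directions w
print(Q_residual(Z, A), Q_projection(Z, A), Q_trace(Z, A))
```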

The minimization of (3.30) is equivalent to the maximization of

$$
S(w) = \sum_{k=1}^{K} z_k^H A (A^H A)^{-1} A^H z_k . \tag{3.32}
$$

This form has a very intuitive interpretation: the columns of $A$ contain the weightings for conventional beamforming in $M$ directions. These weightings are orthogonalized by the matrix $(A^H A)^{-1/2}$, i.e., we have $M$ orthonormal weight vectors, the columns $(A (A^H A)^{-1/2})_i$, $i = 1, \dots, M$. Maximizing the function $S$ in (3.32) is therefore equivalent to maximizing the summed output power of these $M$ decoupled beams. If the target number $M$ is one, the decoupling matrix $(A^H A)^{-1}$ is a scalar equal to $1/N$ (for unit-modulus element responses). The maximization of (3.32) is then exactly the conventional one-target estimate of (3.4). Hence, for this signal model, PTMF is a multidimensional extension of the conventional angle estimate.
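The decoupled-beam interpretation of (3.32) can be made concrete. The sketch below makes three assumptions: the inverse square root $(A^H A)^{-1/2}$ is computed through a Hermitian eigendecomposition, the array is linear with unit-modulus responses $A_{k,i} = \exp(j\pi k u_i)$, and the data are random. It shows that the $M$ beam weight vectors are orthonormal, and that their summed output power reproduces $S(w)$.

```python
import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i), so |A[k, i]| = 1
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def decoupled_beams(A):
    # W = A (A^H A)^{-1/2}: M orthonormal beam weight vectors (inverse square root via eigh)
    lam, U = np.linalg.eigh(A.conj().T @ A)
    return A @ (U @ np.diag(lam ** -0.5) @ U.conj().T)


def S_formula(Z, A):
    # (3.32): sum_k z_k^H A (A^H A)^{-1} A^H z_k
    P_A = A @ np.linalg.solve(A.conj().T @ A, A.conj().T)
    return np.real(np.einsum('ik,ij,jk->', Z.conj(), P_A, Z))


def S_beams(Z, A):
    # Output powers of the M decoupled beams, summed over beams and snapshots
    W = decoupled_beams(A)
    return np.sum(np.abs(W.conj().T @ Z) ** 2)


rng = np.random.default_rng(1)
N, K = 8, 5
Z = rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K))
A = steering_matrix(N, [-0.1, 0.2, 0.45])
W = decoupled_beams(A)
print(np.round(W.conj().T @ W, 12))      # identity matrix: the beams are decoupled
print(S_formula(Z, A), S_beams(Z, A))
```

For a single column, $W$ reduces to $a/\sqrt{N}$, which is the conventional beam.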

Because of the deterministic amplitude model in (3.26), the PTMF method is able to resolve even completely correlated targets. This makes PTMF methods of special interest for radar as a means of countering multipath [3.5, 6]. In the following sections we will consider the functions (3.28)-(3.31) as 'the' function to be minimized for PTMF methods, although PTMF with other signal models is possible.
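To illustrate the claim about completely correlated targets, the following sketch minimizes (3.28) by exhaustive search over pairs of grid directions. The data come from two fully coherent, noise-free targets, so the sample covariance has rank one. Everything here is assumed for illustration: the array geometry, the grid, the waveform, and the amplitude ratio. The brute-force search is only a sketch; it is not one of the fast minimization methods discussed later.

```python
import itertools

import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i)
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def Q(Z, us):
    # (3.28) with the least-squares amplitudes (3.27) for every snapshot
    A = steering_matrix(Z.shape[0], list(us))
    B = np.linalg.lstsq(A, Z, rcond=None)[0]
    return np.linalg.norm(Z - A @ B) ** 2


def ptmf_grid_search(Z, grid, M):
    # Exhaustive search over all M-subsets of the grid; only feasible for small M and coarse grids
    return np.array(min(itertools.combinations(grid, M), key=lambda us: Q(Z, us)))


# Two fully coherent targets: same waveform c_k, fixed complex ratio, no noise, K = 4
N, K = 8, 4
grid = np.linspace(-0.5, 0.5, 101)
u_true = [grid[40], grid[65]]                       # about -0.10 and 0.15
rng = np.random.default_rng(2)
c = np.exp(2j * np.pi * rng.random(K))              # common complex waveform
Z = steering_matrix(N, u_true) @ np.vstack([c, (0.8 - 0.3j) * c])
print(ptmf_grid_search(Z, grid, 2))
```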

β) Other Signal Models. For the special problem of low-angle tracking, more refined models than (3.24) are possible. If we know the target range (which can be measured very accurately with short pulses), we can model the propagation paths exactly, including the index of refraction of the atmosphere and the reflection coefficient of the sea surface. We may then obtain extremely fine resolution [3.8, 9]. However, with these refined models, the problems associated with locating the global minimum of the MSE function (3.26), (3.28) may require an additional superresolution method or a measurement at a different frequency.

Instead of the deterministic, variable signal model of (3.26), we may also assume signals with uncorrelated Rayleigh amplitudes, i.e., the target complex amplitudes are assumed to be independent, zero-mean Gaussian with variance $p_i$. This leads to a different likelihood function and, therefore, to a different optimization problem. The properties of this type of estimation have been examined by Böhme et al. [3.60, 61]. The density of the data vectors is

$$
p(z_1, \dots, z_K; \theta) = \pi^{-NK} \det[R(w, B)]^{-K} \exp\!\Big[ -\sum_{k=1}^{K} z_k^H R(w, B)^{-1} z_k \Big] .
$$

The unknown parameters are $\theta = (w, B)$, where the directions are denoted by $w = (u_1, v_1, \dots, u_M, v_M)$ and the target powers by $p_1, \dots, p_M$, which are the elements of the diagonal matrix $B$. The matrix $R(w, B)$ is the parameterized covariance matrix as in (3.13). After dividing by $K$ and dropping constants, the log-likelihood function to be maximized is, therefore:

$$
-\ln \det[R(w, B)] - \mathrm{tr}\,[R(w, B)^{-1} R_{ml}] . \tag{3.33}
$$

The matrix $R_{ml}$ is the ML estimate of the covariance matrix given in (3.8). From a phased array processing viewpoint (application of beamforming procedures), the function (3.33) is inconvenient and difficult to optimize. In addition, in many radar applications we have to consider slowly varying complex target amplitudes, e.g., nearly fixed amplitudes with only phase variations, for which the stochastic target model is not adequate.
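As a small illustration, the stochastic-model criterion (3.33) can be evaluated for trial parameters. The sketch assumes the parameterization $R(w, B) = A B A^H + \sigma^2 I$ with a known noise power $\sigma^2 = 1$ and a linear array with $A_{k,i} = \exp(j\pi k u_i)$. It takes the idealized case where $R_{ml}$ equals the model covariance at the true parameters. The function $-\ln\det R - \mathrm{tr}(R^{-1} R_{ml})$ is uniquely maximized over positive definite $R$ at $R = R_{ml}$, so any wrong direction or power lowers the value.

```python
import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i)
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def model_covariance(N, us, powers, sigma2=1.0):
    # R(w, B) = A B A^H + sigma2 I with B = diag(powers); sigma2 assumed known
    A = steering_matrix(N, us)
    return A @ np.diag(powers) @ A.conj().T + sigma2 * np.eye(N)


def stochastic_loglik(R_ml, us, powers, sigma2=1.0):
    # (3.33): -ln det R(w, B) - tr[R(w, B)^{-1} R_ml]
    R = model_covariance(R_ml.shape[0], us, powers, sigma2)
    _, logdet = np.linalg.slogdet(R)
    return -logdet - np.real(np.trace(np.linalg.solve(R, R_ml)))


# Idealized case: R_ml equals the model covariance at the true parameters
N = 8
R_ml = model_covariance(N, [-0.1, 0.2], [10.0, 5.0])
print(stochastic_loglik(R_ml, [-0.1, 0.2], [10.0, 5.0]))    # true parameters
print(stochastic_loglik(R_ml, [-0.1, 0.25], [10.0, 5.0]))   # wrong direction
print(stochastic_loglik(R_ml, [-0.1, 0.2], [10.0, 2.0]))    # wrong power
```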

γ) Correlated Noise Case. Equations (3.26), (3.28), and (3.32) use the assumption that the data are composed of samples of the signal plus uncorrelated Gaussian noise. If we know the noise covariance matrix $Q$, we may replace the exponent of (3.25) by

$$
-\sum_{k=1}^{K} [z_k - s(\theta)]^H Q^{-1} [z_k - s(\theta)] .
$$

This means that for the minimization in (3.26) we may replace the Euclidean vector norm $\|x\|^2 = x^H x$ by the weighted quadratic norm $\|x\|_Q^2 = x^H Q^{-1} x$. The least-squares solution (3.27) then becomes $\hat b_k = (A^H Q^{-1} A)^{-1} A^H Q^{-1} z_k$.

Correspondingly, the function (3.28) takes a different form [3.59]. If we replace $A$ by $A_w = Q^{-1/2} A$ and $Z$ by $Z_w = Q^{-1/2} Z$, we see that this approach is exactly the pre-whitening procedure described in (3.17). If we use the stochastic target model (3.33) in the non-white noise case, we may replace the covariance parameterization $A B A^H + \sigma^2 I$ by $A B A^H + Q$.
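The equivalence between the weighted least-squares solution and ordinary least squares on pre-whitened data can be checked numerically. The sketch whitens with the Cholesky factor $Q = L L^H$ rather than the Hermitian square root $Q^{1/2}$. Any square root gives the same estimate, since $A_w^H A_w = A^H Q^{-1} A$ in both cases. The noise covariance, steering matrix, and data are hypothetical, and the variable `Qn` denotes the noise covariance $Q$.

```python
import numpy as np


def weighted_ls(A, z, Qn):
    # b = (A^H Q^{-1} A)^{-1} A^H Q^{-1} z, using solves instead of explicit inverses
    Qi_A = np.linalg.solve(Qn, A)
    Qi_z = np.linalg.solve(Qn, z)
    return np.linalg.solve(A.conj().T @ Qi_A, A.conj().T @ Qi_z)


def prewhitened_ls(A, z, Qn):
    # Pre-whiten with the Cholesky factor Qn = L L^H (in place of Q^{1/2}), then ordinary LS
    L = np.linalg.cholesky(Qn)
    A_w = np.linalg.solve(L, A)
    z_w = np.linalg.solve(L, z)
    return np.linalg.lstsq(A_w, z_w, rcond=None)[0]


rng = np.random.default_rng(3)
N = 8
X = rng.standard_normal((N, N)) + 1j * rng.standard_normal((N, N))
Qn = X @ X.conj().T + N * np.eye(N)                            # hypothetical noise covariance
A = np.exp(1j * np.pi * np.outer(np.arange(N), [-0.1, 0.2]))    # assumed steering matrix
z = rng.standard_normal(N) + 1j * rng.standard_normal(N)
print(weighted_ls(A, z, Qn))
print(prewhitened_ls(A, z, Qn))
```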

Exact knowledge of the noise covariance is unrealistic for a radar system. In practice, we either have to estimate the noise covariance and then use the techniques described previously, or use adaptive array processing, as described in the other chapters of this book. Alternatively, if we have partial knowledge of the noise (e.g., the jammer directions), we may extend the PTMF method to estimate the remaining noise parameters as well. This has been described by Böhme and Kraus [3.64].

b) Parameter Estimation

The estimates given by the minimum of the function (3.26) are not ordinary maximum likelihood estimates, because the number of parameters to be estimated increases with the number of samples. Stoica et al. [3.39] have analyzed the asymptotic properties of this estimate and found the following results. If the number of array elements $N$ goes to infinity, the estimate given by (3.26) is consistent (i.e., it converges to the true values), even for a single time sample, $K = 1$. However, if the number of array elements $N$ is fixed and the number of time samples $K$ goes to infinity, only the direction parameters are estimated consistently, not the complex amplitudes. This implies that, for finite values of $N$, the direction estimates do not attain the Cramér-Rao bound, i.e., they are inefficient. The number of array elements $N$ determines the efficiency of the direction estimates. From a statistical viewpoint it is, therefore, preferable to enlarge the array rather than to increase the number of data snapshots. For high signal-to-noise ratio, the PTMF estimate defined by (3.28) also approaches the Cramér-Rao bound.

The Cramér-Rao bound can be used to predict the performance of PTMF parameter estimation, e.g., to analyze its dependence on array configuration, target separation, and target power. The calculation of the Cramér-Rao bound is mathematically involved; it has been carried out in several publications, e.g., [3.39] and also [3.5, 61, 65].

For a practical realization, the main problem with PTMF methods is the computational expense of minimizing the mean-squared error function (3.28). Many ideas for faster minimization of (3.26) or (3.28) have been presented:

Analytical determination of the optimum parameters for small numbers of elements in special arrangements [3.66, 67]. This is not a solution for large multi-function arrays.

Prony Method. If we have one data vector (K = 1), and if the number of data components equals the number of unknowns, the minimization of (3.26) reduces to a single equation. A solution of this equation for an equally spaced linear antenna can be found by the so-called Prony method. The classical Prony method exactly fits the parameters to the data. This method has been extended to larger numbers of samples (extended Prony method) by least-squares fitting an AR model to the data [3.23]. It is, then, a linear prediction type method. Hudson [3.68] has presented an interesting modification which is related to the Pisarenko method mentioned in Sect. 3.2.5b.
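The classical Prony method can be sketched for one noise-free data vector with $N = 2M$ samples, so that the number of complex data equals the number of unknowns. The sketch solves the linear prediction equations for the polynomial coefficients, takes the polynomial roots as $\exp(j\pi u_i)$, and recovers the amplitudes from a Vandermonde system. The array convention $a_k(u) = \exp(j\pi k u)$, the test directions, and the amplitudes are assumptions. With noisy data this exact fit degrades, which motivates the extended (least-squares) Prony method mentioned above.

```python
import numpy as np


def prony(z, M):
    # Classical Prony fit of M complex exponentials to exactly 2M samples (K = 1 data vector)
    z = np.asarray(z, dtype=complex)
    # Linear prediction equations: sum_j c_j z_{k+j} = -z_{k+M}, k = 0..M-1
    H = np.array([[z[k + j] for j in range(M)] for k in range(M)])
    c = np.linalg.solve(H, -z[M:2 * M])
    # Roots of x^M + c_{M-1} x^{M-1} + ... + c_0 are the exponentials exp(j*pi*u_i)
    roots = np.roots(np.concatenate(([1.0], c[::-1])))
    u = np.angle(roots) / np.pi
    # Amplitudes from the Vandermonde system V[k, i] = roots[i]**k
    V = np.vander(roots, 2 * M, increasing=True).T
    b = np.linalg.lstsq(V, z[:2 * M], rcond=None)[0]
    order = np.argsort(u)
    return u[order], b[order]


# Hypothetical noise-free snapshot of an N = 4 element array with M = 2 targets
u_true, b_true = np.array([-0.2, 0.3]), np.array([1.0, 0.5j])
k = np.arange(4)
z = np.exp(1j * np.pi * np.outer(k, u_true)) @ b_true
print(prony(z, M=2))
```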

Alternating Projection Methods. The minimization of the $M$-dimensional function (3.28) for linear arrays (or the $2M$-dimensional function for planar arrays) can be reduced to a sequence of one-dimensional minimizations, where in each step only one direction is varied and the others are kept fixed. This idea is the basis of the alternating projection method presented in [3.69]. The computation of the function (3.28) is then simplified as follows. By the projection decomposition lemma [3.14], for any partitioning of the columns of the matrix $A = (F, G)$, the projection $P_{CA}$ can be written as

$$
P_{CA} = I - A (A^H A)^{-1} A^H = P_{CG} - P_{CG} F (F^H P_{CG} F)^{-1} F^H P_{CG} ,
$$

where $P_{CG} = I - G (G^H G)^{-1} G^H$. In particular, for $A = (a, A')$, we have

$$
P_{CA} = P_{CA'} - \frac{P_{CA'} a a^H P_{CA'}}{a^H P_{CA'} a} .
$$

If only $a$ is varied, we may maximize the following function $T$ instead of minimizing the function (3.28) or (3.30):

$$
\begin{aligned}
T(w) &= \sum_{k=1}^{K} \frac{a^H P_{CA'} z_k z_k^H P_{CA'} a}{a^H P_{CA'} a} \\
     &= \sum_{k=1}^{K} \frac{|w^H z_k|^2}{w^H w} = K \, \frac{w^H R_{ml} w}{w^H w} ,
\end{aligned}
$$

where $w = P_{CA'} a$. This involves only a beamforming procedure with the weight vector $w$. This form shows that the targets whose directions are held fixed are treated as interference sources and are suppressed by the projection matrix $P_{CA'}$ [3.14]. Alternating projections do not, of course, speed up convergence, and it is questionable whether the alternating projection method saves any operations overall compared with the multivariate gradient methods discussed below.
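A grid-based sketch of the alternating projection idea follows. In each step one direction is replaced by the grid point that maximizes $T$, with the beam weight $w = P_{CA'} a$, while the other directions stay fixed. The grid, the starting values, and the noisy test data are hypothetical. Because each step maximizes over a grid that contains the current value, $S$ in (3.32) cannot decrease. The identity $T = S(a, A') - S(A')$ used below follows from the projection decomposition.

```python
import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i)
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def S(Z, us):
    # (3.32): power of the data in the column space of A(us)
    A = steering_matrix(Z.shape[0], us)
    B = np.linalg.lstsq(A, Z, rcond=None)[0]
    return np.linalg.norm(A @ B) ** 2


def T(Z, u, others):
    # One-direction criterion with beam weight w = P_CA' a(u): sum_k |w^H z_k|^2 / (w^H w)
    N = Z.shape[0]
    a = steering_matrix(N, [u])[:, 0]
    if others:
        Ap = steering_matrix(N, others)
        w = a - Ap @ np.linalg.lstsq(Ap, a, rcond=None)[0]   # P_CA' a
    else:
        w = a
    den = np.real(w.conj() @ w)
    if den < 1e-12:          # a(u) lies in the span of A' (e.g. u equals a fixed direction)
        return -np.inf
    return np.sum(np.abs(w.conj() @ Z) ** 2) / den


def alternating_projection(Z, grid, us_init, sweeps=5):
    # Cyclically re-optimize one direction at a time over the grid, the others held fixed
    us = list(us_init)
    for _ in range(sweeps):
        for i in range(len(us)):
            others = us[:i] + us[i + 1:]
            us[i] = grid[int(np.argmax([T(Z, g, others) for g in grid]))]
    return us


rng = np.random.default_rng(4)
N, K = 8, 6
grid = np.linspace(-0.5, 0.5, 101)
B_true = rng.standard_normal((2, K)) + 1j * rng.standard_normal((2, K))
noise = 0.1 * (rng.standard_normal((N, K)) + 1j * rng.standard_normal((N, K)))
Z = steering_matrix(N, [grid[30], grid[80]]) @ B_true + noise
init = [grid[25], grid[70]]              # hypothetical starting values taken from the grid
est = alternating_projection(Z, grid, init, sweeps=3)
print(est, S(Z, init), S(Z, est))
```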

Newton-Type Methods [3.61, 64, 65]. These are the classical methods for minimizing non-linear functions. Although they need far fewer iterations than a simple steepest-descent gradient algorithm, their direction updates are more complicated to compute. Therefore, simple approximations of the required matrix of second derivatives (the Hessian matrix) are usually used. These methods are only attractive for off-line calculations. For real-time applications we would like to update the estimates with each additional data vector; the method then turns into a stochastic approximation type algorithm.

Stochastic Approximation Methods [3.59]. In this case, we minimize the function (3.28) for $K = 1$ by a gradient search, but use a new data vector $z_k$ for each iteration step. No storage of the data matrix or covariance matrix is required. In addition, it can be shown that this algorithm reduces to a procedure that uses only the outputs of parallel conventional sum and difference beams. This is very useful for phased array radar with digital beamforming. For most applications it represents a considerable reduction in the amount of input data, in particular if coherent integration before superresolution is required. If only one target is present, this stochastic approximation reduces to nulling the difference beam output or, in a modified version, to nulling the monopulse ratio. Because the stochastic approximation can also follow a non-stationary target situation (provided it does not change too fast), this algorithm is also suited for target tracking. In this sense it is adaptive.
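For a single target, the stochastic gradient step can be written with sum and difference beam outputs only. The sketch performs gradient ascent on $f(u) = |a(u)^H z_k|^2 / N$, which equals minimizing (3.28) for $K = M = 1$ up to the constant $\|z_k\|^2$, using a fresh snapshot per step. The derivative is $f'(u) = \frac{2}{N}\,\mathrm{Re}[\Sigma^* \Delta]$ with sum beam $\Sigma = a^H z_k$ and difference beam $\Delta = (\partial a/\partial u)^H z_k$. The array, the constant gain `mu`, the starting value, and the noise-free snapshots with random phases are all assumptions chosen for illustration. At the true direction the difference beam output is zero, so the iteration drives it to a null.

```python
import numpy as np


def sum_diff_beams(z, u):
    # Sum beam a^H z and difference beam d^H z with d = da/du, for an assumed ULA with
    # half-wavelength spacing and phase-centred positions c_k = k - (N-1)/2
    N = z.shape[0]
    c = np.arange(N) - (N - 1) / 2
    a = np.exp(1j * np.pi * c * u)
    d = 1j * np.pi * c * a
    return a.conj() @ z, d.conj() @ z


def stochastic_gradient_track(snapshots, u_start, mu):
    # One gradient step per new snapshot on f(u) = |a(u)^H z_k|^2 / N, i.e. minimizing
    # (3.28) for K = 1 and M = 1; f'(u) = (2/N) Re[conj(sum) * difference]
    N = snapshots.shape[0]
    u = u_start
    for z in snapshots.T:
        s, delta = sum_diff_beams(z, u)
        u += mu * (2.0 / N) * np.real(np.conj(s) * delta)
    return u


# One target at u = 0.1, unit amplitude, random phase per snapshot (noise-free, hypothetical)
N, n_snap, u_target = 8, 200, 0.1
rng = np.random.default_rng(5)
c = np.arange(N) - (N - 1) / 2
phases = np.exp(2j * np.pi * rng.random(n_snap))
snapshots = np.outer(np.exp(1j * np.pi * c * u_target), phases)
print(stochastic_gradient_track(snapshots, u_start=0.18, mu=1e-3))
```

A constant gain is used only to keep the sketch short. As the text notes, the choice of the gain constant is one of the practical problems of the method.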

The problems with stochastic approximation methods are the choice of the gain constant for the updates, the convergence rate, and the starting values for the iteration. These are important problems for automatic processing. For the choice of the gain constant and the starting values, practical solutions can be found in combination with the target number determination described in Sect. 3.2.6c. In principle, the convergence can be accelerated by using the matrix of second derivatives in addition to the simple gradient, i.e., a 'stochastic Newton method'. However, the estimation noise introduced by the stochastic gradient poses some delicate practical problems for this method; in particular, amplifying this noise by multiplication with the inverse of the noisy stochastic Hessian matrix is undesirable. When only one target is present, the stochastic Newton method is the same as the iterated use of the general monopulse formula (3.5) of Sect. 3.2.1, if we maximize $\ln S$ of (3.32) instead of $S$. This shows that the stochastic approximation is a multidimensional extension of conventional angle estimation by monopulse.

A simple method derived from this stochastic approximation is the double-null tracker of White [3.71]. The name refers to the fact that the difference beams in the stochastic gradient are decoupled in the same way as the sum beams [see the interpretation of (3.32)], i.e., we have difference beam patterns with nulls in each assumed target direction.

c) Hypotheses Testing

The target number cannot be found by minimizing (3.26), because this function is monotonically decreasing in M. In the early papers on the PTMF method, there was some doubt that the target number could be uniquely determined. In fact, we have to be careful, because we have to select between nested models: M targets are, of course, equivalent to M + L targets if L targets have vanishing amplitudes.
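A short numerical illustration of why minimization alone cannot fix the target number follows. The minimum of (3.28) over nested models never increases with $M$, because every $M$-target solution can be extended by one more direction without increasing the residual. The sketch computes the grid-restricted minimum for $M = 0, \dots, 3$. Its assumptions are a coarse hypothetical grid, a linear array, and noise-free data from two targets placed on the grid. The residual drops to zero at $M = 2$ and stays there for $M = 3$, so a test, rather than the minimum itself, has to decide where to stop.

```python
import itertools

import numpy as np


def steering_matrix(N, us):
    # Assumed linear array: A[k, i] = exp(j*pi*k*u_i)
    return np.exp(1j * np.pi * np.outer(np.arange(N), us))


def min_Q(Z, grid, M):
    # Minimum of (3.28) over all M-subsets of the grid; M = 0 gives sum_k ||z_k||^2
    if M == 0:
        return np.linalg.norm(Z) ** 2
    best = np.inf
    for us in itertools.combinations(grid, M):
        A = steering_matrix(Z.shape[0], list(us))
        B = np.linalg.lstsq(A, Z, rcond=None)[0]
        best = min(best, np.linalg.norm(Z - A @ B) ** 2)
    return best


N, K = 8, 3
grid = np.linspace(-0.5, 0.5, 21)
rng = np.random.default_rng(2)
B_true = rng.standard_normal((2, K)) + 1j * rng.standard_normal((2, K))
Z = steering_matrix(N, [grid[6], grid[15]]) @ B_true       # two targets, noise-free
q = [min_Q(Z, grid, M) for M in range(4)]
print(q)
```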

The determination of the target number by a sequence of tests of nested hypotheses has been considered in [3.59]. The hypothesis "the target number is $M$" is then characterized by the statement that the expectations of all data vectors lie in the linear space spanned by the columns of the matrix $A \in \mathbb{C}^{N \times M}$ (the linear hull of $A$):

$$
E\{(z_1^T, \dots, z_K^T)^T\} \in H_M := \bigcup_{w \in \Omega} \mathrm{LinH}(A)^K ,
$$

where $\Omega$ is a set of admissible directions. These hypotheses are nested:

$$
H_1 \subset H_2 \subset \dots \subset H_{N-1} \subset H_N = \mathbb{C}^{NK} .
$$

Likelihood Ratio Test [3.59] and Chap. 2. Starting with $H_1$, we can now perform a sequence of likelihood ratio tests of $H_M$ versus $K_M = \mathbb{C}^{NK} \setminus H_M$ $(M = 1, \dots, M_{max})$. The likelihood ratio is by definition

$$
T_M = \frac{\sup_{(s_1, \dots, s_K) \in \mathbb{C}^{NK}} p(z_1, \dots, z_K; s_1, \dots, s_K)}{\sup_{(s_1, \dots, s_K) \in H_M} p(z_1, \dots, z_K; s_1, \dots, s_K)} ,
$$

and $2 \ln(T_M)$ is asymptotically $\chi^2$-distributed with $2N - 3M$ and $2N - 4M$ degrees of freedom for a linear and a planar antenna, respectively. We can thus construct tests that asymptotically all have the same bound on the error probability. We stop the sequence of tests as soon as we decide on $H_M$ for the first time. If $M$ is small compared to $N$, we have to perform a minimum number of