Narayanan V.K., Armstrong D.J. - Causal Mapping for Research in Information Technology (2005)(en)


330 Iandoli and Zollo

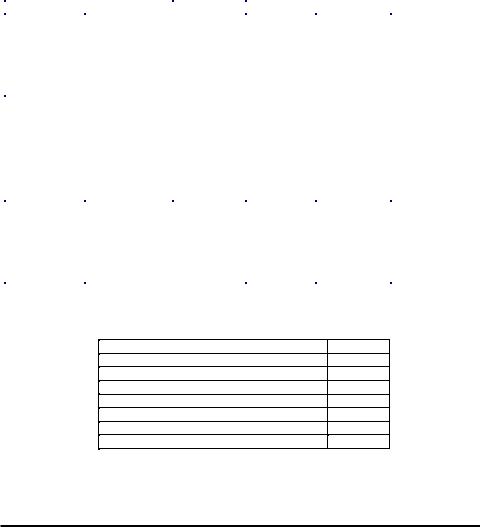

Table 5. Differences and similarities in concept relevance

| | Interview A | Interview B | Interview C | Interview D | Interview E |
|---|---|---|---|---|---|
| Top four relevant concepts as declared in the interview | Requirements; Costs; Time; Quality | Requirements; Time; Competencies; Costs; Quality | Requirements; Time; Costs | Requirements; Time | Requirements; Time; Costs; Relationship with the customer; Competencies; Quality |
| Top four relevant concepts as emerged from domain analysis | Costs; Time; Quality (perceived performance); Relationship with the customer; Control; Risk | Time; Risk; Requirements; Quality; Competencies | Budget flexibility; Risk; Team; Requirements; Time | Requirements; Quality | Requirements; Time; Costs; Quality; Project fragmentation; Competencies |
| Top four relevant concepts as emerged from centrality analysis | Time; Risk; Costs; Control | Risk; Time; Requirements; Dimension; Competencies | Budget flexibility; Risk; Requirements | Requirements; Time | Project fragmentation; Quality; Budget; Time; Requirements; Competencies |

Table 6. Concept importance calculation

| Concept | Importance |
|---|---|
| Requirements | 21% |
| Costs | 17% |
| Time | 20% |
| Quality | 10% |
| Risk | 17% |
| Relationship with the customers | 2% |
| Competencies | 8% |
| Dimension | 5% |

Data Validation

Validation of the results was carried out in two steps. First, the coding and mapping steps were performed independently by each member of the research team for the same interview. Coding and mapping results were then compared, discussed and homogenized with the research team. A research report containing the results was sent to the interviewees and discussed with each of them separately. The aim of the discussion was to verify if the interviewees recognized in the map an adequate representation of their ideas. The concepts dictionary was validated in the same way.

Furthermore, a subsequent group discussion of the results was organized to construct a shared dictionary of the concepts, in which each concept was defined so as to include or improve on individual contributions.

Copyright © 2005, Idea Group Inc. Copying or distributing in print or electronic forms without written permission of Idea Group Inc. is prohibited.

Knowledge at Work in Software Development 331

Implications for Decision Support

A Simple DSS For Life-Cycle Selection

In this section we examine how to employ the results obtained from the analysis presented in the previous section to develop a simple DSS for the problem of life-cycle selection. In particular, results emerging from the analysis were integrated into a DSS for life-cycle selection adapted from McConnell’s approach (McConnell, 1996). McConnell’s approach is based on the definition of a selection matrix S = [s_ij], reported in the Appendix.

The table allows software project managers to compare a set of alternative development models, reported in the matrix columns, with respect to a set of evaluation criteria reported in the rows. Life-cycle models reported in the table can be well-known models drawn from the literature or customized models developed by a company from its own know-how and past experience. The same applies to the evaluation criteria, whose list can be modified and extended depending on the context of the application.

The value s_ij is a verbal evaluation assessing the capability of the j-th model to satisfy the i-th criterion. Such evaluations are the result of the analysis of the strengths and weaknesses of each model. The set of judgments contained in each column can be considered the description of the ideal case in which the corresponding life-cycle model should be used. For example, one should use the spiral model if: a) requirements and architecture are very ambiguously defined, b) excellence in reliability, large growth envelope, and capability to manage risks is requested, c) respect for an extremely tight predefined schedule is not required, d) overhead is low, and e) the customer needs excellent visibility on progress.

McConnell suggests that decision-makers evaluate a given project according to the criteria contained in the table and then select the alternative that best fits the characteristics of the specific project.
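As a rough illustration of the structure just described, the selection matrix can be held as a mapping from each life-cycle model (a column) to its verbal judgment per criterion (a row). The sketch below does not reproduce McConnell's table: the matrix entries, the `VERBAL_SCALE` mapping and the helpers `ideal_profile` and `add_criterion` are hypothetical names and values chosen for illustration, and the five-point wording is assumed rather than taken from the Appendix.

```python
# Assumed mapping from verbal judgments to a five-point numeric scale.
VERBAL_SCALE = {
    "poor": 1,
    "poor to average": 2,
    "average": 3,
    "average to excellent": 4,
    "excellent": 5,
}

# HYPOTHETICAL excerpt of a selection matrix S = [s_ij]:
# outer keys are life-cycle models (columns j), inner keys are criteria (rows i).
SELECTION_MATRIX = {
    "Waterfall": {
        "Works with poorly understood requirements": "poor",
        "Produces highly reliable systems": "excellent",
        "Manages risks": "poor",
    },
    "Spiral": {
        "Works with poorly understood requirements": "excellent",
        "Produces highly reliable systems": "excellent",
        "Manages risks": "excellent",
    },
}


def ideal_profile(matrix, model):
    """Return one column as numbers: the ideal case for using `model`."""
    return {criterion: VERBAL_SCALE[judgment]
            for criterion, judgment in matrix[model].items()}


def add_criterion(matrix, criterion, judgments):
    """Add a new row; `judgments` must give a verbal value for every model."""
    if set(judgments) != set(matrix):
        raise ValueError("one judgment is required for each model in the matrix")
    for model, judgment in judgments.items():
        matrix[model][criterion] = judgment


print(ideal_profile(SELECTION_MATRIX, "Spiral"))
```

Keeping the verbal labels in the matrix and converting them only when a profile is read keeps the table legible to managers while still allowing numeric comparison.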

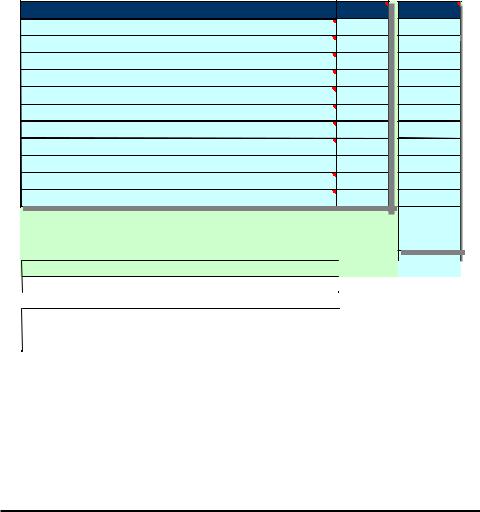

Starting from the Appendix table and using the results obtained from the field analysis, a simple tool to support decision-makers in the selection of the most suitable life cycle was built. The tool allows its users to define a selection matrix, to add life-cycle models and evaluation criteria, and to establish weights for evaluation criteria. The input and output interfaces of the DSS are illustrated in Figure 5.

To identify the best life cycle for a given project, users are asked to evaluate each criterion by assessing the characteristics of the project on a Likert scale ranging from one to five. In the example shown in Figure 5, the evaluator is saying that, for the given project, the capability of the life-cycle model to cope with a poor definition of requirements and architecture should be poor to average, the capability to ensure high reliability should be excellent, etc. The user is also required to assign weights representing criteria importance, expressed as percentages and then normalized. The algorithm then calculates a score for each model stored in the selection matrix; the score represents the distance between the profile of the considered project, described in terms of the evaluated criteria, and the ideal profile corresponding to each model. Consequently, the model with the lowest score should be selected as the best one for the given project. In the example shown in Figure 5, the numbers 1.92, 1.65 and 1.24 represent the distances between the profile of the given project, described in the column “Evaluation,” and the profiles of, respectively, the waterfall, the evolutionary prototyping and the spiral model as contained in the selection matrix. On the basis of these results the best choice is the spiral model.

Figure 5. Input/output interface of a DSS for life-cycle selection

| Evaluation criterion | Evaluation | Weights |
|---|---|---|
| Works with poorly understood requirements | 2 | 20% |
| Works with poorly understood architecture | 2 | 5% |
| Produces highly reliable systems | 5 | 15% |
| Produces system with large growth envelope | 5 | 5% |
| Manages risks | 5 | 8% |
| Can be constrained to a predefined schedule | 5 | 2% |
| Has low overhead | 4 | 14% |
| Allows for midcourse corrections | 4 | 15% |
| Requires little manager or developer | 4 | 12% |
| Provides customer with progress | 5 | 3% |
| Provides management with progress | 5 | 1% |
| Total | | 100% |

Scale: 1 = poor; 2 = poor to average; 3 = average; 4 = average to excellent; 5 = excellent.

| Waterfall | Evolutionary | Spiral |
|---|---|---|
| 1.92 | 1.65 | 1.24 |

On the basis of your judgements, the best life cycle model for your project is: Spiral model
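The scoring step described above can be sketched as follows. The chapter does not state the distance formula, so a weighted Euclidean distance is assumed here. The evaluations and weights are those entered in Figure 5, but the ideal profiles in `IDEAL_PROFILES` are hypothetical placeholders, not the Appendix values; the function names are likewise illustrative.

```python
import math

# Evaluations (1-5) and weights (%) as entered in Figure 5, in row order.
PROJECT = [2, 2, 5, 5, 5, 5, 4, 4, 4, 5, 5]
WEIGHTS = [20, 5, 15, 5, 8, 2, 14, 15, 12, 3, 1]

# HYPOTHETICAL ideal profiles (one value per criterion, same row order).
# In the real tool these would come from the selection matrix in the Appendix.
IDEAL_PROFILES = {
    "Waterfall":    [1, 1, 5, 5, 1, 3, 1, 1, 3, 1, 3],
    "Evolutionary": [5, 3, 3, 5, 3, 1, 3, 5, 1, 5, 3],
    "Spiral":       [5, 5, 5, 5, 5, 3, 3, 3, 1, 5, 5],
}


def weighted_distance(project, ideal, weights):
    """Weighted Euclidean distance (assumed formula); weights are normalized."""
    total = sum(weights)
    squared = sum(w / total * (p - s) ** 2
                  for p, s, w in zip(project, ideal, weights))
    return math.sqrt(squared)


def rank_models(project, weights, profiles):
    """Return (model, score) pairs sorted from closest to farthest profile."""
    scores = {model: weighted_distance(project, ideal, weights)
              for model, ideal in profiles.items()}
    return sorted(scores.items(), key=lambda item: item[1])


ranking = rank_models(PROJECT, WEIGHTS, IDEAL_PROFILES)
for model, score in ranking:
    print(f"{model}: {score:.2f}")
print("Suggested life cycle:", ranking[0][0])
```

Because both the profiles and the distance formula are placeholders, the printed scores are not expected to match the values shown in Figure 5; only the selection rule (lowest distance wins) follows the text.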

Causal Map Analysis For DSS Improvement

The analysis of causal maps helps in improving the meaningfulness and the reliability of the DSS presented above. The in-depth analysis performed through causal maps can help companies to elicit potentially useful unshared knowledge at the individual as well as at the team level. Such knowledge can be discussed and analyzed through the methodology presented in this chapter. Eventually, outputs of the analysis can be integrated into the decision support tool described above in the following ways:

a) construction of a better (i.e., richer and more complete) definition of evaluation criteria: concept dictionary analysis and group discussion can help researchers identify evaluation criteria on the basis of the experience of developers through the integration of different points of view; existing criteria can be updated and new criteria can be added as new experience is gained;


b) reduction of ambiguity in the meaning of evaluation criteria: comparison of individual maps and the dictionary can be used to identify possible discrepancies in the meaning attributed to the same evaluation criterion by different developers; as shown in the examples presented in the fifth section, this situation can occur frequently. Through the methodology presented in the fourth and fifth sections, those discrepancies can be elicited; different interpretations can be integrated into more comprehensive ones, while incoherence and conflicts can be analyzed and discussed in depth. Analysis through discussion and self-reflection increases knowledge sharing, people’s involvement, and the participation of team members in the decision-making process concerning project development;

c) assessment of criteria relevance through calculation of weights representing criteria importance: quantitative analysis of causal maps permits the estimation of criteria importance through weights that are more reliable than weights expressed in a direct way, since they take into account concept relevance in the considered domain of analysis and causal patterns between them.
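Point c) can be illustrated with a minimal sketch that derives normalized weights from a causal map. It assumes that a concept's domain score is simply the number of causal links entering or leaving it, which is a common reading of domain analysis; the procedure actually used to obtain Table 6 is not reproduced here. The map `CAUSAL_MAP` and the function `domain_weights` are hypothetical.

```python
from collections import Counter


def domain_weights(edges):
    """Share of all link endpoints that touch each concept (assumed measure)."""
    degree = Counter()
    for cause, effect in edges:
        degree[cause] += 1   # link leaving the cause
        degree[effect] += 1  # link entering the effect
    total = sum(degree.values())
    if total == 0:
        return {}
    return {concept: count / total for concept, count in degree.items()}


# HYPOTHETICAL causal map given as (cause, effect) pairs.
CAUSAL_MAP = [
    ("Requirements", "Time"),
    ("Requirements", "Costs"),
    ("Time", "Costs"),
    ("Risk", "Time"),
    ("Quality", "Costs"),
]

weights = domain_weights(CAUSAL_MAP)
for concept, weight in sorted(weights.items(), key=lambda item: -item[1]):
    print(f"{concept}: {weight:.0%}")
```

Weights obtained this way sum to one, so they can be used directly as the criteria weights of the DSS, or compared with the weights that interviewees declare directly.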

Evaluations can be expressed by the project manager or through a group discussion. It is also possible to implement multi-person aggregation algorithms to collect the opinions of different experts separately and then aggregate them. Evaluation sessions can be stored in a database and re-used in similar situations according to a case-based reasoning approach (Schanck, 1986; Kolodner, 1991), with ex post comments about the validity of the choice made. Over time, the conjoint application of causal mapping and DSS can allow companies to store knowledge and past experiences that can be continuously revised and updated.
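A minimal sketch of these two ideas, under stated assumptions: expert scores are aggregated criterion by criterion with the median (one of many possible aggregation rules, not one prescribed by the chapter), and a stored session is retrieved by plain Euclidean distance between profiles. The data, the record fields and the function names are all hypothetical.

```python
from statistics import median


def aggregate_evaluations(expert_scores):
    """Combine separately collected expert scores, one median per criterion."""
    return [median(column) for column in zip(*expert_scores)]


def most_similar_case(profile, case_base):
    """Return the stored session whose project profile is closest to `profile`."""
    def distance(case):
        return sum((a - b) ** 2
                   for a, b in zip(profile, case["profile"])) ** 0.5
    return min(case_base, key=distance)


# HYPOTHETICAL scores from three experts on three criteria (1-5 scale).
experts = [
    [2, 5, 4],
    [3, 5, 5],
    [1, 4, 4],
]
group_profile = aggregate_evaluations(experts)

# HYPOTHETICAL case base: past sessions with the choice made and an ex post comment.
CASE_BASE = [
    {"profile": [2, 5, 4], "chosen_model": "Spiral",
     "comment": "choice judged valid after delivery"},
    {"profile": [5, 2, 1], "chosen_model": "Waterfall",
     "comment": "schedule slipped; choice questioned"},
]

closest = most_similar_case(group_profile, CASE_BASE)
print(group_profile, closest["chosen_model"], closest["comment"])
```

The median is used here only because it is robust to a single outlying expert; a weighted mean or a consensus procedure would fit the same interface.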

Conclusions and Implications: Knowledge Management through a Cognitive Approach

The adoption of formal methodologies for software development contributes substantially to improving software development projects in terms of both efficiency and effectiveness. Nevertheless, such methodologies are often perceived as constraining and limiting developers’ knowledge and capabilities. As a consequence, promised benefits are not achieved if developers resist their adoption. This chapter shows that an investigation of the development process in its early stage from a cognitive point of view can:

a) reduce the degree of resistance through involvement and consensus policy in the choice phase;

b) help to identify in advance multiple meanings and interpretations used by team members and possible conflicts arising from such differences;

c) enrich team knowledge and competencies through multiple interpretations;


d) resolve differences and possible divergences through internal discussions or through training initiatives;

e) provide team members with deeper knowledge, higher visibility of the process and a higher level of awareness of the problems in a specific development process.

As outlined in the introduction of this chapter, by eliciting and mapping individual knowledge, the methodology shows how it is possible to:

a) Identify critical factors that impact the success of new projects;

b) Compare different individual interpretations represented through causal maps concerning the meaning and the importance of choice variables, to verify the existence of overlapping perceptions, shared beliefs and conflicting interpretations;

c) Analyze individual knowledge and use the results of such analysis for the design of more effective decision support tools for software life cycle selection.

Critical factors are elicited through interviews and then represented through causal maps.

The concept dictionary contains the description of the meaning of each variable. Centrality and domain analysis help to identify the most critical and recurring issues as perceived by team members. Finally, individual map comparisons permit us to identify those factors that have different and divergent meanings according to different individuals. Therefore one may say that critical factors are such because of their relevance, and because of the presence of several, multifaceted and/or conflicting individual interpretations.

Representing developers’ discourse through causal maps provides analysts with an immediate tool to compare how individuals frame the same problem, and how complex and articulated the structure of their reasoning is in the given context of analysis. The number of concepts, the number and kind of connections, and the low or high presence of feedback are structural characteristics that are easy to identify in causal maps. Differences in the relevance of a concept can be calculated through centrality and domain analysis. The comparison of different concept dictionaries can help to identify differences in meaning.

Finally, the analysis of individual knowledge obtained through causal maps can be used to improve the design of DSS in software development, as shown in this chapter. The basic idea is to integrate the formal and explicit knowledge contained in a DSS with human expert knowledge, which is usually situated, highly contextualized, unshared and tacit. Through the proposed approach this could be done in several ways (e.g., by adding new evaluation criteria or new life-cycle models, by redefining criteria weights, or by merging different individual interpretations into more complete and richer definitions of the criteria’s meaning).

At a first level of intervention, the in-depth analysis carried out using causal mapping can be used to improve the reliability and meaningfulness of the system, as shown in the case of McConnell’s approach. At a more sophisticated level, the use of causal mapping can be considered a fundamental step in the cognitive approach to knowledge management in software development. What causal mapping can actually help to do is elicit and model expert knowledge to construct formal and manageable representations of individual knowledge. Such representations can be used for descriptive purposes and analyzed to improve the design of formal decision support tools. What really matters is that, from this perspective, decision support tools can be conceived as open platforms which can be continuously revised, updated and enlarged through knowledge elicitation and mapping, as individual and organizational knowledge gradually increase thanks to new experience and know-how. For instance, a simple tool like McConnell’s table could be transformed into a knowledge base for a DSS by storing knowledge over time (e.g., by adding new evaluation criteria and new development models, revising weights, and reformulating the meaning of evaluation criteria).

A remarkable advantage offered by causal mapping is that knowledge elicitation and mapping is actually obtained through a strong involvement of employees and managers. This brings about many advantages not strictly related to decision support issues such as:

a) people’s involvement may increase employee motivation;

b) companies gain more knowledge about how things actually work in their organization, i.e., they can investigate the theory-in-use and detect possible discrepancies with the organizational espoused theory contained in formal procedures, documentation and organizational charts (Argyris & Schon, 1978);

c) divergent or conflicting interpretations can be elicited and analyzed;

d) group discussions give team members an occasion to reflect on their problem framing and to compare their opinions, cognitive schemata and attitudes with those of other team members;

e) improvements in training and learning can be obtained.

Lessons Learned

The methodology and the case study presented above can be further discussed to highlight both conceptual and theoretical implications for knowledge management and learning in knowledge-intensive organizations, as well as in terms of practical implications for the management of software projects.

Theoretical Contribution: Causal Maps as Tools To Investigate Organizational Learning

In the proposed approach, the emphasis is on the problem-setting stage in the decision-making process, when managers must identify key issues and alternative evaluation approaches for the development of a new software project.


This stage can be investigated through the analysis of formal processes used by the organization for life-cycle selection (espoused theory, following Argyris and Schön’s terminology) and the actual way in which the selection is implemented (theory-in-use). The espoused theory and the theory-in-use do not always coincide, and it is important to understand the reasons for such a discrepancy. Describing the espoused theory, explicit by definition, and the theory-in-use, very often tacit and not easy to formalize, means mapping the organizational knowledge employed by the organization in the life-cycle selection process.

Knowledge engineering approaches (Schreiber et al., 2000), causal mappings and studies on organizational cognition (Weick, 1976, Huff, 1990, Eden & Ackermann, 1996) can offer theoretical as well as methodological contributions to support organizational knowledge mapping.

From this perspective, life-cycle selection, like any organizational evaluation, is a product of the organizational knowledge, here intended as the combination of formal espoused theories and idiosyncratic situated theories-in-use. If the analysis of official organizational procedures can shed light on the espoused theory, causal mapping can help to elicit the theory-in-use and, at least to a certain extent, to evaluate how and in which sense it differs from the espoused theory.

An in-depth analysis through causal maps can help to elicit the theory in use, very often only partially shared and more or less tacit, to compare different theories in use, and to incorporate such knowledge into formal tools, such as DSS, and procedures, in other words into the espoused theory.

When this occurs one might accept that an organization has updated its knowledge, i.e., organizational learning has occurred. Even if issues such as what is organizational learning, how and if it actually happens, etc., are largely debated and questioned in the literature, the contribution to such a debate advanced in this chapter and offered as reflection for the reader is that causal maps can be a methodological tool to investigate organizational learning, especially in knowledge-intensive organizations such as software companies.

In particular, the process of software development, involving an intense level of decision-making activity both at the individual as well as at the organizational level, can be investigated within this framework. For instance, the selection of a life-cycle model and the way through which such choice occurs is actually influenced by the existing theory-in-use and the espoused theory about “how to develop the project given certain constraints.”

Summing up, the methodological implications deriving from such a theoretical perspective can be condensed in terms of the following recommendations:

a) both the theory-in-use and the espoused theory about how the organization makes a decision should be analyzed;

b) theory-in-use can be elicited and analyzed through causal maps by asking people to explain how they usually make a decision, which variables influence their choice, what is the meaning of these variables, etc.;


c) useful implications for the design of the “right” selection method can be drawn from such an analysis.

Managerial Implications

Increased reliability and meaningfulness of decision support tools, together with organizational analysis and self-reflection, can be considered the added value obtainable through a cognitive approach to knowledge management in software development. The methodology proposed in this chapter, by combining a cognitive approach with a decision-support system orientation, can help organizations in facilitating or enabling knowledge elaboration and creation. With reference to the well-known Nonaka and Takeuchi terminology (1995), the proposed methodology can contribute to achieving this result through the following mechanisms:

a) Knowledge socialization through group discussion;

b) Knowledge externalization through causal maps;

c) Combination of implicit knowledge contained in interviews and explicit knowledge contained in manuals, procedures and DSS;

d) Internalization, through self-reflection.

Group discussion for data validation and comparison facilitates individual knowledge sharing and hence knowledge socialization (causal maps can also be constructed through a group interview, an approach not attempted in this chapter). Interview coding and mapping help knowledge externalization through the formal representation obtained with coding sheets, concept dictionaries, and above all causal maps. Combination can be favored by integrating results from different sources of explicit knowledge, such as existing DSS, formal methodologies for software development and new explicit knowledge contained in individual causal maps; group maps could also be constructed by combining individual knowledge. Finally, causal maps facilitate internalization in two ways. First, by allowing individuals to analyze formal representations of their problem-framing and problem-solving strategies, maps play a reflexive function, inducing individuals to actively reflect on and re-evaluate their ways of thinking with respect to a specific context of action. Second, once elicited knowledge is integrated into a new DSS, it is then critically reviewed and applied by individuals in everyday practice.

Acknowledgements

The authors wish to thank Valerio Teta and Mario Capaccio for their support in the field analysis and for their suggestions and collaborations during analysis of the results, and Vincenzo D’Angelo who participated in the field research. The authors also wish to thank the anonymous reviewers for their valuable suggestions and contributions.


Notes

Even though the paper is the fruit of the collaboration of the authors, in this version Sections 2, 4, 5 and 6 are by Luca Iandoli, Sections 1 and 3 are by G. Zollo, and the rest is work common to both authors.

References

Allison, G. (1971). Essence of decision: Explaining the Cuban missile crisis. Boston, MA: Little Brown.

Argyris, C., & Schon, D.A. (1978). Organizational learning. A theory of action perspective. Reading, MA: Addison-Wesley.

Axelrod, R. (Ed.) (1976). Structure of decision: The cognitive maps of political elites. Princeton, NJ: Princeton University Press.

Blackler, F. (2002). Knowledge, knowledge work and organizations. In C.W. Choo and N. Bontis (Eds.), The strategic management of intellectual capital and organizational knowledge. Oxford: Oxford University Press.

Boar, B. (1984). Application prototyping. New York: Wiley and Sons.

Boehm, B. (1988). A spiral model for software development and enhancement. Computer, 5, 61-72.

Boehm, B. (1981). Software engineering economics. Englewood Cliffs, NJ: Prentice Hall.

Boehm, B., & Basili, V. (2000, May). Gaining intellectual control of software development. IEEE Computer, 27-33.

Booch, G., Jacobson, I., & Rumbaugh, J. (1999). The unified software development process. Reading, MA: Addison-Wesley.

Bradac, M., Perry, D., & Votta, L. (1994). Prototyping a process monitoring experiment. IEEE Transactions on Software Engineering, 10, 774-784.

Brown, J., & Duguid, P. (1991). Organizational learning and communities-of-practices: Toward a unified view of working, learning, and innovation. Organization Science, 2(1), 40-57.

Calori, R. (2000). Ordinary theorists in mixed industries. Organizational Studies, 6, 1031-1057.

Capaldo, G., & Zollo, G. (2001). Applying fuzzy logic to personnel assessment: a case study. Omega, 29, 585-597.

Choo, C.W. (1998). The knowing organization. Oxford: Oxford University Press.

Choo, C.W., & Bontis, N. (2002). The strategic management of intellectual capital and organizational knowledge. Oxford: Oxford University Press.

Crozier, M. (1964). The bureaucratic phenomenon. London: Tavistock.


Cyert, R., & March, J.G. (1963). A behavioral theory of the firm. Englewood Cliffs, NJ: Prentice Hall.

Daft, R.L., & Weick, K. (1984). Toward a model of organization as interpretation systems. Academy of Management Review, 2, 284-295.

Davis, F. (1989). Perceived usefulness, perceived ease of use and user acceptance of information technology. Management Information Systems Quarterly, 13, 318-339.

Eden, C., & Ackermann, F. (1992). The analysis of causal map. International Journal of Management Studies, 29, 310-324.

Fletcher, K.E., & Huff, A.S. (1990). Argument Mapping. In A.S. Huff (Ed.), Mapping strategic thought. Chichester: Wiley and Sons.

Gainer, J. (2003). Process improvement: The capability maturity model. Retrieved from the World Wide Web at: http://www.itmweb.com/f051098.htm

Galambos, J.A., Abelson, R.P., & Black, J.B. (1986). Knowledge structures. Hillsdale, NJ: Lawrence Erlbaum.

Huff, A.S. (Ed.) (1990). Mapping strategic thought. Chichester: Wiley.

Humphrey, W.S. (1989). Managing the software process. Reading, MA: Addison-Wesley.

Jones, C. (1991). Applied software measurements. New York: McGraw Hill.

Kosko, B. (1992). Neural networks and fuzzy systems. Englewood Cliffs, NJ: Prentice Hall.

March, J.G. (1988). Decisions and organizations. Oxford: Blackwell.

Matson, J., Barret, B., & Mellichamp, J. (1994). Software development cost estimation using function point. IEEE Transactions on Software Engineering, 4, 275-287.

McClure, C. (1989). CASE is software automation. Englewood Cliffs, NJ: Prentice-Hall.

McConnell, S. C. (1996). Rapid development: Taming wild software schedules. Redmond, WA: Microsoft Press.

Mintzberg, H., Raisinghani, D., & Theoret, A. (1976). The structure of “unstructured” decision processes. Administrative Science Quarterly, 21, 246-274.

Nelson, K. M., Nadkarni, S., Narayanan, V. K., & Ghods, M. (2000). Understanding software operations support expertise: A causal mapping approach. Management Information Systems Quarterly, 24, 475-507.

Nicolini, D., & Meznar, M.B. (1995). The social construction of organizational learning: conceptual and practical issues in the field. Human Relations, 7, 727-746.

Nisbett, R., & Ross, L. (1980). Human inference: Strategies and shortcomings of social judgement. Englewood Cliffs, NJ: Prentice Hall.

Nonaka, I., & Takeuchi, H. (1995). The knowledge creating company. Oxford: Oxford University Press.

Paulk, M.C., Curtis, B., Chrissis, M.B., & Weber, C.V. (1993). Capability maturity model for software version 1.1, Report CMU/SEI-93-TR-24, Software Engineering Institute, Carnegie Mellon University, Pittsburgh, PA.