Теория информации / Cover T.M., Thomas J.A. Elements of Information Theory. 2006., 748p

.pdf11.5 EXAMPLES OF SANOV’S THEOREM |

365 |

From (11.110), it follows that P has the form

|

|

|

|

|

P (x) = |

2λx |

|

, |

(11.113) |

|

|

|

|

|

6 |

λi |

|||

= |

|

|

= 0 |

|

i=1 |

2 |

|

numerically, we obtain |

|

with |

λ |

chosen |

so that |

iP (i) = 4. Solving |

|||||

λ |

0.2519, P |

|

( .1031, 0.1227, 0.1461, 0.1740, 0.2072, 0.2468), and |

||||||

|| |

Q) |

= |

|

624 |

therefore D(P |

|

0.0624 bit. Thus, the probability that the average |

||

of 10000 throws is greater than or equal to 4 is ≈ 2− . |

||||

Example 11.5.2 |

(C oins) |

Suppose that we have a fair coin and want |

||

to estimate the probability of observing more than 700 heads in a series of 1000 tosses. The problem is like Example 11.5.1. The probability is

P (Xn ≥ 0.7) = 2− |

nD(P |

|| |

|

, |

(11.114) |

||

|

|

. |

|

Q) |

|

|

|

where P is the (0.7, 0.3) distribution and Q is the (0.5, 0.5) distribution. In this case, D(P ||Q) = 1 − H (P ) = 1 − H (0.7) = 0.119. Thus, the probability of 700 or more heads in 1000 trials is approximately 2−119.

Example 11.5.3 (Mutual dependence) Let Q(x, y) be a given joint distribution and let Q0(x, y) = Q(x)Q(y) be the associated product distribution formed from the marginals of Q. We wish to know the likelihood that a sample drawn according to Q0 will “appear” to be jointly distributed according to Q. Accordingly, let (Xi , Yi ) be i.i.d. Q0(x, y) = Q(x)Q(y). We define joint typicality as we did in Section 7.6; that is, (xn, yn) is jointly typical with respect to a joint distribution Q(x, y) iff the sample entropies are close to their true values:

|

− n |

− |

|

≤ |

, |

|

(11.115) |

||||

|

|

|

1 log Q(xn) |

|

H (X) |

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

− n |

− |

|

≤ |

, |

|

(11.116) |

||||

|

|

|

1 log Q(yn) |

|

H (Y ) |

|

|

||||

|

|

|

|

|

|

|

|

|

|

|

|

and |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

− n |

|

|

− |

|

|

|

≤ |

. |

(11.117) |

||

|

1 log Q(xn, yn) |

|

H (X, Y ) |

|

|||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

We wish to calculate the probability (under the product distribution) of seeing a pair (xn, yn) that looks jointly typical of Q [i.e., (xn, yn)

11.6 CONDITIONAL LIMIT THEOREM |

367 |

E

P

P *

Q

FIGURE 11.5. Pythagorean theorem for relative entropy.

number of terms. Since the number of terms is polynomial in the length of the sequences, the sum is equal to the largest term to first order in the exponent.

We now strengthen the argument to show that not only is the probability of the set E essentially the same as the probability of the closest type P but also that the total probability of other types that are far away from P is negligible. This implies that with very high probability, the type observed is close to P . We call this a conditional limit theorem.

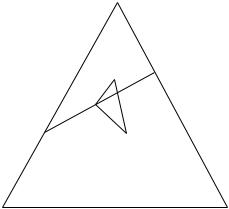

Before we prove this result, we prove a “Pythagorean” theorem, which gives some insight into the geometry of D(P ||Q). Since D(P ||Q) is not a metric, many of the intuitive properties of distance are not valid for D(P ||Q). The next theorem shows a sense in which D(P ||Q) behaves like the square of the Euclidean metric (Figure 11.5).

Theorem 11.6.1 For a closed convex set E P and distribution Q / E, let P E be the distribution that achieves the minimum distance to Q; that is,

D(P |

|| |

= P E |

|| |

(11.121) |

Q) |

min D(P |

Q). |

||

|

|

|

|

|

Then |

|

|

|

|

D(P ||Q) ≥ D(P ||P ) + D(P ||Q) |

(11.122) |

|||

for all P E.

368 INFORMATION THEORY AND STATISTICS

Note. The main use of this theorem is as follows: Suppose that we have a sequence Pn E that yields D(Pn||Q) → D(P ||Q). Then from the Pythagorean theorem, D(Pn||P ) → 0 as well.

Proof: Consider any P E. Let |

|

Pλ = λP + (1 − λ)P . |

(11.123) |

Then Pλ → P as λ → 0. Also, since E is convex, Pλ E for 0 ≤ λ ≤ 1. Since D(P ||Q) is the minimum of D(Pλ||Q) along the path P → P , the derivative of D(Pλ||Q) as a function of λ is nonnegative at λ = 0. Now

|

|

|

|

|

|

Dλ = D(Pλ||Q) = Pλ(x) log |

Pλ(x) |

(11.124) |

|||||||||||||||||||||

|

|

|

|

|

|

Q(x) |

|

|

|

|

|||||||||||||||||||

and |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

dDλ |

|

(P (x) |

− |

P |

(x)) log |

Pλ(x) |

|

+ |

(P (x) |

− |

P (x)) . |

(11.125) |

||||||||||||||||

|

dλ |

|

Q(x) |

||||||||||||||||||||||||||

|

= |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||

Setting λ = 0, so that Pλ |

= |

P and using the fact that P (x) |

P |

||||||||||||||||||||||||||

(x) |

= |

1, we have |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

= |

||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

0 |

≤ |

|

dDλ |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(11.126) |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||

|

|

|

|

|

dλ |

λ=0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

|

|

|

|

= (P (x) − P (x)) log |

P (x) |

|

|

|

|

|

|

|

|

(11.127) |

|||||||||||||||

|

|

|

|

Q(x) |

|

|

|

|

|

|

|

|

|||||||||||||||||

|

|

|

|

= P (x) log |

P (x) |

|

|

|

P (x) |

|

|||||||||||||||||||

|

|

|

|

|

|

|

− P (x) log |

|

|

|

|

(11.128) |

|||||||||||||||||

|

|

|

|

Q(x) |

Q(x) |

||||||||||||||||||||||||

|

|

|

|

= P (x) log |

P (x) P (x) |

P (x) log |

P (x) |

(11.129) |

|||||||||||||||||||||

|

|

|

|

|

|

|

− |

|

|

||||||||||||||||||||

|

|

|

|

Q(x) |

P (x) |

Q(x) |

|||||||||||||||||||||||

|

|

|

|

= D(P ||Q) − D(P ||P ) − D(P ||Q), |

|

|

|

|

|

|

(11.130) |

||||||||||||||||||

which proves the theorem. |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||

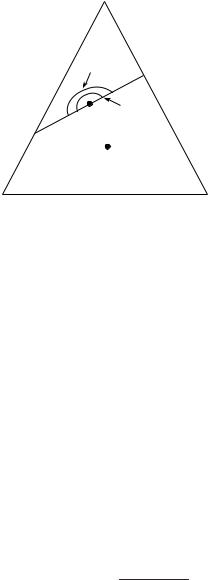

Note that the relative entropy D(P ||Q) behaves like the square of the Euclidean distance. Suppose that we have a convex set E in Rn. Let A be a point outside the set, B the point in the set closest to A, and C any

11.6 CONDITIONAL LIMIT THEOREM |

369 |

A

B

C

FIGURE 11.6. Triangle inequality for distance squared.

other point in the set. Then the angle between the lines BA and BC must be obtuse, which implies that lAC2 ≥ lAB2 + lBC2 , which is of the same form as Theorem 11.6.1. This is illustrated in Figure 11.6.

We now prove a useful lemma which shows that convergence in relative entropy implies convergence in the L1 norm.

Definition The L1 distance between any two distributions is defined as

||P1 − P2||1 = |P1(a) − P2(a)|. |

(11.131) |

|

a X |

|

|

Let A be the set on which P1(x) > P2(x). Then |

|

|

||P1 − P2||1 = |P1(x) − P2(x)| |

|

(11.132) |

x X |

|

|

= (P1(x) − P2(x)) + c (P2(x) − P1(x)) |

(11.133) |

|

x A |

x A |

|

= P1(A) − P2(A) + P2(Ac) − P1(Ac) |

(11.134) |

|

= P1(A) − P2(A) + 1 − P2(A) − 1 + P1(A) |

(11.135) |

|

= 2(P1(A) − P2(A)). |

|

(11.136) |

370 INFORMATION THEORY AND STATISTICS

Also note that

B |

1 |

|

− |

2 |

|

= |

1 |

|

− |

2 |

|

= |

2 |

|

|

max (P |

(B) |

|

P |

(B)) |

|

P |

(A) |

|

P |

(A) |

|

||P1 − P2 |

||1 |

. (11.137) |

|

|

|

|

|

|

|

||||||||||

X

The left-hand side of (11.137) is called the variational distance between P1 and P2.

Lemma 11.6.1

D(P1||P2) ≥ |

1 |

||P1 |

− P2||12. |

(11.138) |

2 ln 2 |

Proof: We first prove it for the binary case. Consider two binary distributions with parameters p and q with p ≥ q. We will show that

p log |

p |

|

(1 |

|

|

p) log |

1 − p |

|

|

|

|

4 |

|

|

(p |

|

|

q)2. |

|

|

(11.139) |

||||||||||||||||

q + |

− |

|

|

|

|

|

|

|

|

|

|

|

− |

|

|

||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

1 |

− |

q ≥ |

|

2 ln 2 |

|

|

|

|

|

|

|

|

|||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||

The difference g(p, q) between the two sides is |

|

|

|

|

|

|

|

|

|

|

|||||||||||||||||||||||||||

|

|

= |

|

|

|

|

q + |

|

|

− |

|

|

|

|

1 q − |

|

2 ln 2 |

|

|

− |

|

|

|

||||||||||||||

g(p, q) |

|

p log |

p |

|

|

(1 |

|

|

p) log |

1 |

− p |

|

|

4 |

|

(p |

|

q)2. |

(11.140) |

||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

− |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||

Then |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

dg(p, q) |

|

|

|

|

|

p |

|

|

|

|

|

1 − p |

|

|

|

|

|

4 |

|

2(q |

p) |

(11.141) |

||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

||||||||||

|

|

dq |

= − q ln 2 + (1 |

|

− |

q) ln 2 |

− 2 ln 2 |

|

|

− |

|

||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

||||||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

|

q − p |

|

|

|

|

|

|

4 |

(q |

|

|

p) |

|

|

|

|

|

(11.142) |

||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||||

|

|

|

|

|

|

= q(1 |

− |

q) ln 2 |

|

− ln 2 |

− |

|

|

|

|

|

|

|

|

|

|

||||||||||||||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|||||||||||||||||||

|

|

|

|

|

|

≤ 0 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

(11.143) |

|||

since q(1 − q) ≤ 41 and |

q ≤ p. For |

|

q = p, g(p, q) = 0, and hence |

||||||||||||||||||||||||||||||||||

g(p, q) ≥ 0 for q ≤ p, which proves the lemma for the binary case. |

|||||||||||||||||||||||||||||||||||||

For the general case, for any two distributions P1 and P2, let |

|

||||||||||||||||||||||||||||||||||||

|

|

|

|

|

|

|

|

|

A = {x : P1(x) > P2(x)}. |

|

|

|

|

|

|

|

(11.144) |

||||||||||||||||||||

Define a new binary random variable Y = φ (X), the indicator of the set A,

and let ˆ1 and ˆ2 be the distributions of . Thus, ˆ1 and ˆ2 correspond

P P Y P P

to the quantized versions of P1 and P2. Then by the data-processing

372 INFORMATION THEORY AND STATISTICS

where P (a) minimizes D(P |

Q) over P satisfying |

P (a)a2 |

≥ |

α. This |

|||

minimization results in |

|| |

|

|

|

|

|

|

P (a) = Q(a) |

eλa2 |

|

, |

|

(11.150) |

||

a Q(a)e |

λa2 |

|

|||||

|

|

|

|

|

|

|

|

where λ is chosen to satisfy P (a)a2 = α. Thus, the conditional distribution on X1 given a constraint on the sum of the squares is a (normalized) product of the original probability mass function and the maximum entropy probability mass function (which in this case is Gaussian).

Proof of Theorem: Define the sets

St = {P P : D(P ||Q) ≤ t }. |

(11.151) |

|||||||

The set St is convex since D(P ||Q) is a convex function of P . Let |

||||||||

D |

= |

|

|

|| |

= |

P E |

|| |

(11.152) |

|

D(P |

Q) |

min D(P |

Q). |

||||

|

|

|

|

|

|

|

|

|

|

|

|

|

|| |

|

|

|

P . Now define |

Then P is unique, since D(P Q) is strictly convex in |

||||||||

the set |

|

|

|

|

|

|

|

|

|

|

|

A = SD +2δ ∩ E |

|

(11.153) |

|||

and |

|

|

|

|

|

|

|

|

|

|

B = E − SD +2δ ∩ E. |

|

(11.154) |

||||

Thus, A B = E. These sets are illustrated in Figure 11.7. Then |

||||||||

Qn(B) = |

|

|

|

|

Qn(T (P )) |

(11.155) |

||

|

|

P E∩Pn:D(P ||Q)>D +2δ |

|

|

||||

|

≤ |

|

|

|

|

2−nD(P ||Q) |

(11.156) |

|

|

|

P E∩Pn:D(P ||Q)>D +2δ |

|

|

||||

|

≤ |

|

|

|

|

2−n(D +2δ) |

(11.157) |

|

|

|

P E∩Pn:D(P ||Q)>D +2δ |

|

|

||||

|

≤ |

(n |

+ |

1)|X|2−n(D +2δ) |

|

(11.158) |

||

|

|

|

|

|

|

|

||

374 INFORMATION THEORY AND STATISTICS

which goes to 0 as n → ∞. Hence the conditional probability of B goes to 0 as n → ∞, which implies that the conditional probability of A goes to 1.

We now show that all the members of A are close to P in relative entropy. For all members of A,

D(P ||Q) ≤ D + 2δ. |

(11.167) |

Hence by the “Pythagorean” theorem (Theorem 11.6.1), |

|

D(P ||P ) + D(P ||Q) ≤ D(P ||Q) ≤ D + 2δ, |

(11.168) |

which in turn implies that |

|

D(P ||P ) ≤ 2δ, |

(11.169) |

since D(P ||Q) = D . Thus, Px A implies that D(Px||Q) ≤ D + 2δ, and therefore that D(Px||P ) ≤ 2δ. Consequently, since Pr{PXn A|PXnE} → 1, it follows that

Pr(D(PXn ||P ) ≤ 2δ|PXn E) → 1 |

(11.170) |

as n → ∞. By Lemma 11.6.1, the fact that the relative entropy is small implies that the L1 distance is small, which in turn implies that maxa X

|PXn (a) − P (a)| is small. Thus, Pr(|PXn (a) − P (a)| ≥ |PXn E) →

0 as n → ∞. Alternatively, this can be written as

Pr(X1 = a|PXn E) → P (a) |

in probability, a X. (11.171) |

In this theorem we have only proved that the marginal distribution goes to P as n → ∞. Using a similar argument, we can prove a stronger version of this theorem:

Pr(X1 = a1, X2 = a2, . . . , Xm

m |

|

|

= am|PXn E) → P (ai ) |

in probability. |

(11.172) |

i=1

This holds for fixed m as n → ∞. The result is not true for m = n, since there are end effects; given that the type of the sequence is in E, the last elements of the sequence can be determined from the remaining elements, and the elements are no longer independent. The conditional limit