Information Theory / Cover T.M., Thomas J.A. Elements of Information Theory. 2006. 748 p.

288 GAUSSIAN CHANNEL

$$
\begin{aligned}
&\le \max_{\operatorname{tr}(K_X)\le nP} \frac{1}{2n}\log\frac{2^n\,|K_X+K_Z|}{|K_Z|} && (9.154)\\
&= \max_{\operatorname{tr}(K_X)\le nP} \frac{1}{2n}\log\frac{|K_X+K_Z|}{|K_Z|} + \frac{1}{2} && (9.155)\\
&\le C_n + \frac{1}{2}\ \text{bits per transmission,} && (9.156)
\end{aligned}
$$

where the inequalities follow from Theorem 9.6.1, Lemma 9.6.3, and the definition of capacity without feedback, respectively.

We now prove Pinsker’s statement that feedback can at most double the capacity of colored noise channels.

Theorem 9.6.3 $C_{n,\mathrm{FB}} \le 2C_n$.

Proof: It is enough to show that

$$
\frac{1}{2}\,\frac{1}{2n}\log\frac{|K_{X+Z}|}{|K_Z|} \le \frac{1}{2n}\log\frac{|K_X+K_Z|}{|K_Z|}, \tag{9.157}
$$

for it then follows, by maximizing the right side and then the left side, that

$$
\frac{1}{2}\,C_{n,\mathrm{FB}} \le C_n. \tag{9.158}
$$

We have

$$
\begin{aligned}
\frac{1}{2n}\log\frac{|K_X+K_Z|}{|K_Z|}
&\overset{(a)}{=} \frac{1}{2n}\log\frac{\left|\tfrac{1}{2}K_{X+Z}+\tfrac{1}{2}K_{X-Z}\right|}{|K_Z|} && (9.159)\\
&\overset{(b)}{\ge} \frac{1}{2n}\log\frac{|K_{X+Z}|^{1/2}\,|K_{X-Z}|^{1/2}}{|K_Z|} && (9.160)\\
&\overset{(c)}{\ge} \frac{1}{2n}\log\frac{|K_{X+Z}|^{1/2}\,|K_Z|^{1/2}}{|K_Z|} && (9.161)\\
&\overset{(d)}{=} \frac{1}{2}\,\frac{1}{2n}\log\frac{|K_{X+Z}|}{|K_Z|} && (9.162)
\end{aligned}
$$

and the result is proved. Here (a) follows from Lemma 9.6.1, (b) is the inequality in Lemma 9.6.4, and (c) is Lemma 9.6.5 in which causality is used.
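The text does not comment on step (d). It appears to be plain algebra on the ratio of determinants; a short worked version, using only the symbols already defined above, is:

```latex
\frac{|K_{X+Z}|^{1/2}\,|K_Z|^{1/2}}{|K_Z|}
  = \left(\frac{|K_{X+Z}|}{|K_Z|}\right)^{1/2}
\quad\Longrightarrow\quad
\frac{1}{2n}\log\frac{|K_{X+Z}|^{1/2}\,|K_Z|^{1/2}}{|K_Z|}
  = \frac{1}{2}\cdot\frac{1}{2n}\log\frac{|K_{X+Z}|}{|K_Z|}.
```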


Thus, we have shown that Gaussian channel capacity is not increased by more than half a bit or by more than a factor of 2 when we have feedback; feedback helps, but not by much.

SUMMARY

Maximum entropy. $\max_{EX^2=\alpha} h(X) = \frac{1}{2}\log 2\pi e\alpha$.
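As a small numerical illustration of the maximum entropy statement, the sketch below compares the Gaussian entropy $\frac{1}{2}\log 2\pi e\alpha$ with the entropy of one other density having the same second moment. The choice of a zero-mean uniform density as the comparison, the function names, and the value α = 1 are assumptions made for this example only.

```python
import math

def gaussian_entropy_bits(alpha: float) -> float:
    """Differential entropy in bits of N(0, alpha): 0.5 * log2(2*pi*e*alpha)."""
    return 0.5 * math.log2(2 * math.pi * math.e * alpha)

def uniform_entropy_bits(alpha: float) -> float:
    """Differential entropy in bits of a uniform density on [-a, a] with E[X^2] = alpha.

    A uniform density on [-a, a] has second moment a^2 / 3, so a = sqrt(3 * alpha),
    and its differential entropy is log2 of the interval length 2a.
    """
    a = math.sqrt(3 * alpha)
    return math.log2(2 * a)

alpha = 1.0  # illustrative second moment
h_gauss = gaussian_entropy_bits(alpha)
h_unif = uniform_entropy_bits(alpha)
print(f"Gaussian: {h_gauss:.4f} bits, uniform: {h_unif:.4f} bits")
```

The Gaussian value is the larger of the two, as the maximum entropy property requires; a single comparison like this is only a sanity check, not a proof.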

Gaussian channel. $Y_i = X_i + Z_i$; $Z_i \sim \mathcal{N}(0, N)$; power constraint $\frac{1}{n}\sum_{i=1}^{n} x_i^2 \le P$; and

$$
C = \frac{1}{2}\log\left(1+\frac{P}{N}\right)\ \text{bits per transmission.} \tag{9.163}
$$
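The Gaussian channel capacity formula can be evaluated directly. The following is a minimal sketch using base-2 logarithms so that the result is in bits; the function name `awgn_capacity` and the sample signal-to-noise ratios are illustrative choices, not taken from the text.

```python
import math

def awgn_capacity(P: float, N: float) -> float:
    """Capacity in bits per transmission of Y = X + Z, Z ~ N(0, N), power P."""
    if P < 0 or N <= 0:
        raise ValueError("need P >= 0 and N > 0")
    return 0.5 * math.log2(1 + P / N)

# Illustrative signal-to-noise ratios P/N with N fixed at 1.
for snr in (1.0, 3.0, 15.0):
    print(f"P/N = {snr:5.1f}: C = {awgn_capacity(snr, 1.0):.3f} bits per transmission")
```

Each fourfold increase of 1 + P/N adds one bit per transmission, which is why the three sample values give 0.5, 1, and 2 bits.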

Bandlimited additive white Gaussian noise channel. Bandwidth W ; two-sided power spectral density N0/2; signal power P ; and

$$
C = W\log\left(1+\frac{P}{N_0 W}\right)\ \text{bits per second.} \tag{9.164}
$$
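A quick evaluation of the bandlimited formula is sketched below. The numbers (a 3000 Hz band at a signal-to-noise ratio of 1000) and the function name are assumptions chosen for illustration. The last lines also check the wideband behavior: as W grows with P and N0 fixed, the capacity approaches (P/N0) log2 e bits per second.

```python
import math

def bandlimited_capacity(P: float, N0: float, W: float) -> float:
    """Capacity in bits per second: W * log2(1 + P / (N0 * W))."""
    return W * math.log2(1 + P / (N0 * W))

# Illustrative values: bandwidth 3000 Hz, P / (N0 * W) = 1000 (30 dB).
W = 3000.0
N0 = 1e-6
P = 1000 * N0 * W
C = bandlimited_capacity(P, N0, W)
print(f"C = {C:.1f} bits per second")

# Wideband limit: for very large W the capacity approaches (P / N0) * log2(e).
C_wide = bandlimited_capacity(P, N0, 1e12)
C_limit = (P / N0) * math.log2(math.e)
print(f"W = 1e12 Hz: {C_wide:.1f}, limit: {C_limit:.1f}")
```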

Water-filling (k parallel Gaussian channels). $Y_j = X_j + Z_j$, $j = 1, 2, \ldots, k$; $Z_j \sim \mathcal{N}(0, N_j)$; $\sum_{j=1}^{k} X_j^2 \le P$; and

$$
C = \sum_{i=1}^{k} \frac{1}{2}\log\left(1+\frac{(\nu - N_i)^+}{N_i}\right), \tag{9.165}
$$

where ν is chosen so that $\sum_i (\nu - N_i)^+ = P$.
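The water-filling rule can be sketched in a few lines. The version below assumes the water level ν is set so that the allocated powers (ν − N_i)^+ add up to the total power budget of the constraint on Σ X_j². It finds ν by trying the m quietest channels for m = k, k−1, …, 1; this search strategy, the function names, and the noise values are choices made for this example, not something the text prescribes.

```python
import math

def water_fill(noise, total_power):
    """Return (nu, powers) with powers[i] = max(nu - noise[i], 0) summing to total_power."""
    order = sorted(noise)
    nu = total_power + order[0]  # level if only the quietest channel is used
    # Try the m quietest channels as the active set, largest m first.
    for m in range(len(order), 0, -1):
        candidate = (total_power + sum(order[:m])) / m
        if candidate >= order[m - 1]:  # every active channel gets nonnegative power
            nu = candidate
            break
    powers = [max(nu - n, 0.0) for n in noise]
    return nu, powers

def parallel_capacity(noise, powers):
    """Sum of 0.5 * log2(1 + P_i / N_i) over the channels, in bits."""
    return sum(0.5 * math.log2(1 + p / n) for p, n in zip(powers, noise))

noise = [1.0, 2.0, 5.0]  # illustrative noise variances N_i
nu, powers = water_fill(noise, 3.0)
capacity = parallel_capacity(noise, powers)
print(nu, powers, capacity)
```

With these values the water level sits below the noisiest channel, so that channel receives no power and the whole budget goes to the two quieter ones.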

Additive nonwhite Gaussian noise channel. $Y_i = X_i + Z_i$; $Z^n \sim \mathcal{N}(0, K_Z)$; and

$$
C = \frac{1}{n}\sum_{i=1}^{n} \frac{1}{2}\log\left(1+\frac{(\nu - \lambda_i)^+}{\lambda_i}\right), \tag{9.166}
$$

where $\lambda_1, \lambda_2, \ldots, \lambda_n$ are the eigenvalues of $K_Z$ and ν is chosen so that $\sum_i (\nu - \lambda_i)^+ = nP$, matching the block constraint $\operatorname{tr}(K_X) \le nP$.

Capacity without feedback

$$
C_n = \max_{\operatorname{tr}(K_X)\le nP} \frac{1}{2n}\log\frac{|K_X+K_Z|}{|K_Z|}. \tag{9.167}
$$


Capacity with feedback

$$
C_{n,\mathrm{FB}} = \max_{\operatorname{tr}(K_X)\le nP} \frac{1}{2n}\log\frac{|K_{X+Z}|}{|K_Z|}. \tag{9.168}
$$

Feedback bounds

$$
C_{n,\mathrm{FB}} \le C_n + \frac{1}{2}. \tag{9.169}
$$

$$
C_{n,\mathrm{FB}} \le 2C_n. \tag{9.170}
$$
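The two feedback bounds can be combined by taking whichever is smaller. The sketch below does this for a few values of the nonfeedback capacity; the function name and the sample values are illustrative. The factor-of-2 bound is the tighter one when C_n is below half a bit, and the half-bit bound is tighter above that.

```python
def feedback_upper_bound(c_n: float) -> float:
    """Smaller of the two bounds C_n + 1/2 and 2 * C_n, in bits per transmission."""
    if c_n < 0:
        raise ValueError("capacity must be nonnegative")
    return min(c_n + 0.5, 2 * c_n)

# Illustrative nonfeedback capacities in bits per transmission.
for c_n in (0.25, 0.5, 2.0):
    print(f"C_n = {c_n}: C_n,FB <= {feedback_upper_bound(c_n)}")
```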

PROBLEMS

9.1 Channel with two independent looks at Y. Let Y1 and Y2 be conditionally independent and conditionally identically distributed given X.

(a) Show that $I(X; Y_1, Y_2) = 2I(X; Y_1) - I(Y_1; Y_2)$.

(b) Conclude that the capacity of the channel

X → (Y1, Y2)

is less than twice the capacity of the channel

X → Y1
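Before attempting the proof in Problem 9.1(a), the identity can be checked numerically on one small case. The sketch below assumes a binary input observed through two independent uses of the same binary symmetric channel; the input distribution, the crossover probability, and all names are hypothetical choices for this check, and agreement on one example is not a proof.

```python
import math
from itertools import product

def mutual_information(joint):
    """I(A;B) in bits from a dict mapping (a, b) to its probability."""
    pa, pb = {}, {}
    for (a, b), p in joint.items():
        pa[a] = pa.get(a, 0.0) + p
        pb[b] = pb.get(b, 0.0) + p
    return sum(p * math.log2(p / (pa[a] * pb[b]))
               for (a, b), p in joint.items() if p > 0)

eps = 0.1                # hypothetical crossover probability
px = {0: 0.3, 1: 0.7}    # hypothetical input distribution

def flip(x, y):
    """Probability that a binary symmetric channel maps x to y."""
    return 1 - eps if x == y else eps

# Joint distribution of (X, Y1, Y2): two looks, independent given X.
pxyy = {(x, y1, y2): px[x] * flip(x, y1) * flip(x, y2)
        for x, y1, y2 in product((0, 1), repeat=3)}

def pair_joint(keyfn):
    """Marginalize pxyy onto the pair (a, b) returned by keyfn."""
    out = {}
    for k, p in pxyy.items():
        kk = keyfn(k)
        out[kk] = out.get(kk, 0.0) + p
    return out

i_x_y1y2 = mutual_information(pair_joint(lambda k: (k[0], (k[1], k[2]))))
i_x_y1 = mutual_information(pair_joint(lambda k: (k[0], k[1])))
i_y1_y2 = mutual_information(pair_joint(lambda k: (k[1], k[2])))
print(i_x_y1y2, 2 * i_x_y1 - i_y1_y2)
```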

9.2 Two-look Gaussian channel

X → (Y1, Y2)

Consider the ordinary Gaussian channel with two correlated looks at X, that is, Y = (Y1, Y2), where

$$
Y_1 = X + Z_1 \tag{9.171}
$$

$$
Y_2 = X + Z_2 \tag{9.172}
$$


with a power constraint P on X, and $(Z_1, Z_2) \sim \mathcal{N}_2(0, K)$, where

$$
K = \begin{bmatrix} N & N\rho \\ N\rho & N \end{bmatrix}. \tag{9.173}
$$

Find the capacity C for

(a) ρ = 1

(b) ρ = 0

(c) ρ = −1

9.3 Output power constraint. Consider an additive white Gaussian noise channel with an expected output power constraint P. Thus, $Y = X + Z$, $Z \sim \mathcal{N}(0, \sigma^2)$, Z is independent of X, and $EY^2 \le P$. Find the channel capacity.

9.4 Exponential noise channels. $Y_i = X_i + Z_i$, where $Z_i$ is i.i.d. exponentially distributed noise with mean µ. Assume that we have a mean constraint on the signal (i.e., $EX_i \le \lambda$). Show that the capacity of such a channel is $C = \log\left(1 + \frac{\lambda}{\mu}\right)$.

9.5 Fading channel. Consider an additive noise fading channel

[Figure: the input X is multiplied by the fading factor V, and the noise Z is added to give the output Y.]

$$
Y = XV + Z,
$$

where Z is additive noise, V is a random variable representing fading, and Z and V are independent of each other and of X. Argue that knowledge of the fading factor V improves capacity by showing that

$$
I(X; Y \mid V) \ge I(X; Y).
$$

9.6 Parallel channels and water-filling. Consider a pair of parallel Gaussian channels:

$$
\begin{bmatrix} Y_1 \\ Y_2 \end{bmatrix} = \begin{bmatrix} X_1 \\ X_2 \end{bmatrix} + \begin{bmatrix} Z_1 \\ Z_2 \end{bmatrix}, \tag{9.174}
$$


where

$$
\begin{bmatrix} Z_1 \\ Z_2 \end{bmatrix} \sim \mathcal{N}\left(0, \begin{bmatrix} \sigma_1^2 & 0 \\ 0 & \sigma_2^2 \end{bmatrix}\right), \tag{9.175}
$$

and there is a power constraint $E(X_1^2 + X_2^2) \le 2P$. Assume that $\sigma_1^2 > \sigma_2^2$. At what power does the channel stop behaving like a single channel with noise variance $\sigma_2^2$, and begin behaving like a pair of channels?

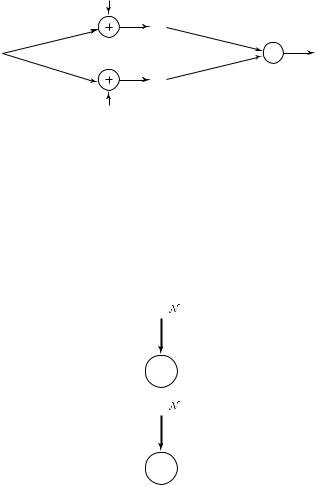

9.7 Multipath Gaussian channel. Consider a Gaussian noise channel with power constraint P, where the signal takes two different paths and the received noisy signals are added together at the antenna.

[Figure: X travels over two paths; noise Z1 is added on one path to give Y1 and noise Z2 on the other to give Y2, and Y1 and Y2 are summed to give Y.]

(a) Find the capacity of this channel if Z1 and Z2 are jointly normal with covariance matrix

$$
K_Z = \begin{bmatrix} \sigma^2 & \rho\sigma^2 \\ \rho\sigma^2 & \sigma^2 \end{bmatrix}.
$$

(b) What is the capacity for ρ = 0, ρ = 1, ρ = −1?

9.8 Parallel Gaussian channels. Consider the following parallel Gaussian channel:

[Figure: two parallel channels, Y1 = X1 + Z1 with Z1 ~ N(0, N1) and Y2 = X2 + Z2 with Z2 ~ N(0, N2).]


where $Z_1 \sim \mathcal{N}(0, N_1)$ and $Z_2 \sim \mathcal{N}(0, N_2)$ are independent Gaussian random variables and $Y_i = X_i + Z_i$. We wish to allocate power to the two parallel channels. Let β1 and β2 be fixed. Consider a total cost constraint $\beta_1 P_1 + \beta_2 P_2 \le \beta$, where $P_i$ is the power allocated to the ith channel and $\beta_i$ is the cost per unit power in that channel. Thus, $P_1 \ge 0$ and $P_2 \ge 0$ can be chosen subject to the cost constraint β.

(a) For what value of β does the channel stop acting like a single channel and start acting like a pair of channels?

(b) Evaluate the capacity and find P1 and P2 that achieve capacity for β1 = 1, β2 = 2, N1 = 3, N2 = 2, and β = 10.

9.9 Vector Gaussian channel. Consider the vector Gaussian noise channel

$$
Y = X + Z,
$$

where $X = (X_1, X_2, X_3)$, $Z = (Z_1, Z_2, Z_3)$, $Y = (Y_1, Y_2, Y_3)$, $E\|X\|^2 \le P$, and

$$
Z \sim \mathcal{N}\left(0, \begin{bmatrix} 1 & 0 & 1 \\ 0 & 1 & 1 \\ 1 & 1 & 2 \end{bmatrix}\right).
$$

Find the capacity. The answer may be surprising.

9.10 Capacity of photographic film. Here is a problem with a nice answer that takes a little time. We're interested in the capacity of photographic film. The film consists of silver iodide crystals, Poisson distributed, with a density of λ particles per square inch. The film is illuminated without knowledge of the position of the silver iodide particles. It is then developed and the receiver sees only the silver iodide particles that have been illuminated. It is assumed that light incident on a cell exposes the grain if it is there and otherwise results in a blank response. Silver iodide particles that are not illuminated and vacant portions of the film remain blank. The question is: What is the capacity of this film?

We make the following assumptions. We grid the film very finely into cells of area dA. It is assumed that there is at most one silver iodide particle per cell and that no silver iodide particle is intersected by the cell boundaries. Thus, the film can be considered to be a large number of parallel binary asymmetric channels with crossover probability 1 − λ dA. By calculating the capacity of this binary asymmetric channel to first order in dA (making the necessary approximations), one can calculate the capacity of the film in bits per square inch. It is, of course, proportional to λ. The question is: What is the multiplicative constant?

The answer would be λ bits per unit area if both illuminator and receiver knew the positions of the crystals.

9.11 Gaussian mutual information. Suppose that (X, Y, Z) are jointly Gaussian and that X → Y → Z forms a Markov chain. Let X and Y have correlation coefficient ρ1 and let Y and Z have correlation coefficient ρ2. Find $I(X; Z)$.

9.12 Time-varying channel. A train pulls out of the station at constant velocity. The received signal energy thus falls off with time as $1/i^2$. The total received signal at time i is

$$
Y_i = \frac{1}{i} X_i + Z_i,
$$

where $Z_1, Z_2, \ldots$ are i.i.d. $\sim \mathcal{N}(0, N)$. The transmitter constraint for block length n is

$$
\frac{1}{n}\sum_{i=1}^{n} x_i^2(w) \le P, \qquad w \in \{1, 2, \ldots, 2^{nR}\}.
$$

Using Fano's inequality, show that the capacity C is equal to zero for this channel.

9.13 Feedback capacity. Let

$$
(Z_1, Z_2) \sim \mathcal{N}(0, K), \qquad K = \begin{bmatrix} 1 & \rho \\ \rho & 1 \end{bmatrix}.
$$

Find the maximum of $\frac{1}{2}\log\frac{|K_{X+Z}|}{|K_Z|}$ with and without feedback given a trace (power) constraint $\operatorname{tr}(K_X) \le 2P$.

9.14 Additive noise channel. Consider the channel Y = X + Z, where X is the transmitted signal with power constraint P, Z is independent additive noise, and Y is the received signal. Let

$$
Z = \begin{cases} 0 & \text{with probability } \frac{1}{10} \\ Z^* & \text{with probability } \frac{9}{10}, \end{cases}
$$

where $Z^* \sim \mathcal{N}(0, N)$. Thus, Z has a mixture distribution that is the mixture of a Gaussian distribution and a degenerate distribution with mass 1 at 0.