Encyclopedia of Sociology, Vol. 5

STATISTICAL INFERENCE

Table 2. Type I (α) and Type II (β) Errors

| Decision Made by the Researcher | True Situation in the Population: H0, the null hypothesis, is true | True Situation in the Population: H1, the main hypothesis, is true |
|---|---|---|
| H0, the null hypothesis, is true | 1 − α | β |
| H1, the main hypothesis, is true | α | 1 − β |

error has been described as ‘‘the chances of discovering things that aren’t so’’ (Cohen 1990, p. 1304). The focus on Type I error reflects a conservative view among scientists. Type I error guards against doing something new (as specified by H1) when it is not going to be helpful.

Type II, or β, error is the probability of failing to reject H0 when H1 is true in the population. If one failed to reject the null hypothesis that the new program was no better (H0) when it was truly better (H1), one would put newborn children at needless risk. Type II error is the chance of missing something new (as specified by H1) when it really would be helpful.

Power is 1 − β. Power measures the likelihood of rejecting the null hypothesis when the alternative hypothesis is true. Thus, if there is a real effect in the population, a study that has a power of .80 can reject the null hypothesis with a likelihood of .80. The power of a statistical test is measured by how likely it is to do what one usually wants to do: demonstrate support for the main hypothesis when the main hypothesis is true. Using the example of a treatment for drug abuse among pregnant women, the power of a test is the ability to demonstrate that the program is effective if this is really true.

Power can be increased. First, get a larger sample. The larger the sample, the more power to find results that exist in the population. Second, increase the α level. Rather than using the .01 level of significance, a researcher can pick the .05 or even the .10. The larger α is, the more powerful the test is in its ability to reject the null hypothesis when the alternative is true.
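The two levers described above can be illustrated numerically. The following is a minimal sketch, not a procedure taken from this article: it assumes a two-sided one-sample z-test and a hypothetical standardized effect of 0.2, and the function name `z_test_power` is invented for illustration.

```python
from statistics import NormalDist


def z_test_power(effect_size, n, alpha):
    """Approximate power of a two-sided one-sample z-test.

    effect_size is the true mean shift in standard-deviation units
    (a hypothetical value chosen only for illustration).
    """
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * n ** 0.5
    # Probability that the test statistic falls in either rejection region.
    upper = 1 - std_normal.cdf(critical - shift)
    lower = std_normal.cdf(-critical - shift)
    return upper + lower


# Power rises with the sample size and with the alpha level.
for n in (50, 200):
    for alpha in (0.01, 0.05, 0.10):
        power = z_test_power(0.2, n, alpha)
        print(f"n={n:4d}  alpha={alpha:.2f}  power={power:.2f}")
```

Under these assumptions, each row with the larger sample or the larger α shows higher power, which is the pattern the paragraph describes.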

There are problems with both approaches. Increasing sample size makes the study more costly. If there are risks to the subjects who participate, adding cases exposes additional people to that risk. An example of this would be a study that exposed subjects to a new drug treatment program that might create more problems than it solved. A larger sample will expose more people to these risks.

Since Type I and Type II errors are inversely related, raising α reduces β, thus increasing the power of the test. However, sociologists are hesitant to raise α since doing so increases the chance of deciding something is important when it is not important. With a small sample, using a small α level such as .001 means there is a great risk of β error. Many small-scale studies have a Type II error of over .50. This is common in research areas that rely on small samples. For example, a review of one volume of the Journal of Abnormal Psychology (this journal includes many small-sample studies) found that those studies had an average Type II error of .56 (Cohen 1990). This means the psychologists had inadequate power to reject the null hypothesis when H1 was true. When H1 was true, the chance of rejecting H0 (i.e., power) was worse than that resulting from flipping a coin.

Some areas that rely on small samples because of the cost of gathering data or to minimize the potential risk to subjects require researchers to plan their sample sizes to balance α, power, sample size, and the minimum size of effect that is theoretically important. For example, if a correlation of .1 is substantively significant, a power of .80 is important, and an α = .01 is desired, a very large sample is required. If a correlation is substantively and theoretically important only if it is over .5, a much smaller sample is adequate. Procedures for doing a power analysis are available in Cohen (1988); see also Murphy and Myors (1998).
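The contrast between the two planning scenarios can be sketched with a standard approximation. The code below assumes the Fisher z transformation and a two-sided test; it is a rough stand-in for, not a reproduction of, the tables in Cohen (1988), and the function name `sample_size_for_correlation` is hypothetical.

```python
from math import atanh, ceil
from statistics import NormalDist


def sample_size_for_correlation(rho, alpha=0.01, power=0.80):
    """Approximate n needed to detect a population correlation rho.

    Uses the Fisher z approximation for a two-sided test; this is an
    assumption made for illustration, not the article's own method.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    return ceil(((z_alpha + z_power) / atanh(rho)) ** 2 + 3)


n_weak = sample_size_for_correlation(0.1)    # smallest effect of interest: .1
n_strong = sample_size_for_correlation(0.5)  # smallest effect of interest: .5
print(n_weak, n_strong)
```

With these inputs the approximation calls for more than a thousand cases when ρ = .1 but only a few dozen when ρ = .5, consistent with the contrast drawn in the text.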

Power analysis is less important for many sociological studies that have large samples. With a large sample, it is possible to use a conservative α error rate and still have sufficient power to reject the null hypothesis when H1 is true. Therefore, sociologists pay less attention to β error and power than do researchers in fields such as medicine and psychology. When a sociologist has a sample of 10,000 cases, the power is over .90 that he or she will detect a very small effect as statistically significant. When tests are extremely powerful to detect small effects, researchers must focus on the substantive significance of the effects. A correlation of .07 may be significant at the .05 level with 10,000 cases, but that correlation is substantively trivial.

STATISTICAL AND SUBSTANTIVE SIGNIFICANCE

Some researchers and many readers confuse statistical significance with substantive significance. Statistical inference does not ensure substantive significance, that is, ensure that the result is important. A correlation of .1 shows a weak relationship between two variables whether it is statistically significant or not. With a sample of 100 cases, this correlation will not be statistically significant; with a sample of 10,000 cases, it will be statistically significant. The smaller sample shows a weak relationship that might be a zero relationship in the population. The larger sample shows a weak relationship that is all but certainly a weak relationship in the population, although it is not zero. In this case, the statistical significance allows one to be confident that the relationship in the population is substantively weak.
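The claim about a correlation of .1 at the two sample sizes can be checked with a short calculation. This sketch assumes a two-sided test based on the Fisher z transformation, which is a common large-sample approximation rather than the exact t-test; `correlation_p_value` is a name invented here.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_p_value(r, n):
    """Approximate two-sided p-value for H0: rho = 0 (Fisher z method)."""
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


p_small = correlation_p_value(0.1, 100)
p_large = correlation_p_value(0.1, 10_000)
print(f"n=100:    p = {p_small:.3g}")
print(f"n=10,000: p = {p_large:.3g}")
```

The same sample correlation falls well short of the .05 level with 100 cases and far beyond it with 10,000, although its size, and hence its substantive weight, is unchanged.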

Whenever a person reads that a result is statistically significant, he or she is confident that there is some relationship. The next step is to decide whether it is substantively significant or substantively weak. Power analysis is one way to make this decision. One can illustrate this process by testing the significance of a correlation. A population correlation of .1 is considered weak, a population correlation of .3 is considered moderate, and a population correlation of .5 or more is considered strong. In other words, if a correlation is statistically significant but .1 or lower, one has to recognize that this is a weak relationship—it is statistically significant but substantively weak. It is just as important to explain to the readers that the relationship is substantively weak as it is to report that it is statistically significant. By contrast, if a sample correlation is .5 and is statistically significant, one can say the relationship is both statistically and substantively significant.

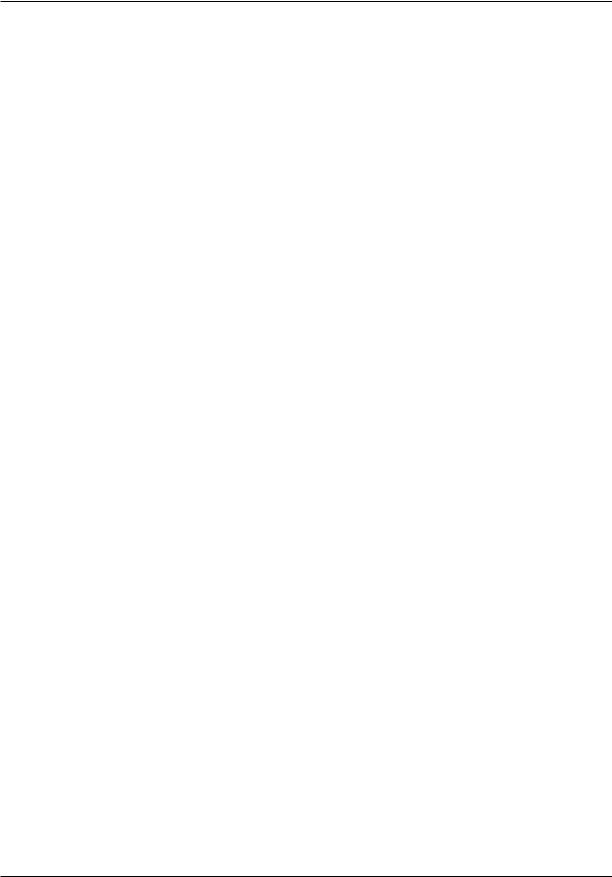

Figure 3 shows power curves for testing the significance of a correlation. These curves illustrate the need to be sensitive to both statistical significance and substantive significance. The curve on the extreme left shows the power of a test to show that a sample correlation, r, is statistically significant when the population correlation, ρ (rho), is .5. With a sample size of around 100, the power of a test to show statistical significance approaches 1.0, or 100 percent. This means that any correlation that is this strong in the population can be shown to be statistically significant with a small sample.

What happens when the correlation in the population is weak? Suppose the true correlation in the population is .2. A sample with 500 cases almost certainly will produce a sample correlation that is statistically significant, since the power is approaching 1.0. Many sociological studies have 500 or more cases and produce results showing that substantively weak relationships, ρ = .2, are statistically significant. Figure 3 shows that even if the population correlation is just .1, a sample of 1,000 cases has the power to show a sample result that is statistically significant. Thus, any time a sample is 1,000 or larger, one has to be especially careful to avoid confusing statistical and substantive significance.
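Points on power curves of this kind can be approximated directly. The sketch below assumes a two-sided test at α = .05 and the Fisher z approximation, so its values may differ slightly from those plotted in Figure 3; `correlation_power` is a hypothetical helper.

```python
from math import atanh, sqrt
from statistics import NormalDist


def correlation_power(rho, n, alpha=0.05):
    """Approximate power to detect population correlation rho with n cases."""
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha / 2)
    shift = atanh(rho) * sqrt(n - 3)
    # Chance that the Fisher-z test statistic lands in either rejection region.
    return (1 - std_normal.cdf(critical - shift)) + std_normal.cdf(-critical - shift)


for rho, n in [(0.5, 100), (0.2, 500), (0.1, 1000), (0.1, 100)]:
    print(f"rho={rho:.1f}  n={n:5d}  power={correlation_power(rho, n):.3f}")
```

Under this approximation the first two combinations give power very close to 1.0, ρ = .1 with 1,000 cases gives power somewhat below .90, and ρ = .1 with only 100 cases gives low power.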

The guidelines for distinguishing between statistical and substantive significance are direct but often are ignored by researchers:

1. If a result is not statistically significant, regardless of its size in the sample, one should be reluctant to generalize it to the population.

2. If a result is statistically significant in the sample, this means that one can generalize it to the population but does not indicate whether it is a weak or a strong relationship.

3. If a result is statistically significant and strong in the sample, one can both generalize it to the population and assert that it is substantively significant.

4. If a result is statistically significant and weak in the sample, one can both generalize it to the population and assert that it is substantively weak in the population.

Figure 3. Power of test of r, α = .05. [Plot of power (vertical axis, 0 to 1) against sample size (horizontal axis, 0 to 1,000), with separate curves for ρ = .5, ρ = .3, ρ = .2, and ρ = .1.]

This reasoning applies to any test of significance. If a researcher found that girls have an average score of 100.2 on verbal skills and boys have an average score of 99.8, with girls and boys having a standard deviation of 10, one would regard this as a very weak relationship. If one constructed a histogram for both girls and boys, one would find them almost identical. This difference is not substantively significant. However, if there were a sufficiently large sample of girls and boys, say, n = 10,000, it could be shown that the difference is statistically significant. The statistical significance means that there is some difference, that the means for girls and boys are not identical. It is necessary to use judgment, however, to determine that the difference is substantively trivial. An abuse of statistical inference that can be committed by sociologists who do large-scale research is to confuse statistical and substantive significance.
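The verbal-skills example can be worked through as a rough check. The sketch assumes a two-sided z-test with a known common standard deviation and, because the text does not say how the cases are split, 10,000 cases in each group; both the split and the function name `two_group_z_test` are assumptions.

```python
from math import sqrt
from statistics import NormalDist


def two_group_z_test(mean_a, mean_b, sd, n_per_group):
    """Return (z, two-sided p, standardized difference) for two equal groups."""
    standard_error = sd * sqrt(2 / n_per_group)
    z = (mean_a - mean_b) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    standardized_difference = (mean_a - mean_b) / sd
    return z, p_value, standardized_difference


# Assumed split: 10,000 girls and 10,000 boys.
z, p_value, d = two_group_z_test(100.2, 99.8, 10, 10_000)
print(f"z = {z:.2f}, p = {p_value:.4f}, standardized difference = {d:.2f}")
```

The difference passes the .05 threshold, yet it amounts to only four hundredths of a standard deviation, which is why judgment about substantive importance is still required.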

NONRANDOM SAMPLES AND STATISTICAL INFERENCE

Very few researchers use true random samples. Sometimes researchers use convenience sampling. An example is a social psychologist who has every student in a class participate in an experiment. The students in this class are not a random sample of the general population or even of students in a university. Should statistical inference be used here?

Other researchers may use the entire population. If one wants to know if male faculty members are paid more than female faculty members at a particular university, one may check the payroll for every faculty member. There is no sample; one has the entire population. What is the role of statistical inference in this instance?

Many researchers would use a test of significance in both cases, although the formal logic of statistical inference is violated. They are taking a ‘‘what if’’ approach. If the results they find could have occurred by a random process, they are less confident in their results than they would be if the results were statistically significant. Economists and demographers often report statistical inference results when they have the entire population. For example, if one examines the unemployment rates of blacks and whites over a ten-year period, one may find that the black rate is about twice the white rate. If one does a test of significance, it is unclear what the population is to which one wants to generalize. A ten-year period is not a random selection of all years. The rationale for doing statistical inference with population data and nonprobability samples is to see if the results could have been attributed to a chance process.

A related problem is that most surveys use complex sample designs rather than strictly random designs. A stratified sample or a clustered sample may be used to increase efficiency or reduce the cost of a survey. For example, a study might take a random sample of 20 high schools from a state and then interview 100 students from each of those schools. This survey will have 2,000 students but will not be a random sample because the 100 students from each school will be more similar to each other than to 100 randomly selected students. For instance, the 100 students from a school in a ghetto may mostly have minority status and mostly be from families that have a low income in a population with a high proportion of single-parent families. By contrast, 100 students from a school in an affluent suburb may be disproportionately white and middle class.

The standard statistical inference procedures discussed here that are used in most introductory statistics texts and in computer programs such as SAS and SPSS assume random sampling. When a different sampling design is used, such as a cluster design, a stratified sample, or a longitudinal design, the test of significance will be biased. In most cases, the test of significance will underestimate the standard errors and thus overestimate the test statistic (z, t, F). The extent to which this occurs is known as the ‘‘design effect.’’ The most typical design effect is greater than 1.0, meaning that the computed test statistic is larger than it should be. Specialized programs allow researchers to estimate design effects and incorporate them in the computation of the test statistics. The most widely used of these procedures are WesVar, which is available from SPSS, and SUDAAN, a stand-alone program. Neither program has been widely used by sociologists, but their use should increase in the future.
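To give a sense of scale, the school example can be run through Kish's widely used approximation for equal-sized clusters, deff = 1 + (m − 1) × ICC. This formula is brought in here for illustration and is not taken from the article, and the intraclass correlation of .05 is a purely hypothetical value.

```python
def design_effect(cluster_size, icc):
    """Kish's approximate design effect for equal-sized clusters."""
    return 1 + (cluster_size - 1) * icc


n_schools = 20
students_per_school = 100
icc = 0.05  # hypothetical intraclass correlation, assumed for illustration

deff = design_effect(students_per_school, icc)
effective_n = n_schools * students_per_school / deff
se_inflation = deff ** 0.5  # factor by which simple-random-sample SEs are too small

print(f"design effect     = {deff:.2f}")
print(f"effective n       = {effective_n:.0f}")
print(f"SE understated by = {se_inflation:.2f}x")
```

Under these assumed values, the 2,000 clustered interviews carry roughly the information of a few hundred independent ones, so test statistics computed as if the sample were simple random would be considerably too large.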

REFERENCES

Agresti, Alan, and Barbara Finlay 1996 Statistical Methods for the Social Sciences. Englewood Cliffs, N.J.: Prentice-Hall.

Blalock, Hubert M., Jr. 1979 Social Statistics. New York: McGraw-Hill.

Bohrnstedt, George W., and David Knoke 1988 Statistics for Social Data Analysis, 2nd ed. Itasca, Ill.: F. E. Peacock.

Brown, Steven R., and Lawrence E. Melamed 1990 Experimental Design and Analysis. Newbury Park, Calif.: Sage.

Cohen, Jacob 1988 Statistical Power Analysis for the Behavioral Sciences, 2nd ed. Hillsdale, N.J.: Erlbaum.

——— 1990 ‘‘Things I Have Learned (So Far).’’ American Psychologist 45:1304–1312.

Loether, Herman J., and Donald G. McTavish 1993 Descriptive and Inferential Statistics. New York: Allyn and Bacon.

Murphy, Kevin R., and Brett Myors, eds. 1998 Statistical Power Analysis: A Simple and General Model for Traditional and Modern Hypothesis Tests. Hillsdale, N.J.: Erlbaum.

Raymondo, James 1999 Statistical Analysis in the Social Sciences. New York: McGraw-Hill.

Vaughan, Eva D. 1997 Statistics: Tools for Understanding Data in Behavioral Sciences. Englewood Cliffs, N.J.: Prentice-Hall.

ALAN C. ACOCK

STATISTICAL METHODS

In the 1960s, the introduction, acceptance, and application of multivariate statistical methods transformed quantitative sociological research. Regression methods from biometrics and economics; factor analysis from psychology; stochastic modeling from engineering, biometrics, and statistics; and methods for contingency table analysis from sociology and statistics were developed and combined to provide a rich variety of statistical methods. Along with the introduction of these techniques came the institutionalization of quantitative methods. In 1961, the American Sociological Association (ASA) approved the Section on Methodology as a result of efforts organized by Robert McGinnis and Albert Reiss. The ASA’s yearbook, Sociological Methodology, first appeared in 1969 under the editorship of Edgar F. Borgatta and George W. Bohrnstedt. Those editors went on to establish the quarterly journal Sociological Methods and Research in 1972. During this period, the National Institute of Mental Health began funding training programs that included rigorous training in quantitative methods.

This article traces the development of statistical methods in sociology since 1960. Regression, factor analysis, stochastic modeling, and contingency table analysis are discussed as the core methods that were available or were introduced by the early 1960s. The development of additional methods through the enhancement and combination of these methods is then considered. The discussion emphasizes statistical methods for causal modeling; consequently, methods for data reduction (e.g., cluster analysis, smallest space analysis), formal modeling, and network analysis are not considered.

THE BROADER CONTEXT

By the end of the 1950s, the central ideas of mathematical statistics that emerged from the work of R. A. Fisher and Karl Pearson were firmly established. Works such as Fisher’s Statistical Methods for Research Workers (1925), Kendall’s Advanced Theory of Statistics (1943, 1946), Cramér’s Mathematical Methods of Statistics (1946), Wilks’s Mathematical Statistics (1944), Lehmann’s Testing Statistical Hypotheses (1959), Scheffé’s The Analysis of Variance (1959), and Doob’s Stochastic Processes (1953) systematized the key results of mathematical statistics and provided the foundation for developments in applied statistics for decades to come. By the start of the 1960s, multivariate methods were applied routinely in psychology, economics, and the biological sciences. Applied treatments were available in works such as Snedecor’s Statistical Methods (1937), Wold’s Demand Analysis (Wold and Juréen, 1953), Anderson’s An Introduction to Multivariate Statistical Analysis (1958), Simon’s Models of Man (1957), Thurstone’s Multiple-Factor Analysis (1947), and Finney’s Probit Analysis (1952).

These methods are computationally intensive, and their routine application depended on developments in computing. BMD (Biomedical Computing Programs) was perhaps the first widely available statistical package, appearing in 1961 (Dixon et al. 1981). SPSS (Statistical Package for the Social Sciences) appeared in 1970 as a result of efforts by a group of political scientists at Stanford to develop a general statistical package specifically for social scientists (Nie et al. 1975). In addition to these general-purpose programs, many specialized programs appeared that were essential for the methods discussed below. At the same time, continuing advances in computer hardware increased the availability of computing by orders of magnitude, facilitating the adoption of new statistical methods.

DEVELOPMENTS IN SOCIOLOGY

It is within the context of developments in mathematical statistics, sophisticated applications in other fields, and rapid advances in computing that major changes occurred in quantitative sociological research. Four major methods serve as the cornerstones for later developments: regression, factor analysis, stochastic processes, and contingency table analysis.

Regression Analysis and Structural Equation Models. Regression analysis is used to estimate the effects of a set of independent variables on one or more dependent variables. It is arguably the most commonly applied statistical method in the social sciences. Before 1960, this method was relatively unknown to sociologists. It was not treated in standard texts and was rarely seen in the leading sociological journals. The key notions of multiple regression were introduced to sociologists in Blalock’s Social Statistics (1960). The generalization of regression to systems of equations and the accompanying notion of causal analysis began with Blalock’s Causal Inferences in Nonexperimental Research (1964) and Duncan’s ‘‘Path Analysis: Sociological Examples’’ (1966). Blalock’s work was heavily influenced by the economist Simon’s work on correlation and causality (Simon 1957) and the economist Wold’s work on simultaneous equation systems (Wold and Juréen 1953). Duncan’s work added the influence of the geneticist Wright’s work in path analysis (Wright 1934). The acceptance of these methods by sociologists required a substantive application that demonstrated how regression could contribute to the understanding of fundamental sociological questions. In this case, the question was the determination of occupational standing and the specific work was the substantively and methodologically influential The American Occupational Structure by Blau and Duncan (1967), a work unsurpassed in its integration of method and substance. Numerous applications of regression and path analysis soon followed. The diversity of influences, problems, and approaches that resulted from Blalock and Duncan’s work is shown in Blalock’s reader Causal Models in the Social Sciences (1971), which became the handbook of quantitative methods in the 1970s.

Regression models have been extended in many ways. Bielby and Hauser (1977) have reviewed developments involving systems of equations. Regression methods for time series analysis and forecasting (often called Box-Jenkins models) were given their classic treatment in Box and Jenkins’s Time Series Analysis (1970). Regression diagnostics have provided tools for exploring characteristics of the data set that is to be analyzed. Methods for identifying outlying and influential observations have been developed (Belsley et al. 1980), along with major advances in classic problems such as heteroscedasticity (White 1980) and specification (Hausman 1978). All these extensions have been finding their way into sociological practice.

Factor Analysis. Factor analysis, a technique developed by psychometricians, was the second major influence on quantitative sociological methods. Factor analysis is based on the idea that the covariation among a larger set of observed variables can be reduced to the covariation among a smaller set of unobserved or latent variables. By 1960, this method was well known and applications appeared in most major sociology journals. Statistical and computational advances in applying maximum likelihood estimation to the factor model (Jöreskog 1969) were essential for the development of the covariance structure model discussed below.

Stochastic Processes. Stochastic models were the third influence on the development of quantitative sociological methods. Stochastic processes model the change in a variable over time in cases where a chance process governs the change. Examples of stochastic processes include change in occupational status over a career (Blumen et al. 1955), friendship patterns, preference for job locations (Coleman 1964), and the distribution of racial disturbances (Spilerman 1971). While the mathematical and statistical details for many stochastic models had been worked out by 1960, they were relatively unknown to sociologists until the publication of Coleman’s Introduction to Mathematical Sociology (1964) and Bartholomew’s Stochastic Models for Social Processes (1967). These books presented an array of models that were customized for specific social phenomena. While these models had great potential, applications were rare because of the great mathematical sophistication of the models and the lack of general-purpose software for estimating the models. Nonetheless, the influence of these methods on the development of other techniques was great. For example, Markov chain models for social mobility had an important influence on the development of loglinear models.
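A Markov chain model of mobility, of the kind mentioned above, can be sketched in a few lines. The three occupational strata, the transition probabilities, and the starting distribution below are all invented for illustration and do not come from any of the cited studies.

```python
def step(distribution, transition):
    """Advance a distribution over states by one period of a Markov chain."""
    k = len(transition)
    return [
        sum(distribution[i] * transition[i][j] for i in range(k))
        for j in range(k)
    ]


# Hypothetical probabilities of moving between three strata
# (rows: origin, columns: destination; each row sums to 1).
transition = [
    [0.7, 0.2, 0.1],
    [0.3, 0.5, 0.2],
    [0.1, 0.3, 0.6],
]

distribution = [0.5, 0.3, 0.2]  # hypothetical starting shares
for period in range(1, 4):
    distribution = step(distribution, transition)
    print(period, [round(share, 3) for share in distribution])
```

The chance element enters through the transition probabilities: the model does not say where any individual ends up, only how the shares in each stratum are expected to shift from one period to the next.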

Contingency Table Analysis and Loglinear Models. Methods for categorical data were the fourth influence on quantitative methods. The analysis of contingency tables has a long tradition in sociology. Lazarsfeld’s work on elaboration analysis and panel analysis had a major influence on the way research was done at the start of the 1960s (Lazarsfeld and Rosenberg 1955). While these methods provided useful tools for analyzing categorical data and especially survey data, they were nonstatistical in the sense that issues of estimation and hypothesis testing generally were ignored. Important statistical advances for measures of association in two-way tables were made in a series of papers by Goodman and Kruskal that appeared during the 1950s and 1960s (Goodman and Kruskal 1979). In the 1960s, nonstatistical methods for analyzing contingency tables were replaced by the loglinear model. This model made the statistical analysis of multiway tables possible. Early developments are found in papers by Birch (1963) and Goodman (1964). The development of the general model was completed largely through the efforts of Frederick Mosteller, Stephen E. Fienberg, Yvonne M. M. Bishop, Shelby Haberman, and Leo A. Goodman, which were summarized in Bishop et al.’s Discrete Multivariate Analysis (1975). Applications in sociology appeared shortly after Goodman’s (1972) didactic presentation and the introduction of ECTA (Fay and Goodman 1974), a program for loglinear analysis. Since that time, the model has been extended to specific types of variables (e.g., ordinal), more complex structures (e.g., association models), and particular substantive problems (e.g., networks) (see Agresti [1990] for a treatment of recent developments). As with regression models, many early applications appeared in the area of stratification research. Indeed, many developments in loglinear analysis were motivated by substantive problems encountered in sociology and related fields.

ADDITIONAL METHODS

From these roots in regression, factor analysis, stochastic processes, and contingency table analysis, a wide variety of methods emerged that are now applied frequently by sociologists. Notions from these four areas were combined and extended to produce new methods. The remainder of this article considers the major methods that resulted.

Covariance Structure Models. The covariance structure model is a combination of the factor and regression models. While the factor model allowed imperfect multiple indicators to be used to extract a more accurately measured latent variable, it did not allow the modeling of causal relations among the factors. The regression model, conversely, did not allow imperfect measurement and multiple indicators. The covariance structure model resulted from the merger of the structural or causal component of the regression model with the measurement component of the factor model. With this model, it is possible to specify that each latent variable has one or more imperfectly measured observed indicators and that a causal relationship exists among the latent variables. Applications of such a model became practical after the computational breakthroughs made by Jöreskog, who published LISREL (linear structural relations) in 1972 (Jöreskog and van Thillo 1972). The importance of this program is reflected by the use of the phrase ‘‘LISREL models’’ to refer to this area.

Initially, the model was based on analyzing the covariances among observed variables, and this gave rise to the name ‘‘covariance structure analysis.’’ Extensions of the model since 1973 have made use of additional types of information as the model has been enhanced to deal with multiple groups, noninterval observed variables, and estimation with less restrictive assumptions. These extensions have led to alternative names for these methods, such as ‘‘mean and covariance structure models’’ and, more recently, ‘‘structural equation modeling’’ (see Bollen [1989] and Browne and Arminger [1995] for a discussion of these and other extensions).

Event History Analysis. Many sociological problems deal with the occurrence of an event. For example, does a divorce occur? When is one job given up for another? In such problems, the outcome to be explained is the time when the event occurred. While it is possible to analyze such data with regression, that method is flawed in two basic respects. First, event data often are censored. That is, for some members of the sample the event being predicted may not have occurred, and consequently a specific time for the event is missing. Even assuming that the censored time is a large number to reflect the fact that the event has not occurred, this will misrepresent cases in which the event occurred shortly after the end of the study. If one assigns a number equal to the time when the data collection ends or excludes those for whom the event has not occurred, the time of the event will be underestimated. Standard regression cannot deal adequately with censoring problems. Second, the regression model generally assumes that the errors in predicting the outcome are normally distributed, which is generally unrealistic for event data. Statistical methods for dealing with these problems began to appear in the 1950s and were introduced to sociologists in substantive papers examining social mobility (Spilerman 1972; Sorensen 1975; Tuma 1976). Applications of these methods were encouraged by the publication in 1976 of Tuma’s program RATE for event history analysis (Tuma and Crockford 1976). Since that time, event history analysis has become a major form of analysis and an area in which sociologists have made substantial contributions (see Allison [1995] and Petersen [1995] for reviews of these methods).
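The censoring problem described above can be made concrete with a small simulation. The sketch assumes exponentially distributed event times with a true mean of 10 and a study that ends at time 8; these numbers, and the use of the exponential maximum likelihood estimator as the censoring-aware alternative, are illustrative assumptions rather than the methods of the papers cited.

```python
import random

random.seed(0)

TRUE_MEAN = 10.0   # hypothetical true mean time until the event
STUDY_END = 8.0    # hypothetical end of data collection

durations = [random.expovariate(1 / TRUE_MEAN) for _ in range(5000)]
observed = [min(t, STUDY_END) for t in durations]   # what the study records
event_seen = [t <= STUDY_END for t in durations]    # False means censored

# Naive approach 1: drop cases whose event had not yet occurred.
completed = [t for t, seen in zip(observed, event_seen) if seen]
mean_dropping_censored = sum(completed) / len(completed)

# Naive approach 2: treat the end of the study as the event time.
mean_censored_at_end = sum(observed) / len(observed)

# Censoring-aware estimate for an exponential model:
# total time at risk divided by the number of observed events.
mle_mean = sum(observed) / sum(event_seen)

print(f"dropping censored cases: {mean_dropping_censored:.2f}")
print(f"censored set to end:     {mean_censored_at_end:.2f}")
print(f"censoring-aware MLE:     {mle_mean:.2f}")
```

Both naive summaries fall well below the true mean, as the text predicts, while the estimate that counts censored cases as time at risk without an event stays close to it.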

Categorical and Limited Dependent Variables. If the dependent variable is binary, nominal, ordinal, count, or censored, the usual assumptions of the regression model are violated and estimates are biased. Some of these cases can be handled by the methods discussed above. Event history analysis deals with certain types of censored variables; loglinear analysis deals with binary, nominal, count, and ordinal variables when the independent variables are all nominal. Many other cases exist that require additional methods. These methods are called quantal response models or models for categorical, limited, or qualitative dependent variables. Since the types of dependent variables analyzed by these methods occur frequently in the social sciences, they have received a great deal of attention from econometricians and sociologists (see Maddala [1983] and Long [1997] for reviews of these models and Cameron and Trivedi [1998] on count models).

Perhaps the simplest of these methods is logit analysis, in which the dependent variable is binary or nominal with a combination of interval and nominal independent variables. Logit analysis was introduced to sociologists by Theil (1970). Probit analysis is a related technique that is based on slightly different assumptions. McKelvey and Zavoina (1975) extend the logit and probit models to ordinal outcomes. A particularly important type of limited dependent variable occurs when the sample is selected nonrandomly. For example, in panel studies, cases that do not respond to each wave may be dropped from the analysis. If those who do not respond to each wave differ nonrandomly from those who do respond (e.g., those who are lost because of moving may differ from those who do not move), the resulting sample is not representative. To use an example from a review article by Berk (1983), in cases of domestic violence, police may write a report only if the violence exceeds some minimum level, and the resulting sample is biased to exclude cases with lower levels of violence. Regression estimates based on this sample will be biased. Heckman’s (1979) influential paper stimulated the development of sample selection models, which were introduced to sociologists by Berk (1983). These and many other models for limited dependent variables are extremely well suited to sociological problems. With the increasing availability of software for these models, their use is becoming more common than even that of the standard regression model.
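The bias produced by nonrandom selection, as in the police-report example above, can be seen in a small simulation. The model below (a linear relationship with slope 0.5, normal errors, and cases recorded only when the outcome exceeds 1.5) is entirely hypothetical, and the code only demonstrates the bias; it does not implement Heckman's correction.

```python
import random


def ols_slope(xs, ys):
    """Least-squares slope of ys on xs."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx


random.seed(1)

TRUE_SLOPE = 0.5         # hypothetical effect of x on the outcome
REPORT_THRESHOLD = 1.5   # hypothetical level above which a case is recorded

xs = [random.gauss(0, 1) for _ in range(5000)]
ys = [1.0 + TRUE_SLOPE * x + random.gauss(0, 1) for x in xs]

# Only cases whose outcome exceeds the threshold enter the sample.
reported = [(x, y) for x, y in zip(xs, ys) if y > REPORT_THRESHOLD]

full_slope = ols_slope(xs, ys)
selected_slope = ols_slope([x for x, _ in reported], [y for _, y in reported])

print(f"slope, all cases:      {full_slope:.2f}")
print(f"slope, reported cases: {selected_slope:.2f}")
```

In this setup the slope estimated from the recorded cases alone is pulled well toward zero, which illustrates why regression on a selected sample can mislead and why selection models were developed.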

Latent Structure Analysis. The objective of latent structure analysis is the same as that of factor analysis: to explain covariation among a larger number of observed variables in terms of a smaller number of latent variables. The difference is that factor analysis applies to interval-level observed and latent variables, whereas latent structure analysis applies to observed data that are noninterval. As part of the American soldier study, Paul F. Lazarsfeld, Sam Stouffer, Louis Guttman, and others developed techniques for ‘‘factor analyzing’’ nominal data. While many methods were developed, latent structure analysis has emerged as the most popular. Lazarsfeld coined the term ‘‘latent structure analysis’’ to refer to techniques for extracting latent variables from observed variables obtained from survey research. The specific techniques depend on the characteristics of the observed and latent variables. If both are continuous, the method is called factor analysis, as was discussed above. If both are discrete, the method is called latent class analysis. If the factors are continuous but the observed data are discrete, the method is termed latent trait analysis. If the factors are discrete but the data are continuous, the method is termed latent profile analysis. The classic presentation of these methods is Lazarsfeld and Henry’s Latent Structure Analysis (1968). Although these developments were important and their methodological concerns were clearly sociological, these ideas had few applications during the next twenty years. While the programs ECTA, RATE, and LISREL stimulated applications of the loglinear, event history, and covariance structure models, respectively, the lack of software for latent structure analysis inhibited its use. This changed with Goodman’s (1974) algorithms for estimation and Clogg’s (1977) program MLLSA for estimating the models. Substantive applications began appearing in the 1980s, and the entire area of latent structure analysis has become a major focus of statistical work.
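To make the latent class case (discrete latent variable, discrete observed items) concrete, the following sketch evaluates a two-class model under the usual local independence assumption: within a class, the items are independent, so the probability of a response pattern is a weighted sum over classes of products of item probabilities. The class proportions, item probabilities, and function name are hypothetical values chosen for illustration; the sketch does not estimate anything and is not the algorithm used by MLLSA.

```python
from itertools import product

def pattern_probability(pattern, class_weights, item_probs):
    """Return (P(pattern), posterior class probabilities) for binary items.

    class_weights[c] is the proportion of the population in latent class c.
    item_probs[c][j] is P(item j = 1 | class c).
    """
    joint = []
    for weight, probs in zip(class_weights, item_probs):
        likelihood = weight
        for response, p in zip(pattern, probs):
            likelihood *= p if response == 1 else (1.0 - p)
        joint.append(likelihood)
    total = sum(joint)
    posterior = [value / total for value in joint]
    return total, posterior

# Hypothetical two-class model for three yes/no survey items.
class_weights = [0.6, 0.4]
item_probs = [
    [0.9, 0.8, 0.7],  # class 0: likely to answer "yes" to each item
    [0.2, 0.1, 0.3],  # class 1: unlikely to answer "yes"
]

prob_all_yes, posterior_all_yes = pattern_probability(
    (1, 1, 1), class_weights, item_probs
)

# The probabilities of all 2**3 response patterns should sum to one.
total_probability = sum(
    pattern_probability(pattern, class_weights, item_probs)[0]
    for pattern in product((0, 1), repeat=3)
)
```

The posterior probabilities show how an observed response pattern is assigned to a latent class once the model parameters are known. Estimation reverses this logic and iterates between class assignment and parameter updates.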

Multilevel and Panel Models. In most of the models discussed here, observations are assumed to be independent. This assumption can be violated for many reasons. For example, in panel data, the same individual is measured at multiple time points, and in studies of schools, all the children in each classroom may be included in the sample. Observations in a single classroom or for the same person over time tend to be more similar than are independent observations. The problems caused by the lack of independence are addressed by a variety of related methods that gained rapid acceptance beginning in the 1980s, when practical issues of estimation were solved. When the focus is on clustering within social groups (such as schools), the methods are known variously as hierarchical models, random coefficient models, and multilevel methods. When the focus is on clustering within panel data, the methods are referred to as models for cross-section and time series data, or simply panel analysis. The terms ‘‘fixed and random effects models’’ and ‘‘covariance component models’’ also are used. (See Hsiao [1995] for a review of panel models for continuous outcomes and Hamerle and Ronning [1995] for panel models for categorical outcomes. Bryk and Raudenbush [1992] review hierarchical linear models.)
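One common way to quantify how far clustered observations depart from independence is the intraclass correlation, the share of total variance that lies between groups. The sketch below uses the standard one-way analysis of variance estimator for equal-sized groups, together with the design effect 1 + (n - 1) × ICC. The test scores, classroom grouping, and function name are invented for illustration, and this is a descriptive calculation, not a full multilevel model.

```python
import statistics

def intraclass_correlation(groups):
    """One-way ANOVA estimate of the ICC for equal-sized groups."""
    k = len(groups)       # number of groups (e.g., classrooms)
    n = len(groups[0])    # observations per group
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes equal group sizes")
    group_means = [statistics.fmean(g) for g in groups]
    grand_mean = statistics.fmean([v for g in groups for v in g])
    # Mean square between groups and mean square within groups.
    msb = n * sum((m - grand_mean) ** 2 for m in group_means) / (k - 1)
    msw = sum(
        (v - m) ** 2 for g, m in zip(groups, group_means) for v in g
    ) / (k * (n - 1))
    return (msb - msw) / (msb + (n - 1) * msw)

# Hypothetical test scores for four pupils in each of three classrooms.
classrooms = [
    [70, 72, 68, 71],
    [80, 82, 79, 81],
    [60, 63, 61, 62],
]

icc = intraclass_correlation(classrooms)
# Design effect: factor by which clustering inflates the sampling variance
# of a mean relative to a simple random sample of the same size.
design_effect = 1 + (len(classrooms[0]) - 1) * icc
```

An intraclass correlation near zero suggests that treating observations as independent does little harm. A value near one, as in these made-up data, means pupils in the same classroom carry largely redundant information, so standard errors that ignore the clustering would be too small.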

Computer-Intensive Methods. The availability of cheap computing has led to the rapid development and application of computer-intensive methods that will change the way data are analyzed over the next decade. Methods of resampling, such as the bootstrap and the jackknife, allow practical solutions to previously intractable problems of statistical inference (Efron and Tibshirani 1993). This is done by recomputing a test statistic perhaps 1,000 times, using artificially constructed data sets. Computational algorithms for Bayesian analysis replace difficult or impossible algebraic derivations with computer-intensive simulation methods, such as Markov chain Monte Carlo algorithms, including the Gibbs sampler and the Metropolis algorithm (Gelman et al. 1995). Related developments have occurred in the treatment of missing data, with applications of the EM algorithm and Markov chain Monte Carlo techniques (Schafer 1997).
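The resampling idea can be sketched in a few lines. The example below draws 1,000 artificial data sets by sampling the observed data with replacement, recomputes the median on each, and uses the spread of those replicates as a standard error and a simple percentile interval. The income figures, the choice of the median, and the function names are assumptions made for illustration; the percentile interval is only one of several bootstrap intervals.

```python
import random
import statistics

def bootstrap_replicates(data, statistic, n_resamples=1000, seed=0):
    """Recompute `statistic` on resamples drawn with replacement from `data`."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(data)
    replicates = []
    for _ in range(n_resamples):
        resample = [data[rng.randrange(n)] for _ in range(n)]
        replicates.append(statistic(resample))
    return replicates

def percentile_interval(replicates, level=0.95):
    """Simple percentile interval taken from the sorted replicates."""
    ordered = sorted(replicates)
    tail = (1.0 - level) / 2.0
    lower = ordered[int(tail * len(ordered))]
    upper = ordered[min(len(ordered) - 1, int((1.0 - tail) * len(ordered)))]
    return lower, upper

# Hypothetical incomes (in thousands); the median has no simple
# standard error formula, which is where resampling helps.
incomes = [12, 15, 18, 21, 22, 25, 27, 30, 34, 41, 48, 95]

reps = bootstrap_replicates(incomes, statistics.median)
std_error = statistics.stdev(reps)
low, high = percentile_interval(reps)
```

Any statistic that can be computed from a sample can be passed in place of the median, which is why the approach suits estimators whose standard errors are hard to derive analytically.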

Other Developments. The methods discussed above represent the major developments in statistical methods in sociology since the 1960s. With the rapid development of mathematical statistics and advances in computing, new methods have continued to appear. Major advances have been made in the treatment of missing data (Little and Rubin 1987). Developments in statistical graphics (Cleveland 1985) are reflected in the increasing number of graphics appearing in sociological journals. Methods that require less restrictive distributional assumptions and are less sensitive to errors in the data being analyzed are now computationally feasible. Robust methods have been developed that are insensitive to small departures from the underlying assumptions (Rousseeuw and Leroy 1987). Resampling methods (e.g., bootstrap methods) let the observed data assume the role of the underlying population. This allows estimation of standard errors and confidence intervals when the underlying distributional assumptions (e.g., normality) are unrealistic or the formulas for computing standard errors are intractable (Stine 1990). Recent work by Muthén (forthcoming) and others combines the structural component of the regression model, latent variables from factor and latent structure models, hierarchical modeling, and characteristics of limited variables into a single model. The development of Mplus (Muthén and Muthén 1998) makes routine application of this general model feasible.

CONCLUSIONS

The introduction of structural equation models in the 1960s changed the way sociologists viewed data and viewed the social world. Statistical developments in areas such as econometrics, biometrics, and psychometrics were imported directly into sociology. At the same time, other methods were developed by sociologists to deal with substantive problems of concern to sociology. Necessary conditions for these changes were the steady decline in the cost of computing, the development of efficient numerical algorithms, and the availability of specialized software. Without developments in computing, these methods would be of little use to substantive researchers. As the power of desktop computers grows and the ease and flexibility of statistical packages increase, the application of sophisticated statistical methods has become more accessible to the average researcher than the card sorter was for constructing contingency tables in the 1950s and 1960s. As computing power continues to develop, new and promising methods are appearing with each issue of the journals in this area.

Acceptance of these methods has not been universal or without costs. Critiques of the application of quantitative methods have been written by both sympathetic (Lieberson 1985; Duncan 1984) and unsympathetic (Coser 1975) sociologists as well as statisticians (Freedman 1987) and econometricians (Leamer 1983). While these critiques have made practitioners rethink their approaches, the developments in quantitative methods that took shape in the 1960s will continue to influence sociological practice for decades to come.

REFERENCES

Agresti, Alan 1990 Categorical Data Analysis. New York: Wiley.

Allison, Paul D. 1995 Survival Analysis Using the SAS® System: A Practical Guide. Cary, NC: SAS Institute.

Anderson, T. W. 1958 An Introduction to Multivariate Statistical Analysis. New York: Wiley.

Bartholomew, D. J. 1967 Stochastic Models for Social Processes. New York: Wiley.

Belsley, David A., Edwin Kuh, and Roy E. Welsch 1980 Regression Diagnostics: Identifying Influential Data and Sources of Collinearity. New York: Wiley.

Berk, R. A. 1983 ‘‘An Introduction to Sample Selection Bias in Sociological Data.’’ American Sociological Review 48:386–398.

Bielby, William T., and Robert M. Hauser 1977 ‘‘Structural Equation Models.’’ Annual Review of Sociology 3:137–161.

Birch, M. W. 1963 ‘‘Maximum Likelihood in Three-Way Contingency Tables.’’ Journal of the Royal Statistical Society Series B 27:220–233.

Bishop, Y. M. M., S. E. Fienberg, and P. W. Holland 1975 Discrete Multivariate Analysis: Theory and Practice. Cambridge, Mass.: MIT Press.

Blalock, Hubert M., Jr. 1960 Social Statistics. New York: McGraw-Hill.

——— 1964 Causal Inferences in Nonexperimental Research. Chapel Hill: University of North Carolina Press.

———, ed. 1971 Causal Models in the Social Sciences. Chicago: Aldine.

Blau, Peter M., and Otis Dudley Duncan 1967 The American Occupational Structure. New York: Wiley.

Blumen, I., M. Kogan, and P. J. McCarthy 1955 Industrial Mobility of Labor as a Probability Process. Cornell Studies of Industrial and Labor Relations, vol. 6. Ithaca, N.Y.: Cornell University Press.

Bollen, Kenneth A. 1989 Structural Equations with Latent Variables. New York: Wiley.

Borgatta, Edgar F., and George W. Bohrnstedt, eds. 1969 Sociological Methodology. San Francisco: Jossey-Bass.

———, eds. 1972 Sociological Methods and Research. Beverly Hills, Calif.: Sage.

Box, George E. P., and Gwilym M. Jenkins 1970 Time Series Analysis. San Francisco: Holden-Day.

Browne, Michael W., and Gerhard Arminger 1995 ‘‘Specification and Estimation of Mean- and Covariance-Structure Models.’’ In Gerhard Arminger, Clifford C. Clogg, and Michael E. Sobel, eds., Handbook of Statistical Modeling for the Social and Behavioral Sciences. New York: Plenum.

Bryk, Anthony S., and Stephen W. Raudenbush 1992 Hierarchical Linear Models: Applications and Data Analysis Methods. Newbury Park, Calif.: Sage.

Cameron, A. Colin, and Pravin K. Trivedi 1998 Regression Analysis of Count Data. New York: Cambridge University Press.

Cleveland, William S. 1985 The Elements of Graphing Data. Monterey, Calif.: Wadsworth.

Clogg, Clifford C. 1977 MLLSA: Maximum Likelihood Latent Structure Analysis. State College: Pennsylvania State University.

Coleman, James S. 1964 Introduction to Mathematical Sociology. Glencoe, Ill.: Free Press.

Coser, Lewis A. 1975 ‘‘Presidential Address: Two Methods in Search of Substance.’’ American Sociological Review 40:691–700.

Cramér, Harald 1946 Mathematical Methods of Statistics. Princeton, N.J.: Princeton University Press.

Dixon, W. J., chief ed. 1981 BMD Statistical Software. Berkeley: University of California Press.

Doob, J. L. 1953 Stochastic Processes. New York: Wiley.

Duncan, Otis Dudley 1966 ‘‘Path Analysis: Sociological Examples.’’ American Journal of Sociology 72:1–16.

——— 1984 Notes on Social Measurement: Historical and Critical. New York: Russell Sage Foundation.

Efron, Bradley, and Robert J. Tibshirani 1993 An Introduction to the Bootstrap. New York: Chapman and Hall.

Fay, Robert, and Leo A. Goodman 1974 ECTA: Everyman’s Contingency Table Analysis.

Finney, D. J. 1952 Probit Analysis, 2nd ed. Cambridge, UK: Cambridge University Press.

Fisher, R. A. 1925 Statistical Methods for Research Workers. Edinburgh: Oliver and Boyd.

Freedman, David A. 1987 ‘‘As Others See Us: A Case Study in Path Analysis.’’ Journal of Educational Statistics 12:101–128.

Gelman, Andrew, John B. Carlin, Hal S. Stern, and Donald B. Rubin 1995 Bayesian Data Analysis. New York: Chapman and Hall.

Goodman, Leo A. 1964 ‘‘Simple Methods of Analyzing Three-Factor Interaction in Contingency Tables.’’ Journal of the American Statistical Association 58:319–352.

——— 1972 ‘‘A Modified Multiple Regression Approach to the Analysis of Dichotomous Variables.’’ American Sociological Review 37:28–46.

——— 1974 ‘‘The Analysis of Systems of Qualitative Variables When Some of the Variables Are Unobservable. Part I: A Modified Latent Structure Approach.’’ American Journal of Sociology 79:1179–1259.

———, and William H. Kruskal 1979 Measures of Association for Cross Classification. New York: Springer-Verlag.
