- System classifications

- 5th semester:

- 3rd module: Performance parameters of control systems

- 12 lectures, 4 x 6 (24 hours) of laboratory work

- 4th module: Modern automatic control theory

- 1.1 General systems ideas

- 1.2 What is control theory? - an initial discussion

- 1.3 What is automatic control?

- 1.4 Some examples of control systems

- 2 Mathematical basics of automatic control theory

- Components

- 3 System classifications

- Linear Systems control

- Nonlinear Systems control

- Decentralized Systems

- Main control strategies

- Intelligent control

2 Mathematical basics of automatic control theory

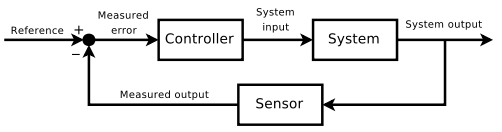

Automatic control is the research area and theoretical base for mechanization and automation, employing methods from mathematics and engineering. A central concept is that of the system to be controlled, such as a rudder, propeller or ballistic missile. The systems studied within automatic control are mostly linear systems. Automatic control is also a methodology or philosophy for analyzing and designing a system in which a controller self-regulates the operating conditions or parameters of a plant (such as a machine or a process) with minimal human intervention. A regulator such as a thermostat is an example of a device studied in automatic control.

Components

Sensor(s), which measure some physical state such as temperature or liquid level.

Responder(s), which may be simple electrical or mechanical systems, complex special-purpose digital controllers, or general-purpose computers.

Actuator(s), which effect a response to the sensor(s) under the command of the responder, for example, by controlling a gas flow to a burner in a heating system or electricity to a motor in a refrigerator or pump.
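The interplay of the three components can be sketched with a small simulation of a thermostat, the regulator mentioned earlier. This is only an illustration: the first-order room model, the hysteresis band, and every numeric value (`tau`, `heater_gain`, `t_ambient`, and so on) are assumptions made for the example, not values from the course.

```python
def simulate_thermostat(setpoint=20.0, hysteresis=0.5, t_ambient=5.0,
                        t_initial=15.0, heater_gain=30.0, tau=600.0,
                        dt=1.0, steps=7200):
    """Simulate an on/off thermostat regulating a first-order room model.

    All parameters are illustrative assumptions (temperatures in deg C,
    times in seconds). Returns a list of (temperature, heater_on) pairs.
    """
    temperature = t_initial
    heater_on = False
    history = []
    for _ in range(steps):
        # Sensor: measure the physical state (assumed noise-free here).
        measured = temperature

        # Responder: on/off decision with a hysteresis band around the setpoint.
        if measured < setpoint - hysteresis:
            heater_on = True
        elif measured > setpoint + hysteresis:
            heater_on = False

        # Actuator: the heater raises the temperature the room settles at.
        target = t_ambient + (heater_gain if heater_on else 0.0)

        # Plant: first-order thermal lag, integrated with a forward-Euler step.
        temperature += dt * (target - temperature) / tau
        history.append((temperature, heater_on))
    return history


if __name__ == "__main__":
    final_temperature, final_heater_state = simulate_thermostat()[-1]
    print(final_temperature, final_heater_state)
```

After the initial warm-up, the temperature in this sketch cycles inside a narrow band around the setpoint, with the heater switching on and off without human intervention.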

Building Basic Principles: From Classical to Modern.

Our goal is to present a clear exposition of the basic principles of frequency- and time-domain design techniques.

The classical methods of control engineering are thoroughly covered:

Laplace transforms and transfer functions;

root locus design;

Routh-Hurwitz stability analysis;

frequency response methods, including Bode, Nyquist, and Nichols;

steady-state error for standard test signals;

second-order system approximations;

phase and gain margin;

control system bandwidth.
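As an illustration of one item in this list, the Routh-Hurwitz test can be sketched in a few lines of Python. The function names `routh_first_column` and `count_rhp_roots` are hypothetical, and the sketch deliberately leaves out the special cases (a zero in the first column, or an all-zero row), which need extra treatment.

```python
def routh_first_column(coeffs):
    """Return the first column of the Routh array of a polynomial.

    coeffs: coefficients in descending powers of s, e.g. [1, 6, 11, 6]
    for s^3 + 6 s^2 + 11 s + 6. Special cases are not handled: a zero
    pivot raises ValueError instead.
    """
    n = len(coeffs)
    width = (n + 1) // 2
    # The first two rows hold the even- and odd-indexed coefficients.
    row0 = [float(c) for c in coeffs[0::2]]
    row1 = [float(c) for c in coeffs[1::2]]
    row0 += [0.0] * (width - len(row0))
    row1 += [0.0] * (width - len(row1))
    rows = [row0, row1]
    for _ in range(n - 2):
        prev2, prev1 = rows[-2], rows[-1]
        if prev1[0] == 0.0:
            raise ValueError("zero pivot: special case not handled in this sketch")
        # Each new entry is a 2x2 determinant divided by the pivot prev1[0].
        new = [(prev1[0] * prev2[j + 1] - prev2[0] * prev1[j + 1]) / prev1[0]
               for j in range(width - 1)]
        new.append(0.0)
        rows.append(new)
    return [row[0] for row in rows]


def count_rhp_roots(coeffs):
    """Count right-half-plane roots as sign changes in the first column."""
    column = routh_first_column(coeffs)
    return sum(1 for a, b in zip(column, column[1:]) if a * b < 0)


if __name__ == "__main__":
    # (s + 1)(s + 2)(s + 3): first column [1.0, 6.0, 10.0, 6.0], no sign change.
    print(routh_first_column([1, 6, 11, 6]), count_rhp_roots([1, 6, 11, 6]))
    # (s + 2)(s^2 - s + 4): two sign changes, hence two unstable roots.
    print(count_rhp_roots([1, 1, 2, 8]))
```

The appeal of the method is that stability is decided from the coefficients alone, without computing the roots.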

In addition, coverage of the state variable method is significant. Fundamental notions of controllability and observability for state variable models are discussed. Full state feedback design with Ackermann's formula for pole placement is presented, along with a discussion of the limitations of state variable feedback. Observers are introduced as a means of providing state estimates when the complete state is not measured.
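A minimal sketch of the controllability test and of Ackermann's formula for a single-input system follows, using NumPy. The helper names `controllability_matrix` and `ackermann_gain`, and the double-integrator example with its pole locations, are assumptions chosen for illustration. The formula used is K = [0 ... 0 1] Co^{-1} phi(A), where Co is the controllability matrix and phi is the desired characteristic polynomial.

```python
import numpy as np


def controllability_matrix(A, B):
    """Build Co = [B, AB, ..., A^(n-1) B]."""
    n = A.shape[0]
    return np.hstack([np.linalg.matrix_power(A, k) @ B for k in range(n)])


def ackermann_gain(A, B, desired_poles):
    """State feedback gain K (u = -K x) for a single-input system.

    Sketch only: assumes B has one column and that complex desired poles
    come in conjugate pairs, so the characteristic polynomial is real.
    """
    n = A.shape[0]
    ctrb = controllability_matrix(A, B)
    if np.linalg.matrix_rank(ctrb) < n:
        raise ValueError("system is not controllable")
    # Coefficients of the desired characteristic polynomial, highest power first.
    coeffs = np.real(np.poly(desired_poles))
    # phi(A) = A^n + a_(n-1) A^(n-1) + ... + a_0 I
    phi_A = sum(c * np.linalg.matrix_power(A, n - i) for i, c in enumerate(coeffs))
    # Selecting the last row of Co^{-1} phi(A) implements [0 ... 0 1] Co^{-1} phi(A).
    last_row = np.zeros((1, n))
    last_row[0, -1] = 1.0
    return last_row @ np.linalg.solve(ctrb, phi_A)


if __name__ == "__main__":
    # Double integrator (assumed example): x1' = x2, x2' = u.
    A = np.array([[0.0, 1.0], [0.0, 0.0]])
    B = np.array([[0.0], [1.0]])
    K = ackermann_gain(A, B, [-2 + 2j, -2 - 2j])
    print(K)                             # gain for s^2 + 4 s + 8, i.e. [[8. 4.]]
    print(np.linalg.eigvals(A - B @ K))  # closed-loop poles
```

One limitation is visible in the formula itself: it inverts the controllability matrix, which can be badly conditioned for higher-order systems, so other numerical methods are generally preferred beyond small examples.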

3 System classifications

Linear Systems control

For MIMO systems, pole placement can be performed mathematically by using a state-space representation of the open-loop system and calculating a feedback matrix that assigns the poles to the desired positions. In complicated systems this can require computer-assisted calculation, and it cannot always ensure robustness. Furthermore, not all system states are generally measured, so observers must be included and incorporated into the pole placement design.
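How an observer fits into a pole placement design can be sketched with NumPy. For brevity the example is single-input, single-output rather than MIMO: a double integrator with only the position measured. The gains `K` and `L` are hand-computed assumptions for illustrative pole locations (controller poles at -2 ± 2j, observer poles at -6 and -8), and `observer_based_closed_loop` is a hypothetical helper name.

```python
import numpy as np


def observer_based_closed_loop(A, B, C, K, L):
    """Closed-loop matrix for the stacked state [x; x_hat].

    Plant:      x'     = A x + B u,   y = C x
    Observer:   x_hat' = A x_hat + B u + L (y - C x_hat)
    Controller: u      = -K x_hat
    """
    top = np.hstack([A, -B @ K])
    bottom = np.hstack([L @ C, A - B @ K - L @ C])
    return np.vstack([top, bottom])


# Assumed example: double integrator, only the first state is measured.
A = np.array([[0.0, 1.0], [0.0, 0.0]])
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])

# Hand-computed gains (assumptions for this sketch):
# det(sI - (A - B K)) = s^2 + 4 s + 8   -> poles at -2 +/- 2j
# det(sI - (A - L C)) = s^2 + 14 s + 48 -> poles at -6 and -8
K = np.array([[8.0, 4.0]])
L = np.array([[14.0], [48.0]])

closed_loop = observer_based_closed_loop(A, B, C, K, L)
poles = np.sort_complex(np.linalg.eigvals(closed_loop))

if __name__ == "__main__":
    print(poles)
```

The closed-loop poles are the union of the state feedback poles and the observer poles, so the two gains can be designed separately. This holds for the nominal model only and says nothing about robustness to model error.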

Nonlinear Systems control

Processes in industries such as robotics and aerospace typically have strongly nonlinear dynamics. In control theory it is sometimes possible to linearize such systems and apply linear techniques, but in many cases it is necessary to devise from scratch theories that permit the control of nonlinear systems. These techniques (e.g., feedback linearization, backstepping, sliding mode control, and trajectory linearization control) normally take advantage of results based on Lyapunov's theory. Differential geometry has been widely used as a tool for generalizing well-known linear control concepts to the nonlinear case, as well as for showing the subtleties that make the nonlinear case a more challenging problem.
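Feedback linearization, one of the techniques named above, can be illustrated on a simple pendulum. In the sketch below, the inner control law cancels the gravity term so that the remaining dynamics are a linear double integrator, which an outer linear law then stabilizes. The frictionless pendulum model, the perfect cancellation, the gains `k1` and `k2`, and all numeric values are assumptions made for the example.

```python
import math


def simulate_feedback_linearized_pendulum(theta0=0.0, theta_ref=math.pi / 2,
                                          k1=4.0, k2=4.0, g=9.81, length=1.0,
                                          mass=1.0, dt=0.001, t_end=10.0):
    """Drive a pendulum to theta_ref with a feedback-linearizing torque.

    Assumed model: theta'' = -(g / l) sin(theta) + torque / (m l^2).
    Returns the final (theta, omega).
    """
    theta, omega = theta0, 0.0
    inertia = mass * length ** 2
    for _ in range(int(round(t_end / dt))):
        # Outer loop: linear law for the linearized dynamics theta'' = v.
        v = -k1 * (theta - theta_ref) - k2 * omega
        # Inner loop: cancel the gravity nonlinearity (model assumed exact).
        torque = inertia * (v + (g / length) * math.sin(theta))
        # Plant: nonlinear pendulum dynamics.
        alpha = -(g / length) * math.sin(theta) + torque / inertia
        # Semi-implicit Euler integration step.
        omega += dt * alpha
        theta += dt * omega
    return theta, omega


if __name__ == "__main__":
    print(simulate_feedback_linearized_pendulum())
```

With `k1 = 4` and `k2 = 4`, the tracking error obeys e'' + 4 e' + 4 e = 0, a linear system with a double pole at -2, even though the plant itself is nonlinear. In practice the cancellation is never exact, which is one reason robustness analysis matters for this class of methods.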