- •Preface

- •Biological Vision Systems

- •Visual Representations from Paintings to Photographs

- •Computer Vision

- •The Limitations of Standard 2D Images

- •3D Imaging, Analysis and Applications

- •Book Objective and Content

- •Acknowledgements

- •Contents

- •Contributors

- •2.1 Introduction

- •Chapter Outline

- •2.2 An Overview of Passive 3D Imaging Systems

- •2.2.1 Multiple View Approaches

- •2.2.2 Single View Approaches

- •2.3 Camera Modeling

- •2.3.1 Homogeneous Coordinates

- •2.3.2 Perspective Projection Camera Model

- •2.3.2.1 Camera Modeling: The Coordinate Transformation

- •2.3.2.2 Camera Modeling: Perspective Projection

- •2.3.2.3 Camera Modeling: Image Sampling

- •2.3.2.4 Camera Modeling: Concatenating the Projective Mappings

- •2.3.3 Radial Distortion

- •2.4 Camera Calibration

- •2.4.1 Estimation of a Scene-to-Image Planar Homography

- •2.4.2 Basic Calibration

- •2.4.3 Refined Calibration

- •2.4.4 Calibration of a Stereo Rig

- •2.5 Two-View Geometry

- •2.5.1 Epipolar Geometry

- •2.5.2 Essential and Fundamental Matrices

- •2.5.3 The Fundamental Matrix for Pure Translation

- •2.5.4 Computation of the Fundamental Matrix

- •2.5.5 Two Views Separated by a Pure Rotation

- •2.5.6 Two Views of a Planar Scene

- •2.6 Rectification

- •2.6.1 Rectification with Calibration Information

- •2.6.2 Rectification Without Calibration Information

- •2.7 Finding Correspondences

- •2.7.1 Correlation-Based Methods

- •2.7.2 Feature-Based Methods

- •2.8 3D Reconstruction

- •2.8.1 Stereo

- •2.8.1.1 Dense Stereo Matching

- •2.8.1.2 Triangulation

- •2.8.2 Structure from Motion

- •2.9 Passive Multiple-View 3D Imaging Systems

- •2.9.1 Stereo Cameras

- •2.9.2 3D Modeling

- •2.9.3 Mobile Robot Localization and Mapping

- •2.10 Passive Versus Active 3D Imaging Systems

- •2.11 Concluding Remarks

- •2.12 Further Reading

- •2.13 Questions

- •2.14 Exercises

- •References

- •3.1 Introduction

- •3.1.1 Historical Context

- •3.1.2 Basic Measurement Principles

- •3.1.3 Active Triangulation-Based Methods

- •3.1.4 Chapter Outline

- •3.2 Spot Scanners

- •3.2.1 Spot Position Detection

- •3.3 Stripe Scanners

- •3.3.1 Camera Model

- •3.3.2 Sheet-of-Light Projector Model

- •3.3.3 Triangulation for Stripe Scanners

- •3.4 Area-Based Structured Light Systems

- •3.4.1 Gray Code Methods

- •3.4.1.1 Decoding of Binary Fringe-Based Codes

- •3.4.1.2 Advantage of the Gray Code

- •3.4.2 Phase Shift Methods

- •3.4.2.1 Removing the Phase Ambiguity

- •3.4.3 Triangulation for a Structured Light System

- •3.5 System Calibration

- •3.6 Measurement Uncertainty

- •3.6.1 Uncertainty Related to the Phase Shift Algorithm

- •3.6.2 Uncertainty Related to Intrinsic Parameters

- •3.6.3 Uncertainty Related to Extrinsic Parameters

- •3.6.4 Uncertainty as a Design Tool

- •3.7 Experimental Characterization of 3D Imaging Systems

- •3.7.1 Low-Level Characterization

- •3.7.2 System-Level Characterization

- •3.7.3 Characterization of Errors Caused by Surface Properties

- •3.7.4 Application-Based Characterization

- •3.8 Selected Advanced Topics

- •3.8.1 Thin Lens Equation

- •3.8.2 Depth of Field

- •3.8.3 Scheimpflug Condition

- •3.8.4 Speckle and Uncertainty

- •3.8.5 Laser Depth of Field

- •3.8.6 Lateral Resolution

- •3.9 Research Challenges

- •3.10 Concluding Remarks

- •3.11 Further Reading

- •3.12 Questions

- •3.13 Exercises

- •References

- •4.1 Introduction

- •Chapter Outline

- •4.2 Representation of 3D Data

- •4.2.1 Raw Data

- •4.2.1.1 Point Cloud

- •4.2.1.2 Structured Point Cloud

- •4.2.1.3 Depth Maps and Range Images

- •4.2.1.4 Needle map

- •4.2.1.5 Polygon Soup

- •4.2.2 Surface Representations

- •4.2.2.1 Triangular Mesh

- •4.2.2.2 Quadrilateral Mesh

- •4.2.2.3 Subdivision Surfaces

- •4.2.2.4 Morphable Model

- •4.2.2.5 Implicit Surface

- •4.2.2.6 Parametric Surface

- •4.2.2.7 Comparison of Surface Representations

- •4.2.3 Solid-Based Representations

- •4.2.3.1 Voxels

- •4.2.3.3 Binary Space Partitioning

- •4.2.3.4 Constructive Solid Geometry

- •4.2.3.5 Boundary Representations

- •4.2.4 Summary of Solid-Based Representations

- •4.3 Polygon Meshes

- •4.3.1 Mesh Storage

- •4.3.2 Mesh Data Structures

- •4.3.2.1 Halfedge Structure

- •4.4 Subdivision Surfaces

- •4.4.1 Doo-Sabin Scheme

- •4.4.2 Catmull-Clark Scheme

- •4.4.3 Loop Scheme

- •4.5 Local Differential Properties

- •4.5.1 Surface Normals

- •4.5.2 Differential Coordinates and the Mesh Laplacian

- •4.6 Compression and Levels of Detail

- •4.6.1 Mesh Simplification

- •4.6.1.1 Edge Collapse

- •4.6.1.2 Quadric Error Metric

- •4.6.2 QEM Simplification Summary

- •4.6.3 Surface Simplification Results

- •4.7 Visualization

- •4.8 Research Challenges

- •4.9 Concluding Remarks

- •4.10 Further Reading

- •4.11 Questions

- •4.12 Exercises

- •References

- •1.1 Introduction

- •Chapter Outline

- •1.2 A Historical Perspective on 3D Imaging

- •1.2.1 Image Formation and Image Capture

- •1.2.2 Binocular Perception of Depth

- •1.2.3 Stereoscopic Displays

- •1.3 The Development of Computer Vision

- •1.3.1 Further Reading in Computer Vision

- •1.4 Acquisition Techniques for 3D Imaging

- •1.4.1 Passive 3D Imaging

- •1.4.2 Active 3D Imaging

- •1.4.3 Passive Stereo Versus Active Stereo Imaging

- •1.5 Twelve Milestones in 3D Imaging and Shape Analysis

- •1.5.1 Active 3D Imaging: An Early Optical Triangulation System

- •1.5.2 Passive 3D Imaging: An Early Stereo System

- •1.5.3 Passive 3D Imaging: The Essential Matrix

- •1.5.4 Model Fitting: The RANSAC Approach to Feature Correspondence Analysis

- •1.5.5 Active 3D Imaging: Advances in Scanning Geometries

- •1.5.6 3D Registration: Rigid Transformation Estimation from 3D Correspondences

- •1.5.7 3D Registration: Iterative Closest Points

- •1.5.9 3D Local Shape Descriptors: Spin Images

- •1.5.10 Passive 3D Imaging: Flexible Camera Calibration

- •1.5.11 3D Shape Matching: Heat Kernel Signatures

- •1.6 Applications of 3D Imaging

- •1.7 Book Outline

- •1.7.1 Part I: 3D Imaging and Shape Representation

- •1.7.2 Part II: 3D Shape Analysis and Processing

- •1.7.3 Part III: 3D Imaging Applications

- •References

- •5.1 Introduction

- •5.1.1 Applications

- •5.1.2 Chapter Outline

- •5.2 Mathematical Background

- •5.2.1 Differential Geometry

- •5.2.2 Curvature of Two-Dimensional Surfaces

- •5.2.3 Discrete Differential Geometry

- •5.2.4 Diffusion Geometry

- •5.2.5 Discrete Diffusion Geometry

- •5.3 Feature Detectors

- •5.3.1 A Taxonomy

- •5.3.2 Harris 3D

- •5.3.3 Mesh DOG

- •5.3.4 Salient Features

- •5.3.5 Heat Kernel Features

- •5.3.6 Topological Features

- •5.3.7 Maximally Stable Components

- •5.3.8 Benchmarks

- •5.4 Feature Descriptors

- •5.4.1 A Taxonomy

- •5.4.2 Curvature-Based Descriptors (HK and SC)

- •5.4.3 Spin Images

- •5.4.4 Shape Context

- •5.4.5 Integral Volume Descriptor

- •5.4.6 Mesh Histogram of Gradients (HOG)

- •5.4.7 Heat Kernel Signature (HKS)

- •5.4.8 Scale-Invariant Heat Kernel Signature (SI-HKS)

- •5.4.9 Color Heat Kernel Signature (CHKS)

- •5.4.10 Volumetric Heat Kernel Signature (VHKS)

- •5.5 Research Challenges

- •5.6 Conclusions

- •5.7 Further Reading

- •5.8 Questions

- •5.9 Exercises

- •References

- •6.1 Introduction

- •Chapter Outline

- •6.2 Registration of Two Views

- •6.2.1 Problem Statement

- •6.2.2 The Iterative Closest Points (ICP) Algorithm

- •6.2.3 ICP Extensions

- •6.2.3.1 Techniques for Pre-alignment

- •Global Approaches

- •Local Approaches

- •6.2.3.2 Techniques for Improving Speed

- •Subsampling

- •Closest Point Computation

- •Distance Formulation

- •6.2.3.3 Techniques for Improving Accuracy

- •Outlier Rejection

- •Additional Information

- •Probabilistic Methods

- •6.3 Advanced Techniques

- •6.3.1 Registration of More than Two Views

- •Reducing Error Accumulation

- •Automating Registration

- •6.3.2 Registration in Cluttered Scenes

- •Point Signatures

- •Matching Methods

- •6.3.3 Deformable Registration

- •Methods Based on General Optimization Techniques

- •Probabilistic Methods

- •6.3.4 Machine Learning Techniques

- •Improving the Matching

- •Object Detection

- •6.4 Quantitative Performance Evaluation

- •6.5 Case Study 1: Pairwise Alignment with Outlier Rejection

- •6.6 Case Study 2: ICP with Levenberg-Marquardt

- •6.6.1 The LM-ICP Method

- •6.6.2 Computing the Derivatives

- •6.6.3 The Case of Quaternions

- •6.6.4 Summary of the LM-ICP Algorithm

- •6.6.5 Results and Discussion

- •6.7 Case Study 3: Deformable ICP with Levenberg-Marquardt

- •6.7.1 Surface Representation

- •6.7.2 Cost Function

- •Data Term: Global Surface Attraction

- •Data Term: Boundary Attraction

- •Penalty Term: Spatial Smoothness

- •Penalty Term: Temporal Smoothness

- •6.7.3 Minimization Procedure

- •6.7.4 Summary of the Algorithm

- •6.7.5 Experiments

- •6.8 Research Challenges

- •6.9 Concluding Remarks

- •6.10 Further Reading

- •6.11 Questions

- •6.12 Exercises

- •References

- •7.1 Introduction

- •7.1.1 Retrieval and Recognition Evaluation

- •7.1.2 Chapter Outline

- •7.2 Literature Review

- •7.3 3D Shape Retrieval Techniques

- •7.3.1 Depth-Buffer Descriptor

- •7.3.1.1 Computing the 2D Projections

- •7.3.1.2 Obtaining the Feature Vector

- •7.3.1.3 Evaluation

- •7.3.1.4 Complexity Analysis

- •7.3.2 Spin Images for Object Recognition

- •7.3.2.1 Matching

- •7.3.2.2 Evaluation

- •7.3.2.3 Complexity Analysis

- •7.3.3 Salient Spectral Geometric Features

- •7.3.3.1 Feature Points Detection

- •7.3.3.2 Local Descriptors

- •7.3.3.3 Shape Matching

- •7.3.3.4 Evaluation

- •7.3.3.5 Complexity Analysis

- •7.3.4 Heat Kernel Signatures

- •7.3.4.1 Evaluation

- •7.3.4.2 Complexity Analysis

- •7.4 Research Challenges

- •7.5 Concluding Remarks

- •7.6 Further Reading

- •7.7 Questions

- •7.8 Exercises

- •References

- •8.1 Introduction

- •Chapter Outline

- •8.2 3D Face Scan Representation and Visualization

- •8.3 3D Face Datasets

- •8.3.1 FRGC v2 3D Face Dataset

- •8.3.2 The Bosphorus Dataset

- •8.4 3D Face Recognition Evaluation

- •8.4.1 Face Verification

- •8.4.2 Face Identification

- •8.5 Processing Stages in 3D Face Recognition

- •8.5.1 Face Detection and Segmentation

- •8.5.2 Removal of Spikes

- •8.5.3 Filling of Holes and Missing Data

- •8.5.4 Removal of Noise

- •8.5.5 Fiducial Point Localization and Pose Correction

- •8.5.6 Spatial Resampling

- •8.5.7 Feature Extraction on Facial Surfaces

- •8.5.8 Classifiers for 3D Face Matching

- •8.6 ICP-Based 3D Face Recognition

- •8.6.1 ICP Outline

- •8.6.2 A Critical Discussion of ICP

- •8.6.3 A Typical ICP-Based 3D Face Recognition Implementation

- •8.6.4 ICP Variants and Other Surface Registration Approaches

- •8.7 PCA-Based 3D Face Recognition

- •8.7.1 PCA System Training

- •8.7.2 PCA Training Using Singular Value Decomposition

- •8.7.3 PCA Testing

- •8.7.4 PCA Performance

- •8.8 LDA-Based 3D Face Recognition

- •8.8.1 Two-Class LDA

- •8.8.2 LDA with More than Two Classes

- •8.8.3 LDA in High Dimensional 3D Face Spaces

- •8.8.4 LDA Performance

- •8.9 Normals and Curvature in 3D Face Recognition

- •8.9.1 Computing Curvature on a 3D Face Scan

- •8.10 Recent Techniques in 3D Face Recognition

- •8.10.1 3D Face Recognition Using Annotated Face Models (AFM)

- •8.10.2 Local Feature-Based 3D Face Recognition

- •8.10.2.1 Keypoint Detection and Local Feature Matching

- •8.10.2.2 Other Local Feature-Based Methods

- •8.10.3 Expression Modeling for Invariant 3D Face Recognition

- •8.10.3.1 Other Expression Modeling Approaches

- •8.11 Research Challenges

- •8.12 Concluding Remarks

- •8.13 Further Reading

- •8.14 Questions

- •8.15 Exercises

- •References

- •9.1 Introduction

- •Chapter Outline

- •9.2 DEM Generation from Stereoscopic Imagery

- •9.2.1 Stereoscopic DEM Generation: Literature Review

- •9.2.2 Accuracy Evaluation of DEMs

- •9.2.3 An Example of DEM Generation from SPOT-5 Imagery

- •9.3 DEM Generation from InSAR

- •9.3.1 Techniques for DEM Generation from InSAR

- •9.3.1.1 Basic Principle of InSAR in Elevation Measurement

- •9.3.1.2 Processing Stages of DEM Generation from InSAR

- •The Branch-Cut Method of Phase Unwrapping

- •The Least Squares (LS) Method of Phase Unwrapping

- •9.3.2 Accuracy Analysis of DEMs Generated from InSAR

- •9.3.3 Examples of DEM Generation from InSAR

- •9.4 DEM Generation from LIDAR

- •9.4.1 LIDAR Data Acquisition

- •9.4.2 Accuracy, Error Types and Countermeasures

- •9.4.3 LIDAR Interpolation

- •9.4.4 LIDAR Filtering

- •9.4.5 DTM from Statistical Properties of the Point Cloud

- •9.5 Research Challenges

- •9.6 Concluding Remarks

- •9.7 Further Reading

- •9.8 Questions

- •9.9 Exercises

- •References

- •10.1 Introduction

- •10.1.1 Allometric Modeling of Biomass

- •10.1.2 Chapter Outline

- •10.2 Aerial Photo Mensuration

- •10.2.1 Principles of Aerial Photogrammetry

- •10.2.1.1 Geometric Basis of Photogrammetric Measurement

- •10.2.1.2 Ground Control and Direct Georeferencing

- •10.2.2 Tree Height Measurement Using Forest Photogrammetry

- •10.2.2.2 Automated Methods in Forest Photogrammetry

- •10.3 Airborne Laser Scanning

- •10.3.1 Principles of Airborne Laser Scanning

- •10.3.1.1 Lidar-Based Measurement of Terrain and Canopy Surfaces

- •10.3.2 Individual Tree-Level Measurement Using Lidar

- •10.3.2.1 Automated Individual Tree Measurement Using Lidar

- •10.3.3 Area-Based Approach to Estimating Biomass with Lidar

- •10.4 Future Developments

- •10.5 Concluding Remarks

- •10.6 Further Reading

- •10.7 Questions

- •References

- •11.1 Introduction

- •Chapter Outline

- •11.2 Volumetric Data Acquisition

- •11.2.1 Computed Tomography

- •11.2.1.1 Characteristics of 3D CT Data

- •11.2.2 Positron Emission Tomography (PET)

- •11.2.2.1 Characteristics of 3D PET Data

- •Relaxation

- •11.2.3.1 Characteristics of the 3D MRI Data

- •Image Quality and Artifacts

- •11.2.4 Summary

- •11.3 Surface Extraction and Volumetric Visualization

- •11.3.1 Surface Extraction

- •Example: Curvatures and Geometric Tools

- •11.3.2 Volume Rendering

- •11.3.3 Summary

- •11.4 Volumetric Image Registration

- •11.4.1 A Hierarchy of Transformations

- •11.4.1.1 Rigid Body Transformation

- •11.4.1.2 Similarity Transformations and Anisotropic Scaling

- •11.4.1.3 Affine Transformations

- •11.4.1.4 Perspective Transformations

- •11.4.1.5 Non-rigid Transformations

- •11.4.2 Points and Features Used for the Registration

- •11.4.2.1 Landmark Features

- •11.4.2.2 Surface-Based Registration

- •11.4.2.3 Intensity-Based Registration

- •11.4.3 Registration Optimization

- •11.4.3.1 Estimation of Registration Errors

- •11.4.4 Summary

- •11.5 Segmentation

- •11.5.1 Semi-automatic Methods

- •11.5.1.1 Thresholding

- •11.5.1.2 Region Growing

- •11.5.1.3 Deformable Models

- •Snakes

- •Balloons

- •11.5.2 Fully Automatic Methods

- •11.5.2.1 Atlas-Based Segmentation

- •11.5.2.2 Statistical Shape Modeling and Analysis

- •11.5.3 Summary

- •11.6 Diffusion Imaging: An Illustration of a Full Pipeline

- •11.6.1 From Scalar Images to Tensors

- •11.6.2 From Tensor Image to Information

- •11.6.3 Summary

- •11.7 Applications

- •11.7.1 Diagnosis and Morphometry

- •11.7.2 Simulation and Training

- •11.7.3 Surgical Planning and Guidance

- •11.7.4 Summary

- •11.8 Concluding Remarks

- •11.9 Research Challenges

- •11.10 Further Reading

- •Data Acquisition

- •Surface Extraction

- •Volume Registration

- •Segmentation

- •Diffusion Imaging

- •Software

- •11.11 Questions

- •11.12 Exercises

- •References

- •Index

8 3D Face Recognition |


of the two transforms provided a marginal improvement of around 0.3 % over Haar wavelets alone. If the evaluation was limited to neutral expressions, a reasonable verification scenario, verification rates improved to as high as 99 %. A rank-1 identification rate of 97 % was reported.

8.10.2 Local Feature-Based 3D Face Recognition

Three-dimensional face recognition algorithms that construct a global representation of the face using the full facial area are often termed holistic. Such methods are often quite sensitive to pose, facial expressions and occlusions. For example, a small change in pose can alter the depth maps and normal maps of a 3D face. This error propagates to the feature vector extraction stage (where a feature vector represents a 3D face scan) and subsequently affects matching. For global representations and features to be consistent between multiple 3D face scans of the same identity, the scans must be accurately normalized with respect to pose. We have already discussed some limitations and problems associated with pose normalization in Sect. 8.5.5. Due to the difficulty of accurately localizing fiducial points, pose normalization is never perfect. Even ICP-based pose normalization to a reference 3D face cannot normalize the pose with high repeatability, because dissimilar surfaces have multiple comparable local minima rather than a single, distinctively low global minimum. Another source of error in pose normalization is the non-rigid nature of the face: facial expressions can change the curvatures of a face and displace fiducial points, leading to errors in pose correction.

In contrast to holistic approaches, a second category of face recognition algorithms extracts local features [96] from faces and matches them independently. Although local features have been discussed before for the purpose of fiducial point localization and pose correction, in this section we will focus on algorithms that use local features for 3D face matching. In the case of pose correction, the local features are chosen such that they are generic to the 3D faces of all identities. However, for face matching, local features may be chosen such that they are unique to every identity.

In feature-based face recognition, the first step is to determine the locations from which to extract the local features. Extracting local features at every point would be computationally expensive. However, detecting a subset of points (i.e. keypoints) over the 3D face can significantly reduce the computation time of the subsequent feature extraction and matching phases. Uniform or arbitrary sparse sampling of the face will result in features that are not unique (or sufficiently descriptive), leading to sub-optimal recognition performance. Ideally, keypoints should be repeatably detectable at locations on a 3D face where invariant and descriptive features can be extracted. Moreover, the keypoint identification (if required) and the features should be robust to noise and pose variations.


A. Mian and N. Pears |

8.10.2.1 Keypoint Detection and Local Feature Matching

Mian et al. [65] proposed a keypoint detection and local feature extraction algorithm for 3D face recognition. Details of the technique are as follows. The point cloud is first resampled at uniform intervals of 4 mm on the xy plane. Taking each sample point p as a center, a sphere of radius r is used to crop a local surface from the face. The value of r is chosen as a trade-off between the feature's descriptiveness and its sensitivity to global variations, such as those due to varying facial expression. A larger r results in more descriptive features at the cost of being more sensitive to global variations, and vice versa.

This local point cloud is then translated to zero mean and a decorrelating rotation is applied using the eigenvectors of its covariance matrix, as described earlier for full face scans in Sect. 8.7.1. Equivalently, we could use SVD on the zero-mean data matrix associated with this local point cloud to determine the eigenvectors, as described in Sect. 8.7.2. The result of these operations is that the local points are aligned along their principal axes and we determine the maximum and minimum coordinate values for the first two of these axes (i.e. the two with the largest eigenvalues). This gives a measure of the spread of the local data over these two principal directions.

We compute the difference between these two principal axis spreads and denote it by δ. If the variation in the surface is symmetric, as in the case of a plane or a sphere, the value of δ will be close to zero. However, in the case of asymmetric variation of the surface, δ will have a value dependent on the asymmetry. The depth variation is important for the descriptiveness of the feature and the asymmetry is essential for defining a local coordinate basis for the subsequent extraction of local features.

If the value of δ is greater than a threshold t1, p qualifies as a keypoint. If t1 is set to zero, every point will qualify as a keypoint and, as t1 is increased, the total number of keypoints will decrease. Only two parameters are required for keypoint detection, namely r and t1, which were empirically chosen as r = 20 mm and t1 = 2 mm by Mian et al. [65]. Since the data space is known (i.e. human faces), r and t1 can be chosen easily. Mian et al. [65] demonstrated that this algorithm can detect keypoints with high repeatability on the 3D faces of the same identity. Interestingly, the keypoint locations are different for different individuals, providing a coarse classification between different identities right at the keypoint detection stage. This keypoint detection technique is generic and was later extended to other 3D objects, where the scale of the feature (i.e. r) was chosen automatically [66].
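The keypoint test described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation of [65]: the function names are hypothetical, the point cloud is assumed to be an (N, 3) array in millimetres, and δ is taken as the spread along the first principal axis minus the spread along the second.

```python
import numpy as np

def principal_spread_difference(points, center, r=20.0):
    """Return delta for the local surface cropped by a sphere of radius r.

    points: (N, 3) array in mm; center: (3,) sample point p.
    Returns None if fewer than 3 points fall inside the sphere.
    """
    local = points[np.linalg.norm(points - center, axis=1) <= r]
    if len(local) < 3:
        return None
    local = local - local.mean(axis=0)        # translate to zero mean
    cov = np.cov(local, rowvar=False)         # 3x3 covariance matrix
    _, eigvecs = np.linalg.eigh(cov)          # eigenvalues in ascending order
    axes = eigvecs[:, ::-1][:, :2]            # two axes with largest eigenvalues
    aligned = local @ axes                    # coordinates along those axes
    spread = aligned.max(axis=0) - aligned.min(axis=0)
    return float(spread[0] - spread[1])       # delta

def is_keypoint(points, center, r=20.0, t1=2.0):
    """p qualifies as a keypoint when delta exceeds the threshold t1."""
    delta = principal_spread_difference(points, center, r)
    return delta is not None and delta > t1

# Synthetic check: a flat square patch versus a narrow flat strip.
g = np.arange(-50.0, 51.0)
xx, yy = np.meshgrid(g, g)
flat = np.column_stack([xx.ravel(), yy.ravel(), np.zeros(xx.size)])
strip = flat[np.abs(flat[:, 1]) <= 5.0]
origin = np.zeros(3)
delta_flat = principal_spread_difference(flat, origin)    # symmetric: near zero
delta_strip = principal_spread_difference(strip, origin)  # asymmetric: large
```

The flat patch cropped by the sphere is a disc, so its two spreads are nearly equal, whereas the strip is much longer than it is wide and passes the threshold.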

In PCA-based alignment, there is an inherent two-fold ambiguity. This is resolved by assuming that the faces are roughly upright and the patch is rotated by the smaller of the possible angles to align it with its principal axis. A smooth surface is fitted to the points that have been mapped into a local frame, using the approximation given in [27] for robustness to noise and outliers. The surface is sampled on a uniform 20 × 20 xy lattice. To avoid boundary effects, a larger region is initially cropped using r2 > r for surface fitting using a larger lattice and then only the central 20 × 20 samples covering the r region are used as a feature vector of dimension 400. Note that this feature is essentially a depth map of the local surface defined

8 3D Face Recognition |

355 |

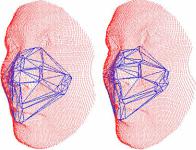

Fig. 8.13 Illustration of a keypoint on a 3D face and its corresponding texture. Figure courtesy of [65]

in the local coordinate basis with the location of the keypoint p as origin and the principal axes as the direction vectors. Figure 8.13 shows a keypoint and its local corresponding feature.

A constant threshold t1 usually results in a different number of keypoints on each 3D face scan. Therefore, an upper limit of 200 is imposed on the total number of local features to prevent bias in the recognition results. To reduce the dimensionality of the features, they are projected to a PCA subspace defined by their most significant eigenvectors. Mian et al. [65] showed that 11 dimensions are sufficient to conserve 99 % of the variance of the features. Thus each face scan is represented by 200 features of dimension 11 each. Local features from the gallery and probe faces are projected to the same 11-dimensional subspace and whitened so that the variation along each dimension is equal. The features are then normalized to unit vectors and the angle between them is used as a matching metric.

For a probe feature, the gallery face feature that forms the minimum angle with it is considered to be its match. Only one-to-one matches are allowed, i.e. if a gallery feature matches more than one probe feature, only the one with the minimum angle is considered. Thus gallery faces generally have a different number of matches with a probe face.
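The projection, whitening and normalization steps can be sketched as follows. This is an illustrative outline only: the function names are hypothetical, the subspace is obtained here from an SVD of the zero-mean feature matrix, and random data stands in for real 400-dimensional local features.

```python
import numpy as np

def fit_whitened_subspace(features, k=11):
    """Learn a k-dimensional PCA subspace from an (N, D) feature matrix."""
    mean = features.mean(axis=0)
    # SVD of the zero-mean data matrix yields the principal directions.
    _, s, vt = np.linalg.svd(features - mean, full_matrices=False)
    basis = vt[:k].T                           # (D, k) most significant directions
    std = s[:k] / np.sqrt(len(features) - 1)   # standard deviation along each one
    return mean, basis, std

def whiten(features, mean, basis, std):
    """Project to the subspace and equalize the variation along each dimension."""
    return (features - mean) @ basis / std

def to_unit(z):
    """Normalize each projected feature to a unit vector."""
    return z / np.linalg.norm(z, axis=1, keepdims=True)

def angle(u, v):
    """Angle in radians between two unit feature vectors."""
    return float(np.arccos(np.clip(u @ v, -1.0, 1.0)))

# Stand-in data: 200 synthetic features of dimension 40 (not real face data).
rng = np.random.default_rng(0)
train = rng.normal(size=(200, 40)) * np.linspace(5.0, 0.1, 40)
mean, basis, std = fit_whitened_subspace(train, k=11)
z = whiten(train, mean, basis, std)
unit = to_unit(z)
```

Gallery and probe features would both be passed through `whiten` with the same `mean`, `basis` and `std`, so that the angles between them are measured in a common subspace.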
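The one-to-one matching rule can be illustrated with a short pure-Python sketch. The function names and the toy 2D unit vectors are assumptions made for illustration; real features would be the whitened, unit-normalized 11-dimensional vectors described above.

```python
import math

def angle(u, v):
    """Angle in radians between two unit vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return math.acos(max(-1.0, min(1.0, dot)))

def match_features(probe, gallery):
    """Return {gallery_index: (probe_index, angle)} with one-to-one matches.

    Each probe feature is matched to the gallery feature at minimum angle.
    If several probe features pick the same gallery feature, only the pair
    with the smallest angle is kept.
    """
    best = {}
    for pi, p in enumerate(probe):
        gi, ang = min(((gi, angle(p, g)) for gi, g in enumerate(gallery)),
                      key=lambda pair: pair[1])
        if gi not in best or ang < best[gi][1]:
            best[gi] = (pi, ang)
    return best

def unit(deg):
    """Toy 2D unit vector at the given angle in degrees."""
    return (math.cos(math.radians(deg)), math.sin(math.radians(deg)))

probe = [unit(0), unit(10), unit(90)]
gallery = [unit(4), unit(80)]
matches = match_features(probe, gallery)
# Probe features 0 and 1 both prefer gallery feature 0; the closer one (probe 0) wins.
```

The number of entries in `matches` is the number of matches between the probe and this gallery face, which is one of the similarity measures used later.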

The matched keypoints of the probe face are meshed using Delaunay triangulation and its edges are used to construct a similar 3D mesh from the corresponding keypoints of the gallery face. If the matches are spatially consistent, the two meshes will be similar (see Fig. 8.14). The similarity between the two meshes is given by:

$$\gamma = \frac{1}{n_\varepsilon} \sum_{i=1}^{n_\varepsilon} \left| \varepsilon_{pi} - \varepsilon_{gi} \right|, \tag{8.42}$$

where εpi and εgi are the lengths of the corresponding edges of the probe and gallery meshes, respectively. The value nε is the number of edges. Note that γ is invariant to pose.
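As a small illustration of Eq. (8.42), the sketch below computes γ from two sets of corresponding keypoints and a shared edge list. The function name and the toy coordinates are hypothetical, and the edge list is assumed to be given (in the method above it comes from the Delaunay triangulation of the probe keypoints).

```python
import math

def mesh_edge_difference(probe_pts, gallery_pts, edges):
    """Mean absolute difference of corresponding edge lengths (gamma).

    probe_pts, gallery_pts: lists of 3D points in corresponding order.
    edges: list of (i, j) vertex index pairs shared by both meshes.
    """
    total = sum(abs(math.dist(probe_pts[i], probe_pts[j]) -
                    math.dist(gallery_pts[i], gallery_pts[j]))
                for i, j in edges)
    return total / len(edges)

probe_pts = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (10, 10, 5)]
edges = [(0, 1), (0, 2), (1, 2), (1, 3), (2, 3)]

# Same keypoints rotated by 90 degrees about z and translated: a pure pose change.
rigid_pts = [(-y + 3, x - 7, z + 1) for x, y, z in probe_pts]
# Same keypoints with one vertex displaced: a spatially inconsistent match.
moved_pts = probe_pts[:3] + [(30, 10, 5)]

gamma_rigid = mesh_edge_difference(probe_pts, rigid_pts, edges)
gamma_moved = mesh_edge_difference(probe_pts, moved_pts, edges)
```

Because γ depends only on edge lengths, the rigidly transformed copy gives a value of zero, which is the pose invariance noted above, while the displaced vertex increases γ.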

Fig. 8.14 Graph of feature points matched between two faces. Figure courtesy of [65]

A fourth similarity measure between the faces is calculated as the mean Euclidean distance d between the corresponding vertices (keypoints) of the meshes after least-squares minimization. The four similarity measures, namely the average angle between the matching features, the number of matches, γ and d, are normalized on a scale of 0 to 1 and combined using a confidence-weighted sum rule:

$$s = \kappa_\theta \bar{\theta} + \kappa_m (1 - m) + \kappa_\gamma \gamma + \kappa_d d, \tag{8.43}$$

where θ̄ is the average angle between the matching features, m is the number of matches, and κx is the confidence in the individual similarity metric x, defined as a ratio between the best and second best matches of the probe face with the gallery. The gallery face with the minimum value of s is declared as the identity of the probe. The algorithm achieved a 96.1 % rank-1 identification rate and a 98.6 % verification rate at 0.1 % FAR on the complete FRGC v2 data set. Restricting the evaluation to neutral expression face scans resulted in a verification rate of 99.4 %.
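The sum rule of Eq. (8.43) can be sketched as follows. Several details here are assumptions made for illustration: min-max scaling over the gallery is used for the 0 to 1 normalization, the confidence weights are passed in rather than computed from the best and second best matches, and all names and numbers are hypothetical.

```python
def min_max(values):
    """Scale a list of scores to the range [0, 1] (assumed normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]

def fused_scores(theta, m, gamma, d, kappa):
    """Combine four per-gallery-face measures into the score s.

    theta, m, gamma, d: lists with one entry per gallery face.
    kappa: dict of confidence weights with keys 'theta', 'm', 'gamma', 'd'.
    The number of matches m enters as (1 - m) so that lower s is better.
    """
    t, mm, g, dd = (min_max(x) for x in (theta, m, gamma, d))
    return [kappa['theta'] * t[i] + kappa['m'] * (1.0 - mm[i])
            + kappa['gamma'] * g[i] + kappa['d'] * dd[i]
            for i in range(len(theta))]

def identify(scores):
    """Index of the gallery face with the minimum fused score."""
    return min(range(len(scores)), key=scores.__getitem__)

# Toy values for three gallery faces (not experimental data).
theta = [0.2, 0.5, 0.6]    # average angle between matched features
m = [40, 12, 9]            # number of matches
gamma = [1.0, 3.5, 4.0]    # mesh edge-length difference
d = [0.8, 2.5, 3.0]        # mean vertex distance after alignment
kappa = {'theta': 1.0, 'm': 1.0, 'gamma': 1.0, 'd': 1.0}

scores = fused_scores(theta, m, gamma, d, kappa)
best = identify(scores)
```

In this toy case the first gallery face is best on every measure, so it receives the minimum score and is returned by `identify`.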

8.10.2.2 Other Local Feature-Based Methods

Another example of local feature-based 3D face recognition is that of Chua et al. [21], who extracted point signatures [20] of the rigid parts of the face for expression-robust 3D face recognition. A point signature is a one-dimensional invariant signature describing the local surface around a point. The signature is extracted by centering a sphere of fixed radius at that point. The intersection of the sphere with the object's surface gives a 3D curve whose orientation can be normalized using its normal and a reference direction. The 3D curve is projected perpendicularly onto a plane fitted to the curve, forming a 2D curve. This projection gives a signed distance profile called the point signature. The starting point of the signature is defined by a vector from the point to where the 3D curve gives the largest positive profile distance. Chua et al. [21] do not provide a detailed experimental analysis of the point signatures for 3D face recognition.

Local features have also been combined with global features to achieve better performance. Xu et al. [90] combined local shape variations with global geometric features to perform 3D face recognition. Finally, Al-Osaimi et al. [4] also combined local and global geometric cues for 3D face recognition. The local features represented local similarities between faces while the global features provided geometric consistency of the spatial organization of the local features.