- •Contents

- •Preface to second edition

- •1 Introduction

- •1.2 Applying technology in an environment

- •1.3 The human role in systems

- •1.4 Ethical issues

- •1.7 Common practice and good practice

- •1.8 Bugs and emergent phenomena

- •1.10 Knowledge is a jigsaw puzzle

- •1.11 To the student

- •1.12 Some road-maps

- •2 System components

- •2.2 Handling hardware

- •2.3 Operating systems

- •2.4 Filesystems

- •2.5 Processes and job control

- •2.6 Networks

- •2.7 IPv4 networks

- •2.8 Address space in IPv4

- •2.9 IPv6 networks

- •3 Networked communities

- •3.1 Communities and enterprises

- •3.2 Policy blueprints

- •3.4 User behavior: socio-anthropology

- •3.5 Clients, servers and delegation

- •3.6 Host identities and name services

- •3.8 Local network orientation and analysis

- •4 Host management

- •4.1 Global view, local action

- •4.2 Physical considerations of server room

- •4.3 Computer startup and shutdown

- •4.5 Installing a Unix disk

- •4.6 Installation of the operating system

- •4.7 Software installation

- •4.8 Kernel customization

- •5 User management

- •5.1 Issues

- •5.2 User registration

- •5.3 Account policy

- •5.4 Login environment

- •5.5 User support services

- •5.6 Controlling user resources

- •5.7 Online user services

- •5.9 Ethical conduct of administrators and users

- •5.10 Computer usage policy

- •6 Models of network and system administration

- •6.5 Creating infrastructure

- •6.7 Competition, immunity and convergence

- •6.8 Policy and configuration automation

- •7.2 Methods: controlling causes and symptoms

- •7.4 Declarative languages

- •7.6 Common assumptions: clock synchronization

- •7.7 Human–computer job scheduling

- •7.9 Preventative host maintenance

- •7.10 SNMP tools

- •7.11 Cfengine

- •8 Diagnostics, fault and change management

- •8.1 Fault tolerance and propagation

- •8.2 Networks and small worlds

- •8.3 Causality and dependency

- •8.4 Defining the system

- •8.5 Faults

- •8.6 Cause trees

- •8.7 Probabilistic fault trees

- •8.9 Game-theoretical strategy selection

- •8.10 Monitoring

- •8.12 Principles of quality assurance

- •9 Application-level services

- •9.1 Application-level services

- •9.2 Proxies and agents

- •9.3 Installing a new service

- •9.4 Summoning daemons

- •9.5 Setting up the DNS nameservice

- •9.7 E-mail configuration

- •9.8 OpenLDAP directory service

- •9.10 Samba

- •9.11 The printer service

- •9.12 Java web and enterprise services

- •10 Network-level services

- •10.1 The Internet

- •10.2 A recap of networking concepts

- •10.3 Getting traffic to its destination

- •10.4 Alternative network transport technologies

- •10.5 Alternative network connection technologies

- •10.6 IP routing and forwarding

- •10.7 Multi-Protocol Label Switching (MPLS)

- •10.8 Quality of Service

- •10.9 Competition or cooperation for service?

- •10.10 Service Level Agreements

- •11 Principles of security

- •11.1 Four independent issues

- •11.2 Physical security

- •11.3 Trust relationships

- •11.7 Preventing and minimizing failure modes

- •12 Security implementation

- •12.2 The recovery plan

- •12.3 Data integrity and protection

- •12.5 Analyzing network security

- •12.6 VPNs: secure shell and FreeS/WAN

- •12.7 Role-based security and capabilities

- •12.8 WWW security

- •12.9 IPSec – secure IP

- •12.10 Ordered access control and policy conflicts

- •12.11 IP filtering for firewalls

- •12.12 Firewalls

- •12.13 Intrusion detection and forensics

- •13 Analytical system administration

- •13.1 Science vs technology

- •13.2 Studying complex systems

- •13.3 The purpose of observation

- •13.5 Evaluating a hierarchical system

- •13.6 Deterministic and stochastic behavior

- •13.7 Observational errors

- •13.8 Strategic analyses

- •13.9 Summary

- •14 Summary and outlook

- •14.3 Pervasive computing

- •B.1 Make

- •B.2 Perl

- •Bibliography

- •Index

11.3. TRUST RELATIONSHIPS |

427 |

11.3 Trust relationships

There are many implicit trust relationships in computer systems. It is crucial to understand them. If we do not understand where we are placing our trust, that trust can be exploited by attackers who have thought more carefully than we have.

For example, any host that shares users’ home-directories (such as an NFS server or DFS server) trusts the identities and personal integrity of the users on the hosts which mount those directories. Some accidents are prevented by mapping administrator privileges (root) to the user nobody on remote Unix systems, but this is not security, only a trivial obstacle. The Unix root user can always use ‘su’ to become any user in its password file and access/change any data within those filesystems; similarly for Windows. The .rhosts and hosts.equiv files on Unix machines grant root (or other user) privileges to other hosts without the need for authentication; these are very hazardous.

When collecting software from remote servers, we should also make sure that it comes from a machine that is trustworthy, particularly if the files could lead to privileged access to the system. For example, it would be foolhardy to copy a privileged program such as the Unix program /bin/ps from a host one knows nothing about. This program runs with root privileges. If someone were to replace that version of ps with a Trojan horse command, the system would have effectively been opened to attack at the heart of the system.

Most users trust anonymous FTP servers where they collect free software. In any remote copy we are setting up an implicit trust relationship. First of all we trust integrity of the host we are collecting files from. Secondly we trust that they have the same username database with regard to access control (i.e there are not two different users with identical user IDs on the two systems). The root user on the collecting host has the same rights to read files as the root user on the server. The same applies to any matched username.

In any remote file transfer one is also forced to trust the integrity of the data received. No matter how hard a program may work to authenticate the identity of the host, even once the host’s identity is verified, the accuracy or trustworthiness of unknown data is still in doubt. This has nothing to do with encryption as users sometimes believe: encrypted connections do not change these trust relationships; they improve the privacy of the data being transmitted but neither their accuracy nor their trustworthiness.

Implicit trust relationships lie at the heart of so many software systems which grant access to services or resources that it would be impossible to list them all here. Trust relationships are important to grasp because they can lead to security holes.

11.4 Security policy and definition of security

Security only has meaning when we have defined a frame of reference. It is intrinsically connected to our own appreciation of risks. It must be based on a thorough risk analysis.

428 |

CHAPTER 11. PRINCIPLES OF SECURITY |

Principle 59 (Risk). There is always a non-zero level of risk associated with any system.

Clearly, there is a finite chance that a dinosaur-killer asteroid will strike your computer and destroy it. This risk is not very high, but it is real. Few organizations would care to try to protect themselves against this eventuality and would consider themselves secure if this were the only remaining threat, but this parodic example makes an important point. Security is intrinsically related to a threat assessment. An acceptable definition of a secure system is:

Definition 8 (Secure system). threat has been analyzed and accepted as policy.

A secure system is one in which every possible where all the risks have been assessed and

Clearly this is a tall order, and it is probably impossible to realize, but we should be clear about what this means: it is a definition that tells us that security is determined by policy – as acceptable risk.

Defining what we, a local community, mean by security is essential. Only then will we know when security has been breached, and what to do about it. Some sites, which contain sensitive data, require strict security and spend a lot of time enforcing it, others do not particularly care about their data and would rather not waste their time on pointless measures to protect them. Security must be balanced against convenience [171]. How secure must we be

•From outside the organization?

•From inside the organization (different host)?

•From inside the organization (same host)?

•Against the interruption of services?

•From user error?

Finally, how much inconvenience are the users of the system willing to endure in order to uphold this level of security? This point should not be underestimated: if users consider security to be a nuisance, they try to circumvent it.

Suggestion 13 (Work defensively). Expect the worst, do your best, preferably in advance of a problem.

Visible security can be a problem in itself. Systems which do not implement highlevel security tend to attract only low-level crackers – and those who manage to break in tend to use the systems only as a springboard to go to other places. The more security one implements, and the more visible it is, the more of a challenge it is for a cracker. So spending a lot of time on security might only have the effect of asking for trouble.

Suggestion 14 (Network security). Extremely sensitive data should not be placed on a computer which is attached in any way to a public network.

11.4. SECURITY POLICY AND DEFINITION OF SECURITY |

429 |

What resources are we trying to protect?

•Secrets: Some sites have secrets they wish to protect. They might be government or trade secrets or the solutions to a college exam.

•Personnel data: In your country there are probably rules about what you must do to safeguard sensitive personal information. This goes for any information about employees, patients, customers or anyone else we deal with. Information about people is private.

•CPU usage/System downtime: We might not have any data that we are afraid will fall into the wrong hands. It might simply be that the system is so important to us that we cannot afford the loss of time incurred by having someone screw it up. If the system is down, everything stops.

•Abuse of the system: It might simply be that we do not want anyone using our system to do something for which they are not authorized, like breaking into other systems.

Who are we trying to protect them from?

•Competitors, who might gain an advantage by learning your secrets.

•Malicious intruders. Note that people with malicious intent might come from inside or outside our organization. It is wrong to think that the enemy is simply everyone outside of our domain. Too many organizations think ‘inside/outside’ instead of dealing with proper access control. If one always ensures that systems and data are protected on a need-to-know basis, then there is no reason to discriminate between inside or outside of an organization.

•Old employees with a grudge against the organization.

Next: what will happen if the system is compromised?

•Loss of money

•Threat of legal action against you

•Missed deadlines

•Loss of reputation.

How much work will we need to put into protecting the system? Who are the people trying to break in?

•Sophisticated spies

•Tourists, just poking around

•Braggers, trying to impress.

430 |

CHAPTER 11. PRINCIPLES OF SECURITY |

Finally: what risk is acceptable? If we have a secret which is worth 4 lira, would we be interested in spending 5 lira to secure it? Where does one draw the line? How much is security worth?

The social term in the security equation should never be forgotten. One can spend a hundred thousand dollars on the top of the range firewall to protect data from network intrusion, but someone could walk into the building and look over an unsuspecting shoulder to obtain it instead, or use a receiver to collect the stray radiation from your monitors. Are employees leaving sensitive printouts lying around? Are we willing to place our entire building in a Faraday cage to avoid remote detection of the radiation expelled by monitors? In the final instance, someone could just point a gun at someone’s head and ask nicely for their secrets. An example of security policies can be found at RFC 1244 and British Standard/ISO17799.

11.5 RFC 2196 and BS/ISO 17799

Security standards are attempts at capturing the essence of security management. Rather than focusing on the technologies that can be used to implement security, as most texts do, these standards attempt to capture the more important points of how these technologies and methods can be used in concert to address the actual risks. There are two main standards to consider; both are worth careful study.

RFC 2196 (Site Security Handbook, 1997) replaces an older RFC, 1244, and is a guide to producing a site security policy that is addressed at system administrators. It emphasizes that a policy must be closely tied to administrative practice, as we have iterated in this book. The document correctly points out that:

•It must be implementable through system administration procedures, publishing of acceptable use guidelines, or other appropriate methods.

•It must be enforceable with security tools, where appropriate, and with sanctions, where actual prevention is not technically feasible.

•It must clearly define the areas of responsibility for the users, administrators and management.

The standard goes on to describe the elements of such a policy, including purchasing guidelines, privacy policy, access policy, accountability policy, authentication policy, a statement of availability requirements, a maintenance policy and a reporting policy in case of security breach. It also iterates the importance of documentation and supporting information for users and staff.

The RFC describes the protection of infrastructure and network services and discusses specific technologies (DNS, NIS, FTP etc), mainly related to Unix milieux.

ISO 17799 (Information Technology – Code of Practice for Security Management) is a recently recognized security standard that is based upon the British Standard BS7799, published in 1999. ISO 17799 was published in 2000 and accepted as a British Standard in 2001. It is an excellent starting place for formulating an organization’s security policy. It is less technical than RFC 2196, but goes into greater detail from a logistic viewpoint. It has been constructed with great care

11.5. RFC 2196 AND BS/ISO 17799 |

431 |

and expertise. Compliance with ISO 17799 is far from trivial, even for the most security conscious of organizations.

As with all ISO standards, one can obtain a certificate of compliance in the final instance. It is almost certainly too early to recommend such a certification, since it is both costly and of limited value to an organization; nevertheless, the standard is a fine summary of basic security ideas that reiterates many of the ideas explored in this book. Copyright prevents us from detailing the standard here, or quoting from its many interesting guidelines, but we can describe its layout. The document describes ten points:

1.Security policy: The standard iterates the importance of a security policy that sets clear goals, and demonstrates a commitment by an organization to the seriousness of its security. The policy should ensure that legal and contractual commitments are met, and that the economic ramifications of a security breach are understood. The policy itself must be maintained and updated by a responsible party.

2.Organizational security: A security team should be assembled that sets policy and provides multi-disciplinary advice. The team should allocate responsibilities within the organization for maintaining security of assets at all levels. Levels of authorization to assets must be determined. Contact with law-enforcement organizations and regulatory bodies should be secured and policies and procedures should be peer-reviewed for quality assurance. Any ‘outsourcing’, i.e. third party contracts, must address the risks associated with opening the organization’s security borders.

3.Asset classification and control: Organizations should have an inventory of their assets, which classifies each asset according to its appropriate level of security. Procedures for labelling and handling different levels of information, including electronic and paper transmission (post, fax, E-mail etc.) and speech (telephone or conversation over dinner) must be determined.

4.Personnel security: In order to reduce the risks of human error, malicious attacks by theft, fraud or vandalism, staff should be screened and given security responsibilities. Confidentiality (non-disclosure (NDA)) agreements, as well as terms and conditions of employment may be used as a binding agreement of responsibility. Most importantly, training of staff (users) in the possible threats and their combative procedures is needed to ensure compliance with policy. A response contingency plan should be drawn up and familiarized to the staff, so that security breaches and weaknesses are reported immediately.

5.Physical and environmental security: All systems must have physical security; this usually involves a ‘security perimeter’ with physical constraints against theft and intrusion detection systems, as well as a controlled, safe environment. Secure areas can provide extra levels of security for special tasks. Equipment should be protected from physical threats (including fire, coffee, food etc.) and have uninterruptible power supplies where appropriate. Cables must be secured from damage and wire-tapping. Desks and screens

432 |

CHAPTER 11. PRINCIPLES OF SECURITY |

and refuse/trash should be cleared when not in use, to avoid accidental disclosure of confidential information.

6.Communications and operations management: The processing and handling of information must be specified with appropriate procedures for the level of information. Change management should include appropriate authorizations and significant documentation of changes to allow analysis in the case of problems. Procedures must be documented for responding to all kinds of threat. Analysis (causality) and audit trails should be planned for breaches. Housekeeping, backups, safe disposal of information and materials and other regular maintenance should be in place and be integrated into the scheme. System administration features heavily here: security and administration go hand in hand; they are not separate issues. This part of the standard covers many miscellaneous issues.

7.Access control: User management, password and key management, access rights, securing unattended equipment. Enforced pathways to assets that prevent ‘back-doors’. Segregation of independent assets and authentication for access. Use of securable operating systems, restriction of privilege. Clock synchronization. Mobile computing issues.

8.Systems development and maintenance: Security should be built into systems from the start. Input–output validation. Policy on cryptographic controls, including signatures and certificates. Interestingly, the standard recommends against open source software. Covert channels (secret channels that bypass security controls) must be avoided.

9.Business continuity management: Each organization should estimate the impact of catastrophes and security breaches on its business. Will the organization be able to continue after such a breach? What will be the impact? The standard suggests the testing of this continuity plan.

10.Compliance: Laws and regulations must be obeyed, with regard to each country and contractual obligation. Regulation of personal information and cryptographic methods must be taken into account in different parts of the world. Compliance with the organization’s own policy must be secured by auditing.

The ISO standard is a good introduction to human–computer security that can be recommended for any organization.

11.6 System failure modes

Since the explosion of interest in the Internet, the possibility of hosts being attacked from outside sources has become a significant problem. With literally millions of users on the net, the tiny percentage of malicious users becomes a large number. The number of ways in which a system can fail are thus many and varied.

11.6. SYSTEM FAILURE MODES |

433 |

11.6.1Social engineering

Network attackers, i.e. system crackers, are people. It is easy to become so fascinated by the network that we forget that people can just walk into a building and steal something. If one can avoid complex technical expertise in order to break in to a system, then why not do it? There is more than one way to crack a system.

The only secure computer is a computer which is locked into a room, not connected to a network, shielded from all electromagnetic radiation. In social studies of large companies, it has been demonstrated that – in spite of expensive firewall software and sophisticated anti-cracking technology – all most crackers had to do to break into the system was to make a phone call to an unwary employee of the company and ask for their username and password [327]. Some crackers posed as system administrators trying to fix a bug, others simply questioned them as in a marketing survey until they gave away information which allowed the crackers to guess their passwords. Some crackers will go one step further and visit the building they are trying to break into, going through the garbage/refuse to look for documents which would give clues about security. Most people do not understand the lengths that people will go to to break into systems if they really want to. For example, consider this conversation between a cracker and a student user at a university.

"Hello - I am calling from the student finance office to tell you that your term grant has been deposited directly in your K-Bank account, as you requested."

"What?! I didn’t ask for that! I don’t even have an account at K-Bank!?"

"Oh really? Are you sure? What is your student number?"

"s123456" (Attacker now has a user name)

"Okay, here it is, strange...looks legitimate. Well look, if you like I could make an exception and push this through quickly."

"That would be great - I really need that money!"

"Okay, I could get in trouble for this, but if you give me your password, I can fix this straight away."

Notice how the attacker builds trust by claiming to be in a difficult situation himself (or herself).

A few things can be done to counteract social threats:

•Train all staff and make them aware of the threat of social engineering.

•Keep a clean desks policy, so that no information can be picked up by cleaning staff or people who wander into the building after hours.

•Never leave ‘live’ equipment unattended or unlocked.

434 |

CHAPTER 11. PRINCIPLES OF SECURITY |

•Always be suspicious of a caller whom you do not know. Ask for ID. Some social engineers might build up trust over time, so be suspicious of unusual requests too.

•Always have two persons verify requests for information or access.

•Never disclose information over the telephone, especially through a voice-mail system. Phone calls can be spoofed.

•Warn others about suspicious callers.

•Examine system logs, check the system regularly, run checks to ensure consistency to look for other signs of attack.

•Make hard-copies of messages sent with all the headers printed out. Many people don’t know how to hide their true identity on the Internet.

•Log all calls and visits.

•Make proper backups of computers.

•Inform the whole system of attacks so that anyone who knows something can help you.

•Do not assume that what someone tells you is true.

•Have a clear security policy.

•Threats of serious crimes should probably be reported to the company responsible for sending the message, and perhaps even the police.

11.6.2Security through obscurity

‘Security through obscurity’ have become the dirty words of the computing industry – a chant of ridicule to scorn flawed systems. The idea is that making something difficult to find or awkward to use does not prevent a determined attacker or an insidious threat.

In the mid-1990s, when security first came to the general public’s attention, it was felt that the general apathy towards security made it important to underline this fact. There was a commonly held belief that, if one made it difficult for intruders to find out information, they would not bother to try. This, of course, is completely wrong. Sometimes the reverse is even true; it acts as a red rag to a bull. If determined attackers can see that there is nothing worth finding they might leave systems alone. If everything is concealed they will assume that there must be something interesting worth breaking in for.

However, there is another side to the coin with obscurity that is generally suppressed. The scorn has gone perhaps too far. Obscurity, i.e. hiding things, does provide a low-level filter against casual threats. If this were not the case, why would anyone be interested in encryption for privacy, or bother to keep passwords in a secret location? Sometimes obscurity is the only possible security. It only

11.6. SYSTEM FAILURE MODES |

435 |

depends on how likely the obscurity is to work. There is always a non-zero risk. The question is only how likely the strategy is to work.

In a careful risk analysis, obscuring data or assets can reduce the risk of lowlevel attacks, and thereby reduce the total risk, but it rarely reduces the risk of the most insidious or persistent threats; these are the threats that security experts like to emphasize, since they are usually trying to determine any and every point of failure. Thus, the likelihood of obscurity to work as a strategy depends on the likelihood of persistent attacks to a system. The Queen of England does not hide the crown jewels under her bed – while this might prevent her staff from finding them, a more reliable security is needed against thieves. The same applies to any system.

Security through obscurity is a naive form of security which at best delays break-ins.

Example 16. Shadow passwords are an example of this. By making the encrypted password list inaccessible to normal users, one makes it harder for them to automate the search for poor passwords, but one does not prevent it! It is still possible to guess passwords in exactly the same way as before, but it takes much longer. The NT password database is not in a readable format. Some people have claimed that this makes it more secure than the Unix password file. Since then tools have been written which rewrite the NT password file in Unix format with visible encrypted passwords. In other words, making it difficult for people to break in does not make it impossible.

Example 17. NFS export access control lists make it harder for an arbitrary machine on the same network to get access to an exported filesystem, but not impossible. They can sniff the network or guess root filehandles. Despite this largely being security by obscurity, we should still bother to limit the list of systems that can gain easy access to this information.

Clearly there is no need to give away information to potential intruders. Information should be available to everyone on a need-to-know basis, whether they be local users or people from outside the organization. But at the same time, obscurity is no real protection. Even the invisible man could get shot. Time spent securing systems is better than time spent obscuring them. Obscurity might attract more attention than we want and make the system as obscure to us as to a potential intruder.

11.6.3Honey pots and sacrificial lambs

A honey pot is a host which is made to look attractive to attackers. It is usually placed on a network with the intention of catching an intruder or distracting them from more important systems. A sacrificial lamb host is one which is not considered to be particularly important to the domain. If it is compromised by an attacker then that is an acceptable loss and no real harm is done.

Some network administrators believe that the use of such machines contributes to security. For example, WWW servers are often placed on sacrificial lamb machines which are placed outside firewalls. If the machine is compromised then it can simply be reinstalled and the data reloaded from a secure backup.

436 |

CHAPTER 11. PRINCIPLES OF SECURITY |

This practice might seem rather dubious. There is certainly no evidence to support the idea that either honey pot havens or sacrificial lamb chops actually improve security, by any definition, but they provide management options.

11.6.4Security holes

One way that outside users can attack a system is by exploiting security holes in software. Classic examples usually involve setuid-root programs, which give normal users temporary superuser access to the system. Typical examples are programs like sendmail and finger. These programs are constantly being fixed, but even so, new security holes are found with alarming regularity. Faults in software leave back-doors open to intruders. The premier way of limiting such attacks is to build a so-called firewall around your network, see section 12.12.

The computer emergency response team (CERT) was established in the wake of the Internet Worm incident to monitor potential security threats. CERT publish warnings to a mailing list about known security holes. This is also available on the newsgroup comp.security.announce. Several other organizations, often run by staff who work as security consultants, are now involved in computer security monitoring. For instance, the SANS organization performs an admirable job of keeping the community informed about security developments, both technical and political. Moreover, old phreaker organizations like Phrack and the l0pht (pronounced loft) now apply their extensive knowledge of system vulnerabilities for the good of the network community. See refs. [4, 300, 72, 279, 239, 189].

11.6.5System homogeneity

In a site with a lot of different kinds of platforms, perhaps several Unix variants, NT and Windows 9x, the job of closing security holes is much harder. Inhomogeneity often provides the determined intruder with more possibilities for bug-finding. It also increases the system’s unpredictability. You might ask yourself whether you need so many different kinds of platform. If you do, then perhaps a firewall solution would provide an extra level of protection, giving you a better chance of being able to upgrade your systems before something serious happens. The converse of this viewpoint is that systems with a variety of operating systems can provide a backup if one type of system becomes compromised; e.g. if an important NT server is compromised, it might be good to have a Unix backup.

11.6.6Modem pools

Some companies expend considerable effort to secure their network connections, but forget that they have dial-in modems. Modem pools are a prime target for attackers because they are often easy targets. There are many problems associated with modem pools. Sometimes they are quite unexpected. For example, if one has network access to Windows systems using the same modem then those systems are automatically on a shared segment and can use one another’s resources, regardless of whether they have any logical relationship. Modems can also succumb to denial of service attacks by repetitive dialing.

11.6. SYSTEM FAILURE MODES |

437 |

Modems should never allow users to gain access to a part of the network which needs to be secure. Modems should never be allowed to be back-doors into firewalled networks by being placed on the secure side of a firewall.

11.6.7Laptops and mobile devices

Laptop computers and Personal Digital Assistants (PDA) are increasingly popular and they are popular targets for thieves. There have been cases of laptop computers containing sensitive information being stolen, often enough to give crackers access to further systems, or simply to give competitors the information they wanted!

11.6.8Backups

If you make backups of important data (private data) then you must take steps to secure the backups also. If an intruder can steal your backups, then he or she does not need to steal the originals.

11.6.9TCP/IP security

On top of the hardware, there are many levels of protocol which make network communication work. Many of these layers are invisible or are irrelevant to us, but there are two layers in the protocol stack which are particularly relevant to network security, namely the IP layer and the TCP/UDP layer.

11.6.10The Internet protocol (IPv4)

The Internet protocol was conceived in the 1970s as a military project. The aim was to produce a routable network protocol. The version of this protocol in use today is version 4, with a few patches. Let’s review some of the basics of IPv4. TCP/IP is a transmission protocol which builds on lower level protocols like Ethernet and gives it extra features like ‘streams’ or virtual circuits, with automatic handshaking. UDP is a cheaper version of this protocol which is used for services which do not require connection-based communication. The TCP/IP protocol stack consists of several layers (see figure 2.6).

At the application level we have text-based protocols like telnet and FTP etc. Under these lies the TCP (Transmission Control Protocol) which provides reliable connection-based handshaking, in a virtual circuit. TCP and UDP introduce the concept of the port and the socket (=port+IP address). We base our communications on these, and thus we also base the security of our communications on these. Under TCP/UDP is the IP transport layer, then Ethernet or token ring etc. ICMP is a small protocol which is used by network hardware to send control and error messages as a part of the IP protocol set, e.g. ping uses ICMP.

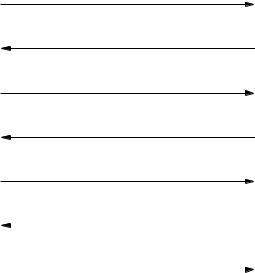

With all of its encapsulation packaging, a TCP/IP packet looks like figure 11.1. TCP packets are ‘reliable’, connection-oriented data. They form streams or continuous data-flows with handshaking. This is accomplished by using a three-way handshake based on so-called SYN (synchronize) and ACK (acknowledge) bits in the TCP header. Suppose host A wishes to set up a connection with host B. Host A

438 |

|

|

CHAPTER 11. PRINCIPLES OF SECURITY |

||

Ethernet |

IP |

TCP |

Application data.... |

Ethernet |

|

header |

header |

header |

trailer |

||

|

|||||

Figure 11.1: Encapsulation with Ethernet and TCP/IP.

sends a TCP segment to host B with its SYN bit set and a sequence number X which will be used to keep track of the order of the segments. Host B replies to this with its SYN and ACK bits set, with Acknowledgement=X+1 and a new sequence number Y. Host A then replies to host B with the first data and the Acknowledge field=Y+1. The reason why each side acknowledges every segment with a sequence number which is one greater than the previous number sent is that the Acknowledgement field actually determines the next sequence number expected. This sequence is actually a weakness which network attackers have been able to exploit through different connections, in ‘sequence number guessing’ attacks. Now many implementations of TCP allow random initial sequence numbers.

The purpose of this circuit connection is to ensure that both hosts know about every packet which is sent from source to destination. Because TCP guarantees delivery, it retransmits any segment for which it has not received an ACK after a certain period of time (the TCP timeout).

At the end of a transmission the sender sends a FIN (finished) bit, which is replied to with FIN/ACK. In fact closing connections is quite complex since both sides must close their end of the connection reliably. See the reference literature for further details of this.

TELNET CLIENT |

|

|

|

|

TELNET SERVER |

|

|

send |

|

|

|

|

|

|

|

|

|

random port |

|

|

|

|

well-known |

|

|

|

|

port 23 |

|

(active open) |

|

|

|

|

|

|

recv |

|

|

(passive open) |

|

|

|

|

|

||

|

|

|

|

|

Figure 11.2: A telnet connection.

Let us consider telnet as an example and see how the telnet connection looks at the TCP level (see figure 11.2). Telnet opens a socket from a random port address (e.g. 54657) to a standard well-known port (23) where the telnet service lives. The combination of a port number at an IP address over a communication channel is called a socket. The only security in the telnet service lies in the fact that port 23 is a reserved port which only root can use. (Ports 0–1023 are reserved.)

The TCP protocol guarantees to deliver data to their destination in the right order, without losing anything. In order to do this it breaks up a message into segments and numbers the parts of the message according to a sequence. It then confirms that every part of that sequence has been received. If no confirmation of receipt is received, the source retransmits the data after a timeout. The TCP

11.6. SYSTEM FAILURE MODES |

439 |

header contains handshaking bits. Reliable delivery is achieved through a threeway handshake. Host A begins by sending host B a packet with a SYN (synchronize) bit set and a sequence number. This provides a starting reference for the sequence of communication. Host B replies to this message with a SYN/ACK which confirms receipt of an open-connection request and provides a new sequence number which confirms identity. Host A acknowledges this and makes a request. Then host B replies with actual data. We can see this in an actual example (see figure 11.3). This handshaking method of sending sequence numbers with acknowledgement allows the TCP protocol to guarantee and order every piece of a transmission. The ACK return values are incremented by one because in earlier implementations this would be the next packet required in the sequence. This predictability in the sequence is unfortunately a weakness which can be exploited by so-called sequence guessing attacks. Today, in modern implementations, sequence numbers are randomized to avoid this form of attack. Older operating systems still suffer from this problem. Future implementations of TCP/IP will be able to solve this problem by obscuring the sequence numbers entirely through encryption.

CLIENT

Active open

Client close

SERVER

SYN, seq=1000

Passive open

SYN, seq=2000, ack=1001

ack=2001,seq=3000,len=0

Ready to send

ack=seq+len, send data

ack=seq+len, FIN, seq=9000

|

ack=9001, FIN, seq=10000 |

Server close |

|

|

ack=10001 |

Connection closed |

|

|

|

|

|

Figure 11.3: The TCP three-way handshake.

The TCP handshake is useful for filtering traffic at the router level, since it gives us something concrete to latch onto. TCP would rather drop a connection than break one of its promises about data integrity, so if we want to block telnet connections, say, we only have to break one part of this fragile loop. The usual strategy is to filter all incoming connections which do not have their ACK bit set, using router filtering rules. This will prevent any new connections from being established by outside machines contacting servers inside the network. This rule, however, does not prevent packets from entering the network that belong to