- Summary Contents
- Detailed Contents
- Figures
- Tables
- Preface
- The Disciplinary Players
- Broad Perspectives
- Some Key Guiding Principles
- Why Did Agriculture Develop in the First Place?
- The Significance of Agriculture vis-a-vis Hunting and Gathering
- Group 1: The "niche" hunter-gatherers of Africa and Asia
- Group 3: Hunter-gatherers who descend from former agriculturalists
- To the Archaeological Record
- The Hunter-Gatherer Background in the Levant, 19,000 to 9500 BC (Figure 3.3)
- The Pre-Pottery Neolithic A (ca. 9500 to 8500 BC)
- The Pre-Pottery Neolithic B (ca. 8500 to 7000 BC)
- The Spread of the Neolithic Economy through Europe
- Southern and Mediterranean Europe
- Cyprus, Turkey, and Greece
- The Balkans
- The Mediterranean
- Temperate and Northern Europe
- The Danubians and the northern Mesolithic
- The TRB and the Baltic
- The British Isles
- Hunters and farmers in prehistoric Europe
- Agricultural Dispersals from Southwest Asia to the East
- Central Asia
- The Indian Subcontinent
- The domesticated crops of the Indian subcontinent
- The consequences of Mehrgarh
- Western India: Balathal to Jorwe
- Southern India
- The Ganges Basin and northeastern India
- Europe and South Asia in a Nutshell
- The Origins of the Native African Domesticates
- The Archaeology of Early Agriculture in China
- Later Developments (post-5000 BC) in the Chinese Neolithic
- South of the Yangzi - Hemudu and Majiabang
- The spread of agriculture south of Zhejiang
- The Background to Agricultural Dispersal in Southeast Asia
- Early Farmers in Mainland Southeast Asia
- Early farmers in the Pacific
- Some Necessary Background
- Current Opinion on Agricultural Origins in the Americas
- The Domesticated Crops
- Maize
- The other crops
- Early Pottery in the Americas (Figure 8.3)
- Early Farmers in the Americas
- The Andes (Figure 8.4)
- Amazonia
- Middle America (with Mesoamerica)
- The Southwest
- Thank the Lord for the freeway (and the pipeline)
- Immigrant Mesoamerican farmers in the Southwest?
- Issues of Phylogeny and Reticulation
- Introducing the Players
- How Do Languages Change Through Time?
- Macrofamilies, and more on the time factor
- Languages in Competition - Language Shift
- Languages in competition - contact-induced change
- Indo-European
- Indo-European from the Pontic steppes?
- Where did PIE really originate and what can we know about it?
- Colin Renfrew's contribution to the Indo-European debate
- Afroasiatic
- Elamite and Dravidian, and the Indo-Aryans
- A multidisciplinary scenario for South Asian prehistory
- Nilo-Saharan
- Niger-Congo, with Bantu
- East and Southeast Asia, and the Pacific
- The Chinese and Mainland Southeast Asian language families
- Austronesian
- Piecing it together for East Asia
- "Altaic," and some difficult issues
- The Trans New Guinea Phylum
- The Americas - South and Central
- South America
- Middle America, Mesoamerica, and the Southwest
- Uto-Aztecan
- Eastern North America
- Algonquian and Muskogean
- Iroquoian, Siouan, and Caddoan
- Did the First Farmers Spread Their Languages?
- Do genes record history?
- Southwest Asia and Europe
- South Asia
- Africa
- East Asia
- The Americas
- Did Early Farmers Spread through Processes of Demic Diffusion?
- Homeland, Spread, and Friction Zones, plus Overshoot
- Notes
- References
- Index

Why Did Agriculture Develop in the First Place?

This is one of the most enduring questions posed by archaeologists, and probably generates more debate than any other major archaeological question. We still do not really know the full answers for all regions. Most of the theorizing about agricultural origins has obviously been driven by the situation in Southwest Asia, with Mesoamerica and China following, but it is essential to remember that what may work for one region may not necessarily work well for another. Environments, chronologies, and cultural trajectories differed. Yet it may still be asked if there are any cross-regional regularities across the worldwide set of primary transitions. At the outset, there would appear to be two.

Firstly, the primary development of agriculture could not have occurred anywhere in the world without deliberate planting and a regular annual cycle of cultivation. Such would have been necessary for the selection to operate that ultimately produced the domesticated plants, and both of these activities would obviously have begun, in the first instance, with plants that were morphologically wild. Planting away from the areas where the wild forms grew would also have helped immensely in fixing and stabilizing any trends toward domestication, especially in the cases of those plants that were cross- rather than self-fertilizing, and thus subject to constant back-crossing with wild individuals.

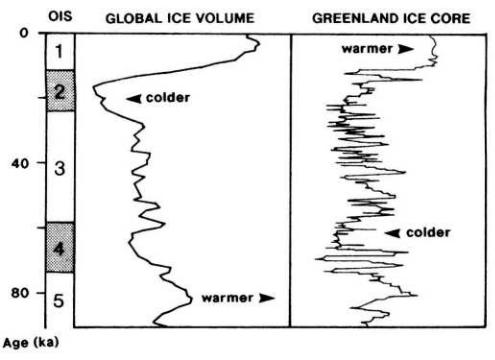

Secondly, agriculture seemingly could not have occurred without the postglacial amelioration and ultimate Holocene stabilization of warm and rainy climates in those tropical and temperate zones where food production was ultimately developed. This is a most significant finding of recent paleoclimatic research. Basically, the argument runs that postglacial climates of the period between 20,000 and 11,500 years ago were not only mostly cold and dry, but also extremely variable, such that major swings of temperature and moisture supply could have taken place literally on decadal scales. This is now well established from ice cores, deep-sea cores, and continental pollen profiles. Under such conditions, according to an increasing number of authors (van Andel 2000; Chappell 2001; Richerson et al. 2001), any incipient attempts to cultivate and domesticate plants would have been doomed to early failure. Indeed, as we will see in the next chapter, at least one short-lived attempt to domesticate rye does seem to have occurred at Abu Hureyra in northern Syria at about 13,000 years ago.

With the Holocene amelioration of climate to conditions like those of the present, a rapid change that occurred about 11,500 years ago, the world's climates became warmer, wetter, and a good deal more reliable on a short-term basis (Figure 2.3). It was this reliability that gave the early edge to farming, by means of a chain of circumstances leading on from an increasing density of wild food supplies, through increasing settlement sedentism and population size, and so to the "competitive ratchet" between regional populations intent on maximizing their economic and demographic well-being vis-a-vis other groups (Richerson et al. 2001). Once agricultural trends began they almost never turned back (although, as we will see later in this chapter, some farmers did return to being hunters and gatherers).

At first sight, this explanation might seem so obvious as to render all previous attempts to explain early agriculture superfluous. But it does not explain everything, since the whole world population did not suddenly switch to farming, wherever it was climatically possible, in 9500 BC. Holocene climate was clearly the ultimate enabler of early farming, but it was not the proximate cause behind individual transitions. To understand proximate causes we need to review the history of early agricultural causation theory within archaeology.

Figure 2.3 Synthetic diagram to show the rapid changes in world climate after the peak of the last glaciation. The Holocene with its relatively stable warm conditions is at the top of both diagrams (OIS = oxygen isotope stage, ka = millennia ago). From van Andel 2000.

Those who ask why agriculture developed, as a form of human behavior different from hunting and gathering, adopt many differing theoretical perspectives. Some explanations focus on a background of affluence, others on stress, especially environmental or population stress. Some favor conscious choice, others prefer unconscious Darwinian selection. Some like revolution, others prefer gradualism.

Many early theories were focused on affluence, combined with shots of good luck. William Perry (1937:46), for instance, believed that "year after year the gentle Nile would, by means of its perfect irrigation cycle, be growing millet and barley for the Egyptians. All that would be necessary, therefore, would be for some genius to think of making channels to enable the water to flow over a wider area, and thus to cultivate more food." Carl Sauer (1952) preferred situations of hunter-gatherer affluence and leisure time in riverine and coastal settings in Southeast Asia. Robert Braidwood (1960:134) favored cultural readiness in western Asia: "In my opinion there is no need to complicate the story with extraneous 'causes'. The food producing revolution seems to have occurred as the culmination of the ever increasing cultural differentiation and specialization of human communities." Stress received little recognition in these particular theories and none offered coherent chains of causality for the beginnings of cultivation. Indeed, we now know that agriculture did not begin at all in a primary sense in either Egypt or Southeast Asia.

Perhaps the most famous of the early stress-based theories was that of Gordon Childe, who believed that desiccation forced humans and animals together in oasis situations in Southwest Asia at the end of the last glaciation, eventually leading to domestication - hence the famous "propinquity hypothesis" (Childe 1928, 1936). We now know that domestication really took off in earnest in the wetter climatic conditions of the early Holocene. But, as will be indicated in chapter 3, there is a twist to the situation that means that Childe could have been partly right. Periodic spells of drought stress, especially during the Younger Dryas (11,000-9500 BC), are known to have affected Southwest Asia and probably China as the overall postglacial climatic amelioration occurred. Such stresses could have stimulated early, maybe short-lived, attempts at cultivation to maintain food supplies, especially in the millennia before 9500 BC. Childe was perhaps not too far off the mark.

Most modern explanations for the origins of agriculture bring in at least one "twist" of this type, in the sense of a factor of stress imposed either periodically or continuously over a situation of generalized affluence. But there are deep debates as to exactly what were the fundamental stress factors that led people to cultivate plants. Were they social, demographic, or environmental in nature, or a combination of all three? Were they continuous or periodic? Were they mild or severe?

One school of thought favors a primacy of social stress (perhaps better termed "social encouragement" in this context), fueled by competition between individuals and groups. Developments toward agriculture in resource-rich environments could have been stimulated by conscious striving for opportunities and rewards, including the reward of increased community population size and strength vis-a-vis other communities (Cowgill 1975). In a similar vein, many have suggested that competitive social demands for increased food supplies could have led to food production. We would expect such developments to occur in societies that valued competitive feasting or an accumulation of exotic valuables, via exchanges involving food, to validate status. Brian Hayden (1990) stresses that such developments would have occurred in relatively rich environments, in which groups would have been allowed to circumvent the strong ethic of inter-family food sharing that characterizes many ethnographic hunter-gatherers. In food-rich environments, families could have stored food for their own use under conditions of relatively high sedentism, thus leading to the individual or family accumulation of wealth that a system of competitive feasting demands. In poor and continually stressful environments such accumulation would have been replaced by more survival-oriented modes of sharing, and these form structural impediments to any shift to food production and consequent private accumulation of surplus.

Other authorities have stressed the significance of demographic stress, rather than social encouragement or competition. The clearest statement of this is by Mark Cohen (1977a), foreshadowed in part by Philip Smith (1972) and Ester Boserup (1965). Cohen's model focuses on continuous population growth during improving environmental conditions in the later Pleistocene, leading to dietary shifts into more productive but less palatable resources (for instance, from large game meat toward marine resources, cereals, and small game). Eventually, people were obliged to shift into plant cultivation, essentially to feed growing populations. Population packing led via necessity to increased sedentism, thus further promoting a higher birth rate and a gradual snowballing of ever-increasing population density. The adoption of agriculture under Cohen's model was a gradual process, but one occurring in varying environments around the world whenever and wherever food production became demographically necessary. Animal domestication followed later as wild meat resources declined.

Related population growth or "packing" models were favored even earlier for Southwest Asia and Mesoamerica. Lewis Binford (1968) and Kent Flannery (1969) both proposed that increasing post-Pleistocene population densities in favorable coastal zones, occupied by relatively sedentary fisher-foragers, led to an outflow of people into marginal zones, resulting in cereal cultivation in order to increase food supplies. This hypothesis also emphasized that initial plant cultivation would be most likely to have taken place on the edges of the wild ranges of the plants concerned, because stresses in supply here would obviously be higher than in core areas of plentiful and reliable supply (Flannery 1969).

The evidence that hunter-gatherer populations were indeed growing in various places in the Old World after the last glacial maximum and prior to the beginnings of agriculture is quite strong for some regions, and the significance of such population growth will be highlighted for Southwest Asia later. There is no doubt that this could have been a significant factor.

Another very important factor amongst the last hunter-gatherers involved in transitions to agriculture, and one undoubtedly related to increasing population density, is widely believed to have been settlement sedentism (Harris 1977a; LeBlanc 2003b). Many scholars now believe that agriculture, especially in the Levant, could only have arisen among sedentary rather than seasonally mobile societies. Unfortunately, however, the demonstration of sedentism in prehistory is one of the most difficult tasks that an archaeologist can face. Biological indicators (for instance, presence of bones of migratory bird species, age profiles of mammal species with seasonal reproduction cycles) can sometimes give ambiguous results - the presence of people during a certain season can often be identified, but not the absence. Archaeologists are often forced to fall back on generalized assumptions about the existence of sedentism made in terms of the presence in sites of commensal animal species (mice, rats, sparrows, etc. in the Levant) and in terms of the seeming permanence of houses and other structural remains. The whole issue can be extremely uncertain, for there are many ethnographic situations, amongst hunter-gatherers and agriculturalists alike, where settlements can have a degree of permanence but the populations that inhabit them can fluctuate from season to season. The same probably applies to many of the seemingly sedentary settlements of "affluent" hunter-gatherers in prehistory. Perhaps in awareness of this, Susan Kent (1989) has defined sedentism as requiring residence for more than six months of a year in one location. Ofer Bar-Yosef (2002:44) suggests nine months for the pre-Neolithic Levant.

Given that sedentism to some degree was apparently so important in agricultural origins, it becomes necessary to ask where sedentary societies were located in the pre-agricultural world of the terminal Pleistocene and early Holocene. So far, the archaeological record gives few indications of absolute sedentism, and even a high degree of sedentism was perhaps not present in many places apart from the Levant and southeastern Turkey, Jomon Japan (where sedentary hunter-gatherers seemingly resisted the adoption of agriculture), Sudan, perhaps central Mexico, and perhaps the northern Andes. No doubt, archaeological specialists in various parts of the world will have data to claim that sedentism existed in other areas, but in the context of terminal Pleistocene and early Holocene hunter-gatherers it is my impression that it was rather rare, especially if one demands all-year-round permanence of a total population in a village-like situation.

Despite these conceptual problems, any major increase in the degree of sedentism would have become a stress factor in itself because it would have encouraged a growing population, via shorter birth intervals, and would also have placed a greater strain on food supplies and other resources in the immediate vicinity of a campsite or village. Fellner (1995) has suggested that the development of plant cultivation in Southwest Asia was a deliberate act to allow small and already sedentary hunter-gatherer villages to amalgamate into much larger, and better-fed, agriculturalist ones - more food, more political and social power (Cowgill 1975).

These social and demographic stress hypotheses are convincing as a group, but they by no means explain everything. For instance, why did agriculture not develop in all "affluent" hunter-gatherer regions in prehistory, and especially amongst many of the more affluent groups of the ethnographic record who inhabited agriculturally possible regions, such as those in some parts of coastal or riverine Australia, California, or British Columbia? The American groups, in particular, had relatively high degrees of settlement sedentism. Despite such apparent situations of non-fit, however, some form of reduction of continuous sharing behavior must have been an essential ingredient in the early agricultural equation, and affluence and feasting combined with a shift toward a sedentary residence pattern with concomitant storage are good ways to reduce this. The affluence models are not to be dismissed lightly and they certainly reflect substantial common sense.

Nevertheless, somewhere in all of this there has to be another factor. What if the full story was not just affluence tilted by the sedentizing and productive demands of social or demographic pressures, but also affluence overshadowed by the threat of episodes of environmental stress? Severe environmental or food stress alone is probably not the answer - the starving or devastated populations who tragically inhabit our TV screens are the least likely, without massive outside help, to revolutionize their economies. But there are gradations of environmental stress, and stress can vary in intensity and in periodicity. Perhaps the sharp retraction of resources at about 11,000 BC associated with the onset of the Younger Dryas could have led to some early experiments in agriculture in the Levant. Most actual establishments of farming economies apparently followed later, and in this regard it needs to be stressed that early Holocene climates were not absolutely stable, never changing at all from year to year. Climatic variability in the Pleistocene may have been too great to allow agriculture to develop permanently at all, but the opposite - unchanging conditions - could presumably have been just as unrewarding. The old saying, "necessity is the mother of invention," was surely not founded in total vacuity. A combined explanation of affluence alternating with mild environmental stress, especially in "risky" but highly productive early Holocene environments with periodic fluctuations in food supplies, is becoming widely favored by many archaeologists today as one explanation for the shift to early agriculture. Indeed, in an analysis of ethnographic "protoagricultural" activities by hunter-gatherers, Lawrence Keeley (1995) points out that most such activity has been recorded for groups in high risk environments in low to middle latitudes. Risk, and smart circumvention thereof, has been a clue to many successes in life and history.

There is one other general theory to be discussed here, one rather different from the theories related above and one that requires neither affluence nor stress of any kind, nor even conscious awareness or choice on the part of the human populations involved. The view of Eric Higgs and Michael Jarman (1972), that animal domestication had been developing since far back in Pleistocene times as humans gradually refined their hunting and husbandry practices, is an early precursor of this, but it has been most powerfully presented for plants by David Rindos (1980, 1984, 1989). Rindos took a very gradualist and Darwinist view of co-evolutionary selection and change, in which plants were thought to have co-evolved with humans for as long as they have been predated by humans, adapting to the different seed dispersal mechanisms and selective processes set in train by human intervention. From this viewpoint, plants have always been undergoing some degree of phenotypic change as a result of human activity and resource management. However, Rindos did recognize that the actual transition to conscious cultivation ("agricultural domestication") was probably driven by population increase, and that it was the cause of much subsequent human population instability and expansion.

The essence of Rindos' theory was really unconscious Darwinian selection as opposed to conscious choice, and it was not tailored to fit any specific region. In my view, a long process of pre-agricultural co-evolution might fit with what we know of the New Guinea and Amazon situations with their focus on tuber and fruit crops, perhaps better than, for instance, Southwest Asia, China, or Mesoamerica, where cereals seem to have undergone rather explosive episodes of domestication. But it does not tell us anything about the historical reasons why particular groups of prehistoric foragers crossed the Rubicon into systematic agriculture, any more than Darwin himself was able to state how animal species could acquire completely new genetic features through mutation.

It is necessary, therefore, to emphasize that the regional beginnings of agriculture must have involved such a complex range of variables that we would be blind to ignore any of the above factors - prior sedentism, affluence and choice, human-plant co-evolution, environmental change and periodic stress, population pressure, and certainly the availability of suitable candidates for domestication. And this is not all. Charles Heiser (1990) suggested that planting began in order to appease the gods after harvests of wild plants - a hypothesis incapable of being tested, but interesting nevertheless. Jacques Cauvin (2000; Cauvin et al. 2001) suggested that a "revolution of symbols" formed an immediate precedent to the emergence of plant domestication in the Levant, the symbolism in this case involving human female figurines and representations of bulls. Again, this is an intriguing but rather untestable hypothesis, and a little imprecise on causal mechanism.

Indeed, most suggested "causes" overlap so greatly that it is often hard to separate them. Environmental change and increasing stability into the Holocene may be the most significant underlying facilitator, but even this will produce little in the absence of the "right" social and faunal/floral background combinations. Thus, there can be no one-line explanation for the origins of agriculture. Neither can we ignore the possibility of human choice and conscious inventiveness. Any overall explanation for a trend as complex as a transition to agriculture must be "layered in time," meaning that different causative factors will have occurred in sequential and reinforcing ways.