Architecture (computer science)

I. INTRODUCTION

Architecture (computer science), a general term referring to the structure of all or part of a computer system. The term also covers the design of system software, such as the operating system (the program that controls the computer), as well as referring to the combination of hardware and basic software that links the machines on a computer network. Computer architecture refers to an entire structure and to the details needed to make it functional. Thus, computer architecture covers computer systems, microprocessors, circuits, and system programs. Typically the term does not refer to application programs, such as spreadsheets or word processing, which are required to perform a task but not to make the system run.

II. DESIGN ELEMENTS

In designing a computer system, architects consider five major elements that make up the system's hardware: the arithmetic/logic unit, control unit, memory, input, and output. The arithmetic/logic unit performs arithmetic and logical operations, such as comparing numerical values. The control unit directs the operation of the computer by taking program instructions and transforming them into electrical signals that the computer's circuitry can act on. The combination of the arithmetic/logic unit and the control unit is called the central processing unit (CPU). The memory stores instructions and data. The input and output sections allow the computer to receive and send data, respectively.

Different hardware architectures are required because of the specialized needs of systems and users. One user may need a system that displays graphics extremely fast, while another may need a system optimized for searching a database or for conserving battery power in a laptop computer.

In addition to the hardware design, the architects must consider what software programs will operate the system. Software, such as programming languages and operating systems, makes the details of the hardware architecture invisible to the user. For example, computers that use the C programming language or a UNIX operating system may appear the same from the user's viewpoint, although they use different hardware architectures.

III. PROCESSING ARCHITECTURE

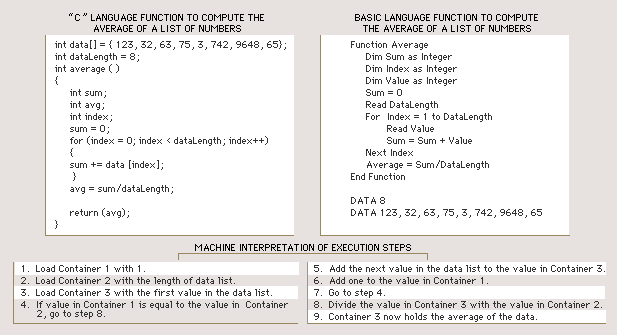

When a computer carries out an instruction, it proceeds through five steps. First, the control unit retrieves the instruction from memory, for example, an instruction to add two numbers. Second, the control unit decodes the instruction into electronic signals that control the computer. Third, the control unit fetches the data (the two numbers). Fourth, the arithmetic/logic unit performs the specified operation (the addition of the two numbers). Fifth, the control unit saves the result (the sum of the two numbers).
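The five steps can be sketched as a toy interpreter loop. This is a minimal illustration, not a model of any real processor: the tuple instruction format and the opcode names `ADD` and `SUB` are hypothetical, and memory is simply a Python dictionary keyed by variable name.

```python
def run(program, memory):
    """Execute a list of hypothetical (opcode, source_a, source_b, destination) instructions."""
    pc = 0  # program counter: position of the next instruction
    while pc < len(program):
        instruction = program[pc]                  # 1. fetch the instruction from memory
        opcode, src_a, src_b, dest = instruction   # 2. decode it into its fields
        a, b = memory[src_a], memory[src_b]        # 3. fetch the data
        if opcode == "ADD":                        # 4. the ALU performs the operation
            result = a + b
        elif opcode == "SUB":
            result = a - b
        else:
            raise ValueError(f"unknown opcode: {opcode}")
        memory[dest] = result                      # 5. save the result
        pc += 1
    return memory


memory = {"x": 2, "y": 3, "sum": 0}
run([("ADD", "x", "y", "sum")], memory)
print(memory["sum"])  # prints 5
```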

Early computers used only simple instructions because the cost of electronics capable of carrying out complex instructions was high. As this cost decreased in the 1960s, more complicated instructions became possible. Complex instructions (single instructions that specify multiple operations) can save time because they make it unnecessary for the computer to retrieve additional instructions. For example, if seven operations are combined in one instruction, then six of the steps that fetch instructions are eliminated and the computer spends less time processing that operation. Computers that combine several operations into a single instruction are called complex instruction set computers (CISC).

However, most programs rarely use complex instructions; they consist mostly of simple ones. When these simple instructions are run on CISC architectures, they slow down processing because each instruction, whether simple or complex, takes longer to decode in a CISC design. An alternative strategy is to return to designs that use only simple, single-operation instruction sets and to make the most frequently used operations faster in order to increase overall performance. Computers that follow this design are called reduced instruction set computers (RISC).

RISC designs are especially fast at the numerical computations required in science, graphics, and engineering applications. CISC designs are commonly used for nonnumerical computations because they provide special instruction sets for handling character data, such as text in a word processing program. Specialized CISC architectures, called digital signal processors, exist to accelerate processing of digitized audio and video signals.

IV. OPEN AND CLOSED ARCHITECTURES

The CPU of a computer is connected to memory and to the outside world by means of either an open or a closed architecture. An open architecture can be expanded after the system has been built, usually by adding extra circuitry, such as a new microprocessor computer chip connected to the main system. The specifications of the circuitry are made public, allowing other companies to manufacture these expansion products.

Closed architectures are usually employed in specialized computers that will not require expansion—for example, computers that control microwave ovens. Some computer manufacturers have used closed architectures so that their customers can purchase expansion circuitry only from them. This allows the manufacturer to charge more and reduces the options for the consumer.

V. NETWORK ARCHITECTURE

Computers communicate with other computers via networks. The simplest network is a direct connection between two computers. However, computers can also be connected over large networks, allowing users to exchange data, communicate via electronic mail, and share resources such as printers.

Computers can be connected in several ways. In a ring configuration, data are transmitted along the ring, and each computer in the ring examines the data to determine if it is the intended recipient. If the data are not intended for a particular computer, that computer passes the data to the next computer in the ring. This process is repeated until the data arrive at their intended destination. A ring network allows multiple messages to be carried simultaneously, but because each message is checked by every computer along the way, data transmission is slowed.
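A minimal sketch of ring delivery, assuming the computers on the ring are numbered from 0 and messages travel in one direction only (the function name `ring_deliver` is hypothetical). It records every computer that has to examine the message, which is the source of the slowdown described above.

```python
def ring_deliver(num_nodes, sender, recipient):
    """Pass a message around a one-directional ring until it reaches `recipient`.

    Returns the list of computers that examined the message, in order.
    """
    examined = []
    node = (sender + 1) % num_nodes      # the sender hands the message to its neighbor
    while True:
        examined.append(node)            # this computer checks the destination address
        if node == recipient:
            return examined              # the intended recipient keeps the message
        node = (node + 1) % num_nodes    # otherwise it is passed to the next computer


print(ring_deliver(6, sender=0, recipient=4))  # prints [1, 2, 3, 4]
```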

In a bus configuration, computers are connected through a single set of wires, called a bus. One computer sends data to another by broadcasting the address of the receiver and the data over the bus. All the computers in the network look at the address simultaneously, and the intended recipient accepts the data. A bus network, unlike a ring network, allows data to be sent directly from one computer to another. However, only one computer at a time can transmit data. The others must wait to send their messages.
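The bus behavior can be modeled in a few lines. This is a simplified sketch under assumed conventions: computers are identified by letter addresses, requests wait in arrival order, and each time step carries exactly one transmission. Real bus networks also need a rule for resolving simultaneous attempts to transmit, which is omitted here.

```python
def run_bus(addresses, requests):
    """Simulate a bus network.

    `addresses` lists the computers on the bus; `requests` is a queue of
    (sender, receiver, data) tuples. Returns each computer's received data and
    the time step at which each sender got to transmit.
    """
    inboxes = {address: [] for address in addresses}
    send_log = []
    for step, (sender, receiver, data) in enumerate(requests):
        # One transmission occupies the bus per time step; later senders wait.
        for address in addresses:
            # Every computer sees the broadcast address at the same time,
            # but only the intended recipient accepts the data.
            if address == receiver:
                inboxes[address].append(data)
        send_log.append((step, sender))
    return inboxes, send_log


inboxes, send_log = run_bus(["A", "B", "C"], [("A", "C", "hello"), ("B", "C", "hi")])
print(inboxes["C"])  # prints ['hello', 'hi']
print(send_log)      # prints [(0, 'A'), (1, 'B')]
```

Computer B's message goes out one step after A's, even though it is addressed to a different part of the conversation, because the single set of wires can carry only one transmission at a time.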

In a star configuration, computers are linked to a central computer called a hub. A computer sends the address of the receiver and the data to the hub, which then links the sending and receiving computers directly. A star network allows multiple messages to be sent simultaneously, but it is more costly because it uses an additional computer, the hub, to direct the data.

VI. RECENT ADVANCES

One problem in computer architecture is caused by the difference between the speed of the CPU and the speed at which memory supplies instructions and data. Modern CPUs can process instructions in 3 nanoseconds (3 billionths of a second). A typical memory access, however, takes 100 nanoseconds and each instruction may require multiple accesses. To compensate for this disparity, new computer chips have been designed that contain small memories, called caches, located near the CPU. Because of their proximity to the CPU and their small size, caches can supply instructions and data faster than normal memory. Cache memory stores the most frequently used instructions and data and can greatly increase efficiency.
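To see why a cache helps, the sketch below replays a sequence of memory addresses through a small cache. The 100-nanosecond miss cost comes from the figure above; the 3-nanosecond hit cost and the least-recently-used replacement rule are assumptions chosen for illustration, since the text does not specify how a cache decides what to keep.

```python
from collections import OrderedDict


def simulate_cache(addresses, capacity, hit_ns=3, miss_ns=100):
    """Replay memory addresses through a cache holding `capacity` entries.

    Assumed policy: least-recently-used (LRU) replacement. Returns
    (hits, misses, total_ns).
    """
    cache = OrderedDict()
    hits = misses = total_ns = 0
    for addr in addresses:
        if addr in cache:
            cache.move_to_end(addr)        # mark as most recently used
            hits += 1
            total_ns += hit_ns
        else:
            misses += 1
            total_ns += miss_ns            # go all the way to main memory
            cache[addr] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits, misses, total_ns


trace = [0, 1, 2, 3] * 10                  # a loop reusing four addresses ten times
print(simulate_cache(trace, capacity=4))   # prints (36, 4, 508)
```

With four entries, the 40 accesses cost 508 ns instead of the 4,000 ns they would take from main memory alone. With only three entries, the least-recently-used rule evicts each address just before it is needed again, and every access misses.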

Although a larger cache memory can hold more data, it also becomes slower. To compensate, computer architects employ designs with multiple caches. The design places the smallest and fastest cache nearest the CPU and locates a second larger and slower cache farther away. This arrangement allows the CPU to operate on the most frequently accessed instructions and data at top speed and to slow down only slightly when accessing the secondary cache. Using separate caches for instructions and data also allows the CPU to retrieve an instruction and data simultaneously.
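The benefit of a second cache level is often summarized as an average memory access time. The function below is a sketch of that calculation, and all the timing and miss-rate figures in the example are hypothetical. With a 1 ns first-level cache that misses 5 percent of the time, a 10 ns second-level cache that misses on 20 percent of those accesses, and 100 ns main memory, the average is 1 + 0.05 × (10 + 0.20 × 100) = 2.5 ns.

```python
def average_access_time(l1_ns, l1_miss_rate, l2_ns, l2_miss_rate, memory_ns):
    """Average memory access time for a two-level cache hierarchy.

    Every access tries the small, fast first-level cache. The fraction
    `l1_miss_rate` that misses goes on to the larger second-level cache, and the
    fraction of those, `l2_miss_rate`, that also misses goes on to main memory.
    """
    return l1_ns + l1_miss_rate * (l2_ns + l2_miss_rate * memory_ns)


print(average_access_time(1, 0.05, 10, 0.20, 100))  # about 2.5 ns per access
```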

Another strategy to increase speed and efficiency is the use of multiple arithmetic/logic units for simultaneous operations, called superscalar execution. In this design, instructions are acquired in groups. The control unit examines each group to see if it contains instructions that can be performed together. Some designs execute as many as six operations simultaneously. It is rare, however, to have this many instructions run together, so on average the CPU does not achieve a six-fold increase in performance.
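The grouping step can be sketched as a greedy pass over an in-order instruction stream. This is a simplification under stated assumptions: each instruction is written as a hypothetical (destination, source, source) register triple, every arithmetic/logic unit can execute any instruction, and an instruction is held back only if it reads or overwrites a register written earlier in the same group. Real superscalar processors apply further checks.

```python
def issue_groups(instructions, width):
    """Greedily split an in-order instruction stream into groups that can run together.

    An instruction joins the current group only if the group has a free
    arithmetic/logic unit (`width` of them) and the instruction does not depend
    on a register written by an instruction already in the group.
    """
    groups, current, written = [], [], set()
    for dest, src1, src2 in instructions:
        depends = src1 in written or src2 in written or dest in written
        if current and (depends or len(current) == width):
            groups.append(current)       # close this group; the rest waits a cycle
            current, written = [], set()
        current.append((dest, src1, src2))
        written.add(dest)
    if current:
        groups.append(current)
    return groups


program = [
    ("r1", "a", "b"),    # r1 = a op b
    ("r2", "c", "d"),    # independent of r1, so it can run alongside it
    ("r3", "r1", "r2"),  # needs r1 and r2, so it must wait for the next cycle
    ("r4", "e", "f"),
]
print(len(issue_groups(program, width=6)))  # prints 2
```

Even with six units available, the third instruction needs the results of the first two, so the program takes two cycles rather than one; this is why the average gain falls short of the number of units.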

Multiple computers are sometimes combined into single systems called parallel processors. When a machine has more than one thousand arithmetic/logic units, it is said to be massively parallel. Such machines are used primarily for numerically intensive scientific and engineering computation. Parallel machines containing as many as sixteen thousand computers have been constructed.

Computer Science

I. INTRODUCTION

Computer Science, study of the theory, experimentation, and engineering that form the basis for the design and use of computers—devices that automatically process information. Computer science traces its roots to work done by English mathematician Charles Babbage, who first proposed a programmable mechanical calculator in 1837. Until the advent of electronic digital computers in the 1940s, computer science was not generally distinguished as being separate from mathematics and engineering. Since then it has sprouted numerous branches of research that are unique to the discipline.

II. THE DEVELOPMENT OF COMPUTER SCIENCE

Early work in the field of computer science during the late 1940s and early 1950s focused on automating the process of making calculations for use in science and engineering. Scientists and engineers developed theoretical models of computation that enabled them to analyze how efficient different approaches were in performing various calculations. Computer science overlapped considerably during this time with the branch of mathematics known as numerical analysis, which examines the accuracy and precision of calculations.

As the use of computers expanded between the 1950s and the 1970s, the focus of computer science broadened to include simplifying the use of computers through programming languages (artificial languages used to program computers) and operating systems (computer programs that provide a useful interface between a computer and a user). During this time, computer scientists were also experimenting with new applications and computer designs, creating the first computer networks, and exploring relationships between computation and thought.

In the 1970s, computer chip manufacturers began to mass-produce microprocessors, the electronic circuitry that serves as the main information processing center in a computer. This new technology revolutionized the computer industry by dramatically reducing the cost of building computers and greatly increasing their processing speed. The microprocessor made possible the advent of the personal computer, which resulted in an explosion in the use of computer applications. From the early 1970s through the 1980s, computer science rapidly expanded in an effort to develop new applications for personal computers and to drive the technological advances in the computing industry. Much of the earlier research began to reach the public through personal computers, which derived most of their early software from existing concepts and systems.

Computer scientists continue to expand the frontiers of computer and information systems by pioneering the designs of more complex, reliable, and powerful computers; enabling networks of computers to efficiently exchange vast amounts of information; and seeking ways to make computers behave intelligently. As computers become an increasingly integral part of modern society, computer scientists strive to solve new problems and invent better methods of solving current problems.

The goals of computer science range from finding ways to better educate people in the use of existing computers to highly speculative research into technologies and approaches that may not be viable for decades. Underlying all of these specific goals is the desire to better the human condition today and in the future through the improved use of information.

III. THEORY AND EXPERIMENT

Computer science is a combination of theory, engineering, and experimentation. In some cases, a computer scientist develops a theory, then engineers a combination of computer hardware and software based on that theory, and experimentally tests it. An example of such a theory-driven approach is the development of new software engineering tools that are then evaluated in actual use. In other cases, experimentation may result in new theory, such as the discovery that an artificial neural network exhibits behavior similar to neurons in the brain, leading to a new theory in neurophysiology.

It might seem that the predictable nature of computers makes experimentation unnecessary because the outcome of experiments should be known in advance. But when computer systems and their interactions with the natural world become sufficiently complex, unforeseen behaviors can result. Experimentation and the traditional scientific method are thus key parts of computer science.

IV. MAJOR BRANCHES OF COMPUTER SCIENCE

Computer science can be divided into four main fields: software development, computer architecture (hardware), human-computer interfacing (the design of the most efficient ways for humans to use computers), and artificial intelligence (the attempt to make computers behave intelligently). Software development is concerned with creating computer programs that perform efficiently. Computer architecture is concerned with developing optimal hardware for specific computational needs. The areas of artificial intelligence (AI) and human-computer interfacing often involve the development of both software and hardware to solve specific problems.

A. Software Development

In developing computer software, computer scientists and engineers study various areas and techniques of software design, such as the best types of programming languages and algorithms (see below) to use in specific programs, how to efficiently store and retrieve information, and the computational limits of certain software-computer combinations. Software designers must consider many factors when developing a program. Often, program performance in one area must be sacrificed for the sake of the general performance of the software. For instance, since computers have only a limited amount of memory, software designers must limit the number of features they include in a program so that it will not require more memory than the system it is designed for can supply.

Software engineering is an area of software development in which computer scientists and engineers study methods and tools that facilitate the efficient development of correct, reliable, and robust computer programs. Research in this branch of computer science considers all the phases of the software life cycle, which begins with a formal problem specification, and progresses to the design of a solution, its implementation as a program, testing of the program, and program maintenance. Software engineers develop software tools and collections of tools called programming environments to improve the development process. For example, tools can help to manage the many components of a large program that is being written by a team of programmers.

Algorithms and data structures are the building blocks of computer programs. An algorithm is a precise step-by-step procedure for solving a problem within a finite time and using a finite amount of memory. Common algorithms include searching a collection of data, sorting data, and numerical operations such as matrix multiplication. Data structures are patterns for organizing information, and often represent relationships between data values. Common data structures include lists, arrays, records, stacks, queues, and trees.
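Two of the data structures named above differ mainly in the order in which items are removed. In this minimal Python sketch, a list used as a stack returns the most recently added item first, while `collections.deque` used as a queue returns the earliest added item first.

```python
from collections import deque

# Stack: last in, first out. A Python list supports this with append and pop.
stack = []
for item in ["a", "b", "c"]:
    stack.append(item)
print(stack.pop())      # prints c (the most recently added item)

# Queue: first in, first out. deque supports fast removal from the front.
queue = deque()
for item in ["a", "b", "c"]:
    queue.append(item)
print(queue.popleft())  # prints a (the earliest added item)
```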

Computer scientists continue to develop new algorithms and data structures to solve new problems and improve the efficiency of existing programs. One area of theoretical research is called algorithmic complexity. Computer scientists in this field seek to develop techniques for determining the inherent efficiency of algorithms with respect to one another. Another area of theoretical research called computability theory seeks to identify the inherent limits of computation.
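Algorithmic complexity can be made concrete by counting steps. The sketch below compares a linear search, which may examine every item, with a binary search over sorted data, which halves the remaining range at each step; the step counters are added purely for illustration. For one million items, the linear search needs up to one million steps, while the binary search needs at most 20, because 2 to the 20th power exceeds one million.

```python
def linear_search(items, target):
    """Examine items one by one; return (index or -1, steps taken)."""
    steps = 0
    for i, value in enumerate(items):
        steps += 1
        if value == target:
            return i, steps
    return -1, steps


def binary_search(sorted_items, target):
    """Repeatedly halve the range of a sorted list; return (index or -1, steps taken)."""
    lo, hi = 0, len(sorted_items) - 1
    steps = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        steps += 1
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            lo = mid + 1      # the target can only be in the upper half
        else:
            hi = mid - 1      # the target can only be in the lower half
    return -1, steps


data = list(range(1_000_000))
print(linear_search(data, 999_999))  # prints (999999, 1000000)
print(binary_search(data, 999_999))  # the step count is at most 20
```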

Software engineers use programming languages to communicate algorithms to a computer. Natural languages such as English are ambiguous—meaning that their grammatical structure and vocabulary can be interpreted in multiple ways—so they are not suited for programming. Instead, simple and unambiguous artificial languages are used. Computer scientists study ways of making programming languages more expressive, thereby simplifying programming and reducing errors. A program written in a programming language must be translated into machine language (the actual instructions that the computer follows). Computer scientists also develop better translation algorithms that produce more efficient machine language programs.
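Translation into machine language can be illustrated with a tiny compiler. This sketch uses Python's standard `ast` module to parse an arithmetic expression and emits instructions for a hypothetical stack machine; the instruction names `PUSH`, `LOAD`, `ADD`, `SUB`, `MUL`, and `DIV` are invented for the example and do not correspond to any real processor.

```python
import ast
import operator

# Hypothetical instruction names for a simple stack machine.
OPCODES = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MUL", ast.Div: "DIV"}
ARITHMETIC = {"ADD": operator.add, "SUB": operator.sub,
              "MUL": operator.mul, "DIV": operator.truediv}


def compile_expression(source):
    """Translate an arithmetic expression into a list of stack-machine instructions."""
    code = []

    def emit(node):
        if isinstance(node, ast.BinOp):
            emit(node.left)                          # instructions for the left operand
            emit(node.right)                         # instructions for the right operand
            code.append((OPCODES[type(node.op)],))   # then combine the two values
        elif isinstance(node, ast.Name):
            code.append(("LOAD", node.id))           # fetch a variable's value
        elif isinstance(node, ast.Constant):
            code.append(("PUSH", node.value))        # push a literal number
        else:
            raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    emit(ast.parse(source, mode="eval").body)
    return code


def execute(code, variables):
    """Run the instructions on a stack, standing in for real hardware."""
    stack = []
    for instruction in code:
        op = instruction[0]
        if op == "PUSH":
            stack.append(instruction[1])
        elif op == "LOAD":
            stack.append(variables[instruction[1]])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(ARITHMETIC[op](left, right))
    return stack.pop()


program = compile_expression("a + b * 2")
print(program)  # prints [('LOAD', 'a'), ('LOAD', 'b'), ('PUSH', 2), ('MUL',), ('ADD',)]
print(execute(program, {"a": 1, "b": 3}))  # prints 7
```

The order of the emitted instructions already encodes operator precedence: the multiplication is computed before the addition, so the machine never needs to understand the original notation.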

Databases and information retrieval are related fields of research. A database is an organized collection of information stored in a computer, such as a company’s customer account data. Computer scientists attempt to make it easier for users to access databases, prevent access by unauthorized users, and improve access speed. They are also interested in developing techniques to compress the data, so that more can be stored in the same amount of memory. Databases are sometimes distributed over multiple computers that update the data simultaneously, which can lead to inconsistency in the stored information. To address this problem, computer scientists also study ways of preventing inconsistency without reducing access speed.

Information retrieval is concerned with locating data in collections that are not clearly organized, such as a file of newspaper articles. Computer scientists develop algorithms for creating indexes of the data. Once the information is indexed, techniques developed for databases can be used to organize it. Data mining is a closely related field in which a large body of information is analyzed to identify patterns. For example, mining the sales records from a grocery store could identify shopping patterns to help guide the store in stocking its shelves more effectively.
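Indexing is commonly done with an inverted index, which maps each word to the documents that contain it, so a query can be answered without rescanning every article. The sketch below is a minimal version under simplifying assumptions (only lowercase letters count as words, and results are not ranked); the sample articles are made up.

```python
import re
from collections import defaultdict


def build_index(documents):
    """Map each word to the set of document IDs whose text contains it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for word in re.findall(r"[a-z]+", text.lower()):
            index[word].add(doc_id)
    return index


def search(index, query):
    """Return the IDs of documents that contain every word in the query."""
    words = re.findall(r"[a-z]+", query.lower())
    if not words:
        return set()
    result = set(index.get(words[0], set()))
    for word in words[1:]:
        result &= index.get(word, set())   # keep only documents with this word too
    return result


articles = {
    1: "Storm brings heavy rain to the coast",
    2: "City council approves new budget",
    3: "Heavy snow closes mountain roads",
}
index = build_index(articles)
print(sorted(search(index, "heavy")))       # prints [1, 3]
print(sorted(search(index, "heavy rain")))  # prints [1]
```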

Operating systems are programs that control the overall functioning of a computer. They provide the user interface, place programs into the computer’s memory and cause it to execute them, control the computer’s input and output devices, manage the computer’s resources such as its disk space, protect the computer from unauthorized use, and keep stored data secure. Computer scientists are interested in making operating systems easier to use, more secure, and more efficient by developing new user interface designs, designing new mechanisms that allow data to be shared while preventing access to sensitive data, and developing algorithms that make more effective use of the computer’s time and memory.

The study of numerical computation involves the development of algorithms for calculations, often on large sets of data or with high precision. Because many of these computations may take days or months to execute, computer scientists are interested in making the calculations as efficient as possible. They also explore ways to increase the numerical precision of computations, which can, for example, improve the accuracy of a weather forecast. The goals of improving efficiency and precision often conflict, with greater efficiency being obtained at the cost of precision and vice versa.
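The trade-off between efficiency and precision shows up even in adding a list of numbers. In the sketch below, a plain left-to-right loop performs one addition per value, while Kahan (compensated) summation spends several extra operations per value to track and correct rounding error. Kahan summation is one standard technique, chosen here for illustration; Python's `math.fsum` is shown for comparison.

```python
import math


def naive_sum(values):
    """Add values left to right: one operation per value, but each addition may round."""
    total = 0.0
    for x in values:
        total += x
    return total


def kahan_sum(values):
    """Compensated summation: carry forward the rounding error lost at each step."""
    total = 0.0
    compensation = 0.0                    # running estimate of the low-order bits lost
    for x in values:
        y = x - compensation
        t = total + y                     # low-order bits of y may be lost here,
        compensation = (t - total) - y    # and are recovered algebraically here
        total = t
    return total


values = [0.1] * 10
print(naive_sum(values))   # prints 0.9999999999999999
print(kahan_sum(values))   # prints 1.0
print(math.fsum(values))   # prints 1.0
```

The plain loop drifts because 0.1 has no exact binary representation and each addition rounds the running total; the compensated version costs roughly four times as many operations to stay accurate.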

Symbolic computation involves programs that manipulate nonnumeric symbols, such as characters, words, drawings, algebraic expressions, encrypted data (data coded to prevent unauthorized access), and the parts of data structures that represent relationships between values. One unifying property of symbolic programs is that they often lack the regular patterns of processing found in many numerical computations. Such irregularities present computer scientists with special challenges in creating theoretical models of a program’s efficiency, in translating it into an efficient machine language program, and in specifying and testing its correct behavior.

B. Computer Architecture

Computer architecture is the design and analysis of new computer systems. Computer architects study ways of improving computers by increasing their speed, storage capacity, and reliability, and by reducing their cost and power consumption. Computer architects develop both software and hardware models to analyze the performance of existing and proposed computer designs, then use this analysis to guide development of new computers. They are often involved with the engineering of a new computer because the accuracy of their models depends on the design of the computer’s circuitry. Many computer architects are interested in developing computers that are specialized for particular applications such as image processing, signal processing, or the control of mechanical systems. The optimization of computer architecture to specific tasks often yields higher performance, lower cost, or both.

C. Artificial Intelligence

Artificial intelligence (AI) research seeks to enable computers and machines to mimic human intelligence and sensory processing ability, and to model human behavior with computers in order to improve our understanding of intelligence. The many branches of AI research include machine learning, inference, cognition, knowledge representation, problem solving, case-based reasoning, natural language understanding, speech recognition, computer vision, and artificial neural networks.

A key technique developed in the study of artificial intelligence is to specify a problem as a set of states, some of which are solutions, and then search for solution states. For example, in chess, each move creates a new state. If a computer searched the states resulting from all possible sequences of moves, it could identify those that win the game. However, the number of states associated with many problems (such as the possible number of moves needed to win a chess game) is so vast that exhaustively searching them is impractical. The search process can be improved through the use of heuristics—rules that are specific to a given problem and can therefore help guide the search. For example, a chess heuristic might indicate that when a move results in checkmate, there is no point in examining alternate moves.
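A heuristic-guided search can be sketched on a simpler problem than chess. The example below uses the A* algorithm, a specific technique chosen for illustration, to find a shortest route through a small grid: each cell is a state, each move to a neighboring open cell creates a new state, and the heuristic (the Manhattan distance to the goal) steers the search toward the goal instead of exploring all states equally. The grid layout and function name are hypothetical.

```python
import heapq


def a_star(grid, start, goal):
    """Shortest path on a grid of '.' (open) and '#' (wall) cells.

    Returns the path as a list of (row, col) states, or None if the goal is unreachable.
    """

    def h(cell):
        # Manhattan distance: never overestimates the moves still needed.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]    # (estimated total cost, cost so far, state)
    came_from = {start: None}
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cost > best_cost[cell]:
            continue                     # outdated entry: a cheaper route was found later
        if cell == goal:
            path = []
            while cell is not None:      # walk back along the recorded moves
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        row, col = cell
        for nxt in ((row + 1, col), (row - 1, col), (row, col + 1), (row, col - 1)):
            r, c = nxt
            if 0 <= r < len(grid) and 0 <= c < len(grid[0]) and grid[r][c] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None                          # every reachable state was explored


grid = [
    "....",
    ".##.",
    "....",
]
path = a_star(grid, (0, 0), (2, 3))
print(len(path) - 1)  # prints 5 (the number of moves)
```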

D. Robotics

Another area of computer science that has found wide practical use is robotics—the design and development of computer controlled mechanical devices. Robots range in complexity from toys to automated factory assembly lines, and relieve humans from tedious, repetitive, or dangerous tasks. Robots are also employed where requirements of speed, precision, consistency, or cleanliness exceed what humans can accomplish. Roboticists—scientists involved in the field of robotics—study the many aspects of controlling robots. These aspects include modeling the robot’s physical properties, modeling its environment, planning its actions, directing its mechanisms efficiently, using sensors to provide feedback to the controlling program, and ensuring the safety of its behavior. They also study ways of simplifying the creation of control programs. One area of research seeks to provide robots with more of the dexterity and adaptability of humans, and is closely associated with AI.

E. Human-Computer Interfacing

Human-computer interfaces provide the means for people to use computers. An example of a human-computer interface is the keyboard, which lets humans enter commands into a computer and enter text into a specific application. The diversity of research into human-computer interfacing corresponds to the diversity of computer users and applications. However, a unifying theme is the development of better interfaces and experimental evaluation of their effectiveness. Examples include improving computer access for people with disabilities, simplifying program use, developing three-dimensional input and output devices for virtual reality, improving handwriting and speech recognition, and developing heads-up displays for aircraft instruments, in which critical information such as speed, altitude, and heading is displayed on a screen in front of the pilot's window. One area of research, called visualization, is concerned with graphically presenting large amounts of data so that people can comprehend its key properties.

V. CONNECTION OF COMPUTER SCIENCE TO OTHER DISCIPLINES

Because computer science grew out of mathematics and electrical engineering, it retains many close connections to those disciplines. Theoretical computer science draws many of its approaches from mathematics and logic. Research in numerical computation overlaps with mathematics research in numerical analysis. Computer architects work closely with the electrical engineers who design the circuits of a computer.

Beyond these historical connections, there are strong ties between AI research and psychology, neurophysiology, and linguistics. Human-computer interface research also has connections with psychology. Roboticists work with both mechanical engineers and physiologists in designing new robots.

Computer science also has indirect relationships with virtually all disciplines that use computers. Applications developed in other fields often involve collaboration with computer scientists, who contribute their knowledge of algorithms, data structures, software engineering, and existing technology. In return, the computer scientists have the opportunity to observe novel applications of computers, from which they gain a deeper insight into their use. These relationships make computer science a highly interdisciplinary field of study.

Parallel Processing

I. INTRODUCTION

Parallel Processing, computer technique in which multiple operations are carried out simultaneously. Parallelism reduces computational time. For this reason, it is used for many computationally intensive applications such as predicting economic trends or generating visual special effects for feature films.

Two common ways of accomplishing parallel processing are multiprocessing and instruction-level parallelism. Multiprocessing links several processors (computers or microprocessors, the electronic circuits that provide the computational power and control of computers) together to solve a single problem. Instruction-level parallelism uses a single computer processor that executes multiple instructions simultaneously.

If a problem is divided evenly into ten independent parts that are solved simultaneously on ten computers, then the solution requires one tenth of the time it would take on a single nonparallel computer where each part is solved in sequential order. Many large problems are easily divisible for parallel processing; however, some problems are difficult to divide because their parts are interdependent, requiring the results from another part of the problem before they can be solved.

Portions of a problem that cannot be calculated in parallel are called serial. These serial portions limit how far the computation time for a problem can be reduced. For example, suppose a problem has nine million computations that can be done in parallel and one million computations that must be done serially. Theoretically, nine million computers could perform the parallel nine-tenths of the total computation simultaneously, but the remaining one-tenth must still be computed serially. Therefore, the total execution time can fall only to about one-tenth of what it would be on a single nonparallel computer, a speedup of roughly ten despite the additional nine million processors.
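The limit imposed by the serial portion is commonly expressed as Amdahl's law. In this minimal sketch, work is measured in computations as in the example above, and the parallel part is assumed to divide perfectly among the processors. With one million serial and nine million parallel computations on nine million processors, the speedup is 10,000,000 / 1,000,001, just under 10.

```python
def speedup(serial_work, parallel_work, processors):
    """Amdahl's law: time on one processor divided by time on `processors` processors.

    The serial work always runs on a single processor; only the parallel work
    is divided (assumed evenly) among the processors.
    """
    one_processor = serial_work + parallel_work
    many_processors = serial_work + parallel_work / processors
    return one_processor / many_processors


print(speedup(1_000_000, 9_000_000, 9_000_000))  # about 9.99999, not 9 million
print(speedup(0, 10, 10))                          # 10.0: ten independent, equal parts
```

Adding processors beyond this point barely helps: as the processor count grows without bound, the speedup approaches, but never reaches, the total work divided by the serial work.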

II. PARALLEL ARCHITECTURE

In 1966 American electrical engineer Michael Flynn distinguished four classes of processor architecture (the design of how processors manipulate data and instructions). Data can be sent either to a computer's processor one at a time, in a single data stream, or several pieces of data can be sent at the same time, in multiple data streams. Similarly, instructions can be carried out either one at a time, in a single instruction stream, or several instructions can be carried out simultaneously, in multiple instruction streams.

Serial computers have a Single Instruction stream, Single Data stream (SISD) architecture. One piece of data is sent to one processor. For example, if 100 numbers had to be multiplied by the number 3, each number would be sent to the processor, multiplied, and the result stored; then the next number would be sent and calculated, until all 100 results were calculated. Applications that are suited for SISD architectures include those that require complex interdependent decisions, such as word processing.

A Multiple Instruction stream, Single Data stream (MISD) processor replicates a stream of data and sends it to multiple processors, each of which then executes a separate program. For example, the contents of a database could be sent simultaneously to several processors, each of which would search for a different value. Problems well-suited to MISD parallel processing include computer vision systems that extract multiple features, such as vegetation, geological features, or manufactured objects, from a single satellite image.

A Single Instruction stream, Multiple Data stream (SIMD) architecture has multiple processing elements that carry out the same instruction on separate data. For example, a SIMD machine with 100 processing elements can simultaneously multiply 100 numbers each by the number 3. SIMD processors are programmed much like SISD processors, but their operations occur on arrays of data instead of individual values. SIMD processors are therefore also known as array processors. Examples of applications that use SIMD architecture are image-enhancement processing and radar processing for air-traffic control.
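The difference between SISD and SIMD processing in the multiply-by-3 example can be modeled by counting instruction steps. This is a simulation in ordinary Python, not real SIMD hardware: the `lanes` parameter is a hypothetical stand-in for the number of processing elements, and each pass over a chunk of data counts as one instruction step.

```python
def sisd_multiply(values, factor):
    """SISD: one instruction handles one data item per step."""
    results, steps = [], 0
    for v in values:
        results.append(v * factor)
        steps += 1
    return results, steps


def simd_multiply(values, factor, lanes):
    """Simulated SIMD: one instruction applies the same multiply to up to `lanes` items."""
    results, steps = [], 0
    for start in range(0, len(values), lanes):
        chunk = values[start:start + lanes]
        results.extend(v * factor for v in chunk)  # every lane runs the same instruction
        steps += 1
    return results, steps


numbers = list(range(100))
print(sisd_multiply(numbers, 3)[1])             # prints 100
print(simd_multiply(numbers, 3, lanes=100)[1])  # prints 1
```

With 100 processing elements the 100 multiplications take one step instead of 100; with 8 elements they take 13 steps, since the last chunk holds only 4 numbers.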

A Multiple Instruction stream, Multiple Data stream (MIMD) processor has separate instructions for each stream of data. This architecture is the most flexible, but it is also the most difficult to program because it requires additional instructions to coordinate the actions of the processors. It also can simulate any of the other architectures but with less efficiency. MIMD designs are used on complex simulations, such as projecting city growth and development patterns, and in some artificial-intelligence programs.

A. Parallel Communication

Another factor in parallel-processing architecture is how processors communicate with each other. One approach is to let processors share a single memory and communicate by reading each other's data. This is called shared memory. In this architecture, all the data can be accessed by any processor, but care must be taken to prevent the linked processors from inadvertently overwriting each other's results.
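The overwriting hazard in shared memory can be illustrated with threads, which share one address space. In this minimal sketch, four threads stand in for processors and increment a shared counter. The statement `counter += 1` is a read-modify-write sequence, so two unsynchronized threads could read the same old value and one update would be lost; the lock, one standard mechanism chosen here for illustration, lets only one thread update the value at a time.

```python
import threading

counter = 0                  # shared memory visible to every thread
lock = threading.Lock()


def add_many(times):
    global counter
    for _ in range(times):
        with lock:           # only one thread may update the shared value at a time
            counter += 1     # read, add, write back: unsafe without the lock


threads = [threading.Thread(target=add_many, args=(10_000,)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(counter)  # prints 40000
```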

An alternative method is to connect the processors and allow them to send messages to each other. This technique is known as message passing or distributed memory. Data are divided and stored in the memories of different processors. This makes it difficult to share information because the processors are not connected to the same memory, but it is also safer because the results cannot be overwritten.
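Message passing can be mimicked with Python's thread-safe `queue.Queue`. This is only an analogy under stated assumptions: Python threads actually share memory, so here the separation is kept by convention, with each worker receiving a copy of its portion of the data and reporting back only through messages. Real distributed-memory systems typically use a dedicated library, such as an implementation of the Message Passing Interface (MPI) standard.

```python
import queue
import threading


def worker(worker_id, inbox, outbox):
    """A stand-in processor with private data that communicates only by messages."""
    local_data = inbox.get()                   # receive this worker's share of the data
    outbox.put((worker_id, sum(local_data)))   # send back a partial result


data = list(range(1, 101))
num_workers = 4
chunk = len(data) // num_workers
inboxes = [queue.Queue() for _ in range(num_workers)]
results = queue.Queue()

threads = []
for i in range(num_workers):
    t = threading.Thread(target=worker, args=(i, inboxes[i], results))
    t.start()
    threads.append(t)
    inboxes[i].put(data[i * chunk:(i + 1) * chunk])  # the slice is a copy, not shared

for t in threads:
    t.join()
partials = [results.get() for _ in range(num_workers)]
print(sum(total for _, total in partials))  # prints 5050
```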

In shared memory systems, as the number of processors increases, access to the single memory becomes difficult, and a bottleneck forms. To address this limitation, and the problem of isolated memory in distributed memory systems, distributed memory processors can also be built with circuitry that allows different processors to access each other's memory. This hybrid approach, known as distributed shared memory, is designed to avoid both the memory bottleneck of shared memory systems and the sharing problems of distributed memory systems.

|

III |

|

COST OF PARALLEL COMPUTING |

Parallel processing is more costly than serial computing because multiple processors are expensive and the speedup in computation is rarely proportional to the number of additional processors.
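One standard way to quantify why speedup falls short of the processor count is Amdahl's law, which the original does not name: if a fraction p of the work can run in parallel on n processors, the speedup is at most 1 / ((1 - p) + p / n). The sketch below assumes the parallel part divides perfectly and ignores communication costs, which make real speedups smaller still.

```python
def amdahl_speedup(parallel_fraction: float, processors: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work can be spread
    over `processors`; the remaining serial fraction runs on one processor."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if processors < 1:
        raise ValueError("processors must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / processors)


for n in (1, 10, 100, 1000):
    print(n, round(amdahl_speedup(0.9, n), 2))
```

With 90 percent of the work parallelizable, 10 processors give roughly a 5.3-fold speedup, and no number of processors can push the speedup past 10-fold, because the serial 10 percent still takes the same time.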

MIMD processors require complex programming to coordinate their actions. Finding MIMD programming errors is also complicated by time-dependent interactions between processors. For example, one processor might read a result from a second processor's memory before that processor has produced the result and stored it there. The resulting error is difficult to identify because it may appear only when the processors happen to run in a particular relative order.
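The timing error described above, reading a result before it has been produced, can be prevented by having the producing processor signal when its result is ready. This minimal sketch assumes Python threads as processors and uses `threading.Event` for the signal; the function names and the computed value are hypothetical.

```python
import threading
from typing import Dict


def producer(memory: Dict[str, int], ready: threading.Event) -> None:
    memory["result"] = sum(range(1000))  # the second processor computes a value
    ready.set()                          # signal that the result is now in memory


def consumer(memory: Dict[str, int], ready: threading.Event, out: Dict[str, int]) -> None:
    # Reading memory["result"] before the producer finishes would fail here
    # (KeyError); on real hardware it could silently return a stale value.
    ready.wait()
    out["doubled"] = memory["result"] * 2


def run_with_handoff() -> int:
    memory: Dict[str, int] = {}
    out: Dict[str, int] = {}
    ready = threading.Event()
    c = threading.Thread(target=consumer, args=(memory, ready, out))
    p = threading.Thread(target=producer, args=(memory, ready))
    c.start()  # the consumer may start first; the event makes that safe
    p.start()
    c.join()
    p.join()
    return out["doubled"]


print(run_with_handoff())  # 999000
```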

Programs written for one parallel architecture seldom run efficiently on another. As a result, moving a program from one parallel processor to a different one often requires a costly and time-consuming rewrite of that program.

|

IV |

|

FUTURE TRENDS AND APPLICATIONS |

When a parallel processor performs more than 1000 operations at a time, it is said to be massively parallel. In most cases, problems that are suited to massive parallelism involve large amounts of data, such as in weather forecasting, simulating the properties of hypothetical pharmaceuticals, and code breaking. Massively parallel processors today are large and expensive, but technology soon will permit a SIMD processor with 1024 processing elements to reside on a single integrated circuit.

Researchers are finding that the serial portions of some problems can be processed in parallel, but on different architectures. For example, 90 percent of a problem may be suited to SIMD, leaving 10 percent that appears to be serial but merely requires MIMD processing. To accommodate this finding, two approaches are being explored: heterogeneous parallelism, which combines multiple parallel architectures, and configurable computers, which can change their architecture to suit each part of the problem.

In 1996 International Business Machines Corporation (IBM) challenged Garry Kasparov, the reigning world chess champion, to a chess match with a supercomputer called Deep Blue. The computer utilized 256 microprocessors in a parallel architecture to compute more than 100 million chess positions per second. Kasparov won the match with three wins, two draws, and one loss. Deep Blue was the first computer to win a game against a world champion with regulation time controls. Some experts predict these types of parallel processing machines will eventually surpass human chess playing ability, and some speculate that massive calculating power will one day substitute for intelligence. Deep Blue serves as a prototype for future computers that will be required to solve complex problems.

World Wide Web

|

I |

|

INTRODUCTION |

World Wide Web (WWW), computer-based network of information resources that a user can move through by using links from one document to another. The information on the World Wide Web is spread over computers all over the world. The World Wide Web is often referred to simply as “the Web.”

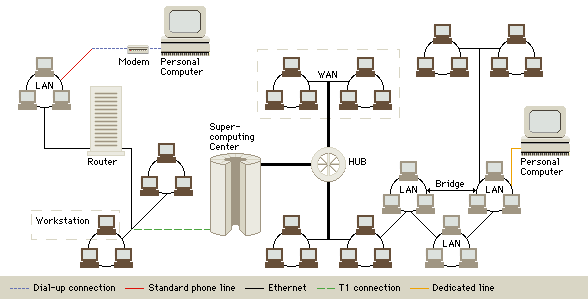

Internet Topology

The Internet and the Web are each a series of interconnected computer networks. Personal computers or workstations are connected to a Local Area Network (LAN) either by a dial-up connection through a modem and standard phone line, or by being directly wired into the LAN. Other modes of data transmission that allow for connection to a network include T-1 connections and dedicated lines. Bridges and hubs link multiple networks to each other. Routers transmit data through networks and determine the best path of transmission.

The Web has become a very popular resource since 1993, when it first became possible to view images and other multimedia on the Internet, a worldwide network of computers. The Web offers a place where companies, institutions, and individuals can display information about their products, their research, or their lives. Anyone with access to a computer connected to the Web can view most of that information; a small percentage is accessible only to subscribers or other authorized users. The Web has become a forum for many groups and a marketplace for many companies. Museums, libraries, government agencies, and schools make the Web a valuable learning and research tool by posting data and research. The Web also carries information in a wide spectrum of formats. Users can read text, view pictures, listen to sounds, and even explore interactive virtual environments on the Web.

|

II |

|

A WEB OF COMPUTERS |

Like all computer networks, the Web connects two types of computers, clients and servers, using a standard set of rules for communication between them. The server computers store the information resources that make up the Web, and Web users use client computers to access those resources. A computer-based network may be a public network, such as the worldwide Internet, or a private network, such as a company’s intranet. The Web is part of the Internet. The Internet also encompasses other methods of linking computers, such as Telnet, File Transfer Protocol, and Gopher, but the Web has quickly become the most widely used part of the Internet. It differs from the other parts of the Internet in the rules that computers use to talk to each other and in its ability to present information other than text. It is much more difficult to view pictures or other multimedia files with methods other than the Web.

A type of software called a browser made it possible for client computers to display Web pages with pictures and other media. Each Web document contains coded information about what is on the page, how the page should look, and to which other sites the document links. The browser on the client’s computer reads this information and uses it to display the page on the client’s screen. Almost every Web page or Web document includes links, called hyperlinks, to other Web sites. Hyperlinks are a defining feature of the Web: they allow users to travel between Web documents without following a specific order or hierarchy.

|

III |

|

HOW THE WEB WORKS |

When users want to access the Web, they use the Web browser on their client computer to connect to a Web server. Client computers connect to the Web in one of two ways. Client computers with dedicated access connect directly to the Web through a router (a piece of computer hardware that determines the best way to connect client and server computers) or through a larger network that has a direct connection to the Web. Client computers with dial-up access connect to the Web through a modem, a hardware device that translates information from the computer into signals that can travel over telephone lines. Some modems send signals over cable television lines or over special high-capacity telephone connections such as Integrated Services Digital Network (ISDN) or Asymmetric Digital Subscriber Line (ADSL) service. The client computer and the Web server use one set of rules for passing information back and forth, and the Web browser uses another set of rules to open and display the information that reaches the client computer.

Web servers hold Web documents and the media associated with them. They can be ordinary personal computers, powerful mainframe computers, or anything in between. Client computers access information from Web servers, and any computer that a person uses to access the Web is a client, so a client could be any type of computer. The set of rules that clients and servers use to talk to each other is called a protocol. The Web, like all parts of the Internet, uses the protocol called TCP/IP (Transmission Control Protocol/Internet Protocol). However, each part of the Internet, such as the Web, gopher systems, and File Transfer Protocol (FTP) systems, uses a slightly different system to transfer files between clients and servers.
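The Web's own file-transfer rules are the Hypertext Transfer Protocol (HTTP), the protocol that the http:// prefix of a Web address names. As an illustration, the hypothetical helper below builds the text of a minimal HTTP/1.1 request; it does not open a network connection. A browser would send bytes like these over a TCP connection to port 80 of the server to ask for a page.

```python
def build_get_request(host: str, path: str = "/") -> bytes:
    """Build the text of a minimal HTTP/1.1 GET request.

    HTTP lines end with CR LF, and an empty line marks the end of the headers.
    """
    lines = [
        f"GET {path} HTTP/1.1",  # method, requested document, protocol version
        f"Host: {host}",         # which server name the request is for (required in HTTP/1.1)
        "Connection: close",     # ask the server to close the connection afterward
        "",                      # empty line: end of headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


print(build_get_request("encarta.msn.com", "/explore/default.asp").decode("ascii"))
```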

The address of a Web document helps the client computer find and connect to the server that holds the page. The address of a Web page is called a Uniform Resource Locator (URL). A URL is a compound code that tells the client’s browser three things: the rules the client should use to reach the site, the Internet address that uniquely designates the server, and the location within the server’s file system for a given item. An example of a URL is http://encarta.msn.com/. The first part of the URL, http://, shows that the site is on the World Wide Web. Most browsers are also capable of retrieving files with formats from other parts of the Internet, such as gopher and FTP. Other Internet formats use different codes in the first part of their URLs—for example, gopher uses gopher:// and FTP uses ftp://. The next part of the URL, encarta.msn.com, gives the name, or unique Internet address, of the server on which the Web site is stored. Some URLs specify certain directories or files, such as http://encarta.msn.com/explore/default.asp—explore is the name of the directory in which the file default.asp is found.
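Python's standard `urllib.parse.urlparse` function splits a URL into the same parts the paragraph describes. The `describe_url` helper is hypothetical, and it treats the last path segment as the file name, which holds for this example but not for every URL.

```python
from posixpath import basename, dirname
from typing import Dict
from urllib.parse import urlparse


def describe_url(url: str) -> Dict[str, str]:
    parts = urlparse(url)
    return {
        "scheme": parts.scheme,             # the rules to use, e.g. "http" or "ftp"
        "server": parts.netloc,             # the server's unique Internet name
        "directory": dirname(parts.path),   # where the item sits on the server
        "file": basename(parts.path),       # the item itself
    }


print(describe_url("http://encarta.msn.com/explore/default.asp"))
# {'scheme': 'http', 'server': 'encarta.msn.com', 'directory': '/explore', 'file': 'default.asp'}
```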

The Web holds information in many forms, including text, graphical images, and any type of digital media file, such as video, audio, and virtual reality files. Some elements of Web pages are actually small software programs in their own right. These objects, called applets (from “small application,” application being another name for a computer program), follow a set of instructions written by the person who programmed the applet. Applets allow users to play games on the Web, search databases, perform virtual scientific experiments, and carry out many other actions.

The codes that tell the browser on the client computer how to display a Web document correspond to a set of rules called Hypertext Markup Language (HTML). Each Web document is written as plain text, and the instructions that tell the client computer how to present the document are contained within the document itself, encoded using special symbols called HTML tags. The browser knows how to interpret the HTML tags, so the document appears on the user’s screen as the document designer intended. In addition to HTML, some types of objects on the Web use their own coding. Applets, for example, are small programs written in programming languages such as Visual Basic and Java.
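To see how formatting instructions sit inside the plain text of a document, the sketch below uses Python's standard `html.parser.HTMLParser` to list the tags and text of a small page in the order a browser would read them. The page content and the `TagTracer` class are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple

PAGE = """<html><body>
<h1>Architecture</h1>
<p>The <b>control unit</b> directs the computer.</p>
</body></html>"""


class TagTracer(HTMLParser):
    """Record each tag and each run of visible text in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.events: List[Tuple[str, str]] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        self.events.append(("start", tag))   # e.g. <b> means "begin bold text"

    def handle_endtag(self, tag: str) -> None:
        self.events.append(("end", tag))     # e.g. </b> means "end bold text"

    def handle_data(self, data: str) -> None:
        if data.strip():                     # skip whitespace between tags
            self.events.append(("text", data.strip()))


tracer = TagTracer()
tracer.feed(PAGE)
tracer.close()
for kind, value in tracer.events:
    print(kind, value)
```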

Client-server communication, URLs, and HTML allow Web sites to incorporate hyperlinks, which users can use to navigate through the Web. Hyperlinks are often phrases in the text of a Web document that link to another Web document: when the user clicks the phrase, the hyperlink supplies that document’s URL to the browser. The client’s browser usually distinguishes hyperlinks from ordinary text by displaying them in a different color or underlining them. Hyperlinks allow users to jump between diverse pages on the Web in no particular order. This method of accessing information is called associative access, and some scientists believe it closely resembles the way the human brain accesses stored information. Hyperlinks make referencing information on the Web faster and easier than using most traditional printed documents.
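A browser finds hyperlinks by looking for `<a>` tags and their `href` attributes, then resolving each address against the current page's URL. This sketch uses the standard-library `HTMLParser` and `urljoin`; the `LinkCollector` class and the sample page are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect the target URL of every <a href="..."> hyperlink."""

    def __init__(self, base_url: str) -> None:
        super().__init__()
        self.base_url = base_url
        self.links: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Relative links are resolved against the page's own URL.
                self.links.append(urljoin(self.base_url, href))


page = '<p>See <a href="history.asp">History</a> or <a href="http://www.example.com/">Example</a>.</p>'
collector = LinkCollector("http://encarta.msn.com/explore/default.asp")
collector.feed(page)
print(collector.links)
# ['http://encarta.msn.com/explore/history.asp', 'http://www.example.com/']
```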

|

IV |

|

WHO USES THE WEB |

Even though the World Wide Web is only a part of the Internet, surveys have shown that over 75 percent of Internet use is on the Web. That percentage is likely to grow in the future.

One of the most remarkable aspects of the World Wide Web is its users. They are a cross section of society. Users include students who need to find materials for a term paper, physicians who need to find out about the latest medical research, and college applicants investigating campuses or even filling out application and financial aid forms online. Other users include investors who can look up the trading history of a company’s stock and evaluate data on various commodities and mutual funds. All of this information is readily available on the Web. Users can often find graphs of a company’s financial information that show the information in several different ways.

Travelers investigating a possible trip can take virtual tours, check on airline schedules and fares, and even book a flight on the Web. Many destinations—including parks, cities, resorts, and hotels—have their own Web sites with guides and local maps. Major delivery companies also have Web sites from which customers can track their shipments, finding out where their packages are or when they were delivered.

Government agencies have Web sites where they post regulations, procedures, newsletters, and tax forms. Many elected officials—including almost all members of the United States Congress—have Web sites, where they express their views, list their achievements, and invite input from the voters. The Web also contains directories of e-mail and postal mail addresses and phone numbers.

Many merchants and publishers now do business on the Web. Web users can shop at Web sites of major bookstores, clothing sellers, and other retailers. Many major newspapers have special Web editions that are issued even more frequently than daily. The major broadcast networks use the Web to provide supplementary materials for radio and television shows, especially documentaries. Electronic journals in almost every scholarly field are now on the Web. Most museums now offer the Web user a virtual tour of their exhibits and holdings. These businesses and institutions usually use their Web sites to complement the non-Web parts of their operations. Some receive extra revenues from selling advertising space on their Web sites. Some businesses, especially publishers, provide limited information to ordinary Web users, but offer much more to users who buy a subscription.

|

V |

|

HISTORY |

The World Wide Web was developed by British physicist and computer scientist Timothy Berners-Lee as a project within the European Organization for Nuclear Research (CERN) in Geneva, Switzerland. Berners-Lee first began working with hypertext in the early 1980s. He proposed the Web at CERN in 1989, his implementation became operational there in 1990, and it quickly spread to universities in the rest of the world through the high-energy physics community of scholars. Groups at the National Center for Supercomputing Applications at the University of Illinois at Urbana-Champaign also researched and developed Web technology. They developed the first major browser, named Mosaic, in 1993. Mosaic was the first browser to come in several different versions, each designed to run on a different operating system. Operating systems are the basic software that control computers.

The architecture of the Web is amazingly straightforward. For the user, the Web is attractive to use because it is built upon a graphical user interface (GUI), a method of displaying information and controls with pictures. The Web also works on diverse types of computing equipment because it is made up of a small set of programs. This small set makes it relatively simple for programmers to write software that can translate information on the Web into a form that corresponds to a particular operating system. The Web’s methods of storing information associatively, retrieving documents with hypertext links, and naming Web sites with URLs make it a smooth extension of the rest of the Internet. This allows easy access to information between different parts of the Internet.

|

VI |

|

FUTURE TRENDS |

People continue to extend and improve on World Wide Web technology. Computer scientists predict that the Web will likely be extended in at least five new ways: new ways of searching the Web, new ways of restricting access to intellectual property, more integration of entire databases into the Web, more access to software libraries, and more electronic commerce.

HTML will probably continue to evolve into new forms with extended capabilities for formatting Web pages. Other complementary programming and coding systems, such as Visual Basic scripting (VBScript), Virtual Reality Modeling Language (VRML), ActiveX programming, and JavaScript, will probably continue to gain larger roles on the Web. This will result in more powerful Web pages, capable of bringing information to users in more engaging and exciting ways.

On the hardware side, faster connections to the Web will allow users to download more information, making it practical to include more information and more complicated multimedia elements on each Web page. Software, telephone, and cable companies are planning partnerships that will allow information from the Web to travel into homes along improved telephone lines and coaxial cable such as that used for cable television. New kinds of computers, specifically designed for use with the Web, may become increasingly popular. These computers are less expensive than ordinary computers because they have fewer features, retaining only those required by the Web. Some computers even use ordinary television sets, instead of special computer monitors, to display content from the Web.

Neural Network

|

I |

|

INTRODUCTION |

Neural Network, in computer science, highly interconnected network of information-processing elements that mimics the connectivity and functioning of the human brain. Neural networks address problems that are often difficult for traditional computers to solve, such as speech and pattern recognition. They also provide some insight into the way the human brain works. One of the most significant strengths of neural networks is their ability to learn from a limited set of examples.

Neural networks were initially studied by computer and cognitive scientists in the late 1950s and early 1960s in an attempt to model sensory perception in biological organisms. Neural networks have been applied to many problems since they were first introduced, including pattern recognition, handwritten character recognition, speech recognition, financial and economic modeling, and next-generation computing models.

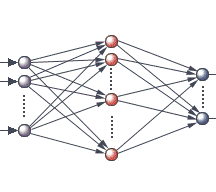

Artificial Neural Network

The neural networks that are increasingly being used in computing mimic those found in the nervous systems of vertebrates. The main characteristic of a biological neural network, top, is that each neuron, or nerve cell, receives signals from many other neurons through its branching dendrites. The neuron produces an output signal that depends on the values of all the input signals and passes this output on to many other neurons along a branching fiber called an axon. In an artificial neural network, bottom, input signals, such as signals from a television camera’s image, fall on a layer of input nodes, or computing units. Each of these nodes is linked to several other “hidden” nodes between the input and output nodes of the network. There may be several layers of hidden nodes, though for simplicity only one is shown here. Each hidden node performs a calculation on the signals reaching it and sends a corresponding output signal to other nodes. The final output is a highly processed version of the input.

|

II |

|

HOW A NEURAL NETWORK WORKS |

Neural networks fall into two categories: artificial neural networks and biological neural networks. Artificial neural networks are modeled on the structure and functioning of biological neural networks. The most familiar biological neural network is the human brain. The human brain is composed of approximately 100 billion nerve cells called neurons that are massively interconnected. Typical neurons in the human brain are connected to on the order of 10,000 other neurons, with some types of neurons having more than 200,000 connections. The extensive number of neurons and their high degree of interconnectedness are part of the reason that the brains of living creatures are capable of making a vast number of calculations in a short amount of time.

|

A |

|

Neurons |

Biological neurons have a fairly simple large-scale structure, although their operation and small-scale structure are immensely complex. Neurons have three main parts: a central cell body, called the soma, and two different types of branched, treelike structures that extend from the soma, called dendrites and axons. Information from other neurons, in the form of electrical impulses, enters the dendrites at connection points called synapses. The information flows from the dendrites to the soma, where it is processed. The output signal, a train of impulses, is then sent down the axon to the synapses of other neurons.

Artificial neurons, like their biological counterparts, have simple structures and are designed to mimic the function of biological neurons. The main body of an artificial neuron is called a node or unit. If the artificial neurons are built as simple integrated circuits, for instance, they may be physically connected to one another by wires that mimic the connections between biological neurons. However, neural networks are usually simulated on traditional computers, in which case the connections between processing nodes are not physical but virtual.

Artificial neurons may be either discrete or continuous. Discrete neurons send an output signal of 1 if the sum of received signals is above a certain critical value called a threshold value; otherwise they send an output signal of 0. Continuous neurons are not restricted to output values of only 1 and 0; instead they send an output value between 0 and 1 depending on the total amount of input they receive: the stronger the received signal, the stronger the signal sent out from the node, and vice versa. Continuous neurons are the type most commonly used in actual artificial neural networks.
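The two kinds of neuron can be compared directly. The original does not name a specific function for continuous neurons; this sketch assumes the logistic (sigmoid) function, a common choice, and a threshold of 0 for the discrete neuron.

```python
import math


def discrete_neuron(total_input: float, threshold: float = 0.0) -> int:
    """Send 1 if the summed input is above the threshold, otherwise 0."""
    return 1 if total_input > threshold else 0


def continuous_neuron(total_input: float) -> float:
    """Logistic output: always between 0 and 1, rising smoothly with the input.

    The two branches compute the same function while avoiding overflow in
    math.exp for large negative inputs.
    """
    if total_input >= 0:
        return 1.0 / (1.0 + math.exp(-total_input))
    z = math.exp(total_input)
    return z / (1.0 + z)


for x in (-4.0, -0.5, 0.5, 4.0):
    print(x, discrete_neuron(x), round(continuous_neuron(x), 3))
```

The discrete neuron jumps from 0 to 1 at the threshold, while the continuous neuron's output grows gradually, so a slightly stronger input always produces a slightly stronger output.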

|

B |

|

Artificial Neural Network Architecture |

The architecture of a neural network is the specific arrangement and connections of the neurons that make up the network. One of the most common neural network architectures has three layers. The first layer is called the input layer and is the only layer exposed to external signals. The input layer transmits signals to the neurons in the next layer, which is called a hidden layer. The hidden layer extracts relevant features or patterns from the received signals. Those features or patterns that are considered important are then directed to the output layer, the final layer of the network. Sophisticated neural networks may have several hidden layers, feedback loops, and time-delay elements, which are designed to make the network as efficient as possible in discriminating relevant features or patterns from the input layer.
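A minimal sketch of the three-layer arrangement, assuming logistic neurons, no bias terms, and hypothetical weights: each layer computes weighted sums of the previous layer's outputs, and only the input list comes from outside the network.

```python
import math
from typing import List

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs: List[float], weights: Matrix) -> List[float]:
    """Each row of `weights` holds the incoming connection weights of one
    neuron in the next layer."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in weights]


def forward(inputs: List[float], w_hidden: Matrix, w_output: Matrix) -> List[float]:
    hidden = layer(inputs, w_hidden)   # input layer -> hidden layer (feature extraction)
    return layer(hidden, w_output)     # hidden layer -> output layer


# Hypothetical weights: 3 input neurons, 2 hidden neurons, 1 output neuron.
W_HIDDEN = [[0.5, -0.2, 0.1], [-0.3, 0.8, 0.4]]
W_OUTPUT = [[1.2, -0.7]]
print(forward([1.0, 0.0, 1.0], W_HIDDEN, W_OUTPUT))
```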

|

III |

|

DIFFERENCES BETWEEN NEURAL NETWORKS AND TRADITIONAL COMPUTERS |

Neural networks differ greatly from traditional computers (for example personal computers, workstations, mainframes) in both form and function. While neural networks use a large number of simple processors to do their calculations, traditional computers generally use one or a few extremely complex processing units. Neural networks also do not have a centrally located memory, nor are they programmed with a sequence of instructions, as are all traditional computers.

The information processing of a neural network is distributed throughout the network in the form of its processors and connections, while the memory is distributed in the form of the weights given to the various connections. The distribution of both processing capability and memory means that damage to part of the network does not necessarily result in processing dysfunction or information loss. This ability of neural networks to withstand limited damage and continue to function well is one of their greatest strengths.

Neural networks also differ greatly from traditional computers in the way they are programmed. Rather than using programs that are written as a series of instructions, as do all traditional computers, neural networks are “taught” with a limited set of training examples. The network is then able to “learn” from the initial examples to respond to information sets that it has never encountered before. The resulting values of the connection weights can be thought of as a “program.”

Neural networks are usually simulated on traditional computers. The advantage of this approach is that computers can easily be reprogrammed to change the architecture or learning rule of the simulated neural network. Since the computation in a neural network is massively parallel, the processing speed of a simulated neural network can be increased by using massively parallel computers—computers that link together hundreds or thousands of CPUs in parallel to achieve very high processing speeds.

|

IV |

|

NEURAL NETWORK LEARNING |

In all biological neural networks the connections between particular dendrites and axons may be reinforced or discouraged. For example, connections may become reinforced as more signals are sent down them, and may be discouraged when signals are infrequently sent down them. The reinforcement of certain neural pathways, or dendrite-axon connections, results in a higher likelihood that a signal will be transmitted along that path, further reinforcing the pathway. Paths between neurons that are rarely used slowly atrophy, or decay, making it less likely that signals will be transmitted along them.

The role of connection strengths between neurons in the brain is crucial; scientists believe they determine, to a great extent, the way in which the brain processes the information it takes in through the senses. Neuroscientists studying the structure and function of the brain believe that various patterns of neurons firing can be associated with specific memories. In this theory, the strength of the connections between the relevant neurons determines the strength of the memory. Important information that needs to be remembered may cause the brain to constantly reinforce the pathways between the neurons that form the memory, while relatively unimportant information will not receive the same degree of reinforcement.

|

A |

|

Connection Weights |

To mimic the way in which biological neurons reinforce certain axon-dendrite pathways, the connections between artificial neurons in a neural network are given adjustable connection weights, or measures of importance. When signals are received and processed by a node, they are multiplied by a weight, added up, and then transformed by a nonlinear function. The effect of the nonlinear function is to cause the sum of the input signals to approach some value, usually +1 or 0. If the signals entering the node add up to a positive number, the node sends an output signal that approaches +1 out along all of its connections, while if the signals add up to a negative value, the node sends a signal that approaches 0. This is similar to a simplified model of how a biological neuron functions: the larger the input signal, the larger the output signal.
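The calculation a single node performs can be written out directly. This sketch assumes the logistic function as the nonlinear function and uses hypothetical signal and weight values; it shows a positive weighted sum giving an output near +1 and a negative one giving an output near 0.

```python
import math
from typing import Sequence


def node_output(signals: Sequence[float], weights: Sequence[float]) -> float:
    """Multiply each incoming signal by its connection weight, add the
    products, then squash the total with the logistic function."""
    total = sum(s * w for s, w in zip(signals, weights))
    return 1.0 / (1.0 + math.exp(-total))


signals = [1.0, 0.5, 1.0]
print(node_output(signals, [2.0, 1.0, 1.5]))     # weighted sum +4.0 -> close to 1
print(node_output(signals, [-2.0, -1.0, -1.5]))  # weighted sum -4.0 -> close to 0
```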

|

B |

|

Training Sets |

Computer scientists teach neural networks by presenting them with training sets of desired input-output pairs, which are related patterns of data. For instance, a sample training set might consist of ten different photographs of each of ten different faces. The desired output would be for the network to signal a different neuron in its output layer for each face. Beginning with equal, or random, connection weights between the neurons, each photograph is digitally entered into the input layer of the network, and an output signal is computed and compared to the target output. Small adjustments are then made to the connection weights to reduce the difference between the actual output and the target output. Because the network usually chooses the incorrect output neuron the first few times an input is entered, the input-output set is presented to the network again and further adjustments are made to the connection weights. After repeating the weight-adjustment process many times for all input-output patterns in the training set, the network learns to respond in the desired manner.
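The present, compare, and adjust cycle can be sketched with a single continuous neuron. This is a minimal illustration, assuming a gradient-descent update (often called the delta rule) that the original does not specify, a hypothetical training set for logical OR in place of photographs, and a bias weight that acts as an adjustable threshold.

```python
import math
from typing import List, Tuple

Example = Tuple[List[float], float]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights: List[float], bias: float, inputs: List[float]) -> float:
    return sigmoid(bias + sum(w * x for w, x in zip(weights, inputs)))


def train(examples: List[Example], epochs: int = 5000, rate: float = 0.5) -> Tuple[List[float], float]:
    weights = [0.0] * len(examples[0][0])  # begin with equal connection weights
    bias = 0.0
    for _ in range(epochs):                # present the whole training set many times
        for inputs, target in examples:
            out = predict(weights, bias, inputs)
            error = target - out           # compare actual output with target output
            step = rate * error * out * (1.0 - out)
            # Small adjustment to each weight in the direction that shrinks the error.
            weights = [w + step * x for w, x in zip(weights, inputs)]
            bias += step
    return weights, bias


# Hypothetical training set: logical OR.
OR_SET: List[Example] = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
w, b = train(OR_SET)
print([round(predict(w, b, x)) for x, _ in OR_SET])  # [0, 1, 1, 1]
```

A face-recognition network would follow the same cycle, but with many inputs per photograph and one output neuron per face.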

A neural network is said to have learned when it can correctly perform the tasks for which it has been trained. Neural networks are able to extract the important features and patterns of a class of training examples and generalize from these to correctly process new input data that they have not encountered before. For a neural network trained to recognize a series of photographs, generalization would be demonstrated if a new photograph presented to the network resulted in the correct output neuron being signaled.

A number of different neural network learning rules, or algorithms, exist and use various techniques to process information. Most of them adjust the connection weights between the neurons automatically. The most widely used scheme for adjusting the connection weights is called error back-propagation, developed independently by American computer scientists Paul Werbos (in 1974), David Parker (in 1984/1985), and David Rumelhart, Ronald Williams, and others (in 1985). The back-propagation learning scheme compares a neural network’s calculated output to a target output and calculates an error adjustment for each of the nodes in the network. The neural network adjusts the connection weights according to the error values assigned to each node, beginning with the connections between the last hidden layer and the output layer. After the network has made adjustments to this set of connections, it calculates error values for the preceding layer and makes adjustments there. The back-propagation algorithm continues in this way, adjusting all of the connection weights between the hidden layers until it reaches the input layer. At this point it is ready to calculate another output.
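The backward flow of error values can be sketched for a tiny network with two inputs, two hidden nodes, and one output node. This sketch assumes logistic neurons, a squared-error measure, and hypothetical random starting weights. Following standard back-propagation, every adjustment is computed from the weights as they stood before the update. The hypothetical `numerical_gradient` helper checks one computed adjustment against a finite-difference estimate.

```python
import math
import random
from typing import List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class TinyNet:
    """2 inputs -> 2 hidden logistic nodes -> 1 logistic output node."""

    def __init__(self, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w_h = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
        self.b_h = [rng.uniform(-1, 1) for _ in range(2)]
        self.w_o = [rng.uniform(-1, 1) for _ in range(2)]
        self.b_o = rng.uniform(-1, 1)

    def forward(self, x: List[float]) -> Tuple[List[float], float]:
        h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w_h, self.b_h)]
        y = sigmoid(sum(w * hj for w, hj in zip(self.w_o, h)) + self.b_o)
        return h, y

    def loss(self, x: List[float], target: float) -> float:
        _, y = self.forward(x)
        return 0.5 * (target - y) ** 2

    def backprop(self, x: List[float], target: float):
        h, y = self.forward(x)
        # Error value for the output node: d(loss)/d(net input of the output node).
        delta_o = (y - target) * y * (1.0 - y)
        grad_w_o = [delta_o * hj for hj in h]
        # Error values for the hidden layer, passed back through the output weights.
        delta_h = [delta_o * self.w_o[j] * h[j] * (1.0 - h[j]) for j in range(2)]
        grad_w_h = [[delta_h[j] * xi for xi in x] for j in range(2)]
        return grad_w_h, delta_h, grad_w_o, delta_o

    def step(self, x: List[float], target: float, rate: float = 0.5) -> None:
        gw_h, gb_h, gw_o, gb_o = self.backprop(x, target)
        # Output-layer connections first, then the hidden layer.
        self.w_o = [w - rate * g for w, g in zip(self.w_o, gw_o)]
        self.b_o -= rate * gb_o
        self.w_h = [[w - rate * g for w, g in zip(row, grow)]
                    for row, grow in zip(self.w_h, gw_h)]
        self.b_h = [b - rate * g for b, g in zip(self.b_h, gb_h)]


def numerical_gradient(net: TinyNet, x: List[float], target: float, eps: float = 1e-6) -> float:
    """Finite-difference estimate of d(loss)/d(w_o[0]), for checking backprop."""
    original = net.w_o[0]
    net.w_o[0] = original + eps
    plus = net.loss(x, target)
    net.w_o[0] = original - eps
    minus = net.loss(x, target)
    net.w_o[0] = original
    return (plus - minus) / (2 * eps)


net = TinyNet(seed=1)
x, target = [1.0, 0.0], 1.0
print("backprop:", net.backprop(x, target)[2][0])
print("numeric: ", numerical_gradient(net, x, target))
before = net.loss(x, target)
net.step(x, target)
print("loss before/after one step:", before, net.loss(x, target))
```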

|

V |

|

IMPLEMENTATIONS AND FUTURE TECHNOLOGY |

Neural networks have been applied to many tasks that are easy for humans to accomplish, but difficult for traditional computers. Because neural networks mimic the brain, they have shown much promise in so-called sensory processing tasks such as speech recognition, pattern recognition, and the transcription of hand-written text. In some settings, neural networks can perform as well as humans. Neural-network-based backgammon software, for example, rivals the best human players.

While traditional computers still outperform neural networks in most situations, neural networks are superior in recognizing patterns in extremely large data sets. Furthermore, because neural networks have the ability to learn from a set of examples and generalize this knowledge to new situations, they are excellent for work requiring adaptive control systems. For this reason, the United States National Aeronautics and Space Administration (NASA) has extensively studied neural networks to determine whether they might serve to control future robots sent to explore planetary bodies in our solar system. In this application, robots could be sent to other planets, such as Mars, to carry out significant and detailed exploration autonomously.

An important advantage that neural networks have over traditional computer systems is that they can sustain damage and still function properly. This design characteristic of neural networks makes them very attractive candidates for future aircraft control systems, especially in high performance military jets. Another potential use of neural networks for civilian and military use is in pattern recognition software for radar, sonar, and other remote-sensing devices.

Motherboard

Motherboard, in computer science, the main circuit board in a computer. The most important computer chips and other electronic components that give function to a computer are located on the motherboard. The motherboard is a printed circuit board that connects the various elements on it through the use of traces, or electrical pathways. The motherboard is indispensable to the computer and provides the main computing capability.

Personal computers normally have one central processing unit (CPU), or microprocessor, which is located with other chips on the motherboard. The manufacturer and model of the CPU chip carried by the motherboard are key criteria for designating the speed and other capabilities of the computer. In many personal computers the CPU is not permanently attached to the motherboard but is instead plugged into a socket so that it can be removed and upgraded.

Motherboards also contain important computing components, such as the basic input/output system (BIOS), which contains the basic set of instructions required to control the computer when it is first turned on; different types of memory chips such as random access memory (RAM) and cache memory; mouse, keyboard, and monitor control circuitry; and logic chips that control various parts of the computer’s function. Having as many of the key components of the computer as possible on the motherboard improves the speed and operation of the computer.

Users may expand their computer’s capability by inserting an expansion board into special expansion slots on the motherboard. Nearly all personal computers include expansion slots, and the boards inserted into them can add faster speed, better graphics, communication with other computers, and audio and video capabilities. Expansion slots come in either half or full size and can transfer 8 or 16 bits at a time, respectively (a bit is the smallest unit of information that a computer can process).

The pathways that carry data on the motherboard are called buses. The amount of data that can be transmitted at one time between a device, such as a printer or monitor, and the CPU affects the speed at which programs run. For this reason, buses are designed to carry as much data as possible. To work properly, expansion boards must conform to bus standards such as Integrated Drive Electronics (IDE), Extended Industry Standard Architecture (EISA), or Small Computer System Interface (SCSI).
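A rough calculation shows why the amount of data a bus carries at one time matters. This sketch assumes an idealized bus that completes one transfer per clock cycle at a hypothetical 8 MHz; real buses often need several cycles per transfer, so these are upper bounds, and the function name `peak_bytes_per_sec` is invented for the example.

```python
def peak_bytes_per_sec(width_bits, transfers_per_sec):
    """Idealized peak throughput: bytes moved per transfer times transfers per second."""
    return (width_bits // 8) * transfers_per_sec

BUS_CLOCK_HZ = 8_000_000  # hypothetical: 8 MHz, one transfer per clock cycle

for width in (8, 16, 32):
    rate = peak_bytes_per_sec(width, BUS_CLOCK_HZ)
    print(f"{width:2d}-bit bus: {rate / 1_000_000:.0f} million bytes per second")
```

At the same clock rate, doubling the bus width doubles the peak amount of data moved per second.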

Central Processing Unit

|

I |

|

INTRODUCTION |

Central Processing Unit (CPU), in computer science, microscopic circuitry that serves as the main information processor in a computer. A CPU is generally a single microprocessor made from a wafer of semiconducting material, usually silicon, with millions of electrical components on its surface. At a higher level of organization, the CPU is actually a number of interconnected processing units, each responsible for one aspect of the CPU’s function. Standard CPUs contain processing units that interpret and implement software instructions, perform calculations and comparisons, make logical decisions (determining whether a statement is true or false based on the rules of Boolean algebra), temporarily store information for use by another of the CPU’s processing units, keep track of the current step in the execution of the program, and allow the CPU to communicate with the rest of the computer.

|

II |

|

HOW A CPU WORKS |

|

A |

|

CPU Function |

A CPU is similar to a calculator, only much more powerful. The main function of the CPU is to perform arithmetic and logical operations on data taken from memory or on information entered through some device, such as a keyboard, scanner, or joystick. The CPU is controlled by a list of software instructions, called a computer program. Software instructions entering the CPU originate in some form of memory storage device such as a hard disk, floppy disk, CD-ROM, or magnetic tape. These instructions then pass into the computer’s main random access memory (RAM), where each instruction is given a unique address, or memory location. The CPU can access specific pieces of data in RAM by specifying the address of the data that it wants.

As a program is executed, data flow from RAM through a set of wires called the bus, which connects the CPU to RAM. The program’s instructions are decoded by a processing unit called the instruction decoder, which interprets and implements software instructions. From the instruction decoder the data pass to the arithmetic/logic unit (ALU), which performs calculations and comparisons. The ALU may store data in temporary memory locations called registers, where they can be retrieved quickly. The ALU performs specific operations such as addition, multiplication, and conditional tests on the data in its registers, sending the results back to RAM or storing them in another register for further use. During this process, a unit called the program counter keeps track of each successive instruction to make sure that the CPU follows the program instructions in the correct order.
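The cycle described above (fetch an instruction from RAM, decode it, let the ALU execute it, advance the program counter) can be imitated with a toy simulator. This is a hypothetical sketch, not a model of any real CPU: the instruction set (`LOAD`, `ADD`, `MUL`, `STORE`, `HALT`), the four registers, and the use of a Python list as RAM holding both instructions and data are assumptions chosen for clarity.

```python
def run(ram, max_steps=1000):
    """Fetch, decode, and execute instructions stored in ram, starting at address 0."""
    regs = [0] * 4   # registers R0-R3: fast temporary storage
    pc = 0           # program counter: address of the next instruction
    for _ in range(max_steps):
        op, a, b = ram[pc]   # fetch the instruction stored at address pc
        pc += 1              # advance to the next instruction in sequence
        # Decode the opcode and execute it.
        if op == "LOAD":     # LOAD r, addr: copy RAM[addr] into register r
            regs[a] = ram[b]
        elif op == "ADD":    # ADD r, s: ALU adds register s into register r
            regs[a] += regs[b]
        elif op == "MUL":    # MUL r, s: ALU multiplies register r by register s
            regs[a] *= regs[b]
        elif op == "STORE":  # STORE r, addr: send register r back to RAM[addr]
            ram[b] = regs[a]
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r} at address {pc - 1}")
    raise RuntimeError("step limit reached without HALT")

# Addresses 0-6 hold the program; addresses 10-13 hold data.
ram = [None] * 16
ram[0:7] = [
    ("LOAD", 0, 10),   # R0 = RAM[10]
    ("LOAD", 1, 11),   # R1 = RAM[11]
    ("ADD", 0, 1),     # R0 = R0 + R1
    ("LOAD", 2, 12),   # R2 = RAM[12]
    ("MUL", 0, 2),     # R0 = R0 * R2
    ("STORE", 0, 13),  # RAM[13] = R0
    ("HALT", 0, 0),
]
ram[10], ram[11], ram[12] = 6, 7, 3
run(ram)
print(ram[13])  # 39
```

Every instruction and every piece of data is reached through its address, and the program counter alone decides which instruction runs next.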

|

B |

|

Branching Instructions |

The program counter in the CPU usually advances sequentially through the instructions. However, special instructions called branch or jump instructions allow the CPU to shift abruptly to an instruction location out of sequence. These branches are either unconditional or conditional. An unconditional branch always jumps to a new, out-of-sequence instruction. A conditional branch tests the result of a previous operation to see whether the branch should be taken. For example, a branch might be taken only if a previous subtraction produced a negative result. The results that are tested for conditional branching are stored in special locations in the CPU called flags.
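A minimal sketch of both kinds of branch, using a hypothetical toy instruction set: `JMP` is an unconditional branch, and `JN` is a conditional branch taken only when the last subtraction set a negative flag, mirroring the example in the text. The opcodes, the single flag, and the register layout are assumptions for illustration. The program multiplies R1 by R2 through repeated addition.

```python
def run(program, regs, max_steps=10_000):
    """Execute a hypothetical toy program and return the final registers."""
    negative_flag = False   # set by SUB when its result is below zero
    pc = 0                  # program counter
    for _ in range(max_steps):
        op, *args = program[pc]
        pc += 1                          # default: advance sequentially
        if op == "ADD":                  # ADD r, s: r = r + s
            regs[args[0]] += regs[args[1]]
        elif op == "SUB":                # SUB r, s: r = r - s, then update the flag
            regs[args[0]] -= regs[args[1]]
            negative_flag = regs[args[0]] < 0
        elif op == "JMP":                # unconditional branch
            pc = args[0]
        elif op == "JN":                 # conditional branch: taken only if the
            if negative_flag:            # last subtraction was negative
                pc = args[0]
        elif op == "HALT":
            return regs
        else:
            raise ValueError(f"unknown opcode {op!r}")
    raise RuntimeError("step limit reached")

# R0 = R1 * R2 by repeated addition; R3 holds the constant 1.
program = [
    ("SUB", 2, 3),   # 0: R2 = R2 - 1, sets the negative flag
    ("JN", 4),       # 1: if R2 < 0, leave the loop
    ("ADD", 0, 1),   # 2: R0 = R0 + R1
    ("JMP", 0),      # 3: jump back to the top of the loop
    ("HALT",),       # 4
]
print(run(program, [0, 5, 4, 1])[0])  # 20
```

Without branches the program could only run straight through once; the backward `JMP` creates a loop, and the conditional `JN` ends it.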

|

C |

|

Clock Pulses |

The CPU is driven by one or more repetitive clock circuits that send a constant stream of pulses throughout the CPU’s circuitry. The CPU uses these clock pulses to synchronize its operations. The smallest increments of CPU work are completed between sequential clock pulses; more complex tasks take several clock periods to complete. Clock rate is measured in hertz, or number of pulses per second. For instance, a 100-megahertz (100-MHz) processor has 100 million clock pulses passing through it per second. The clock rate is one measure of the speed of a processor.
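The relationship between clock rate and time can be worked out directly: the clock period is the reciprocal of the frequency, and a task needing several clock periods takes that many periods. The sketch below uses the 100-MHz figure from the text; the four-cycle task is a hypothetical value.

```python
import math

def clock_period_ns(freq_hz):
    """Time between successive clock pulses, in nanoseconds."""
    return 1e9 / freq_hz

def task_time_ns(cycles, freq_hz):
    """Time for a task that needs the given number of clock periods."""
    return cycles * clock_period_ns(freq_hz)

freq = 100e6  # the 100-MHz processor from the text
print(clock_period_ns(freq))   # 10.0 (nanoseconds per pulse)
print(task_time_ns(4, freq))   # 40.0 (hypothetical 4-cycle task)
```

A 100-MHz clock therefore gives 10 nanoseconds per pulse, and any work taking several pulses scales accordingly.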

|

D |

|

Fixed-Point and Floating-Point Numbers |

Most CPUs handle two different kinds of numbers: fixed-point and floating-point numbers. Fixed-point numbers have a specific number of digits on either side of the decimal point. This restriction limits the range of values that these numbers can represent, but it also allows the fastest arithmetic. Floating-point numbers are expressed in a form of scientific notation, in which a number is represented as a decimal number multiplied by a power of ten (computer hardware applies the same idea using powers of two). Scientific notation is a compact way of expressing very large or very small numbers and allows a wide range of magnitudes. This is important for representing graphics and for scientific work, but floating-point arithmetic is more complex and can take longer to complete. Performing an operation on a floating-point number may require many CPU clock periods, so a CPU’s floating-point computation rate is less than its clock rate. Some computers use a special floating-point processor, called a coprocessor, that works in parallel with the CPU to speed up calculations using floating-point numbers. This coprocessor has become a standard built-in part of many personal computer CPUs, such as Intel’s Pentium chip.
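The trade-off between the two number formats can be sketched in Python. The fixed-point part assumes a hypothetical format that stores values as integer counts of hundredths (two decimal digits after the point), so its arithmetic is ordinary integer arithmetic but its range and precision are limited. The floating-point part uses the standard `math.frexp` function, which splits a float into a mantissa and a base-2 exponent.

```python
import math

SCALE = 100  # hypothetical fixed-point format: two decimal digits after the point

def to_fixed(x):
    """Store x as an integer count of hundredths."""
    return round(x * SCALE)

def fixed_to_str(n):
    """Format a scaled integer back into decimal notation."""
    sign = "-" if n < 0 else ""
    n = abs(n)
    return f"{sign}{n // SCALE}.{n % SCALE:02d}"

# Fixed-point addition is plain integer addition.
a, b = to_fixed(1.25), to_fixed(2.50)
print(fixed_to_str(a + b))   # 3.75

# Limited range: values smaller than 0.01 cannot be represented at all.
print(to_fixed(0.0001))      # 0

# Floating point keeps a mantissa and an exponent (powers of two in hardware).
m, e = math.frexp(6.0)
print(m, e)                  # 0.75 3, because 6.0 == 0.75 * 2**3
```

The fixed-point value loses anything below its last digit, while the floating-point exponent lets the same storage cover very large and very small magnitudes.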

|

III |

|

HISTORY |

|

A |

|

Early Computers |

In the first computers, CPUs were made of vacuum tubes and electric relays rather than microscopic transistors on computer chips. These early computers were immense and needed a great deal of power compared to today’s microprocessor-driven computers. The first general-purpose electronic computer, the ENIAC (Electronic Numerical Integrator And Computer), was completed in 1946 and filled a large room. About 18,000 vacuum tubes were used to build ENIAC’s CPU and input/output circuits. Between 1946 and 1956 all computers had bulky CPUs that consumed massive amounts of energy and needed continual maintenance, because the vacuum tubes burned out frequently and had to be replaced.

|

B |

|

The Transistor |

A solution to the problems posed by vacuum tubes came in 1947, when American physicists John Bardeen, Walter Brattain, and William Shockley first demonstrated a revolutionary new electronic switching and amplifying device called the transistor. The transistor had the potential to work faster and more reliably and to consume much less power than a vacuum tube. Despite the overwhelming advantages transistors offered over vacuum tubes, it took nine years before they were used in a commercial computer. The first commercially available computer to use transistors in its circuitry was the UNIVAC (UNIVersal Automatic Computer), delivered to the United States Air Force in 1956.

|

C |

|

The Integrated Circuit |

Development of the computer chip started in 1958 when Jack Kilby of Texas Instruments demonstrated that it was possible to integrate the various components of an electronic circuit onto a single piece of semiconductor material. These computer chips were called integrated circuits (ICs) because they combined multiple electronic circuits on the same chip. Subsequent design and manufacturing advances allowed transistor densities on integrated circuits to increase tremendously. The first ICs had only tens of transistors per chip, compared to the 3 million to 5 million transistors per chip common on today’s CPUs.

In 1967 Fairchild Semiconductor introduced a single integrated circuit that contained all the arithmetic logic functions for an eight-bit processor. (A bit is the smallest unit of information used in computers. Multiples of a bit are used to describe the largest piece of data that a CPU can manipulate at one time.) However, a fully working integrated circuit computer required additional circuits to provide register storage, data flow control, and memory and input/output paths. Intel Corporation accomplished this in 1971 when it introduced the Intel 4004 microprocessor. Although the 4004 could manage only four-bit arithmetic, it was powerful enough to become the core of many useful hand calculators of the time. In 1975 Micro Instrumentation and Telemetry Systems (MITS) introduced the Altair 8800, the first personal computer kit to feature an eight-bit microprocessor. Because microprocessors were so inexpensive and reliable, computing technology rapidly advanced to the point where individuals could afford to buy a small computer. The concept of the personal computer was made possible by the advent of the microprocessor CPU. In 1978 Intel introduced the first of its x86 CPUs, the 8086 16-bit microprocessor. Although 16-bit microprocessors are still common, today’s microprocessors are becoming increasingly sophisticated, with many 32-bit and even 64-bit CPUs available. High-performance processors can run with internal clock rates that exceed 500 MHz, or 500 million clock pulses per second.

|

IV |

|

CURRENT DEVELOPMENTS |

The competitive nature of the computer industry and the demand for faster, more cost-effective computing continue the drive toward faster CPUs. However, the minimum transistor size that can be manufactured using current technology is fast approaching its theoretical limit. In the standard technique for manufacturing microprocessors, called photolithography, ultraviolet (short-wavelength) light is used to expose a light-sensitive covering on the silicon chip. Various methods are then used to etch the base material along the pattern created by the light. These etchings form the paths that electricity follows in the chip. The theoretical limit for transistor size using this type of manufacturing process is approximately equal to the wavelength of the light used to expose the light-sensitive covering. By using light of shorter wavelength, greater detail can be achieved and smaller transistors can be manufactured, resulting in faster, more powerful CPUs. Printing integrated circuits with X-rays, which have a much shorter wavelength than ultraviolet light, may provide further reductions in transistor size that will translate into improvements in CPU speed.

Many other avenues of research are being pursued in an attempt to make faster CPUs. New base materials for integrated circuits, such as composite layers of gallium arsenide and gallium aluminum arsenide, may contribute to faster chips. Alternatives to the standard transistor-based model of the CPU are also being considered. Experimental ideas in computing may radically change the design of computers and the concept of the CPU in the future. These ideas include quantum computing, in which single atoms hold bits of information; molecular computing, where certain types of problems may be solved using recombinant DNA techniques; and neural networks, which are computer systems with the ability to learn.

Computer Memory

|

I |

|

INTRODUCTION |

Computer Memory, device that stores data for use by a computer. Most memory devices represent data with the binary number system. In the binary number system, numbers are represented by sequences of the digits 0 and 1. In a computer, these numbers correspond to the on and off states of the computer’s electronic circuitry. Each binary digit is called a bit, which is the basic unit of memory in a computer. A group of eight bits is called a byte, and can represent decimal numbers ranging from 0 to 255. When these numbers are each assigned to a letter, digit, or symbol, in what is known as a character code, a byte can also represent a single character.
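The byte arithmetic and the idea of a character code can be checked in a few lines of Python. The sketch uses ASCII as one example of a character code; the text does not name a specific code, so that choice is an assumption.

```python
BITS_PER_BYTE = 8

# An n-bit unsigned value ranges from 0 to 2**n - 1.
largest = 2 ** BITS_PER_BYTE - 1
print(largest)                      # 255

# The decimal number 65 written as eight binary digits, and back again.
bits = format(65, "08b")
print(bits)                         # 01000001
print(int(bits, 2))                 # 65

# Under the ASCII character code, the byte value 65 stands for the letter A.
print(bytes([65]).decode("ascii"))  # A
```

Eight bits give 2 × 2 × ... × 2 = 256 distinct patterns, which is why a byte covers the decimal values 0 through 255.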