- •Contents

- •Updated Information

- •About This Book

- •Introduction to ESX Configuration

- •Networking

- •Introduction to Networking

- •Networking Concepts Overview

- •Network Services

- •View Networking Information in the vSphere Client

- •View Network Adapter Information in the vSphere Client

- •vNetwork Standard Switches

- •Port Groups

- •Port Group Configuration for Virtual Machines

- •Add a Virtual Machine Port Group

- •VMkernel Networking Configuration

- •TCP/IP Stack at the VMkernel Level

- •Set Up VMkernel Networking

- •Service Console Configuration

- •Set Up Service Console Networking

- •Configure Service Console Ports

- •Set the Default Gateway

- •Display Service Console Information

- •Using DHCP for the Service Console

- •vNetwork Standard Switch Properties

- •Change the Number of Ports for a vSwitch

- •Change the Speed of an Uplink Adapter

- •Add Uplink Adapters

- •Cisco Discovery Protocol

- •Enable CDP on an ESX Host

- •View Cisco Switch Information on the vSphere Client

- •vNetwork Distributed Switch Architecture

- •Configuring a vNetwork Distributed Switch

- •Create a vNetwork Distributed Switch

- •Add Hosts to a vNetwork Distributed Switch

- •Manage Hosts on a vDS

- •Edit General vNetwork Distributed Switch Settings

- •Edit Advanced vNetwork Distributed Switch Settings

- •View Network Adapter Information for a vNetwork Distributed Switch

- •Upgrade a vDS to a Newer Version

- •dvPort Groups

- •Add a dvPort Group

- •Edit General dvPort Group Properties

- •Edit Advanced dvPort Group Properties

- •dvPorts

- •Monitor dvPort State

- •Configure dvPort Settings

- •Private VLANs

- •Create a Private VLAN

- •Remove a Primary Private VLAN

- •Remove a Secondary Private VLAN

- •Configuring vNetwork Distributed Switch Network Adapters

- •Managing Physical Adapters

- •Add an Uplink to a vNetwork Distributed Switch

- •Remove an Uplink from a vNetwork Distributed Switch

- •Managing Virtual Network Adapters

- •Create a VMkernel Network Adapter on a vNetwork Distributed Switch

- •Create a Service Console Network Adapter on a vNetwork Distributed Switch

- •Migrate an Existing Virtual Adapter to a vNetwork Distributed Switch

- •Migrate a Virtual Adapter to a vNetwork Standard Switch

- •Edit the VMkernel Configuration on a vNetwork Distributed Switch

- •Edit the Service Console Configuration on a vNetwork Distributed Switch

- •Remove a Virtual Adapter

- •Configuring Virtual Machine Networking on a vNetwork Distributed Switch

- •Migrate Virtual Machines to or from a vNetwork Distributed Switch

- •Connect an Individual Virtual Machine to a dvPort Group

- •Network I/O Control

- •Enable Network I/O Control on a vDS

- •Edit Network Resource Pool Settings

- •Advanced Networking

- •Internet Protocol Version 6

- •Enable IPv6 Support on an ESX Host

- •VLAN Configuration

- •Networking Policies

- •Load Balancing and Failover Policy

- •Edit the Failover and Load Balancing Policy on a vSwitch

- •Edit the Failover and Load Balancing Policy on a Port Group

- •Edit the Teaming and Failover Policy on a dvPort Group

- •Edit dvPort Teaming and Failover Policies

- •VLAN Policy

- •Edit the VLAN Policy on a dvPort Group

- •Edit dvPort VLAN Policies

- •Security Policy

- •Edit the Layer 2 Security Policy on a vSwitch

- •Edit the Layer 2 Security Policy Exception on a Port Group

- •Edit the Security Policy on a dvPort Group

- •Edit dvPort Security Policies

- •Traffic Shaping Policy

- •Edit the Traffic Shaping Policy on a vSwitch

- •Edit the Traffic Shaping Policy on a Port Group

- •Edit the Traffic Shaping Policy on a dvPort Group

- •Edit dvPort Traffic Shaping Policies

- •Port Blocking Policies

- •Edit the Port Blocking Policy on a dvPort Group

- •Edit dvPort Port Blocking Policy

- •Change the DNS and Routing Configuration

- •MAC Addresses

- •MAC Address Generation

- •Set Up a MAC Address

- •TCP Segmentation Offload and Jumbo Frames

- •Enabling TSO

- •Enable TSO Support for a Virtual Machine

- •Check Whether TSO Is Enabled on a VMkernel Interface

- •Enabling Jumbo Frames

- •Create a Jumbo Frames-Enabled vSwitch

- •Enable Jumbo Frames on a vNetwork Distributed Switch

- •Enable Jumbo Frame Support on a Virtual Machine

- •Create a Jumbo Frames-Enabled VMkernel Interface

- •NetQueue and Networking Performance

- •Enable NetQueue on an ESX Host

- •Disable NetQueue on an ESX Host

- •VMDirectPath I/O

- •Configure Passthrough Devices on a Host

- •Configure a PCI Device on a Virtual Machine

- •Networking Best Practices

- •Mounting NFS Volumes

- •Networking Configuration for Software iSCSI and Dependent Hardware iSCSI

- •Create iSCSI Port for a Single NIC

- •Using Multiple NICs for Software and Dependent Hardware iSCSI

- •Create Additional iSCSI Ports for Multiple NICs

- •Configuring Networking on Blade Servers

- •Configure a Virtual Machine Port Group with a VLAN on a Blade Server

- •Configure a VMkernel Port with a VLAN on a Blade Server

- •Troubleshooting

- •Troubleshooting Service Console Networking

- •Rename Network Adapters by Using the Service Console

- •Troubleshooting Physical Switch Configuration

- •Troubleshooting Port Group Configuration

- •Storage

- •Introduction to Storage

- •About ESX Storage

- •Types of Physical Storage

- •Local Storage

- •Networked Storage

- •Supported Storage Adapters

- •Target and Device Representations

- •Understanding Fibre Channel Naming

- •Understanding iSCSI Naming and Addressing

- •Understanding Storage Device Naming

- •About ESX Datastores

- •VMFS Datastores

- •Creating and Increasing VMFS Datastores

- •Sharing a VMFS Volume Across ESX Hosts

- •NFS Datastore

- •How Virtual Machines Access Storage

- •Comparing Types of Storage

- •Displaying Storage Adapters

- •View Storage Adapters Information

- •Copy Storage Adapter Identifiers to the Clipboard

- •Viewing Storage Devices

- •Display Storage Devices for a Host

- •Display Storage Devices for an Adapter

- •Copy Storage Device Identifiers to the Clipboard

- •Displaying Datastores

- •Review Datastore Properties

- •Configuring ESX Storage

- •Local SCSI Storage

- •Fibre Channel Storage

- •iSCSI Storage

- •iSCSI Initiators

- •Setting Up Independent Hardware iSCSI Adapters

- •View Independent Hardware iSCSI Adapters

- •Change Name and IP Address for Independent Hardware Initiators

- •Setting Up and Configuring Software iSCSI Adapter

- •Enable the Software iSCSI Adapter

- •Setting Up and Configuring Dependent Hardware iSCSI Adapters

- •Dependent Hardware iSCSI Considerations

- •View Dependent Hardware iSCSI Adapters

- •Determine Association Between Dependent Hardware iSCSI and Physical Network Adapters

- •Bind iSCSI Ports to iSCSI Adapters

- •Configuring Discovery Addresses for iSCSI Initiators

- •Set Up Dynamic Discovery

- •Set Up Static Discovery

- •Configuring CHAP Parameters for iSCSI Adapters

- •Choosing CHAP Authentication Method

- •Set Up CHAP Credentials for an iSCSI Initiator

- •Set Up CHAP Credentials for a Target

- •Disable CHAP

- •Configuring Additional Parameters for iSCSI

- •Configure Advanced Parameters for iSCSI

- •Datastore Refresh and Storage Rescan Operations

- •Perform Storage Rescan

- •Create VMFS Datastores

- •Network Attached Storage

- •NFS Datastores as Repositories for Commonly Used Files

- •Create an NFS-Based Datastore

- •Creating a Diagnostic Partition

- •Create a Diagnostic Partition

- •Managing Storage

- •Managing Datastores

- •Rename Datastores

- •Group Datastores

- •Delete Datastores

- •Unmount Datastores

- •Changing VMFS Datastore Properties

- •Increase VMFS Datastores

- •Upgrade Datastores

- •Managing Duplicate VMFS Datastores

- •Mounting VMFS Datastores with Existing Signatures

- •Mount a VMFS Datastore with an Existing Signature

- •Resignaturing VMFS Copies

- •Resignature a VMFS Datastore Copy

- •Using Multipathing with ESX

- •Managing Multiple Paths

- •VMware Multipathing Module

- •VMware SATPs

- •VMware PSPs

- •VMware NMP Flow of I/O

- •Multipathing with Local Storage and Fibre Channel SANs

- •Multipathing with iSCSI SAN

- •Path Scanning and Claiming

- •Viewing the Paths Information

- •View Datastore Paths

- •View Storage Device Paths

- •Setting a Path Selection Policy

- •Change the Path Selection Policy

- •Disable Paths

- •Storage Hardware Acceleration

- •Hardware Acceleration Requirements and Benefits

- •Hardware Acceleration Support Status

- •Turn off Hardware Acceleration

- •Thin Provisioning

- •About Virtual Disk Formats

- •Create Thin Provisioned Virtual Disks

- •View Virtual Machine Storage Resources

- •Determine the Disk Format of a Virtual Machine

- •Convert a Virtual Disk from Thin to Thick

- •Handling Datastore Over-Subscription

- •Turn off vCenter Server Storage Filters

- •vCenter Server Storage Filtering

- •Raw Device Mapping

- •About Raw Device Mapping

- •Benefits of Raw Device Mapping

- •Limitations of Raw Device Mapping

- •Raw Device Mapping Characteristics

- •RDM Virtual and Physical Compatibility Modes

- •Dynamic Name Resolution

- •Raw Device Mapping with Virtual Machine Clusters

- •Comparing Available SCSI Device Access Modes

- •Managing Mapped LUNs

- •Create Virtual Machines with RDMs

- •Manage Paths for a Mapped Raw LUN

- •Security

- •Security for ESX Systems

- •ESX Architecture and Security Features

- •Security and the Virtualization Layer

- •Security and Virtual Machines

- •Security and the Virtual Networking Layer

- •Creating a Network DMZ on a Single ESX Host

- •Creating Multiple Networks Within a Single ESX Host

- •Security and the Service Console

- •Security Resources and Information

- •Securing an ESX Configuration

- •Securing the Network with Firewalls

- •Firewalls for Configurations with vCenter Server

- •Firewalls for Configurations Without vCenter Server

- •Connecting to vCenter Server Through a Firewall

- •Connecting to the Virtual Machine Console Through a Firewall

- •Connecting ESX Hosts Through Firewalls

- •Configuring Firewall Ports for Supported Services and Management Agents

- •Allow Access to ESX for a Service or Management Agent

- •Automating Service Behavior Based on Firewall Settings

- •Configure How Service Startup Relates to Firewall Configuration

- •TCP and UDP Ports for Management Access

- •Securing Virtual Machines with VLANs

- •Security Considerations for VLANs

- •VLANs as Part of a Broader Security Implementation

- •Properly Configure VLANs

- •Create Separate Communications Between Management Tools and the Service Console

- •Virtual Switch Protection and VLANs

- •Securing Virtual Switch Ports

- •MAC Address Changes

- •Forged Transmissions

- •Promiscuous Mode Operation

- •Internet Protocol Security

- •Add a Security Association

- •Remove a Security Association

- •List Available Security Associations

- •Create a Security Policy

- •Remove a Security Policy

- •List Available Security Policies

- •Securing iSCSI Storage

- •Securing iSCSI Devices Through Authentication

- •Enabling Challenge Handshake Authentication Protocol (CHAP) for iSCSI SANs

- •Disabling iSCSI SAN Authentication

- •Protecting an iSCSI SAN

- •Authentication and User Management

- •Securing ESX Through Authentication and Permissions

- •About Users, Groups, Permissions, and Roles

- •Understanding Users

- •Understanding Groups

- •Understanding Password Requirements

- •Understanding Permissions

- •Assigning root User Permissions

- •Understanding vpxuser Permissions

- •Understanding Roles

- •Assigning the No Access Role

- •Assigning the Read Only Role

- •Assigning the Administrator Role

- •Working with Users and Groups on ESX Hosts

- •View, Sort, and Export a List of Users and Groups

- •Add a User to the Users Table

- •Modify the Settings for a User

- •Remove a User or Group

- •Add a Group to the Groups Table

- •Add or Remove Users from a Group

- •Configure a Host to Use a Directory Service

- •Add a Host to a Directory Service Domain

- •Use Host Profiles to Apply Permissions to Hosts

- •Encryption and Security Certificates for ESX

- •Enable Certificate Checking and Verify Host Thumbprints

- •Generate New Certificates for the ESX Host

- •Replace a Default Certificate with a CA-Signed Certificate

- •Configure SSL Timeouts

- •Modifying ESX Web Proxy Settings

- •Configure the Web Proxy to Search for Certificates in Nondefault Locations

- •Change Security Settings for a Web Proxy Service

- •Service Console Security

- •General Security Recommendations

- •Log In to the Service Console

- •Service Console Firewall Configuration

- •Determine the Service Console Firewall Security Level

- •Set the Service Console Firewall Security Level

- •Open a Port in the Service Console Firewall

- •Close a Port in the Service Console Firewall

- •Troubleshooting When Firewalls are Overwritten

- •Password Restrictions

- •Password Aging

- •Change Default Password Aging Restrictions for a Host

- •Change Default Password Aging Restrictions for Users

- •Password Strength and Complexity

- •Change Default Password Complexity for the pam_passwdqc.so Plug-In

- •Configure a Password Reuse Rule

- •Using the pam_cracklib.so Authentication Plug-In

- •Switch to the pam_cracklib.so Plug-In

- •Cipher Strength

- •setuid and setgid Flags

- •Disable Optional Applications

- •Default setuid Applications

- •Default setgid Applications

- •SSH Security

- •Change the Default SSH Configuration

- •Security Patches and Security Vulnerability Scanning Software

- •Security Best Practices and Scenarios

- •Security Approaches for Common ESX Deployments

- •Single-Customer Deployment

- •Multiple-Customer Restricted Deployment

- •Multiple-Customer Open Deployment

- •Virtual Machine Recommendations

- •Installing Antivirus Software

- •Limiting Exposure of Sensitive Data Copied to the Clipboard

- •Enable Copy and Paste Operations Between the Guest Operating System and Remote Console

- •Removing Unnecessary Hardware Devices

- •Prevent a Virtual Machine User or Process from Disconnecting Devices

- •Limiting Guest Operating System Writes to Host Memory

- •Modify Guest Operating System Variable Memory Limit

- •Prevent the Guest Operating System Processes from Sending Configuration Messages to the Host

- •Configuring Logging Levels for the Guest Operating System

- •Limit Log File Numbers and Sizes

- •Disable Logging for the Guest Operating System

- •Host Profiles

- •Managing Host Profiles

- •Host Profiles Usage Model

- •Access Host Profiles View

- •Creating a Host Profile

- •Create a Host Profile from Host Profiles View

- •Create a Host Profile from Host

- •Export a Host Profile

- •Import a Host Profile

- •Edit a Host Profile

- •Edit a Policy

- •Enable Compliance Check

- •Manage Profiles

- •Attaching Entities

- •Attach Entities from the Host Profiles View

- •Attach Entities from the Host

- •Applying Profiles

- •Apply a Profile from the Host Profiles View

- •Apply a Profile from the Host

- •Change Reference Host

- •Manage Profiles from a Cluster

- •Updating Profiles From the Reference Host

- •Checking Compliance

- •Check Compliance from the Host Profiles View

- •Check Compliance from Host

- •Check Cluster Compliance

- •Appendixes

- •ESX Technical Support Commands

- •Linux Commands Used with ESX

- •Using vmkfstools

- •vmkfstools Command Syntax

- •vmkfstools Options

- •-v Suboption

- •File System Options

- •Creating a VMFS File System

- •Example for Creating a VMFS File System

- •Extending an Existing VMFS-3 Volume

- •Example for Extending a VMFS-3 Volume

- •Listing Attributes of a VMFS Volume

- •Upgrading a VMFS-2 to VMFS-3

- •Virtual Disk Options

- •Supported Disk Formats

- •Creating a Virtual Disk

- •Example for Creating a Virtual Disk

- •Initializing a Virtual Disk

- •Inflating a Thin Virtual Disk

- •Removing Zeroed Blocks

- •Converting a Zeroedthick Virtual Disk to an Eagerzeroedthick Disk

- •Deleting a Virtual Disk

- •Renaming a Virtual Disk

- •Cloning a Virtual or Raw Disk

- •Example for Cloning a Virtual Disk

- •Migrate VMware Workstation and VMware GSX Server Virtual Machines

- •Extending a Virtual Disk

- •Migrating a VMFS-2 Virtual Disk to VMFS-3

- •Creating a Virtual Compatibility Mode Raw Device Mapping

- •Example for Creating a Virtual Compatibility Mode RDM

- •Creating a Physical Compatibility Mode Raw Device Mapping

- •Listing Attributes of an RDM

- •Displaying Virtual Disk Geometry

- •Checking and Repairing Virtual Disks

- •Managing SCSI Reservations of LUNs

- •Index

Chapter 9 Managing Storage

- Performs array-specific actions necessary for storage failover. For example, for active-passive devices, it can activate passive paths.

VMware PSPs

Path Selection Plug-Ins (PSPs) run with the VMware NMP and are responsible for choosing a physical path for I/O requests.

The VMware NMP assigns a default PSP for each logical device based on the SATP associated with the physical paths for that device. You can override the default PSP.
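The following is a minimal sketch of how a default PSP and an administrator override interact. It is not VMware code. The array-type keys are hypothetical stand-ins for the SATP that claims a device, and the defaults follow what this section states for the Most Recently Used and Fixed PSPs (MRU for active-passive arrays, Fixed for active-active arrays).

```python
from typing import Optional

# Hypothetical stand-in for the SATP association: the real NMP derives the
# default from the SATP that claims the device's paths, not from a string.
DEFAULT_PSP = {
    "active-active": "VMW_PSP_FIXED",
    "active-passive": "VMW_PSP_MRU",
}


def assign_psp(array_type: str, override: Optional[str] = None) -> str:
    """Return the PSP for a device: the override if one is set, else the default."""
    if override is not None:
        return override
    return DEFAULT_PSP[array_type]


print(assign_psp("active-passive"))               # VMW_PSP_MRU
print(assign_psp("active-active", "VMW_PSP_RR"))  # VMW_PSP_RR
```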

By default, the VMware NMP supports the following PSPs:

- Most Recently Used (VMW_PSP_MRU): Selects the path the ESX host used most recently to access the given device. If this path becomes unavailable, the host switches to an alternative path and continues to use the new path while it is available. MRU is the default path policy for active-passive arrays.
- Fixed (VMW_PSP_FIXED): Uses the designated preferred path, if it has been configured. Otherwise, it uses the first working path discovered at system boot time. If the host cannot use the preferred path, it selects a random alternative available path. The host reverts to the preferred path as soon as that path becomes available. Fixed is the default path policy for active-active arrays.

  CAUTION If used with active-passive arrays, the Fixed path policy might cause path thrashing.

- VMW_PSP_FIXED_AP: Extends the Fixed functionality to active-passive and ALUA mode arrays.
- Round Robin (VMW_PSP_RR): Uses a path selection algorithm that rotates through all available active paths, enabling load balancing across the paths.
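To make the behavioral differences between the MRU, Fixed, and Round Robin policies concrete, here is a toy model in Python. It is a sketch under stated assumptions, not the NMP implementation: the path labels ("A", "B", "C") are hypothetical, and a set of dead paths stands in for the real path state that the host tracks.

```python
import random
from typing import List, Optional, Set


def first_alive(paths: List[str], dead: Set[str]) -> str:
    """Return the first path not marked dead, in discovery order."""
    for path in paths:
        if path not in dead:
            return path
    raise RuntimeError("no path to the device is available")


class MostRecentlyUsed:
    """Stay on the current path; after a failure, move to another path and stay there."""

    def __init__(self, paths: List[str]):
        self.paths = paths
        self.current = paths[0]

    def select(self, dead: Set[str]) -> str:
        if self.current in dead:
            self.current = first_alive(self.paths, dead)
        return self.current  # no fail-back when the old path recovers


class Fixed:
    """Use the preferred path (or the first path discovered) whenever it is alive."""

    def __init__(self, paths: List[str], preferred: Optional[str] = None):
        self.paths = paths
        self.preferred = preferred if preferred is not None else paths[0]

    def select(self, dead: Set[str]) -> str:
        if self.preferred not in dead:
            return self.preferred  # reverts as soon as the preferred path is back
        alternatives = [p for p in self.paths if p not in dead]
        if not alternatives:
            raise RuntimeError("no path to the device is available")
        return random.choice(alternatives)  # random alternative, as described above


class RoundRobin:
    """Rotate through every live path so that successive I/Os are spread across them."""

    def __init__(self, paths: List[str]):
        self.paths = paths
        self._next = 0

    def select(self, dead: Set[str]) -> str:
        for _ in range(len(self.paths)):
            path = self.paths[self._next % len(self.paths)]
            self._next += 1
            if path not in dead:
                return path
        raise RuntimeError("no path to the device is available")


mru = MostRecentlyUsed(["A", "B"])
print(mru.select(set()), mru.select({"A"}), mru.select(set()))  # A B B
```

The MRU example shows the key contrast with Fixed: once MRU has moved to path B, it stays there after A recovers, whereas Fixed returns to its preferred path.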

VMware NMP Flow of I/O

When a virtual machine issues an I/O request to a storage device managed by the NMP, the following process takes place.

1 The NMP calls the PSP assigned to this storage device.

2 The PSP selects an appropriate physical path on which to issue the I/O.

3 The NMP issues the I/O request on the path selected by the PSP.

4 If the I/O operation is successful, the NMP reports its completion.

5 If the I/O operation reports an error, the NMP calls the appropriate SATP.

6 The SATP interprets the I/O command errors and, when appropriate, activates the inactive paths.

7 The PSP is called to select a new path on which to issue the I/O.
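The seven steps above can be sketched as a retry loop. This is a simplified illustration, not VMware code: the path names, the "active"/"standby"/"dead" states, the `send` callback, and the retry limit are all hypothetical, and the toy SATP simply marks the failed path dead and activates any standby paths, as an active-passive array might require.

```python
from typing import Callable, Dict


def psp_select(state: Dict[str, str]) -> str:
    """Toy PSP: choose the first path whose state is 'active'."""
    for path, status in state.items():
        if status == "active":
            return path
    raise RuntimeError("no active path")


def satp_on_error(failed: str, state: Dict[str, str]) -> None:
    """Toy SATP: mark the failed path dead and activate any standby (passive) paths."""
    state[failed] = "dead"
    for path, status in state.items():
        if status == "standby":
            state[path] = "active"


def nmp_issue_io(state: Dict[str, str], send: Callable[[str], bool], max_attempts: int = 3) -> str:
    """Return the path on which the I/O finally succeeded."""
    for _ in range(max_attempts):
        path = psp_select(state)       # steps 1-2 (and step 7 on a retry)
        if send(path):                 # step 3: issue the I/O on the selected path
            return path                # step 4: report completion
        satp_on_error(path, state)     # steps 5-6: SATP handles the error
    raise IOError("I/O failed on every attempt")


state = {"A": "active", "B": "standby"}
print(nmp_issue_io(state, send=lambda path: path != "A"))  # B
print(state)  # {'A': 'dead', 'B': 'active'}
```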

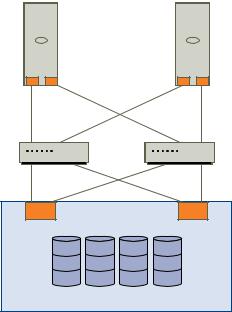

Multipathing with Local Storage and Fibre Channel SANs

In a simple multipathing local storage topology, you can use one ESX host, which has two HBAs. The ESX host connects to a dual-port local storage system through two cables. This configuration ensures fault tolerance if one of the connection elements between the ESX host and the local storage system fails.

To support path switching with FC SAN, the ESX host typically has two or more HBAs available from which the storage array can be reached using one or more switches. Alternatively, the setup can include one HBA and two storage processors so that the HBA can use a different path to reach the disk array.

VMware, Inc. |


ESX Configuration Guide

In Figure 9-2, multiple paths connect each server with the storage device. For example, if HBA1 or the link between HBA1 and the switch fails, HBA2 takes over and provides the connection between the server and the switch. The process of one HBA taking over for another is called HBA failover.

Figure 9-2. Fibre Channel Multipathing

[Figure 9-2 elements: Host 1 and Host 2; HBA1, HBA2, HBA3, HBA4; two switches; SP1 and SP2; storage array]

Similarly, if SP1 or the link between SP1 and the switch breaks, SP2 takes over and provides the connection between the switch and the storage device. This process is called SP failover. ESX supports HBA and SP failover with its multipathing capability.
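As a rough illustration of HBA and SP failover, the sketch below enumerates the paths from one host to the array and shows which survive a component failure. It assumes, for simplicity, that every HBA can reach every SP through the switches (real zoning may restrict this) and models a failed link as a failed HBA or SP. The component names come from Figure 9-2; the functions are hypothetical.

```python
from itertools import product
from typing import List, Set, Tuple

Path = Tuple[str, str]  # (HBA, storage processor)


def enumerate_paths(hbas: List[str], sps: List[str]) -> List[Path]:
    """All HBA-to-SP combinations, assuming full fabric connectivity."""
    return list(product(hbas, sps))


def surviving(paths: List[Path], failed: Set[str]) -> List[Path]:
    """Paths that avoid every failed HBA or SP."""
    return [(hba, sp) for hba, sp in paths if hba not in failed and sp not in failed]


paths = enumerate_paths(["HBA1", "HBA2"], ["SP1", "SP2"])
print(surviving(paths, {"HBA1"}))  # HBA failover: only HBA2 paths remain
print(surviving(paths, {"SP1"}))   # SP failover: only SP2 paths remain
```

As long as at least one path survives, the multipathing software can keep the device reachable.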

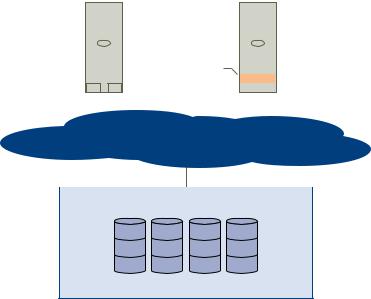

Multipathing with iSCSI SAN

With iSCSI storage, you can take advantage of the multipathing support built into the IP network, which allows the network to perform routing. Through dynamic discovery, iSCSI initiators obtain a list of target addresses that the initiators can use as multiple paths to iSCSI LUNs for failover purposes.

In addition, ESX supports host-based multipathing for all types of iSCSI initiators.

Figure 9-3 shows multipathing setups possible with different types of iSCSI initiators.


Figure 9-3. Host-Based Multipathing

[Figure 9-3 elements: host 1 with hardware iSCSI adapters HBA1 and HBA2; host 2 with a software iSCSI adapter using NIC1 and NIC2; IP network; SP; iSCSI storage]

Multipathing with Hardware iSCSI

With hardware iSCSI, the host typically has two or more hardware iSCSI adapters available, from which the storage system can be reached using one or more switches. Alternatively, the setup might include one adapter and two storage processors so that the adapter can use a different path to reach the storage system.

In Figure 9-3, Host 1 has two hardware iSCSI adapters, HBA1 and HBA2, that provide two physical paths to the storage system. Multipathing plug-ins on your host, whether the VMkernel NMP or any third-party MPPs, have access to the paths by default and can monitor the health of each physical path. If, for example, HBA1 or the link between HBA1 and the network fails, the multipathing plug-ins can switch the path over to HBA2.

Multipathing with Software iSCSI

With software iSCSI, as shown on Host 2 of Figure 9-3, you can use multiple NICs that provide failover and load balancing capabilities for iSCSI connections between your host and storage systems.

For this setup, because multipathing plug-ins do not have direct access to physical NICs on your host, you must connect each physical NIC to a separate VMkernel port. You then associate all VMkernel ports with the software iSCSI initiator using a port binding technique. As a result, each VMkernel port connected to a separate NIC becomes a different path that the iSCSI storage stack and its storage-aware multipathing plug-ins can use.
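The port-binding rule described above (one physical NIC per VMkernel port, each binding becoming its own path) can be checked with a small sketch. The port and NIC names (`vmk1`, `vmnic1`, and so on) are illustrative, and the validation function is hypothetical; it is not an ESX API.

```python
from typing import Dict, List, Tuple


def bind_ports(vmk_to_nics: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Validate a VMkernel-port-to-NIC layout and return one (port, NIC) path per binding."""
    bindings: List[Tuple[str, str]] = []
    for vmk, nics in vmk_to_nics.items():
        if len(nics) != 1:
            raise ValueError(f"{vmk} must be connected to exactly one physical NIC")
        bindings.append((vmk, nics[0]))
    used = [nic for _, nic in bindings]
    if len(set(used)) != len(used):
        raise ValueError("each VMkernel port needs a separate physical NIC")
    return bindings


print(bind_ports({"vmk1": ["vmnic1"], "vmk2": ["vmnic2"]}))
# [('vmk1', 'vmnic1'), ('vmk2', 'vmnic2')]: two distinct paths for the iSCSI stack
```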

For information about how to configure multipathing for the software iSCSI, see “Networking Configuration for Software iSCSI and Dependent Hardware iSCSI,” on page 68.

Path Scanning and Claiming

When you start your ESX host or rescan your storage adapter, the host discovers all physical paths to storage devices available to the host. Based on a set of claim rules defined in the /etc/vmware/esx.conf file, the host determines which multipathing plug-in (MPP) should claim the paths to a particular device and become responsible for managing the multipathing support for the device.

By default, the host performs a periodic path evaluation every 5 minutes, causing any unclaimed paths to be claimed by the appropriate MPP.
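The claiming process can be pictured as an ordered rule match followed by periodic retries for paths that no rule claimed. This is a toy model, not the format of `/etc/vmware/esx.conf`. The rule numbers, the vendor-match predicates, and the `EXAMPLE_MPP` plug-in name are hypothetical, and evaluating rules from the lowest number upward is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

PathInfo = Dict[str, str]

PATH_EVALUATION_INTERVAL_SECONDS = 5 * 60  # default period stated above


@dataclass
class ClaimRule:
    rule_id: int                          # assumed: lower numbers are tried first
    matches: Callable[[PathInfo], bool]   # predicate on path attributes
    plugin: str                           # MPP that claims matching paths


def claim(path: PathInfo, rules: List[ClaimRule]) -> Optional[str]:
    """Return the plug-in of the first matching rule, or None if no rule matches."""
    for rule in sorted(rules, key=lambda r: r.rule_id):
        if rule.matches(path):
            return rule.plugin
    return None


def evaluate_unclaimed(unclaimed: List[PathInfo], rules: List[ClaimRule]) -> Tuple[Dict[str, str], List[PathInfo]]:
    """One periodic pass: claim what can be claimed and keep the rest for the next pass."""
    claimed: Dict[str, str] = {}
    remaining: List[PathInfo] = []
    for path in unclaimed:
        plugin = claim(path, rules)
        if plugin is None:
            remaining.append(path)
        else:
            claimed[path["name"]] = plugin
    return claimed, remaining


rules = [
    ClaimRule(65535, lambda p: True, "NMP"),  # hypothetical catch-all rule
    ClaimRule(200, lambda p: p["vendor"] == "ExampleVendor", "EXAMPLE_MPP"),
]
claimed, remaining = evaluate_unclaimed(
    [{"name": "path1", "vendor": "ExampleVendor"}, {"name": "path2", "vendor": "OtherVendor"}],
    rules,
)
print(claimed)  # {'path1': 'EXAMPLE_MPP', 'path2': 'NMP'}
```

A host would run a pass like `evaluate_unclaimed` every `PATH_EVALUATION_INTERVAL_SECONDS`, so a path that no rule matched earlier can be claimed once an appropriate MPP becomes available.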
