
Infotech

The Shamanistic Tradition

The start of the modern science that we call "Computer Science" can be traced back to a long-ago age when man still dwelled in caves or in the forest, and lived in groups for protection and survival from the harsher elements on the Earth. Many of these groups possessed some primitive form of animistic religion; they worshipped the sun, the moon, the trees, or sacred animals. Within the tribal group was one individual to whom fell the responsibility for the tribe's spiritual welfare. It was he or she who decided when to hold both the secret and public religious ceremonies, and interceded with the spirits on behalf of the tribe. In order to correctly hold the ceremonies to ensure good harvest in the fall and fertility in the spring, the shamans needed to be able to count the days or to track the seasons. From the shamanistic tradition, man developed the first primitive counting mechanisms -- counting notches on sticks or marks on walls.

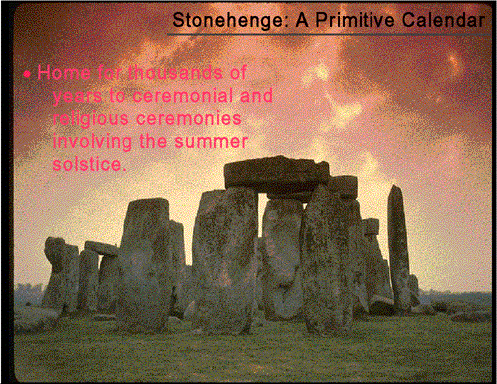

A Primitive Calendar

From the caves and the forests, man slowly evolved and built structures such as Stonehenge. Stonehenge, which lies 13km north of Salisbury, England, is believed to have been an ancient form of calendar designed to capture the light from the summer solstice in a specific fashion. The solstices have long been special days for various religious groups and cults. Archeologists and anthropologists today are not quite certain how the structure, believed to have been built about 2800 B.C., came to be erected, since the technology required to join together the giant stones and raise them upright seems to be beyond the technological level of the Britons at the time. It was long popularly believed that the enormous edifice of stone was erected by the Druids, although the structure predates them by many centuries. Regardless of the identity of the builders, it remains today a monument to man's intense desire to count and to track the occurrences of the physical world around him.

A Primitive Calculator

Meanwhile in Asia, the Chinese were becoming very involved in commerce with the Japanese, Indians, and Koreans. Businessmen needed a way to tally accounts and bills. Somehow, out of this need, the abacus was born. The abacus is the first true precursor to the adding machines and computers which would follow. It worked somewhat like this:

The value assigned to each pebble (or bead, shell, or stick) is determined not by its shape but by its position: one pebble on a particular line or one bead on a particular wire has the value of 1; two together have the value of 2. A pebble on the next line, however, might have the value of 10, and a pebble on the third line would have the value of 100. Therefore, three properly placed pebbles--two with values of 1 and one with the value of 10--could signify 12, and the addition of a fourth pebble with the value of 100 could signify 112, using a place-value notational system with multiples of 10.

Thus, the abacus works on the principle of place-value notation: the location of the bead determines its value. In this way, relatively few beads are required to depict large numbers. The beads are counted, or given numerical values, by shifting them in one direction. The values are erased (freeing the counters for reuse) by shifting the beads in the other direction. An abacus is really a memory aid for the user making mental calculations, as opposed to the true mechanical calculating machines which were still to come.
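The place-value rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `abacus_value` and the convention of listing the lines from the ones line upward are assumptions made for the example, not part of any historical description.

```python
def abacus_value(counters_per_line, base=10):
    """Return the number shown by an abacus.

    counters_per_line lists how many counters sit on each line,
    starting with the ones line, then the tens line, and so on
    (this ordering is an assumption of the sketch).
    """
    total = 0
    for position, count in enumerate(counters_per_line):
        # A counter's worth depends only on the line it sits on.
        total += count * base ** position
    return total


# Two counters worth 1 and one counter worth 10, as in the text.
print(abacus_value([2, 1]))     # prints 12
# Adding a fourth counter on the hundreds line.
print(abacus_value([2, 1, 1]))  # prints 112
```

Only four counters are needed to show 112, which is the economy that place-value notation buys.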

Forefathers of Computing

For over a thousand years after the Chinese invented the abacus, not much progress was made to automate counting and mathematics. The Greeks came up with numerous mathematical formulae and theorems, but all of the newly discovered math had to be worked out by hand. A mathematician was often a person who sat in the back room of an establishment with several others, all working on the same problem. The redundant personnel were there to ensure the correctness of the answer. It could take weeks or months of laborious work by hand to verify the correctness of a proposed theorem. Most of the tables of integrals, logarithms, and trigonometric values were worked out this way, their accuracy unchecked until machines could generate the tables in far less time and with more accuracy than a team of humans could ever hope to achieve.

The First Mechanical Calculator

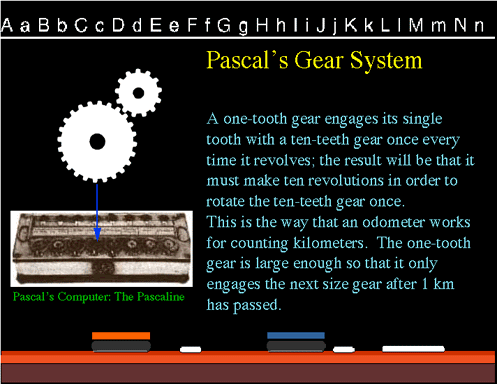

Blaise Pascal, noted mathematician, thinker, and scientist, built the first mechanical adding machine in 1642 based on a design described by Hero of Alexandria (2AD) to add up the distance a carriage travelled. The basic principle of his calculator is still used today in water meters and modern-day odometers. Instead of having a carriage wheel turn the gear, he made each ten-toothed wheel accessible to be turned directly by a person's hand (later inventors added keys and a crank), with the result that when the wheels were turned in the proper sequence, a series of numbers was entered and a cumulative sum was obtained. The gear train supplied a mechanical answer equal to the answer that is obtained by using arithmetic.
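The odometer principle behind such a gear train can be sketched as follows. This is a simplified model, assuming six ten-toothed wheels stored with the ones wheel first; the function names are invented for the illustration, and the real Pascaline's carry mechanism was considerably more intricate.

```python
def turn_wheel(wheels, index, steps):
    """Advance one wheel by `steps` teeth and carry into the wheels above it."""
    wheels = list(wheels)  # work on a copy; the ones wheel comes first
    carry = steps
    while carry and index < len(wheels):
        total = wheels[index] + carry
        wheels[index] = total % 10  # a wheel shows only the digits 0-9
        carry = total // 10         # a full turn nudges the next wheel
        index += 1
    return wheels  # a carry past the last wheel is lost, as on an odometer


def add_number(wheels, number):
    """Enter a number by turning each wheel by the matching digit."""
    for index, digit in enumerate(reversed(str(number))):
        wheels = turn_wheel(wheels, index, int(digit))
    return wheels


def read(wheels):
    """Read the displayed number, most significant wheel first."""
    return int("".join(str(d) for d in reversed(wheels)))


wheels = [0] * 6
wheels = add_number(wheels, 758)
wheels = add_number(wheels, 367)
print(read(wheels))  # prints 1125
```

Each number is entered digit by digit, and the running sum simply accumulates on the wheels.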

This first mechanical calculator, called the Pascaline, had several disadvantages. Although it did offer a substantial improvement over manual calculations, only Pascal himself could repair the device and it cost more than the people it replaced! In addition, the first signs of technophobia emerged with mathematicians fearing the loss of their jobs due to progress.

The Difference Engine


While Thomas de Colmar was developing the first successful commercial calculator, Charles Babbage realized as early as 1812 that many long computations consisted of operations that were regularly repeated. He theorized that it must be possible to design a calculating machine which could do these operations automatically. He produced a prototype of this "difference engine" by 1822 and with the help of the British government started work on the full machine in 1823. It was intended to be steam-powered; fully automatic, even to the printing of the resulting tables; and commanded by a fixed instruction program.

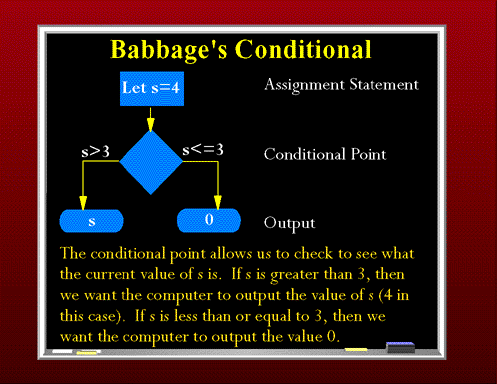

The Conditional

In 1833, Babbage ceased working on the difference engine because he had a better idea. His new idea was to build an "analytical engine." The analytical engine was a real parallel decimal computer which would operate on words of 50 decimal digits and was able to store 1000 such numbers. The machine would include a number of built-in operations such as conditional control, which allowed the instructions for the machine to be executed in a specific order rather than in numerical order. The instructions for the machine were to be stored on punched cards, similar to those used on a Jacquard loom.

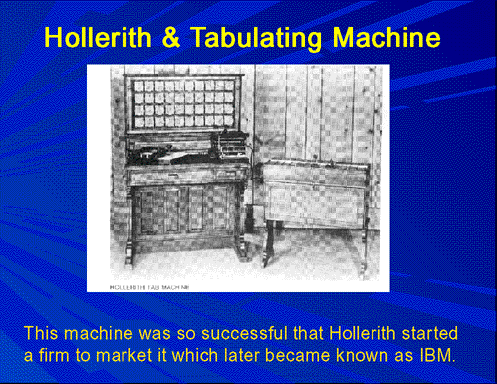

Herman Hollerith

A step toward automated computation was the introduction of punched cards, which were first successfully used in connection with computing in 1890 by Herman Hollerith working for the U.S. Census Bureau. He developed a device which could automatically read census information which had been punched onto cards. Surprisingly, he did not get the idea from the work of Babbage, but rather from watching a train conductor punch tickets. As a result of his invention, reading errors were greatly reduced, work flow was increased, and, more importantly, stacks of punched cards could be used as an accessible memory store of almost unlimited capacity; furthermore, different problems could be stored on different batches of cards and worked on as needed. Hollerith's tabulator became so successful that he started his own firm to market the device; this company eventually became International Business Machines (IBM).

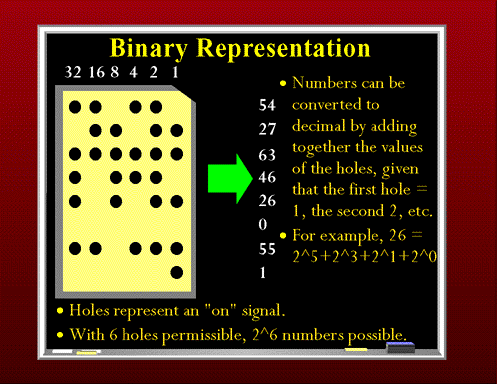

Binary Representation

Hollerith's machine, though, had limitations. It was strictly limited to tabulation. The punched cards could not be used to direct more complex computations. In 1941, Konrad Zuse(*), a German who had developed a number of calculating machines, released the first programmable computer designed to solve complex engineering equations. The machine, called the Z3, was controlled by perforated strips of discarded movie film. As well as being controllable by these celluloid strips, it was also the first machine to work on the binary system, as opposed to the more familiar decimal system.

The binary system is composed of 0s and 1s. A punch card with its two states--a hole or no hole--was admirably suited to representing things in binary. If a hole was read by the card reader, it was considered to be a 1. If no hole was present in a column, a zero was appended to the current number. A bit is simply a single binary digit--a 0 or a 1. The total number of possible numbers can be calculated by raising 2 to the power of the number of bits in the binary number (2^n for n bits). Thus, if you had a binary number of 6 bits, 2^6 = 64 different numbers could be generated.
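As a small illustration of the reading scheme and the counting rule, here is a sketch in Python. The names `card_to_number` and `distinct_values`, and the choice of `True` for a hole, are assumptions of the example and not a description of any real card format.

```python
def card_to_number(columns):
    """Build a number from card columns read left to right.

    Each column is True for a hole (a 1) or False for no hole (a 0).
    """
    value = 0
    for hole in columns:
        # Appending a bit doubles the number so far and adds the new bit.
        value = value * 2 + (1 if hole else 0)
    return value


def distinct_values(bits):
    """How many different numbers fit in the given number of bits."""
    return 2 ** bits


print(card_to_number([True, False, True]))  # binary 101, prints 5
print(distinct_values(6))                   # prints 64
```

Six bits give the 64 values from 0 (no holes at all) to 63 (a hole in every column).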

Binary representation was going to prove important in the future design of computers, which took advantage of a multitude of two-state devices such as card readers, electric circuits which could be on or off, and vacuum tubes.

* Zuse died in December of 1995.

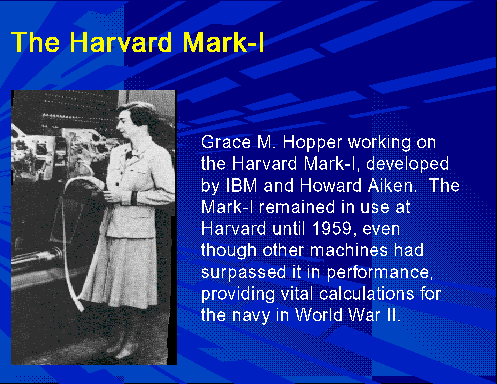

Harvard Mark I

By the late 1930s punched-card machine techniques had become so well established and reliable that Howard Aiken, in collaboration with engineers at IBM, undertook construction of a large automatic digital computer based on standard IBM electromechanical parts. Aiken's machine, called the Harvard Mark I, handled 23-decimal-place numbers (words) and could perform all four arithmetic operations; moreover, it had special built-in programs, or subroutines, to handle logarithms and trigonometric functions. The Mark I was originally controlled from pre-punched paper tape without provision for reversal, so that automatic "transfer of control" instructions could not be programmed. Output was by card punch and electric typewriter. Although the Mark I used IBM rotating counter wheels as key components in addition to electromagnetic relays, the machine was classified as a relay computer. It was slow, requiring 3 to 5 seconds for a multiplication, but it was fully automatic and could complete long computations without human intervention. The Harvard Mark I was the first of a series of computers designed and built under Aiken's direction.

Alan Turing

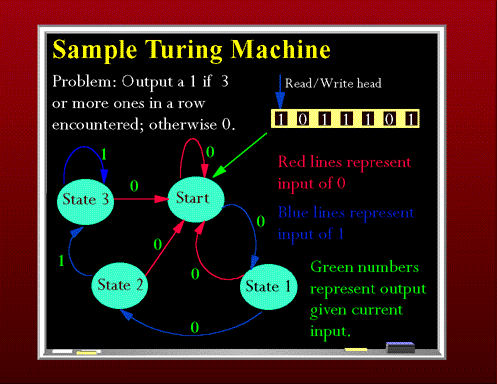

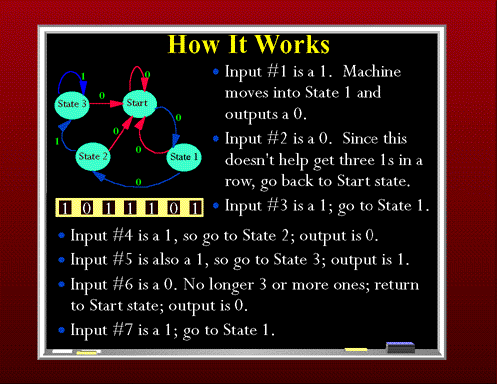

Meanwhile, over in Great Britain, the British mathematician Alan Turing wrote a paper in 1936 entitled On Computable Numbers in which he described a hypothetical device, a Turing machine, that presaged programmable computers. The Turing machine was designed to perform logical operations and could read, write, or erase symbols written on squares of an infinite paper tape. This kind of machine came to be known as a finite state machine because at each step in a computation, the machine's next action was matched against a finite instruction list of possible states.

The Turing machine, pictured here above the paper tape, reads in the symbols from the tape one at a time. What we would like the machine to do is to give us an output of 1 anytime it has read at least 3 ones in a row off of the tape. When there are not at least three ones, then it should output a 0. The reading and outputting can go on infinitely. The diagram with the labelled states is known as a state diagram and provides a visual path of the possible states that the machine can enter, dependent upon the input. The red arrowed lines indicate an input of 0 from the tape to the machine. The blue arrowed lines indicate an input of 1. Output from the machine is labelled in neon green.

The Turing Machine


The machine starts off in the Start state. The first input is a 1 so we can follow the blue line to State 1; output is going to be 0 because three or more ones have not yet been read in. The next input is a 0, which leads the machine back to the starting state by following the red line. The read/write head on the Turing machine advances to the next input, which is a 1. Again, this returns the machine to State 1 and the read/write head advances to the next symbol on the tape. This is also a 1, leading to State 2. The machine is still outputting 0s since it has not yet encountered three 1s in a row. The next input is also a 1 and following the blue line leads to State 3, and the machine now outputs a 1 as it has read in at least 3 ones. From this point, as long as the machine keeps reading in 1s, it will stay in State 3 and continue to output 1s. If any 0s are encountered, the machine will return to the Start state and start counting 1s all over.
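The walk through the state diagram can be reproduced with a short table-driven sketch in Python. The state names follow the text; the dictionary layout and the function name `run_machine` are assumptions made for this illustration.

```python
START, S1, S2, S3 = "Start", "State 1", "State 2", "State 3"

# (current state, symbol read) -> next state
TRANSITIONS = {
    (START, 1): S1, (S1, 1): S2, (S2, 1): S3, (S3, 1): S3,  # blue lines
    (START, 0): START, (S1, 0): START,                      # red lines
    (S2, 0): START, (S3, 0): START,
}


def run_machine(tape):
    """Return one output per symbol: 1 once three 1s in a row have been read."""
    state = START
    outputs = []
    for symbol in tape:
        state = TRANSITIONS[(state, symbol)]
        outputs.append(1 if state == S3 else 0)
    return outputs


# The tape from the walkthrough above: 1, 0, 1, 1, 1
print(run_machine([1, 0, 1, 1, 1]))  # prints [0, 0, 0, 0, 1]
```

Because every decision is a lookup in a fixed table, the machine needs no memory beyond the name of its current state.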

Turing's purpose was not to invent a computer, but rather to describe problems which are logically possible to solve. His hypothetical machine, however, foreshadowed certain characteristics of the modern computers that would follow. For example, the endless tape could be seen as a form of general-purpose internal memory for the machine in that the machine was able to read, write, and erase it--just like modern-day RAM.

ENIAC

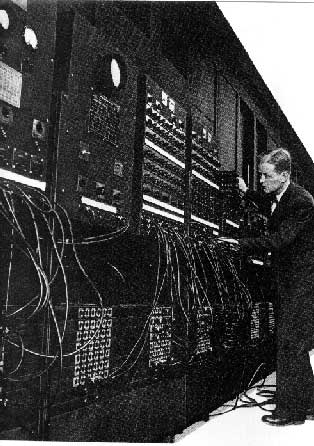

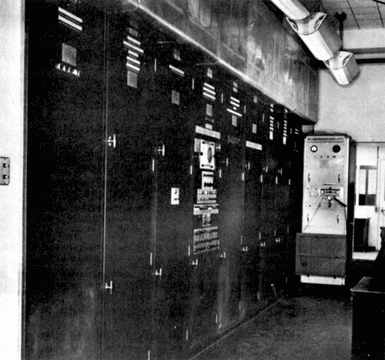

Back in America, with the success of Aiken's Harvard Mark-I as the first major American development in the computing race, work was proceeding on the next great breakthrough by the Americans. Their second contribution was the development of the giant ENIAC machine by John W. Mauchly and J. Presper Eckert at the University of Pennsylvania. ENIAC (Electronic Numerical Integrator and Computer) used a word of 10 decimal digits instead of binary ones like previous automated calculators/computers. ENIAC was also the first machine to use more than 2,000 vacuum tubes; it used nearly 18,000. Housing all those vacuum tubes and the machinery required to keep them cool took up over 167 square meters (1800 square feet) of floor space. It had punched-card input and output and arithmetically had 1 multiplier, 1 divider-square rooter, and 20 adders employing decimal "ring counters," which served as adders and also as quick-access (0.0002 seconds) read-write register storage.

The executable instructions composing a program were embodied in the separate units of ENIAC, which were plugged together to form a route through the machine for the flow of computations. These connections had to be redone for each different problem, together with presetting function tables and switches. This "wire-your-own" instruction technique was inconvenient, and only with some license could ENIAC be considered programmable; it was, however, efficient in handling the particular programs for which it had been designed. ENIAC is generally acknowledged to be the first successful high-speed electronic digital computer (EDC) and was productively used from 1946 to 1955. A controversy developed in 1971, however, over the patentability of ENIAC's basic digital concepts, the claim being made that another U.S. physicist, John V. Atanasoff, had already used the same ideas in a simpler vacuum-tube device he built in the 1930s while at Iowa State College. In 1973, the court found in favor of the company using Atanasoff's claim, and Atanasoff received the acclaim he rightly deserved.

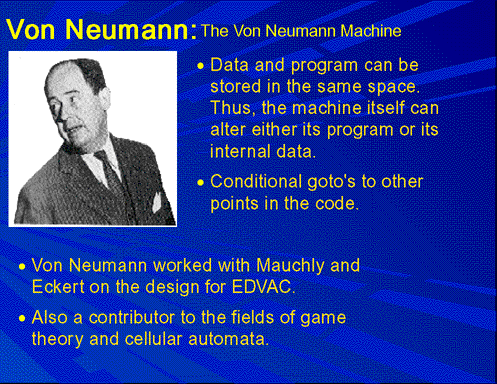

John von Neumann

In 1945, mathematician John von Neumann undertook a study of computation that demonstrated that a computer could have a simple, fixed structure, yet be able to execute any kind of computation given properly programmed control without the need for hardware modification. Von Neumann contributed a new understanding of how practical fast computers should be organized and built; these ideas, often referred to as the stored-program technique, became fundamental for future generations of high-speed digital computers and were universally adopted. The advance had two parts. The first was the provision of a special type of machine instruction called conditional control transfer, which permitted the program sequence to be interrupted and reinitiated at any point, similar to the system suggested by Babbage for his analytical engine. The second was the storing of all instruction programs together with data in the same memory unit, so that, when desired, instructions could be arithmetically modified in the same way as data. Thus, data was the same as program.
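To make the stored-program idea concrete, here is a toy machine sketched in Python. Everything about it is hypothetical: the four-instruction set, the accumulator design, and the memory layout are invented for this illustration and do not describe EDVAC or any real computer. What it does show is instructions and data sharing one memory, and a conditional control transfer forming a loop.

```python
def run(memory):
    """Run the toy machine until HALT and return its memory."""
    acc = 0  # accumulator
    pc = 0   # program counter: address of the next instruction
    while True:
        op, arg = memory[pc]
        pc += 1
        if op == "LOAD":
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "JUMP_IF_NONZERO":  # conditional control transfer
            if acc != 0:
                pc = arg
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown instruction {op!r}")


def make_program(n):
    """Memory image that sums n + (n-1) + ... + 1 into cell 9."""
    if n < 1:
        raise ValueError("n must be at least 1")
    COUNTER, TOTAL, MINUS_ONE = 8, 9, 10
    return [
        ("LOAD", TOTAL),         # 0: total = total + counter
        ("ADD", COUNTER),        # 1
        ("STORE", TOTAL),        # 2
        ("LOAD", COUNTER),       # 3: counter = counter - 1
        ("ADD", MINUS_ONE),      # 4
        ("STORE", COUNTER),      # 5
        ("JUMP_IF_NONZERO", 0),  # 6: repeat while counter is not zero
        ("HALT", 0),             # 7
        n,                       # 8: counter (data)
        0,                       # 9: total (data)
        -1,                      # 10: the constant -1 (data)
    ]


print(run(make_program(3))[9])  # 3 + 2 + 1, prints 6
```

Changing the problem means loading a different list into memory; the `run` function, standing in for the fixed hardware, never changes.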

As a result of these techniques and several others, computing and programming became faster, more flexible, and more efficient, with the instructions in subroutines performing far more computational work. Frequently used subroutines did not have to be reprogrammed for each new problem but could be kept intact in "libraries" and read into memory when needed. Thus, much of a given program could be assembled from the subroutine library. The all-purpose computer memory became the assembly place in which parts of a long computation were stored, worked on piecewise, and assembled to form the final results. The computer control served as an errand runner for the overall process. As soon as the advantages of these techniques became clear, the techniques became standard practice. The first generation of modern programmed electronic computers to take advantage of these improvements appeared in 1947.

This group included computers using random access memory (RAM), which is a memory designed to give almost constant-time access to any particular piece of information. These machines had punched-card or punched-tape input and output devices and RAMs of 1,000 words. Physically, they were much more compact than ENIAC: some were about the size of a grand piano and required 2,500 small electron tubes, far fewer than required by the earlier machines. The first-generation stored-program computers required considerable maintenance, attained perhaps 70% to 80% reliable operation, and were used for 8 to 12 years. Typically, they were programmed directly in machine language, although by the mid-1950s progress had been made in several aspects of advanced programming. This group of machines included EDVAC and UNIVAC, the first commercially available computers.

EDVAC

Image taken originally from The Image Server (http://web.soi.city.ac.uk/archive/image/lists/computers.html)

EDVAC (Electronic Discrete Variable Automatic Computer) was to be a vast improvement upon ENIAC. Mauchly and Eckert started working on it two years before ENIAC even went into operation. Their idea was to have the program for the computer stored inside the computer. This would be possible because EDVAC was going to have more internal memory than any other computing device to date. Memory was to be provided through the use of mercury delay lines. The idea was that, given a tube of mercury, an electronic pulse could be bounced back and forth to be retrieved at will--another two-state device for storing 0s and 1s. This on/off switchability for the memory was required because EDVAC was to use binary rather than decimal numbers, thus simplifying the construction of the arithmetic units.

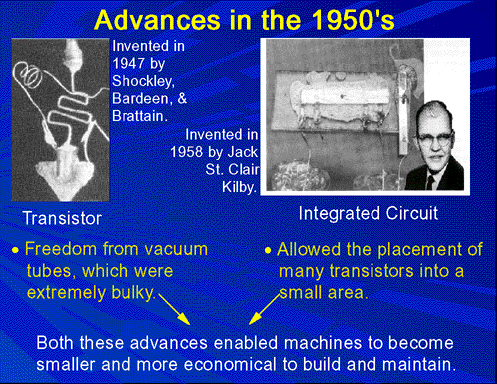

Technology Advances


In the late 1940s and the 1950s, two devices were invented which would improve the computer field and set off the computer revolution. The first of these two devices was the transistor. Invented in 1947 by William Shockley, John Bardeen, and Walter Brattain of Bell Labs, the transistor was fated to end the days of vacuum tubes in computers, radios, and other electronics.

The vacuum tube, used up to this time in almost all the computers and calculating machines, had been invented by American physicist Lee De Forest in 1906. The vacuum tube worked by using large amounts of electricity to heat a filament inside the tube until it was cherry red. One result of heating this filament up was the release of electrons into the tube, which could be controlled by other elements within the tube. De Forest's original device was a triode, which could control the flow of electrons to a positively charged plate inside the tube. A zero could then be represented by the absence of an electron current to the plate; the presence of a small but detectable current to the plate represented a one.

Vacuum tubes were highly inefficient, required a great deal of space, and needed to be replaced often. Computers such as ENIAC had 18,000 tubes in them, and housing all these tubes and cooling the rooms from the heat they produced was not cheap. The transistor promised to solve all of these problems and it did so. Transistors, however, had their problems too. The main problem was that transistors, like other electronic components, needed to be soldered together. As a result, the more complex the circuits became, the more complicated and numerous the connections between the individual transistors became, and the likelihood of faulty wiring increased.

In 1958, this problem too was solved by Jack St. Clair Kilby of Texas Instruments. He manufactured the first integrated circuit or chip. A chip is really a collection of tiny transistors which are connected together when the chip is manufactured. Thus, the need for soldering together large numbers of transistors was practically nullified; now connections were needed only to other electronic components. In addition to saving space, the speed of the machine was now increased since there was a diminished distance that the electrons had to travel.

The Altair


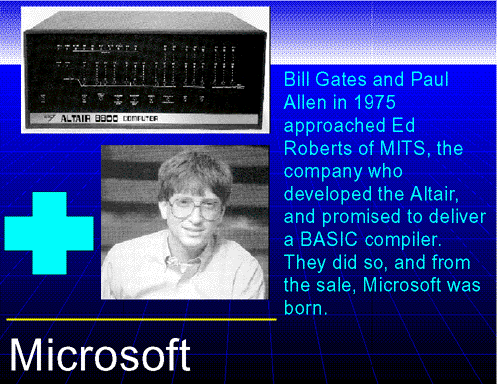

In 1971, Intel released the first microprocessor. The microprocessor was a specialized integrated circuit which was able to process four bits of data at a time. The chip included its own arithmetic logic unit, but a sizable portion of the chip was taken up by the control circuits for organizing the work, which left less room for the data-handling circuitry. Thousands of hackers could now aspire to own their own personal computer. Computers up to this point had been strictly the domain of the military, universities, and very large corporations simply because of the enormous cost of the machine and its maintenance. In 1975, the cover of Popular Electronics featured a story on the "world's first minicomputer kit to rival commercial models....Altair 8800." The Altair, produced by a company called Micro Instrumentation and Telemetry Systems (MITS), retailed for $397, which made it easily affordable for the small but growing hacker community.

The Altair was not designed for the computer novice. The kit required assembly by the owner and then it was necessary to write software for the machine since none was yet commercially available. The Altair had a 256 byte memory--about the size of a paragraph--and needed to be coded in machine code--0s and 1s. The programming was accomplished by manually flipping switches located on the front of the Altair.

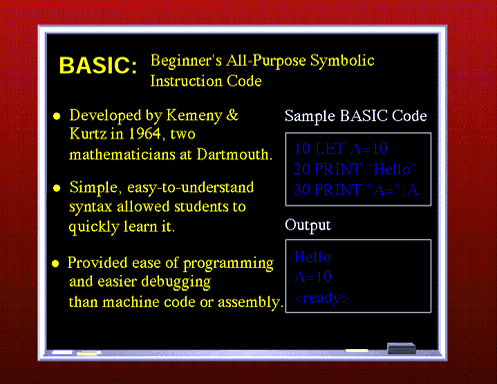

Creation of Microsoft

Two young hackers were intrigued by the Altair, having seen the article in Popular Electronics. They decided on their own that the Altair needed software and took it upon themselves to contact MITS owner Ed Roberts and offer to provide him with a BASIC which would run on the Altair. BASIC (Beginner's All-purpose Symbolic Instruction Code) had originally been developed in 1963 by Thomas Kurtz and John Kemeny, members of the Dartmouth mathematics department. BASIC was designed to provide an interactive, easy method for upcoming computer scientists to program computers. It allowed the usage of statements such as print "hello" or let b=10. It would be a great boost for the Altair if BASIC were available, so Roberts agreed to pay for it if it worked. The two young hackers worked feverishly and finished just in time to present it to Roberts. It was a success. The two young hackers? They were William Gates and Paul Allen. They later went on to form Microsoft and produce BASIC and operating systems for various machines.

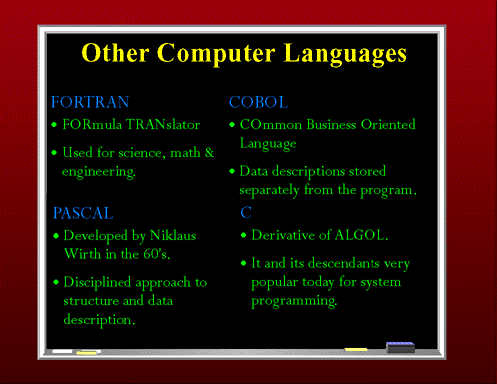

BASIC & Other Languages

BASIC was not the only game in town. By this time, a number of other specialized and general-purpose languages had been developed. A surprising number of today's popular languages have actually been around since the 1950s. FORTRAN, developed by a team of IBM programmers, was one of the first high-level languages--languages in which the programmer does not have to deal with the machine code of 0s and 1s. It was designed to express scientific and mathematical formulas. For a high-level language, it was not very easy to program in. Luckily, better languages came along.

In 1958, a group of computer scientists met in Zurich and from this meeting came ALGOL--ALGOrithmic Language. ALGOL was intended to be a universal, machine-independent language, but it was not as successful as FORTRAN because its designers did not have the same close association with IBM. ALGOL 60 nevertheless influenced many later languages; one descendant of that family, reached by way of BCPL and B, is C, which is the standard choice for programming requiring detailed control of hardware. After that came COBOL--COmmon Business Oriented Language. COBOL was developed in 1960 by a joint committee. It was designed to produce applications for the business world and had the novel approach of separating the data descriptions from the actual program. This enabled the data descriptions to be referred to by many different programs.

In the late 1960s, a Swiss computer scientist, Niklaus Wirth, would release the first of many languages. His first language, called Pascal, forced programmers to program in a structured, logical fashion and pay close attention to the different types of data in use. He later followed up on Pascal with Modula-2, which was very similar to Pascal in structure and syntax.

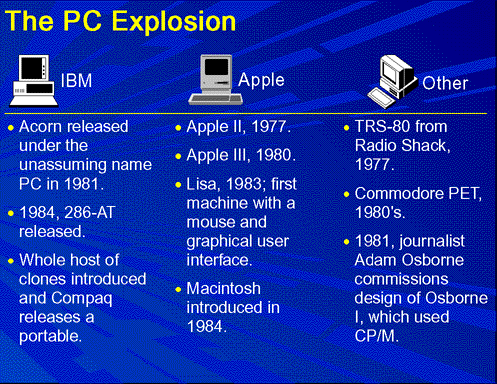

The PC Explosion

Following the introduction of the Altair, a veritable explosion of personal computers occurred, starting with Steve Jobs and Steve Wozniak exhibiting the first Apple II at the First West Coast Computer Faire in San Francisco. The Apple II boasted built-in BASIC, colour graphics, and a 4100 character memory for only $1298. Programs and data could be stored on an everyday audio-cassette recorder. Before the end of the fair, Wozniak and Jobs had secured 300 orders for the Apple II and from there Apple just took off.

Also introduced in 1977 was the TRS-80. This was a home computer manufactured by Tandy Radio Shack. Its second incarnation, the TRS-80 Model II, came complete with a 64,000 character memory and a disk drive to store programs and data on. At this time, only Apple and TRS had machines with disk drives. With the introduction of the disk drive, personal computer applications took off, as a floppy disk was a most convenient publishing medium for distribution of software.

IBM, which up to this time had been producing mainframes and minicomputers for medium to large-sized businesses, decided that it had to get into the act and started working on the Acorn, which would later be called the IBM PC. The PC was the first computer designed for the home market which would feature modular design so that pieces could easily be added to the architecture. Most of the components, surprisingly, came from outside of IBM, since building it with IBM parts would have cost too much for the home computer market. When it was introduced, the PC came with a 16,000 character memory, a keyboard from an IBM electric typewriter, and a connection for a tape cassette player for $1265.

By 1984, Apple and IBM had come out with new models. Apple released the first-generation Macintosh, which was the first commercially successful computer to come with a graphical user interface (GUI) and a mouse. The GUI made the machine much more attractive to home computer users because it was easy to use. Sales of the Macintosh soared like nothing ever seen before. IBM was hot on Apple's tail and released the 286-AT, which with applications like Lotus 1-2-3, a spreadsheet, and Microsoft Word, quickly became the favourite of business concerns.

That brings us up to about ten years ago. Now people have their own personal graphics workstations and powerful home computers. The average computer a person might have in their home is more powerful by several orders of magnitude than a machine like ENIAC. The computer revolution has been the fastest growing technology in man's history.

PCs Today

As an example of the wonders of this modern-day technology, let's take a look at this presentation. The whole presentation from start to finish was prepared on a variety of computers using a variety of different software applications. An application is any program that a computer runs that enables you to get things done. This includes things like word processors for creating text, graphics packages for drawing pictures, and communication packages for moving data around the globe.

The colour slides that you have been looking at were prepared on an IBM 486 machine running Microsoft® Windows® 3.1. Windows is a type of operating system. Operating systems are the interface between the user and the computer, enabling the user to type high-level commands such as "format a:" into the computer, rather than issuing complex assembler or C commands. Windows is one of the numerous graphical user interfaces around that allow the user to manipulate their environment using a mouse and icons. Other examples of graphical user interfaces (GUIs) include X-Windows, which runs on UNIX® machines, or Mac OS X, which is the operating system of the Macintosh.

Once Windows was running, I used a multimedia tool called Freelance Graphics to create the slides. Freelance, from Lotus Development Corporation, allows the user to manipulate text and graphics with the explicit purpose of producing presentations and slides. It contains drawing tools and numerous text placement tools. It also allows the user to import text and graphics from a variety of sources. A number of the graphics used, for example, the shaman, are from clip art collections off of a CD-ROM.

The text for the lecture was also created on a computer. Originally, I used Microsoft® Word, which is a word processor available for the Macintosh and for Windows machines. Once I had typed up the lecture, I decided to make it available, slides and all, electronically by placing the slides and the text onto my local Web server.

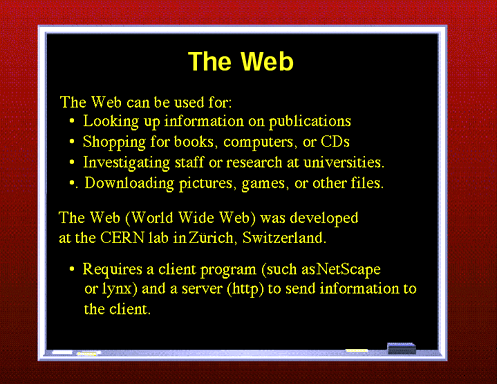

The Web

The Web (or more properly, the World Wide Web) was developed at CERN in Switzerland as a new form of communicating text and graphics across the Internet making use of the hypertext markup language (HTML) as a way to describe the attributes of the text and the placement of graphics, sounds, or even movie clips. Since it was first introduced, the number of users has blossomed and the number of sites containing information and searchable archives has been growing at an unprecedented rate. It is now even possible to order your favourite Pizza Hut pizza in Santa Cruz via the Web!

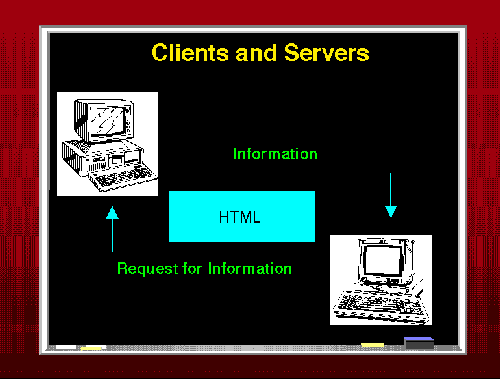

Servers

The actual workings of a Web server are beyond the scope of this course but knowledge of two things is important: 1) In order to use the Web, someone needs to be running a Web server on a machine for which such a server exists; and 2) the local user needs to run an application program to connect to the server; this application is known as a client program. Server programs are available for many types of computers and operating systems, such as Apache for UNIX (and other operating systems), Microsoft Internet Information Server (IIS) for Windows NT, and WebStar for the Macintosh. Most client programs available today are capable of displaying images, playing music, or showing movies, and they make use of a graphic interface with a mouse. Common client programs include Netscape, Opera, and Microsoft Internet Explorer (for Windows/Macintosh computers). There are also special clients that only display text, like lynx for UNIX systems, or that help the visually impaired.

As mentioned earlier, servers contain files full of information about courses, research interests and games, for example. All of this information is formatted in a language called HTML (hypertext markup language). HTML allows the user to insert formatting directives into the text, much like some of the first word processors for home computers. Anyone who is currently taking English 100 or has taken English 100 knows that there is a specific style and format for submitting essays. The same is true of HTML documents.
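To see what "formatting directives" look like in practice, the following sketch uses the `html.parser` module from Python's standard library to separate the directives (tags) in a small document from the text they format. The sample document and the names `DirectiveLister` and `split_markup` are made up for the illustration.

```python
from html.parser import HTMLParser


class DirectiveLister(HTMLParser):
    """Collect opening tags and plain text separately."""

    def __init__(self):
        super().__init__()
        self.tags = []
        self.text = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)  # a formatting directive such as h1 or b

    def handle_data(self, data):
        if data.strip():
            self.text.append(data.strip())  # the words the reader sees


def split_markup(document):
    """Return (list of tags, plain text) for an HTML fragment."""
    parser = DirectiveLister()
    parser.feed(document)
    parser.close()
    return parser.tags, " ".join(parser.text)


sample = "<h1>Infotech</h1><p>The abacus is a <b>memory aid</b></p>"
tags, text = split_markup(sample)
print(tags)  # prints ['h1', 'p', 'b']
print(text)  # prints Infotech The abacus is a memory aid
```

A client program such as a Web browser does the same separation, then uses each directive to decide how the enclosed text should appear on screen.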

More information about HTML is now readily available everywhere, including in your local bookstore.

This brings us to the conclusion of this lecture. Please e-mail any comments you have to thelectureATeingangNOSPAMorg (replace AT with @ and NOSPAM with .).

http://www.eingang.org/Lecture

http://www.cambridge.org/elt/infotech