- •Practical Unit Testing with JUnit and Mockito


Chapter 11. Test Quality

Another form of static analysis is the calculation of various code metrics, such as the number of lines per class or per method, and the famous cyclomatic complexity [7]. These metrics will generally tell you whether your code is too complex. Obviously, test classes and methods should follow the same rules as your production code (or even stricter ones), so verifying this seems like a good idea. But because you have no logic in your tests (as discussed in Section 10.2), the complexity of your test methods should be very low by default. Thus, such analysis will rarely reveal any problems in your test code.

To conclude, static analysis tools do not help much when it comes to test code. No tool or metric will tell you if you have written good tests. Use them, listen to what they say, but do not expect too much. If you write your tests following the basic rules given in this section, then there is not much they can do for you.

11.3. Code Coverage

If you cannot measure it, you cannot improve it.

— William Thomson 1st Baron Kelvin

Having been seriously disappointed by what static code analysis tools have to offer with regard to finding test code smells (see Section 11.2), let us turn towards another group of tools, which are commonly used to assess test quality. Are they any better at finding test smells, and weaknesses of any kind, in your test code?

This section is devoted to a group of popular tools which utilize the code coverage technique [8], and can provide a good deal of interesting information regarding your tests. We will discuss both their capabilities and their limits. What we are really interested in is whether they can be used to measure, and hopefully improve, test quality. For the time being, we shall leave aside the technical issues relating to running them and concentrate solely on the usefulness of code coverage tools in the field of test quality measurement.

Code coverage tools measure which parts of your production code were executed during tests. They work by augmenting the production code with additional statements, which do not change the semantics of the original code. The purpose of these additional instructions is to record information about executed fragments (lines, methods, statements) of code and to present it later as numerical data in coverage reports.

Code coverage tools also measure some other metrics, e.g. cyclomatic complexity [9]. However, these do not really relate to test quality, so we shall not discuss them in this section.
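To make the instrumentation idea more concrete, here is a minimal hand-written sketch of what an instrumented method might look like. Real tools typically inject equivalent counters into compiled bytecode rather than into source code; the class `InstrumentedMath`, the `HITS` array and the `max` method are hypothetical names invented purely for this illustration.

```java
import java.util.Arrays;

public class InstrumentedMath {

    // One counter per probe; a real tool would generate these automatically.
    public static final int[] HITS = new int[4];

    // Original logic: return the larger of two ints.
    // The HITS[n]++ statements stand in for the "additional statements" added
    // by an (imaginary) coverage tool; they record execution only and do not
    // alter the returned value.
    public static int max(int a, int b) {
        HITS[0]++;
        int result = a;
        HITS[1]++;
        if (b > a) {
            HITS[2]++;
            result = b;
        }
        HITS[3]++;
        return result;
    }

    // Percentage of probes that were hit at least once.
    public static int coveredPercent() {
        long covered = Arrays.stream(HITS).filter(h -> h > 0).count();
        return (int) (100 * covered / HITS.length);
    }

    public static void main(String[] args) {
        max(5, 3); // the "test": b > a is false, so probe 2 is never hit
        System.out.println("hits per probe: " + Arrays.toString(HITS));
        System.out.println("line coverage: " + coveredPercent() + "%");
    }
}
```

Running `main` reports that three of the four probes were hit, i.e. 75% coverage. The counters are the raw material from which a coverage report would later be built.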

11.3.1. Line and Branch Coverage

"Code coverage" is a broad term which denotes a number of types of coverage measurement, sometimes very different from one another. Wikipedia10 describes them all in details. However, many of them are rather theoretical, and no tool supports them (mainly because their practical use is limited).

[7] See http://en.wikipedia.org/wiki/Cyclomatic_complexity

[8] See http://en.wikipedia.org/wiki/Code_coverage

[9] See http://en.wikipedia.org/wiki/Software_metric, and especially http://en.wikipedia.org/wiki/Cyclomatic_complexity, for more information on this topic.

[10] See http://en.wikipedia.org/wiki/Code_coverage


In this section I will present two types of code coverage measure provided by the popular Cobertura [11] tool: line and branch coverage.

• Line coverage is a very simple metric. All it says is whether a particular line (or rather statement) of code has been exercised or not. If a line was "touched" during test execution, then it counts as covered. Simple, yet misleading, as will be discussed further. This kind of coverage is also known as statement coverage.

• Branch coverage focuses on decision points in production code, such as if or while statements with logical && or || operators. This is a much stronger measure than line coverage. In practice, to satisfy branch coverage, you must write tests such that every logical expression in your code evaluates to true at least once and to false at least once.

The difference between the two is very significant. While getting 100% line coverage is a pretty straightforward affair, much more effort must be made to obtain such a value for branch coverage. The following snippet of code illustrates this [12].

Listing 11.1. A simple function to measure coverage

    public boolean foo(boolean a, boolean b) {
        boolean result = false;
        if (a && b) {
            result = true;
        }
        return result;
    }

Table 11.1 shows the calls to the foo() method which should be issued from the test code in order to obtain 100% line and branch coverage respectively.

Table 11.1. Tests required to obtain 100% coverage

| line coverage | branch coverage |
|---|---|
| foo(true, true) | foo(true, false) |
| | foo(true, true) |
| | foo(false, false) |
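The difference between the two columns of Table 11.1 can be replayed with a small hand-instrumented version of the method from Listing 11.1, called foo() here as in the table. This is only a sketch: the class `FooCoverageDemo` and its `probe` helper are hypothetical, and it assumes that each operand of `&&` counts as a separate decision with two outcomes, which is roughly how bytecode-level tools see it. The exact numbers reported by a real tool may differ.

```java
import java.util.LinkedHashSet;
import java.util.Set;

public class FooCoverageDemo {

    // Statements and branch outcomes observed so far.
    static final Set<String> LINES = new LinkedHashSet<>();
    static final Set<String> BRANCHES = new LinkedHashSet<>();

    static final int TOTAL_LINES = 4;    // init, if, assignment, return
    static final int TOTAL_BRANCHES = 4; // a:true, a:false, b:true, b:false

    // Records the outcome of one operand of the condition and passes it through.
    static boolean probe(String name, boolean value) {
        BRANCHES.add(name + ":" + value);
        return value;
    }

    // The method under test, with manual probes added.
    public static boolean foo(boolean a, boolean b) {
        LINES.add("init");
        boolean result = false;
        LINES.add("if");
        if (probe("a", a) && probe("b", b)) {
            LINES.add("assignment");
            result = true;
        }
        LINES.add("return");
        return result;
    }

    public static void reset() {
        LINES.clear();
        BRANCHES.clear();
    }

    public static int linePercent() {
        return 100 * LINES.size() / TOTAL_LINES;
    }

    public static int branchPercent() {
        return 100 * BRANCHES.size() / TOTAL_BRANCHES;
    }

    public static void main(String[] args) {
        foo(true, true); // the single call from the "line coverage" column
        System.out.println(linePercent() + "% line, " + branchPercent() + "% branch");

        foo(true, false); // the remaining calls from the "branch coverage" column
        foo(false, false);
        System.out.println(linePercent() + "% line, " + branchPercent() + "% branch");
    }
}
```

Under these assumptions, the single call foo(true, true) already touches every statement but only half of the branch outcomes. The two additional calls are needed before every operand has been seen as both true and false.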

Now that we understand the types of code coverage provided by Cobertura, let us take a look at the results of its work.

11.3.2. Code Coverage Reports

After the tests have been run, code coverage tools generate a report on coverage metrics. This is possible thanks to the additional instructions previously added to the production code, which recorded what was executed.

As we shall soon see, coverage reports can be used to gain an overall impression regarding code coverage, but also to scrutinize selected classes. They act as a spyglass and a microscope in one. We shall start with the view from 10,000 feet (i.e. project coverage), then move down to package level, and finally reach ground level with classes.

[11] http://cobertura.sourceforge.net/

[12] The code used to illustrate line and branch coverage is based on the Wikipedia example: http://en.wikipedia.org/wiki/Code_coverage


Figure 11.1. Code coverage - packages overview

Figure 11.1 presents an overview of the coverage of a sample project. For each package the following statistics are given:

• the percentage of line coverage, along with the total number of lines and the number of lines covered,

• the percentage of branch coverage, along with the total number of branches and, for purposes of comparison, the number of branches covered.

As you might guess, a red color is used for those parts of the code not executed (not "covered") during the tests. So - the more green, the better? Well, yes and no - we will get to this soon.
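When reading such package-level numbers, it helps to keep in mind how a percentage relates to the raw counts shown next to it. The sketch below assumes that a package figure is computed from the summed line counts of its classes rather than by averaging the per-class percentages. The class `CoverageRollup`, its nested `ClassStats` holder and the numbers used are all made up for illustration and are not taken from any figure in this chapter.

```java
import java.util.Arrays;
import java.util.List;

public class CoverageRollup {

    // Line counts for one class, as a report might list them (hypothetical holder).
    public static final class ClassStats {
        final String name;
        final int coveredLines;
        final int totalLines;

        public ClassStats(String name, int coveredLines, int totalLines) {
            this.name = name;
            this.coveredLines = coveredLines;
            this.totalLines = totalLines;
        }
    }

    // Package-level line coverage: covered lines of all classes over all lines.
    public static double packageLineCoverage(List<ClassStats> classes) {
        int covered = 0;
        int total = 0;
        for (ClassStats c : classes) {
            covered += c.coveredLines;
            total += c.totalLines;
        }
        return total == 0 ? 0.0 : 100.0 * covered / total;
    }

    public static void main(String[] args) {
        // Made-up numbers: a small fully covered class and a large half-covered one.
        List<ClassStats> pkg = Arrays.asList(
                new ClassStats("Small", 10, 10),
                new ClassStats("Large", 45, 90));
        System.out.printf("package line coverage: %.0f%%%n", packageLineCoverage(pkg));
    }
}
```

With these numbers the package comes out at 55%, whereas averaging the two class percentages (100% and 50%) would suggest 75%. A large, poorly covered class can therefore pull the package figure down much more than a glance at the per-class percentages suggests.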

Figure 11.2. Code coverage - single package

The report shown in Figure 11.2 gives an overview of code coverage for the whole package, and for individual classes of the package. As with the previous report, it gives information on line and branch coverage. Figure 11.3 provides an example.


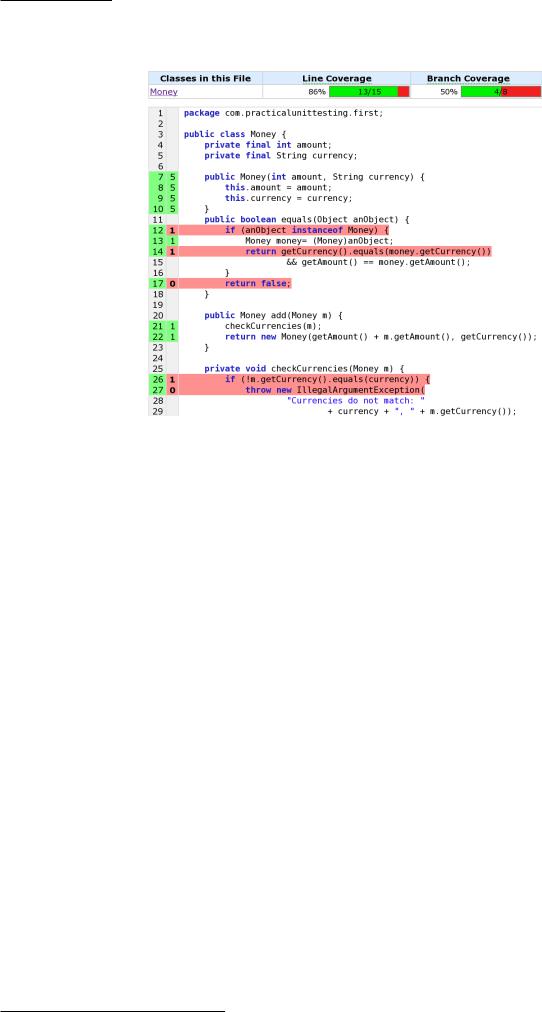

Figure 11.3. Single class coverage

Figure 11.3 presents code for our old friend, the Money class. This report shows precisely which parts of the Money class are covered by tests. The green color denotes parts that have been executed during the tests, the red those that have not. The first column of numbers gives the line number, while the second gives the number of times each line was "touched" during testing. For example, in the case of line 12 (the if statement) the number on a red background says 1. This means that this if statement has only been executed once. We may guess that the current tests contain only one case, which makes this if evaluate to true. If we were to add another test which evaluated this Boolean expression to false, then the number 1 on a red background would change to 2 on a green background. Also, the coverage of line 17 would change from 0 to 1 (and from red to green).

11.3.3. The Devil is in the Details

Let us take a closer look at this last example of detailed code coverage of the Money class. Listing 11.2 shows tests which have been executed to obtain the following quite high level of coverage: 86% line coverage and 50% branch coverage.
