Practical Unit Testing with JUnit and Mockito

Chapter 11. Test Quality

Code coverage is often used to check the quality of tests, which is not a good idea. As we have learned in this section, coverage measures are not credible indicators of test quality. Code coverage can help by revealing gaps in your safety net of tests, but not much more than that. You should supplement its indications with other techniques (e.g. visual inspection - see Section 11.5).

11.4. Mutation Testing

High quality software can not be done without high quality testing. Mutation testing measures how “good” our tests are by inserting faults into the program under test. Each fault generates a new program, a mutant, that is slightly different from the original. The idea is that the tests are adequate if they detect all mutants.

— Mattias Bybro A Mutation Testing Tool For Java Programs (2003)

As discussed in the previous section, code coverage is a weak indicator of test quality. Let us now take a look at another approach, called mutation testing, which promises to furnish us with more detailed information regarding the quality of tests.

Mutation testing, although it has been under investigation for decades, is still in its infancy. I discuss this topic here because I believe it has great potential, which is waiting to be discovered. Recent progress in mutation testing tools (e.g. the PIT mutation testing tool [14]) leads me to believe that this great and exciting idea will finally get the attention it deserves. By reading this section you might just get ahead of your time by learning about a new star that is about to outshine the existing solutions, or … waste your time on something that will never be used in real life.

Suppose you have some classes and a suite of tests. Now imagine that you introduce a change into one of your classes, for example by inverting (negating) one of the if conditional expressions. In doing this you have created a so-called mutant of the original code. What should happen if you now run all your tests once again? Provided that the suite contains a test that examines this class thoroughly, that test should fail. If no test fails, your test suite is not good enough [15]. And that is precisely the concept of mutation testing.
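To make the idea concrete, here is a minimal sketch in plain Java (no JUnit, so that it runs without any dependencies). The `isAdult` method, its hand-written mutant and the `suitePasses` helper are hypothetical names invented for this illustration; a real mutation testing tool would generate the mutant automatically.

```java
import java.util.function.IntPredicate;

// Hypothetical example: one production method, one mutant, one tiny "test suite".
class MutantDemo {

    // Original production logic: adults are 18 or older.
    static boolean isAdult(int age) {
        return age >= 18;
    }

    // Mutant: the condition of the original has been negated.
    static boolean isAdultMutant(int age) {
        return !(age >= 18);
    }

    // Stand-in for a test suite: true means "all checks passed"
    // for the implementation handed in.
    static boolean suitePasses(IntPredicate impl) {
        return impl.test(30) && !impl.test(10);
    }

    public static void main(String[] args) {
        // The suite accepts the original code...
        System.out.println("original passes: " + suitePasses(MutantDemo::isAdult));
        // ...and rejects the mutant, i.e. the mutant is "killed".
        System.out.println("mutant passes:   " + suitePasses(MutantDemo::isAdultMutant));
    }
}
```

If the second line printed `true` instead, the suite would have failed to notice the negated condition, which is exactly the situation mutation testing is meant to expose.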

11.4.1. How does it Work?

Mutation testing tools create a plethora of "mutants": that is, slightly changed versions of the original production code. Then, they run tests against each mutant. The quality of the tests is assessed by the number of mutants killed by the tests [16]. The tools differ mainly in the following respects:

• how the mutants are created (they can be brought to life by modifying source code or bytecode),

• the set of available mutators,

• performance (e.g. detecting equivalent mutations, so the tests are not run twice etc.).
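The scoring idea described above (run the suite against every mutant and count the ones it kills) can be sketched in a few lines of plain Java. Everything here is hypothetical: the addition behaviour under test, the three hand-written mutants and the deliberately weak two-check suite are made up for illustration, and real tools generate mutants automatically rather than from a hand-made list.

```java
import java.util.List;
import java.util.function.IntBinaryOperator;
import java.util.function.Predicate;

// Hypothetical sketch of "number of mutants killed" as a quality measure.
class MutationScoreDemo {

    // A weak test suite for an "add" operation: true means all checks passed.
    static final Predicate<IntBinaryOperator> SUITE =
            add -> add.applyAsInt(2, 2) == 4 && add.applyAsInt(0, 0) == 0;

    // Hand-written mutants of the original (a, b) -> a + b.
    static List<IntBinaryOperator> mutants() {
        return List.of(
                (a, b) -> a - b,  // + replaced by -
                (a, b) -> a * b,  // + replaced by *
                (a, b) -> a       // second operand dropped
        );
    }

    // A mutant is killed when the suite fails for it.
    static long countKilled(List<IntBinaryOperator> mutants,
                            Predicate<IntBinaryOperator> suite) {
        return mutants.stream().filter(m -> !suite.test(m)).count();
    }

    public static void main(String[] args) {
        List<IntBinaryOperator> all = mutants();
        long killed = countKilled(all, SUITE);
        // The multiplication mutant survives because 2 * 2 == 2 + 2 and 0 * 0 == 0 + 0.
        System.out.println("killed " + killed + " of " + all.size() + " mutants");
    }
}
```

With this input data the multiplication mutant goes unnoticed, so the suite kills only two of the three mutants; adding a check such as 2 + 3 == 5 would kill it as well.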

Mutants are created by applying various mutation operators - i.e. simple syntactic or semantic transformation rules - to the production code. The most basic mutation operators introduce changes to the various language operators - mathematical (e.g. +, -, *, /), relational (e.g. ==, !=, <, >) or logical (e.g. &, |, !). An example of a mutation would be to switch the sign < to > within some logical condition. These simple mutators mimic typical sources of errors - typos or instances of the wrong logical operators being used. Likewise, by changing some values in the code, it is easy to simulate off-by-one errors. Other possible mutations are, for example, removing method calls (possible with void methods), changing returned values, changing constant values, etc. Some tools have also experimented with more Java-specific mutators, for example relating to Java collections.

[14] http://pitest.org

[15] In fact, if all tests still pass, it can also mean that the "mutant" program is equivalent in behaviour to the original program.

[16] Okay, I admit it: this heuristic sounds like it was taken from the game series Fallout: "the more dead mutants, the better" :).
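The kinds of mutation operators mentioned above can be illustrated with hand-written variants of one small method. This is only a sketch: the `sumUpTo` method and the three mutants are hypothetical, written by hand to mimic what a relational, an arithmetic and a constant-changing mutator might produce.

```java
// Hypothetical example: one method and three hand-written mutants of it.
class OperatorMutantsDemo {

    // Original: sum of the integers 1..n.
    static int sumUpTo(int n) {
        int sum = 0;
        for (int i = 1; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    // Relational mutant: <= changed to <, which simulates an off-by-one error.
    static int relationalMutant(int n) {
        int sum = 0;
        for (int i = 1; i < n; i++) {
            sum += i;
        }
        return sum;
    }

    // Arithmetic mutant: += changed to -=.
    static int arithmeticMutant(int n) {
        int sum = 0;
        for (int i = 1; i <= n; i++) {
            sum -= i;
        }
        return sum;
    }

    // Constant mutant: the loop starts at 0 instead of 1. Adding 0 changes
    // nothing, so this mutant is equivalent in behaviour to the original.
    static int constantMutant(int n) {
        int sum = 0;
        for (int i = 0; i <= n; i++) {
            sum += i;
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println(sumUpTo(3));          // 6
        System.out.println(relationalMutant(3)); // 3
        System.out.println(arithmeticMutant(3)); // -6
        System.out.println(constantMutant(3));   // 6
    }
}
```

A single check such as "sumUpTo(3) returns 6" distinguishes the first two mutants from the original, whereas no test can ever kill the third one, because it behaves exactly like the original code.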

11.4.2. Working with PIT

PIT Mutation Testing is a very fresh Java mutation testing tool, which has brought new life to the rather stagnant area of mutation testing tools. It works at the bytecode level, which means it creates mutants without touching the source code. After PIT’s execution has finished, it provides detailed information on created and killed mutants. It also creates an HTML report showing line coverage and a mutation coverage report. We will concentrate on the latter, as line coverage has already been discussed in Section 11.3.

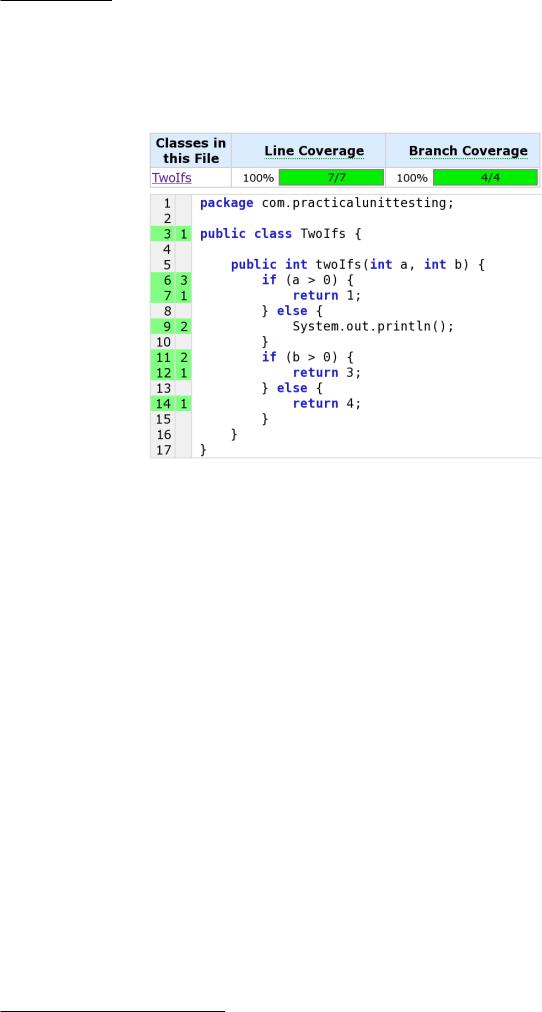

We will use a very simple example to demonstrate PIT in action and compare it with code coverage tools. Listing 11.4 shows our "production code", which will be mutated by PIT [17].

Listing 11.4. Method with two if statements

    public class TwoIfs {

        public int twoIfs(int a, int b) {
            if (a > 0) {
                return 1;
            } else {
                System.out.println();
            }
            if (b > 0) {
                return 3;
            } else {
                return 4;
            }
        }
    }

Let us say that we also have a test class which (supposedly) verifies the correctness of the twoIfs() method:

Listing 11.5. Tests for the twoIfs method

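    import static org.junit.Assert.assertEquals;

    import org.junit.Test;

    public class TwoIfsTest {

        @Test
        public void testTwoIfs() {
            TwoIfs twoIfs = new TwoIfs();

            // one assertion per return value, none of them at the boundary (0)
            assertEquals(1, twoIfs.twoIfs(1, -1));
            assertEquals(3, twoIfs.twoIfs(-1, 1));
            assertEquals(4, twoIfs.twoIfs(-1, -1));
        }
    }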

[17] The idea of this code is taken from the StackOverflow discussion about code coverage pitfalls - http://stackoverflow.com/questions/695811/pitfalls-of-code-coverage.

What is really interesting is that this simple test is good enough to satisfy the code coverage tool - it achieves 100% in respect of both line and branch coverage! Figure 11.4 shows this:

Figure 11.4. 100% code coverage - isn’t that great?

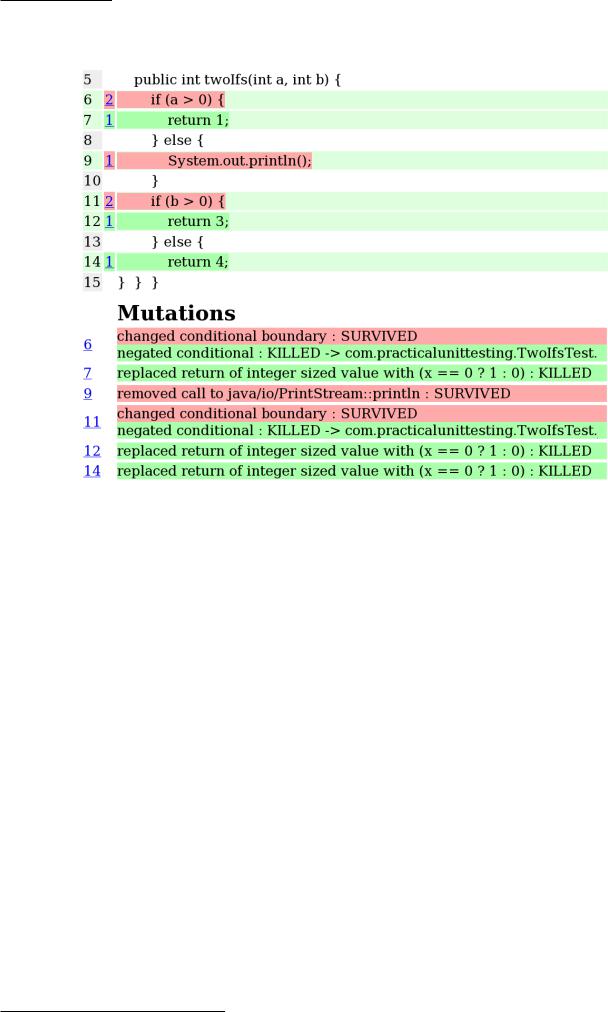

When we execute a PIT analysis, it will create mutants of the production code from Listing 11.4 by inverting the inequality operators and fiddling with comparison values. Then it will run all tests (in our case only the one test shown in Listing 11.5) against each mutant and check whether they fail.

The outcome report shows the code that was mutated together with some information about applied mutations. As with code coverage reports, the red background denotes "bad" lines of code, which means that some mutations performed on these lines went unnoticed when testing. Below the source code there is a list of applied mutations. From this list we can learn that, for example, one mutant survived the change of conditional boundary in line 6. The "greater than" symbol was changed to "greater than or equal to" and the tests still passed. The report informs us that such and such a mutant SURVIVED, which means it was not detected by our tests.

Figure 11.5. Mutation testing - PIT report

This simple example shows the difference between code coverage and mutation testing: in short, it is much simpler to satisfy coverage tools, whereas mutation testing tools can detect more holes within your tests.
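One way to close such a hole is to test at the boundary value itself. The sketch below, in plain Java without JUnit or PIT, reuses the logic of Listing 11.4 and compares it with a hand-written stand-in for a conditional-boundary mutant (`a > 0` changed to `a >= 0`). The class and method names are hypothetical, and the mutant is an assumption about what such a mutation looks like, not output copied from a PIT report.

```java
import java.util.function.IntBinaryOperator;

// Hypothetical sketch: why the assertions of Listing 11.5 miss a boundary mutant.
class BoundaryDemo {

    // Same logic as the twoIfs() method from Listing 11.4.
    static int original(int a, int b) {
        if (a > 0) {
            return 1;
        } else {
            System.out.println();
        }
        if (b > 0) {
            return 3;
        } else {
            return 4;
        }
    }

    // Hand-written conditional-boundary mutant: a > 0 became a >= 0.
    static int boundaryMutant(int a, int b) {
        if (a >= 0) {
            return 1;
        } else {
            System.out.println();
        }
        if (b > 0) {
            return 3;
        } else {
            return 4;
        }
    }

    // The three checks from Listing 11.5; true means all of them passed.
    static boolean originalSuite(IntBinaryOperator f) {
        return f.applyAsInt(1, -1) == 1
                && f.applyAsInt(-1, 1) == 3
                && f.applyAsInt(-1, -1) == 4;
    }

    // The same checks plus one at the boundary value 0.
    static boolean extendedSuite(IntBinaryOperator f) {
        return originalSuite(f) && f.applyAsInt(0, 0) == 4;
    }

    public static void main(String[] args) {
        System.out.println("mutant survives original suite: "
                + originalSuite(BoundaryDemo::boundaryMutant));
        System.out.println("mutant survives extended suite: "
                + extendedSuite(BoundaryDemo::boundaryMutant));
        System.out.println("original passes extended suite: "
                + extendedSuite(BoundaryDemo::original));
    }
}
```

The original three checks never call the method with 0, so they cannot tell `>` from `>=`; the additional check for the arguments (0, 0) does, while line and branch coverage stay at 100% in both cases.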

11.4.3. Conclusions

Mutation testing has been around since the late 1970s but is rarely used outside academia. Executing a huge number of mutants and finding equivalent mutants has been too expensive for practical use.

— Mattias Bybro A Mutation Testing Tool For Java Programs (2003)

Mutation testing looks interesting, but I have never once heard of it being used successfully in a commercial project. There could be many reasons why this idea has never made it into developers' toolboxes, but I think the main one is that for a very long time there were no production-ready mutation testing tools. Existing tools lagged behind the progress of the Java language (e.g. they did not support Java 5 annotations), and/or fell short of industry standards and developers’ expectations in terms of reliability and ease of use. Because of this, code coverage, which has had decent tools for years, is today a standard part of every development process, while mutation testing is nowhere to be found. As of today, developers in general not only know nothing about such tools, but are even unaware of the very concept of mutation testing!

This situation is likely to change with the rise of the PIT framework, which offers much higher usability and reliability than any other mutation testing tool so far. But it will surely take time for the whole
