Rivero, L. Encyclopedia of database technologies and applications. 2006


Knowledge Discovery and Geographical Databases

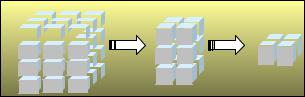

Figure 2. The use of operations: Slice & Dice

Figure 2 panels: Slice and Dice (axis labels E1 to E4, D, T, L, W, MD, ND; cell values such as 0.7, 0.8, and 0.9).

By combining cartographic representation with OLAP navigation, the user moves through the multidimensional structure and obtains cartographic, tabular, or statistical-diagram representations of the data, which depend on the selected dimensions, measures, and hierarchy levels.

EXAMPLE

One of the main objectives of combining GIS with data warehouse tools is to help decision makers make the best decisions when managing natural or human resources. The quality of those decisions, in turn, depends heavily on the quality of the geographic data.

A GIS user needs to be confident in the results of an analysis, and therefore has to know the status of the data in terms of errors (missing or superfluous data, wrong coding, imprecise location). Knowing the quality of the data before using them helps the user set the framework of the analysis. Geographic data control consists of applying automatic or semiautomatic operations to the data in order to find and measure the different kinds of errors. Estimators are needed to represent the results of these measurements: bias on X and Y, standard deviation on X and Y, true, false, missing, and present ratios, and so on. The question then concerns how this quality information should be organized and stored (Bâazaoui, Faïz, & Ben Ghezala, 2003).
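The positional estimators listed above are straightforward to compute once each controlled feature has been paired with a reference position. The following Python (3.9+) sketch is a minimal illustration, not the procedure of the cited work: it assumes matched pairs are already available, encodes a missing measurement as `None`, and uses hypothetical names (`ControlPoint`, `quality_estimators`). True and false ratios would additionally require attribute-level checks and are omitted.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Optional


@dataclass
class ControlPoint:
    """One controlled feature: measured position (None if missing) and reference position."""
    measured: Optional[tuple[float, float]]
    reference: tuple[float, float]


def quality_estimators(points: list[ControlPoint]) -> dict[str, float]:
    """Positional bias and spread on X and Y, plus present and missing ratios.

    Expects at least one point with a measured position.
    """
    present = [p for p in points if p.measured is not None]
    dx = [p.measured[0] - p.reference[0] for p in present]  # X errors
    dy = [p.measured[1] - p.reference[1] for p in present]  # Y errors
    n = len(points)
    return {
        "bias_x": mean(dx),    # systematic shift along X
        "bias_y": mean(dy),    # systematic shift along Y
        "std_x": pstdev(dx),   # dispersion of X errors (population standard deviation)
        "std_y": pstdev(dy),   # dispersion of Y errors
        "present_ratio": len(present) / n,
        "missing_ratio": (n - len(present)) / n,
    }
```

For example, three features each shifted 0.5 units along X plus one missing feature give a bias on X of 0.5, zero dispersion, and a missing ratio of 0.25.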

In this example, we show that a cube representation is well suited to managing quality information. Geographic data control generates quality information that users will take into account during data processing and, more specifically, during the decision process.

The fundamental question is the following: “How will we show the quality information?” In our cube, we have three dimensions:

• Entity dimension: we consider the set of roads (E1, E2, E3…).

• Attribute dimension: we consider three attributes (Thickness of the road, Length of the road, and Width of the road).

• Density dimension: each entity belongs to a Dense, Non-Dense, or Medium-Dense zone.

In this cube, for example, the value 0.9 is the quality information of the length attribute of road E2, which belongs to a non-dense zone. The cube thus gives information on the quality of each attribute of each road. From it we can extract quality measures such as the average quality of the width attribute over all roads in medium-density zones, or a weighted average quality. With these dimensions, OLAP operations allow the user to move up and down along any dimension of the cube. Slicing and dicing, for example, each select a portion of the cube by fixing constant values on one or a few dimensions. For instance, one may be interested only in entities E1, E2, and E3 that are not located in non-dense zones, and only in their thickness and length attributes, as shown in Figure 2.
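A quality cube of this kind can be mocked up with a dictionary keyed by (entity, attribute, density zone). The sketch below is illustrative only: apart from the 0.9 quality of E2's length attribute mentioned in the text, every score is an invented placeholder rather than a value read from Figure 2, and the helper names and per-attribute weights are hypothetical. It shows a slice, the dice discussed above (E1 to E3, zones other than non-dense, thickness and length only), and plain and weighted average qualities.

```python
from statistics import mean

# Positions of the three dimensions inside each cell key.
ENTITY, ATTRIBUTE, DENSITY = 0, 1, 2

# Hypothetical quality cube: (entity, attribute, density zone) -> quality score.
# Only ("E2", "Length", "Non-Dense") = 0.9 comes from the text; the other
# values are illustrative placeholders.
CUBE = {
    ("E1", "Thickness", "Medium-Dense"): 0.7,
    ("E1", "Length", "Medium-Dense"): 0.9,
    ("E1", "Width", "Medium-Dense"): 0.7,
    ("E2", "Thickness", "Non-Dense"): 0.8,
    ("E2", "Length", "Non-Dense"): 0.9,
    ("E2", "Width", "Non-Dense"): 0.9,
    ("E3", "Thickness", "Dense"): 0.7,
    ("E3", "Length", "Dense"): 0.8,
    ("E3", "Width", "Dense"): 0.8,
    ("E4", "Thickness", "Medium-Dense"): 0.9,
    ("E4", "Length", "Medium-Dense"): 0.8,
    ("E4", "Width", "Medium-Dense"): 0.9,
}


def slice_cube(cube, axis, value):
    """Slice: fix one dimension to a single constant value."""
    return {cell: q for cell, q in cube.items() if cell[axis] == value}


def dice_cube(cube, allowed):
    """Dice: keep cells whose coordinate on every constrained axis is in the allowed set."""
    return {
        cell: q
        for cell, q in cube.items()
        if all(cell[axis] in values for axis, values in allowed.items())
    }


def average_quality(cube, attribute, density):
    """Average quality of one attribute over all roads in a given density zone."""
    scores = [q for (_, a, d), q in cube.items() if a == attribute and d == density]
    return mean(scores) if scores else None


def weighted_average_quality(cube, weights):
    """Weighted average quality; `weights` must give a weight for every attribute in `cube`."""
    total = sum(weights[cell[ATTRIBUTE]] for cell in cube)
    return sum(q * weights[cell[ATTRIBUTE]] for cell, q in cube.items()) / total


# The dice from the text: E1 to E3, zones that are not non-dense, thickness and length only.
subset = dice_cube(CUBE, {
    ENTITY: {"E1", "E2", "E3"},
    DENSITY: {"Dense", "Medium-Dense"},
    ATTRIBUTE: {"Thickness", "Length"},
})
```

On this toy cube the dice keeps four cells (E1 and E3 for thickness and length), because E2 lies in a non-dense zone.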

FUTURE TRENDS

Because of the complex characteristics of geographic databases (huge volumes, lack of standards, multiplicity of data sources, multi-scale requirements, and variability in time), spatial data warehouse, spatial data mining, and spatial OLAP techniques have emerged. These same characteristics make the construction of a spatial data warehouse difficult. Our perspective is to work on the modeling and implementation of spatial data warehouses using a metamodel.

CONCLUSION

In this article, we have introduced data warehouses and given an overview of the most important tools of data warehousing: OLAP and data mining. These automated methods are needed because of the huge amount of data handled in geographical databases. Data mining techniques allow the discovery of non-trivial information, while OLAP prepares aggregations of information from the databases to answer user queries rapidly. Finally, we have presented an example showing how geographical quality information can be managed using a multidimensional representation of data (a cube).

REFERENCES

Abello, A., Samos, J., & Saltor, F. (2001). Understanding analysis dimensions in a multidimensional object-oriented model. Proceedings of the 3rd International Workshop on Design and Management of Data Warehouses (DMDW’01), Switzerland.

Agrawal, R., Gupta, A., & Sarawagi, S. (1997). Modeling multidimensional databases. Proceedings of the International Conference on Data Engineering (ICDE’97) (pp. 232-243).

Bâazaoui, H., Faïz, S., & Ben Ghezala, H. (2000). Geographical data warehouses and quality. Proceedings of the International Conference on Artificial and Computational Intelligence for Decision, Control and Automation in Engineering and Industrial Applications (ACIDCA’2000), Monastir, Tunisia.

Bâazaoui, H., Faïz, S., & Ben Ghezala, H. (2003). CASME: A case tool for spatial data marts design and generation. Proceedings of the 5th International Workshop on Design and Management of Data Warehouses (DMDW’03) in conjunction with the 29th International Conference on Very Large Databases (VLDB’03), Berlin, Germany.

Bédard, Y. (2002). Geospatial data warehousing, datamart and SOLAP for geographic knowledge discovery. University of Muenster, Germany.

CNIG. (1993). Quality of exchanged geographical data. Report from the National Council of Geographical Information, Paris, France.

Faïz, S. (1999). Geographic information system: Quality information and data mining. Editions C.L.E.

Faïz, S. (2000). Managing geographic data quality during spatial data mining and spatial OLAP. International Journal of GIM, 28-31.

Faïz, S., Abbassi, K., & Boursier, P. (1998). Applying data mining techniques to generate quality information within geographical databases. In Jeansoulin & Goodchild (Eds.), Data quality in GI. Paris: Editions Hermès.

Golfarelli, M., Maio, D., & Rizzi, S. (1999). The dimensional fact model: A conceptual model for data warehouses. International Journal of Cooperative Information Systems.

Han, J., Koperski, K., & Stefanovic, N. (1997). GeoMiner: A system prototype for spatial data mining. Proceedings of the ACM-SIGMOD Conference on Management of Data (SIGMOD’1997), Tucson, Arizona (pp. 553-556).

Rivest, S., Bédard, Y., & Marchand, P. (2001). Towards better support for spatial decision-making: Defining the characteristics of spatial on-line analytical processing (SOLAP). Geomatica: The Journal of the Canadian Institute of Geomatics.

KEY TERMS

Data Mining: Also known as knowledge discovery from databases; refers to the process of extracting interesting and non-trivial patterns or knowledge from databases. Spatial data mining is an extraordinarily demanding field that refers to the extraction of implicit knowledge and spatial relationships that are not explicitly stored in geographical databases.

Data Warehouse: An instantiated view of integrated information sources to build mediators. In a spatial data warehouse, data can be combined from many heterogeneous sources to obtain decision support tools. These sources have to be adjusted because they contain data in different representations. Therefore, the construction of a data warehouse requires many operations such as integration, cleaning, and consolidation.

Geographic Data: Data characterized by two kinds of attributes: descriptive (non-spatial) attributes and positioning (spatial) attributes. Non-spatial data are not specific to geographic applications and are usually handled by standard relational DBMSs. Spatial attributes require additional capabilities to design, store, and manipulate the spatial part of geographic entities.

GIS: Geographic Information System (GIS) is a computer system capable of capturing, storing, analyzing, and displaying geographically referenced information.


OLAP: On-Line Analytical Processing. A particularity of these tools is that they systematically preaggregate data, which favors rapid access to information. The storage structure is therefore always cubic or pyramid-like, and various algorithms allow cubes of data to be manipulated. Spatial OLAP can be defined as a visual platform built especially to support rapid and easy spatio-temporal analysis and exploration of data, following a multidimensional approach composed of aggregation levels available in cartographic displays as well as in tabular and diagram displays.


Spatial Data Mining: Refers to the process of extracting interesting and implicit knowledge and spatial relationships that are not explicitly stored in geographical databases.

Spatial OLAP: A visual platform built especially to support rapid and easy spatio-temporal analysis and exploration of data following a multidimensional approach.


Knowledge Discovery from Databases

Jose Hernandez-Orallo

Technical University of Valencia, Spain

INTRODUCTION

As databases pervade every corner of reality, they record what happens in and around organizations all over the world. Databases store the detailed history of organizations, institutions, governments, and individuals. Efficient and agile analysis of the data recorded in a database can no longer be done manually.

Knowledge discovery from databases (KDD) is a collection of technologies that aim at extracting nontrivial, implicit, previously unknown, and potentially useful information (Fayyad, Piatetsky-Shapiro, Smyth, & Uthurusamy, 1996) from raw data stored in databases. The extracted patterns, models, or trends can be used to better understand the data, and hence, the context of an organization, and to predict future behaviors in this context that could improve decision making. KDD can be used to answer questions such as, Is there a group of customers buying a special kind of product? Which sequence of financial products improves the chance of contracting a mortgage? Which telephone call patterns suggest a future churn? Are there relevant associations between risk factors in coronary diseases? How can I assess if my e-mail messages are more or less likely to be spam (junk mail)?

The previous questions cannot be answered by other tools usually associated with database technology, such as OLAP tools, decision support systems, executive information systems, and so forth. The key difference is that KDD does not convert information into (more aggregated or interwoven) information; instead, it generates inductive models (in the form of rules, equations, or other kinds of knowledge) that are sufficiently consistent with the data. In other words, KDD is not a deductive process but an inductive one.

BACKGROUND

The increasingly rapid evolution of the context of organizations forces them to revise their knowledge relentlessly (what used to work before no longer works). This provisional character of knowledge helps explain why knowledge discovery from databases, though still in its infancy, is so successful. The models and patterns that can be obtained by data mining methods are useful for virtually any area dealing with information: finance, insurance, banking, commerce, marketing, industry, private and public healthcare, medicine, bioengineering, telecommunications, and many other areas (M. J. A. Berry & Linoff, 2004).

The name KDD dates back to the early 1990s, and KDD is not a new “technology” in itself; it is a heterogeneous area that integrates, without prejudice, many techniques from several different fields, incorporating tools from statistics, machine learning, databases, decision support systems, data visualization, and World Wide Web research, among others, in order to obtain novel, valid, and intelligible patterns from data (Berthold & Hand, 2002; Dunham, 2003; Han & Kamber, 2001; Hand, Mannila, & Smyth, 2000).

PROCESS OF KNOWLEDGE DISCOVERY FROM DATABASES

The KDD process is a complex, elaborate process that comprises several stages (Dunham, 2003; Han & Kamber, 2001): data preparation (including data integration, selection, cleansing, and transformation), data mining, model evaluation and deployment (including model interpretation, use, dissemination and monitoring).

Cross-industry standard process for data mining (CRISP-DM; see http://www.crisp-dm.org for more information) is a standard reference that serves as a guide to carry out a knowledge discovery project and comprises all the stages mentioned (see Figure 1).

Figure 1. Stages of the knowledge discovery process

Figure 1 stages: Business Understanding, Data Understanding, Data Preparation, Modeling (Data Mining), Evaluation, Deployment.

Copyright © 2006, Idea Group Inc., distributing in print or electronic forms without written permission of IGI is prohibited.


According to this process, data mining is just one stage of the knowledge discovery process. The data mining stage, also called the modeling stage, converts the prepared data (usually in the form of a “minable view” with an assigned task) into one or more models. This stage is the most characteristic of the whole process, and data mining is frequently used as a synonym for the entire process. The process is cyclic because, after a complete cycle, the goals can be revised or extended and the whole process started again.

Many references on KDD take the data preparation stage as the start of the knowledge discovery process (Dunham, 2003; Fayyad et al., 1996; Han & Kamber, 2001). The CRISP-DM standard, however, emphasizes that business understanding and data understanding (which includes data integration) must be handled before data preparation. This is reasonable, because before starting a KDD project one must first know what the business needs are, establish the business goals according to the business context, check whether there is (or whether we can get) enough data to address them, and, if so, specify the data mining objectives.

For example, consider a distribution company with a problem of inadequate stocks, which generate higher costs and frequently cause some perishable products to expire. From this problem, one business objective could be to reduce the stock level of perishables. If there is an internal database with enough information about the orders placed by each customer in the last three years, and we can complement it with external information about the reference market sale prices of these product categories, this could be sufficient to start looking for models that address the business objective. This business objective could be translated into one or more data mining objectives (e.g., predict, week by week and by product category, how many perishable products each customer will buy, from the orders placed during the last three years and the current market sale price).

Before discussing each specific KDD stage, it is important to highlight that many of the key factors for the success of a KDD project have to do with a good understanding of the goals and of their feasibility, as well as with a precise assessment of the resources needed (e.g., data, human, software, organizational). Table 1 shows the most important issues for success in a KDD project.

Additionally, a major reason for a possible failure in KDD projects may stem from an excessive focus on technology, that is, implementing data mining because others do, without recognizing what the needs of the organization are and without understanding the resources and data necessary to cover them.

Data Integration and Preparation

Once the data mining goals are clear and the data required to achieve them have been located, all the data must be obtained and integrated. The data usually come from many sources, either internal or external. Typical internal data, generally the main source of information, include one or more transactional databases, possibly complemented with additional internal data in the form of spreadsheets or other kinds of formatted or unformatted data. External data include all other data that can be useful for achieving the data mining objectives, such as demographic, geographic, climate, industrial, calendar, or institutional information.

Table 1. Keys to success in a knowledge discovery project

• Business needs must drive the knowledge discovery project. The business problems, and hence the business objectives, must be clearly stated. These business objectives will help one understand the data that will be needed and the scope of the project.

• Business objectives must be translated into specific data mining objectives, which must be relevant for the organization. The data mining objectives must be accompanied by a specification of the required quality of the models to be extracted, in terms of a set of metrics and features: expected error, reliability, costs, relevance, comprehensibility, and so forth.

• The knowledge discovery project must be integrated with other plans in the organization and must have the unconditional support of the organization's executives.

• Data quality is crucial. The integration of data from several sources (internal or external), their cleanliness, and their adequate organization and availability (using a data warehouse, if necessary) are a sine qua non in knowledge discovery.

• The use of integrated and user-friendly tools is also decisive, supporting not only the specific stages of the process but also other issues, such as documentation, communication, workflow, and so on.

• A heterogeneous team is needed, comprising not only professionals with specific data mining training but also professionals from statistics, databases, and business. Strong leadership within the group is also essential.

• To translate the positive results of knowledge discovery into positive results for the organization, a holistic evaluation and an ambitious deployment of the models into the know-how and daily operation of the organization are necessary.

Some small-scale data mining projects can be done on a small set of tables or even on a couple of data files. Generally, however, the size, diversity of sources, and formats of all this information require integrating it into a single repository. Repositories of this kind are usually called data warehouses. Data warehouses and related technologies such as OLAP are covered by another entry in this encyclopedia, to which the reader is referred for more information.

It is important to note, however, that the data requirements of a data warehouse for OLAP analysis are not exactly the same as those of a data warehouse whose purpose is data mining. Data mining usually requires more fine-grained data (less aggregation) and must gather the data needed for the data mining objectives rather than for producing complex reports or answering complex analytical queries. Even so, OLAP tools and data mining tools can work well together on the same data warehouse, provided it is designed with both needs in mind.

During or after the task of determining the required data and integrating them comes an even thornier undertaking: data cleansing, selection, and transformation. Data quality is even more important for data mining than for transactional databases. However, because the data used for data mining are generally historical, we cannot act on their sources. In many situations, the only possible solution is to cleanse the data using a heterogeneous set of techniques grouped under the term data cleansing, which might include exploratory data analysis, data visualization, and other techniques.

Data cleansing mostly focuses on inconsistent and missing data. First, inconsistent data can only be detected by comparing the data with database constraints or context knowledge or, more interestingly, by using some kind of statistical analysis to detect outliers. Outliers might or might not be errors, but they at least draw the attention of an expert or a tool to check whether they are, indeed, errors. Once erroneous values are found, there are many ways to handle them, including removing the entire row or the entire column, or substituting the value with a null, a mean, or an estimated value. Second, missing data may seem easier to detect, at least when the data come from databases that use null values, but detection can be more difficult if the data repository combines several sources that do not use null values to represent unknown or nonapplicable data. The ways to handle missing values are analogous to those for handling wrong data. Data cleansing is essential because many data mining algorithms are highly sensitive to missing or erroneous values.
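A small example makes the detect-then-replace pattern concrete. The sketch below uses Tukey's interquartile-range fences as one common statistical outlier test and mean substitution as one of the replacement options mentioned above; neither choice is prescribed by the article, and the function names are hypothetical. Missing values are assumed to be encoded as `None`.

```python
from statistics import mean, quantiles
from typing import Optional


def iqr_outliers(values: list[float], k: float = 1.5) -> list[int]:
    """Indices of values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < low or v > high]


def clean_column(values: list[Optional[float]], k: float = 1.5) -> list[float]:
    """Treat outliers as wrong values, then replace them and missing values by the mean of the rest."""
    positions = [i for i, v in enumerate(values) if v is not None]
    known = [values[i] for i in positions]
    # quantiles() needs at least two data points.
    outliers = {positions[j] for j in iqr_outliers(known, k)} if len(known) >= 2 else set()
    kept = [v for i, v in enumerate(values) if v is not None and i not in outliers]
    fill = mean(kept)
    return [fill if v is None or i in outliers else v for i, v in enumerate(values)]
```

For the column `[10.0, 12.0, None, 11.0, 13.0, 250.0, 12.0]`, the value 250.0 falls outside the fences, so both it and the missing entry are replaced by the mean of the remaining five values (11.6). Whether an outlier really is an error remains a judgment for the expert, as noted above.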

Having an integrated and clean data repository is not sufficient to perform data mining on it. Data mining tools may not be able to handle the entire repository, or doing so may simply be prohibitive. Consequently, it is important to select the relevant data from the repository, and to denormalize and transform them in order to set up a view, called the minable view. Data selection can be done horizontally (data sampling or instance selection) or vertically (feature selection). Data transformation includes a more varied collection of operations, such as the creation of derived attributes (by aggregation, combination, numerization, or discretization), principal component analysis, denormalization, and pivoting.
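The perishables example from earlier can illustrate how a minable view is assembled. The following sketch assumes raw order rows with hypothetical keys (`customer`, `category`, `week`, `quantity`, `market_price`); it derives a weekly quantity by aggregation and a price band by discretization (with arbitrary thresholds), then applies horizontal selection (random sampling) and vertical selection (keeping chosen features). It is a toy illustration of these operations, not a prescribed procedure.

```python
import random
from collections import defaultdict


def price_band(price: float) -> str:
    """Discretize a price into bands (thresholds are arbitrary, for illustration)."""
    if price < 2.0:
        return "low"
    if price < 5.0:
        return "medium"
    return "high"


def build_minable_view(orders, keep_features, sample_rate=1.0, seed=0):
    """Build one row per (customer, category, week) from raw order rows."""
    # Derived attribute by aggregation: total quantity per customer, category, and week.
    totals = defaultdict(float)
    prices = {}
    for o in orders:
        key = (o["customer"], o["category"], o["week"])
        totals[key] += o["quantity"]
        prices[key] = o["market_price"]  # keeps the last price seen for that key

    rows = []
    for (customer, category, week), qty in totals.items():
        price = prices[(customer, category, week)]
        rows.append({
            "customer": customer,
            "category": category,
            "week": week,
            "market_price": price,
            "price_band": price_band(price),  # derived attribute by discretization
            "weekly_quantity": qty,           # target of the regression task
        })

    # Horizontal selection: keep each instance with probability sample_rate.
    rng = random.Random(seed)
    rows = [r for r in rows if rng.random() < sample_rate]

    # Vertical selection: keep only the chosen features plus the target.
    columns = list(keep_features) + ["weekly_quantity"]
    return [{c: r[c] for c in columns} for r in rows]
```

A real project would typically also join the external market-price data and handle weeks with no orders; both are left out here for brevity.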

Finally, data integration and preparation must be done with a certain understanding of the data themselves, and they frequently require more than half of the time and resources of the whole knowledge discovery process. Furthermore, working on the integration and preparation of the data, especially through data visualization (Fayyad, Grinstein, & Wierse, 2001), yields a better understanding of the data, which might suggest new data mining objectives or show that some of them are unfeasible.

Data Mining

The input of the data mining (modeling, or learning) stage is usually a minable view together with a task. A data mining task specifies the kind of problem to be solved and the kind of model to be obtained. Table 2 shows the most important data mining tasks.

Data mining tasks can be divided into two main groups, predictive and descriptive, depending on how the resulting patterns are used. Predictive models predict future values of unseen data; an example is a model that predicts next year's sales. Descriptive models describe or aim to understand the data; an example is the finding that there is a strong sequential association between contracting the free-mum-calls service and churn.

To solve a data mining task, we need a particular data mining technique, such as decision trees, neural networks, linear models, support vector machines, and so forth. A list of the most popular data mining techniques is shown in Table 3 (Berthold & Hand, 2002; Han & Kamber, 2001; Hand, Mannila, & Smyth, 2000; Mitchell, 1997; Witten & Frank, 1999). Some techniques can be used for several tasks. For instance, decision trees are generally used for classification but can also be used for regression and clustering. Other techniques are specific to a single task.


Table 2. Data mining tasks

• Predictive

o Classification. The model predicts a nominal output value (one of two or more categories) from one or more input variables. Related tasks are categorization, ranking, preference learning, and class probability estimation.

o Regression. The model predicts a numerical output value from one or more input variables. Related tasks are sequential prediction and interpolation.

• Descriptive

o Clustering. The model detects “natural” groups in the data. A related task is summarization.

o Correlation and factorial analysis. The relation between two (bivariate) or more (multivariate) numerical variables is ascertained.

o Association discovery (frequent itemsets). The relation between two or more nominal variables is determined. Associations can be undirected, directed, or sequential.

Table 3. Data mining techniques

• Exploratory data analysis and other descriptive statistical techniques

• Parametric and nonparametric statistical modeling (e.g., linear models, generalized linear models, discriminant analysis, Fisher linear discriminant functions, logistic regression)

• Frequent itemset techniques (e.g., Apriori algorithm and extensions, GRI)

• Bayesian techniques (e.g., Naïve Bayes, Bayesian networks, EM)

• Decision trees and rules (e.g., CART, ID3/C4.5/See5, CN2)

• Relational and structural methods (e.g., inductive logic programming, graph learning)

• Artificial neural networks (e.g., perceptron, multilayer perceptron with backpropagation, radial basis functions)

• Support vector machines and other kernel-based methods (e.g., margin classifiers, soft margin classifiers)

• Evolutionary techniques, fuzzy logic approaches, and other soft computing methods (e.g., genetic algorithms, evolutionary programming, genetic programming, simulated annealing, Wang & Mendel algorithm)

• Distance-based methods and case-based methods (e.g., nearest neighbors, hierarchical clustering [minimum spanning trees], k-means, self-organizing maps, LVQ)

The Apriori algorithm, for instance, is specific to association rules.
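The level-wise principle behind Apriori (an itemset can only be frequent if all of its subsets are frequent) fits in a short, unoptimized Python sketch. Support is taken as the fraction of transactions containing an itemset, and the confidence of a rule A → B as support(A ∪ B) / support(A); the thresholds and function names are illustrative assumptions, and production implementations add many optimizations omitted here.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset with support >= min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        return sum(itemset <= b for b in baskets) / n

    # Level 1: frequent single items.
    items = {item for b in baskets for item in b}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in current}

    k = 2
    while current:
        # Join step: combine frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: drop candidates having an infrequent (k-1)-subset.
        candidates = {
            c for c in candidates
            if all(frozenset(sub) in current for sub in combinations(c, k - 1))
        }
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update({c: support(c) for c in current})
        k += 1
    return frequent


def association_rules(frequent, min_confidence):
    """Yield (antecedent, consequent, support, confidence) for rules meeting min_confidence."""
    for itemset, supp in frequent.items():
        for r in range(1, len(itemset)):
            for antecedent in map(frozenset, combinations(itemset, r)):
                # Every subset of a frequent itemset is frequent, so it is in `frequent`.
                confidence = supp / frequent[antecedent]
                if confidence >= min_confidence:
                    yield antecedent, itemset - antecedent, supp, confidence
```

With five small baskets of milk, bread, and butter and a minimum support of 0.5, only {milk, bread} survives as a frequent pair, giving the rules milk → bread and bread → milk with confidence 0.75 each.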

So many techniques exist for solving a few tasks because no technique is better than all the others for every possible problem. Techniques also differ in other features: some produce numerical models while others can be expressed in the form of rules, and some are quick while others are quite slow. Hence, it is useful to try an assortment of techniques on a particular problem and to retain the one that gives the best model.

Model Evaluation, Interpretation, and Deployment

Any data mining technique generates a tentative model, a hypothesis, which must be assessed before even thinking about using it. Furthermore, if we use several samples of the same data or several algorithms to solve the same task, we will obtain several models and will have to choose among them. There are many evaluation techniques and metrics. Metrics usually quantify the validity, predictive power, or reliability of the model in terms of the expected value of some defined error or cost. The appropriate metrics depend on the data mining task. For instance, a classification model can be evaluated with metrics such as accuracy, cost, precision/recall, area under the ROC (receiver operating characteristic) curve, log loss, and many others. A regression model is evaluated with other metrics, such as squared error and absolute error. Association rules are usually evaluated by their support and confidence.
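Several of the metrics named above reduce to a few lines of arithmetic. The following sketch, with hypothetical function names, computes accuracy, precision, recall, and the area under the ROC curve for a binary classifier, plus squared and absolute error for regression. The AUC is computed through its rank interpretation: the probability that a randomly chosen positive case is scored above a randomly chosen negative one, counting ties as one half.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive=1):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def roc_auc(y_true, scores, positive=1):
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for t, s in zip(y_true, scores) if t == positive]
    neg = [s for t, s in zip(y_true, scores) if t != positive]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def mean_squared_error(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Accuracy and precision/recall need hard class predictions, whereas the AUC needs a score or probability per case, which is why a classifier is often evaluated on both kinds of output.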

To estimate a metric reliably, it is well known that the data used for training should not also be used for evaluation. Several techniques address this (e.g., train-and-test evaluation, cross-validation, bootstrapping). Together, an appropriate evaluation metric and evaluation technique are needed to detect and avoid overfitting (i.e., lack of generality), a typical problem of learned models.
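Cross-validation, combined with the practice of trying several techniques and keeping the best, can be sketched as follows. This is a minimal illustration under stated assumptions: the two "techniques" compared are a majority-class baseline and a 1-nearest-neighbor classifier, chosen only for brevity; fold assignment uses a fixed random seed; and all names are hypothetical.

```python
import random
from collections import Counter


def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and deal them into k folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def train_majority(X_train, y_train):
    """Baseline technique: always predict the most frequent training label."""
    label = Counter(y_train).most_common(1)[0][0]

    def predict(x):
        return label

    return predict


def train_1nn(X_train, y_train):
    """1-nearest-neighbor: predict the label of the closest training point (squared Euclidean)."""
    def predict(x):
        dists = [sum((a - b) ** 2 for a, b in zip(x, xt)) for xt in X_train]
        return y_train[dists.index(min(dists))]

    return predict


def cross_val_accuracy(train_fn, X, y, k=5, seed=0):
    """Mean held-out accuracy; each fold is tested on a model trained without it."""
    scores = []
    for test_idx in k_fold_indices(len(X), k, seed):
        held_out = set(test_idx)
        train_idx = [i for i in range(len(X)) if i not in held_out]
        model = train_fn([X[i] for i in train_idx], [y[i] for i in train_idx])
        correct = sum(model(X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)


# Toy data: two well-separated groups of 2-D points.
X = [(0, 0), (0, 1), (1, 0), (1, 1), (0.5, 0.5),
     (5, 5), (5, 6), (6, 5), (6, 6), (5.5, 5.5)]
y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

candidates = {"majority": train_majority, "1-NN": train_1nn}
results = {name: cross_val_accuracy(fn, X, y) for name, fn in candidates.items()}
best = max(results, key=results.get)
```

Because every test fold is excluded from the corresponding training set, the estimate reflects performance on unseen data; scoring the 1-NN model on its own training points would trivially report perfect accuracy, which is exactly the overfitting trap described above.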

Once the model has been evaluated as feasible, it is important to interpret it and analyze its consequences. Is it comprehensible? Is it novel? Is it consistent with our previous knowledge? Is it useful? Can it be put into practice? All these questions relate to the original goal of KDD: to obtain nontrivial, previously unknown, comprehensible, and potentially useful knowledge.

Next, if the model is novel and useful, we will want to apply it and disseminate it throughout the organization. This deployment can be done manually or by embedding the model in software applications. For this purpose, standards for exporting and importing data mining models, such as the Predictive Model Markup Language (PMML) defined by the Data Mining Group (see http://www.dmg.org for more information), can be of great help.
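As an illustration of model export, the sketch below writes a linear regression model as a PMML document using Python's standard `xml.etree.ElementTree`. The element and attribute names (`DataDictionary`, `RegressionModel`, `MiningSchema`, `RegressionTable`, `NumericPredictor`, `usageType="target"`) reflect our reading of the PMML 4.4 regression model schema; treat the output as a starting point and validate it against the DMG schema before feeding it to a PMML consumer. The function name and the field names in the usage note are hypothetical.

```python
import xml.etree.ElementTree as ET

PMML_NS = "http://www.dmg.org/PMML-4_4"


def _tag(name):
    """Qualify an element name with the PMML namespace ({uri}name form)."""
    return f"{{{PMML_NS}}}{name}"


def linear_model_to_pmml(intercept, coefficients, target):
    """Serialize y = intercept + sum(coef * x) as a PMML RegressionModel document string."""
    # Emit PMML elements without a prefix (note: this updates ElementTree's global registry).
    ET.register_namespace("", PMML_NS)

    root = ET.Element(_tag("PMML"), version="4.4")
    ET.SubElement(root, _tag("Header"), description="Illustrative linear model")

    # Declare every input field and the target as continuous doubles.
    fields = list(coefficients) + [target]
    dictionary = ET.SubElement(root, _tag("DataDictionary"), numberOfFields=str(len(fields)))
    for name in fields:
        ET.SubElement(dictionary, _tag("DataField"), name=name,
                      optype="continuous", dataType="double")

    model = ET.SubElement(root, _tag("RegressionModel"), functionName="regression")
    schema = ET.SubElement(model, _tag("MiningSchema"))
    for name in coefficients:
        ET.SubElement(schema, _tag("MiningField"), name=name)
    ET.SubElement(schema, _tag("MiningField"), name=target, usageType="target")

    # The regression equation itself: intercept plus one coefficient per input field.
    table = ET.SubElement(model, _tag("RegressionTable"), intercept=repr(float(intercept)))
    for name, coef in coefficients.items():
        ET.SubElement(table, _tag("NumericPredictor"), name=name, coefficient=repr(float(coef)))

    return ET.tostring(root, encoding="unicode")
```

For example, `linear_model_to_pmml(1.5, {"market_price": -0.8}, "weekly_quantity")` returns an XML string that another PMML-aware application could, in principle, load and score without access to the code that trained the model.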

Last but not least, we must remember that knowledge is always provisional. We must therefore monitor the models against new incoming data and knowledge, and revise or regenerate them when they are no longer valid.
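Monitoring can be as simple as tracking a deployed model's accuracy on newly labelled cases and flagging it for revision when performance drops. The sliding-window rule, the tolerance, and the class name below are hypothetical choices for illustration; real deployments may also watch for shifts in the distribution of the input data.

```python
from collections import deque


class ModelMonitor:
    """Track a deployed model's accuracy on recent labelled cases and flag when to revise it."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        self.baseline = baseline_accuracy  # accuracy measured at evaluation time
        self.tolerance = tolerance         # acceptable drop before raising a flag
        self.recent = deque(maxlen=window) # 1 = correct prediction, 0 = wrong

    def record(self, prediction, actual):
        self.recent.append(1 if prediction == actual else 0)

    def current_accuracy(self):
        return sum(self.recent) / len(self.recent) if self.recent else None

    def needs_revision(self):
        """True once the window is full and accuracy fell below baseline minus tolerance."""
        if len(self.recent) < self.recent.maxlen:
            return False
        return self.current_accuracy() < self.baseline - self.tolerance
```

Waiting for a full window before judging avoids raising a flag on the first few, statistically unreliable, observations.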

FUTURE TRENDS

Current and future areas of research are manifold. First, KDD can be specialized depending on the kind of data handled. Data mining has been applied not only to structured data, usually in the form of relational databases, but also to other, heterogeneous kinds of data (e.g., semistructured data, text, Web, multimedia, geographical data). This has given rise to specific areas of KDD such as Web mining (Kosala & Blockeel, 2000), text mining (Berry, 2003), and multimedia mining (Simoff, Djeraba, & Zaïane, 2002). Intensive research is being done in these areas.

Other, more general areas of research include dealing with specific, heterogeneous or multirelational data, the latter known as relational data mining (Dzeroski & Lavrac, 2001), data mining query languages, data mining standards, comprehensibility, scalability, tool automation and user friendliness, integration with data warehouses, and data preparation (Domingos & Hulten, 2001; Roddick, 1998; Srikant, 2002; Smyth, 2001). The progress in all these areas will make KDD an even more ubiquitous and indispensable database technology than it is today.

CONCLUSION

This article has shown the main features of the KDD process: goals, stages, and techniques. KDD belongs to the broader group of analytical, decision-support, and business intelligence tools that use the information contained in databases to support strategic decisions, such as OLAP, EIS, DSS, or more classical reporting tools. However, we have shown that the most distinctive trait of KDD is its inductive character, which converts information into models, and data into knowledge.

There are many other important issues around KDD not discussed here. The appearance of data mining software provided the final boost for KDD. During the 1990s, many companies and organizations released specific data mining algorithms and general-purpose tools, known as data mining suites. Although the tools initially focused on integrating an assortment of data mining techniques, such as decision trees, neural networks, and logistic regression, today's suites incorporate techniques for all the stages of the KDD process, including connectors to external data sources, data preparation tools, and evaluation and interpretation tools. Additionally, data mining suites, whether vendor-specific or general, are increasingly integrated with DBMSs and other decision support tools, and this trend will be reinforced in the future.

REFERENCES

Berry, M. J. A., & Linoff, G. S. (2004). Data mining techniques: For marketing, sales, and customer relationship management (2nd Ed.). New York: Wiley.

Berry, M. W. (2003). Survey of text mining: Clustering, classification, and retrieval. New York: Springer-Verlag.

Berthold, M., & Hand, D. J. (Eds.). (2002). Intelligent data analysis: An introduction (2nd ed.). Berlin: Springer.

Domingos, P., & Hulten, G. (2001). Catching up with the data: Research issues in mining data streams. Workshop on Research Issues in Data Mining and Knowledge Discovery.

Dunham, M. H. (2003). Data mining: Introductory and advanced topics. Upper Saddle River, NJ: Prentice Hall.

Dzeroski, S., & Lavrac, N. (2001). Relational data mining. Berlin: Springer.

Fayyad, U., Piatetsky-Shapiro, G., Smyth, P., & Uthurusamy, R. (1996). Advances in knowledge discovery and data mining. Cambridge, MA: MIT Press.

Fayyad, U. M., Grinstein, G., & Wierse, A. (2001). Information visualization in data mining and knowledge discovery. San Francisco, CA: Morgan Kaufmann.

Han, J., & Kamber, M. (2001). Data mining: Concepts and techniques. San Francisco, CA: Morgan Kaufmann.

Hand, D. J., Mannila, H., & Smyth, P. (2000). Principles of data mining. Cambridge, MA: MIT Press.

Kosala, R., & Blockeel, H. (2000). Web mining research: A survey. ACM SIGKDD Explorations, Newsletter of the ACM Special Interest Group on Knowledge Discovery and Data Mining, 2, 1-15.

Mitchell, T. M. (1997). Machine learning. New York: McGraw-Hill.

Roddick, H. (1998). Data warehousing and data mining: Are we working on the right things? In Y. Kambayashi, D. K. Lee, E.-P. Lim, Y. Masunaga, & M. Mohania (Eds.), Advances in database technologies: Lecture notes in computer science, 1552 (pp. 141-144). Berlin, Germany: Springer-Verlag.

Simoff, S. J., Djeraba, C., & Zaïane, O. R. (2002). MDM/KDD 2002: Multimedia data mining between promises and problems. SIGKDD Explorations, 4(2), 118-121.

Smyth, P. (2001). Breaking out of the black-box: Research challenges in data mining. Workshop on Research Issues in Data Mining and Knowledge Discovery.

Srikant, R. (2002). New directions in data mining. Workshop on Research Issues in Data Mining and Knowledge Discovery.

Witten, I. H., & Frank, E. (1999). Tools for data mining. San Francisco, CA: Morgan Kaufmann.


KEY TERMS

Association Rule: A rule showing the association between two or more nominal attributes. Associations can be directed or undirected. For instance, a rule of the form “If the customer buys French fries and hamburgers, she or he buys ketchup” is a directed association rule. The techniques for learning association rules are specific, and many of them, such as the Apriori algorithm, are based on the idea of finding frequent itemsets in the data.
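The level-wise search for frequent itemsets that underlies the Apriori algorithm can be sketched in a few lines of Python. This is a minimal, unoptimized sketch under stated assumptions: the shopping baskets are hypothetical, the function names `apriori` and `confidence` are illustrative rather than taken from any library, and support is measured as the fraction of transactions that contain an itemset. The confidence of a directed rule X → Y is computed as support(X ∪ Y) / support(X).

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support} for every itemset whose support is >= min_support."""
    baskets = [frozenset(t) for t in transactions]
    n = len(baskets)

    def support(itemset):
        # Fraction of transactions that contain every item of the itemset.
        return sum(1 for b in baskets if itemset <= b) / n

    # Level 1: frequent single items.
    items = {item for b in baskets for item in b}
    level = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in level}
    k = 2
    while level:
        # Join step: merge frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step: keep a candidate only if all its (k-1)-subsets are frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in frequent for s in combinations(c, k - 1))}
        level = {c for c in candidates if support(c) >= min_support}
        frequent.update((c, support(c)) for c in level)
        k += 1
    return frequent


def confidence(frequent, antecedent, consequent):
    """Confidence of antecedent -> consequent (both sides together must be frequent)."""
    a = frozenset(antecedent)
    return frequent[a | frozenset(consequent)] / frequent[a]


# Hypothetical shopping baskets.
transactions = [
    {"fries", "hamburger", "ketchup"},
    {"fries", "hamburger", "ketchup", "cola"},
    {"fries", "hamburger"},
    {"hamburger", "cola"},
    {"fries", "ketchup"},
]
frequent = apriori(transactions, min_support=0.4)
rule_conf = confidence(frequent, {"fries", "hamburger"}, {"ketchup"})
print(f"fries, hamburger -> ketchup: confidence {rule_conf:.2f}")  # confidence 0.67
```

The prune step is what makes the approach practical: an itemset can only be frequent if all of its subsets are, so many candidates are discarded without scanning the data. Production implementations also avoid rescanning every transaction per candidate; this sketch favors readability over speed.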

Classification Model: A pattern or set of patterns that allows a new instance to be mapped to one or more classes. Classification models (also known as classifiers) are learned from data in which a special attribute is selected as the “class.” For instance, a model that classifies customers as likely or unlikely to sign a mortgage is a classification model. Classification models can be learned by many different techniques: decision trees, neural networks, support vector machines, linear and nonlinear discriminants, nearest neighbors, logistic models, Bayesian, fuzzy, and genetic techniques, and so forth.
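As an illustration of one technique from this list, a nearest-neighbor classifier for the mortgage example can be sketched as follows. The customer attributes (age and annual income in thousands), the labels, and the function name are hypothetical, and the attributes are left unscaled for simplicity; a practical model would normally normalize them so that no attribute dominates the distance.

```python
import math


def nearest_neighbor_classify(training, instance):
    """1-nearest-neighbor: predict the class of the closest training example."""
    _, label = min(training, key=lambda example: math.dist(example[0], instance))
    return label


# Hypothetical training data: (age, annual income in thousands) -> class attribute.
training = [
    ((25, 20), "unlikely"),
    ((32, 55), "likely"),
    ((45, 80), "likely"),
    ((22, 15), "unlikely"),
    ((60, 30), "unlikely"),
]
print(nearest_neighbor_classify(training, (35, 60)))  # likely
print(nearest_neighbor_classify(training, (24, 18)))  # unlikely
```

Here "learning" amounts to storing the labeled examples; the work happens when a new instance is mapped to the class of its closest stored neighbor.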

Clustering Model: A pattern or set of patterns that allows examples to be separated into groups. All attributes are treated equally and no attribute is selected as “output.” The goal is to find “clusters” such that elements in the same cluster are similar to one another but different from elements of other clusters. For instance, a model that groups employees according to several features is a clustering model. Clustering models can be learned by many different techniques: k-means, minimum spanning trees (dendrograms), neural networks, Bayesian, fuzzy, and genetic techniques, and so forth.
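The k-means technique mentioned above can be sketched as follows: each point is assigned to its nearest centroid, each centroid is then moved to the mean of its points, and the two steps repeat until the centroids stop changing. The employee features (years of experience, salary in thousands), the choice of k = 2, and the seeded random initialization are assumptions made for illustration.

```python
import math
import random


def k_means(points, k, iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on tuples of numbers."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k randomly chosen input points
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: the assignments will no longer change
        centroids = new_centroids
    return centroids, clusters


# Hypothetical employees: (years of experience, salary in thousands).
employees = [(1, 30), (2, 32), (1.5, 31), (10, 80), (11, 85), (12, 82)]
centroids, clusters = k_means(employees, k=2)
for centroid, members in zip(centroids, clusters):
    print(centroid, members)
```

Note that no attribute plays the role of an output: every coordinate contributes equally to the distance, which is why unscaled attributes with large ranges (such as salary here) tend to dominate the grouping.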


Data Mining: The central stage in the process of knowledge discovery from databases. This stage transforms data, usually in the form of a “minable view,” into some kind of model (e.g., decision tree, neural network, linear or nonlinear equation). Because of its central place in the KDD process and its catchy name, the term is frequently used as a synonym for the whole KDD process.

Machine Learning: A discipline in computer science, generally considered a subpart of artificial intelligence, which develops paradigms and techniques for making computers learn autonomously. There are several types of learning: inductive, abductive, and by analogy. Data mining integrates many techniques from inductive learning, which is devoted to learning general models from data.

Minable View: This term refers to the view constructed from the data repository that is passed to the data mining algorithm. This minable view must include all the relevant features for constructing the model.
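A minable view is often built with ordinary SQL. The sketch below uses Python's built-in `sqlite3` module and hypothetical `customer` and `purchase` tables to flatten the repository into one row per customer, carrying the attributes a mining algorithm would consume. The table names, columns, and derived features are illustrative assumptions; which features are actually relevant depends on the model being built.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (id INTEGER PRIMARY KEY, age INTEGER, region TEXT);
CREATE TABLE purchase (customer_id INTEGER, amount REAL);
INSERT INTO customer VALUES (1, 34, 'north'), (2, 51, 'south'), (3, 27, 'north');
INSERT INTO purchase VALUES (1, 20.0), (1, 35.5), (2, 12.0);

-- Minable view: one row per customer, including derived features.
CREATE VIEW minable_view AS
SELECT c.id, c.age, c.region,
       COUNT(p.customer_id)       AS n_purchases,
       COALESCE(SUM(p.amount), 0) AS total_spent
FROM customer c
LEFT JOIN purchase p ON p.customer_id = c.id
GROUP BY c.id, c.age, c.region;
""")

rows = conn.execute("SELECT * FROM minable_view ORDER BY id").fetchall()
for row in rows:
    print(row)
```

The `LEFT JOIN` keeps customers with no purchases, so the view still contains one row per customer; dropping them silently would bias any model learned from it.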

Overfitting: A frequent phenomenon associated with learning, in which models do not generalize sufficiently. A model can be overfitted to the training data and perform badly on fresh data. This means that the model has internalized not only the regularities (patterns) but also the irregularities of the training data (e.g., noise), which are useless for future data.
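The effect can be reproduced with a toy example, assuming hypothetical data generated from y = 2x plus a little noise. A polynomial that passes through every training point (Lagrange interpolation) memorizes the noise and reaches zero training error, while a least-squares straight line keeps only the regularity; on fresh data from the same relation, the line is far more accurate. The data and function names are illustrative.

```python
def fit_line(xs, ys):
    """Least-squares straight line; returns the fitted model as a function."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    intercept = my - slope * mx
    return lambda x: intercept + slope * x


def interpolate(xs, ys):
    """Lagrange polynomial through every training point: zero training error."""
    def model(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return model


def mse(model, xs, ys):
    """Mean squared error of a model on the given data."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data from y = 2x plus noise of at most 0.5.
train_x, train_y = [0, 1, 2, 3, 4], [0.0, 2.5, 3.5, 6.5, 7.5]
fresh_x, fresh_y = [5, 6], [10.0, 12.0]

line = fit_line(train_x, train_y)
poly = interpolate(train_x, train_y)
for name, model in (("line", line), ("polynomial", poly)):
    print(name, "train MSE:", round(mse(model, train_x, train_y), 3),
          "fresh MSE:", round(mse(model, fresh_x, fresh_y), 3))
```

The polynomial's perfect fit on the training data is exactly the warning sign the definition describes: its extra flexibility is spent reproducing the noise.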

Regression Model: A pattern or set of patterns that allows a new instance to be mapped to one numerical value. Regression models are learned from data where a special attribute is selected as the output or dependent value. For instance, a model that predicts the sales of the forthcoming year from the sales of the preceding years is a regression model. Regression models can be learned by many different techniques: linear regression, local linear regression, parametric and nonparametric regression, neural networks, and so forth.
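The sales example can be sketched with ordinary least squares, the simplest of the linear regression techniques listed above. The yearly figures are hypothetical, and fitting a straight line against the year is an assumption chosen for simplicity; real sales series often need seasonal or nonlinear terms.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical yearly sales: the output, or dependent value.
years = [2000, 2001, 2002, 2003, 2004]
sales = [100.0, 112.0, 119.0, 131.0, 138.0]

intercept, slope = fit_line(years, sales)
forecast = intercept + slope * 2005
print(f"slope {slope:.1f} per year, forecast for 2005: {forecast:.1f}")  # 9.5, 148.5
```

The output attribute (sales) is numerical, which is what distinguishes this regression model from the classification model above, where the output is a class.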


Knowledge Management in Tourism


Daniel Xodo

Universidad Nacional del Centro de la Provincia de Buenos Aires, Argentina

Héctor Oscar Nigro

Universidad Nacional del Centro de la Provincia de Buenos Aires, Argentina

INTRODUCTION

Management depends greatly on knowledge: its detection, creation, and transmission, together with a number of intangibles, play a fundamental role in success.

In tourism, information systems must work on immaterial concepts and take steps to satisfy the expectations of multiple potential customers. These systems require complex models of reality and suitable conceptual tools to work out strategies.

This article discusses the use of different mathematical and management techniques that can be applied to the modeling, application, and control of strategic and operational management.

BACKGROUND

Data, Information, and Knowledge

These three concepts, which are frequently used as synonyms, are different stages in the representation of reality, and apprehending each of them enables us in different ways.

Data, as simple representations, do not tell us much about themselves unless they are related to other data. It is from this relation that we get information.

Information is useful because of the possibility of intervention (e.g., cause–effect relationships), which is provided by knowledge.

What is knowledge? Knowledge can be understood as representation, production, or estate (Marakas, 1999). Under this definition, any of the aforementioned concepts is, generically, knowledge. The definition of knowledge will depend on the usage and application of the term.

If we consider knowledge as “behavior support” (Lopez, 1999), that is, as the sharing of perceptions in a socioeconomic system through knowledge of goals, means, and the evaluation of accomplishments, those goals will be easier to achieve effectively and efficiently.

If we consider knowledge taxonomically, we could derive, by means of appropriate processes, a linguistic and assimilative level of conceptualization and shared understanding from a primary level (descriptive, procedural, and rational). Hence, the organization’s chances of management success will improve. Concepts such as “mission” are hard to explain, because their vagueness allows a given mission to appeal to many people, each of whom gives it a meaning that does not always coincide with that of the others. The same happens with the understanding of different functions, qualities, or behaviors that must be shared by numerous actors.

The information systems meant to communicate knowledge vary according to what we want to transmit. However, it is always necessary to reduce ambiguity and unify concepts.

The following four types of formal systems can be distinguished (Simon, 1995):

a. of beliefs

b. of limit setting

c. of diagnosis control

d. of interactive control

In any of these, knowledge can be represented, generated, or established in different states, according to its usage, relationship, and application. For example, a certain number of guests in a hotel may mean little on its own, but if we add the hotel’s capacity, the date, and the hotel’s category, that number takes on different meanings for the owner, a competitor, or a new guest. These meanings will be influenced by, among other factors, the vision, the knowledge, and the expectations of the people involved. In this way, we build up representation models whose complexity will favor or damage our decisions according to our ability to deal with them.

Knowledge as Representation: The Indicators

If we take the concept “intellectual capital” to mean useful knowledge, which we develop “increasing the human capital” (Olve, Roy, & Wetter, 2000), it is obvious that people’s knowledge varies from individual to

Copyright © 2006, Idea Group Inc., distributing in print or electronic forms without written permission of IGI is prohibited.
