3. Measuring information: the bit

In any communication system, the message produced by the source is one of several possible messages. The receiver knows what these possibilities are but does not know which one has been selected. Shannon observed that any such message can be represented by a sequence of fundamental units called bits, each of which is either a 0 or a 1. The number of bits required for a message depends on the number of possible messages: the more possible messages (and hence the more initial uncertainty at the receiver), the more bits are required.

As a simple example, suppose a coin is flipped and the outcome (heads or tails) is to be communicated to a person in the next room. The outcome can be represented using one bit of information: 0 for heads and 1 for tails. Similarly, the outcome of a football game might be represented with one bit: 0 if the home team loses and 1 if the home team wins. These examples emphasize one of the limitations of information theory: it cannot measure (and does not attempt to measure) the meaning or the importance of a message. It takes the same amount of information to distinguish heads from tails as it does to distinguish a win from a loss: one bit.

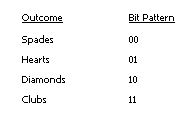

For an example with more than two outcomes, more bits are required. Suppose a playing card is chosen at random from a 52-card deck, and the suit chosen (hearts, spades, clubs, or diamonds) is to be communicated. Communicating the chosen suit (one of four possible messages) requires two bits of information, using the following simple scheme:

| Suit | Bits |
|---|---|
| Hearts | 00 |
| Spades | 01 |
| Clubs | 10 |
| Diamonds | 11 |
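The relationship between the number of possible messages and the number of bits can be sketched in a few lines of Python. This is only an illustration: the function names `bits_needed` and `fixed_length_code` are hypothetical, and the particular assignment of bit patterns to suits is an arbitrary choice (any one-to-one assignment works equally well).

```python
def bits_needed(num_messages: int) -> int:
    """Smallest number of whole bits that gives each message its own pattern."""
    if num_messages < 1:
        raise ValueError("need at least one possible message")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, without float rounding.
    return (num_messages - 1).bit_length()


def fixed_length_code(messages):
    """Assign each message a distinct bit string of the same length."""
    width = bits_needed(len(messages))
    return {m: format(i, f"0{width}b") if width else ""
            for i, m in enumerate(messages)}


print(bits_needed(2))   # 1  (heads or tails)
print(bits_needed(4))   # 2  (four suits)
print(fixed_length_code(["hearts", "spades", "clubs", "diamonds"]))
# {'hearts': '00', 'spades': '01', 'clubs': '10', 'diamonds': '11'}
```

Doubling the number of possible messages adds exactly one bit, which is why two outcomes need one bit and four outcomes need two.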

4. Information content and entropy

A fundamental problem in information theory is to find the minimum average number of bits needed to represent a particular message selected from a set of possible messages. Shannon solved this problem by using the notion of entropy. The word entropy is borrowed from physics, in which entropy is a measure of the disorder of a group of particles. In information theory, disorder implies uncertainty and, therefore, information content, so entropy describes the amount of information in a given message. Entropy also describes the average information content of all the potential messages of a source. This value is useful when, as is often the case, some messages from a source are more likely to be transmitted than others.

A. Information Content of a Message

If there are a number of possible messages, then each one can be expected to occur a certain fraction of the time. This fraction is called the probability of the message. For example, if an ordinary coin is tossed, the two outcomes, heads or tails, will each occur with probability ½. Similarly, if a card is selected at random from a 52-card deck, each of the four suits will occur with probability ¼.

Shannon determined that the information content of a message is inversely related to its probability of occurrence: the more unlikely a message is, the more information it contains. Based on this concept, the exact information value of a message can be determined mathematically. If the probability of a given outcome is denoted by p, then Shannon defined the information content (I) of that message, measured in bits, to be the base-2 logarithm ($\log_2$) of the reciprocal of p. (The base-2 logarithm of a number is the exponent to which 2 must be raised to obtain that number; $\log_2 8 = 3$, for example, because $2^3 = 8$.) The relation between information content and probability can be represented by the equation $I = \log_2(1/p)$.

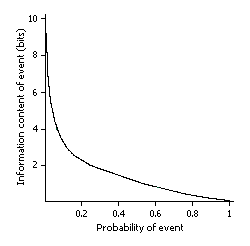
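The definition translates directly into code. The following is a minimal sketch, assuming probabilities are given as ordinary floating-point numbers; the function name `information_content` is chosen here for illustration and does not come from the text.

```python
import math


def information_content(p: float) -> float:
    """Information content, in bits, of an outcome with probability p."""
    if not 0 < p <= 1:
        # p = 0 is excluded: log2(1/p) grows without bound as p approaches 0.
        raise ValueError("p must satisfy 0 < p <= 1")
    return math.log2(1 / p)


print(information_content(1))      # 0.0  (a certain event carries no information)
print(information_content(1 / 2))  # 1.0  (a coin flip)
print(information_content(1 / 4))  # 2.0  (one suit out of four)
```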

The graph below plots this function. The horizontal axis shows the probability p, a number between 0 (an impossible event) and 1 (a certain event). The vertical axis shows the information content I, in bits.

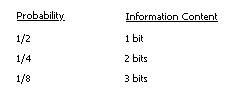

As can be seen in the graph, the less likely or probable an event, the more information it conveys. Here is a short table giving several values of p and the corresponding information content I:

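| Probability p | Information content I (bits) |
|---|---|
| 1 | 0 |
| 1/2 | 1 |
| 1/4 | 2 |
| 1/8 | 3 |
| 1/16 | 4 |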

The more equally likely possibilities a source has, the more information each message carries and, therefore, the more bits are needed to represent it. For example, if a card is picked at random from a 52-card deck and the exact value of the card is to be communicated, then each possibility has probability 1/52. According to the formula $I = \log_2(1/p)$, each possible outcome has an information content of $\log_2 52$, which is approximately 5.70044 bits.
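The figure for the 52-card deck can be checked by hand. The change of base to natural logarithms used below is a standard identity rather than part of the original argument:

$$
I = \log_2\frac{1}{p} = \log_2\frac{1}{1/52} = \log_2 52 = \frac{\ln 52}{\ln 2} \approx 5.70044 \text{ bits}
$$

As a sanity check, $2^5 = 32 < 52 < 64 = 2^6$, so the result must lie between 5 and 6 bits.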

B. Entropy of a Source

The entropy of a source is the average information content from all the possible messages. In the example of drawing a card from a 52-card deck, each card has the same chance of being drawn. Since each outcome is equally likely, the entropy of this source is also 5.70044 bits. However, the information content from most sources is more likely to vary from message to message. Information content can vary because some messages may have a better chance of being sent than others have. Messages that are unlikely convey the most information, while messages that are highly probable convey less information.

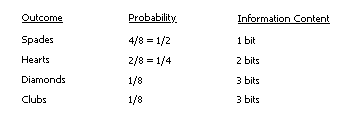
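Averaging the information content over all messages, with each message weighted by its probability, can be sketched as follows. The function name `entropy` and the input format (a list of probabilities that sum to 1) are assumptions made for this illustration.

```python
import math


def entropy(probabilities) -> float:
    """Average information content, in bits, of a source with these probabilities."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Each message contributes its information content log2(1/p), weighted by p.
    # Outcomes with p = 0 never occur and contribute nothing to the average.
    return sum(p * math.log2(1 / p) for p in probabilities if p > 0)


fair_coin = [1 / 2, 1 / 2]
uniform_deck = [1 / 52] * 52

print(entropy(fair_coin))               # 1.0
print(round(entropy(uniform_deck), 5))  # 5.70044
```

When every message is equally likely, the average equals the information content of any single message, which is why the 52-card deck gives the same 5.70044 bits in both calculations.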

The following example demonstrates the entropy of a source when the outcomes are not equally likely. Consider a deck of 8 cards, consisting of 4 spades, 2 hearts, 1 diamond, and 1 club. The four suits are no longer equally likely to be drawn. The probability of drawing each suit and the corresponding information content are listed in the following table:

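| Suit | Probability p | Information content I (bits) |
|---|---|---|
| Spades | 1/2 | 1 |
| Hearts | 1/4 | 2 |
| Diamonds | 1/8 | 3 |
| Clubs | 1/8 | 3 |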

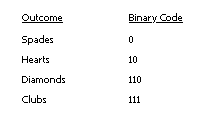

In this case the entropy, or average information content, of the source is exactly (1/2 × 1) + (1/4 × 2) + (1/8 × 3) + (1/8 × 3) = 1.75 bits. Even though there are four possible messages, some are more likely than others, so on average it takes fewer than two bits to identify the suit drawn. To see how to represent this particular source using an average of 1.75 bits, consider the following binary code:

| Suit | Code |
|---|---|
| Spades | 0 |
| Hearts | 10 |
| Diamonds | 110 |
| Clubs | 111 |

Here the most likely outcome (spades) is represented by a short code and the least likely outcomes are represented by longer codes. Weighting each code length by its probability gives an average of exactly 1.75 bits per message, which equals the source entropy. Information theory states that no further improvement is possible: no uniquely decodable code can use fewer bits on average.
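The claim about the average can be checked with a short sketch. The codewords used here (0, 10, 110, 111) are an assumption: they are one assignment that matches the description of short codes for likely suits and longer codes for unlikely ones, with no codeword being the beginning of another. The helper names are likewise hypothetical.

```python
from fractions import Fraction

# The 8-card deck from the text: 4 spades, 2 hearts, 1 diamond, 1 club.
probabilities = {
    "spades": Fraction(4, 8),
    "hearts": Fraction(2, 8),
    "diamonds": Fraction(1, 8),
    "clubs": Fraction(1, 8),
}

# Assumed codewords (illustrative): no codeword is a prefix of another.
code = {"spades": "0", "hearts": "10", "diamonds": "110", "clubs": "111"}


def average_length(probabilities, code):
    """Expected number of bits per message: each length weighted by its probability."""
    return sum(p * len(code[s]) for s, p in probabilities.items())


def encode(symbols, code):
    """Concatenate the codewords of a sequence of messages."""
    return "".join(code[s] for s in symbols)


def decode(bits, code):
    """Read bits left to right, emitting a message whenever a codeword completes."""
    inverse = {word: symbol for symbol, word in code.items()}
    symbols, current = [], ""
    for bit in bits:
        current += bit
        if current in inverse:
            symbols.append(inverse[current])
            current = ""
    if current:
        raise ValueError("trailing bits do not form a complete codeword")
    return symbols


print(float(average_length(probabilities, code)))  # 1.75

draws = ["spades", "hearts", "clubs", "spades", "diamonds"]
bits = encode(draws, code)
print(bits)                         # 0101110110
print(decode(bits, code) == draws)  # True
```

Because no codeword begins another, the receiver can split the bit stream back into messages without any separators, which is what makes the shorter average usable in practice.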