Лупин / Lectures_2007 / Introduction to Parallel Programming

OpenMP* Programming Model:

Fork-Join Parallelism:

Master thread spawns a team of threads as needed.

Parallelism is added incrementally until performance goals are met, i.e. the sequential program evolves into a parallel program.

[Figure: fork-join parallelism. The master thread (in red) forks a team of threads at each parallel region; one of the parallel regions contains a nested parallel region.]

*Other names and brands may be claimed as the property of others.

OpenMP* Basic Defs: Solution Stack

User layer: End User

Prog. layer: Application; Directives and Compiler, OpenMP library, Environment variables

System layer: OpenMP Runtime library; OS/system support for shared memory and threading

HW: Proc1, Proc2, Proc3, ..., ProcN over a Shared Address Space


Programming with OpenMP*

The directives are where the real work of OpenMP occurs.

To create a team of threads

#pragma omp parallel
{
    // each thread executes the statements in this block
}

To create a team of threads AND split up a loop's iterations among the threads in the team:

#pragma omp parallel for
for (i = 0; i < N; i++)
{
    // each thread gets a subset of the loop iterations
}

Clauses modify the directives:

– private(list): for each variable in the list, create a private copy, one for each thread.

– reduction(op : list): each thread computes a local value, and the local values are then combined with op into a single shared value.


OpenMP* Pi Program:

Parallel for with a reduction

#include <omp.h>
#include <stdio.h>

static long num_steps = 100000;
double step;

#define NUM_THREADS 2

int main(void)
{
    int i;
    double x, pi, sum = 0.0;

    step = 1.0/(double) num_steps;

    omp_set_num_threads(NUM_THREADS);

    // Each thread sums into a private copy of sum; the copies are added at the end.
    // x is private so that threads do not overwrite each other's midpoint.
    #pragma omp parallel for reduction(+:sum) private(x)
    for (i = 1; i <= num_steps; i++) {
        x = (i-0.5)*step;
        sum = sum + 4.0/(1.0+x*x);
    }

    pi = step * sum;
    printf("pi = %f\n", pi);
    return 0;
}
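The loop evaluates the midpoint rule for a standard integral identity. Writing `num_steps` as N and `step` as Δx, the i-th term is the integrand at the midpoint of the i-th interval:

```latex
\pi = \int_0^1 \frac{4}{1+x^2}\,dx
    = 4\left[\arctan x\right]_0^1
    \approx \Delta x \sum_{i=1}^{N} \frac{4}{1+x_i^2},
\qquad x_i = \left(i - \tfrac{1}{2}\right)\Delta x,
\qquad \Delta x = \frac{1}{N}
```

Every term depends only on its own i, so the sum can be split across threads in any way and recombined with `reduction(+:sum)`.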


Outline

Parallel programming, wetware, and software

Parallel programming APIs

–Thread Libraries

–Win32 API

–POSIX threads

–Compiler Directives

–OpenMP*

–Message Passing

– MPI


More complicated examples

Choosing which API is for you


Parallel APIs:

MPI: The Message Passing Interface

MPI: An API for Writing Clustered Applications

– A library of routines to coordinate the execution of multiple processes.

– Provides point-to-point and collective communication in Fortran, C and C++.

– Unifies the last 15 years of cluster computing and MPP practice.

[Figure: background word cloud of MPI routine names, e.g. MPI_Send, MPI_Recv, MPI_Ssend, MPI_Sendrecv, MPI_Sendrecv_replace, MPI_Bcast, MPI_Reduce, MPI_Scan, MPI_Allgatherv, MPI_Barrier, MPI_Pack, MPI_Unpack, MPI_Waitall, MPI_Type_contiguous, MPI_Group_size, MPI_COMM_WORLD.]

Programming with MPI

You need to set up the environment and define the context for a group of processes:

MPI_Init(&argc, &argv);

Give each process an ID ranging from 0 to (numprocs-1), and find the number of processes:

MPI_Comm_rank(MPI_COMM_WORLD, &my_id);

MPI_Comm_size(MPI_COMM_WORLD, &numprocs);

MPI uses multiple processes: nothing is shared by default, and processes share data only by exchanging messages.

Many MPI programs use only simple collective communication, for example a reduction:

MPI_Reduce(&sum, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);

Take a local value (sum), combine the values into a single value (pi) using this op (e.g. MPI_SUM), and send the answer to this process ID (0).

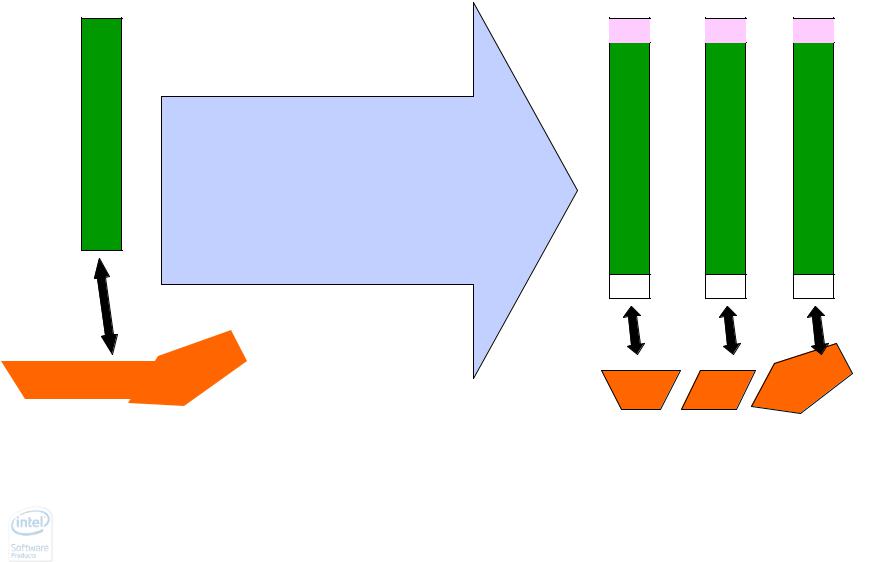

How do most people use MPI:

The Single Program Multiple Data (SPMD) Pattern

SPMD pattern:

•Replicate the program.

•Add glue code (based on ID)

•Break up the data

[Figure: a sequential program working on a data set becomes a parallel program working on a decomposed data set, with coordination by passing messages.]

MPI: Pi program

#include <mpi.h>
#include <stdio.h>

static long num_steps = 100000;

int main(int argc, char *argv[])
{
    // Assume num_steps is evenly divided by numprocs
    long i, my_steps;
    int my_id, numprocs;
    double x, pi, step, sum = 0.0;

    step = 1.0/(double) num_steps;

    MPI_Init(&argc, &argv);
    MPI_Comm_rank(MPI_COMM_WORLD, &my_id);
    MPI_Comm_size(MPI_COMM_WORLD, &numprocs);
    my_steps = num_steps/numprocs;

    // Each process integrates its own contiguous block of intervals
    for (i = my_id*my_steps; i < (my_id+1)*my_steps; i++)
    {
        x = (i+0.5)*step;
        sum += 4.0/(1.0+x*x);
    }
    sum *= step;

    // Combine the partial sums into pi on process 0
    MPI_Reduce(&sum, &pi, 1, MPI_DOUBLE, MPI_SUM, 0, MPI_COMM_WORLD);

    if (my_id == 0)
        printf("pi = %f\n", pi);

    MPI_Finalize();
    return 0;
}

Outline

Parallel programming, wetware, and software

Parallel programming APIs

–Thread Libraries

–Win32 API

–POSIX threads

–Compiler Directives

–OpenMP*

–Message Passing

–MPI

More complicated examples


Choosing which API is for you

Third party names are the property of their owners.