Lupin / Lectures_2007 / Introduction to Parallel Programming.pdf

Our running example: the PI program

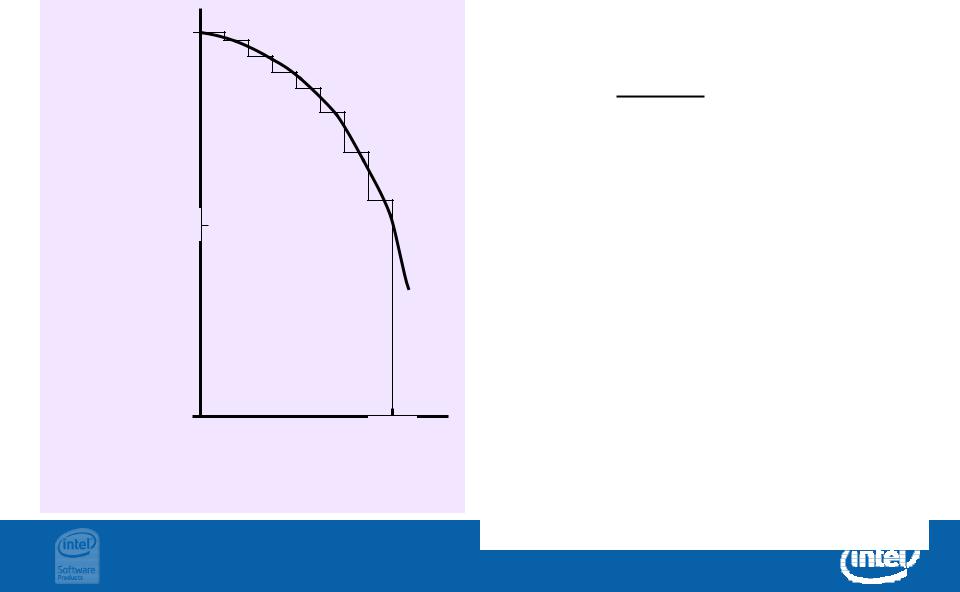

Numerical Integration

Mathematically, we know that:

$$\int_0^1 \frac{4.0}{1+x^2}\,dx = \pi$$

We can approximate the integral as a sum of rectangles:

$$\sum_{i=0}^{N} F(x_i)\,\Delta x \approx \pi$$

Where each rectangle has width $\Delta x$ and height $F(x_i)$ at the middle of interval $i$.

[Figure: plot of F(x) = 4.0/(1+x^2) for X from 0.0 to 1.0; vertical axis marked at 2.0 and 4.0.]
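As a short check on the statement above, the integrand is the derivative of 4 arctan x, and the rectangle sum can be written out with explicit midpoints. The indexing i = 1, ..., N and the midpoint x_i = (i - 0.5) Δx used here are taken from the sequential program in this section (where N is num_steps); the slide itself writes the sum from i = 0 to N.

```latex
\int_0^1 \frac{4}{1+x^2}\,dx
  = 4\arctan x \,\Big|_0^1
  = 4\cdot\frac{\pi}{4}
  = \pi

\pi \approx \sum_{i=1}^{N} F(x_i)\,\Delta x,
\qquad F(x) = \frac{4}{1+x^2},
\quad \Delta x = \frac{1}{N},
\quad x_i = \left(i - \tfrac{1}{2}\right)\Delta x
```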

PI Program:

The sequential program

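static long num_steps = 100000;
double step;

int main(void)
{
    int i;
    double x, pi, sum = 0.0;

    step = 1.0 / (double) num_steps;        /* width of one rectangle */
    for (i = 1; i <= num_steps; i++) {
        x = (i - 0.5) * step;               /* midpoint of interval i */
        sum = sum + 4.0 / (1.0 + x * x);    /* height F(x) at the midpoint */
    }
    pi = step * sum;
    return 0;
}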

Outline

Parallel programming, wetware, and software

Parallel programming APIs

– Thread Libraries
  – Win32 API
  – POSIX threads

– Compiler Directives
  – OpenMP*

– Message Passing
  – MPI

More complicated examples

Choosing which API is for you

*Other names and brands may be claimed as the property of others.

Threads and Parallel Programming

Operating Systems 101:

– Process: a unit of work managed by the OS, with its own address space (the heap) and OS-managed resources.

– Threads: resources within a process that execute the instructions in a program. Each thread has its own program counter and a private memory region (a stack), but shares the other resources within the process, including the heap.

Threads are the natural unit of execution for parallel programs on shared memory hardware.

The threads share memory so data structures don’t have to be torn apart into distinct pieces.

Programming with thread libraries

The OS provides an API for creating, managing, and destroying threads.

– Windows* threading API

– POSIX threads (on Linux)

Advantage of thread libraries:

– The thread library gives you detailed control over the threads.

Disadvantage of thread libraries:

– The thread library REQUIRES that you take detailed control over the threads.


Win32 API

#include <windows.h>
#include <stdio.h>

#define NUM_THREADS 2

HANDLE thread_handles[NUM_THREADS];
CRITICAL_SECTION hUpdateMutex;
static long num_steps = 100000;
double step;
double global_sum = 0.0;

/* Thread entry point: sums every NUM_THREADS-th rectangle, starting at *arg. */
DWORD WINAPI Pi(LPVOID arg)
{
    int i, start;
    double x, sum = 0.0;

    start = *(int *) arg;
    for (i = start; i <= num_steps; i = i + NUM_THREADS) {
        x = (i - 0.5) * step;
        sum = sum + 4.0 / (1.0 + x * x);
    }
    /* Add the partial sum to the shared total, one thread at a time. */
    EnterCriticalSection(&hUpdateMutex);
    global_sum += sum;
    LeaveCriticalSection(&hUpdateMutex);
    return 0;
}

int main(void)
{
    double pi;
    int i;
    DWORD threadID;
    int threadArg[NUM_THREADS];

    step = 1.0 / (double) num_steps;    /* set once, before any thread starts */
    for (i = 0; i < NUM_THREADS; i++)
        threadArg[i] = i + 1;

    InitializeCriticalSection(&hUpdateMutex);

    for (i = 0; i < NUM_THREADS; i++) {
        thread_handles[i] = CreateThread(0, 0, Pi, &threadArg[i], 0, &threadID);
    }

    WaitForMultipleObjects(NUM_THREADS, thread_handles, TRUE, INFINITE);

    pi = global_sum * step;
    printf(" pi is %f \n", pi);
    return 0;
}

Win32 thread library: It’s not as bad as it looks

The slide annotates the Win32 program above with the role of each part:

– Set up multi-threading support

– Define the work each thread will do and pack it into a function

– Update the final answer one thread at a time

– Set up arguments, bookkeeping, and launch the threads

– Wait for all the threads to finish

– Compute and print final answer


Outline

Parallel programming, wetware, and software

Parallel programming APIs

– Thread Libraries
  – Win32 API
  – POSIX threads

– Compiler Directives
  – OpenMP*

– Message Passing
  – MPI

More complicated examples

Choosing which API is for you


Parallel APIs:

OpenMP*

OpenMP: An API for Writing Multithreaded Applications

• A set of compiler directives and library routines for parallel application programmers

• Makes it easy to create multithreaded (MT) programs in Fortran, C and C++

• Standardizes last 15 years of SMP practice

[Slide background: a collage of sample OpenMP constructs: C$OMP FLUSH; #pragma omp critical; C$OMP THREADPRIVATE(/ABC/); CALL OMP_SET_NUM_THREADS(10); C$OMP parallel do shared(a, b, c); call omp_test_lock(jlok); call OMP_INIT_LOCK(ilok); C$OMP MASTER; C$OMP ATOMIC; C$OMP SINGLE PRIVATE(X); setenv OMP_SCHEDULE "dynamic"; C$OMP PARALLEL DO ORDERED PRIVATE (A, B, C); C$OMP ORDERED; C$OMP PARALLEL REDUCTION (+: A, B); C$OMP SECTIONS; #pragma omp parallel for private(A, B); !$OMP BARRIER; C$OMP PARALLEL COPYIN(/blk/); C$OMP DO lastprivate(XX); Nthrds = OMP_GET_NUM_PROCS(); omp_set_lock(lck)]
