- Preface
- Introduction
- 1.1 Spatial coordinate systems
- 1.2 Sound fields and their physical characteristics
- 1.2.1 Free-field and sound waves generated by simple sound sources
- 1.2.2 Reflections from boundaries
- 1.2.3 Directivity of sound source radiation
- 1.2.4 Statistical analysis of acoustics in an enclosed space
- 1.2.5 Principle of sound receivers
- 1.3 Auditory system and perception
- 1.3.1 Auditory system and its functions
- 1.3.2 Hearing threshold and loudness
- 1.3.3 Masking
- 1.3.4 Critical band and auditory filter
- 1.4 Artificial head models and binaural signals
- 1.4.1 Artificial head models
- 1.4.2 Binaural signals and head-related transfer functions
- 1.5 Outline of spatial hearing
- 1.6 Localization cues for a single sound source
- 1.6.1 Interaural time difference
- 1.6.2 Interaural level difference
- 1.6.3 Cone of confusion and head movement
- 1.6.4 Spectral cues
- 1.6.5 Discussion on directional localization cues
- 1.6.6 Auditory distance perception
- 1.7 Summing localization and spatial hearing with multiple sources
- 1.7.1 Summing localization with two sound sources
- 1.7.2 The precedence effect
- 1.7.3 Spatial auditory perceptions with partially correlated and uncorrelated source signals
- 1.7.4 Auditory scene analysis and spatial hearing
- 1.7.5 Cocktail party effect
- 1.8 Room reflections and auditory spatial impression
- 1.8.1 Auditory spatial impression
- 1.8.2 Sound field-related measures and auditory spatial impression
- 1.8.3 Binaural-related measures and auditory spatial impression
- 1.9.1 Basic principle of spatial sound
- 1.9.2 Classification of spatial sound
- 1.9.3 Developments and applications of spatial sound
- 1.10 Summary
- 2.1 Basic principle of a two-channel stereophonic sound
- 2.1.1 Interchannel level difference and summing localization equation
- 2.1.2 Effect of frequency
- 2.1.3 Effect of interchannel phase difference
- 2.1.4 Virtual source created by interchannel time difference
- 2.1.5 Limitation of two-channel stereophonic sound
- 2.2.1 XY microphone pair
- 2.2.2 MS transformation and the MS microphone pair
- 2.2.3 Spaced microphone technique
- 2.2.4 Near-coincident microphone technique
- 2.2.5 Spot microphone and pan-pot technique
- 2.2.6 Discussion on microphone and signal simulation techniques for two-channel stereophonic sound
- 2.3 Upmixing and downmixing between two-channel stereophonic and mono signals
- 2.4 Two-channel stereophonic reproduction
- 2.4.1 Standard loudspeaker configuration of two-channel stereophonic sound
- 2.4.2 Influence of front-back deviation of the head
- 2.5 Summary
- 3.1 Physical and psychoacoustic principles of multichannel surround sound
- 3.2 Summing localization in multichannel horizontal surround sound
- 3.2.1 Summing localization equations for multiple horizontal loudspeakers
- 3.2.2 Analysis of the velocity and energy localization vectors of the superposed sound field
- 3.2.3 Discussion on horizontal summing localization equations
- 3.3 Multiple loudspeakers with partly correlated and low-correlated signals
- 3.4 Summary
- 4.1 Discrete quadraphone
- 4.1.1 Outline of the quadraphone
- 4.1.2 Discrete quadraphone with pair-wise amplitude panning
- 4.1.3 Discrete quadraphone with the first-order sound field signal mixing
- 4.1.4 Some discussions on discrete quadraphones
- 4.2 Other horizontal surround sounds with regular loudspeaker configurations
- 4.2.1 Six-channel reproduction with pair-wise amplitude panning
- 4.2.2 The first-order sound field signal mixing and reproduction with M ≥ 3 loudspeakers
- 4.3 Transformation of horizontal sound field signals and Ambisonics
- 4.3.1 Transformation of the first-order horizontal sound field signals
- 4.3.2 The first-order horizontal Ambisonics
- 4.3.3 The higher-order horizontal Ambisonics
- 4.3.4 Discussion and implementation of the horizontal Ambisonics
- 4.4 Summary
- 5.1 Outline of surround sounds with accompanying picture and general uses
- 5.2 5.1-Channel surround sound and its signal mixing analysis
- 5.2.1 Outline of 5.1-channel surround sound
- 5.2.2 Pair-wise amplitude panning for 5.1-channel surround sound
- 5.2.3 Global Ambisonic-like signal mixing for 5.1-channel sound
- 5.2.4 Optimization of three frontal loudspeaker signals and local Ambisonic-like signal mixing
- 5.2.5 Time panning for 5.1-channel surround sound
- 5.3 Other multichannel horizontal surround sounds
- 5.4 Low-frequency effect channel
- 5.5 Summary
- 6.1 Summing localization in multichannel spatial surround sound
- 6.1.1 Summing localization equations for spatial multiple loudspeaker configurations
- 6.1.2 Velocity and energy localization vector analysis for multichannel spatial surround sound
- 6.1.3 Discussion on spatial summing localization equations
- 6.1.4 Relationship with the horizontal summing localization equations
- 6.2 Signal mixing methods for a pair of vertical loudspeakers in the median and sagittal plane
- 6.3 Vector base amplitude panning
- 6.4 Spatial Ambisonic signal mixing and reproduction
- 6.4.1 Principle of spatial Ambisonics
- 6.4.2 Some examples of the first-order spatial Ambisonics
- 6.4.4 Recreating a top virtual source with a horizontal loudspeaker arrangement and Ambisonic signal mixing
- 6.5 Advanced multichannel spatial surround sounds and problems
- 6.5.1 Some advanced multichannel spatial surround sound techniques and systems
- 6.5.2 Object-based spatial sound
- 6.5.3 Some problems related to multichannel spatial surround sound
- 6.6 Summary
- 7.1 Basic considerations on the microphone and signal simulation techniques for multichannel sounds
- 7.2 Microphone techniques for 5.1-channel sound recording
- 7.2.1 Outline of microphone techniques for 5.1-channel sound recording
- 7.2.2 Main microphone techniques for 5.1-channel sound recording
- 7.2.3 Microphone techniques for the recording of three frontal channels
- 7.2.4 Microphone techniques for ambience recording and combination with frontal localization information recording
- 7.2.5 Stereophonic plus center channel recording
- 7.3 Microphone techniques for other multichannel sounds
- 7.3.1 Microphone techniques for other discrete multichannel sounds
- 7.3.2 Microphone techniques for Ambisonic recording
- 7.4 Simulation of localization signals for multichannel sounds
- 7.4.1 Methods of the simulation of directional localization signals
- 7.4.2 Simulation of virtual source distance and extension
- 7.4.3 Simulation of a moving virtual source
- 7.5 Simulation of reflections for stereophonic and multichannel sounds
- 7.5.1 Delay algorithms and discrete reflection simulation
- 7.5.2 IIR filter algorithm of late reverberation
- 7.5.3 FIR, hybrid FIR, and recursive filter algorithms of late reverberation
- 7.5.4 Algorithms of audio signal decorrelation
- 7.5.5 Simulation of room reflections based on physical measurement and calculation
- 7.6 Directional audio coding and multichannel sound signal synthesis
- 7.7 Summary
- 8.1 Matrix surround sound
- 8.1.1 Matrix quadraphone
- 8.1.2 Dolby Surround system
- 8.1.3 Dolby Pro-Logic decoding technique
- 8.1.4 Some developments on matrix surround sound and logic decoding techniques
- 8.2 Downmixing of multichannel sound signals
- 8.3 Upmixing of multichannel sound signals
- 8.3.1 Some considerations in upmixing
- 8.3.2 Simple upmixing methods for front-channel signals
- 8.3.3 Simple methods for Ambient component separation
- 8.3.4 Model and statistical characteristics of two-channel stereophonic signals
- 8.3.5 A scale-signal-based algorithm for upmixing
- 8.3.6 Upmixing algorithm based on principal component analysis
- 8.3.7 Algorithm based on the least mean square error for upmixing
- 8.3.8 Adaptive normalized algorithm based on the least mean square for upmixing
- 8.3.9 Some advanced upmixing algorithms
- 8.4 Summary
- 9.1 Each order approximation of ideal reproduction and Ambisonics
- 9.1.1 Each order approximation of ideal horizontal reproduction
- 9.1.2 Each order approximation of ideal three-dimensional reproduction
- 9.2 General formulation of multichannel sound field reconstruction
- 9.2.1 General formulation of multichannel sound field reconstruction in the spatial domain
- 9.2.2 Formulation of spatial-spectral domain analysis of circular secondary source array
- 9.2.3 Formulation of spatial-spectral domain analysis for a secondary source array on spherical surface
- 9.3 Spatial-spectral domain analysis and driving signals of Ambisonics
- 9.3.1 Reconstructed sound field of horizontal Ambisonics
- 9.3.2 Reconstructed sound field of spatial Ambisonics
- 9.3.3 Mixed-order Ambisonics
- 9.3.4 Near-field compensated higher-order Ambisonics
- 9.3.5 Ambisonic encoding of complex source information
- 9.3.6 Some special applications of spatial-spectral domain analysis of Ambisonics
- 9.4 Some problems related to Ambisonics
- 9.4.1 Secondary source array and stability of Ambisonics
- 9.4.2 Spatial transformation of Ambisonic sound field
- 9.5 Error analysis of Ambisonic-reconstructed sound field
- 9.5.1 Integral error of Ambisonic-reconstructed wavefront
- 9.5.2 Discrete secondary source array and spatial-spectral aliasing error in Ambisonics
- 9.6 Multichannel reconstructed sound field analysis in the spatial domain
- 9.6.1 Basic method for analysis in the spatial domain
- 9.6.2 Minimizing error in reconstructed sound field and summing localization equation
- 9.6.3 Multiple receiver position matching method and its relation to the mode-matching method
- 9.7 Listening room reflection compensation in multichannel sound reproduction
- 9.8 Microphone array for multichannel sound field signal recording
- 9.8.1 Circular microphone array for horizontal Ambisonic recording
- 9.8.2 Spherical microphone array for spatial Ambisonic recording
- 9.8.3 Discussion on microphone array recording
- 9.9 Summary
- 10.1 Basic principle and implementation of wave field synthesis
- 10.1.1 Kirchhoff–Helmholtz boundary integral and WFS
- 10.1.2 Simplification of the types of secondary sources
- 10.1.3 WFS in a horizontal plane with a linear array of secondary sources
- 10.1.4 Finite secondary source array and effect of spatial truncation
- 10.1.5 Discrete secondary source array and spatial aliasing
- 10.1.6 Some issues and related problems on WFS implementation
- 10.2 General theory of WFS
- 10.2.1 Green’s function of Helmholtz equation
- 10.2.2 General theory of three-dimensional WFS
- 10.2.3 General theory of two-dimensional WFS
- 10.2.4 Focused source in WFS
- 10.3 Analysis of WFS in the spatial-spectral domain
- 10.3.1 General formulation and analysis of WFS in the spatial-spectral domain
- 10.3.2 Analysis of the spatial aliasing in WFS
- 10.3.3 Spatial-spectral division method of WFS
- 10.4 Further discussion on sound field reconstruction
- 10.4.1 Comparison among various methods of sound field reconstruction
- 10.4.2 Further analysis of the relationship between acoustical holography and sound field reconstruction
- 10.4.3 Further analysis of the relationship between acoustical holography and Ambisonics
- 10.4.4 Comparison between WFS and Ambisonics
- 10.5 Equalization of WFS under nonideal conditions
- 10.6 Summary
- 11.1 Basic principles of binaural reproduction and virtual auditory display
- 11.1.1 Binaural recording and reproduction
- 11.1.2 Virtual auditory display
- 11.2 Acquisition of HRTFs
- 11.2.1 HRTF measurement
- 11.2.2 HRTF calculation
- 11.2.3 HRTF customization
- 11.3 Basic physical features of HRTFs
- 11.3.1 Time-domain features of far-field HRIRs
- 11.3.2 Frequency domain features of far-field HRTFs
- 11.3.3 Features of near-field HRTFs
- 11.4 HRTF-based filters for binaural synthesis
- 11.5 Spatial interpolation and decomposition of HRTFs
- 11.5.1 Directional interpolation of HRTFs
- 11.5.2 Spatial basis function decomposition and spatial sampling theorem of HRTFs
- 11.5.3 HRTF spatial interpolation and signal mixing for multichannel sound
- 11.5.4 Spectral shape basis function decomposition of HRTFs
- 11.6 Simplification of signal processing for binaural synthesis
- 11.6.1 Virtual loudspeaker-based algorithms
- 11.6.2 Basis function decomposition-based algorithms
- 11.7.1 Principle of headphone equalization
- 11.7.2 Some problems with binaural reproduction and VAD
- 11.8 Binaural reproduction through loudspeakers
- 11.8.1 Basic principle of binaural reproduction through loudspeakers
- 11.8.2 Virtual source distribution in two-front loudspeaker reproduction
- 11.8.3 Head movement and stability of virtual sources in Transaural reproduction
- 11.8.4 Timbre coloration and equalization in transaural reproduction
- 11.9 Virtual reproduction of stereophonic and multichannel surround sound
- 11.9.1 Binaural reproduction of stereophonic and multichannel sound through headphones
- 11.9.2 Stereophonic expansion and enhancement
- 11.9.3 Virtual reproduction of multichannel sound through loudspeakers
- 11.10.1 Binaural room modeling
- 11.10.2 Dynamic virtual auditory environments system
- 11.11 Summary
- 12.1 Physical analysis of binaural pressures in summing virtual source and auditory events
- 12.1.1 Evaluation of binaural pressures and localization cues
- 12.1.2 Method for summing localization analysis
- 12.1.3 Binaural pressure analysis of stereophonic and multichannel sound with amplitude panning
- 12.1.4 Analysis of summing localization with interchannel time difference
- 12.1.5 Analysis of summing localization at the off-central listening position
- 12.1.6 Analysis of interchannel correlation and spatial auditory sensations
- 12.2 Binaural auditory models and analysis of spatial sound reproduction
- 12.2.1 Analysis of lateral localization by using auditory models
- 12.2.2 Analysis of front-back and vertical localization by using a binaural auditory model
- 12.2.3 Binaural loudness models and analysis of the timbre of spatial sound reproduction
- 12.3 Binaural measurement system for assessing spatial sound reproduction
- 12.4 Summary
- 13.1 Analog audio storage and transmission
- 13.1.1 45°/45° Disk recording system
- 13.1.2 Analog magnetic tape audio recorder
- 13.1.3 Analog stereo broadcasting
- 13.2 Basic concepts of digital audio storage and transmission
- 13.3 Quantization noise and shaping
- 13.3.1 Signal-to-quantization noise ratio
- 13.3.2 Quantization noise shaping and 1-Bit DSD coding
- 13.4 Basic principle of digital audio compression and coding
- 13.4.1 Outline of digital audio compression and coding
- 13.4.2 Adaptive differential pulse-code modulation
- 13.4.3 Perceptual audio coding in the time-frequency domain
- 13.4.4 Vector quantization
- 13.4.5 Spatial audio coding
- 13.4.6 Spectral band replication
- 13.4.7 Entropy coding
- 13.4.8 Object-based audio coding
- 13.5 MPEG series of audio coding techniques and standards
- 13.5.1 MPEG-1 audio coding technique
- 13.5.2 MPEG-2 BC audio coding
- 13.5.3 MPEG-2 advanced audio coding
- 13.5.4 MPEG-4 audio coding
- 13.5.5 MPEG parametric coding of multichannel sound and unified speech and audio coding
- 13.5.6 MPEG-H 3D audio
- 13.6 Dolby series of coding techniques
- 13.6.1 Dolby digital coding technique
- 13.6.2 Some advanced Dolby coding techniques
- 13.7 DTS series of coding technique
- 13.8 MLP lossless coding technique
- 13.9 ATRAC technique
- 13.10 Audio video coding standard
- 13.11 Optical disks for audio storage
- 13.11.1 Structure, principle, and classification of optical disks
- 13.11.2 CD family and its audio formats
- 13.11.3 DVD family and its audio formats
- 13.11.4 SACD and its audio formats
- 13.11.5 BD and its audio formats
- 13.12 Digital radio and television broadcasting
- 13.12.1 Outline of digital radio and television broadcasting
- 13.12.2 Eureka-147 digital audio broadcasting
- 13.12.3 Digital radio mondiale
- 13.12.4 In-band on-channel digital audio broadcasting
- 13.12.5 Audio for digital television
- 13.13 Audio storage and transmission by personal computer
- 13.14 Summary
- 14.1 Outline of acoustic conditions and requirements for spatial sound intended for domestic reproduction
- 14.2 Acoustic consideration and design of listening rooms
- 14.3 Arrangement and characteristics of loudspeakers
- 14.3.1 Arrangement of the main loudspeakers in listening rooms
- 14.3.2 Characteristics of the main loudspeakers
- 14.3.3 Bass management and arrangement of subwoofers
- 14.4 Signal and listening level alignment
- 14.5 Standards and guidance for conditions of spatial sound reproduction
- 14.6 Headphones and binaural monitors of spatial sound reproduction
- 14.7 Acoustic conditions for cinema sound reproduction and monitoring
- 14.8 Summary
- 15.1 Outline of psychoacoustic and subjective assessment experiments
- 15.2 Contents and attributes for spatial sound assessment
- 15.3 Auditory comparison and discrimination experiment
- 15.3.1 Paradigms of auditory comparison and discrimination experiment
- 15.3.2 Examples of auditory comparison and discrimination experiment
- 15.4 Subjective assessment of small impairments in spatial sound systems
- 15.5 Subjective assessment of a spatial sound system with intermediate quality
- 15.6 Virtual source localization experiment
- 15.6.1 Basic methods for virtual source localization experiments
- 15.6.2 Preliminary analysis of the results of virtual source localization experiments
- 15.6.3 Some results of virtual source localization experiments
- 15.7 Summary
- 16.1.1 Application to commercial cinema and related problems
- 16.1.2 Applications to domestic reproduction and related problems
- 16.1.3 Applications to automobile audio
- 16.2.1 Applications to virtual reality
- 16.2.2 Applications to communication and information systems
- 16.2.3 Applications to multimedia
- 16.2.4 Applications to mobile and handheld devices
- 16.3 Applications to the scientific experiments of spatial hearing and psychoacoustics
- 16.4 Applications to sound field auralization
- 16.4.1 Auralization in room acoustics
- 16.4.2 Other applications of auralization technique
- 16.5 Applications to clinical medicine
- 16.6 Summary
- References
- Index

46 Spatial Sound

independent of the source distance in the far field. However, for a sound source outside the median plane, it varies considerably with source distance within 1.0 m (i.e., in the near field), especially within 0.5 m. Because ILD is defined as the ratio of the sound pressures at the two ears, it is independent of the properties of the source signal. Therefore, the near-field ILD is a cue for absolute distance estimation. The pressure spectrum at each ear also changes with source distance in the near field, which potentially serves as another distance cue. These distance-dependent cues are described by near-field HRTFs, which are used to render virtual sources at various distances in a virtual auditory display (Chapter 11). However, this method is effective only for target source distances within 1.0 m.
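The distance dependence of the near-field ILD can be illustrated with a deliberately crude model. The sketch below is an illustration only, not the way near-field HRTFs are computed. Its assumptions are as follows:

- The ears are two points at ±a on the interaural axis, with an assumed head radius a = 0.0875 m.
- Head shadowing is ignored entirely.
- Only the 1/r spreading loss of a point source is kept.
- Positive azimuth points toward the left ear, which is an assumed convention.

Even so, the sketch shows two points made above. First, the source amplitude cancels in the ratio. Second, the distance-dependent part of the ILD grows quickly within about 0.5 m and becomes negligible in the far field.

```python
import math

HEAD_RADIUS = 0.0875  # m; assumed value, ears modelled as points at (0, +a) and (0, -a)


def spreading_ild_db(r, azimuth_deg, amplitude=1.0, a=HEAD_RADIUS):
    """ILD in dB (left re right) caused only by 1/r spreading of a point source.

    r: source distance from the head centre (m); must exceed a.
    azimuth_deg: positive toward the left ear (assumed convention).
    Head shadowing is ignored, so the frequency-dependent ILD of a real
    head is NOT represented here.
    """
    if r <= a:
        raise ValueError("source must lie outside the head")
    x = r * math.cos(math.radians(azimuth_deg))
    y = r * math.sin(math.radians(azimuth_deg))
    d_left = math.hypot(x, y - a)   # distance to left-ear point
    d_right = math.hypot(x, y + a)  # distance to right-ear point
    p_left = amplitude / d_left     # free-field pressure magnitude ~ 1/distance
    p_right = amplitude / d_right
    return 20.0 * math.log10(p_left / p_right)  # amplitude cancels in the ratio


for r in (0.25, 0.5, 1.0, 2.0, 10.0):
    print(f"r = {r:5.2f} m -> spreading ILD {spreading_ild_db(r, 90.0):5.2f} dB")
```

Consider a lateral source at 90° under these assumptions. The spreading term alone gives about 6.3 dB at 0.25 m, about 1.5 dB at 1 m, and about 0.15 dB at 10 m.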

Reflections in an enclosed space are effective cues for distance estimation (Nielsen, 1993). According to Equation (1.2.25), the direct-to-reverberant energy ratio is inversely proportional to the square of the source distance, so it can serve as a distance cue. Equation (1.2.25), however, is derived for a diffuse sound field, and a real reflected sound field may deviate from this assumption. Bronkhorst and Houtgast (1999) indicated that a simple model based on a modified direct-to-reverberant energy ratio can accurately predict auditory distance perception in rooms. In stereophonic and multichannel sound program production, the perceived distance is often controlled through the direct-to-reverberant energy ratio of the program signals. Frequency-dependent boundary absorption modifies the power spectra of reflections, and the proportion of reflected power increases as the source distance increases. Thus, the power spectra of binaural pressures vary with source distance, which provides further information for auditory distance perception.
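The inverse-square dependence can be made concrete with the classical diffuse-field (Hopkins-Stryker type) expression. In that expression, the direct-to-reverberant energy ratio is Q R / (16 π r²), where Q is the source directivity factor and R = S ᾱ / (1 − ᾱ) is the room constant. This sketch assumes that expression; its notation may differ from Equation (1.2.25), which is not reproduced here. The room data in the example are hypothetical. The distance at which the ratio equals one is the critical distance.

```python
import math


def room_constant(surface_area, mean_absorption):
    """Room constant R = S * a / (1 - a) in m^2 (diffuse-field model)."""
    if not 0.0 < mean_absorption < 1.0:
        raise ValueError("mean absorption coefficient must lie in (0, 1)")
    return surface_area * mean_absorption / (1.0 - mean_absorption)


def direct_to_reverberant_db(r, surface_area, mean_absorption, q=1.0):
    """Direct-to-reverberant energy ratio (dB) at distance r (m).

    Direct energy ~ Q / (4 pi r^2) and diffuse reverberant energy ~ 4 / R,
    so the ratio is Q R / (16 pi r^2): about -6 dB per doubling of r.
    """
    big_r = room_constant(surface_area, mean_absorption)
    return 10.0 * math.log10(q * big_r / (16.0 * math.pi * r * r))


def critical_distance(surface_area, mean_absorption, q=1.0):
    """Distance (m) at which direct and reverberant energies are equal."""
    big_r = room_constant(surface_area, mean_absorption)
    return math.sqrt(q * big_r / (16.0 * math.pi))


# Hypothetical room: 200 m^2 of boundary surface, mean absorption 0.2, omni source.
S, ALPHA = 200.0, 0.2
print(f"critical distance: {critical_distance(S, ALPHA):.2f} m")
for r in (0.5, 1.0, 2.0, 4.0, 8.0):
    print(f"r = {r:3.1f} m -> D/R = {direct_to_reverberant_db(r, S, ALPHA):6.1f} dB")
```

In this hypothetical room, the critical distance is close to 1 m. A source at 4 m therefore has a direct-to-reverberant ratio about 12 dB lower than a source at 1 m. A mixing engineer imitates exactly this kind of level relation when setting perceived distance through the direct and reverberant parts of a program signal.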

In summary, auditory distance perception results from the combined analysis of multiple cues. Although distance perception has received increasing attention in recent years, knowledge of its detailed mechanism remains incomplete.

1.7 SUMMING LOCALIZATION AND SPATIAL HEARING WITH MULTIPLE SOURCES

Like the localization of a single sound source presented in Section 1.6, the localization of multiple sound sources is an important aspect of spatial hearing (Blauert, 1997). Under certain conditions, when two or more sound sources simultaneously radiate correlated sounds, the auditory system may perceive a sound coming from a spatial position where no real sound source exists. Such an illusory or phantom sound source results from the summing localization of multiple sound sources. It is also called a virtual sound source (virtual source for short) or a virtual sound image (sound image for short). In summing localization, the sound pressure at each ear is a linear combination of the pressures generated by the individual sources. The auditory system then automatically compares the localization cues encoded in the binaural sound pressures, such as ITD and ILD, with stored patterns derived from prior experience with single sound sources. If the cues match the pattern of a single sound source at a given spatial position, a convincing virtual source is perceived at that position. However, this is not always the case, and some experimental results on summing localization have not yet been fully explained. Overall, summing localization with multiple sound sources is a spatial auditory event that should be explained by psychoacoustic principles (Guan, 1995).
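The two steps described above are linear superposition at the ears and matching against single-source patterns. They can be sketched numerically under strong simplifying assumptions:

- The ears are two points at ±a, with an assumed a = 0.0875 m, and head diffraction is ignored.
- The speed of sound is an assumed c = 343 m/s.
- Each source produces a far-field plane wave, and positive azimuth is toward the left.
- The "stored pattern" is simply the single-source ITD 2a sin θ / c of the same point-ear model, searched on a 0.5° grid.

This grid search is a crude stand-in for the pattern comparison in the text, not a model of auditory processing. It is meaningful only at low frequencies, where the interaural phase difference does not wrap.

```python
import cmath
import math

SPEED_OF_SOUND = 343.0     # m/s, assumed
HALF_EAR_SPACING = 0.0875  # m, assumed; ears modelled as two points at +/-a


def ear_pressures(sources, freq):
    """Superpose far-field plane waves at two point 'ears' (no head shadow).

    sources: list of (azimuth_deg, amplitude); positive azimuth = left.
    Returns (p_left, p_right) as complex phasors (e^{j omega t} convention).
    """
    k = 2.0 * math.pi * freq / SPEED_OF_SOUND
    p_left, p_right = 0j, 0j
    for az_deg, amp in sources:
        phase = k * HALF_EAR_SPACING * math.sin(math.radians(az_deg))
        p_left += amp * cmath.exp(1j * phase)    # left ear reached earlier for az > 0
        p_right += amp * cmath.exp(-1j * phase)
    return p_left, p_right


def interaural_phase_delay(p_left, p_right, freq):
    """Low-frequency phase-delay ITD in seconds; positive when the left ear leads."""
    dphi = cmath.phase(p_left) - cmath.phase(p_right)
    return dphi / (2.0 * math.pi * freq)


def match_single_source(itd, step_deg=0.5):
    """Azimuth whose single-source ITD (the 'stored pattern') is closest to itd."""
    best_az, best_err = 0.0, float("inf")
    for i in range(int(round(180.0 / step_deg)) + 1):
        az = -90.0 + i * step_deg
        template = 2.0 * HALF_EAR_SPACING * math.sin(math.radians(az)) / SPEED_OF_SOUND
        err = abs(itd - template)
        if err < best_err:
            best_az, best_err = az, err
    return best_az


freq = 200.0  # Hz; low enough that the phase difference does not wrap
for gain_r in (1.0, 0.5, 0.0):
    pl, pr = ear_pressures([(30.0, 1.0), (-30.0, gain_r)], freq)
    az = match_single_source(interaural_phase_delay(pl, pr, freq))
    print(f"left gain 1.0, right gain {gain_r}: matched azimuth {az:.1f} deg")
```

The equal-gain pair matches a frontal single source, and a lone left loudspeaker matches 30°. Intermediate gains land in between, close to the stereophonic law of sines that this point-ear model reproduces at low frequencies.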

In other situations, multiple sources give rise to other spatial auditory events. In the precedence effect described in Section 1.7.2, for example, a listener perceives the sound as coming from one of the real sources, regardless of the presence of the other sources. When two or more sound sources simultaneously radiate partially correlated sounds, the auditory system may perceive an extended or even diffusely located spatial auditory event.

Sound field, spatial hearing, and sound reproduction 47

All the phenomena mentioned above relate to summing spatial hearing with multiple sound sources. They are subjective consequences of the auditory system's comprehensive processing of spatial information from multiple sources. In addition, the subjective perception of environmental reflections is closely related to spatial hearing with multiple sources (Section 1.8).

1.7.1 Summing localization with two sound sources

The simplest case of summing localization involves two sound sources. Blumlein (1931) first recognized the application of this psychoacoustic phenomenon to stereophonic reproduction. Since the work of Boer (1940), a variety of experiments on summing localization with two sound sources have been conducted (Leakey, 1959, 1960; Mertens, 1965; Simonson, 1984); these are two-channel stereophonic localization experiments. Blauert (1997) summarized the results of some early experiments in his monograph.

Figure 1.25 shows a typical configuration for summing localization with two sources, i.e., two-channel stereophonic loudspeakers. The listener is located at a position symmetric with respect to the left and right loudspeakers. The loudspeakers are at azimuths ±θ0, i.e., they span an angle of 2θ0. The distance r0 from each loudspeaker to the head center is much larger than the head radius a. The baseline length (distance) between the two loudspeakers is 2LY, and the distance between the midpoint of the baseline and the head center is LX. When both loudspeakers are fed identical signals, the listener perceives a single virtual source midway between the two loudspeakers, i.e., directly in front. The magnitude ratio between the loudspeaker signals is the interchannel level difference (ICLD). When the ICLD is adjusted, the virtual source moves toward the loudspeaker with the higher signal level. An ICLD larger than approximately 15 dB to 18 dB places the virtual source at the position of one loudspeaker (full left or full right). Beyond that point, further increases in the level difference no longer change the virtual source position.
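A widely used low-frequency model of this ICLD behavior is the stereophonic law of sines, sin θI = sin θ0 (gL − gR)/(gL + gR), where gL and gR are the left and right signal amplitudes. The function below is a sketch that assumes this model and takes positive azimuth toward the left loudspeaker at +θ0. It converts an ICLD in dB into a predicted virtual source azimuth.

```python
import math


def law_of_sines_azimuth(icld_db, theta0_deg=30.0):
    """Predicted virtual-source azimuth (deg) from the stereophonic law of sines.

    icld_db: level of the left signal relative to the right, in dB.
    Positive values move the virtual source toward the left loudspeaker
    at +theta0 (assumed sign convention).
    """
    g = 10.0 ** (icld_db / 20.0)  # amplitude ratio gL / gR
    s = (g - 1.0) / (g + 1.0) * math.sin(math.radians(theta0_deg))
    return math.degrees(math.asin(s))


for icld in (0, 3, 6, 10, 15, 18, 30):
    print(f"ICLD {icld:>2} dB -> {law_of_sines_azimuth(icld):5.1f} deg")
```

The term (gL − gR)/(gL + gR) approaches 1 only as the ICLD grows without bound, so the model reaches ±θ0 only asymptotically. For θ0 = 30°, it predicts about 20° at 15 dB. The listening results quoted above, by contrast, reach the loudspeaker at roughly 15 dB to 18 dB. The formula is a simplified low-frequency approximation, not a fit to those data.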

The above results are qualitatively held for signals that include a low-frequency component below 1.5 kHz. However, the results obtained from various experiments quantitatively differ in terms of signals, experimental conditions, and methods. Some experimental errors

Figure 1.25 Summing localization experiment involving two sound sources (loudspeakers).

48 Spatial Sound

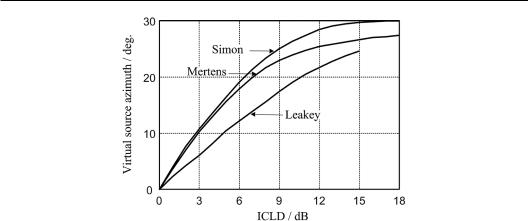

Figure 1.26 Virtual source localization experiments on loudspeaker signals with ICLD. (redrawn on the basis of the results of Leakey 1960, Mertens 1965, and Simonson 1984 and adopted from Wittek and Theile 2002).

may also be included in the results. Figure 1.26 illustrates the experimental results of a virtual source position that varies with the ICLD of loudspeaker signals obtained by Leakey (1960), Mertens (1965), and Simonson (1984). A standard angle span 2θ0 = 60° (or near 60°) between two loudspeakers was chosen in their experiments. Speech signals were used in the experiments of Leakey and Simonson. The noise signal centered at 1.1 kHz was used by Mertens. In addition, the displacements of the virtual source in the baseline were determined in some experiments. Here, they are converted to the azimuths of the virtual source.
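The conversion from baseline displacement to azimuth follows from the geometry of Figure 1.25. Suppose a virtual source is displaced by y along the baseline from its midpoint, and the listener sits on the symmetry axis at distance LX from that midpoint. The source azimuth is then θ = arctan(y / LX). The sketch below assumes positive y toward the left loudspeaker and uses a hypothetical LX of 2 m.

```python
import math


def displacement_to_azimuth(y, lx):
    """Azimuth (deg) of a virtual source displaced y (m) along the baseline.

    lx: distance from the baseline midpoint to the head centre (L_X in Figure 1.25).
    Positive y is toward the left loudspeaker (assumed sign convention).
    """
    return math.degrees(math.atan2(y, lx))


def azimuth_to_displacement(theta_deg, lx):
    """Inverse mapping: baseline displacement (m) for a given azimuth."""
    return lx * math.tan(math.radians(theta_deg))


# Hypothetical standard layout: 2*theta0 = 60 deg, listener 2 m from the baseline.
LX = 2.0
LY = azimuth_to_displacement(30.0, LX)  # half the baseline length
for frac in (0.0, 0.25, 0.5, 0.75, 1.0):
    y = frac * LY
    print(f"{frac:4.2f} of L_Y -> {displacement_to_azimuth(y, LX):5.2f} deg")
```

The mapping is not linear. A virtual source halfway between the center and a loudspeaker along the baseline lies at about 16.1°, not 15°. This is why the displacement data have to be converted before they can be compared with azimuth data.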

In the loudspeaker configuration shown in Figure 1.25, suppose a signal and its delayed version are fed into the two loudspeakers. The virtual source then moves toward the loudspeaker with the leading signal. When the interchannel time difference (ICTD) between the two loudspeaker signals exceeds a certain upper limit, the virtual source reaches the position of the leading loudspeaker. This result holds for impulse-like signals and other signals with transient characteristics, such as clicks, speech, and music. However, ICTD cannot be used effectively with steady low-frequency signals.

The ICTD required to position the virtual source at either loudspeaker varies considerably among experiments and usually depends on the type of signal. In most cases, it ranges from several hundred microseconds (μs) to slightly more than 1 millisecond (ms). Figure 1.27 illustrates how the virtual source position varies with ICTD in the experiments of Leakey (1960), Mertens (1965), and Simonson (1984). The experimental conditions are similar to those mentioned above, except that Mertens used random noise. ICLD and ICTD are the level and time differences between the two loudspeaker signals, respectively. They should not be confused with the ILD and ITD discussed in Section 1.6, which describe the level and time differences between the pressures or signals at the two ears and serve as cues for directional localization.

For some (but not all) transient signals, a trading effect exists between ICLD and ICTD. This effect has been investigated experimentally, although the results differ somewhat with the type of signal (Leakey, 1959; Mertens, 1965; Blauert, 1997). The general tendency is as follows, for loudspeaker signals carrying both ICLD and ICTD:

- If ICLD and ICTD individually shift the virtual source in the same direction, its displacement is enhanced.
- If they shift it in opposite directions, its displacement is reduced or cancelled.

Figure 1.28 shows Mertens' results, i.e., trading curves between ICLD and ICTD for virtual sources at θI = 0° and ±30°. The loudspeakers are arranged at azimuths ±30°, and the signal is a Gaussian burst of white noise. The curves in Figure 1.28 are left-right symmetric. All combinations of ICTD and ICLD on a given curve yield the same perceived azimuth (0°, −30°, or 30°).

Figure 1.27 Results of virtual source localization experiments for loudspeaker signals with ICTD. (Redrawn on the basis of the results of Leakey 1960, Mertens 1965, and Simonson 1984 and adapted from Wittek and Theile 2002.)

Figure 1.28 Trading curve between ICLD and ICTD. (Redrawn on the basis of the data of Mertens, 1965 and adapted from Williams, 2013.)
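The stimuli in these ICTD and trading experiments are built from a single mono signal by delaying one channel and scaling the two channels. The sketch below shows one way to do this in plain Python. Its parameters are assumptions:

- The burst length, the envelope width, and the 48 kHz sampling rate are hypothetical, not Mertens' values.
- The delay is rounded to whole samples.
- The ICLD gain is split symmetrically between the channels.

It only generates loudspeaker signals. The perceptual judgement that defines a trading curve, of course, cannot be computed here.

```python
import math
import random


def gaussian_noise_burst(duration_s, fs, sigma_s, seed=0):
    """White Gaussian noise shaped by a Gaussian envelope centred in the burst."""
    rng = random.Random(seed)
    n = int(round(duration_s * fs))
    centre = (n - 1) / 2.0
    return [math.exp(-0.5 * ((i - centre) / (sigma_s * fs)) ** 2) * rng.gauss(0.0, 1.0)
            for i in range(n)]


def apply_icld_ictd(x, icld_db, ictd_s, fs):
    """Return (left, right) loudspeaker signals derived from one mono signal.

    icld_db > 0 makes the left channel louder (gain split symmetrically);
    ictd_s > 0 makes the left channel lead. The delay is rounded to whole
    samples, so time resolution is 1/fs (about 20.8 us at 48 kHz).
    """
    g_left = 10.0 ** (icld_db / 40.0)
    g_right = 10.0 ** (-icld_db / 40.0)
    d = int(round(abs(ictd_s) * fs))
    lead = list(x) + [0.0] * d   # undelayed copy, padded to equal length
    lag = [0.0] * d + list(x)    # delayed copy
    left, right = (lead, lag) if ictd_s >= 0 else (lag, lead)
    return [g_left * v for v in left], [g_right * v for v in right]


FS = 48000
burst = gaussian_noise_burst(0.1, FS, 0.01)  # hypothetical burst parameters
# Opposing cues: the left channel leads by 300 us but is 6 dB quieter.
left, right = apply_icld_ictd(burst, icld_db=-6.0, ictd_s=300e-6, fs=FS)
```

The example pairs a left-leading ICTD with a right-favoring ICLD. According to the tendency described above, such opposing cues partly cancel, which is the kind of combination that lies on a trading curve.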

As shown in Section 2.1 and Chapter 12, stereophonic loudspeaker signals with ICLD only result in an appropriate low-frequency ITDp in the superposed sound pressures at the two ears. In addition, the ILD caused by loudspeaker signals with ICLD only is small below 1.5 kHz, which is qualitatively consistent with the case of an actual sound source. Because ITDp dominates lateral localization, the auditory system determines the position of the virtual source by comparing the resultant ITDp with the patterns stored from previous experience with real sound sources. The method of recreating a