- •Preface

- •Introduction

- •1.1 Spatial coordinate systems

- •1.2 Sound fields and their physical characteristics

- •1.2.1 Free-field and sound waves generated by simple sound sources

- •1.2.2 Reflections from boundaries

- •1.2.3 Directivity of sound source radiation

- •1.2.4 Statistical analysis of acoustics in an enclosed space

- •1.2.5 Principle of sound receivers

- •1.3 Auditory system and perception

- •1.3.1 Auditory system and its functions

- •1.3.2 Hearing threshold and loudness

- •1.3.3 Masking

- •1.3.4 Critical band and auditory filter

- •1.4 Artificial head models and binaural signals

- •1.4.1 Artificial head models

- •1.4.2 Binaural signals and head-related transfer functions

- •1.5 Outline of spatial hearing

- •1.6 Localization cues for a single sound source

- •1.6.1 Interaural time difference

- •1.6.2 Interaural level difference

- •1.6.3 Cone of confusion and head movement

- •1.6.4 Spectral cues

- •1.6.5 Discussion on directional localization cues

- •1.6.6 Auditory distance perception

- •1.7 Summing localization and spatial hearing with multiple sources

- •1.7.1 Summing localization with two sound sources

- •1.7.2 The precedence effect

- •1.7.3 Spatial auditory perceptions with partially correlated and uncorrelated source signals

- •1.7.4 Auditory scene analysis and spatial hearing

- •1.7.5 Cocktail party effect

- •1.8 Room reflections and auditory spatial impression

- •1.8.1 Auditory spatial impression

- •1.8.2 Sound field-related measures and auditory spatial impression

- •1.8.3 Binaural-related measures and auditory spatial impression

- •1.9.1 Basic principle of spatial sound

- •1.9.2 Classification of spatial sound

- •1.9.3 Developments and applications of spatial sound

- •1.10 Summary

- •2.1 Basic principle of a two-channel stereophonic sound

- •2.1.1 Interchannel level difference and summing localization equation

- •2.1.2 Effect of frequency

- •2.1.3 Effect of interchannel phase difference

- •2.1.4 Virtual source created by interchannel time difference

- •2.1.5 Limitation of two-channel stereophonic sound

- •2.2.1 XY microphone pair

- •2.2.2 MS transformation and the MS microphone pair

- •2.2.3 Spaced microphone technique

- •2.2.4 Near-coincident microphone technique

- •2.2.5 Spot microphone and pan-pot technique

- •2.2.6 Discussion on microphone and signal simulation techniques for two-channel stereophonic sound

- •2.3 Upmixing and downmixing between two-channel stereophonic and mono signals

- •2.4 Two-channel stereophonic reproduction

- •2.4.1 Standard loudspeaker configuration of two-channel stereophonic sound

- •2.4.2 Influence of front-back deviation of the head

- •2.5 Summary

- •3.1 Physical and psychoacoustic principles of multichannel surround sound

- •3.2 Summing localization in multichannel horizontal surround sound

- •3.2.1 Summing localization equations for multiple horizontal loudspeakers

- •3.2.2 Analysis of the velocity and energy localization vectors of the superposed sound field

- •3.2.3 Discussion on horizontal summing localization equations

- •3.3 Multiple loudspeakers with partly correlated and low-correlated signals

- •3.4 Summary

- •4.1 Discrete quadraphone

- •4.1.1 Outline of the quadraphone

- •4.1.2 Discrete quadraphone with pair-wise amplitude panning

- •4.1.3 Discrete quadraphone with the first-order sound field signal mixing

- •4.1.4 Some discussions on discrete quadraphones

- •4.2 Other horizontal surround sounds with regular loudspeaker configurations

- •4.2.1 Six-channel reproduction with pair-wise amplitude panning

- •4.2.2 The first-order sound field signal mixing and reproduction with M ≥ 3 loudspeakers

- •4.3 Transformation of horizontal sound field signals and Ambisonics

- •4.3.1 Transformation of the first-order horizontal sound field signals

- •4.3.2 The first-order horizontal Ambisonics

- •4.3.3 The higher-order horizontal Ambisonics

- •4.3.4 Discussion and implementation of the horizontal Ambisonics

- •4.4 Summary

- •5.1 Outline of surround sounds with accompanying picture and general uses

- •5.2 5.1-Channel surround sound and its signal mixing analysis

- •5.2.1 Outline of 5.1-channel surround sound

- •5.2.2 Pair-wise amplitude panning for 5.1-channel surround sound

- •5.2.3 Global Ambisonic-like signal mixing for 5.1-channel sound

- •5.2.4 Optimization of three frontal loudspeaker signals and local Ambisonic-like signal mixing

- •5.2.5 Time panning for 5.1-channel surround sound

- •5.3 Other multichannel horizontal surround sounds

- •5.4 Low-frequency effect channel

- •5.5 Summary

- •6.1 Summing localization in multichannel spatial surround sound

- •6.1.1 Summing localization equations for spatial multiple loudspeaker configurations

- •6.1.2 Velocity and energy localization vector analysis for multichannel spatial surround sound

- •6.1.3 Discussion on spatial summing localization equations

- •6.1.4 Relationship with the horizontal summing localization equations

- •6.2 Signal mixing methods for a pair of vertical loudspeakers in the median and sagittal plane

- •6.3 Vector base amplitude panning

- •6.4 Spatial Ambisonic signal mixing and reproduction

- •6.4.1 Principle of spatial Ambisonics

- •6.4.2 Some examples of the first-order spatial Ambisonics

- •6.4.4 Recreating a top virtual source with a horizontal loudspeaker arrangement and Ambisonic signal mixing

- •6.5 Advanced multichannel spatial surround sounds and problems

- •6.5.1 Some advanced multichannel spatial surround sound techniques and systems

- •6.5.2 Object-based spatial sound

- •6.5.3 Some problems related to multichannel spatial surround sound

- •6.6 Summary

- •7.1 Basic considerations on the microphone and signal simulation techniques for multichannel sounds

- •7.2 Microphone techniques for 5.1-channel sound recording

- •7.2.1 Outline of microphone techniques for 5.1-channel sound recording

- •7.2.2 Main microphone techniques for 5.1-channel sound recording

- •7.2.3 Microphone techniques for the recording of three frontal channels

- •7.2.4 Microphone techniques for ambience recording and combination with frontal localization information recording

- •7.2.5 Stereophonic plus center channel recording

- •7.3 Microphone techniques for other multichannel sounds

- •7.3.1 Microphone techniques for other discrete multichannel sounds

- •7.3.2 Microphone techniques for Ambisonic recording

- •7.4 Simulation of localization signals for multichannel sounds

- •7.4.1 Methods of the simulation of directional localization signals

- •7.4.2 Simulation of virtual source distance and extension

- •7.4.3 Simulation of a moving virtual source

- •7.5 Simulation of reflections for stereophonic and multichannel sounds

- •7.5.1 Delay algorithms and discrete reflection simulation

- •7.5.2 IIR filter algorithm of late reverberation

- •7.5.3 FIR, hybrid FIR, and recursive filter algorithms of late reverberation

- •7.5.4 Algorithms of audio signal decorrelation

- •7.5.5 Simulation of room reflections based on physical measurement and calculation

- •7.6 Directional audio coding and multichannel sound signal synthesis

- •7.7 Summary

- •8.1 Matrix surround sound

- •8.1.1 Matrix quadraphone

- •8.1.2 Dolby Surround system

- •8.1.3 Dolby Pro-Logic decoding technique

- •8.1.4 Some developments on matrix surround sound and logic decoding techniques

- •8.2 Downmixing of multichannel sound signals

- •8.3 Upmixing of multichannel sound signals

- •8.3.1 Some considerations in upmixing

- •8.3.2 Simple upmixing methods for front-channel signals

- •8.3.3 Simple methods for Ambient component separation

- •8.3.4 Model and statistical characteristics of two-channel stereophonic signals

- •8.3.5 A scale-signal-based algorithm for upmixing

- •8.3.6 Upmixing algorithm based on principal component analysis

- •8.3.7 Algorithm based on the least mean square error for upmixing

- •8.3.8 Adaptive normalized algorithm based on the least mean square for upmixing

- •8.3.9 Some advanced upmixing algorithms

- •8.4 Summary

- •9.1 Each order approximation of ideal reproduction and Ambisonics

- •9.1.1 Each order approximation of ideal horizontal reproduction

- •9.1.2 Each order approximation of ideal three-dimensional reproduction

- •9.2 General formulation of multichannel sound field reconstruction

- •9.2.1 General formulation of multichannel sound field reconstruction in the spatial domain

- •9.2.2 Formulation of spatial-spectral domain analysis of circular secondary source array

- •9.2.3 Formulation of spatial-spectral domain analysis for a secondary source array on spherical surface

- •9.3 Spatial-spectral domain analysis and driving signals of Ambisonics

- •9.3.1 Reconstructed sound field of horizontal Ambisonics

- •9.3.2 Reconstructed sound field of spatial Ambisonics

- •9.3.3 Mixed-order Ambisonics

- •9.3.4 Near-field compensated higher-order Ambisonics

- •9.3.5 Ambisonic encoding of complex source information

- •9.3.6 Some special applications of spatial-spectral domain analysis of Ambisonics

- •9.4 Some problems related to Ambisonics

- •9.4.1 Secondary source array and stability of Ambisonics

- •9.4.2 Spatial transformation of Ambisonic sound field

- •9.5 Error analysis of Ambisonic-reconstructed sound field

- •9.5.1 Integral error of Ambisonic-reconstructed wavefront

- •9.5.2 Discrete secondary source array and spatial-spectral aliasing error in Ambisonics

- •9.6 Multichannel reconstructed sound field analysis in the spatial domain

- •9.6.1 Basic method for analysis in the spatial domain

- •9.6.2 Minimizing error in reconstructed sound field and summing localization equation

- •9.6.3 Multiple receiver position matching method and its relation to the mode-matching method

- •9.7 Listening room reflection compensation in multichannel sound reproduction

- •9.8 Microphone array for multichannel sound field signal recording

- •9.8.1 Circular microphone array for horizontal Ambisonic recording

- •9.8.2 Spherical microphone array for spatial Ambisonic recording

- •9.8.3 Discussion on microphone array recording

- •9.9 Summary

- •10.1 Basic principle and implementation of wave field synthesis

- •10.1.1 Kirchhoff–Helmholtz boundary integral and WFS

- •10.1.2 Simplification of the types of secondary sources

- •10.1.3 WFS in a horizontal plane with a linear array of secondary sources

- •10.1.4 Finite secondary source array and effect of spatial truncation

- •10.1.5 Discrete secondary source array and spatial aliasing

- •10.1.6 Some issues and related problems on WFS implementation

- •10.2 General theory of WFS

- •10.2.1 Green’s function of Helmholtz equation

- •10.2.2 General theory of three-dimensional WFS

- •10.2.3 General theory of two-dimensional WFS

- •10.2.4 Focused source in WFS

- •10.3 Analysis of WFS in the spatial-spectral domain

- •10.3.1 General formulation and analysis of WFS in the spatial-spectral domain

- •10.3.2 Analysis of the spatial aliasing in WFS

- •10.3.3 Spatial-spectral division method of WFS

- •10.4 Further discussion on sound field reconstruction

- •10.4.1 Comparison among various methods of sound field reconstruction

- •10.4.2 Further analysis of the relationship between acoustical holography and sound field reconstruction

- •10.4.3 Further analysis of the relationship between acoustical holography and Ambisonics

- •10.4.4 Comparison between WFS and Ambisonics

- •10.5 Equalization of WFS under nonideal conditions

- •10.6 Summary

- •11.1 Basic principles of binaural reproduction and virtual auditory display

- •11.1.1 Binaural recording and reproduction

- •11.1.2 Virtual auditory display

- •11.2 Acquisition of HRTFs

- •11.2.1 HRTF measurement

- •11.2.2 HRTF calculation

- •11.2.3 HRTF customization

- •11.3 Basic physical features of HRTFs

- •11.3.1 Time-domain features of far-field HRIRs

- •11.3.2 Frequency domain features of far-field HRTFs

- •11.3.3 Features of near-field HRTFs

- •11.4 HRTF-based filters for binaural synthesis

- •11.5 Spatial interpolation and decomposition of HRTFs

- •11.5.1 Directional interpolation of HRTFs

- •11.5.2 Spatial basis function decomposition and spatial sampling theorem of HRTFs

- •11.5.3 HRTF spatial interpolation and signal mixing for multichannel sound

- •11.5.4 Spectral shape basis function decomposition of HRTFs

- •11.6 Simplification of signal processing for binaural synthesis

- •11.6.1 Virtual loudspeaker-based algorithms

- •11.6.2 Basis function decomposition-based algorithms

- •11.7.1 Principle of headphone equalization

- •11.7.2 Some problems with binaural reproduction and VAD

- •11.8 Binaural reproduction through loudspeakers

- •11.8.1 Basic principle of binaural reproduction through loudspeakers

- •11.8.2 Virtual source distribution in two-front loudspeaker reproduction

- •11.8.3 Head movement and stability of virtual sources in Transaural reproduction

- •11.8.4 Timbre coloration and equalization in transaural reproduction

- •11.9 Virtual reproduction of stereophonic and multichannel surround sound

- •11.9.1 Binaural reproduction of stereophonic and multichannel sound through headphones

- •11.9.2 Stereophonic expansion and enhancement

- •11.9.3 Virtual reproduction of multichannel sound through loudspeakers

- •11.10.1 Binaural room modeling

- •11.10.2 Dynamic virtual auditory environments system

- •11.11 Summary

- •12.1 Physical analysis of binaural pressures in summing virtual source and auditory events

- •12.1.1 Evaluation of binaural pressures and localization cues

- •12.1.2 Method for summing localization analysis

- •12.1.3 Binaural pressure analysis of stereophonic and multichannel sound with amplitude panning

- •12.1.4 Analysis of summing localization with interchannel time difference

- •12.1.5 Analysis of summing localization at the off-central listening position

- •12.1.6 Analysis of interchannel correlation and spatial auditory sensations

- •12.2 Binaural auditory models and analysis of spatial sound reproduction

- •12.2.1 Analysis of lateral localization by using auditory models

- •12.2.2 Analysis of front-back and vertical localization by using a binaural auditory model

- •12.2.3 Binaural loudness models and analysis of the timbre of spatial sound reproduction

- •12.3 Binaural measurement system for assessing spatial sound reproduction

- •12.4 Summary

- •13.1 Analog audio storage and transmission

- •13.1.1 45°/45° Disk recording system

- •13.1.2 Analog magnetic tape audio recorder

- •13.1.3 Analog stereo broadcasting

- •13.2 Basic concepts of digital audio storage and transmission

- •13.3 Quantization noise and shaping

- •13.3.1 Signal-to-quantization noise ratio

- •13.3.2 Quantization noise shaping and 1-Bit DSD coding

- •13.4 Basic principle of digital audio compression and coding

- •13.4.1 Outline of digital audio compression and coding

- •13.4.2 Adaptive differential pulse-code modulation

- •13.4.3 Perceptual audio coding in the time-frequency domain

- •13.4.4 Vector quantization

- •13.4.5 Spatial audio coding

- •13.4.6 Spectral band replication

- •13.4.7 Entropy coding

- •13.4.8 Object-based audio coding

- •13.5 MPEG series of audio coding techniques and standards

- •13.5.1 MPEG-1 audio coding technique

- •13.5.2 MPEG-2 BC audio coding

- •13.5.3 MPEG-2 advanced audio coding

- •13.5.4 MPEG-4 audio coding

- •13.5.5 MPEG parametric coding of multichannel sound and unified speech and audio coding

- •13.5.6 MPEG-H 3D audio

- •13.6 Dolby series of coding techniques

- •13.6.1 Dolby digital coding technique

- •13.6.2 Some advanced Dolby coding techniques

- •13.7 DTS series of coding technique

- •13.8 MLP lossless coding technique

- •13.9 ATRAC technique

- •13.10 Audio video coding standard

- •13.11 Optical disks for audio storage

- •13.11.1 Structure, principle, and classification of optical disks

- •13.11.2 CD family and its audio formats

- •13.11.3 DVD family and its audio formats

- •13.11.4 SACD and its audio formats

- •13.11.5 BD and its audio formats

- •13.12 Digital radio and television broadcasting

- •13.12.1 Outline of digital radio and television broadcasting

- •13.12.2 Eureka-147 digital audio broadcasting

- •13.12.3 Digital radio mondiale

- •13.12.4 In-band on-channel digital audio broadcasting

- •13.12.5 Audio for digital television

- •13.13 Audio storage and transmission by personal computer

- •13.14 Summary

- •14.1 Outline of acoustic conditions and requirements for spatial sound intended for domestic reproduction

- •14.2 Acoustic consideration and design of listening rooms

- •14.3 Arrangement and characteristics of loudspeakers

- •14.3.1 Arrangement of the main loudspeakers in listening rooms

- •14.3.2 Characteristics of the main loudspeakers

- •14.3.3 Bass management and arrangement of subwoofers

- •14.4 Signal and listening level alignment

- •14.5 Standards and guidance for conditions of spatial sound reproduction

- •14.6 Headphones and binaural monitors of spatial sound reproduction

- •14.7 Acoustic conditions for cinema sound reproduction and monitoring

- •14.8 Summary

- •15.1 Outline of psychoacoustic and subjective assessment experiments

- •15.2 Contents and attributes for spatial sound assessment

- •15.3 Auditory comparison and discrimination experiment

- •15.3.1 Paradigms of auditory comparison and discrimination experiment

- •15.3.2 Examples of auditory comparison and discrimination experiment

- •15.4 Subjective assessment of small impairments in spatial sound systems

- •15.5 Subjective assessment of a spatial sound system with intermediate quality

- •15.6 Virtual source localization experiment

- •15.6.1 Basic methods for virtual source localization experiments

- •15.6.2 Preliminary analysis of the results of virtual source localization experiments

- •15.6.3 Some results of virtual source localization experiments

- •15.7 Summary

- •16.1.1 Application to commercial cinema and related problems

- •16.1.2 Applications to domestic reproduction and related problems

- •16.1.3 Applications to automobile audio

- •16.2.1 Applications to virtual reality

- •16.2.2 Applications to communication and information systems

- •16.2.3 Applications to multimedia

- •16.2.4 Applications to mobile and handheld devices

- •16.3 Applications to the scientific experiments of spatial hearing and psychoacoustics

- •16.4 Applications to sound field auralization

- •16.4.1 Auralization in room acoustics

- •16.4.2 Other applications of auralization technique

- •16.5 Applications to clinical medicine

- •16.6 Summary

- •References

- •Index

42 Spatial Sound

the incident sound except the first one. Hence, the resonance model supports the idea that the spectral cue provided by the pinna is a directional localization cue.

Numerous psychoacoustic experiments have been devoted to exploring the localization cue encoded in spectral features. However, no general quantitative relationship between spectral features and sound source positions has been found because of the complexity and individuality of the shape and dimension of the pinna and the head. Blauert (1997) used narrow-band noise to investigate directional localization in the median plane. Experimental results show that the perceived position of a sound source is determined by the directional frequency band in the ear canal pressures regardless of the real sound source position; that is, the perceived position of a sound source is always located in specific directions, where the frequency of the spectral peak in the ear canal pressure caused by a wide-band sound coincides with the center frequency of the narrow-band noise. Hence, peaks in the ear canal pressure caused by the head and the pinna are important in localization (Middlebrooks et al., 1989).

However, some researchers argued that the spectral notch, especially the lowest frequency notch caused by the pinna (called the pinna notch), is more important for localization in the median plane and even for vertical localization outside the median plane (Hebrank and Wright, 1974; Butler and Belendiuk, 1977; Bloom, 1977; Kulkarni, 1997; Han, 1994). In front of the median plane, the center frequency of the pinna notch varies with elevation in the range of 5 or 6 kHz to about 12 or 13 kHz. This variation arises because incident sound arriving from different elevations interacts with different parts of the pinna, so that different diffraction and reflection delays occur relative to the direct sound. Thus, the shift in notch frequency provides vertical localization information. Moore et al. (1989) found that a shift in the center frequency of a very narrow notch can be easily perceived, although hearing is usually more sensitive to spectral peaks.
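The link between reflection delay and notch frequency can be illustrated with a deliberately simplified model. The sketch below assumes a single pinna reflection of equal amplitude added to the direct sound (a two-path comb filter), which is an assumption made here for illustration and not a model given in the text. The delay values are hypothetical, chosen so that the first notch falls in the 5 to 13 kHz range quoted above.

```python
import math

def first_notch_frequency_hz(delay_s: float) -> float:
    """First notch of direct sound plus one reflection delayed by delay_s.

    Cancellation occurs when the reflection lags by half a period,
    i.e. 2*pi*f*delay = pi, so f = 1 / (2 * delay).
    """
    return 1.0 / (2.0 * delay_s)

def comb_magnitude(freq_hz: float, delay_s: float, reflection_gain: float = 1.0) -> float:
    """Magnitude of H(f) = 1 + g * exp(-j*2*pi*f*delay) (assumed two-path model)."""
    phase = 2.0 * math.pi * freq_hz * delay_s
    real = 1.0 + reflection_gain * math.cos(phase)
    imag = -reflection_gain * math.sin(phase)
    return math.hypot(real, imag)

# Hypothetical reflection delays in microseconds (not measured values).
for delay_us in (100.0, 60.0, 40.0):
    tau = delay_us * 1e-6
    f0 = first_notch_frequency_hz(tau)
    print(f"delay {delay_us:5.1f} us -> first notch near {f0 / 1000.0:.1f} kHz")
```

In this toy model, a shorter reflection delay moves the notch to a higher frequency, which is one way to picture how the notch can shift with elevation. Real pinna responses involve several reflections and diffraction, so the numbers are indicative only.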

Other researchers contended that both peaks and notches (Watkins, 1978) or spectral profiles are important in localization (Middlebrooks, 1992). Algazi et al. (2001b) suggested that the change in the ipsilateral spectra below 3 kHz caused by the scattering and reflection of the torso, especially the shoulder, provides vertical localization information for a sound source outside the median plane.

In brief, the spectral feature caused by diffraction and reflection from anatomical structures, such as the head and pinna, is an important and individualized localization cue. Although a clear quantitative relationship between the spectral feature and the direction of a sound source is far from established, listeners can use their own spectral features to localize a sound source.

1.6.5 Discussion on directional localization cues

In summary, directional localization cues can be classified as follows:

1. For frequencies approximately below 1.5 kHz, the ITD derived from ITDp is the dominant cue for lateral localization.

2. Above the frequency of 1.5 kHz, ILD and ITD derived from the interaural envelope delay difference (ITDe) contribute to lateral localization. As frequency increases (approximately above 4–5 kHz), ILD gradually becomes dominant.

3. A spectral cue is important for localization. In particular, above frequencies of 5–6 kHz, a spectral cue introduced by the pinna is essential for the vertical localization and disambiguation of front–back confusion.

4. The dynamic cue introduced by the slight turning of the head is helpful in resolving front–back ambiguity and vertical localization.

Sound field, spatial hearing, and sound reproduction 43

The aforementioned directional localization cues except the dynamic cue can be evaluated from HRTFs. Therefore, HRTFs include the major directional localization cues. These localization cues are individually dependent because of the unique characteristics of anatomical structures and dimensions. The auditory system determines the position of a sound source based on a comparison between the obtained cues and patterns stored from prior experiences. However, even for the same individual, anatomical structures and dimensions vary with time, especially from childhood into adulthood albeit slowly. Therefore, a comparison with prior experiences may be a self-adaptive process, and the high-level neural system can automatically modify stored patterns by using auditory experiences.

Different kinds of localization cues work in different frequency ranges and contribute differently to localization. For sinusoidal or narrow-band stimuli, only the localization cues existing in the frequency range of the stimuli are available; hence, the resultant localization accuracy is likely to be frequency dependent. Mills (1958) investigated localization accuracy in the horizontal plane by using sinusoidal stimuli. He showed that localization accuracy is frequency dependent, and the highest accuracy is ΔθS = 1° in front of the horizontal plane (θS = 0°) at frequencies below 1 kHz. With an average head radius a of 0.0875 m, the corresponding variation in ITD evaluated from Equation (1.6.1) is about 10 μs, and the variation in the low-frequency interaural phase delay difference evaluated from Equation (1.6.4) is about 15 μs. This finding is consistent with the average value of the just noticeable difference in ITD derived from psychoacoustic experiments (Blauert, 1997; Moore, 2012). Conversely, localization accuracy is the poorest around the frequency of 1.5–1.8 kHz, which is the range of difficult or ambiguous localization. This may be because the cue of ITDp becomes invalid within this frequency range, ITDe is a relatively weak localization cue, and the cue of ILD only begins to work and does not vary significantly with direction within this frequency range.
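The ITD figures quoted for a 1° change in azimuth can be checked numerically. Equations (1.6.1) and (1.6.4) are not reproduced in this section, so the sketch below assumes they take the common forms ITD = (a/c)(θ + sin θ) (a Woodworth-type rigid-sphere formula) and ITDp = (3a/c) sin θ (the low-frequency phase delay limit). Both forms, and the speed of sound c = 343 m/s, are assumptions made here.

```python
import math

HEAD_RADIUS_M = 0.0875      # average head radius a, from the text
SPEED_OF_SOUND_M_S = 343.0  # assumed value for air at room temperature

def itd_woodworth_s(theta_rad: float) -> float:
    """Assumed form of Equation (1.6.1): (a/c) * (theta + sin(theta))."""
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta_rad + math.sin(theta_rad))

def itd_low_freq_phase_s(theta_rad: float) -> float:
    """Assumed form of Equation (1.6.4): (3a/c) * sin(theta)."""
    return (3.0 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * math.sin(theta_rad)

# Change in ITD when the source moves from 0 deg (front) to 1 deg azimuth.
delta_theta = math.radians(1.0)
delta_itd = itd_woodworth_s(delta_theta) - itd_woodworth_s(0.0)
delta_itd_p = itd_low_freq_phase_s(delta_theta) - itd_low_freq_phase_s(0.0)

print(f"ITD change (assumed Eq. 1.6.1 form):  {delta_itd * 1e6:.1f} us")
print(f"ITDp change (assumed Eq. 1.6.4 form): {delta_itd_p * 1e6:.1f} us")
```

Under these assumptions the two changes come out at roughly 9 μs and 13 μs, the same order as the approximate values of 10 μs and 15 μs quoted above.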

In general, when more localization cues are present in the sound signals at the ears, the localization of the sound source position is more accurate because the high-level neural system can simultaneously use multiple cues. This fact accounts for numerous phenomena. For example, (1) the accuracy of binaural localization is much better than that of monaural localization; (2) the accuracy of localization with a mobile head is usually better than that with an immobile one; and (3) the accuracy of localization for a wide-band stimulus is usually better than that for a narrow-band stimulus, especially when the stimulus contains components above 6 kHz, which can improve accuracy in vertical localization. Even when some cues are absent, the auditory system can localize the sound source because the information provided by multiple localization cues may be somewhat redundant. For example, high-frequency spectral cues and dynamic cues both contribute to vertical localization. When one cue is eliminated, the other cue alone still enables vertical localization to some extent (Jiang et al., 2019).

Under some situations, when some cues conflict with others, the auditory system appears to identify the source position according to the more consistent cues. This phenomenon indicates that the high-level neural system can correct errors in localization information. Wightman and Kistler (1992) performed psychoacoustic experiments and showed that ITD is dominant as long as the wide-band stimuli include low frequencies, regardless of conflicting ILD. However, if too many conflicts or losses exist in localization cues, the accuracy and quality of localization are likely to be degraded, split virtual sources may be perceived, or localization may even become impossible, as shown by a number of experiments. For example, when a dynamic cue at low frequencies conflicts with a spectral cue at high frequencies, the front-back accuracy is degraded. Sometimes, one cue may dominate localization, or two conflicting cues may yield two split virtual sources at different frequency ranges (Pöntynen et al., 2016). These outcomes depend on the characteristics of signals, especially the power spectra of signals (Macpherson, 2011, 2013; Brimijoin and Akeroyd, 2012). These results are applicable to spatial sound reproduction. Various practical spatial sound techniques are unable to reproduce the spatial information of a sound field within the full audible frequency range because they are limited by the complexity of the system. Practical spatial sound techniques can create the desired perceived effects to some extent provided that they can reproduce the dominant spatial cues.

Aside from acoustic cues, visual cues dramatically affect sound localization. The human auditory system tends to localize sound from a visible source position. For example, in watching television, the sound usually appears to come from the screen, although it actually comes from loudspeakers. However, an unnatural perception may occur when the discrepancy between the visual and auditory location is too large, such as when a loudspeaker is positioned behind a television audience. This result is important for spatial sound reproduction with an accompanying picture (Chapters 3 and 5). This phenomenon further indicates that sound source localization is a consequence of a comprehensive processing of a variety of information received by the high-level neural system.

As discussed in Sections 1.3.2 and 1.3.3, directional loudness and spatial unmasking are related to binaural cues. After being scattered and diffracted by anatomical structures, such as the head and pinnae, sound waves are received by the two ears, and the sound pressures at the eardrums depend on the source direction. Most variations in subjective loudness with the sound source direction can be analyzed in terms of HRTFs (Sivonen and Ellermeier, 2008).

Spatial unmasking can be partially interpreted in terms of the source-position dependence of HRTFs (Kopčo and Shinn-Cunningham, 2003). Other cues also provide information for spatial unmasking. Suppose that the bandwidth of a masker is less than that of an auditory filter and that the target is a pure tone whose frequency is within the bandwidth of the masker. At a specific frequency, when the positions of the masker and the target are spatially coincident, the diffraction by anatomical structures, such as the head, is the same for the masker and target sounds. Accordingly, a certain target-to-masker sound pressure ratio (target-to-masker ratio) exists for each ear. When the masker and the target are spatially separated, the diffractions imposed on their sounds differ from each other, thereby potentially increasing the target-to-masker ratio at one ear (called the better ear). The auditory system can detect the target with the information provided by the better ear, and the masking threshold therefore decreases. Each ear’s target-to-masker ratio, which is related to the conditions of the target and the masker (i.e., intensity, frequency, and spatial position), can be evaluated in terms of HRTFs.
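The better-ear argument can be made concrete with a small calculation at a single frequency. The sketch below treats each HRTF as a plain magnitude gain in dB per ear. The function names and every gain value are hypothetical, chosen only to illustrate the bookkeeping, and are not measured HRTF data.

```python
def target_to_masker_ratio_db(target_level_db, masker_level_db,
                              hrtf_gain_target_db, hrtf_gain_masker_db):
    """Target-to-masker ratio at one ear, with HRTF magnitudes as dB gains."""
    return (target_level_db + hrtf_gain_target_db) - (masker_level_db + hrtf_gain_masker_db)

def per_ear_tmr_db(target_level_db, masker_level_db, gains_target_db, gains_masker_db):
    """Return (left, right) ratios; gains_* are (left, right) HRTF gains in dB."""
    return tuple(
        target_to_masker_ratio_db(target_level_db, masker_level_db, g_t, g_m)
        for g_t, g_m in zip(gains_target_db, gains_masker_db)
    )

# Hypothetical HRTF magnitude gains in dB at one frequency, as (left, right).
FRONT = (0.0, 0.0)    # source straight ahead
RIGHT = (-6.0, 4.0)   # source to the right: left ear shadowed, right ear boosted

# Target and masker both in front: identical diffraction, same ratio at both ears.
coincident = per_ear_tmr_db(60.0, 60.0, FRONT, FRONT)

# Target in front, masker moved to the right: the left ear becomes the better ear.
separated = per_ear_tmr_db(60.0, 60.0, FRONT, RIGHT)

print("coincident (L, R):", coincident)
print("separated  (L, R):", separated, "better-ear ratio:", max(separated))
```

With these illustrative numbers, separating the masker raises the ratio at the shadowed ear and lowers it at the other, so only one ear benefits, which is the situation the paragraph describes.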

1.6.6 Auditory distance perception

Although the ability of the human auditory system to estimate the sound source distance is generally poorer than the ability to locate sound source direction, a preliminary but biased auditory distance perception can still be formed. Experiments have demonstrated that the auditory system tends to significantly underestimate distances to distant sound sources with the physical source distance farther than a rough average of 1.6 m and typically overestimates distances to nearby sound sources with the physical source distance less than a rough average of 1.6 m. This finding suggests that the perceived source distance is not always identical to the physical one. Zahorik (2002a) examined experimental data from a variety of studies and found that the relationship between the perceived distance rI and the physical distance rS can be well approximated with a compressive power function by using a linear fit method:

$$r_I = \kappa\, r_S^{\alpha} \tag{1.6.9}$$


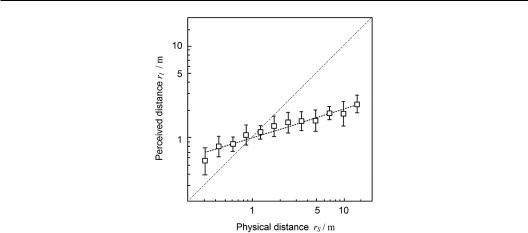

Figure 1.24 Relationship between rI and rS obtained using a linear fit for a typical subject, with α = 0.32 and κ = 1.00 (Zahorik, 2002b, with the permission of Zahorik P.).

where κ is a constant whose average is slightly greater than 1 (approximately 1.32), and α is a power-law exponent whose value is influenced by various factors, such as experimental conditions and subjects, so that it varies over a wide range with a rough average of 0.4. In logarithmic coordinates, the relationship between rI and rS is represented by straight lines with various slopes; among them, a straight line through the origin with a slope of 1 means that rI is identical to rS, i.e., the case of unbiased distance estimation. Figure 1.24 shows the relationship between rI and rS obtained using a linear fit for a typical subject (Zahorik, 2002b).
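The compressive power function also explains where overestimation turns into underestimation. The sketch below uses the rough average values quoted in the text (a constant of about 1.32 and an exponent of about 0.4) and solves for the distance at which perceived and physical distance coincide. The function names are illustrative choices.

```python
def perceived_distance_m(r_s: float, kappa: float = 1.32, alpha: float = 0.4) -> float:
    """Perceived distance from the power-law fit: kappa * r_s ** alpha."""
    return kappa * r_s ** alpha

def crossover_distance_m(kappa: float = 1.32, alpha: float = 0.4) -> float:
    """Distance where perceived equals physical distance.

    Setting r = kappa * r**alpha gives r**(1 - alpha) = kappa,
    so r = kappa ** (1 / (1 - alpha)).
    """
    return kappa ** (1.0 / (1.0 - alpha))

r_cross = crossover_distance_m()
print(f"crossover distance: {r_cross:.2f} m")

# Below the crossover the fit overestimates; above it the fit underestimates.
for r_s in (0.5, 1.0, 5.0, 10.0):
    print(f"physical {r_s:5.1f} m -> perceived {perceived_distance_m(r_s):.2f} m")
```

With these average parameters the crossover lands close to 1.6 m, which matches the rough boundary between overestimated nearby sources and underestimated distant sources described earlier in this subsection.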

Auditory distance perception, which was thoroughly reviewed by Zahorik et al. (2005), is a complex and comprehensive process based on multiple cues. Subjective loudness has been considered an effective cue to distance perception. Generally, loudness is closely related to sound pressure or intensity at a listener’s position; usually, strong sound pressure results in high loudness. In a free field, the sound pressure generated by a point sound source with constant power is inversely proportional to the distance between the sound source and the receiver (the 1/r law); that is, the SPL decreases by 6 dB for each doubling of the source distance. As a result, a close distance corresponds to a high sound pressure and consequently high loudness. As such, loudness becomes a cue for distance estimation. However, the 1/r law only applies to the free field and does not hold in reflective environments. Moreover, the sound pressure and loudness at a listener’s position depend on source properties, such as radiated power. Prior knowledge of sound sources or stimuli also influences the performance of distance estimation when loudness-based cues are used. In general, loudness is regarded as a relative distance cue unless the listener is highly familiar with the pressure level of the sound source.
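The 6 dB figure follows directly from the 1/r law, since a pressure ratio of 1/2 corresponds to 20 log10(1/2) ≈ −6.02 dB. A minimal sketch of this free-field relation is given below. The function name and the reference distance are illustrative choices.

```python
import math

def spl_change_db(r_ref_m: float, r_m: float) -> float:
    """Free-field SPL change of a point source when moving from r_ref_m to r_m.

    Pressure is proportional to 1/r, so the level change is -20*log10(r / r_ref).
    This holds only in a free field, not in reflective environments.
    """
    return -20.0 * math.log10(r_m / r_ref_m)

# Each doubling of distance lowers the level by about 6 dB.
for r in (1.0, 2.0, 4.0, 8.0):
    print(f"{r:4.1f} m: {spl_change_db(1.0, r):+.2f} dB relative to 1 m")
```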

The high-frequency attenuation caused by air absorption may be another cue for auditory distance perception. For a far sound source, air absorption acts as a low-pass filter and thereby modifies the spectra of sound pressures at the receiving position. This effect is important only for an extremely far sound source and is negligible in an ordinary-sized room. Moreover, prior knowledge of the sound source may influence the performance of distance estimation when high-frequency attenuation-based cues are used. In general, high-frequency attenuation provides weak information for relative distance perception.

Some studies have demonstrated that the effects of acoustic diffraction and shadowing by the head provide information on evaluating distance for nearby sound sources (Brungart and Rabinowitz, 1999; Brungart et al., 1999; Brungart, 1999). In Section 1.6.2, ILD is nearly