- Validity and reliability

- What is Reliability?

- What is Validity?

- Conclusion

- What is External Validity?

- Psychology and External Validity - The Battle Lines are Drawn

- Randomization in External Validity and Internal Validity

- Work Cited

- What is Internal Validity?

- Internal Validity vs Construct Validity

- How to Maintain High Confidence in Internal Validity?

- Temporal Precedence

- Establishing Causality through a Process of Elimination

- Internal Validity - the Final Word

- How is Content Validity Measured?

- An Example of Low Content Validity

- Face Validity - Some Examples

- If Face Validity is so Weak, Why is it Used?

- Bibliography

- What is Construct Validity?

- How to Measure Construct Variability?

- Threats to Construct Validity

- Hypothesis Guessing

- Evaluation Apprehension

- Researcher Expectancies and Bias

- Poor Construct Definition

- Construct Confounding

- Interaction of Different Treatments

- Unreliable Scores

- Mono-Operation Bias

- Mono-Method Bias

- Don't Panic

- Bibliography

- Criterion Validity

- Content Validity

- Construct Validity

- Tradition and Test Validity

- Which Measure of Test Validity Should I Use?

- Works Cited

- An Example of Criterion Validity in Action

- Criterion Validity in Real Life - The Million Dollar Question

- Coca-Cola - The Cost of Neglecting Criterion Validity

- Concurrent Validity - a Question of Timing

- An Example of Concurrent Validity

- The Weaknesses of Concurrent Validity

- Bibliography

- Predictive Validity and University Selection

- Weaknesses of Predictive Validity

- Reliability and Science

- Reliability and Cold Fusion

- Reliability and Statistics

- The Definition of Reliability Vs. Validity

- The Definition of Reliability - An Example

- Testing Reliability for Social Sciences and Education

- Test - Retest Method

- Internal Consistency

- Reliability - One of the Foundations of Science

- Test-Retest Reliability and the Ravages of Time

- Inter-rater Reliability

- Interrater Reliability and the Olympics

- An Example From Experience

- Qualitative Assessments and Interrater Reliability

- Guidelines and Experience

- Bibliography

- Internal Consistency Reliability

- Split-Halves Test

- Kuder-Richardson Test

- Cronbach's Alpha Test

- Summary

- Instrument Reliability

- Instruments in Research

- Test of Stability

- Test of Equivalence

- Test of Internal Consistency

- Reproducibility vs. Repeatability

- The Process of Replicating Research

- Reproducibility and Generalization - a Cautious Approach

- Reproducibility is not Essential

- Reproducibility - An Impossible Ideal?

- Reproducibility and Specificity - a Geological Example

- Reproducibility and Archaeology - The Absurdity of Creationism

- Bibliography

- Type I Error

- Type II Error

- Hypothesis Testing

- Reason for Errors

- Type I Error - Type II Error

- How Does This Translate to Science Type I Error

- Type II Error

- Replication

- Type III Errors

- Conclusion

- Examples of the Null Hypothesis

- Significance Tests

- Perceived Problems With the Null

- Development of the Null

Randomization in External Validity and Internal Validity

It is also important to distinguish between external and internal validity, especially where randomization is concerned, because the term covers two different procedures that are easily confused. Random selection is an important tenet of external validity.

For example, a research design that sends survey questionnaires to students picked at random displays more external validity than one in which the questionnaires are given to friends. This is randomization to improve external validity.

Once you have a representative sample, high internal validity involves randomly assigning subjects to groups, rather than using pre-determined selection factors.

With the student example, randomly assigning the students to test groups, rather than picking pre-determined groups based upon degree type, gender, or age, strengthens the internal validity.
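The two uses of randomization can be kept apart in a short sketch. The Python below is a minimal illustration, not a prescribed procedure: the roster of 1,000 students, the sample size of 40, the group names, the seeds, and the helper functions `select_random_sample` and `assign_to_groups` are all assumptions made for the example.

```python
import random


def select_random_sample(population, sample_size, seed=None):
    """Random selection: every member has an equal chance of being picked.

    This step supports external validity.
    """
    rng = random.Random(seed)
    return rng.sample(population, sample_size)


def assign_to_groups(subjects, group_names, seed=None):
    """Random assignment: shuffle the subjects, then deal them out in turn.

    This step supports internal validity, because group membership cannot
    depend on degree type, gender, age, or any other pre-determined factor.
    """
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for index, subject in enumerate(shuffled):
        groups[group_names[index % len(group_names)]].append(subject)
    return groups


# Hypothetical roster of students (illustrative data only).
students = [f"student_{i}" for i in range(1000)]

sample = select_random_sample(students, 40, seed=1)
groups = assign_to_groups(sample, ["treatment", "control"], seed=2)

for name, members in groups.items():
    print(name, len(members))
```

Keeping the two steps in separate functions mirrors the distinction in the text: the first decides who takes part in the study, the second decides which condition each participant receives.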

Work Cited

Campbell, D.T., & Stanley, J.C. (1966). Experimental and Quasi-Experimental Designs for Research. Skokie, IL: Rand McNally.

Population Validity

Population validity is a type of external validity which describes how well the results obtained from a sample can be extrapolated to the population as a whole.

It evaluates whether the sample population represents the entire population, and also whether the sampling method is acceptable.

For example, an educational study that looked at a single school could not be generalized to cover children at every US school.

On the other hand, a federally commissioned study that tested every pupil of a certain age group would have exceptionally strong population validity.

Due to time and cost constraints, most studies lie somewhere between these two extremes, so researchers pay close attention to their sampling techniques.

Experienced scientists ensure that their sample groups are as representative as possible, striving to use random selection rather than convenience sampling.
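The gap between random selection and convenience sampling can be illustrated with a small simulation. This is a sketch on synthetic data, not a result from any real study: the five schools, their average scores, the spread of 5 points, the sample size of 100, and the seed are all assumptions chosen for illustration.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed (assumption) so the run is repeatable

# Hypothetical population: five schools whose pupils differ in average score.
school_means = [50, 60, 70, 80, 90]
population = [
    (school, rng.gauss(school_mean, 5))
    for school, school_mean in enumerate(school_means)
    for _ in range(200)
]
population_mean = mean(score for _, score in population)

# Convenience sample: 100 pupils, all taken from the single easiest school to reach.
school_zero = [score for school, score in population if school == 0]
convenience_sample = rng.sample(school_zero, 100)

# Random sample: 100 pupils drawn from the whole population.
random_sample = [score for _, score in rng.sample(population, 100)]

# How far each sample mean lies from the true population mean.
convenience_error = abs(mean(convenience_sample) - population_mean)
random_error = abs(mean(random_sample) - population_mean)

print(f"population mean:         {population_mean:.1f}")
print(f"convenience sample error: {convenience_error:.1f}")
print(f"random sample error:      {random_error:.1f}")
```

In this setup the single-school sample inherits that school's average, so its estimate sits far from the population mean, while the random sample typically lands close to it.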

Ecological Validity

Ecological validity is a type of external validity which looks at the testing environment and determines how much it influences behavior.

In the school test example, if the pupils are used to regular testing, then the ecological validity is high because the testing process is unlikely to affect behavior.

On the other hand, taking each child out of class and testing them individually, in an isolated room, will dramatically lower ecological validity. The child may be nervous and ill at ease, and is unlikely to perform in the same way as they would in a classroom.

Generalization becomes difficult, as the experiment does not resemble the real-world situation.

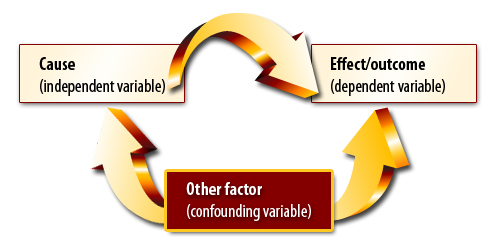

Internal validity is a crucial measure in quantitative studies, where it ensures that a researcher's experimental design closely follows the principle of cause and effect.

Internal validity is an important consideration in most scientific disciplines, especially the social sciences.

What is Internal Validity?

The easiest way to describe internal validity is as the confidence that we can place in the cause-and-effect relationship in a study. The key question that you should ask in any experiment is:

“Could there be an alternative cause, or causes, that explain my observations and results?”

Looking at some extreme examples, a physics experiment into the effect of heat on the conductivity of a metal has a high internal validity.

The researcher can eliminate almost all of the potential confounding variables and set up strong controls to isolate other factors.

At the other end of the scale, a study into the correlation between income level and the likelihood of smoking has a far lower internal validity.

A researcher may find that there is a link between low-income groups and smoking, but cannot be certain that one causes the other.

Social status, profession, ethnicity, education, parental smoking, and exposure to targeted advertising are all variables that may have an effect. They are difficult to eliminate, and social research can be a statistical minefield for the unwary.
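The income and smoking example can be made concrete with a small simulation of a confounding variable. This is a sketch on synthetic data under stated assumptions: education is the only confounder, income has no direct effect on smoking in the simulated world, and every probability, income figure, and name (`pearson`, `people`, `within`) is invented for illustration.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)  # fixed seed (assumption) so the run is repeatable

# Synthetic people: education raises income AND lowers the chance of smoking.
# Income itself never enters the smoking calculation.
people = []
for _ in range(5000):
    educated = rng.random() < 0.5
    income = rng.gauss(60 if educated else 30, 8)  # in thousands, hypothetical
    smokes = 1 if rng.random() < (0.1 if educated else 0.4) else 0
    people.append((educated, income, smokes))

# Correlation across everyone: income appears linked to smoking.
overall = pearson([p[1] for p in people], [p[2] for p in people])

# Correlation within each education level: the apparent link fades.
within = {}
for level in (True, False):
    stratum = [p for p in people if p[0] == level]
    within[level] = pearson([p[1] for p in stratum], [p[2] for p in stratum])

print(f"overall correlation:        {overall:.2f}")
print(f"within educated group:      {within[True]:.2f}")
print(f"within non-educated group:  {within[False]:.2f}")
```

The overall correlation is clearly negative even though income has no causal role here, which is exactly the alternative explanation that the key question asks a researcher to rule out. Real social data rarely allows the confounder to be isolated this cleanly.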