- 1. Introduction to computer architecture. Organizational and methodical instructions.

- 2. Interrupts. Interrupts and the Instruction Cycle. Interrupt Processing. Multiprogramming.

- 3. Introduction to operating systems (OS), general architecture and organization.

- Introduction to OS organization.

- 4. Major Achievements of OS: the Process; Memory Management; Information Protection and Security; Scheduling and Resource Management; System Structure.

- 5. Process Description and Control.

- 6. Process States. Process Models. The Creation and Termination of Processes. Suspended Processes.

- 7. Process Description. Operating System Control Structures. Process Switching.

- 8. Memory Management.

- 10. Memory Management Requirements. Relocation. Protection. Sharing.

- 11. Memory Partitioning. Relocation.

- 12. Virtual Memory. Paging. Segmentation. Combined Paging and Segmentation.

- 13. Replacement Policy. First-Come-First-Served, Round-Robin algorithms.

- 14. Replacement Policy. Shortest Process Next, Shortest Remaining Time algorithms.

- 15. File Organization and Access.

- 16. Secondary Storage Management.

- 17. Short history of Windows, general architecture, software configuration, registry, main administration tools; the boot process.

- 18. Mutual Exclusion and Synchronization.

- 19. Programming tools for mutual exclusion: locks, semaphores.

- 20. Deadlock: general principle, synchronization by events, monitors, the producer/consumer example, the dining philosophers' problem.

- 21. Disk scheduling. RAID arrays.

- 22. Input-output management.

- 23. Page replacement algorithms.

- 24. Mutual exclusion and synchronization.

- 25. Overview of computer hardware.

- 26. Uniprocessor scheduling.

- 27. Implementation of disk scheduling algorithms SSTF, SCAN.

- 28. Implementation of disk scheduling algorithm LOOK.

- 29. Implementation of LRU replacement algorithm.

- 31. Briefly describe basic types of processor scheduling. Examples.

- I/O scheduling.

- 32. What is the difference between preemptive and nonpreemptive scheduling? Examples.

28. Implementation of disk scheduling algorithm LOOK.

The default disk scheduler in Linux 2.4 is known as the Linus Elevator, which is a variation on the LOOK algorithm. For Linux 2.6, the Elevator algorithm has been augmented by two additional algorithms: the deadline I/O scheduler and the anticipatory I/O scheduler [LOVE04]. We examine each of these in turn.

The Elevator Scheduler. The elevator scheduler maintains a single queue for disk read and write requests and performs both sorting and merging functions on the queue. In general terms, the elevator scheduler keeps the list of requests sorted by block number. Thus, as the disk requests are handled, the drive moves in a single direction, satisfying each request as it is encountered. This general strategy is refined in the following manner. When a new request is added to the queue, four operations are considered in order:

1. If the request is to the same on-disk sector or an immediately adjacent sector to a pending request in the queue, then the existing request and the new request are merged into one request.

2. If a request in the queue is sufficiently old, the new request is inserted at the tail of the queue.

3. If there is a suitable location, the new request is inserted in sorted order.

4. If there is no suitable location, the new request is placed at the tail of the queue.
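
The four operations above can be sketched in a few lines of Python. This is only an illustrative model, not the Linux implementation: the `Request` class, the `add_request` function, the `max_age` threshold, and the interpretation of a "suitable location" as a gap between two neighbors that bracket the new start sector are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    start: int      # first sector covered by the request
    end: int        # one past the last sector covered
    arrival: float  # time at which the request was queued


def add_request(queue, start, count, now, max_age=5.0):
    """Add a request to `queue` (a list, head at index 0) in place.

    Returns a short string naming which of the four operations applied.
    `max_age` is a hypothetical aging threshold chosen for illustration.
    """
    new = Request(start, start + count, now)

    # 1. Merge with a pending request on the same or an adjacent sector range.
    for r in queue:
        if new.start <= r.end and r.start <= new.end:
            r.start = min(r.start, new.start)
            r.end = max(r.end, new.end)
            return "merged"

    # 2. A pending request is sufficiently old: stop sorting, append at the tail.
    if any(now - r.arrival > max_age for r in queue):
        queue.append(new)
        return "tail (aged request pending)"

    # 3. Suitable location: between two neighbors that bracket the new sector.
    for i in range(1, len(queue)):
        if queue[i - 1].start <= new.start <= queue[i].start:
            queue.insert(i, new)
            return "sorted"

    # 4. No suitable location: append at the tail.
    queue.append(new)
    return "tail"
```

For example, feeding the single-sector requests 20, 30, 700, 25 into an empty queue with this sketch leaves the queue ordered 20, 25, 30, 700, because only the last request finds a bracketing gap and the others fall through to the tail.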

Deadline Scheduler. Operation 2 in the preceding list is intended to prevent starvation of a request, but is not very effective [LOVE04]. It does not attempt to service requests in a given time frame but merely stops insertion-sorting requests after a suitable delay. Two problems manifest themselves with the elevator scheme. The first problem is that a distant block request can be delayed for a substantial time because the queue is dynamically updated. For example, consider the following stream of requests for disk blocks: 20, 30, 700, 25. The elevator scheduler reorders these so that the requests are placed in the queue as 20, 25, 30, 700, with 20 being the head of the queue. If a continuous sequence of low-numbered block requests arrives, then the request for 700 continues to be delayed.
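
The delay of the request for block 700 can be made concrete with a small simulation. The sketch below is a simplified model under stated assumptions: the queue is kept fully sorted, one new request arrives after each serviced request, and the aging rule (operation 2) is ignored. The function name `serviced_before` and the arrival pattern are hypothetical.

```python
import bisect


def serviced_before(target, pending, arrivals):
    """Count requests serviced before `target` in a purely sorted queue.

    Assumes one new request (taken from `arrivals`) is queued after each
    serviced request; this is an illustrative model, not the Linux scheduler.
    """
    queue = sorted(pending)
    arrivals = iter(arrivals)
    served = 0
    while queue:
        block = queue.pop(0)          # service the head of the sorted queue
        if block == target:
            return served
        served += 1
        nxt = next(arrivals, None)    # at most one new arrival per service
        if nxt is not None:
            bisect.insort(queue, nxt)  # keep the queue sorted by block number
    return served
```

With no further arrivals, three requests are serviced before block 700. Each additional low-numbered arrival pushes the request for 700 back by one more position, so the wait grows for as long as such requests keep coming.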

Anticipatory I/O Scheduler. The original elevator scheduler and the deadline scheduler are both designed to dispatch a new request as soon as the existing request is satisfied, thus keeping the disk as busy as possible. This same policy applies to all of the scheduling algorithms. However, such a policy can be counterproductive if there are numerous synchronous read requests. Typically, an application will wait until a read request is satisfied and the data is available before issuing the next request. The small delay between receiving the data for the last read and issuing the next read enables the scheduler to turn elsewhere for a pending request and dispatch that request.

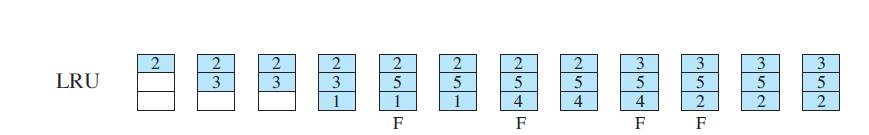

29. Implementation of LRU replacement algorithm.

The least recently used (LRU) policy replaces the page in memory that has not been referenced for the longest time. By the principle of locality, this should be the page least likely to be referenced in the near future. And, in fact, the LRU policy does nearly as well as the optimal policy. The problem with this approach is the difficulty in implementation. One approach would be to tag each page with the time of its last reference; this would have to be done at each memory reference, both instruction and data. Even if the hardware would support such a scheme, the overhead would be tremendous. Alternatively, one could maintain a stack of page references, again an expensive prospect. Although the LRU policy does nearly as well as an optimal policy, it is difficult to implement and imposes significant overhead.
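
A software simulation of LRU is straightforward even though a hardware implementation is costly. The following sketch counts page faults for a reference string; it uses Python's `collections.OrderedDict` as a stand-in for the stack of page references mentioned above. The function name `lru_page_faults` and the sample reference string are assumptions chosen for illustration.

```python
from collections import OrderedDict


def lru_page_faults(references, num_frames):
    """Return the number of page faults under LRU with `num_frames` frames."""
    frames = OrderedDict()  # resident pages, least recently used first
    faults = 0
    for page in references:
        if page in frames:
            frames.move_to_end(page)        # hit: mark as most recently used
        else:
            faults += 1
            if len(frames) == num_frames:
                frames.popitem(last=False)  # evict the least recently used page
            frames[page] = None             # load the new page
    return faults


# Illustrative reference string with three frames.
print(lru_page_faults([2, 3, 2, 1, 5, 2, 4, 5, 3, 2, 5, 2], 3))  # prints 7
```

Of the seven faults, the first three simply fill the empty frames; the remaining four each evict the page whose last reference lies furthest in the past.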

30. Replacement Policy. First-Come-First-Served, Round-Robin algorithms, q=2.

The area of replacement policy is probably the most studied of any area of memory management. When all of the frames in main memory are occupied and it is necessary to bring in a new page to satisfy a page fault, the replacement policy determines which page currently in memory is to be replaced. All of the policies have as their objective that the page that is removed should be the page least likely to be referenced in the near future. Because of the principle of locality, there is often a high correlation between recent referencing history and near-future referencing patterns. Thus, most policies try to predict future behavior on the basis of past behavior. One tradeoff that must be considered is that the more elaborate and sophisticated the replacement policy, the greater the hardware and software overhead to implement it.

First-Come-First-Served. The simplest scheduling policy is first-come-first-served (FCFS), also known as first-in-first-out (FIFO) or a strict queuing scheme. As each process becomes ready, it joins the ready queue. When the currently running process ceases to execute, the process that has been in the ready queue the longest is selected for running.
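
As a minimal sketch of FCFS, the function below computes the finish time and turnaround time (finish minus arrival) of each process. The function name `fcfs` and the five-process workload are illustrative assumptions, not data taken from this section.

```python
def fcfs(processes):
    """Schedule (name, arrival, service) tuples first-come-first-served.

    Returns {name: (finish_time, turnaround_time)}.
    """
    clock = 0
    result = {}
    # The process that has waited longest is the one that arrived earliest.
    for name, arrival, service in sorted(processes, key=lambda p: p[1]):
        clock = max(clock, arrival) + service  # idle until arrival if needed
        result[name] = (clock, clock - arrival)
    return result


# Hypothetical workload: (name, arrival time, service time).
workload = [("A", 0, 3), ("B", 2, 6), ("C", 4, 4), ("D", 6, 5), ("E", 8, 2)]
print(fcfs(workload))
```

In this workload the short process E needs only 2 time units of service but finishes at time 20 with a turnaround of 12, which illustrates the penalty that short jobs suffer behind long ones under FCFS.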

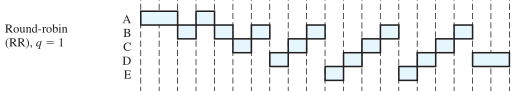

FCFS is not an attractive alternative on its own for a uniprocessor system. However, it is often combined with a priority scheme to provide an effective scheduler. Thus, the scheduler may maintain a number of queues, one for each priority level, and dispatch within each queue on a first-come-first-served basis.

Round Robin. A straightforward way to reduce the penalty that short jobs suffer with FCFS is to use preemption based on a clock. The simplest such policy is round robin. A clock interrupt is generated at periodic intervals. When the interrupt occurs, the currently running process is placed in the ready queue, and the next ready job is selected on a FCFS basis. This technique is also known as time slicing, because each process is given a slice of time before being preempted.

With round robin, the principal design issue is the length of the time quantum, or slice, to be used. If the quantum is very short, then short processes will move through the system relatively quickly. On the other hand, there is processing overhead involved in handling the clock interrupt and performing the scheduling and dispatching function. Thus, very short time quanta should be avoided. One useful guide is that the time quantum should be slightly greater than the time required for a typical interaction or process function. If it is less, then most processes will require at least two time quanta.

Round robin is particularly effective in a general-purpose time-sharing system or transaction processing system. One drawback to round robin is its relative treatment of processor-bound and I/O-bound processes.
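
Round robin can be simulated with a ready queue and a quantum `q`. The sketch below is one possible model under stated assumptions: dispatching and clock-interrupt overhead are ignored, and a process that arrives during a time slice joins the ready queue ahead of the process being preempted at the end of that slice. The function name `round_robin` and the workload are hypothetical.

```python
from collections import deque


def round_robin(processes, quantum):
    """Simulate round robin over (name, arrival, service) tuples.

    Returns {name: finish_time}. Assumes zero context-switch overhead and
    that new arrivals queue ahead of the process preempted at the same time.
    """
    pending = deque(sorted(processes, key=lambda p: p[1]))  # not yet arrived
    remaining = {name: service for name, _, service in processes}
    ready = deque()
    finish = {}
    clock = 0
    while pending or ready:
        if not ready:
            clock = max(clock, pending[0][1])  # idle until the next arrival
        while pending and pending[0][1] <= clock:
            ready.append(pending.popleft()[0])
        name = ready.popleft()
        run = min(quantum, remaining[name])    # run for one slice at most
        clock += run
        remaining[name] -= run
        while pending and pending[0][1] <= clock:
            ready.append(pending.popleft()[0])  # arrivals during the slice
        if remaining[name] > 0:
            ready.append(name)                 # preempted: back of the queue
        else:
            finish[name] = clock
    return finish


workload = [("A", 0, 3), ("B", 2, 6), ("C", 4, 4), ("D", 6, 5), ("E", 8, 2)]
print(round_robin(workload, quantum=1))
print(round_robin(workload, quantum=2))
```

Under these assumptions the short process E finishes at time 15 for both q=1 and q=2, whereas with a quantum longer than every service time the schedule degenerates to FCFS and E finishes at time 20.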

q=1