- •#3. Role of Econometrics.

- •#4. Main Application of Econometrics.

- •#5. Cross Section Data.

- •#8. Panel or Longitudinal Data.

- •#12. Simple Regression Model (srm)

- •# 13. Simple Linear Model (slm)

- •#18. What is rss, ess, tss?

- •#25. The Random Components of the Regression Coefficients.

- •#26. The Gauss – Markov Theorem.

- •Indeed there have to be satisfied four conditions, known as the Gauss-Markov conditions, if ordinary least squares regression analysis is to give the best possible result.

- •#27. Unbiasedness of the Regression Coefficients.

- •#28. Precision of the Regression Coefficients.

- •#29. Testing Hypotheses Relating to the Regression Coefficients.

- •It has to be estimated by the standard error of b2, given by

- •#30. Confidence Intervals.

- •#35. What Can You Do about Heteroscedasticity?

- •#38. What Can You Do about Autocorrelation?

- •#45. Тhе apt model.

#27. Unbiasedness of the Regression Coefficients.

From

b2 = Cov(X,Y)/Var(X) = β2 + Cov(X,u)/Var(X)

we can show that b2 must be an unbiased estimator of β2 if the fourth Gauss-Markov condition is satisfied:

E(b2) = E[β2+Cov(X,u)/Var(X)] = β2 + E[Cov(X,u)/Var(X)]

If we adopt the strong version of the fourth Gauss-Markov condition and assume that X is nonrandom, we may also take Var(X) as a given constant, and so

E(b2) = β2 + E[Cov(X,u)]*1/Var(X)

We will demonstrate that E[Cov(X,u)] is 0:

In the second line the second expected value rule has been used to bring (1/n) out of the expression as a common factor, and the first rule has been used to break up the expectation of the sum into the sum of the expectations.

In the third line, the term involving X has been brought out because X is nonstochastic.

By

virtue of the first Gauss-Markov condition, E(ui)

is 0, and hence

Therefore E(Cov(X,u)) is 0 and E(b2)=β2

In other words, b2 is an unbiased estimator of β2. One may easily show that b1 is an unbiased estimator of β1.

#28. Precision of the Regression Coefficients.

Now we shall consider σb12 and σb22, the population variances of b1 and b2 about their population means.

These are given by the following expressions (Thomas, 1984)

and

and

The standard errors of the regression coefficients will be calculated

and

and

The higher the variance of the disturbance term, the higher the sample variance of the residuals if likely to be, and hence the higher will be the standard errors of the coefficients in the regression equation, reflecting that the coefficients are inaccurate.

However, it is only a risk. It is possible that in any particular sample the effects of the disturbance term in the different observations will cancel each other out and the regression coefficients will be accurate after all.

#29. Testing Hypotheses Relating to the Regression Coefficients.

Suppose you have a theoretical relationship

Yi = β1 + β2Xi + ui

And your null and alternative hypotheses are

H0: β2 = β20, H1: β2 ≠ β20

We have assumed that the standard deviation of b2 is known, which is mostly unlikely in practice.

It has to be estimated by the standard error of b2, given by

This causes two modifications to the test procedure.

Firs, z is now defined using s.e.(b2) instead of s.d.(b2), and it is referred to as the t statistic.

Second, the critical levels of t depend upon what is known as a t distribution.

The critical value of t is denoted as tcrit.

The condition that a regression estimate should not lead to the rejection of a null hypothesis

H0: β2 = β20

Hence we have the decision rule: reject H0 if

Do not reject if

Where

is

the absolute value (numerical value, neglecting the sign) of t.

is

the absolute value (numerical value, neglecting the sign) of t.

#30. Confidence Intervals.

We can use a confidence interval to describe the amount of uncertainty associated with a sample estimate of a population parameter. The confidence level describes the uncertainty associated with a sampling method. Suppose we used the same sampling method to select different samples and to compute a different interval estimate for each sample. Some interval estimates would include the true population parameter and some would not. A 90% confidence level means that we would expect 90% of the interval estimates to include the population parameter; A 95% confidence level means that 95% of the intervals would include the parameter; and so on. To express a confidence interval, you need three pieces of information: Confidence level, Statistic, Margin of error (Margin of error = Critical value * Standard deviation of statistic)

We would have to construct a 95% confidence intervals to provide upper an lover boundaries for our predictions

Y(low level)=Ytheoretical-tcr.*Sy

Yt(higher level)= Yth.+tcr*Sy(standard error)

So, if real data locate in interval[Y-m+1;Y+m+1] our model for predictions is correct.

#31. One-Tailed t –Tests.

There are really two different one-tailed t-tests, one for each tail. In a one-tailed t-test, all the area associated with a place it is placed in either one tail or the other. Both degrees of freedom for tcr are the same, and the probability is 0.05. V(degree of freedom)1=V2=k-(m+1), where K-quantity of observations and m=1.

#32. Heteroscedasticity and Its Implications.

The 2nd of the Gauss-Markov conditions states that the variance of the disturbance term in each observation should be constant.

The disturbance term in each observation has only one value, so what can be meant by its “variance”?

What we are talking about is its potential behavior before the sample is generated.

When we write the model

Y=β1+β2*X+u1

The first two Gauss-Markov conditions state that the disturbance term u1,…,un in the n observations are drawn from probability distributions that have 0 mean and the same variance.

Their actual values in the sample will sometimes be positive, sometimes negative, sometimes relatively far from 0, sometimes relatively close, but there will be no a priori reason to anticipate a particularly erratic value in any given observation.

To put it another way, the probability of u reaching a given positive (or negative) value will be the same in all observations.

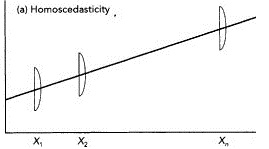

This condition is known as homoscedasticity, which means “same dispersion”.

Figure provides an illustration of homoscedasticity. Homoscedasticity: σu12=σu2, same for all observations.

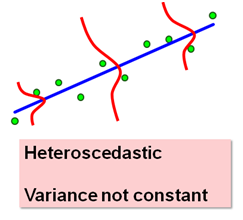

Heteroscedasticity: σu12, not the same for all observations.

Why does heteroscedasticity matter?

If heteroscedasticity is present:

The OLS estimators are inefficient.

The standard errors of the regression coefficients will be wrong.

It is quite likely that the standard errors will be underestimated, so the t statistics will be overestimated and you will have a misleading impression of the precision of your regression coefficients.

You may be led to believe that a coefficient is significantly different from 0, at a given significance level, when in fact it is not.

#33. Possible Causes of Heteroscedasticity.

Heteroscedasticity is likely to be a problem when the values of the variables in the sample vary substantially in different observations.

If the time relationship is given by

Y=β1+β2X+u

It may well be the case that the variations in the omitted variables and the measurement errors that are jointly responsible for the disturbance term will be relatively small when Y and X are small and large when they are large.

#34. Detection of Heteroscedasticity: The Goldfeld–Quandt Test.

The most common formal test for heteroscedasticity is that of Goldfeld and Quandt (1965).

It assumes that σui, the standard deviation of the probability distribution of the disturbance term in observation I, is proportional to the size of Xi.

It also assumes that the disturbance term is normally distributed and satisfies the other Gauss-Markov conditions.

The n observations in the sample are ordered by the magnitude of X and separate regressions are run for the first n’ and for the last n’ observations, the middle (n-2n’) observations being dropped entirely.

If heteroscedasticity is present, and if the assumption concerning its nature is true, the variance of u in the last n’ observations will be greater than that in the first n’ and this will be reflected in the residual sums of squares in the two subregressions.

Denoting these by RSS1 and RSS2 for the subregressions with the first n’ and the last n’ observations, respectively, the ratio RSS2/RSS1 will be distributed as F statistic with (n’-k) and (n’-k) degrees of freedom, where k is the number of parameters in the equation under the null hypothesis of homoscedasticity.

The power of the test depends on the choice of n’ in relation to n.

As a result of some experiments undertaken by them, Goldfeld and Quandt suggests that n’ should be about 11 when n is 30 and about 22 when n is 60, suggesting that n’ should be about 3/8’s of n.

If there is more than 1 explanatory variable in the model, the observations should be ordered by that which is hypothesized to be associated with σi.

The null hypothesis for the test is that RSS2 is not significantly greater than RSS1, and the alternative hypothesis is that it is significantly greater.

If RSS2 turns out to be smaller than RSS1, you are not going to reject the null hypothesis and there is no point in computing the test statistic RSS2/RSS1.

However the Goldfeld and Quandt test can also be used for the case where the standard deviation of the disturbance term is hypothesized to be inversely proportional to Xi.

The procedure is the same as before, but the test statistic is now RSS1/RSS2 and it will again distributed as an F statistic with (n’-k) and (n’-k) degrees of freedom under the null hypothesis of homoscedasticity.