- •Cloud Computing

- •Foreword

- •Preface

- •Introduction

- •Expected Audience

- •Book Overview

- •Part 1: Cloud Base

- •Part 2: Cloud Seeding

- •Part 3: Cloud Breaks

- •Part 4: Cloud Feedback

- •Contents

- •1.1 Introduction

- •1.1.1 Cloud Services and Enabling Technologies

- •1.2 Virtualization Technology

- •1.2.1 Virtual Machines

- •1.2.2 Virtualization Platforms

- •1.2.3 Virtual Infrastructure Management

- •1.2.4 Cloud Infrastructure Manager

- •1.3 The MapReduce System

- •1.3.1 Hadoop MapReduce Overview

- •1.4 Web Services

- •1.4.1 RPC (Remote Procedure Call)

- •1.4.2 SOA (Service-Oriented Architecture)

- •1.4.3 REST (Representational State Transfer)

- •1.4.4 Mashup

- •1.4.5 Web Services in Practice

- •1.5 Conclusions

- •References

- •2.1 Introduction

- •2.2 Background and Related Work

- •2.3 Taxonomy of Cloud Computing

- •2.3.1 Cloud Architecture

- •2.3.1.1 Services and Modes of Cloud Computing

- •Software-as-a-Service (SaaS)

- •Platform-as-a-Service (PaaS)

- •Hardware-as-a-Service (HaaS)

- •Infrastructure-as-a-Service (IaaS)

- •2.3.2 Virtualization Management

- •2.3.3 Core Services

- •2.3.3.1 Discovery and Replication

- •2.3.3.2 Load Balancing

- •2.3.3.3 Resource Management

- •2.3.4 Data Governance

- •2.3.4.1 Interoperability

- •2.3.4.2 Data Migration

- •2.3.5 Management Services

- •2.3.5.1 Deployment and Configuration

- •2.3.5.2 Monitoring and Reporting

- •2.3.5.3 Service-Level Agreements (SLAs) Management

- •2.3.5.4 Metering and Billing

- •2.3.5.5 Provisioning

- •2.3.6 Security

- •2.3.6.1 Encryption/Decryption

- •2.3.6.2 Privacy and Federated Identity

- •2.3.6.3 Authorization and Authentication

- •2.3.7 Fault Tolerance

- •2.4 Classification and Comparison between Cloud Computing Ecosystems

- •2.5 Findings

- •2.5.2 Cloud Computing PaaS and SaaS Provider

- •2.5.3 Open Source Based Cloud Computing Services

- •2.6 Comments on Issues and Opportunities

- •2.7 Conclusions

- •References

- •3.1 Introduction

- •3.2 Scientific Workflows and e-Science

- •3.2.1 Scientific Workflows

- •3.2.2 Scientific Workflow Management Systems

- •3.2.3 Important Aspects of In Silico Experiments

- •3.3 A Taxonomy for Cloud Computing

- •3.3.1 Business Model

- •3.3.2 Privacy

- •3.3.3 Pricing

- •3.3.4 Architecture

- •3.3.5 Technology Infrastructure

- •3.3.6 Access

- •3.3.7 Standards

- •3.3.8 Orientation

- •3.5 Taxonomies for Cloud Computing

- •3.6 Conclusions and Final Remarks

- •References

- •4.1 Introduction

- •4.2 Cloud and Grid: A Comparison

- •4.2.1 A Retrospective View

- •4.2.2 Comparison from the Viewpoint of System

- •4.2.3 Comparison from the Viewpoint of Users

- •4.2.4 A Summary

- •4.3 Examining Cloud Computing from the CSCW Perspective

- •4.3.1 CSCW Findings

- •4.3.2 The Anatomy of Cloud Computing

- •4.3.2.1 Security and Privacy

- •4.3.2.2 Data and/or Vendor Lock-In

- •4.3.2.3 Service Availability/Reliability

- •4.4 Conclusions

- •References

- •5.1 Overview – Cloud Standards – What and Why?

- •5.2 Deep Dive: Interoperability Standards

- •5.2.1 Purpose, Expectations and Challenges

- •5.2.2 Initiatives – Focus, Sponsors and Status

- •5.2.3 Market Adoption

- •5.2.4 Gaps/Areas of Improvement

- •5.3 Deep Dive: Security Standards

- •5.3.1 Purpose, Expectations and Challenges

- •5.3.2 Initiatives – Focus, Sponsors and Status

- •5.3.3 Market Adoption

- •5.3.4 Gaps/Areas of Improvement

- •5.4 Deep Dive: Portability Standards

- •5.4.1 Purpose, Expectations and Challenges

- •5.4.2 Initiatives – Focus, Sponsors and Status

- •5.4.3 Market Adoption

- •5.4.4 Gaps/Areas of Improvement

- •5.5.1 Purpose, Expectations and Challenges

- •5.5.2 Initiatives – Focus, Sponsors and Status

- •5.5.3 Market Adoption

- •5.5.4 Gaps/Areas of Improvement

- •5.6 Deep Dive: Other Key Standards

- •5.6.1 Initiatives – Focus, Sponsors and Status

- •5.7 Closing Notes

- •References

- •6.1 Introduction and Motivation

- •6.2 Cloud@Home Overview

- •6.2.1 Issues, Challenges, and Open Problems

- •6.2.2 Basic Architecture

- •6.2.2.1 Software Environment

- •6.2.2.2 Software Infrastructure

- •6.2.2.3 Software Kernel

- •6.2.2.4 Firmware/Hardware

- •6.2.3 Application Scenarios

- •6.3 Cloud@Home Core Structure

- •6.3.1 Management Subsystem

- •6.3.2 Resource Subsystem

- •6.4 Conclusions

- •References

- •7.1 Introduction

- •7.2 MapReduce

- •7.3 P2P-MapReduce

- •7.3.1 Architecture

- •7.3.2 Implementation

- •7.3.2.1 Basic Mechanisms

- •Resource Discovery

- •Network Maintenance

- •Job Submission and Failure Recovery

- •7.3.2.2 State Diagram and Software Modules

- •7.3.3 Evaluation

- •7.4 Conclusions

- •References

- •8.1 Introduction

- •8.2 The Cloud Evolution

- •8.3 Improved Network Support for Cloud Computing

- •8.3.1 Why the Internet is Not Enough?

- •8.3.2 Transparent Optical Networks for Cloud Applications: The Dedicated Bandwidth Paradigm

- •8.4 Architecture and Implementation Details

- •8.4.1 Traffic Management and Control Plane Facilities

- •8.4.2 Service Plane and Interfaces

- •8.4.2.1 Providing Network Services to Cloud-Computing Infrastructures

- •8.4.2.2 The Cloud Operating System–Network Interface

- •8.5.1 The Prototype Details

- •8.5.1.1 The Underlying Network Infrastructure

- •8.5.1.2 The Prototype Cloud Network Control Logic and its Services

- •8.5.2 Performance Evaluation and Results Discussion

- •8.6 Related Work

- •8.7 Conclusions

- •References

- •9.1 Introduction

- •9.2 Overview of YML

- •9.3 Design and Implementation of YML-PC

- •9.3.1 Concept Stack of Cloud Platform

- •9.3.2 Design of YML-PC

- •9.3.3 Core Design and Implementation of YML-PC

- •9.4 Primary Experiments on YML-PC

- •9.4.1 YML-PC Can Be Scaled Up Very Easily

- •9.4.2 Data Persistence in YML-PC

- •9.4.3 Schedule Mechanism in YML-PC

- •9.5 Conclusion and Future Work

- •References

- •10.1 Introduction

- •10.2 Related Work

- •10.2.1 General View of Cloud Computing Frameworks

- •10.2.2 Cloud Computing Middleware

- •10.3 Deploying Applications in the Cloud

- •10.3.1 Benchmarking the Cloud

- •10.3.2 The ProActive GCM Deployment

- •10.3.3 Technical Solutions for Deployment over Heterogeneous Infrastructures

- •10.3.3.1 Virtual Private Network (VPN)

- •10.3.3.2 Amazon Virtual Private Cloud (VPC)

- •10.3.3.3 Message Forwarding and Tunneling

- •10.3.4 Conclusion and Motivation for Mixing

- •10.4 Moving HPC Applications from Grids to Clouds

- •10.4.1 HPC on Heterogeneous Multi-Domain Platforms

- •10.4.2 The Hierarchical SPMD Concept and Multi-level Partitioning of Numerical Meshes

- •10.4.3 The GCM/ProActive-Based Lightweight Framework

- •10.4.4 Performance Evaluation

- •10.5 Dynamic Mixing of Clusters, Grids, and Clouds

- •10.5.1 The ProActive Resource Manager

- •10.5.2 Cloud Bursting: Managing Spike Demand

- •10.5.3 Cloud Seeding: Dealing with Heterogeneous Hardware and Private Data

- •10.6 Conclusion

- •References

- •11.1 Introduction

- •11.2 Background

- •11.2.1 ASKALON

- •11.2.2 Cloud Computing

- •11.3 Resource Management Architecture

- •11.3.1 Cloud Management

- •11.3.2 Image Catalog

- •11.3.3 Security

- •11.4 Evaluation

- •11.5 Related Work

- •11.6 Conclusions and Future Work

- •References

- •12.1 Introduction

- •12.2 Layered Peer-to-Peer Cloud Provisioning Architecture

- •12.4.1 Distributed Hash Tables

- •12.4.2 Designing Complex Services over DHTs

- •12.5 Cloud Peer Software Fabric: Design and Implementation

- •12.5.1 Overlay Construction

- •12.5.2 Multidimensional Query Indexing

- •12.5.3 Multidimensional Query Routing

- •12.6 Experiments and Evaluation

- •12.6.1 Cloud Peer Details

- •12.6.3 Test Application

- •12.6.4 Deployment of Test Services on Amazon EC2 Platform

- •12.7 Results and Discussions

- •12.8 Conclusions and Path Forward

- •References

- •13.1 Introduction

- •13.2 High-Throughput Science with the Nimrod Tools

- •13.2.1 The Nimrod Tool Family

- •13.2.2 Nimrod and the Grid

- •13.2.3 Scheduling in Nimrod

- •13.3 Extensions to Support Amazon’s Elastic Compute Cloud

- •13.3.1 The Nimrod Architecture

- •13.3.2 The EC2 Actuator

- •13.3.3 Additions to the Schedulers

- •13.4.1 Introduction and Background

- •13.4.2 Computational Requirements

- •13.4.3 The Experiment

- •13.4.4 Computational and Economic Results

- •13.4.5 Scientific Results

- •13.5 Conclusions

- •References

- •14.1 Using the Cloud

- •14.1.1 Overview

- •14.1.2 Background

- •14.1.3 Requirements and Obligations

- •14.1.3.1 Regional Laws

- •14.1.3.2 Industry Regulations

- •14.2 Cloud Compliance

- •14.2.1 Information Security Organization

- •14.2.2 Data Classification

- •14.2.2.1 Classifying Data and Systems

- •14.2.2.2 Specific Type of Data of Concern

- •14.2.2.3 Labeling

- •14.2.3 Access Control and Connectivity

- •14.2.3.1 Authentication and Authorization

- •14.2.3.2 Accounting and Auditing

- •14.2.3.3 Encrypting Data in Motion

- •14.2.3.4 Encrypting Data at Rest

- •14.2.4 Risk Assessments

- •14.2.4.1 Threat and Risk Assessments

- •14.2.4.2 Business Impact Assessments

- •14.2.4.3 Privacy Impact Assessments

- •14.2.5 Due Diligence and Provider Contract Requirements

- •14.2.5.1 ISO Certification

- •14.2.5.2 SAS 70 Type II

- •14.2.5.3 PCI PA DSS or Service Provider

- •14.2.5.4 Portability and Interoperability

- •14.2.5.5 Right to Audit

- •14.2.5.6 Service Level Agreements

- •14.2.6 Other Considerations

- •14.2.6.1 Disaster Recovery/Business Continuity

- •14.2.6.2 Governance Structure

- •14.2.6.3 Incident Response Plan

- •14.3 Conclusion

- •Bibliography

- •15.1.1 Location of Cloud Data and Applicable Laws

- •15.1.2 Data Concerns Within a European Context

- •15.1.3 Government Data

- •15.1.4 Trust

- •15.1.5 Interoperability and Standardization in Cloud Computing

- •15.1.6 Open Grid Forum’s (OGF) Production Grid Interoperability Working Group (PGI-WG) Charter

- •15.1.7.1 What will OCCI Provide?

- •15.1.7.2 Cloud Data Management Interface (CDMI)

- •15.1.7.3 How it Works

- •15.1.8 SDOs and their Involvement with Clouds

- •15.1.10 A Microsoft Cloud Interoperability Scenario

- •15.1.11 Opportunities for Public Authorities

- •15.1.12 Future Market Drivers and Challenges

- •15.1.13 Priorities Moving Forward

- •15.2 Conclusions

- •References

- •16.1 Introduction

- •16.2 Cloud Computing (‘The Cloud’)

- •16.3 Understanding Risks to Cloud Computing

- •16.3.1 Privacy Issues

- •16.3.2 Data Ownership and Content Disclosure Issues

- •16.3.3 Data Confidentiality

- •16.3.4 Data Location

- •16.3.5 Control Issues

- •16.3.6 Regulatory and Legislative Compliance

- •16.3.7 Forensic Evidence Issues

- •16.3.8 Auditing Issues

- •16.3.9 Business Continuity and Disaster Recovery Issues

- •16.3.10 Trust Issues

- •16.3.11 Security Policy Issues

- •16.3.12 Emerging Threats to Cloud Computing

- •16.4 Cloud Security Relationship Framework

- •16.4.1 Security Requirements in the Clouds

- •16.5 Conclusion

- •References

- •17.1 Introduction

- •17.1.1 What Is Security?

- •17.2 ISO 27002 Gap Analyses

- •17.2.1 Asset Management

- •17.2.2 Communications and Operations Management

- •17.2.4 Information Security Incident Management

- •17.2.5 Compliance

- •17.3 Security Recommendations

- •17.4 Case Studies

- •17.4.1 Private Cloud: Fortune 100 Company

- •17.4.2 Public Cloud: Amazon.com

- •17.5 Summary and Conclusion

- •References

- •18.1 Introduction

- •18.2 Decoupling Policy from Applications

- •18.2.1 Overlap of Concerns Between the PEP and PDP

- •18.2.2 Patterns for Binding PEPs to Services

- •18.2.3 Agents

- •18.2.4 Intermediaries

- •18.3 PEP Deployment Patterns in the Cloud

- •18.3.1 Software-as-a-Service Deployment

- •18.3.2 Platform-as-a-Service Deployment

- •18.3.3 Infrastructure-as-a-Service Deployment

- •18.3.4 Alternative Approaches to IaaS Policy Enforcement

- •18.3.5 Basic Web Application Security

- •18.3.6 VPN-Based Solutions

- •18.4 Challenges to Deploying PEPs in the Cloud

- •18.4.1 Performance Challenges in the Cloud

- •18.4.2 Strategies for Fault Tolerance

- •18.4.3 Strategies for Scalability

- •18.4.4 Clustering

- •18.4.5 Acceleration Strategies

- •18.4.5.1 Accelerating Message Processing

- •18.4.5.2 Acceleration of Cryptographic Operations

- •18.4.6 Transport Content Coding

- •18.4.7 Security Challenges in the Cloud

- •18.4.9 Binding PEPs and Applications

- •18.4.9.1 Intermediary Isolation

- •18.4.9.2 The Protected Application Stack

- •18.4.10 Authentication and Authorization

- •18.4.11 Clock Synchronization

- •18.4.12 Management Challenges in the Cloud

- •18.4.13 Audit, Logging, and Metrics

- •18.4.14 Repositories

- •18.4.15 Provisioning and Distribution

- •18.4.16 Policy Synchronization and Views

- •18.5 Conclusion

- •References

- •19.1 Introduction and Background

- •19.2 A Media Service Cloud for Traditional Broadcasting

- •19.2.1 Gridcast the PRISM Cloud 0.12

- •19.3 An On-demand Digital Media Cloud

- •19.4 PRISM Cloud Implementation

- •19.4.1 Cloud Resources

- •19.4.2 Cloud Service Deployment and Management

- •19.5 The PRISM Deployment

- •19.6 Summary

- •19.7 Content Note

- •References

- •20.1 Cloud Computing Reference Model

- •20.2 Cloud Economics

- •20.2.1 Economic Context

- •20.2.2 Economic Benefits

- •20.2.3 Economic Costs

- •20.2.5 The Economics of Green Clouds

- •20.3 Quality of Experience in the Cloud

- •20.4 Monetization Models in the Cloud

- •20.5 Charging in the Cloud

- •20.5.1 Existing Models of Charging

- •20.5.1.1 On-Demand IaaS Instances

- •20.5.1.2 Reserved IaaS Instances

- •20.5.1.3 PaaS Charging

- •20.5.1.4 Cloud Vendor Pricing Model

- •20.5.1.5 Interprovider Charging

- •20.6 Taxation in the Cloud

- •References

- •21.1 Introduction

- •21.2 Background

- •21.3 Experiment

- •21.3.1 Target Application: Value at Risk

- •21.3.2 Target Systems

- •21.3.2.1 Condor

- •21.3.2.2 Amazon EC2

- •21.3.2.3 Eucalyptus

- •21.3.3 Results

- •21.3.4 Job Completion

- •21.3.5 Cost

- •21.4 Conclusions and Future Work

- •References

- •Index


F. Palmieri and S. Pardi

The interaction with the network control logic is realized by a new set of cloud services created on top of the eyeOS web desktop. The authentication/authorization process is based on X.509 proxy certificates with the Virtual Organization Membership Service (VOMS) extension, in the gLite style. A user with the proper privileges can request a virtual circuit operation through the eyeOS network interface.

8.5.2 Performance Evaluation and Results Discussion

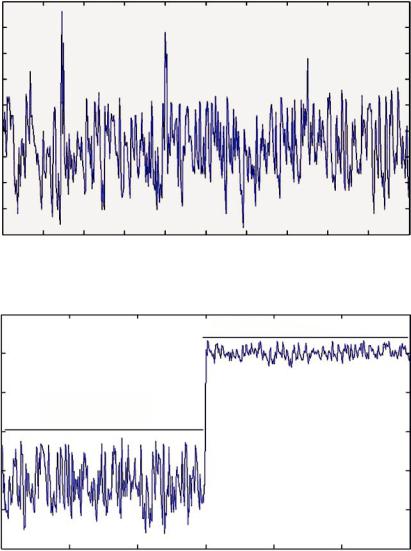

In this section, we present some simple performance evaluation experiments carried out on our cloud prototype. They show how an application working on large data volumes distributed across different sites within the cloud can greatly benefit from the introduction of the above-mentioned network control facilities in the cloud stack, and they demonstrate the effectiveness of the implemented architecture in providing QoS or bandwidth guarantees. To better emphasize these benefits and improvements to application behaviour, we performed our tests under real-world extreme traffic load conditions, working between the Monte S. Angelo Campus site, currently the largest data center in the SCoPE infrastructure, and the Medicine Campus site, which hosts the other largest storage repository available to the university’s research community. More precisely, the presented results were obtained by analyzing the throughput of 1-GB dataset transfers between two EMC2 Clarion CX-3 storage systems located at these two sites.
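Throughput statistics for a series of fixed-size transfers can be derived from per-file timings. The following is a minimal sketch of one way to do that, not the authors' measurement tooling: the function name `throughput_stats`, the use of decimal units (1 GB = 1000 MB), and the sample durations are all assumptions made for illustration, and the durations are invented values rather than measured data.

```python
import statistics

FILE_SIZE_MB = 1000  # one 1-GB dataset per transfer (decimal units assumed)


def throughput_stats(durations_s):
    """Return average, peak and standard deviation of per-file throughput in MB/s."""
    rates = [FILE_SIZE_MB / d for d in durations_s]
    return {
        "average": statistics.mean(rates),
        "peak": max(rates),
        # Population standard deviation over the observed transfers.
        "std_dev": statistics.pstdev(rates),
    }


# Hypothetical per-file transfer times in seconds (illustrative only).
sample_durations = [25.0, 40.0, 20.0, 50.0]
stats = throughput_stats(sample_durations)
print(stats)
```

Note that averaging per-file rates is not the same as dividing total volume by total time; the text does not state which of the two was used, so the sketch simply picks the former.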

Both storage area networks are connected to their respective access switches through dedicated resource manager nodes equipped with 1-Gbps full-duplex Ethernet interfaces. Here, for simplicity's sake, we considered several sample transfer sessions moving more than 2 TB of experimental data.

During the first data transfer, performed on the cloud without any kind of network resource reservation, the underlying routing protocol picked the best but most crowded route between the two sites (owing to the heavy utilization of the involved links in peak hours), through the main branch of the metro ring. We were able to transfer 500 GB of data in about 4 h (average 30 s/file) with an average throughput of 33 MB/s (about 270 Mbit/s) and a peak rate of 86 MB/s. We also observed a standard deviation of 12.5 owing to the noise present on the link, as can be appreciated from the strong oscillation illustrated in Fig. 8.4.

During the second test, we created a virtual point-to-point network between the two storage sites by reserving a 1-Gbps bandwidth channel for the above-mentioned data-transfer operation. This action triggered the creation of a pair of dynamic end-to-end LSPs (one for each required direction), provisioned with the required bandwidth on the involved PE nodes. In the presence of background traffic saturating the main branch, these LSPs were automatically re-routed through the secondary (and almost unused) branch to honour the required bandwidth commitment. In this case, the whole 500-GB data transfer was completed in 1 h and 23 min (9.9 s/file) with an average throughput of 101 MB/s and a peak of 106 MB/s. The standard deviation also improved, reaching an acceptable value of 2.8 against the 12.5 of the best-effort case. We also observed a loss of about 20 MB/s with respect to the theoretical

8 Enhanced Network Support for Scalable Computing Clouds

Fig. 8.4 The best effort transfer behaviour (plot of throughput in MB/s, with its average, against trials 0 to 500)

Fig. 8.5 The gain achieved through a virtual connection (plot of average bandwidth in MB/s against trials 0 to 600; legend: with 1 Gbit/s LSP, average value 101 MB/s; no LSP, average value 34 MB/s)

achievable maximum bandwidth, due to the known TCP and Ethernet overhead and limits. This simple test shows how the above-mentioned facility can be effective in optimizing data-transfer performance within the cloud. The results are even more striking in graphical form, as presented in Fig. 8.5, which shows the gain achieved by concatenating a sequence of 300 file transfers on the best-effort network with another 300 transfers on the virtual 1-Gbps point-to-point network.
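The reported figures can be cross-checked with a little arithmetic. The sketch below recomputes the per-file time, total transfer time, and line rate from the two average throughputs quoted in the text (33 MB/s and 101 MB/s), assuming decimal units and 500 files of 1 GB each; the helper name `transfer_summary` is hypothetical and introduced only for this illustration.

```python
# Back-of-the-envelope check of the transfer figures quoted in the text.
# Assumption: decimal units (1 GB = 1000 MB, 1 Gbit/s = 1000 Mbit/s).

FILE_SIZE_MB = 1000   # each dataset is 1 GB
N_FILES = 500         # 500 GB moved in each test
LINK_GBPS = 1.0       # speed of the reserved channel and of the interfaces


def transfer_summary(avg_mb_per_s):
    """Derive per-file time, total time and line rate from an average throughput."""
    seconds_per_file = FILE_SIZE_MB / avg_mb_per_s
    total_seconds = seconds_per_file * N_FILES
    return {
        "seconds_per_file": seconds_per_file,
        "total_minutes": total_seconds / 60,
        "mbit_per_s": avg_mb_per_s * 8,
    }


best_effort = transfer_summary(33.0)   # no reservation
reserved = transfer_summary(101.0)     # 1-Gbps LSP

# Nominal ceiling of a 1 Gbit/s link expressed in MB/s.
link_ceiling_mb_per_s = LINK_GBPS * 1000 / 8
gap_to_ceiling = link_ceiling_mb_per_s - 101.0
speedup = best_effort["total_minutes"] / reserved["total_minutes"]

print(f"best effort: {best_effort['seconds_per_file']:.1f} s/file, "
      f"{best_effort['total_minutes'] / 60:.2f} h, "
      f"{best_effort['mbit_per_s']:.0f} Mbit/s")
print(f"reserved:    {reserved['seconds_per_file']:.1f} s/file, "
      f"{reserved['total_minutes']:.1f} min")
print(f"speedup: {speedup:.2f}x, gap to nominal ceiling: {gap_to_ceiling:.0f} MB/s")
```

Under these assumptions the numbers agree with the text to within rounding: roughly 30 s/file and a little over 4 h for the best-effort case, about 9.9 s/file and 83 min with the reservation, and a speedup of about three. The gap to the nominal 125 MB/s ceiling comes out at 24 MB/s, in the same range as the roughly 20 MB/s loss quoted above.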