4.3 New application development on solidDB UC

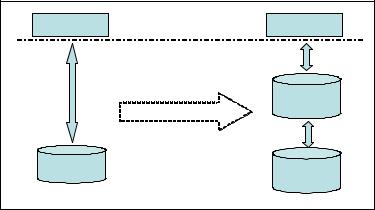

A database application architecture built on a cache database and a back-end database, instead of a single database, becomes more complicated. In a high-level conceptual diagram, a cache database is simply placed between the application and the back-end database, making the database appear faster from the application's perspective. There are no changes in the database interface layer. This concept is illustrated in Figure 4-6.

(Diagram labels: Application, Database Interface layer, Cache Database, Backend Database, Database.)

Figure 4-6 Database interface layer

In reality, the conversion from a single-database system to a cache database system is not quite so straightforward. Consider the following issues, among others, in the application code:

- The application must be aware of the properties of two database connections, one to the cache database and the other to the back-end database. SQL pass-through can mask the two connections as one ODBC or JDBC connection, but it requires cache awareness in error processing.

- Transactions combining data from the cache database and a back-end database are not supported.

- Queries combining data from the front-end and back-end databases are not supported.

- A combination of back-end and front-end databases is not fully transactional, although both individual components are transactional databases.

- Support is limited for stored procedures.

Knowing these limitations or conditions of a cached database system makes it possible to take them into account, or avoid them, in the design phase.

84 IBM solidDB: Delivering Data with Extreme Speed

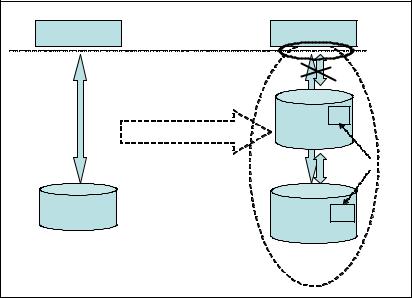

Once these conditions are taken into account, the architectural diagram becomes more complicated, as illustrated in Figure 4-7.

(Diagram labels: Application, Database Interface layer, Cache Database, Backend Database, SPL; numbered callouts 1, 2 & 3, 4, and 5.)

Figure 4-7 Database interface layer with a cached database

Certain changes are required in the interface between the application and the cached database, compared with an application with similar logic that accesses only a single database.

4.3.1 Awareness of separate database connections

A regular single-database application sees only one database and can handle everything with one database connection. All transactions that have been successfully committed to the single database automatically have the ACID (atomicity, consistency, isolation, durability) properties.

A cached database system has two physical databases, a front end and a back end. Certain performance-critical data has been moved to the front-end database; volume data remains in the back-end database. These databases are synchronized by Universal Cache's Change Data Capture (CDC) replication, but they still act as individual databases.

Chapter 4. Deploying solidDB and Universal Cache 85

The application can access these two databases using either of two strategies:

- Opening and controlling separate connections to the two databases

- Using SQL pass-through to route queries to the back-end database through the front end

Opening separate connections

The application can open two database connections, one to each database, and retain and monitor these connections constantly. This strategy gives the application full control over which queries to route to the front end and which to the back end. It is rather laborious but provides flexibility for distribution strategies.
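The following is a minimal sketch of the separate-connections strategy, not a solidDB example. It assumes two in-memory SQLite databases as stand-ins for the front-end and back-end databases (a real application would open ODBC or JDBC connections instead), and the table names, the `CACHED_TABLES` set, and the `run` helper are hypothetical.

```python
import sqlite3

# Stand-ins (assumption): two in-memory SQLite databases play the roles of
# the front-end (cache) and back-end databases.
front_end = sqlite3.connect(":memory:")
back_end = sqlite3.connect(":memory:")

front_end.execute("CREATE TABLE subscriber (id INTEGER PRIMARY KEY, status TEXT)")
back_end.execute("CREATE TABLE subscriber (id INTEGER PRIMARY KEY, status TEXT)")
back_end.execute(
    "CREATE TABLE call_history (id INTEGER, subscriber_id INTEGER, seconds INTEGER)"
)

# Hypothetical routing rule owned by the application: tables served from
# the cache. Every other table is routed to the back end.
CACHED_TABLES = {"subscriber"}


def connection_for(table):
    """Return the connection the application has chosen for this table."""
    return front_end if table in CACHED_TABLES else back_end


def run(table, sql, params=()):
    """Run one statement on the database that owns the table, then commit."""
    conn = connection_for(table)
    rows = conn.execute(sql, params).fetchall()
    conn.commit()
    return rows


run("subscriber", "INSERT INTO subscriber VALUES (?, ?)", (1, "active"))
run("call_history", "INSERT INTO call_history VALUES (?, ?, ?)", (10, 1, 42))
print(run("subscriber", "SELECT status FROM subscriber WHERE id = ?", (1,)))
```

In this sketch nothing copies the new `subscriber` row to the back end. In a Universal Cache deployment that is the job of replication, which is why the application must treat the two connections as separate databases.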

SQL pass-through functionality

Alternatively, the SQL pass-through functionality provided by the solidDB Universal Cache product can be used. SQL pass-through assumes that all statements are first run at the front-end database. If an error occurs there, the statement is then run at the back end. Errors are assumed to be caused by tables not being in place at the front end.
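The routing rule described above can be emulated in a few lines. This is only a sketch of the behavior as stated in the text, not the product's implementation: two in-memory SQLite databases stand in for the front end and the back end, and the `pass_through` function and the table names are hypothetical.

```python
import sqlite3

# Stand-ins (assumption): SQLite in place of solidDB and the back-end DBMS.
front_end = sqlite3.connect(":memory:")
back_end = sqlite3.connect(":memory:")

# "account" is cached; "audit_log" exists only at the back end.
front_end.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
front_end.execute("INSERT INTO account VALUES (1, 100)")
back_end.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
back_end.execute("CREATE TABLE audit_log (account_id INTEGER, note TEXT)")
back_end.execute("INSERT INTO audit_log VALUES (1, 'opened')")


def pass_through(sql, params=()):
    """Try the front end first; on any database error, run at the back end.

    Returns (rows, where) so the caller can see which database answered.
    """
    try:
        return front_end.execute(sql, params).fetchall(), "front end"
    except sqlite3.Error:
        # As in the text, the error is assumed to mean that the table is
        # not present in the front end.
        return back_end.execute(sql, params).fetchall(), "back end"


print(pass_through("SELECT balance FROM account WHERE id = 1"))
print(pass_through("SELECT note FROM audit_log WHERE account_id = 1"))
```

Because any error triggers the fallback, a genuine mistake (for example, a misspelled column) is also retried at the back end, which is one reason the application's error processing needs to be cache aware.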

The application sees only one connection but the front-end and back-end databases are still separate and individual databases. The key challenges with SQL pass-through are as follows:

- The set of two databases is not transactional. For example, data written to the front end is not synchronously written to the back end. If a transaction writes to a front-end table and, in the next statement, executes a join that combines data from that table with another table that resides only in the back end, the statement is routed to the back end. The recently written data is not visible there until the asynchronous replication has completed.

- Cross-database queries are not supported, so joining data from a front-end table and a back-end table is not possible. These queries are always fully executed at the back-end database.

- For large result sets, SQL pass-through can become a performance bottleneck. All rows must first be transferred from the back-end database to the front-end database, and then from the front end to the application. The front-end database ends up processing all the rows and potentially performing type conversions for all columns. The impact is directly proportional to the size of the result set. For small result sets, it is not measurable.

- SQL pass-through is built to route queries between the front-end and back-end databases on the assumption that the routing can be done based on table name. SQL pass-through does not provide a mechanism for situations where a fraction of a table is stored at the front end and the whole table at the back end.
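The first challenge in this list, a join that misses recently written data, can be reproduced in a small sketch. The assumptions are the same as before: in-memory SQLite databases stand in for the two databases, `pass_through` emulates the routing rule, and the hypothetical `replicate` function stands in for asynchronous replication, which in a real system runs on its own schedule.

```python
import sqlite3

# Stand-ins (assumption): SQLite in place of solidDB and the back-end DBMS.
front_end = sqlite3.connect(":memory:")
back_end = sqlite3.connect(":memory:")

# "orders" exists in both databases; "customer" only at the back end.
for db in (front_end, back_end):
    db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER)")
back_end.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
back_end.execute("INSERT INTO customer VALUES (7, 'Acme')")

JOIN = (
    "SELECT o.id, c.name FROM orders o "
    "JOIN customer c ON c.id = o.customer_id"
)


def pass_through(sql, params=()):
    """Emulated routing: front end first, back end on any database error."""
    try:
        return front_end.execute(sql, params).fetchall()
    except sqlite3.Error:
        return back_end.execute(sql, params).fetchall()


def replicate():
    """Hypothetical stand-in for replication: copy orders to the back end."""
    rows = front_end.execute("SELECT id, customer_id FROM orders").fetchall()
    back_end.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?)", rows)


pass_through("INSERT INTO orders VALUES (1, 7)")  # succeeds at the front end
before = pass_through(JOIN)  # no customer table in the front end: runs at back end
replicate()
after = pass_through(JOIN)   # the order is now visible at the back end
print(before, after)
```

The join issued immediately after the insert returns no rows, and returns the new order only after the copy step has run. An application that depends on reading its own writes has to account for this window.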

4.3.2 Combining data from separate databases in a transaction

Although both front-end and back-end databases are individually transactional databases, the two transactions taking place in two different databases do not constitute a transaction that would meet the ACID requirements.

Using the default asynchronous replication mechanism does not enable building a transactional combined database, because some compromises are always implicitly included in this architecture.

Creating a transactional combination of two or more databases, using Distributed Transactions, is possible. A Distributed Transaction is a set of database operations where two or more database servers are involved. The database servers provide transactional resources. Additionally, a Transaction Manager is required to create and manage the global transaction that runs on all databases.
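To make the role of the Transaction Manager more concrete, the following toy sketch uses two-phase commit, the protocol commonly used to coordinate distributed transactions. The text does not say which protocol or interfaces a particular solidDB deployment uses, so treat this purely as an illustration: the `Participant` class is an in-memory stand-in for a transactional resource, not a database, and all names are hypothetical.

```python
class Participant:
    """Toy transactional resource backed by a dict; not a real database."""

    def __init__(self, name, fail_on_prepare=False):
        self.name = name
        self.data = {}
        self._pending = None
        self._fail_on_prepare = fail_on_prepare

    def prepare(self, changes):
        """Phase 1: stage the changes and vote. True means ready to commit."""
        if self._fail_on_prepare:
            return False
        self._pending = dict(changes)
        return True

    def commit(self):
        """Phase 2: make the staged changes visible."""
        self.data.update(self._pending or {})
        self._pending = None

    def rollback(self):
        """Phase 2 (abort): discard the staged changes."""
        self._pending = None


def run_global_transaction(work):
    """Minimal transaction manager: commit on all participants or on none.

    `work` maps each participant to the changes it should apply.
    """
    prepared = []
    for participant, changes in work.items():
        if participant.prepare(changes):
            prepared.append(participant)
        else:
            # One negative vote aborts the whole global transaction.
            for p in prepared:
                p.rollback()
            return False
    for p in prepared:
        p.commit()
    return True


cache = Participant("front end")
backend = Participant("back end")
print(run_global_transaction({cache: {"a": 1}, backend: {"a": 1}}))

failing = Participant("back end", fail_on_prepare=True)
print(run_global_transaction({cache: {"b": 2}, failing: {"b": 2}}))
```

The sketch leaves out everything that makes real distributed transactions hard, such as durable logging of votes and recovery after a coordinator failure.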

4.3.3 Combining data from different databases in a query

Joining data from two or more tables in one query is one of the benefits of relational databases and SQL. This is easily possible in the Universal Cache architecture as long as all tables participating in the join reside in the same database (either front end or back end). If this is not the case, several ways are available to work around the limitation:

- Generally, the easiest way is to run all joins of this kind in the back end. Typically, all tables are stored at the back end, but the most recent changes to tables that reside at the front end might not have been replicated to the back end yet. If there is no timeliness requirement and no visible performance benefit from running the query at the front end, this approach is a good one.

- Because no statement-level joins are available between two separate databases, the only way to execute such a join as a single statement is to define a stored procedure that runs in the front end and performs an application-level join by running queries in the front-end and back-end databases as needed. The procedure controls all join logic. From the application's perspective, the procedure is still called by executing a single SQL statement.

- Application-level joins can also be executed outside the database by the application, but they cannot be made to appear as the execution of a single statement.
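The last option, an application-level join executed by the application itself, might look like the following sketch. It assumes in-memory SQLite databases as stand-ins for the front end and the back end, and the `session` and `user_profile` tables and the `sessions_with_names` function are hypothetical. The same logic could live in a front-end stored procedure, as described in the second option.

```python
import sqlite3

# Stand-ins (assumption): SQLite in place of solidDB and the back-end DBMS.
front_end = sqlite3.connect(":memory:")
back_end = sqlite3.connect(":memory:")

front_end.execute("CREATE TABLE session (user_id INTEGER, token TEXT)")
front_end.executemany(
    "INSERT INTO session VALUES (?, ?)", [(1, "t-1"), (2, "t-2"), (3, "t-3")]
)
back_end.execute("CREATE TABLE user_profile (user_id INTEGER PRIMARY KEY, name TEXT)")
back_end.executemany(
    "INSERT INTO user_profile VALUES (?, ?)", [(1, "Ann"), (2, "Bo")]
)


def sessions_with_names():
    """Inner join of session (front end) and user_profile (back end)."""
    sessions = front_end.execute(
        "SELECT user_id, token FROM session ORDER BY user_id"
    ).fetchall()
    if not sessions:
        return []
    # Fetch only the back-end rows that can match, to keep the transfer small.
    ids = [user_id for user_id, _ in sessions]
    placeholders = ", ".join("?" for _ in ids)
    profiles = back_end.execute(
        f"SELECT user_id, name FROM user_profile WHERE user_id IN ({placeholders})",
        ids,
    ).fetchall()
    names = dict(profiles)  # user_id -> name, used as the lookup side of the join
    return [(uid, token, names[uid]) for uid, token in sessions if uid in names]


print(sessions_with_names())
```

The two queries run in separate databases and are not covered by a single transaction, so the combined result is only as consistent as the replication state at the time of the calls.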
