- •Preface

- •Part IV. Basic Single Equation Analysis

- •Chapter 18. Basic Regression Analysis

- •Equation Objects

- •Specifying an Equation in EViews

- •Estimating an Equation in EViews

- •Equation Output

- •Working with Equations

- •Estimation Problems

- •References

- •Chapter 19. Additional Regression Tools

- •Special Equation Expressions

- •Robust Standard Errors

- •Weighted Least Squares

- •Nonlinear Least Squares

- •Stepwise Least Squares Regression

- •References

- •Chapter 20. Instrumental Variables and GMM

- •Background

- •Two-stage Least Squares

- •Nonlinear Two-stage Least Squares

- •Limited Information Maximum Likelihood and K-Class Estimation

- •Generalized Method of Moments

- •IV Diagnostics and Tests

- •References

- •Chapter 21. Time Series Regression

- •Serial Correlation Theory

- •Testing for Serial Correlation

- •Estimating AR Models

- •ARIMA Theory

- •Estimating ARIMA Models

- •ARMA Equation Diagnostics

- •References

- •Chapter 22. Forecasting from an Equation

- •Forecasting from Equations in EViews

- •An Illustration

- •Forecast Basics

- •Forecasts with Lagged Dependent Variables

- •Forecasting with ARMA Errors

- •Forecasting from Equations with Expressions

- •Forecasting with Nonlinear and PDL Specifications

- •References

- •Chapter 23. Specification and Diagnostic Tests

- •Background

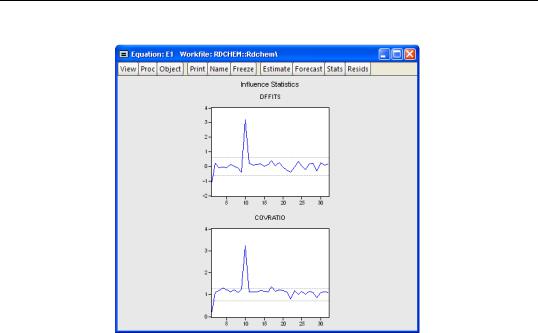

- •Coefficient Diagnostics

- •Residual Diagnostics

- •Stability Diagnostics

- •Applications

- •References

- •Part V. Advanced Single Equation Analysis

- •Chapter 24. ARCH and GARCH Estimation

- •Basic ARCH Specifications

- •Estimating ARCH Models in EViews

- •Working with ARCH Models

- •Additional ARCH Models

- •Examples

- •References

- •Chapter 25. Cointegrating Regression

- •Background

- •Estimating a Cointegrating Regression

- •Testing for Cointegration

- •Working with an Equation

- •References

- •Binary Dependent Variable Models

- •Ordered Dependent Variable Models

- •Censored Regression Models

- •Truncated Regression Models

- •Count Models

- •Technical Notes

- •References

- •Chapter 27. Generalized Linear Models

- •Overview

- •How to Estimate a GLM in EViews

- •Examples

- •Working with a GLM Equation

- •Technical Details

- •References

- •Chapter 28. Quantile Regression

- •Estimating Quantile Regression in EViews

- •Views and Procedures

- •Background

- •References

- •Chapter 29. The Log Likelihood (LogL) Object

- •Overview

- •Specification

- •Estimation

- •LogL Views

- •LogL Procs

- •Troubleshooting

- •Limitations

- •Examples

- •References

- •Part VI. Advanced Univariate Analysis

- •Chapter 30. Univariate Time Series Analysis

- •Unit Root Testing

- •Panel Unit Root Test

- •Variance Ratio Test

- •BDS Independence Test

- •References

- •Part VII. Multiple Equation Analysis

- •Chapter 31. System Estimation

- •Background

- •System Estimation Methods

- •How to Create and Specify a System

- •Working With Systems

- •Technical Discussion

- •References

- •Vector Autoregressions (VARs)

- •Estimating a VAR in EViews

- •VAR Estimation Output

- •Views and Procs of a VAR

- •Structural (Identified) VARs

- •Vector Error Correction (VEC) Models

- •A Note on Version Compatibility

- •References

- •Chapter 33. State Space Models and the Kalman Filter

- •Background

- •Specifying a State Space Model in EViews

- •Working with the State Space

- •Converting from Version 3 Sspace

- •Technical Discussion

- •References

- •Chapter 34. Models

- •Overview

- •An Example Model

- •Building a Model

- •Working with the Model Structure

- •Specifying Scenarios

- •Using Add Factors

- •Solving the Model

- •Working with the Model Data

- •References

- •Part VIII. Panel and Pooled Data

- •Chapter 35. Pooled Time Series, Cross-Section Data

- •The Pool Workfile

- •The Pool Object

- •Pooled Data

- •Setting up a Pool Workfile

- •Working with Pooled Data

- •Pooled Estimation

- •References

- •Chapter 36. Working with Panel Data

- •Structuring a Panel Workfile

- •Panel Workfile Display

- •Panel Workfile Information

- •Working with Panel Data

- •Basic Panel Analysis

- •References

- •Chapter 37. Panel Estimation

- •Estimating a Panel Equation

- •Panel Estimation Examples

- •Panel Equation Testing

- •Estimation Background

- •References

- •Part IX. Advanced Multivariate Analysis

- •Chapter 38. Cointegration Testing

- •Johansen Cointegration Test

- •Single-Equation Cointegration Tests

- •Panel Cointegration Testing

- •References

- •Chapter 39. Factor Analysis

- •Creating a Factor Object

- •Rotating Factors

- •Estimating Scores

- •Factor Views

- •Factor Procedures

- •Factor Data Members

- •An Example

- •Background

- •References

- •Appendix B. Estimation and Solution Options

- •Setting Estimation Options

- •Optimization Algorithms

- •Nonlinear Equation Solution Methods

- •References

- •Appendix C. Gradients and Derivatives

- •Gradients

- •Derivatives

- •References

- •Appendix D. Information Criteria

- •Definitions

- •Using Information Criteria as a Guide to Model Selection

- •References

- •Appendix E. Long-run Covariance Estimation

- •Technical Discussion

- •Kernel Function Properties

- •References

- •Index

- •Symbols

- •Numerics

186—Chapter 23. Specification and Diagnostic Tests

Applications

For illustrative purposes, we provide a demonstration of how to carry out some other specification tests in EViews. For brevity, the discussion is based on commands, but most of these procedures can also be carried out using the menu system.

A Wald Test of Structural Change with Unequal Variance

The F-statistics reported in the Chow tests have an F-distribution only if the errors are independent and identically normally distributed. This restriction implies that the residual variance in the two subsamples must be equal.

Suppose now that we wish to compute a Wald statistic for structural change with unequal subsample variances. Denote the parameter estimates and their covariance matrix in subsample $i$ as $b_i$ and $V_i$ for $i = 1, 2$. Under the assumption that $b_1$ and $b_2$ are independent normal random variables, the difference $b_1 - b_2$ has mean zero and variance $V_1 + V_2$. Therefore, a Wald statistic for the null hypothesis of no structural change and independent samples can be constructed as:

$$
W = (b_1 - b_2)'(V_1 + V_2)^{-1}(b_1 - b_2), \tag{23.47}
$$

which has an asymptotic $\chi^2$ distribution with degrees of freedom equal to the number of estimated parameters in the $b$ vector.

To carry out this test in EViews, we estimate the model in each subsample and save the estimated coefficients and their covariance matrix. For example, consider the quarterly macroeconomic data in the workfile “Coef_test2.WF1” (containing data for 1947q1–1994q4) and suppose we wish to test whether there was a structural change in the consumption function in 1973q1. First, estimate the model in the first sample and save the results with the commands:

coef(2) b1

smpl 1947q1 1972q4

equation eq_1.ls log(cs)=b1(1)+b1(2)*log(gdp)

sym v1=eq_1.@cov

The first line declares the coefficient vector, B1, into which we will place the coefficient estimates in the first sample. Note that the equation specification in the third line explicitly refers to elements of this coefficient vector. The last line saves the coefficient covariance matrix as a symmetric matrix named V1. Similarly, estimate the model in the second sample and save the results by the commands:

coef(2) b2

smpl 1973q1 1994q4

equation eq_2.ls log(cs)=b2(1)+b2(2)*log(gdp)

sym v2=eq_2.@cov

To compute the Wald statistic, use the command:

matrix wald=@transpose(b1-b2)*@inverse(v1+v2)*(b1-b2)

The Wald statistic is saved in the 1 × 1 matrix named WALD. To see the value, either double click on WALD or type “show wald”. You can compare this value with the critical values from the $\chi^2$ distribution with 2 degrees of freedom. Alternatively, you can compute the p-value in EViews using the command:

scalar wald_p=1-@cchisq(wald(1,1),2)

The p-value is saved as a scalar named WALD_P. To see the p-value, double click on WALD_P or type “show wald_p”. The WALD statistic value of 53.1243 has an associated p-value of 2.9e-12, so we decisively reject the null hypothesis of no structural change.
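As a rough cross-check on the reported p-value (a worked calculation added for illustration, not part of the original example), note that for 2 degrees of freedom the upper tail of the chi-square distribution has a simple closed form:

$$
P(\chi^2_2 > w) = e^{-w/2}, \qquad e^{-53.1243/2} = e^{-26.56215} \approx 2.9 \times 10^{-12}.
$$

The same formula puts the 5% critical value at $-2\ln(0.05) \approx 5.99$, far below the computed statistic. This shortcut applies only when the test has exactly 2 degrees of freedom; in other cases the p-value should be computed with the chi-square distribution function as in the command above.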

The Hausman Test

A widely used class of tests in econometrics is the Hausman test. The underlying idea of the Hausman test is to compare two sets of estimates, one of which is consistent under both the null and the alternative and another which is consistent only under the null hypothesis. A large difference between the two sets of estimates is taken as evidence in favor of the alternative hypothesis.

Hausman (1978) originally proposed a test statistic for endogeneity based upon a direct comparison of coefficient values. Here, we illustrate the version of the Hausman test proposed by Davidson and MacKinnon (1989, 1993), which carries out the test by running an auxiliary regression.

The following equation in the “Basics.WF1” workfile was estimated by OLS:

Dependent Variable: LOG(M1)

Method: Least Squares

Date: 08/10/09 Time: 16:08

Sample (adjusted): 1959M02 1995M04

Included observations: 435 after adjustments

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -0.022699 | 0.004443 | -5.108528 | 0.0000 |
| LOG(IP) | 0.011630 | 0.002585 | 4.499708 | 0.0000 |
| DLOG(PPI) | -0.024886 | 0.042754 | -0.582071 | 0.5608 |
| TB3 | -0.000366 | 9.91E-05 | -3.692675 | 0.0003 |
| LOG(M1(-1)) | 0.996578 | 0.001210 | 823.4440 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.999953 | Mean dependent var | 5.844581 |
| Adjusted R-squared | 0.999953 | S.D. dependent var | 0.670596 |
| S.E. of regression | 0.004601 | Akaike info criterion | -7.913714 |
| Sum squared resid | 0.009102 | Schwarz criterion | -7.866871 |
| Log likelihood | 1726.233 | Hannan-Quinn criter. | -7.895226 |
| F-statistic | 2304897. | Durbin-Watson stat | 1.265920 |
| Prob(F-statistic) | 0.000000 | | |

Suppose we are concerned that industrial production (IP) is endogenously determined with money (M1) through the money supply function. If endogeneity is present, then OLS estimates will be biased and inconsistent. To test this hypothesis, we need to find a set of instrumental variables that are correlated with the “suspect” variable IP but not with the error term of the money demand equation. The choice of the appropriate instrument is a crucial step. Here, we take the unemployment rate (URATE) and Moody’s AAA corporate bond yield (AAA) as instruments.

To carry out the Hausman test by artificial regression, we run two OLS regressions. In the first regression, we regress the suspect variable (log) IP on all exogenous variables and instruments and retrieve the residuals:

equation eq_test.ls log(ip) c dlog(ppi) tb3 log(m1(-1)) urate aaa

eq_test.makeresid res_ip

Then in the second regression, we re-estimate the money demand function including the residuals from the first regression as additional regressors. The result is:

Dependent Variable: LOG(M1)

Method: Least Squares

Date: 08/10/09 Time: 16:11

Sample (adjusted): 1959M02 1995M04

Included observations: 435 after adjustments

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | -0.007145 | 0.007473 | -0.956158 | 0.3395 |
| LOG(IP) | 0.001560 | 0.004672 | 0.333832 | 0.7387 |
| DLOG(PPI) | 0.020233 | 0.045935 | 0.440465 | 0.6598 |
| TB3 | -0.000185 | 0.000121 | -1.527775 | 0.1273 |
| LOG(M1(-1)) | 1.001093 | 0.002123 | 471.4894 | 0.0000 |
| RES_IP | 0.014428 | 0.005593 | 2.579826 | 0.0102 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.999954 | Mean dependent var | 5.844581 |
| Adjusted R-squared | 0.999954 | S.D. dependent var | 0.670596 |
| S.E. of regression | 0.004571 | Akaike info criterion | -7.924511 |
| Sum squared resid | 0.008963 | Schwarz criterion | -7.868300 |
| Log likelihood | 1729.581 | Hannan-Quinn criter. | -7.902326 |
| F-statistic | 1868171. | Durbin-Watson stat | 1.307838 |
| Prob(F-statistic) | 0.000000 | | |

If the OLS estimates are consistent, then the coefficient on the first stage residuals should not be significantly different from zero. In this example, the coefficient on RES_IP has a t-statistic of 2.58 (p-value 0.0102), so the test rejects the hypothesis of consistent OLS estimates at the 5% level, though not at the 1% level.
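The text does not list the command for the second regression. A minimal sketch of how it might be entered is given below, mirroring the syntax of the first-stage command. The equation name EQ_HAUS is hypothetical (it is an assumption, not a name used in the workfile), while RES_IP is the residual series created by the makeresid command, and the regressor order follows the output shown above.

```
' Auxiliary (second) regression for the Hausman test: the original
' money demand specification plus the first-stage residuals RES_IP.
' EQ_HAUS is a hypothetical equation name chosen for this sketch.
equation eq_haus.ls log(m1) c log(ip) dlog(ppi) tb3 log(m1(-1)) res_ip
```

The test itself is then read directly from the t-statistic and p-value reported for RES_IP in the estimation output.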

Note that an alternative form of a regressor endogeneity test may be computed using the Regressor Endogeneity Test view of an equation estimated by TSLS or GMM (see “Regressor Endogeneity Test” on page 79).

Non-nested Tests

Most of the tests discussed in this chapter are nested tests in which the null hypothesis is obtained as a special case of the alternative hypothesis. Now consider the problem of choosing between the following two specifications of a consumption function:

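$$
\begin{aligned}
H_1:\quad CS_t &= \alpha_1 + \alpha_2 GDP_t + \alpha_3 GDP_{t-1} + \epsilon_t \\
H_2:\quad CS_t &= \beta_1 + \beta_2 GDP_t + \beta_3 CS_{t-1} + \epsilon_t
\end{aligned}
\tag{23.48}
$$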

for the variables in the workfile “Coef_test2.WF1”. These are examples of non-nested models since neither model may be expressed as a restricted version of the other.

The J-test proposed by Davidson and MacKinnon (1993) provides one method of choosing between two non-nested models. The idea is that if one model is the correct model, then the fitted values from the other model should not have explanatory power when estimating that model. For example, to test model $H_1$ against model $H_2$, we first estimate model $H_2$ and retrieve the fitted values:

equation eq_cs2.ls cs c gdp cs(-1)

eq_cs2.fit(f=na) cs2

The second line saves the fitted values as a series named CS2. Then estimate model $H_1$ including the fitted values from model $H_2$. The result is:

Dependent Variable: CS

Method: Least Squares

Date: 08/10/09 Time: 16:17

Sample (adjusted): 1947Q2 1994Q4

Included observations: 191 after adjustments

| Variable | Coefficient | Std. Error | t-Statistic | Prob. |
|---|---|---|---|---|
| C | 7.313232 | 4.391305 | 1.665389 | 0.0975 |
| GDP | 0.278749 | 0.029278 | 9.520694 | 0.0000 |
| GDP(-1) | -0.314540 | 0.029287 | -10.73978 | 0.0000 |
| CS2 | 1.048470 | 0.019684 | 53.26506 | 0.0000 |

| Statistic | Value | Statistic | Value |
|---|---|---|---|
| R-squared | 0.999833 | Mean dependent var | 1953.966 |
| Adjusted R-squared | 0.999830 | S.D. dependent var | 848.4387 |
| S.E. of regression | 11.05357 | Akaike info criterion | 7.664104 |
| Sum squared resid | 22847.93 | Schwarz criterion | 7.732215 |
| Log likelihood | -727.9220 | Hannan-Quinn criter. | 7.691692 |
| F-statistic | 373074.4 | Durbin-Watson stat | 2.253186 |
| Prob(F-statistic) | 0.000000 | | |

The fitted values from model $H_2$ enter significantly in model $H_1$ (the t-statistic on CS2 is 53.27), so we reject model $H_1$.
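The command for this augmented regression is not listed in the text. One way it might be written, following the syntax of the earlier commands, is sketched below. The equation name EQ_CS1 is hypothetical (an assumption for illustration), while CS2 is the fitted series saved by the fit command, and the regressor order follows the output shown above.

```
' J-test of H1 against H2: estimate H1 augmented with CS2, the fitted
' values from H2. EQ_CS1 is a hypothetical equation name.
equation eq_cs1.ls cs c gdp gdp(-1) cs2
```

The J-test is then the t-test on the CS2 coefficient: a significant coefficient indicates that model $H_2$ explains variation in CS that model $H_1$ does not.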

We may also test model $H_2$ against model $H_1$. First, estimate model $H_1$ and retrieve the fitted values:

equation eq_cs1a.ls cs c gdp gdp(-1)

eq_cs1a.fit(f=na) cs1

Then estimate model $H_2$ including the fitted values from model $H_1$. The results of this “reverse” test regression are given by: