Table of Contents

- Foreword
- Chapter 1. A Quick Walk Through
  - Workfile: The Basic EViews Document
  - Viewing an individual series
  - Looking at different samples
  - Generating a new series
  - Looking at a pair of series together
  - Estimating your first regression in EViews
  - Saving your work
  - Forecasting
  - What’s Ahead
- Chapter 2. EViews—Meet Data
  - The Structure of Data and the Structure of a Workfile
  - Creating a New Workfile
  - Deconstructing the Workfile
  - Time to Type
  - Identity Noncrisis
  - Dated Series
  - The Import Business
  - Adding Data To An Existing Workfile—Or, Being Rectangular Doesn’t Mean Being Inflexible
  - Among the Missing
  - Quick Review
  - Appendix: Having A Good Time With Your Date
- Chapter 3. Getting the Most from Least Squares
  - A First Regression
  - The Really Important Regression Results
  - The Pretty Important (But Not So Important As the Last Section’s) Regression Results
  - A Multiple Regression Is Simple Too
  - Hypothesis Testing
  - Representing
  - What’s Left After You’ve Gotten the Most Out of Least Squares
  - Quick Review
- Chapter 4. Data—The Transformational Experience
  - Your Basic Elementary Algebra
  - Simple Sample Says
  - Data Types Plain and Fancy
  - Numbers and Letters
  - Can We Have A Date?
  - What Are Your Values?
  - Relative Exotica
  - Quick Review
- Chapter 5. Picture This!
  - A Simple Soup-To-Nuts Graphing Example
  - A Graphic Description of the Creative Process
  - Picture One Series
  - Group Graphics
  - Let’s Look At This From Another Angle
  - To Summarize
  - Categorical Graphs
  - Togetherness of the Second Sort
  - Quick Review and Look Ahead
- Chapter 6. Intimacy With Graphic Objects
  - To Freeze Or Not To Freeze Redux
  - A Touch of Text
  - Shady Areas and No-Worry Lines
  - Templates for Success
  - Point Me The Way
  - Your Data Another Sorta Way
  - Give A Graph A Fair Break
  - Options, Options, Options
  - Quick Review?
- Chapter 7. Look At Your Data
  - Sorting Things Out
  - Describing Series—Just The Facts Please
  - Describing Series—Picturing the Distribution
  - Tests On Series
  - Describing Groups—Just the Facts—Putting It Together
- Chapter 8. Forecasting
  - Just Push the Forecast Button
  - Theory of Forecasting
  - Dynamic Versus Static Forecasting
  - Sample Forecast Samples
  - Facing the Unknown
  - Forecast Evaluation
  - Forecasting Beneath the Surface
  - Quick Review—Forecasting
- Chapter 9. Page After Page After Page
  - Pages Are Easy To Reach
  - Creating New Pages
  - Renaming, Deleting, and Saving Pages
  - Multi-Page Workfiles—The Most Basic Motivation
  - Multiple Frequencies—Multiple Pages
  - Links—The Live Connection
  - Unlinking
  - Have A Match?
  - Matching When The Identifiers Are Really Different
  - Contracted Data
  - Expanded Data
  - Having Contractions
  - Two Hints and A GotchYa
  - Quick Review
- Chapter 10. Prelude to Panel and Pool
  - Pooled or Paneled Population
  - Nuances
  - So What Are the Benefits of Using Pools and Panels?
  - Quick (P)review
- Chapter 11. Panel—What’s My Line?
  - What’s So Nifty About Panel Data?
  - Setting Up Panel Data
  - Panel Estimation
  - Pretty Panel Pictures
  - More Panel Estimation Techniques
  - One Dimensional Two-Dimensional Panels
  - Fixed Effects With and Without the Social Contrivance of Panel Structure
  - Quick Review—Panel
- Chapter 12. Everyone Into the Pool
  - Getting Your Feet Wet
  - Playing in the Pool—Data
  - Getting Out of the Pool
  - More Pool Estimation
  - Getting Data In and Out of the Pool
  - Quick Review—Pools
- Chapter 13. Serial Correlation—Friend or Foe?
  - Visual Checks
  - Testing for Serial Correlation
  - More General Patterns of Serial Correlation
  - Correcting for Serial Correlation
  - Forecasting
  - ARMA and ARIMA Models
  - Quick Review
- Chapter 14. A Taste of Advanced Estimation
  - Weighted Least Squares
  - Heteroskedasticity
  - Nonlinear Least Squares
  - Generalized Method of Moments
  - Limited Dependent Variables
  - ARCH, etc.
  - Maximum Likelihood—Rolling Your Own
  - System Estimation
  - Vector Autoregressions—VAR
  - Quick Review?
- Chapter 15. Super Models
  - Your First Homework—Bam, Taken Up A Notch!
  - Looking At Model Solutions
  - More Model Information
  - Your Second Homework
  - Simulating VARs
  - Rich Super Models
  - Quick Review
- Chapter 16. Get With the Program
  - I Want To Do It Over and Over Again
  - You Want To Have An Argument
  - Program Variables
  - Loopy
  - Other Program Controls
  - A Rolling Example
  - Quick Review
  - Appendix: Sample Programs
- Chapter 17. Odds and Ends
  - How Much Data Can EViews Handle?
  - How Long Does It Take To Compute An Estimate?
  - Freeze!
  - A Comment On Tables
  - Saving Tables and Almost Tables
  - Saving Graphs and Almost Graphs
  - Unsubtle Redirection
  - Objects and Commands
  - Workfile Backups
  - Updates—A Small Thing
  - Updates—A Big Thing
  - Ready To Take A Break?
  - Help!
  - Odd Ending
- Chapter 18. Optional Ending
  - Required Options
  - Option-al Recommendations
  - More Detailed Options
  - Window Behavior
  - Font Options
  - Frequency Conversion
  - Alpha Truncation
  - Spreadsheet Defaults
  - Workfile Storage Defaults
  - Estimation Defaults
  - File Locations
  - Graphics Defaults
  - Quick Review
- Index
  - Symbols

Testing for Serial Correlation—319

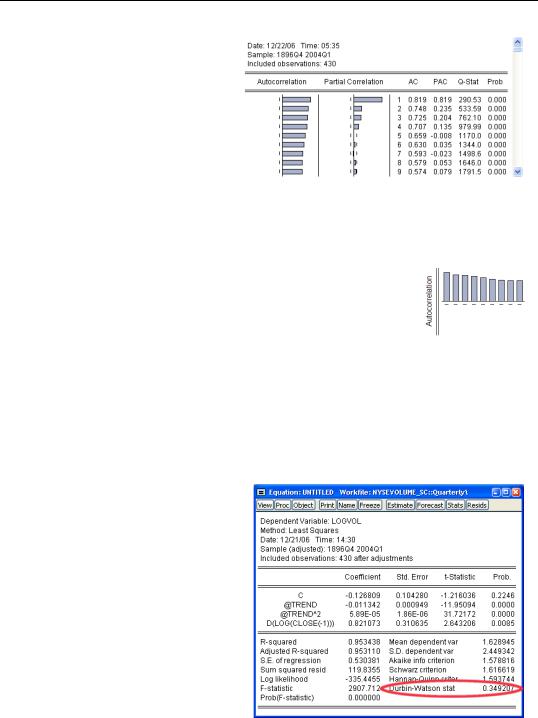

To plot the autocorrelations of the residuals, click the View button and choose the menu item Residual Diagnostics/Correlogram - Q-statistics…. Choose the number of autocorrelations you want to see (the default, 36, is fine), and EViews pops up with a combined graphical and numeric look at the autocorrelations. The unlabeled column in the middle of the display gives the lag number (1, 2, 3, and so on). The column marked AC gives the estimated autocorrelation at the corresponding lag. This correlogram shows substantial and persistent autocorrelation.

The left-most column gives the autocorrelations as a bar graph. The graph is a little easier to read if you rotate your head 90 degrees to put the autocorrelations on the vertical axis and the lags on the horizontal. This gives a picture something like the one to the right, showing slowly declining autocorrelations.
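EViews computes the AC column for you. To illustrate the arithmetic, here is a minimal Python sketch of the standard sample-autocorrelation estimator. It takes deviations from the mean, forms the lag-k cross products, and divides by the total sum of squares. The function name `sample_acf` is hypothetical. We assume, without checking every EViews detail, that this is the estimator behind the AC column. The small text bar chart at the end mimics the left-most column of the correlogram.

```python
import random


def sample_acf(resid, max_lag):
    """Sample autocorrelations r_1, ..., r_max_lag of a residual series.

    r_k = sum_{t=k+1}^{n} d_t * d_{t-k} / sum_{t=1}^{n} d_t^2,
    where d_t is the deviation of the t-th residual from the sample mean.
    """
    n = len(resid)
    mean = sum(resid) / n
    dev = [x - mean for x in resid]
    denom = sum(d * d for d in dev)
    acf = []
    for k in range(1, max_lag + 1):
        num = sum(dev[t] * dev[t - k] for t in range(k, n))
        acf.append(num / denom)
    return acf


if __name__ == "__main__":
    # Hypothetical persistent residuals: u_t = 0.9 u_{t-1} + e_t.
    rng = random.Random(42)
    u = [0.0]
    for _ in range(499):
        u.append(0.9 * u[-1] + rng.gauss(0.0, 1.0))
    # Text version of the correlogram's bar graph: bar length tracks |r_k|.
    for lag, r in enumerate(sample_acf(u, 10), start=1):
        print(f"{lag:3d} {r:6.3f} " + "*" * int(round(20 * abs(r))))
```

With strongly persistent residuals like these, the bars should shrink slowly as the lag grows. This is the same slow decline the correlogram above displays.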

Testing for Serial Correlation

Visual checks provide a great deal of information, but you’ll probably want to follow up with one or more formal statistical tests for serial correlation. EViews provides three test statistics: the Durbin-Watson, the Breusch-Godfrey, and the Ljung-Box Q-statistic.

Durbin-Watson Statistic

The Durbin-Watson, or DW, statistic is the traditional test for serial correlation. For reasons discussed below, the DW is no longer the test statistic preferred by most econometricians. Nonetheless, it is widely used in practice and performs excellently in most situations. The Durbin-Watson tradition is so strong that EViews routinely reports it in the lower panel of regression output.

The Durbin-Watson statistic is unusual in that under the null hypothesis (no serial correlation) the Durbin-Watson centers around 2.0 rather than 0. You can roughly translate between the Durbin-Watson and the serial correlation coefficient, $\rho$, using the formulas:

$$DW \approx 2 - 2\rho$$

$$\rho \approx 1 - \frac{DW}{2}$$

If the serial correlation coefficient is zero, the Durbin-Watson is about 2. As the serial correlation coefficient heads toward 1.0, the Durbin-Watson heads toward 0.

To test the hypothesis of no serial correlation, compare the reported Durbin-Watson to a table of critical values. In this example, the Durbin-Watson of 0.349 is far below 2 and clearly rejects the hypothesis of no serial correlation.
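The Durbin-Watson statistic itself is simple arithmetic on the residuals. It is the sum of squared period-to-period changes in the residual, divided by the sum of squared residuals. The Python sketch below computes it and applies the rough conversion formulas given earlier. The function names are hypothetical. The sketch does not compute critical values or p-values.

```python
def durbin_watson(resid):
    """DW = sum_{t=2}^{n} (e_t - e_{t-1})^2 / sum_{t=1}^{n} e_t^2."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e * e for e in resid)
    return num / den


def dw_from_rho(rho):
    """Rough translation: DW is approximately 2 - 2*rho."""
    return 2 - 2 * rho


def rho_from_dw(dw):
    """Rough translation: rho is approximately 1 - DW/2."""
    return 1 - dw / 2


# Residuals that flip sign every period push DW toward 4 (negative correlation);
# residuals that never change give DW = 0 (the extreme of positive correlation).
print(durbin_watson([1.0, -1.0, 1.0, -1.0]))  # 3.0
print(durbin_watson([1.0, 1.0, 1.0, 1.0]))    # 0.0
# The example's DW of 0.349 implies a serial correlation coefficient of roughly 0.83.
print(f"{rho_from_dw(0.349):.2f}")            # 0.83
```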

Hint: EViews doesn’t compute p-values for the Durbin-Watson.

The Durbin-Watson has a number of shortcomings, one of which is that the standard tables include intervals for which the test statistic is inconclusive. Econometric Theory and Methods, by Davidson and MacKinnon, says:

…the Durbin-Watson statistic, despite its popularity, is not very satisfactory.… the DW statistic is not valid when the regressors include lagged dependent variables, and it cannot be easily generalized to test for higher-order processes.

While we recommend the more modern Breusch-Godfrey in place of the Durbin-Watson, the truth is that the tests usually agree.

Econometric warning: But never use the Durbin-Watson when there’s a lagged dependent variable on the right-hand side of the equation.

Breusch-Godfrey Statistic

The preferred test statistic for checking for serial correlation is the Breusch-Godfrey. From the View menu choose Residual Diagnostics/Serial Correlation LM Test… to pop open a small dialog where you enter the degree of serial correlation you’re interested in testing. In other words, if you’re interested in first-order serial correlation, change Lags to include to 1.


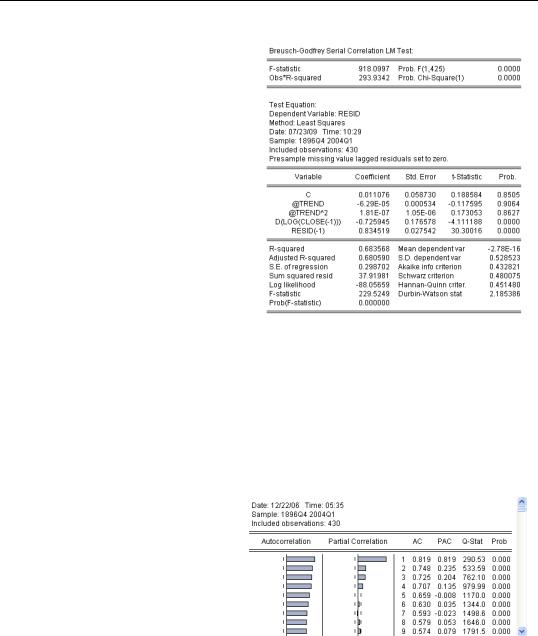

The view to the right shows the results of testing for first-order serial correlation. The top part of the output gives the test results in two versions: an F-statistic and a χ² statistic. (There’s no great reason to prefer one over the other.) Associated p-values are shown next to each statistic. For our stock market volume data, the hypothesis of no serial correlation is easily rejected.

The bottom part of the view provides extra information showing the auxiliary regression used to create the test statistics reported at the top. This extra regression is sometimes interesting, but you don’t need it for conducting the test.
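The auxiliary regression is easy to reproduce by hand. The Python sketch below assumes NumPy is available. It regresses the OLS residuals on the original regressors plus p lagged residuals. It then reports the Obs*R-squared (LM) version of the statistic, which is approximately χ² with p degrees of freedom under the null of no serial correlation. Two details are assumptions rather than a description of EViews internals: presample lagged residuals are filled with zeros, and the function name `breusch_godfrey` is hypothetical. EViews’ own output remains the authority.

```python
import numpy as np


def breusch_godfrey(y, X, p):
    """Obs*R-squared form of the Breusch-Godfrey LM test for AR(p) errors.

    y: (T,) dependent variable; X: (T, k) regressors including a constant.
    Returns (LM, p). Under no serial correlation, LM is approximately
    chi-squared with p degrees of freedom.
    """
    y = np.asarray(y, dtype=float)
    X = np.asarray(X, dtype=float)
    T = len(y)
    # Original regression and its residuals u.
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    u = y - X @ beta
    # Columns holding u_{t-1}, ..., u_{t-p}; presample values set to zero (assumption).
    lagged = np.zeros((T, p))
    for j in range(1, p + 1):
        lagged[j:, j - 1] = u[:-j]
    Z = np.column_stack([X, lagged])
    # Auxiliary regression: u on the original regressors and the lagged residuals.
    gamma, *_ = np.linalg.lstsq(Z, u, rcond=None)
    v = u - Z @ gamma
    centered = u - u.mean()
    r_squared = 1.0 - (v @ v) / (centered @ centered)
    return T * r_squared, p


if __name__ == "__main__":
    # Hypothetical data with strongly autocorrelated AR(1) errors.
    rng = np.random.default_rng(0)
    T = 200
    x = rng.normal(size=T)
    u = np.zeros(T)
    for t in range(1, T):
        u[t] = 0.9 * u[t - 1] + rng.normal()
    y = 1.0 + 2.0 * x + u
    X = np.column_stack([np.ones(T), x])
    lm, df = breusch_godfrey(y, X, 1)
    # Compare with the 5% chi-squared(1) critical value, about 3.84.
    print(f"LM = {lm:.1f} with {df} df; reject at 5%: {lm > 3.84}")
```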

Ljung-Box Q-statistic

A different approach to checking for serial correlation is to plot the correlation of the residual with the residual lagged once, the residual with the residual lagged twice, and so on. As we saw above in The Correlogram, this plot is called the correlogram of the residuals. If there is no serial correlation, then the correlations should all be zero, apart from random fluctuation.

To see the correlogram, choose Residual Diagnostics/Correlogram - Q-statistics… from the View menu. A small dialog pops open allowing you to specify the number of correlations to show.

The correlogram for the residuals from our volume equation is repeated to the right. The column headed “Q-Stat” gives the Ljung-Box Q-statistic. In each row, it tests the hypothesis that all the correlations up to and including that row’s lag equal zero. The column marked “Prob” gives the corresponding p-value. Continuing along with the example, the Q-statistic against the hypothesis that both the first and second correlations equal zero is 553.59. The probability of getting this statistic by chance is zero to three decimal places. So for this equation, the Ljung-Box Q-statistic agrees with the evidence in favor of serial correlation that we got from the Durbin-Watson and the Breusch-Godfrey.
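For reference, the standard Ljung-Box statistic for row m is $Q_m = n(n+2)\sum_{k=1}^{m} r_k^2/(n-k)$. Here $r_k$ is the lag-k sample autocorrelation and n is the number of observations. The hypothetical Python function below computes the whole Q-Stat column. Each row adds a nonnegative term, so Q can only grow as you move down the column. P-values are left out because they would require a χ² distribution function (approximately m degrees of freedom for row m under the null).

```python
def ljung_box_q(resid, max_lag):
    """Ljung-Box Q-statistics for rows m = 1, ..., max_lag.

    Q_m = n (n + 2) * sum_{k=1}^{m} r_k^2 / (n - k), where r_k is the
    lag-k sample autocorrelation of resid. Row m tests r_1 = ... = r_m = 0.
    """
    n = len(resid)
    mean = sum(resid) / n
    dev = [x - mean for x in resid]
    denom = sum(d * d for d in dev)
    q_values = []
    running = 0.0
    for k in range(1, max_lag + 1):
        r_k = sum(dev[t] * dev[t - k] for t in range(k, n)) / denom
        running += r_k * r_k / (n - k)  # accumulate the weighted squared correlations
        q_values.append(n * (n + 2) * running)
    return q_values


# A tiny made-up series: five observations, rows for lags 1 and 2.
print(ljung_box_q([1, 2, 3, 4, 5], 2))
```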

Hint: The number of correlations used in the Q-statistic does not correspond to the order of serial correlation. If there is first-order serial correlation, then the residual correlations at all lags differ from zero, although the correlation diminishes as the lag increases.

More General Patterns of Serial Correlation

The idea of first-order serial correlation can be extended to allow for more than one lag. The correlogram for first-order serial correlation always follows geometric decay. Higher-order serial correlation can produce more complex patterns in the correlogram, which also decay gradually. In contrast, moving average processes, discussed below, produce a correlogram that falls abruptly to zero after a finite number of periods.
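The contrast between gradual decay and an abrupt cutoff shows up clearly in the theoretical autocorrelations of a few simple processes. The Python sketch below assumes stationary processes and uses three standard formulas:

- $\rho^k$ for an AR(1), $u_t = \rho u_{t-1} + \epsilon_t$.
- The Yule-Walker recursion for an AR(2), $u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \epsilon_t$.
- $\theta/(1+\theta^2)$ at lag 1 and zero afterward for an MA(1), $u_t = \epsilon_t + \theta\epsilon_{t-1}$.

The function names are hypothetical.

```python
def acf_ar1(rho, max_lag):
    """AR(1), u_t = rho*u_{t-1} + e_t: lag-k autocorrelation is rho**k (geometric decay)."""
    return [rho ** k for k in range(1, max_lag + 1)]


def acf_ar2(rho1, rho2, max_lag):
    """AR(2), u_t = rho1*u_{t-1} + rho2*u_{t-2} + e_t, via the Yule-Walker recursion.

    r_0 = 1, r_1 = rho1 / (1 - rho2), r_k = rho1*r_{k-1} + rho2*r_{k-2} for k >= 2.
    """
    r = [1.0, rho1 / (1 - rho2)]
    for k in range(2, max_lag + 1):
        r.append(rho1 * r[k - 1] + rho2 * r[k - 2])
    return r[1:max_lag + 1]


def acf_ma1(theta, max_lag):
    """MA(1), u_t = e_t + theta*e_{t-1}: theta/(1+theta^2) at lag 1, zero beyond."""
    return [theta / (1 + theta ** 2) if k == 1 else 0.0 for k in range(1, max_lag + 1)]


# AR(1) decays geometrically, this AR(2) decays with damped oscillation,
# and MA(1) drops to zero after lag 1.
for name, values in [
    ("AR(1), rho=0.8", acf_ar1(0.8, 6)),
    ("AR(2), rho1=1.2, rho2=-0.5", acf_ar2(1.2, -0.5, 6)),
    ("MA(1), theta=0.8", acf_ma1(0.8, 6)),
]:
    print(f"{name:28s}", " ".join(f"{v:6.3f}" for v in values))
```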

Higher-Order Serial Correlation

First-order serial correlation is the simplest pattern by which errors in a regression equation may be correlated over time. This pattern is also called an autoregression of order one, or AR(1), because we can think of the equation for the error terms as being a regression on one lagged value of itself. Analogously, second-order serial correlation, or AR(2), is written

$$u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \epsilon_t.$$

More generally, serial correlation of order p, AR(p), is written

$$u_t = \rho_1 u_{t-1} + \rho_2 u_{t-2} + \dots + \rho_p u_{t-p} + \epsilon_t.$$

When you specify the number of lags for the Breusch-Godfrey test, you’re really specifying the order of the autoregression to be tested.
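To make the AR(p) formula concrete, the sketch below simulates AR(p) errors in Python. It assumes normally distributed innovations $\epsilon_t$ and starts the presample values at zero. It discards a burn-in stretch so the arbitrary starting values matter little. The function name and its defaults are hypothetical. The list `rhos` holds $\rho_1, \dots, \rho_p$, so its length is the order p, the same number you would enter as the Breusch-Godfrey lag order.

```python
import random


def simulate_ar_errors(rhos, n, sigma=1.0, burn_in=200, seed=None):
    """Simulate u_t = rho_1 u_{t-1} + ... + rho_p u_{t-p} + e_t, e_t ~ N(0, sigma^2).

    rhos = [rho_1, ..., rho_p]. Presample values start at zero, and the
    first burn_in simulated values are discarded.
    """
    rng = random.Random(seed)
    p = len(rhos)
    u = [0.0] * p
    for _ in range(burn_in + n):
        e = rng.gauss(0.0, sigma)
        # u[-j] is u_{t-j}: the new value is built from the p most recent errors.
        u.append(sum(rhos[j - 1] * u[-j] for j in range(1, p + 1)) + e)
    return u[p + burn_in:]


# Hypothetical AR(2) errors with rho_1 = 0.5 and rho_2 = 0.3.
errors = simulate_ar_errors([0.5, 0.3], n=8, seed=1)
print([round(x, 3) for x in errors])
```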

Moving Average Errors

A different specification of the pattern of serial correlation in the error term is the moving average, or MA, error. For example, a moving average of order one, or MA(1), would be written

$$u_t = \epsilon_t + \theta \epsilon_{t-1}$$

and a moving average of order q, or MA(q), looks like

$$u_t = \epsilon_t + \theta_1 \epsilon_{t-1} + \dots + \theta_q \epsilon_{t-q}.$$

Note that the moving average error is a weighted average of the current innovation and past innovations. The autoregressive error, by contrast, is a weighted average of the current innovation and past errors.
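A short Python sketch of MA(q) errors highlights this difference: each $u_t$ is built from the current and past innovations $\epsilon$, never from past values of $u$ itself. Normally distributed innovations and the function name are assumptions for illustration. The sketch draws q presample innovations so the first returned value already has a full set of lags.

```python
import random


def simulate_ma_errors(thetas, n, sigma=1.0, seed=None):
    """Simulate u_t = e_t + theta_1 e_{t-1} + ... + theta_q e_{t-q}, e_t ~ N(0, sigma^2).

    Uses the plus-sign convention for the theta coefficients.
    """
    rng = random.Random(seed)
    q = len(thetas)
    # q presample innovations followed by n innovations for the returned periods.
    e = [rng.gauss(0.0, sigma) for _ in range(n + q)]
    return [
        e[t] + sum(thetas[j - 1] * e[t - j] for j in range(1, q + 1))
        for t in range(q, n + q)
    ]


# Hypothetical MA(2) errors with theta_1 = 0.6 and theta_2 = 0.3.
print([round(x, 3) for x in simulate_ma_errors([0.6, 0.3], n=8, seed=1)])
```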

Convention Hint: There are two sign conventions for writing out moving average errors. EViews uses the convention that lagged innovations are added to the current innovation. This is the usual convention in regression analysis. Some texts, mostly in time series analysis, use the convention that lagged innovations are subtracted instead. The choice of convention has no substantive consequence; the estimated MA coefficients simply flip sign.
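Here is a tiny Python check of the sign-convention point. Writing the same MA(1) process with a minus sign just flips the sign of the coefficient. The innovation values are made up for illustration, and the function names are hypothetical.

```python
def ma1_plus(e, theta):
    """Plus convention (the one EViews uses): u_t = e_t + theta * e_{t-1}."""
    return [e[t] + theta * e[t - 1] for t in range(1, len(e))]


def ma1_minus(e, theta):
    """Minus convention: u_t = e_t - theta * e_{t-1}."""
    return [e[t] - theta * e[t - 1] for t in range(1, len(e))]


innovations = [0.3, -1.2, 0.8, 0.5, -0.4]  # made-up innovations
# Same process, two conventions: theta = 0.6 under one is theta = -0.6 under the other.
print(ma1_plus(innovations, 0.6) == ma1_minus(innovations, -0.6))  # True
```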