Jones N.D.Partial evaluation and automatic program generation.1999


Partial evaluation for C by two-level execution

    while (pp != HALT)                 /* Two-level execution of statements */
      switch (stmt_kind(func, pp, pgm))
      {
      case EXPR:                       /* Static expression */
          eval(exp, pstore, lstore, gstore);
          pp += 1; break;
      case _EXPR_:                     /* Dynamic expression */
          gen_expr(reduce(exp, pstore, lstore, gstore));
          pp += 1; break;
      case GOTO:                       /* Static jump */
          pp = target-label; break;
      case _GOTO_:                     /* Dynamic jump */
          lab = seenB4(target-label, pstore, lstore, gstore);
          if (!lab) lab = insert_pending(target-label, pstore, lstore, gstore);
          gen_goto(lab);
          pp = HALT; break;
      case IF:                         /* Static conditional */
          if (eval(test-exp, pstore, lstore, gstore)) pp = then-lab;
          else pp = else-lab;
          break;
      case _IF_:                       /* Dynamic conditional */
          lab1 = seenB4(then-lab, pstore, lstore, gstore);
          if (!lab1) lab1 = insert_pending(then-lab, pstore, lstore, gstore);
          lab2 = seenB4(else-lab, pstore, lstore, gstore);
          if (!lab2) lab2 = insert_pending(else-lab, pstore, lstore, gstore);
          gen_if(reduce(test-exp, pstore, lstore, gstore), lab1, lab2);
          pp = HALT; break;
      case CALL:                       /* Static function call */
          store = eval_param(parameters, pstore, lstore, gstore);
          *eval_lexp(var, pstore, lstore, gstore) = exec_func(fun, store);
          pp += 1; break;
      case _CALL_:                     /* Residual call: specialize */
          store = eval_param(parameters, pstore, lstore, gstore);
          if (!(lab = seen_call(fun, store, gstore)))
              { code_new_fun(); spec_func(fun, store); code_restore_fun(); }
          gen_call(var, fun, store);
          for (n = 0; n < #end-configurations; n++) {
              update(n'th endconf, pstore, lstore, gstore);
              insert_pending(pp+1, pstore, lstore, gstore);
          }
          gen_callbranch();
          pp = HALT; break;
      case _RCALL_:                    /* Recursive residual call: specialize */
          store = eval_param(parameters, pstore, lstore, gstore);
          if (!(lab = seen_call(fun, store, gstore)))
              { code_new_fun(); spec_func(fun, store); code_restore_fun(); }
          update(end-conf, pstore, lstore, gstore);
          gen_call(var, fun, store);
          pp += 1; break;
      case _UCALL_:                    /* Residual call: unfold */
          store = eval_param(parameters, pstore, lstore, gstore);
          gen_param_assign(store);
          spec_func(fun, store);
          pp = HALT; break;
      case _RETURN_:                   /* Dynamic return */
          n = gen_endconf_assign();
          gen_return(reduce(exp, pstore, lstore, gstore));
          save_endconf(n, func, pstore, gstore);
          pp = HALT;
      }

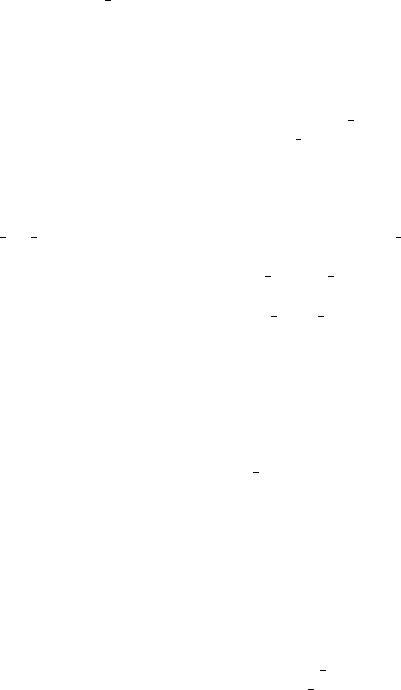

Figure 11.4: Two-level execution of statements.

252 Partial Evaluation for the C Language

Static expressions are evaluated and dynamic expressions are reduced.
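The difference between evaluating and reducing can be illustrated on a toy expression type. The following is a minimal sketch and not C-mix code: the `Expr` type, the single static store `sstore`, and the simplified signatures of `eval` and `reduce` are assumptions made for illustration. Static subexpressions are evaluated to constants, while dynamic ones are rebuilt with their static parts folded in.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical expression tree; not the Core C representation. */
typedef enum { CONST, SVAR, DVAR, ADD } Kind;

typedef struct Expr {
    Kind kind;
    int value;                  /* CONST: the constant; SVAR/DVAR: variable index */
    struct Expr *left, *right;  /* operands of ADD, NULL otherwise */
} Expr;

Expr *mk(Kind kind, int value, Expr *left, Expr *right)
{
    Expr *e = malloc(sizeof *e);   /* never freed: this is only a sketch */
    assert(e != NULL);
    e->kind = kind; e->value = value; e->left = left; e->right = right;
    return e;
}

/* An expression is static when it mentions no dynamic variable. */
int is_static(const Expr *e)
{
    switch (e->kind) {
    case CONST: case SVAR: return 1;
    case DVAR:             return 0;
    default:               return is_static(e->left) && is_static(e->right);
    }
}

/* eval: compute the value of a static expression from the static store. */
int eval(const Expr *e, const int *sstore)
{
    switch (e->kind) {
    case CONST: return e->value;
    case SVAR:  return sstore[e->value];
    case ADD:   return eval(e->left, sstore) + eval(e->right, sstore);
    default:    assert(!"dynamic variable in a static expression"); return 0;
    }
}

/* reduce: build a residual expression in which every static
   subexpression has been replaced by its value. */
Expr *reduce(const Expr *e, const int *sstore)
{
    if (is_static(e))
        return mk(CONST, eval(e, sstore), NULL, NULL);
    if (e->kind == DVAR)
        return mk(DVAR, e->value, NULL, NULL);
    return mk(ADD, 0, reduce(e->left, sstore), reduce(e->right, sstore));
}
```

With static variables holding 2 and 3, reducing `(s0 + s1) + d0` yields the residual expression `5 + d0`.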

In the case of a dynamic goto, the target program point is specialized. If it has not already been specialized with respect to the current values of the static variables, it is inserted into the pending list (insert_pending()). Finally, a residual jump to the residual target point is generated.

Dynamic if is treated in a similar way. Both branches are checked to see whether they have been specialized before, and a residual if is added to the residual code.

Consider function specialization. First it is checked whether the function has already been specialized with respect to the static values. If so, the residual function is shared; otherwise it is specialized via a recursive call to spec_func() (defined below). Next the end-configuration branch is generated (gen_callbranch()), and the following program point is inserted into the pending list to be specialized with respect to each of the static stores obtained by updating according to the saved end-configurations (update()).

To generate a new residual function corresponding to the called function, a library function code_new_fun() is used. This causes the output of the gen_...() functions to be accumulated in the new function. When the function has been specialized, code generation is returned to the previous function by calling code_restore_fun().
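One way to picture this switching of code-generation targets is a stack of output buffers. The sketch below is only an assumption about how such a library could be organized: the buffer layout, the limits `MAXDEPTH` and `BUFSIZE`, the single `gen()` function, and the return value of `code_restore_fun()` are all hypothetical.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

#define MAXDEPTH 16     /* assumed limit on nested function specializations */
#define BUFSIZE  1024   /* assumed size of one residual function body */

static char bodies[MAXDEPTH][BUFSIZE];  /* one buffer per open function */
static int depth = 0;                   /* index of the current buffer */

/* Append a residual statement to the function currently being generated. */
void gen(const char *stmt)
{
    size_t used = strlen(bodies[depth]);
    assert(used + strlen(stmt) + 2 <= BUFSIZE);   /* newline and terminator */
    snprintf(bodies[depth] + used, BUFSIZE - used, "%s\n", stmt);
}

/* Start accumulating code for a new residual function. */
void code_new_fun(void)
{
    assert(depth + 1 < MAXDEPTH);
    depth++;
    bodies[depth][0] = '\0';
}

/* Finish the current function and resume the previous one.
   The returned body stays valid until the next code_new_fun(). */
const char *code_restore_fun(void)
{
    assert(depth > 0);
    return bodies[depth--];
}
```

Statements generated between code_new_fun() and code_restore_fun() end up in the callee's body, and statements generated afterwards continue the caller's body where it left off.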

Online function unfolding can be accomplished as follows. First, assignments of the dynamic actual parameters to the formal parameters are generated (gen_param_assign()). Next, the called function is specialized, but such that residual statements are added to the current function. By treating returns in the called function as gotos to the statement following the call, the remaining statements will be specialized accordingly. In practice, though, the process is more involved, since care must be taken to avoid name clashes and the static store must be updated to cope with side-effects.

In the case of a return, the expression is reduced and a residual return is generated. Furthermore, the values of the static variables are saved (save_endconf()) as part of the residual function.

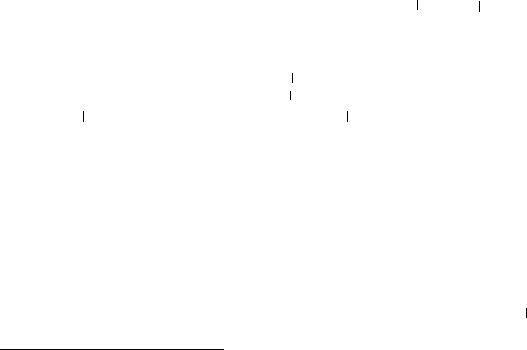

The pending loop

The pending loop driving the polyvariant specialization is outlined in Figure 11.5. As parameters it takes the index of the function to be specialized and the parameter store. It then allocates storage for local variables and initializes the specialization by inserting the first program point into the pending list.

Let $\langle p, s \rangle$ be a specialization point in the pending list, where p is a program point and s a copy of the static store. To specialize p with respect to s, pp is initialized to p, and the values of the static variables are restored according to s (pending_restore()). Finally, the specialization point is marked as `processed' (pending_processed()) such that later specializations of the same program point are shared.

The actual specialization is done by a two-level execution (see Figure 11.4). This is repeated until all pending specialization points have been processed, i.e. until the pending list is empty.
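The interplay between the pending list and the sharing of specialization points can be seen on a toy loop. The sketch below is not the C-mix implementation: it assumes a static store consisting of a single integer `s`, a hypothetical `SpecPoint` record, and a hard-wired subject program `L: if (d) { s = (s + 1) % modulus; goto L; }` with `s` static and `d` dynamic.

```c
#include <assert.h>

#define MAXPENDING 32   /* assumed capacity of the pending list */

typedef struct { int pp; int s; int processed; } SpecPoint;

static SpecPoint pending[MAXPENDING];
static int npending = 0;

/* Residual label (numbered from 1) of the point (pp, s), or 0 if unseen. */
static int seen_before(int pp, int s)
{
    for (int i = 0; i < npending; i++)
        if (pending[i].pp == pp && pending[i].s == s)
            return i + 1;
    return 0;
}

/* Record (pp, s) as pending and return its fresh residual label. */
static int insert_pending(int pp, int s)
{
    assert(npending < MAXPENDING);
    pending[npending].pp = pp;
    pending[npending].s = s;
    pending[npending].processed = 0;
    return ++npending;
}

/* Index of an unprocessed point, or -1 when none is left. */
static int next_unprocessed(void)
{
    for (int i = 0; i < npending; i++)
        if (!pending[i].processed)
            return i;
    return -1;
}

/* Specialize the toy loop and return the number of residual
   program points that were generated. */
int specialize_loop(int s0, int modulus)
{
    int i;
    assert(s0 >= 0 && modulus > 0 && modulus < MAXPENDING);
    npending = 0;
    insert_pending(1, s0);                 /* first program point */
    while ((i = next_unprocessed()) >= 0) {
        int s = pending[i].s;              /* restore the static store */
        pending[i].processed = 1;          /* later requests share this point */
        int next = (s + 1) % modulus;      /* static assignment, done now */
        if (!seen_before(1, next))         /* dynamic goto L */
            insert_pending(1, next);
    }
    return npending;
}
```

Because the static store takes only finitely many values here, the loop terminates: `specialize_loop(0, 3)` produces three residual program points, one per value of `s`.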

    Value *gstore;                     /* Global store */
    Value heap[HEAP];                  /* Heap */

    int spec_func(int func, Value *pstore)
    {
        int pp;                        /* Program point */
        Value *store;                  /* Store for actual parameters */

        /* Initialize */
        lstore = alloc_store(func);
        pending_insert(1, pstore, lstore, gstore);

        /* Specialize all reachable program points */
        while (!pending_empty())
        {
            /* Restore configuration according to pending */
            pending_restore(pstore, lstore, gstore);
            pp = pending_pp();
            pending_processed(pp);

            /* Two-level execution */
            <Figure 11.4>
        }
        return function index;
    }

Figure 11.5: Function specialization.

11.6 Separation of the binding times

In this section we briefly consider binding-time analysis of C, with emphasis on basic principles rather than details [10]. Due to the semantics of C and the well-annotatedness conditions involved, the analysis is considerably more complicated than that of the lambda calculus (for example), but the same basic principles can nevertheless be employed. The analysis consists of three main steps:

1. Call-graph analysis: find (possibly) recursive functions.

2. Pointer analysis: approximate the usage of pointers.

3. Binding-time analysis: compute the binding times of all variables, expressions, statements, and functions.

11.6.1 Call-graph analysis

The aim of the call-graph analysis is to annotate calls to possibly recursive functions by rcall. This information is needed to suspend non-local side-effects in residual recursive functions. The analysis can be implemented as a fixed-point analysis.

The analysis records for every function which other functions it calls, directly or indirectly. Initially the description is empty. At each iteration the description is updated according to the calls until a fixed point is reached [4]. Clearly the analysis will terminate since there are only finitely many functions.
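The fixed-point iteration can be sketched with a boolean call matrix. This is an illustrative assumption about the data structure, not the representation used in C-mix: functions are numbered 0 to `NFUNCS - 1`, and `calls[f][g]` records that f may call g.

```c
#include <assert.h>
#include <stdbool.h>

#define NFUNCS 4   /* assumed number of functions in the program */

/* calls[f][g] is true when f may call g. On entry it holds the direct
   calls only; on return it also holds all indirect calls. */
void call_closure(bool calls[NFUNCS][NFUNCS])
{
    bool changed = true;
    while (changed) {                 /* iterate until nothing new is added */
        changed = false;
        for (int f = 0; f < NFUNCS; f++)
            for (int g = 0; g < NFUNCS; g++)
                if (calls[f][g])
                    for (int h = 0; h < NFUNCS; h++)
                        if (calls[g][h] && !calls[f][h]) {
                            calls[f][h] = true;   /* f reaches h via g */
                            changed = true;
                        }
    }
}

/* A function is possibly recursive when it can reach itself;
   calls to such functions would be annotated rcall. */
bool is_recursive(bool calls[NFUNCS][NFUNCS], int f)
{
    assert(0 <= f && f < NFUNCS);
    return calls[f][f];
}
```

Each iteration can only turn entries from false to true, and there are finitely many entries, which is the termination argument given above.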

11.6.2 Pointer analysis

The aim of the pointer analysis is for every pointer variable to approximate the set of objects it may point to, as introduced in Section 11.4.

The pointer analysis can be implemented as an abstract interpretation over the program, where (pointer) variables are mapped to a set of object identifiers [121]. In every iteration, the abstract store is updated according to the assignments, e.g. in the case of p = &x, `x' is included in the map for p. This is repeated until a fixed point is reached.
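A much simplified version of such an analysis is sketched below. It is an assumption-laden illustration rather than the analysis of [121]: it is flow-insensitive, it handles only the two assignment forms `p = &x` and `p = q`, and it represents each points-to set as a bit mask over at most `NVARS` variables.

```c
#include <assert.h>

#define NVARS 8   /* assumed number of variables; one bit per object */

typedef enum { ADDR_OF, COPY } AssignKind;

/* p = &x (ADDR_OF) or p = q (COPY); lhs and rhs are variable indices. */
typedef struct { AssignKind kind; int lhs, rhs; } Assign;

/* pts[p] is a bit set: bit x is set when p may point to x.
   The caller passes pts with all entries zero. */
void points_to(const Assign *prog, int n, unsigned pts[NVARS])
{
    int changed = 1;
    while (changed) {                       /* repeat until a fixed point */
        changed = 0;
        for (int i = 0; i < n; i++) {
            int p = prog[i].lhs, r = prog[i].rhs;
            assert(0 <= p && p < NVARS && 0 <= r && r < NVARS);
            unsigned old = pts[p];
            unsigned add = prog[i].kind == ADDR_OF
                         ? 1u << r          /* include x in the map for p */
                         : pts[r];          /* p may point where q points */
            pts[p] = old | add;
            if (pts[p] != old)
                changed = 1;
        }
    }
}
```

For the assignments `q = p; p = &x; p = &y;` the result maps both p and q to the set {x, y}, regardless of the order in which the statements are listed.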

11.6.3 Binding-time analysis

The binding-time analysis can be realized using the ideas from Chapter 8 where the type inference rules for the lambda calculus were transformed into a constraint set which subsequently was solved.

The analysis proceeds in three phases. Initially, a set of constraints is collected. The constraints capture the requirements stated in Figure 11.3 and the additional conditions imposed by the handling of recursive functions with non-local side-effects. Next, the constraints are normalized by applying a number of rewriting steps exhaustively. Finally, a solution to the normalized constraint set is found. The output is a program where every expression is mapped to its binding time.

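We introduce five syntactic constraints between binding time types:

$$T_1 = T_2 \qquad T_1 \preceq T_2 \qquad {*T_1} \sqsubseteq T_2 \qquad T_1 \times \cdots \times T_n \sqsubseteq T \qquad T_1 \trianglelefteq T_2 \qquad T_1 \rhd T_2$$

which are defined as follows. The $\preceq$ represents lift: $S \preceq D$. The $\sqsubseteq$ represents splitting of dynamic arrays: ${*D} \sqsubseteq D$. Furthermore, it captures completely dynamic structs: $D \times \cdots \times D \sqsubseteq D$. The $\trianglelefteq$ is for expressing the binding time of operators. Its definition is given by $T_1 \trianglelefteq T_2$ iff $T_1 = T_2$ or $T_2 = D$. The last constraint, $\rhd$, introduces a dependency: given $T_1 \rhd T_2$, if $T_1 = D$ then $T_2 = D$.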
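To give a feeling for how such constraints determine binding times, the following sketch solves a restricted system over the two base binding times only. It is a simplification made for illustration and not the normalization procedure of [10]: pointer and struct types are left out, only equality, lift and dependency constraints are handled, and lift is assumed to also admit equal binding times. All type and function names are hypothetical.

```c
#include <assert.h>

typedef enum { S = 0, D = 1 } BT;          /* static, dynamic */
typedef enum { EQ, LIFT, DEP } CKind;      /* T1 = T2, lift, dependency */
typedef struct { CKind kind; int t1, t2; } Constraint;

/* Compute the least solution over {S, D}. On entry bt holds S for every
   type variable except those known to be dynamic; variables are then
   raised to D until every constraint is satisfied. */
void solve(const Constraint *cs, int ncs, BT *bt, int nvars)
{
    int changed = 1;
    while (changed) {
        changed = 0;
        for (int i = 0; i < ncs; i++) {
            int a = cs[i].t1, b = cs[i].t2;
            assert(0 <= a && a < nvars && 0 <= b && b < nvars);
            /* All three kinds force T2 = D once T1 = D. */
            if (bt[a] == D && bt[b] == S) { bt[b] = D; changed = 1; }
            /* Equality also propagates from right to left. */
            if (cs[i].kind == EQ && bt[b] == D && bt[a] == S) {
                bt[a] = D; changed = 1;
            }
        }
    }
}
```

For an assignment `x = d` with d dynamic, the constraints force x, the assignment expression, and the enclosing function to become dynamic. With x dynamic and a static right-hand side, the lift constraint lets the right-hand side stay static.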

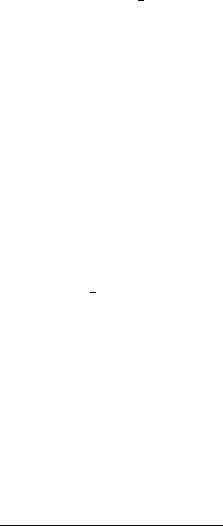

The constraints for an expression e are inductively defined in Figure 11.6.

The constraints capture the dependencies between the expression itself and its subexpressions. A unique type variable is assigned to each variable (footnote 16), expression node, statement node and function.

A constant e is static, captured by the constraint $T_e = S$. The binding time of a variable reference e is given by a unique type variable for every variable v, thus $T_e = T_v$.

Consider the two-level typing of an index expression e1[e2]. Either the left expression is a static pointer and the index is static, or both subexpressions are dynamic. This is contained in the constraint ${*T_e} \sqsubseteq T_{e_1}$, and the constraint $T_{e_2} \rhd T_{e_1}$ assures that if e2 is dynamic then so is e1.

Footnote 16: Contrary to the analysis for the lambda calculus (Chapter 8), we only assign one variable $T_e$ to each expression e. Instead we introduce a `lifted' variable $\overline{T}_e$ when needed.

$$
\begin{array}{lcl}
C_{exp}(e) = \textbf{case}\ e\ \textbf{of} & & \\
\quad [\![\mathtt{cst}\ c]\!] & \Rightarrow & \{S = T_e\} \\
\quad [\![\mathtt{var}\ v]\!] & \Rightarrow & \{T_v = T_e\} \\
\quad [\![\mathtt{struct}\ e_1.i]\!] & \Rightarrow & \{T_1 \times \cdots \times T_e \times \cdots \times T_n \sqsubseteq T_{e_1}\} \cup C_{exp}(e_1) \\
\quad [\![\mathtt{index}\ e_1[e_2]]\!] & \Rightarrow & \{{*T_e} \sqsubseteq T_{e_1},\ T_{e_2} \rhd T_{e_1}\} \cup C_{exp}(e_i) \\
\quad [\![\mathtt{indr}\ e_1]\!] & \Rightarrow & \{{*T_e} \sqsubseteq T_{e_1}\} \cup C_{exp}(e_1) \\
\quad [\![\mathtt{addr}\ e_1]\!] & \Rightarrow & \{{*T_{e_1}} \sqsubseteq T_e,\ T_{e_1} \rhd T_e\} \cup C_{exp}(e_1) \\
\quad [\![\mathtt{unary}\ op\ e_1]\!] & \Rightarrow & \{T_{op_1} \trianglelefteq \overline{T}_{e_1},\ T_{e_1} \preceq \overline{T}_{e_1},\ \overline{T}_{e_1} \rhd T_e,\ T_{op} \trianglelefteq T_e,\ T_e \rhd \overline{T}_{e_1}\} \cup C_{exp}(e_1) \\
\quad [\![\mathtt{binary}\ e_1\ op\ e_2]\!] & \Rightarrow & \{T_{op_i} \trianglelefteq \overline{T}_{e_i},\ T_{e_i} \preceq \overline{T}_{e_i},\ \overline{T}_{e_i} \rhd T_e,\ T_{op} \trianglelefteq T_e,\ T_e \rhd \overline{T}_{e_i}\} \cup C_{exp}(e_i) \\
\quad [\![\mathtt{ecall}\ f(e_1,\ldots,e_n)]\!] & \Rightarrow & \{T_{f_i} \trianglelefteq \overline{T}_{e_i},\ T_{e_i} \preceq \overline{T}_{e_i},\ \overline{T}_{e_i} \rhd T_e,\ T_f \trianglelefteq T_e,\ T_e \rhd \overline{T}_{e_i}\} \cup C_{exp}(e_i) \\
\quad [\![\mathtt{alloc}(S)]\!] & \Rightarrow & \{{*T_S} \sqsubseteq T_e\} \\
\quad [\![\mathtt{assign}\ e_1 = e_2]\!] & \Rightarrow & \{T_e = T_{e_1},\ T_{e_2} \preceq T_{e_1}\} \cup C_{exp}(e_i)
\end{array}
$$

Figure 11.6: Constraints for expressions.

The constraints generated for applications of unary, binary and external functions are analogous. Consider the case for ecall. In the typing rule it is defined that either the arguments are all static or they are all dynamic. In the latter case it may be desired to insert a lift to avoid forcing a static computation dynamic. We introduce n `lifted' variables $\overline{T}_{e_i}$ corresponding to possibly lifted arguments. They are constrained by the static binding time type of the called function, $T_{f_i} \trianglelefteq \overline{T}_{e_i}$, cf. the use of the O map in the well-annotatedness rules. Next, lift constraints $T_{e_i} \preceq \overline{T}_{e_i}$ capture the fact that the actual parameters can be lifted. Finally, dependency constraints are added to assure that if one argument is dynamic, then the application is suspended.

In the case of assignments, the fact that a static right expression can be lifted if the left expression is dynamic is captured by $T_{e_2} \preceq T_{e_1}$.

The constraints for statements are given in Figure 11.7. For all statements, a dependency between the binding time of the function Tf and the statement is made. This is to guarantee that a function is made residual if it contains a dynamic statement.

$$
\begin{array}{lcl}
C_{stmt}(s) = \textbf{case}\ s\ \textbf{of} & & \\
\quad [\![\mathtt{expr}\ e]\!] & \Rightarrow & \{T_e \rhd T_f\} \cup C_{exp}(e) \\
\quad [\![\mathtt{goto}\ m]\!] & \Rightarrow & \{\,\} \\
\quad [\![\mathtt{if}\ (e)\ m\ n]\!] & \Rightarrow & \{T_e \rhd T_f\} \cup C_{exp}(e) \\
\quad [\![\mathtt{return}\ e]\!] & \Rightarrow & \{T_e \preceq \overline{T}_e,\ \overline{T}_e \rhd T_f,\ T_{f_s} \trianglelefteq \overline{T}_e,\ T_f \rhd \overline{T}_e\} \cup C_{exp}(e) \\
\quad [\![\mathtt{call}\ x = f'(e_1,\ldots,e_n)]\!] & \Rightarrow & \{T_{e_i} \preceq T_{f'_i},\ T_{f'} = T_x,\ T_x \rhd T_f\} \cup C_{exp}(x) \cup \bigcup_i C_{exp}(e_i)
\end{array}
$$

Figure 11.7: Constraints for statements.

Using the information collected by the call-graph analysis and the pointer analysis, additional constraints can be added to assure, for example, suspension of non-local side-effects in recursive functions. For example, if a pointer p is set to point to a global variable v in a recursive function, a constraint $T_v = D$ must be added.

Given a multiset of constraints, a solution is sought. A solution is a substitution mapping type variables into binding time types which satisfies all the constraints (Chapter 8) [10]. From this it is easy to derive a well-annotated two-level program.

11.7 Self-application, types, and double encoding

So far we have not considered self-application. Contrary to the languages studied previously, C has typed data structures, and this interferes with self-application.

A partial evaluator is a general program expected to work on programs taking all kinds of input, for example integers and characters. To fulfil this, it is necessary to encode the static input into a single uniform data type Value. In C, Value could be a huge struct with fields for int, char, doubles, etc. Let the notation $d^{t}$ denote an unspecified representation of a datum d (of some type) in a data structure of type t. The proper extensional type of mix (ignoring annotations for simplicity) can then be written as follows:

$$[\![\mathit{mix}]\!]_C\,(p^{Pgm},\ s^{Value}) = p_s^{Pgm}$$

where the result is a representation of the residual program, and the subscripts indicate the languages in use.
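A uniform Value type of the kind mentioned above could be organized as a tagged record. The sketch below is only one possible layout and not the one used in C-mix: the tag names, the use of a union for the payload, and the encoding and decoding helpers are assumptions made for illustration.

```c
#include <assert.h>

/* Hypothetical uniform representation of static input values. */
typedef enum { V_INT, V_CHAR, V_DOUBLE } Tag;

typedef struct {
    Tag tag;                                   /* which field is in use */
    union { int i; char c; double d; } u;
} Value;

/* Encoding: wrap a typed datum into the uniform type. */
Value value_of_int(int i)       { Value v; v.tag = V_INT;    v.u.i = i; return v; }
Value value_of_char(char c)     { Value v; v.tag = V_CHAR;   v.u.c = c; return v; }
Value value_of_double(double d) { Value v; v.tag = V_DOUBLE; v.u.d = d; return v; }

/* Decoding: recover the datum, checking that the tag matches. */
int int_of_value(Value v)
{
    assert(v.tag == V_INT);
    return v.u.i;
}

char char_of_value(Value v)
{
    assert(v.tag == V_CHAR);
    return v.u.c;
}
```

Every static datum handed to the specializer pays for this wrapping, which is why encoding an already encoded program a second time, as happens under self-application, becomes costly.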

Consider self-application $[\![\mathit{mix}]\!]_C\,(\mathit{mix}, \mathit{int})$, where int is an interpreter. As input to mix, int must of course be encoded into the program representation $\mathit{int}^{Pgm}$. As for any program input to the running mix, this must in turn be encoded into the Value type. The result may be a huge data structure, slowing self-application down and causing memory problems.

This is also seen in the Futamura projections, which can be restated as follows.

$$
\begin{array}{llcl}
\text{1. Futamura} & [\![\mathit{mix}]\!]_C\,(\mathit{int}^{Pgm},\ p^{Value}) & = & \mathit{target}^{Pgm} \\
\text{2. Futamura} & [\![\mathit{mix}]\!]_C\,(\mathit{mix}^{Pgm},\ (\mathit{int}^{Pgm})^{Value}) & = & \mathit{compiler}^{Pgm} \\
\text{3. Futamura} & [\![\mathit{mix}]\!]_C\,(\mathit{mix}^{Pgm},\ (\mathit{mix}^{Pgm})^{Value}) & = & \mathit{cogen}^{Pgm}
\end{array}
$$

Notice the double encoding of the second argument to mix, and in particular the speculative double encoding of programs. To overcome the problem, the type of the program representation can be added as a new base type. Hereby a level of encoding can be eliminated, a technique also used elsewhere [6,69,169].

11.8 C-mix: a partial evaluator for C programs

C-mix is a self-applicable partial evaluator based on the techniques described in this chapter [6,9,10]. It can handle a substantial subset of the ANSI C programming language, and is fully automatic. In this section we report some benchmarks. The specialization kernel, described in Section 11.5, takes up 500 lines of code. The whole specializer including library functions consists of approximately 2500 lines of C code.

11.8.1 Benchmarks

All experiments have been run on a Sun Sparc II workstation with 64M internal memory. No exceptionally large memory usage has been observed. The reported times (cpu seconds) include parsing of Core C, specialization, and dumping of the residual program, but not preprocessing.

Specialization of general programs

The program least_square implements approximation of function values by orthogonal polynomials. Input is the degree m of the approximating polynomial, the vector of x-values, and the corresponding function values. It is specialized with respect to fixed m and x-values. The program scanner is a general lexical analyser which takes as input a definition of a token set and a stream of characters. Output is the recognized tokens. It is specialized to a fixed token set.

| Program run | Run time | Run time ratio | Code size | Code size ratio |
|---|---|---|---|---|
| [[least_square]](m, Input) | 6.3 | | 82 | |
| [[least_square_m]](Input) | 3.7 | 1.9 | 1146 | 0.07 |
| [[scanner]](Table, Input) | 1.9 | | 65 | |
| [[scanner_Table]](Input) | 0.7 | 2.7 | 1090 | 0.06 |

The least_square program was specialized to degree 3 and 100 fixed x-values. The speedup obtained was 1.9 (footnote 17). It is rather surprising that specialization to the innocent looking parameters of the least-square algorithm can give such a speedup. Furthermore, it should be noted that the so-called `weight-function', which determines the allowed error, is completely static. Thus, more involved weight-functions give larger speedup. The price paid is the size of the residual program, which grows linearly with the number of static x-values.

The scanner was specialized to a subset of the C keywords, and applied to a stream with 100 000 tokens. The speedup was 2.7. This is also rather satisfactory, and it shows that efficient lexers can be generated automatically from general scanners. The residual program is rather large, but is comparable to a lex-generated lexical analyser for the same language (footnote 18).

Footnote 17: The programs were run 100 times on the input.

Footnote 18: This example was also used in Chapter 6, where the scanner was specialized to two-character tokens.

Compiler generation by self-application

The C-mix specializer kernel is self-applicable. Compiler generation is demonstrated by specialization of spec to an interpreter int for an assembly language. This example is taken from Pagan, who makes a generating extension of the interpreter by hand [213]. The interpreted program Primes computes the first n primes.

| Program run | Run time | Run time ratio | Code size | Code size ratio |
|---|---|---|---|---|
| [[Int]](Primes, 500) | 61.9 | | 123 | |
| [[Int_Primes]](500) | 8.9 | 7.0 | 118 | 1.0 |
| [[spec]](Int, Primes) | 0.6 | | 474 | |
| [[spec_Int]](Primes) | 0.5 | 1.2 | 760 | 0.6 |
| [[spec]](spec, Int) | 2.2 | | 474 | |
| [[cogen]](Int) | 0.7 | 3.1 | 2049 | 0.2 |

The compiled primes program (in C) is approximately 7 times faster than the interpreted version. Compiler generation using cogen compared to self-application is 3 times faster. The compiler generator cogen is 2000 lines of code plus library routines.

11.9 Towards partial evaluation for full ANSI C

In this chapter we have described partial evaluation for a substantial subset of C, including most of its syntactical constructs. This does not mean, however, that an arbitrary C program can be specialized well. There are two main reasons for this.

Some C programs are simply not suited for specialization. If partial evaluation is applied to programs not especially written with specialization in mind, it is often necessary to rewrite the program in order to obtain a clean separation of binding times so as much as possible can be done at specialization time. Various tricks which can be employed by the binding-time engineer are described in Chapter 12, and most of these carry over to C. Such transformations should preferably be automated, but this is still an open research area.

A more serious problem is due to the semantics of the language. In C, programmers are allowed to do almost all (im)possible things provided they know what they are doing. By developing stronger analyses it may become possible to handle features such as void pointers without being overly conservative, but obviously programs relying on a particular implementation of integers or the like cannot be handled safely. The recurring problem is that the rather open-ended C semantics is not a sufficiently firm base when meaning-preserving transformations are to be performed automatically.

11.10 Exercises

Exercise 11.1 Specialize the mini printf() in Example 11.1 using the methods described in this chapter, i.e. transform to Core C, binding-time analyse to obtain a two-level Core C program, and specialize it. □

Exercise 11.2 In the two-level Core C language, both a static and a dynamic goto exist, even though a goto can always be kept static. Find an example program in which it is useful to annotate a goto dynamic. □

Exercise 11.3 In the Core C language, all loops are represented as L: if () . . . goto L;. Consider an extension of the Core C language and the two-level algorithm in Figure 11.4 to include while. Discuss advantages and disadvantages. □

Exercise 11.4 Consider the binary search function binsearch().

    #define N 10

    int table[N];                  /* static array, see below */

    int binsearch(int n)
    {
        int low, high, mid;

        low = 0; high = N - 1;
        while (low <= high) {
            mid = (low + high) / 2;
            /* As written, the comparisons assume table is in descending order. */
            if (table[mid] < n)
                high = mid - 1;
            else if (table[mid] > n)
                low = mid + 1;
            else
                return mid;
        }
        return -1;                 /* n does not occur in table */
    }

Assume that the array table[N] of integers is static. Specialize with respect to a dynamic n. Predict the speedup. Is the size of the program related to N? □

Exercise 11.5 The Speedup Theorem in Chapter 6 states that mix can at most accomplish linear speedup. Does the theorem hold for partial evaluation of C? Extend the speedup analysis in Chapter 6 to cope with functions. □

Exercise 11.6 Consider the inference rule for the address operator in Figure 11.3. Notice that if the expression evaluates to a dynamic value, then the application is suspended. This is desirable in the case of an expression &a[e] where e is dynamic, but not in the case of &x where x is dynamic. Find ways to remedy this problem. □

Exercise 11.7 Formalize the well-annotatedness requirements for statements, functions, and Core C programs. □

Exercise 11.8 Develop the call-graph analysis as described in Section 11.6. Develop the pointer analysis. □