Dr. Dobb’s Journal, March 2006

WINDOWS/.NET DEVELOPER

Themes, Skins, and ASP.NET 2.0

Taking control of your controls

VIKRAM SRIVATSA

There’s nothing really new about the concept of “themes,” particularly with respect to Microsoft Windows. For instance, desktop themes in Windows 95 let you apply color schemes across the entire range of controls that make up the Windows UI and icons. Themes were also part of the Microsoft Plus! add-on pack for Windows. More recently, themes were extended in Windows XP with Visual Styles that let you control the color, size, and fonts used in controls. Additionally, applications such as Winamp have contributed to the themes craze, with thousands developed by people around the world. With the release of .NET Framework 2.0 and Visual Studio 2005, themes extend to ASP.NET web applications.

In ASP.NET 2.0, a theme is a way of defining the look-and-feel for server controls in web applications. Once defined, a theme can be applied across an entire application, to a single page of an application, or to a specific control on a web page.

A theme is made up of one or more “skins” and can contain traditional Cascading Style Sheets (CSS). Skins are files that contain the property definitions for various controls. Previous versions of ASP and ASP.NET used CSS to achieve consistency in appearance. In fact, themes support and complement CSS. A theme lets you specify values for ASP.NET Web Server controls. Additionally, when you need to specify settings for HTML elements, traditional CSS files can be used as part of a theme.

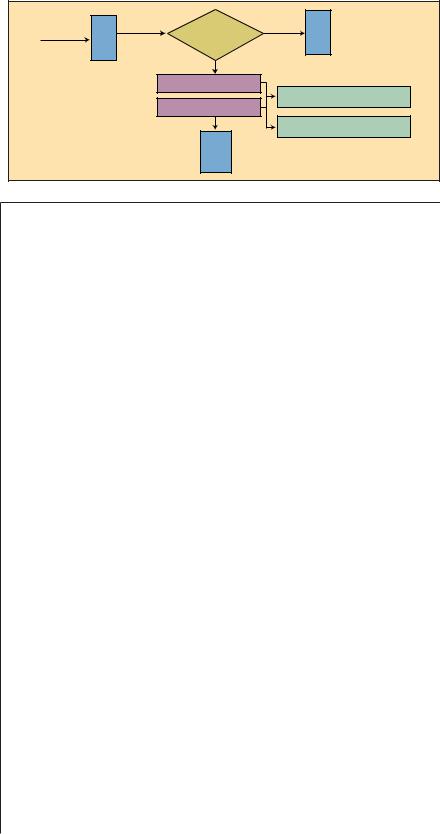

Figure 1 illustrates the control flow for ASP.NET 2.0 themes. As soon as the request for a page arrives, a check determines whether a theme has been defined for the page or web application. If a theme has been defined, the theme information is retrieved from one of the default locations where themes are stored: the App_Themes folder under the web application’s folder for a theme specific to the application, or the \IISRoot\aspnet_client\system_web\2_0_50727\Themes folder for themes defined at the global level. Based on the information retrieved, the theme is applied to the page and the page is displayed in the browser.

Vikram is a software-designer specialist for Hewlett-Packard GDIC. He can be contacted at vikram404@gmail.com.

Creating and Applying Themes

There are several steps involved in creating themes:

Step 1. Start Visual Studio 2005 and select New Web Site from the File menu. Choose ASP.NET Web Site from the templates, then C# in the language dropdown. Also choose HTTP as the location and create the web site on the local IIS.

Step 2. Build a UI that has common UI elements — Labels, Textboxes, Dropdowns, and Buttons — on the default.aspx web page.

Step 3. Right click on the Solution Explorer and select Add Folder. Choose the Add ASP.NET Folder from the submenu and drill down to select the Theme folder. This creates an App_Themes folder that acts as the container for all the themes defined for this web application.

Step 4. Under the App_Themes folder, create a folder of type Theme and give it a name. The name you specify becomes the name of the theme. For simplicity, I use “SimpleGrayTheme.”

Step 5. Add a skin file. Right click on the SimpleGrayTheme folder and choose Add New Item. The list of installed templates includes a template for skins. Choose Skin, then name the file Textbox.skin. Add more skin files, such as Dropdown.skin, Label.skin, and Button.skin. (It isn’t mandatory to have a separate .skin file for each control; I only do so for clarity. No matter how many .skin files a theme contains, the ASP.NET 2.0 runtime merges them all and treats them as a single .skin file.)

Step 6. Define the appearance for these controls that you placed on the web page.

Start with the skin for the button. Listing One presents the property settings for the Button. Similarly, you define the property settings for each of the other controls. Listing Two presents the contents of each of the .skin files.

Step 7. Once you have defined the appearance for the controls, you can apply the theme to the page. The page exposes the theme as a property. Select the web page and open the Properties window for the page. In the list, navigate to the Theme property; SimpleGrayTheme appears as a choice in the drop-down list. If more than one theme is defined, the others also appear in the list. In fact, Theme is an attribute of the @Page directive, and if you open the source view for the web page, you can see that this attribute has been set. The Visual Studio 2005 IDE does not provide designer support for themes: even after you apply a theme to the page, the appearance of the page in the VS 2005 Designer does not change. It is also possible to specify the theme for the page at runtime. However, this must be done in the Page_PreInit event handler for the page; see Listing Three(a). Finally, you can specify a theme for an entire application in the Web.config file; see Listing Three(b).

Step 8. Run the web application to display the web page in the browser. The page appears with the theme applied to it.

Managing Multiple Skins

In the scenario just presented, I applied the same skin to all text boxes on the page. In reality, it is often necessary to apply different skins to different instances of the same control. For example, you might want one skin for the First Name and Last Name text boxes and a different one for Job Title and Company. To address this scenario, ASP.NET 2.0 introduces the “SkinID,” a unique name that identifies a particular skin. In other words, SkinID lets you choose a specific skin for a control. For example, the skin definitions in Listing Four modify the TextBox.skin in Listing Two. I have created three skins for the TextBox control. The second and third skin definitions have a SkinID specified, while the first one does not. The next thing to do is associate a specific skin with a specific text-box control.

In the web page, select the text box for which you want to specify a SkinID and open the Properties window. In the Properties window, select the SkinID property. The skins defined for text boxes are presented for selection in the drop-down menu.

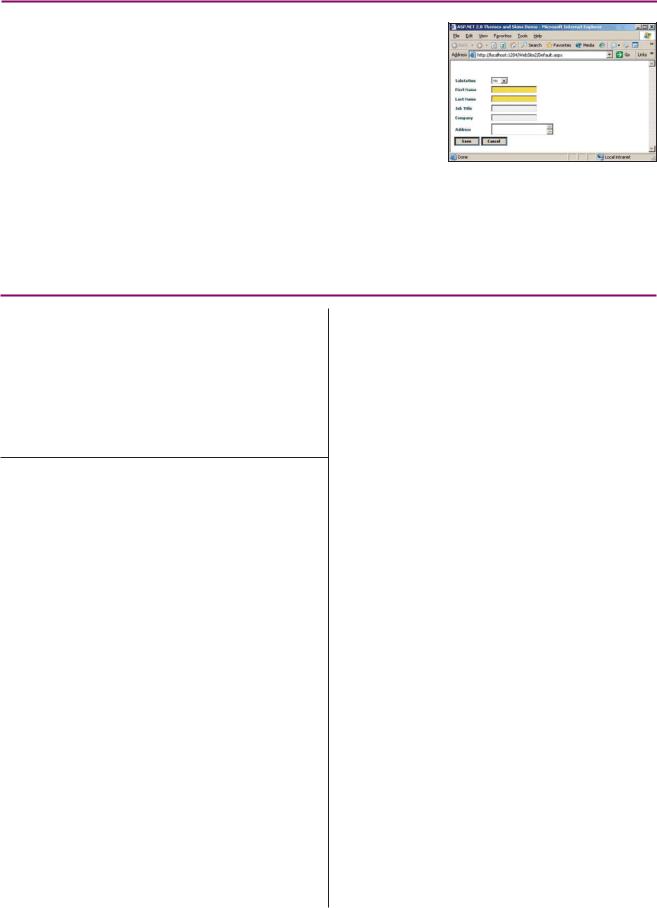

For the FirstName and LastName fields, select the RequiredTextBox SkinID, and for the Address text box, select the MultiLineTextBox SkinID. For the Job Title and Company text boxes, do not specify any SkinID. Now run the application. The resulting web page is shown in Figure 2.

The default skin was applied to the Job Title and Company fields, although no SkinID was specified for these controls. This is by design. If a default skin exists for a control, then that skin is applied to all instances of that control for which no SkinID has been specified.
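The selection rule can be modeled in a few lines. The sketch below uses Python purely as a neutral modeling language; it is not ASP.NET code. The SKINS table and the select_skin function are hypothetical names, and the property values are abbreviated from Listing Four. Returning nothing for an unmatched SkinID is a simplification of this sketch, not a statement about what the ASP.NET runtime does in that case.

```python
from typing import Optional

# Hypothetical in-memory stand-in for the merged .skin files of a theme.
# Keys are (control type, SkinID); a SkinID of None marks the default skin.
SKINS = {
    ("TextBox", None): {"BackColor": "WhiteSmoke", "ForeColor": "DarkGray"},
    ("TextBox", "RequiredTextBox"): {"BackColor": "Yellow", "ForeColor": "DarkGray"},
    ("TextBox", "MultiLineTextBox"): {"BackColor": "White", "ForeColor": "Black"},
}


def select_skin(control_type: str, skin_id: Optional[str] = None) -> Optional[dict]:
    """Return the skin properties for a control, or None if nothing matches."""
    # A control without a SkinID looks up the default skin (key None),
    # which is why Job Title and Company pick up the WhiteSmoke skin.
    return SKINS.get((control_type, skin_id))


if __name__ == "__main__":
    for field, skin_id in [("FirstName", "RequiredTextBox"),
                           ("Address", "MultiLineTextBox"),
                           ("JobTitle", None)]:
        print(field, select_skin("TextBox", skin_id))
```

Keying the table on the pair of control type and SkinID mirrors the idea that one theme can hold several named skins plus one default skin per control type.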

Disabling Skins

You can disable skins from being applied to a particular control. To do so, the EnableTheming property on the control needs to be set to False. By default, this is set to True on all controls that support themes. All controls for which the EnableTheming property is set to False are excluded from themes.

Theme Classification

In ASP.NET, another method of applying a theme to a page is a “StyleSheetTheme,” which is applied by setting the property StyleSheetTheme on the page. The StyleSheetTheme is an @Page directive by the name “StyleSheetTheme.”

It is important to understand that this classification is purely based on the way the theme is applied. It is possible to apply the same theme as a Theme setting in one page, and as a StyleSheetTheme in another page. Based on whether a theme has been applied with a Theme setting or a StyleSheetTheme, the behavior changes.

By behavior, I mean the precedence of the skin over a particular property that has been set on a control. For example, say that in a page the Font-Bold property for each of the controls is set to False. In the skin for this control, this property has been set to True.

When you apply the theme as a StyleSheetTheme, the property value set on the control takes precedence over the value defined in the theme. That is, even though the Font-Bold property has been set to True in the skin, the control’s setting of False is considered final, and the page appears without boldface applied to the controls.

Figure 1: Themes. (Flowchart: a user request retrieves the web page, and a check asks whether the web application or page has a theme defined. If not, the web page is displayed as is. If so, theme information is retrieved from App_Themes\ThemeName or \IISRoot\aspnet_client\system_web\2_0_50727\Themes\ThemeName, the theme is applied, and the web page is displayed with the theme.)

In contrast, if you apply the same theme to the same page through the Theme setting, the opposite happens. That is, the property values defined in the theme’s skins override any values that have been set on the control. Hence, in this case, the page would appear with boldface applied to all controls. This lets you control the behavior of themes according to the scenario and its particular requirements.
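The two precedence rules amount to merging the same two sets of property values in opposite orders. The following sketch illustrates that idea in Python, used here only as a modeling language; effective_properties and its mode strings are hypothetical names, and the code is not how ASP.NET itself is implemented.

```python
def effective_properties(control_props: dict, skin_props: dict, mode: str) -> dict:
    """Merge control-level and skin-level property values.

    mode == "Theme":           skin values win over control values.
    mode == "StyleSheetTheme": control values win over skin values.
    """
    if mode == "Theme":
        # Later entries win, so the skin is merged last.
        return {**control_props, **skin_props}
    if mode == "StyleSheetTheme":
        # Here the control's own settings are merged last.
        return {**skin_props, **control_props}
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    control = {"Font-Bold": False}
    skin = {"Font-Bold": True, "Font-Name": "Verdana"}
    print(effective_properties(control, skin, "Theme"))
    print(effective_properties(control, skin, "StyleSheetTheme"))
```

In both modes, properties that only the skin defines (Font-Name in this example) still reach the control; the modes differ only where the control and the skin set the same property.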

Application-Specific and Global Themes

Themes stored in the App_Themes folder of a particular web application are specific to that application and can be used only by that web application.

If you need to reuse the same theme in multiple web applications, place the theme folder in this folder on the machine:

\IISRoot\aspnet_client\system_web\2_0_50727\Themes

For example, if you use the default IIS web site located at C:\Inetpub\wwwroot, the folder is C:\Inetpub\wwwroot\aspnet_client\system_web\2_0_50727\Themes. Once the theme has been placed in the specified folder, it becomes available to all web applications on the machine.
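The two locations can be pictured as a simple lookup. The sketch below is a model written in Python, not ASP.NET code: find_theme_dir is a hypothetical helper, and checking the application folder before the global folder is an assumption of this sketch rather than something the article states.

```python
import os
import tempfile
from typing import Optional


def find_theme_dir(app_root: str, iis_root: str, theme_name: str) -> Optional[str]:
    """Return the folder that holds theme_name, or None if it is not defined."""
    candidates = [
        # Application-specific themes live under the web application's folder.
        os.path.join(app_root, "App_Themes", theme_name),
        # Global themes are shared by every web application on the machine.
        os.path.join(iis_root, "aspnet_client", "system_web",
                     "2_0_50727", "Themes", theme_name),
    ]
    for path in candidates:
        if os.path.isdir(path):
            return path
    return None


if __name__ == "__main__":
    # Build a throwaway folder tree so the lookup has something to find.
    with tempfile.TemporaryDirectory() as root:
        app_root = os.path.join(root, "MyApp")
        iis_root = os.path.join(root, "wwwroot")
        os.makedirs(os.path.join(app_root, "App_Themes", "SimpleGrayTheme"))
        print(find_theme_dir(app_root, iis_root, "SimpleGrayTheme"))
```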

Conclusion

The concept of themes, coupled with the concept of master pages in ASP.NET 2.0, gives you an efficient and easy-to-use infrastructure for designing and developing web applications that are consistent in appearance and layout.

Figure 2: Sample application showing required fields.

DDJ

Listing One

Button.skin

<asp:Button
    BorderColor="LightSlateGray"
    BorderStyle="Inset"
    BorderWidth="4px"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    ForeColor="Black"
    Runat="Server"
/>

Listing Two

Dropdown.skin

<asp:DropDownList
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    ForeColor="Gray"
    Runat="Server"
/>

Label.skin

<asp:Label
    BackColor="WhiteSmoke"
    ForeColor="DarkSlateGray"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    Runat="Server"
/>

TextBox.skin

<asp:TextBox
    BackColor="WhiteSmoke"
    ForeColor="DarkGray"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    Runat="Server"
/>

Listing Three

(a) Setting Theme in Code at Runtime

void Page_PreInit(object sender, EventArgs e)
{
    Page.Theme = "SimpleGrayTheme";
}

(b) Web.config

<configuration>
    <system.web>
        <pages theme="SimpleGrayTheme" />
    </system.web>
</configuration>

Listing Four

TextBox.skin

<asp:TextBox
    BackColor="WhiteSmoke"
    ForeColor="DarkGray"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    Runat="Server"
/>

<asp:TextBox
    SkinID="RequiredTextBox"
    BackColor="Yellow"
    ForeColor="DarkGray"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    Runat="Server"
/>

<asp:TextBox
    SkinID="MultiLineTextBox"
    BackColor="White"
    ForeColor="Black"
    Font-Bold="True"
    Font-Name="Verdana"
    Font-Size="XX-Small"
    Runat="Server"
/>


EMBEDDED SYSTEMS

Debugging & Embedded Linux Runtime Environments

Modifying the kernel gives you the tools

RAJESH MISHRA

Over the past decade, Linux has become increasingly prevalent in many areas of computing, including the embedded space, where it has been adapted to numerous processors. Embedded Linux, also known as “uClinux” (pronounced “micro-see-Linux”), is a minimized version of standard Linux that runs on highly resource-constrained devices. uClinux is maintained by the Embedded Linux/Microcontroller Project (http://www.uClinux.org/), a group whose sponsors include (among others) Analog Devices, the company I work for. Analog Devices has also ported uClinux to its Blackfin architecture (http://blackfin.uclinux.org/).

Applications executing in resource-constrained environments typically try to conserve CPU and memory, while still executing in a robust and secure fashion. During development, however, these goals are often not met: memory leaks, stack overflows, missed real-time application deadlines, process starvation, and resource deadlocks are frequently encountered. In this article, I present tools and techniques for resolving these critical issues, thereby facilitating development of robust application software for Embedded Linux.

Desktop to Embedded Debugging

One of Linux’s most attractive features is its programming environment. Not only is the standard desktop environment friendly to traditional UNIX developers, but modern Linux programmers are also equipped with state-of-the-art IDEs such as Eclipse. uClinux, however, is much more restricted than the native Linux development environment. The typical uClinux development environment consists of a remote interface from a host to the target platform.

Raj is a senior software engineer at the Digital Media Technology Center of Analog Devices. He can be contacted at rajesh.mishra@analog.com.

The host platform is usually a standard Linux or Windows desktop running a debugger such as GDB or a commercial IDE. This debugger communicates with the target platform over a bridge, such as serial JTAG, which connects a host interface (USB or PCI) to the target processor. The target device (Analog Devices’ Blackfin processor, for instance) sits on a firmware board interfaced to all requisite peripherals and external interfaces (network, memories, RS-232 and other serial interfaces, and possibly PCI and other system buses). Although the host platform may communicate with the target over any of these external interfaces (network and RS-232, for example), the JTAG interface is the primary means of communication with the target device because JTAG enables “on-chip” debugging and control. That is, it lets you remotely start, stop, and suspend program execution; examine the state of the target processor, memories, and I/O devices; and execute a series of target instructions.

Despite the capabilities that on-chip JTAG interfaces provide to IDEs, debugging a remote uClinux platform is still not the same as debugging native Linux. On the desktop, a native debugger can execute in a protected environment, allowing application processes to be traced and monitored throughout their life. If exceptions or error events occur in the program, the native debugger detects and responds to them without affecting the overall system. Embedded Linux environments, on the other hand, do not provide this level of program protection. When an exception occurs, the remote debugger may detect the event and halt the program; however, all other processes within the system are also halted, including the kernel.

To emulate the native scenario, uClinux developers use gdbserver, a uClinux application program that executes just like other user-level processes. However, its primary function is to enable control of a target program’s execution and communicate with remote host debuggers (such as GDB) over standard links (TCP/IP or serial connections).

Application Awareness

Although gdbserver has the advantage of stepping through uClinux application programs and monitoring their execution state, it does have significant shortcomings. For instance, if the network or serial connection is not 100 percent available as a trusted communications interface, then gdbserver cannot be used. Because uClinux programs run in a virtually unprotected environment, when the program encounters an exception or error event, it is likely that gdbserver itself fails, resulting in all debugging capability being lost. In the uClinux environment, most catastrophic errors are unrecoverable by the system. And so, other mechanisms are required to enable a more robust debugging environment.


One such strategy we’ve developed for Analog Devices’ Blackfin-based eM30 platform (which is designed for set-top boxes, media servers, portable entertainment devices, and the like) is to let the host debugger become “application aware.” In this case, the uClinux kernel provides knowledge of what application processes are executing and where they reside in memory to the host debugger. It does this by maintaining a table of all active application processes and sharing it with the host debugger. The debugger examines this table and uses it to reference symbol table information for the corresponding application to control its execution and report its state. With these capabilities, users can set breakpoints within any of the application programs, step through these programs at source level, examine expressions of data variables, and so on. Also, users can continually step through application code into runtime library code and across into kernel space. Users have simultaneous access to all user-space and kernel-space structures.

Kernel Awareness

Exceptions and errors encountered within the uClinux kernel are the most frequent cause of system failure. Typical causes are memory overwrites due to stack and data overflows, missed deadlines of kernel threads and interrupt service routines, and resource deadlocks and starvation due to race conditions in semaphore usage. To debug these conditions, the debugger needs more information from the kernel than is currently available.

For the eM30 platform, we’ve added extensions to the standard uClinux 2.6.x kernel and GDB Blackfin-uClinux target debugger to expose this relevant internal kernel state.

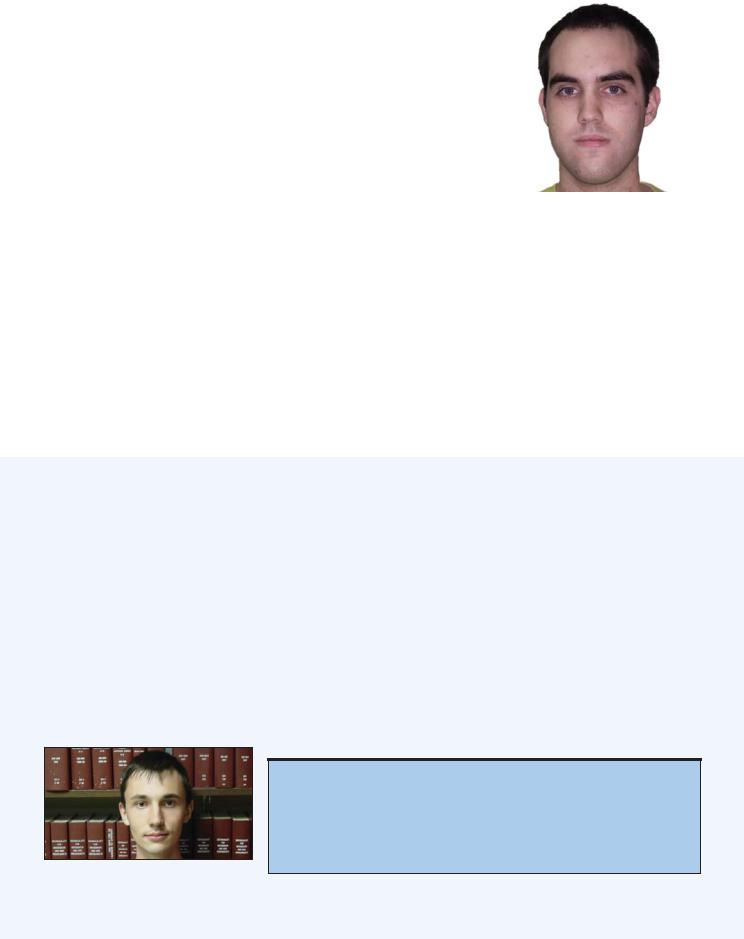

Most of the kernel state is visible through existing kernel data structures, such as the task_struct task structure and network statistics. However, certain states are not captured in the existing kernel and require code extensions. One of these is the processor mode, which describes whether the processor is in kernel/supervisory mode, user mode, or is idling. This state is updated only during mode switching, as in the state diagram in Figure 1. As shown, the processor states are assigned only when the appropriate state transition occurs, such as when a processor event occurs or a kernel routine is invoked.

During debugging, it may also be of interest when timer and scheduler events took place: when processes were scheduled, how long the processor was occupied in them, and when deadlines may have been missed. We’ve added profiling of these events at the entry and exit points of the existing timer_interrupt and schedule routines. This provides information not only about when these events took place, but also about how long it took to process them.

The Linux kernel does not provide all necessary structures for accessing the entire kernel state. Wait queues and spin locks are some of these structures that cannot be accessed globally. To gain visibility into these structures, we customized the Blackfin uClinux GDB debugger to support this awareness. During the kernel load process, a GDB script examines all declared static and dynamic wait_queue and spin_lock structures and initializes a global list of both types of structures within kernel memory. It also loads the static portions of these structures. For dynamic instantiation of these structures, we modified the kernel allocation routines to load the respective information into this global list. With this scheme, we can examine and access the state of all resource-locking mechanisms within the kernel. This gives us the ability to debug resource deadlock and starvation scenarios.

Modes of Interaction

Debugging under uClinux requires you to interact in different ways with the target system:

• When a system crash occurs, you can resort to examining postmortem information from the last preserved system state within the debugger.

• When a memory leak is suspected, the amount of free memory may be periodically checked to see if it is shrinking.

• When a resource deadlock occurs, you may require a history of all locking and unlocking events on the resource to see how the state was arrived at.

Each of these scenarios requires a unique approach to collecting and analyzing debugging information.

Figure 1: Processor mode state transitions. (State diagram with the states Kernel, Idle, and User; the transitions are labeled Default_Idle(), interrupt, trap or interrupt, and ret_from_interrupt() or ret_from_exception().)

# cat /proc/meminfo
MemTotal: 1035172 kB
MemFree: 10040 kB

Figure 2: Contents of /proc/meminfo.

The first scenario requires interactive debugging on the target device from within a debugger. The debugger may halt when a program exception event occurs on the target device to let you examine the postmortem state from within the debugger. You can define specific events (breakpoints) in the program code to trigger the debugger to halt. You may decide to walk through program execution (single-step) as each statement (or instruction) is executed. You may interrupt an already-running program by entering a specific key sequence (for example, CTRL-C) or resume a halted program. The debugger may let you change the state of the program, contents of memory, and machine state (internal and shadow registers). All of these methods necessarily intrude upon the actual execution of the program on the target machine.

Because running under a debugger can modify program behavior, a second type of interactive debugging can be used to periodically examine the state of the target device. In the second scenario, dynamic memory allocation may be affected when the machine state is altered (such as when an interrupt service routine allocates memory). Hence, debugging via the debugger may not accurately reproduce the given scenario. An alternate means of debugging this problem is to examine the system state via the operating system.

uClinux provides the /proc filesystem for examining (and even modifying) system state from the target’s shell command prompt. In this example, the output of the shell command cat /proc/meminfo (Figure 2) may be inspected. If the MemFree field shrinks during the course of program execution, this may be evidence of a memory leak.
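The periodic check can also be scripted, for example on the host against captured console output. The following Python sketch assumes the usual "Name: value kB" line layout shown in Figure 2; parse_meminfo and looks_like_leak are hypothetical helper names, and the "never grows and ends lower" rule is only a rough heuristic chosen for illustration. A shrinking MemFree is a hint worth investigating, not proof of a leak.

```python
def parse_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field name: numeric value}."""
    fields = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        parts = rest.split()
        # Keep only lines of the form "Name: <number> [unit]".
        if sep and parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


def looks_like_leak(mem_free_samples: list) -> bool:
    """True if MemFree never grows and ends lower than it started."""
    pairs = zip(mem_free_samples, mem_free_samples[1:])
    never_grows = all(later <= earlier for earlier, later in pairs)
    return (len(mem_free_samples) >= 2 and never_grows
            and mem_free_samples[-1] < mem_free_samples[0])


if __name__ == "__main__":
    # The two fields shown in Figure 2.
    sample = "MemTotal:      1035172 kB\nMemFree:         10040 kB\n"
    print(parse_meminfo(sample)["MemFree"])
```

Feeding looks_like_leak a series of MemFree readings taken at fixed intervals gives a quick yes-or-no signal before reaching for heavier tools.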

The usefulness of the /proc filesystem was exploited in the eM30 to output more details of the internal state of the Linux kernel. This uClinux enhancement has been incorporated inside a special miscellaneous, character-mode driver called “bfin_profiler.” This driver provides not only this /proc facility but also a mechanism for the kernel to log events and a user application to output these events to a filesystem.

Listing One (available electronically; see “Resource Center,” page 5) presents the functions that dump the bfin_profiler driver output to the /proc filesystem. Listing Two (also available electronically) is sample output from this dump. Measurements provided under “read count” indicate the user application response time to an interrupt event in clock cycles. Special instrumentation is provided within the kernel to measure this latency. The count itself indicates the number of response events that were received by the application. Cycle measurements indicate average, minimum, and maximum wait times. The CPU profiler provides special instrumentation to measure how much of the processor’s time was spent in user mode, kernel mode, and idle state. Performance figures as cycle counts are reported under “CPU profiler.” Measurements of the time spent in the timer interrupt service routine timer_interrupt and the scheduler routine schedule are also reported. Telemetry statistics are reported following the scheduler. Finally, the number of active user application processes currently running in the system is reported, along with each program’s pathname, code and data size, pointer to task structure, and process ID. The report in Listing Two identifies the key elements of the uClinux kernel state, directly accessible by users to monitor system activity.

| Id       | Cycles   | Value    |
|----------|----------|----------|
| 00020000 | -        | 00000001 |
| 00020001 | 16169    | 00000001 |
| 00020000 | 5399296  | 00000002 |
| 00020001 | 5406032  | 00000002 |
| 00020000 | 10799032 | 00000003 |
| 00020001 | 10805024 | 00000003 |
| 00020000 | 16198896 | 00000004 |
| 00020001 | 16204840 | 00000004 |
| 00020000 | 21598764 | 00000005 |
| 00020001 | 21604688 | 00000005 |
| 00020000 | 26998628 | 00000006 |
| 00020001 | 27004548 | 00000006 |

Figure 3: Sample event telemetry data.

Total Runtime: 2759336252 cycles (5109.88 ms)
Bin 2
Total Cycles: 3119250 (0.11%) Interval Cycles: 2756217002 (99.89%) Count: 512
Average: 6092 Min: 5912 Max: 16169

Figure 4: Sample output of profbin.

Interactive debugging, from within a debugger or within an operating-system console, is intrusive to overall system operation, although to a lesser extent using the /proc filesystem. Also, it does not provide a history of system activity, only the current system state. This history information is often needed, as in the third scenario, when trying to debug resource deadlocks. A history of all resource locking and unlocking events is necessary to analyze how the deadlock conditions were arrived at.

To assist uClinux programmers in gathering this type of event history, we added a kernel-callable function, bfin_profiler_event (available electronically; see “Resource Center,” page 5), to the bfin_profiler driver. In addition, we inserted event-capture calls into specific areas of the kernel for measurement. For instance, the timer_interrupt listing (also available electronically) shows event-capture code inserted into the timer-interrupt service routine. Upon entry into this routine, the bfin_profiler_event procedure is invoked with a unique event identifier, BLACKFIN_PROFILER_TIMER_ENTER (value of 0x20000), to denote entry; likewise, upon exit, BLACKFIN_PROFILER_TIMER_EXIT (value of 0x20001) is used to denote the exit event. Both calls unconditionally timestamp each event. Only the first call increments the event counter, so that the entry and exit events are associated with the same event instance. The event ID, timestamp, and event count are recorded for each instance in a telemetry buffer called bfin_profiler_telem_buffer. Figure 3 shows sample contents of this buffer, as formatted by the prof2txt postprocessing utility. The Id field indicates the event ID (BLACKFIN_PROFILER_TIMER_ENTER and BLACKFIN_PROFILER_TIMER_EXIT in this example), Cycles indicates a relative timestamp based on the interval between current and last event, and Value denotes the event instance (note that the timer entry and exit events are associated according to each instance).

The bfin_profiler_event utility routine may be used to log any kernel event. All that is required is that a unique event ID be passed as an argument. As illustrated, timestamps are taken as a 64-bit sample of a hardware cycle counter to allow accurate measurements to be performed at the finest granularity. Although not all architectures may provide a hardware cycle counter, a programmable timer may also be used for this purpose.
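The logging scheme described above (timestamp every event, but advance the instance counter only on entry) can be modeled compactly. The sketch below is a Python model, not the driver's kernel code, which is only available electronically. TelemetryBuffer, Event, and the new_instance flag are hypothetical names, the injected read_cycles callable stands in for the hardware cycle counter, and dropping the oldest record when the buffer is full is an assumption of this sketch.

```python
from collections import deque
from typing import Callable, NamedTuple

# Event identifiers quoted in the article.
TIMER_ENTER = 0x20000
TIMER_EXIT = 0x20001


class Event(NamedTuple):
    event_id: int
    cycles: int
    value: int


class TelemetryBuffer:
    """Fixed-capacity event log; the oldest records are dropped when full."""

    def __init__(self, read_cycles: Callable[[], int], capacity: int = 1024):
        self._read_cycles = read_cycles  # stand-in for a hardware cycle counter
        self._events = deque(maxlen=capacity)
        self._count = 0

    def log(self, event_id: int, new_instance: bool) -> None:
        # Entry events start a new instance; exit events reuse the current
        # count so that both records carry the same instance value.
        if new_instance:
            self._count += 1
        self._events.append(Event(event_id, self._read_cycles(), self._count))

    def dump(self) -> list:
        return list(self._events)


if __name__ == "__main__":
    import time
    buf = TelemetryBuffer(time.perf_counter_ns, capacity=8)
    buf.log(TIMER_ENTER, new_instance=True)
    buf.log(TIMER_EXIT, new_instance=False)
    print(buf.dump())
```

Passing the clock in as a parameter keeps the model testable and reflects the article's point that a programmable timer can replace a cycle counter.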

Once event information has been logged into the target machine’s system memory, there are several ways to store the event data for external monitoring and analysis. For offline analysis, the telemetry buffer may be read out by a user application using the ioctl(BFIN_PROFILER_TELEM_READ) driver call and storing its contents to a file. For online analysis, the contents can be read out the same way and sent to a remote host over the network. Because this method may interfere with event logging (for instance, if network events are being logged), a separate JTAG interface is provided for transmitting the event data. Although the JTAG interface is much slower than the network interface, it is much less intrusive to the overall system.

Once the event data has either been captured to a file or delivered to a remote host program, the event trace may be analyzed for relationships between events, timing, and so on. Two utility programs have been written for postprocessing analysis. Again, the prof2txt utility dumps the event trace in readable format with event IDs, relative timestamps, and instance values. In the simplest analysis, the dump displays the events in the chronological order in which they occurred. Alternatively, for more complex analysis, the output of this utility may be loaded into a spreadsheet or math-processing tool such as MATLAB. Using these tools, event plots may be obtained, performance metrics may be calculated, and correlation between events and timing can be analyzed. The profbin utility performs a histogram analysis of each pair of events. It places each event pair instance into a separate bin and calculates the minimum, maximum, and average duration of each event pair in cycles. Sample output of this utility is provided for the timer entry/exit example in Figure 4. As illustrated in this example, during a run of 5.1 seconds, the timer interrupt service routine consumed 0.11 percent of CPU time and took an average of 6092 cycles for each timer event.
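A pairing analysis in the spirit of profbin can be sketched in a few lines of Python. This is not the profbin source; pair_durations and summarize are hypothetical names. The input rows are the twelve records of Figure 3, under two assumptions: the Cycles values are read as timestamps on a common timeline (which is how the figure's numbers behave), and the first timestamp, printed as "-" in the figure, is taken to be 0.

```python
# (event ID, timestamp in cycles, instance value) rows from Figure 3.
# The first timestamp is printed as "-" in the figure; 0 is assumed here.
FIGURE_3 = [
    (0x20000, 0, 1), (0x20001, 16169, 1),
    (0x20000, 5399296, 2), (0x20001, 5406032, 2),
    (0x20000, 10799032, 3), (0x20001, 10805024, 3),
    (0x20000, 16198896, 4), (0x20001, 16204840, 4),
    (0x20000, 21598764, 5), (0x20001, 21604688, 5),
    (0x20000, 26998628, 6), (0x20001, 27004548, 6),
]


def pair_durations(events, enter_id, exit_id):
    """Return the cycles between the enter and exit event of each instance."""
    entered = {}
    durations = []
    for event_id, cycles, instance in events:
        if event_id == enter_id:
            entered[instance] = cycles
        elif event_id == exit_id and instance in entered:
            durations.append(cycles - entered.pop(instance))
    return durations


def summarize(durations):
    """Compute count, total, integer average, minimum, and maximum."""
    if not durations:
        raise ValueError("no completed event pairs")
    total = sum(durations)
    return {"count": len(durations), "total": total,
            "average": total // len(durations),
            "min": min(durations), "max": max(durations)}


if __name__ == "__main__":
    print(summarize(pair_durations(FIGURE_3, 0x20000, 0x20001)))
```

The six pairs in Figure 3 are only the start of the 512-event run summarized in Figure 4, so the statistics differ. Figure 4's own numbers are self-consistent: 3119250 total cycles over 512 events is an average of about 6092 cycles, and 3119250 of 2759336252 cycles is about 0.11 percent.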

Conclusion

Debugging in the uClinux environment requires an assortment of tools, including interactive debuggers, operating-system facilities such as the /proc filesystem, and event-based telemetry capture and analysis tools. However, this is not all. The Linux kernel and debugger require key modifications to enable visibility into the internal kernel state and the applications executing on top.

DDJ


SUMMER OF CODE

NetBSD-SoC: Efficient Memory Filesystem

Julio M. Merino Vidal

NetBSD inherited the Memory File System (MFS), a filesystem that uses virtual memory pages to store its contents, from 4.4BSD. MFS implements the on-disk Fast File System’s lower layer to store blocks into memory. This is inefficient because the same algorithms used to lay out data on disk are used for memory, introducing redundancy and complex data treatment. Despite that, the worst problem with MFS is that, once it has mapped a page, it never releases it.

tmpfs (short for “temporary filesystem”) solves these problems. It is a completely new filesystem that works with NetBSD’s virtual memory subsystem, UVM. It aims to be fast and use memory efficiently, which includes releasing it as soon as possible (for instance, as a result of a file deletion).

At this writing, tmpfs is already integrated into NetBSD-current (3.99.8), which makes testing and further development a lot easier. All the filesystem’s functionality is written and works, albeit with some known bugs. The most significant problem is that it currently uses wired (nonswappable) kernel memory to store file metadata.

[...] files stored within it in a tree graph. Each file has a node associated with it, which describes its attributes (owner, group, mode, and the like) and all the type-specific information. For example, a character device has a major and minor number, a symbolic link has the name of its target, and so on.

Directories are implemented using linked lists, making their management extremely easy. Directory traversal is quick, and node creation and deletion need not be concerned with entry proximity nor gaps between entries.

Regular files, on the other hand, are interesting because they are managed almost completely by the UVM. Each file has an anonymous memory object attached to it, encapsulating the memory allocated to the file. This memory is managed solely by the virtual memory subsystem. This design meshes well with the Unified Buffer Cache used by NetBSD, where files are UVM pagers, and file reading and writing is performed by mapping and accessing pages of the file’s UVM object. Thus, the only difference between files on other filesystems and those on tmpfs is the nature of the UVM object holding the pages.

DDJ

Name: Julio M. Merino Vidal
Contact: jmmv84@gmail.com
School: Barcelona School of Informatics, Spain
Major: Computer Science

Project: NetBSD/tmpfs |

|

|

|

Let’s now dive a bit into tmpfs’ internals |

|

|

|

Project Page: http://netbsd-soc.sourceforge.net/projects/tmpfs/ |

||

|

(full documentation is included in the pro- |

||

|

Mentor: William Studenmund |

|

|

|

ject’s tmpfs(9) manual page). As with tra- |

Mentoring Organization: The NetBSD Project (http://www.netbsd.org/) |

|

|

ditional filesystems, tmpfs organizes all the |

|

|

|

|

|

|
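As a rough illustration of the tmpfs layout described in the NetBSD-SoC piece (a node per file carrying common attributes plus type-specific information, and directories kept as linked lists of entries), the following Python sketch may help. It is not the tmpfs code, which lives in the NetBSD kernel; the class and method names here are hypothetical and chosen only for this example.

```python
class Node:
    """Per-file node: common attributes plus type-specific information."""

    def __init__(self, kind, owner=0, group=0, mode=0o644, **type_info):
        self.kind = kind
        self.owner = owner
        self.group = group
        self.mode = mode
        # For example major/minor for a character device,
        # or target for a symbolic link.
        self.type_info = type_info


class DirEntry:
    """One link in a directory's singly linked list of entries."""

    def __init__(self, name, node):
        self.name = name
        self.node = node
        self.next = None


class Directory(Node):
    def __init__(self, owner=0, group=0, mode=0o755):
        super().__init__("directory", owner, group, mode)
        self.head = None

    def add(self, name, node):
        # New entries go at the head; no gaps or ordering to maintain.
        entry = DirEntry(name, node)
        entry.next = self.head
        self.head = entry

    def entries(self):
        entry = self.head
        while entry is not None:
            yield entry
            entry = entry.next

    def lookup(self, name):
        for entry in self.entries():
            if entry.name == name:
                return entry.node
        return None

    def remove(self, name):
        prev = None
        for entry in self.entries():
            if entry.name == name:
                # Unlink the entry; its neighbors are untouched.
                if prev is None:
                    self.head = entry.next
                else:
                    prev.next = entry.next
                return True
            prev = entry
        return False
```

Creation and deletion only relink list pointers, which is the property the article points to when it says entry proximity and gaps are not a concern.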

Pyasynchio: The Python Asynchronous I/O Library

Pyasynchio is a Python library created to support cross-platform asynchronous I/O (AIO) operations. Compared to the famous asyncore library, for example, Pyasynchio is simple and does not require you to use object-oriented approaches. It just provides a way to initiate AIO and poll completion status. It is up to library users to implement patterns for processing operation results. At the moment, Pyasynchio supports file and socket AIO (only on Windows, but POSIX AIO and Solaris AIO support is coming). Pyasynchio uses built-in Python file and socket handles as AIO targets, so users can plug Pyasynchio into the middle of their code.

AIO is a way to alleviate I/O bottlenecks without multithreading. Usually, AIO is efficient in terms of resource usage (sometimes more efficient than multithreading, select/poll, or mixed solutions). AIO allows additional speed optimization at the OS and driver level and does not require an operation to complete during the call to the function that started it. A well-known object-oriented implementation of AIO on top of different low-level OS facilities can be seen in the ACE library (http://www.cs.wustl.edu/~schmidt/ACE.html).

It is important to note that AIO is different from synchronous nonblocking I/O (often, nonblocking I/O is erroneously called “asynchronous” I/O, but it really isn’t because each nonblocking I/O operation is still synchronous). Pyasynchio makes use of the Proactor pattern and Asynchronous Completion Token pattern, but the dynamic type system of Python makes some of Proactor’s object-oriented burden unnecessary. Applications of Pyasynchio may be even greater when Python introduces coroutine support (PEP 342). With coroutines, some I/O processing tasks can be coded in a synchronous manner (without distributing connection-processing logic over numerous handler classes/callbacks) but will still be able to take advantage of nonblocking I/O or AIO.

It is worth mentioning that a coroutine-based approach is possible with ordinary nonblocking synchronous I/O (as outlined in PEP 342), but AIO coroutines may be more effective in terms of resource usage and/or speed and/or implementation clarity. Pyasynchio code and binaries are available under an MIT license.
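To make the coroutine idea concrete, here is a minimal sketch using PEP 342 generators and only the standard library. It deliberately does not use Pyasynchio’s API, which is not shown here: the request tuples, the handle_connection and run names, and the complete callback (standing in for “poll completion status”) are all assumptions made for illustration.

```python
from collections import deque


def handle_connection(name, log):
    # Reads like straight-line synchronous code, but each `yield` hands a
    # request to the loop and resumes only when its result is available.
    header = yield ("read", name + ":header")
    body = yield ("read", name + ":body")
    log.append((name, header, body))


def run(coroutines, complete):
    """Drive generator-based coroutines to completion.

    complete(request) is a placeholder for whatever reports that an
    initiated operation has finished and returns its result.
    """
    pending = deque()
    for co in coroutines:
        pending.append((co, next(co)))      # run each up to its first request
    while pending:
        co, request = pending.popleft()
        result = complete(request)
        try:
            # Resume the coroutine with the result; queue its next request.
            pending.append((co, co.send(result)))
        except StopIteration:
            pass                            # this coroutine has finished
```

Each connection’s logic stays in one function rather than being spread over handler callbacks, while the loop interleaves the connections between requests.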

DDJ

Name: Vladimir Sukhoy
Contact: vladimir.sukhoy@gmail.com
School: Iowa State University
Major: Ph.D. candidate, Applied Mathematics
Project: Pyasynchio
Project Page: http://pyasynchio.berlios.de/pyasynchio-about.htm
Mentor: Mark Hammond
Mentoring Organization: The Python Software Foundation (http://www.python.org/)

Vladimir Sukhoy


P R O G R A M M I N G P A R A D I G M S

Boxing Day

Michael Swaine

Box. Nicely done. Well put together…Carpentry is one of the arts going out…or crafts, if you’re of a nonclassical disposition…But this is solid, perfect joins…good work…Oh, very good work; fine timber, and so fastidious, like when they shined the bottoms of the shoes…and the instep. Not only where you might expect they’d shine the bottoms if they did, but even the instep…

(Grudging…) And other crafts have come up…if not to replace, then…occupy.

—Edward Albee, Box

In this art or craft of software development, thinking outside the box is a constant challenge, because it seems like it’s just one box after another.

Comic-book writer Chris Claremont once said that when he was creating a new superhero, he would always ask himself, “Is there any reason this character can’t be a woman?” It was a conscious effort to challenge the ubiquitous unconscious default, and it changed the course of superhero comic-book history. A small thing, but his own.

It seems to me that there is a similar question a developer might ask at the beginning of every software project: “Is there any reason this project can’t be open source?” The question might be worth asking, even if you know in advance that your company’s business model and your unwillingness to put your job on the line add up to an immediate “you’re darned right there is.”

Thinking Outside the Black Box

I must sojourn once to the ballot-box before I die. I hear the ballot-box is a beautiful glass globe, so you can see all the votes as they go in.

— Sojourner Truth, letter to the World

I’m thinking of one particular software task for which economic or political forces seem to be producing a “you’re darned right there is” answer. Unfortunately, it’s a task that, it seems to me, absolutely must not be tackled with proprietary, black-box solutions. I mean the task of writing software for electronic voting machines and for tallying votes in elections.

The 2004 general election in the United States was a wake-up call, because many disturbing questions were raised, and remain unanswered, regarding the accuracy of machine voting results and the accountability of the process. But when off-year elections come around this November, the reliability of electronic voting probably won’t be much better than in 2004, according to an October 2005 report from the Government Accountability Office. The GAO cited lack of consensus on adequate certification processes. What’s needed, according to the Electronic Frontier Foundation, is clear: a verifiable paper audit trail and open-source software. EFF.org and BlackBoxVoting.org both have good information on the requirements.

Michael is editor-at-large for DDJ. He can be contacted at mike@swaine.com.

I realize that there are other issues here besides the technical question of how to construct a reliable and accurate electoral system. There is the problem that not every player in the game necessarily shares this goal of reliability and accuracy.

Tracking this issue on a daily basis, I am stunned to read about voting software written by convicted felons; officers of voting-machine companies getting involved in political campaigns even after the company officially prohibits such activity; state and local policies on voting that make it less fair, less representative, less trustworthy being put in place by questionable law or in contravention of the law; election results that defy probability; vote counts in which actual votes exceed the number of registered voters by orders of magnitude…But that’s not the point I’m trying to make here. If people are breaking the law and trying to subvert the electoral process, they should be exposed and thwarted. But I’m trying to focus on the straightforward technological issue of how to ensure accurate and trustworthy electronic vote counting, something that every American who cares about democracy wants. And the GAO’s gloomy prediction is just not acceptable. We need to solve the problem in time for this fall’s elections.

Trying To Put Jack Back In the Box

When I retire, I’m going to spend my evenings by the fireplace going through those boxes. There are things in there that ought to be burned.

—Richard Milhous Nixon, Parade

Audit trails are problematic with electronic data. It’s so easy to change the bits. On the other hand, you do have to know where all the bits are that you need to change. Say you’ve done something that you don’t want some Federal prosecutor with subpoena power to find out about. Could you remove all traces of incriminating documents from your hard drive(s) in the hour that you might, if you’re lucky, have before it’s impounded by Federal marshals, and do it without leaving any sign of tampering with evidence? What about that damaging e-mail? More problematic, because removing it will likely involve both your computer and your correspondent’s. Oops, and what about your ISP and his?

The bigger the slug, the bigger the trail of slime. If you’re laundering billions of dollars of money through bogus defense contractors and nonexistent software companies, you’ll leave a lot of electronic traces. Think about all those web sites you were so proud of, with their professional graphics and impressive pictures of the purported corporate headquarters, those dummy sites whose links all go to ground on dead or nonexistent pages. What could an investigator learn from them?

In December, I was following what looked like an important story about one such company. I’m no cyberdetective, but it only took me a few minutes to convince myself that the company in question was, uh, questionable. Faked-up web sites, references to company-produced software products whose names returned empty search results, no way to get to the identities of the company officers from the main web site. Of course, I ran a “whois.” The domain name’s registrant had a Network Solutions-listed address that lacked a street name. Not a lot of help there. The phone number looked real enough, but the fax number obviously wasn’t.

I don’t mention the name of the company because the story could still be bogus, and my research was far short of investigative journalism. I bring it up just to make the point that it’s not that easy for the crooks to cover their tracks now that we all pretty much live in cyberspace.

You Only Think You’re Outside the Box

Any man forgets his number spends a night in the box.

— Donn Pearce, Cool Hand Luke

Which suggests two observations and two opinions.

The first observation is that privacy seems to be more and more illusory. That cell phone call you just made pinpoints your location to within a few hundred feet. The credit-card purchase narrows it to right here. You send e-mails and place cell phone calls and offer up your credit-card numbers and passwords and rent DVDs and check out library books with insouciant indifference to what you are revealing to the prying eye. Yet, even as the very notion of privacy seems to dissipate, some seek to discern a right to privacy in the U.S. Constitution.

Another observation: Bloggers and other amateur newshounds are challenging professional journalists, often doing a better job of finding and disseminating important news stories. Boxed in by their success at gaining access to the powerful, celebrity journalists seem to have fallen victim to Stockholm Syndrome, like kidnap victims defending their captors. Professional outsiders like Josh Marshall and bloggers who spread the word even if they don’t break any stories do something that the mainstream media stars can’t. Or don’t. Bob Woodward, Judith Miller, and Viveca Novak all seem to have made the fatal error of forgetting to put truth and the reader first. Meanwhile, all the big newspapers are in financial trouble, challenged as much by Craig’s List as by bloggers.

The decline of privacy and the rise of citizen journalism are huge changes, but I offer these meliorative opinions: It seems to me that the fact that privacy is becoming harder to maintain should have nothing to do with the constraints placed on government interference in our private lives. And it seems to me that citizen journalists and professional news analysts can perform complementary tasks if they focus on their respective strengths. I just hope these things sort themselves out soon.

Outside the Wrong Box?

One thing a computer can do that most humans can’t is be sealed up in a cardboard box and sit in a warehouse.

—Jack Handey, Deep Thoughts

Whether Microsoft can sort out its dilemma is an open question. And whether it’s an open-source question is another question.

Steve Lohr of The New York Times seems to think that Ray Ozzie has been tasked with bringing Microsoft into the era of software as services. It’s possible that Ray’s role is more limited, being merely to implement the already-articulated and well-under-way dot-net technological plan, while Bill and Steve work on the harder job of reinventing Microsoft to be the kind of company implied by this technological vision.

Either way, Ray has his work cut out for him. Imagine that you have been hired as the CTO for a company that produces huge, feature-laden apps and you are tasked with shifting the focus to producing tools to allow others to produce small, focused apps that compete with and probably better serve the changing market than the company’s fat apps. A tricky message to give to the troops.

Ray’s exhortation to the troops can be Googled by anyone interested. In the document, he admits that he isn’t saying much that Bill hasn’t already said, except for Ray’s kind words about the open-source paradigm.

For all Ray Ozzie’s virtues, he can’t ever have the kind of hearts-and-minds influence at Microsoft that Bill Gates has. But he has as much formal authority as Bill and Steve give him, and that’s shaping up to be a lot. If he’s charged with more than the mere technological challenges in shifting Microsoft’s direction, he’ll need it. Because the software-as-services vision will involve, for example, not just educating the sales force about new products, but more or less rewiring their brains. A company the size of Microsoft trying to change directions has all the maneuverability of a jumbo jet on the runway.

Here’s a thought, Ray. Nobody in technology has survived more disasters or reinvented himself as often as AOL founder Steve Case; he’s the real comeback king. And you know what Steve is proposing for AOL/Time Warner? He thinks that the best thing to do would be to break it up.

Calvin has an obsession with corrugated cardboard boxes, which he adapts for many different uses.

—Wikipedia, on Calvin and Hobbes

DDJ

E M B E D D E D S P A C E

Professionalism

Ed Nisley

Q: What’s the difference between an electrical engineer and a civil engineer?

A: Electrical engineers build weapon systems. Civil engineers build targets.

There’s another difference: About half of all CEs display a Professional Engineer license on the wall, but only one in 10 EEs can do that.

Software engineers, even those near the hardware end of the embedded systems field, aren’t legally engineers because they’re not licensed as Professional Engineers. You have surely followed the discussions about licensing for software professionals, so my experience as a Professional Engineer, albeit of the Electrical variety, may be of interest.

Late last year, I checked the “No” box in response to the question “Do you wish to register for the period [3/1/06–2/28/09]?” on my New York State PE license renewal form so that, effective this month, I am no longer a Registered Professional Engineer. After almost exactly two decades, I can no longer produce engineering drawings stamped with my PE seal. Not, as it turns out, that I ever did, because all my work has been in areas and for clients that did not require sealed plans.

With that in mind, here’s my largely anecdotal description of what Professional Engineering means, how I got in, and why I bailed out. You’ll also see why the existing PE license structure has little relevance and poses considerable trouble for software developers.

The Beginnings

When I emerged from Lehigh University with a shiny-new BSEE, I didn’t know much about Professional Engineering. I moved to Poughkeepsie and became a Junior Engineer at a locally important computer manufacturer (the name isn’t important, but its initials were IBM). Very few of my colleagues were PEs, as a license wasn’t a job requirement.

Ed’s an EE, an inactive PE, and author in Poughkeepsie, NY. Contact him at ed.nisley@ieee.org with “Dr Dobbs” in the subject to avoid spam filters.

After about a decade, I decided that I could have more fun on my own and started looking into what was required to become a consulting engineer. I contacted the NYS licensing folks, described what I was planning to do, and received, in no uncertain terms, a directive that I must become a PE. Well, okay, ask a stupid question and get a snappy answer.

The New York State Education Department’s Office of the Professions regulates 47 distinct professions defined by Title VIII of the Education Law. In that extensive list, among the Acupuncturists and Landscape Architects and Midwives and Social Workers, you’ll find Engineers. Each must display a similar certificate on the wall showing compliance with the rules.

Section 7201 defines the practice of engineering as:

…performing professional service such as consultation, investigation, evaluation, planning, design or supervision of construction or operation in connection with any utilities, structures, buildings, machines, equipment, processes, works, or projects wherein the safeguarding of life, health and property is concerned, when such service or work requires the application of engineering principles and data.

Section 7202 states that:

…only a person licensed or otherwise authorized under this article shall practice engineering or use the title “professional engineer.”

I asked the NYS folks why it was that I had to be a PE to call myself an engineer, while Microsoft Certified System Engineers didn’t. The answer boiled down to, basically, “nobody confuses them with real engineers.” Huh?

Engineers within a company need not be PEs, even if the company provides engineering services, as long as a PE approves the final plans and projects. Section 7208-k also provides an “industrial exemption” for:

…the practice of engineering by a manufacturing corporation or by employees of such corporation, or use of the title “engineer” by such employees, in connection with or incidental to goods produced by, or sold by, or nonengineering services rendered by, such corporation or its manufacturing affiliates.

That’s why most EEs aren’t PEs.

The NYS requirements for a prospective PE include an engineering BS degree, a total of 12 years of relevant experience, passing a pair of examinations, possessing the usual citizenship and good-character attributes, and, of course, paying a fee.

The Tests

The National Council of Examiners for Engineering and Surveying (NCEES) designs, administers, and scores the engineering examinations. The Fundamentals of Engineering (FE) test is generally taken shortly after graduation, followed by the Principles and Practices of Engineering (PE or PP) test after acquiring at least four years of experience.

The Fundamentals of Engineering exam covers the broad range of knowledge included in an undergraduate engineering curriculum because, regardless of your major, you’re expected to have some knowledge of how things work in all disciplines. The morning session includes 120 questions in Mathematics, Statistics, Chemistry, Computers, Ethics, Economics, Mechanics, Materials, Fluids, Electricity, and Thermodynamics.

A sample question from my exam review book should give you pause:

102. Ethane gas burns according to the equation 2C2H6+7O2 → 4CO2+6H2O. What volume of CO2, measured at standard temperature and pressure, is formed for each gram-mole of C2H6 burned?

Fortunately, that’s a multiple-choice question and perhaps you could pick (in
