
Sec. 9.2]  Precedence parsing  191

FIRSTOP(S) = {#}

FIRSTOP(E) = {+, ×, (}

FIRSTOP(T) = {×, (}

FIRSTOP(F) = {(}

LASTOP(S) = {#}

LASTOP(E) = {+, ×, )}

LASTOP(T) = {×, )}

LASTOP(F) = {)}

Figure 9.8 FIRSTOP and LASTOP sets for the grammar of Figure 9.2

This keeps operators from the same handle together.

For each occurrence q1 A, set q1 <· q2 for each q2 in FIRSTOP(A). This demarcates the left end of a handle.

For each occurrence A q1, set q2 ·> q1 for each q2 in LASTOP(A). This demarcates the right end of a handle.

If we obtain a table without conflicts this way, that is, if we never find two different relations between two operators, then we call the grammar operator-precedence. It will now be clear why ( =˙ ) and not ) =˙ (, and why + ·> + (because E+ occurs in E->E+T and + is in LASTOP(E)).
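Because the construction is entirely mechanical, it is easy to program. The following is a minimal Python sketch for the grammar of Figure 9.2, assuming our own encoding (one character per symbol) and treating n as an operand rather than an operator, as Figure 9.8 does. The functions `firstop_lastop` and `precedence_table` are hypothetical names, and `'<'`, `'='` and `'>'` stand for <·, =˙ and ·>.

```python
# Grammar of Figure 9.2 (hypothetical encoding): non-terminal -> right-hand sides.
GRAMMAR = {'S': ['#E#'], 'E': ['E+T', 'T'], 'T': ['T×F', 'F'], 'F': ['n', '(E)']}
OPERATORS = {'#', '+', '×', '(', ')'}   # 'n' is an operand, not an operator


def firstop_lastop(grammar):
    """Compute FIRSTOP and LASTOP for every non-terminal by fixed-point iteration."""
    first = {a: set() for a in grammar}
    last = {a: set() for a in grammar}
    changed = True
    while changed:
        changed = False
        for a, rhss in grammar.items():
            for rhs in rhss:
                # Scan from the left for FIRSTOP and from the right for LASTOP.
                for sets, symbols in ((first, rhs), (last, rhs[::-1])):
                    found = set()
                    for sym in symbols:
                        if sym in OPERATORS:      # the first operator reached ends the scan
                            found.add(sym)
                            break
                        if sym in grammar:        # a non-terminal may supply an earlier operator
                            found |= sets[sym]
                    if not found <= sets[a]:
                        sets[a] |= found
                        changed = True
    return first, last


def precedence_table(grammar):
    """Return {(q1, q2): set of relations}; more than one relation means a conflict."""
    first, last = firstop_lastop(grammar)
    table = {}

    def add(q1, rel, q2):
        table.setdefault((q1, q2), set()).add(rel)

    for rhss in grammar.values():
        for rhs in rhss:
            ops = [i for i, s in enumerate(rhs) if s in OPERATORS]
            for i, j in zip(ops, ops[1:]):
                if j - i <= 2:                        # q1 q2 or q1 A q2
                    add(rhs[i], '=', rhs[j])
            for x, y in zip(rhs, rhs[1:]):
                if x in OPERATORS and y in grammar:   # q1 A: left end of a handle
                    for q in first[y]:
                        add(x, '<', q)
                if x in grammar and y in OPERATORS:   # A q1: right end of a handle
                    for q in last[x]:
                        add(q, '>', y)
    return table


table = precedence_table(GRAMMAR)
print(table[('(', ')')], table[('+', '+')])   # {'='} {'>'}
```

All 22 entries come out single-valued, so the grammar is operator-precedence. A pair such as ) followed by ( gets no entry at all.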

In this way, the table can be derived from the grammar by a program and be passed on to the operator-precedence parser. A very efficient linear-time parser results. There is, however, one small problem we have glossed over: although the method properly identifies the handle, it often does not identify the non-terminal to which to reduce it. Also, it does not show any unit rule reductions; nowhere in the examples did we see reductions of the form E->T or T->F. In short, operator-precedence parsing generates only skeleton parse trees.

Operator-precedence parsers are very easy to construct (often even by hand) and very efficient to use; operator-precedence is the method of choice for all parsing problems that are simple enough to allow it. That only a skeleton parse tree is obtained is often not an obstacle, since operator grammars often have the property that the semantics is attached to the operators rather than to the right-hand sides; the operators are identified correctly.
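To show how little machinery the parser needs, here is a minimal Python sketch of an operator-precedence driver. It hard-codes the relations for the grammar of Figure 9.2 (`'<'`, `'='` and `'>'` for <·, =˙ and ·>), shifts operands such as n without consulting the table, and builds nameless tuples, that is, a skeleton parse tree. The names `REL` and `op_parse` are hypothetical.

```python
# Operator-precedence relations for the grammar of Figure 9.2: REL[stack op][input op].
REL = {
    '#': {'#': '=', '+': '<', '×': '<', '(': '<'},
    '+': {'#': '>', '+': '>', '×': '<', '(': '<', ')': '>'},
    '×': {'#': '>', '+': '>', '×': '>', '(': '<', ')': '>'},
    '(': {'+': '<', '×': '<', '(': '<', ')': '='},
    ')': {'#': '>', '+': '>', '×': '>', ')': '>'},
}


def is_operator(item):
    return isinstance(item, str) and item in REL


def op_parse(tokens):
    """Parse a token list such as ['n', '+', 'n']; return a skeleton tree of nested tuples."""
    stack, inp, i = ['#'], list(tokens) + ['#'], 0
    while True:
        tok = inp[i]
        if not is_operator(tok):                 # operands are simply shifted
            stack.append(tok)
            i += 1
            continue
        top = max(k for k, s in enumerate(stack) if is_operator(s))
        if stack[top] == '#' and tok == '#':     # # =˙ #: the input is accepted
            return stack[1] if len(stack) == 2 else tuple(stack[1:])
        rel = REL[stack[top]].get(tok)
        if rel is None:
            raise SyntaxError(f'no relation between {stack[top]} and {tok}')
        if rel in ('<', '='):                    # <· or =˙: shift
            stack.append(tok)
            i += 1
            continue
        # ·>: right end of the handle found; walk down to the operator that is <· it.
        k = top
        while True:
            below = max(j for j in range(k) if is_operator(stack[j]))
            if REL[stack[below]].get(stack[k]) == '<':
                break
            k = below
        handle = tuple(stack[below + 1:])        # includes any operand left of stack[k]
        del stack[below + 1:]
        stack.append(handle)                     # nameless node: no non-terminal recorded


print(op_parse(['n', '+', 'n', '×', 'n']))   # ('n', '+', ('n', '×', 'n'))
```

Note that `op_parse([])` returns the empty tuple `()` and `op_parse(['n', 'n'])` returns `('n', 'n')` without complaint: since non-terminals are ignored, nothing checks that operands appear where they should.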

It is surprising how many grammars are (almost) operator-precedence. Almost all formula-like computer input is operator-precedence. Also, large parts of the grammars of many computer languages are operator-precedence. An example is a construction like CONST total = head + tail; from a Pascal-like language, which is easily rendered as:

| Stack | rest of input |
|---|---|
| # <· CONST total <· = head <· + tail ·> | ; # |

Ignoring the non-terminals has other bad consequences besides producing a skeleton parse tree. Since non-terminals are ignored, a missing non-terminal is not noticed. As a result, the parser will accept incorrect input without warning and will produce an incomplete parse tree for it. A parser using the table of Figure 9.5 will blithely accept the empty string, since it immediately leads to the stack configuration #=˙#. It produces a parse tree consisting of one empty node.

The theoretical analysis of this phenomenon turns out to be inordinately difficult; see Levy [Precedence 1975], Williams [Precedence 1977, 1979, 1981] and many others in Section 13.8. In practice it is less of a problem than one would expect; it is easy to check for the presence of required non-terminals, either while the parse tree is being constructed or afterwards.

9.2.2 Precedence functions

Several objections can be raised against operator-precedence. First, it cannot handle all grammars that can be handled by other more sophisticated methods. Second, its error detection capabilities are weak. Third, it constructs skeleton parse trees only. And fourth, the two-dimensional precedence table, which for, say, 100 tokens has 10000 entries, may take too much room. The latter objection can be overcome for those precedence tables that can be represented by so-called precedence functions. The idea is the following. Rather than having a table T such that for any two operators q1 and q2, T[q1,q2] yields the relation between q1 and q2, we have two integer functions f and g such that fq1 < gq2 means that q1 <· q2, fq1 = gq2 means q1 =˙ q2 and fq1 > gq2 means q1 ·> q2. fq is called the left priority of q, gq the right priority; they would probably be better indicated by l and r, but the use of f and g is traditional. Note that we write fq1 rather than f(q1); this allows us to write, for instance, f( for the left priority of ( rather than the confusing f((). It will be clear that two functions are required: with just one function one cannot express, for instance, + ·> +. Precedence functions take much less room than precedence tables. For our 100 tokens we need 200 function values rather than 10000 table entries. Not all tables allow a representation with precedence functions, but many do.
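As a small illustration of the space saving, the sketch below stores only the two priority functions for the five operators of Figure 9.2, using the values of f and g derived in Figure 9.9(j), and recomputes any relation on demand. The dictionaries `F`, `G` and the function `relation` are our own names.

```python
# Left (f) and right (g) priorities as computed in Figure 9.9(j).
F = {'#': 0, '(': 0, '+': 2, '×': 4, ')': 5}
G = {'#': 0, ')': 0, '+': 1, '×': 3, '(': 5}


def relation(q1, q2):
    """Relation between q1 (on the stack) and q2 (next in the input), from f and g alone."""
    if F[q1] < G[q2]:
        return '<·'
    if F[q1] == G[q2]:
        return '=˙'
    return '·>'


# Two arrays of 5 values replace the 5 x 5 table.
print(relation('+', '+'), relation('+', '×'), relation('(', ')'))   # ·> <· =˙
```

Every pair now yields some relation. For example, `relation(')', '(')` gives =˙ although ) followed by ( is an empty (error) entry in the table, so the functions cannot express error entries.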

Finding the proper f and g for a given table seems simple enough and can indeed often be done by hand. The fact, however, that there are two functions rather than one, the size of the tables and the occurrence of the =˙ complicate things. A well-known algorithm to construct the functions was given by Bell [Precedence 1969]; several variants of it exist. The following technique is a straightforward and easily implemented variant of Bell’s algorithm.

First we turn the precedence table into a list of numerical relations, as follows:

for each q1 <· q2 we have fq1 < gq2,

for each q1 =˙ q2 we have fq1 = gq2,

for each q1 ·> q2 we have fq1 > gq2.

Here we no longer view forms like fq as function values but rather as variables; reinterpretation as function values will occur later. Making such a list is more easily done by computer than by hand; see Figure 9.9(a). Next we remove all equals-relations, as follows:

for each relation fq1 = gq2 we create a new variable fq1gq2 and replace all occurrences of fq1 and gq2 by fq1gq2.

Note that fq1gq2 is not the product of fq1 and gq2 but rather a new variable, i.e., the name of a new priority value. Now a relation like fq1 = gq2 has turned into fq1gq2 = fq1gq2 and can be deleted trivially. See (b).

Third, we flip all > relations:

we replace each relation p1 > p2 by p2 < p1, where p1 and p2 are priority variables. See (c).

The list has now assumed a very uniform appearance and we can start to assign numerical values to the variables. We shall do this by handing out the numbers 0,1, . . . as follows:

(a) f# = g#, f# < g+, f# < g×, f# < g(, f+ > g#, f+ > g+, f+ < g×, f+ < g(, f+ > g), f× > g#, f× > g+, f× > g×, f× < g(, f× > g), f( < g+, f( < g×, f( < g(, f( = g), f) > g#, f) > g+, f) > g×, f) > g)

(b) f#g# < g+, f#g# < g×, f#g# < g(, f+ > f#g#, f+ > g+, f+ < g×, f+ < g(, f+ > f(g), f× > f#g#, f× > g+, f× > g×, f× < g(, f× > f(g), f(g) < g+, f(g) < g×, f(g) < g(, f) > f#g#, f) > g+, f) > g×, f) > f(g)

(c) f#g# < g+, f#g# < g×, f#g# < g(, f#g# < f+, g+ < f+, f+ < g×, f+ < g(, f(g) < f+, f#g# < f×, g+ < f×, g× < f×, f× < g(, f(g) < f×, f(g) < g+, f(g) < g×, f(g) < g(, f#g# < f), g+ < f), g× < f), f(g) < f)

(d) f#g# = 0, f(g) = 0; remaining: g+ < f+, f+ < g×, f+ < g(, g+ < f×, g× < f×, f× < g(, g+ < f), g× < f)

(e) f#g# = 0, f(g) = 0, g+ = 1; remaining: f+ < g×, f+ < g(, g× < f×, f× < g(, g× < f)

(f) f#g# = 0, f(g) = 0, g+ = 1, f+ = 2; remaining: g× < f×, f× < g(, g× < f)

(g) f#g# = 0, f(g) = 0, g+ = 1, f+ = 2, g× = 3; remaining: f× < g(

(h) f#g# = 0, f(g) = 0, g+ = 1, f+ = 2, g× = 3, f× = 4

(i) f# = 0, g# = 0, f( = 0, g) = 0, g+ = 1, f+ = 2, g× = 3, f× = 4

(j) f# = 0, f( = 0, f+ = 2, f× = 4, f) = 5; g# = 0, g) = 0, g+ = 1, g× = 3, g( = 5

Figure 9.9 Calculating precedence functions

Find all variables that occur only on the left of a relation; since they are clearly smaller than all the others, they can all be given the value 0.

In our example we find f#g# and f(g), which both get the value 0. Since the relations that have these two variables on their left will be satisfied provided we hand out no more 0’s, we can remove them (see (d)):

Remove all relations that have the identified variables on their left sides.


This removal causes another set of variables to occur on the left of a relation only, to which we now hand out the value 1. We repeat this process with increasing values until the list of relations has become empty; see (e) through (h).

Decompose the compound variables and give each component the numerical value of the compound variable. This decomposes, for instance, f(g) = 0 into f( = 0 and g) = 0; see (i).

This leaves without a value those variables that occurred on the right-hand side only in the comparisons under (c):

To all still unassigned priority values, assign the lowest value that has not yet been handed out.

f) and g( both get the value 5 (see (j), where the values have also been reordered), and indeed these occur at the high side of a comparison only. It is easily verified that the priority values found satisfy the initial comparisons as derived from the precedence table.

It is possible that we reach a stage in which there are still relations left but there are no variables that occur on the left only. It is easy to see that in that case there must be a circularity of the form p1 < p2 < p3 ... < p1 and that no integer functions representing these relations can exist: the table does not allow precedence functions.
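The whole procedure, steps (a) through (j), fits in a short Python sketch. This is our own rendering, not Bell’s original formulation. The table is given as (q1, relation, q2) triples with `'<'`, `'='` and `'>'` for <·, =˙ and ·>. Compound variables such as f#g# are represented by merging variables with a small union-find structure, which also copes with chains of =˙ relations. The function returns None when the circularity described above prevents precedence functions.

```python
def precedence_functions(table):
    """Compute precedence functions f and g from (q1, rel, q2) triples, with rel one of
    '<', '=', '>' for <·, =˙, ·>. Returns (f, g) as dicts, or None if impossible."""
    tokens = {q for q1, _, q2 in table for q in (q1, q2)}
    # Step (a): one variable per priority, e.g. ('f', '+') for f+ and ('g', '(') for g(.
    parent = {(side, q): (side, q) for side in 'fg' for q in tokens}

    def find(v):
        while parent[v] != v:
            v = parent[v]
        return v

    # Step (b): each fq1 = gq2 merges both sides into one compound variable (like f#g#).
    for q1, rel, q2 in table:
        if rel == '=':
            parent[find(('f', q1))] = find(('g', q2))

    # Step (c): keep only "smaller than" pairs (lo, hi), flipping every > relation.
    less = set()
    for q1, rel, q2 in table:
        a, b = find(('f', q1)), find(('g', q2))
        if rel == '<':
            less.add((a, b))
        elif rel == '>':
            less.add((b, a))

    # Steps (d)-(h): variables occurring only on the left get the next value 0, 1, 2, ...
    value, next_value = {}, 0
    while less:
        smallest = {a for a, _ in less} - {b for _, b in less}
        if not smallest:
            return None          # circularity p1 < p2 < ... < p1
        for v in smallest:
            value[v] = next_value
        next_value += 1
        less = {(a, b) for a, b in less if a not in smallest}

    # Steps (i)-(j): components inherit the compound value; the rest get next_value.
    f = {q: value.get(find(('f', q)), next_value) for q in tokens}
    g = {q: value.get(find(('g', q)), next_value) for q in tokens}
    return f, g


# The relations of Figure 9.9(a): ('#', '<', '+') stands for # <· +.
TABLE = [
    ('#', '=', '#'), ('#', '<', '+'), ('#', '<', '×'), ('#', '<', '('),
    ('+', '>', '#'), ('+', '>', '+'), ('+', '<', '×'), ('+', '<', '('), ('+', '>', ')'),
    ('×', '>', '#'), ('×', '>', '+'), ('×', '>', '×'), ('×', '<', '('), ('×', '>', ')'),
    ('(', '<', '+'), ('(', '<', '×'), ('(', '<', '('), ('(', '=', ')'),
    (')', '>', '#'), (')', '>', '+'), (')', '>', '×'), (')', '>', ')'),
]
f, g = precedence_functions(TABLE)
print(f['+'], f['×'], f[')'], g['+'], g['×'], g['('])   # 2 4 5 1 3 5
```

The resulting f and g agree with Figure 9.9(j). A table containing a <· b, b <· a, a ·> a and b ·> b, on the other hand, yields fa < gb < fb < ga < fa, and the function returns None.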

| | # | ) | + | × | ( |
|---|---|---|---|---|---|
| # | =˙ | | <· | <· | <· |
| ( | | =˙ | <· | <· | <· |
| + | ·> | ·> | ·> | <· | <· |
| × | ·> | ·> | ·> | ·> | <· |
| ) | ·> | ·> | ·> | ·> | |

Figure 9.10 The precedence table of Figure 9.5 reordered

Note that finding precedence functions is equivalent to reordering the rows and columns of the precedence table so that the latter can be divided into three regions: a ·> region on the lower left, a <· region on the upper right and a =˙ border between them. See Figure 9.10.

There is always a way to represent a precedence table with more than two functions; see Bertsch [Precedence 1977] on how to construct such functions.

9.2.3 Simple-precedence parsing

The fact that operator-precedence parsing produces skeleton parse trees only is a serious obstacle to its application outside formula handling. The defect seems easy to remedy. When a handle is identified in an operator-precedence parser, it is reduced to a node containing the value(s) and the operator(s), without reference to the grammar. For serious parsing the matching right-hand side of the pertinent rule has to be found. Now suppose we require all right-hand sides in the grammar to be different. Then, given a handle, we can easily find the rule to be used in the reduction (or find that there is no matching right-hand side, in which case there was an error in the input).

This is, however, not quite good enough. To properly do the right reductions and to find reductions of the form A → B (unit reductions), the non-terminals themselves have to play a role in the identification of the right-hand side. They have to be on the stack like any other symbol and precedence relations have to be found for them. This has the additional advantage that the grammar need no longer be an operator grammar and that the stack entries have a normal appearance again.

A grammar is simple precedence if (and only if):

it has a conflict-free precedence table over all its symbols, terminals and nonterminals alike,

none of its right-hand sides is ε,

all of its right-hand sides are different.

The construction of the simple-precedence table is again based upon two sets, FIRSTALL(A) and LASTALL(A). FIRSTALL(A) is similar to the set FIRST(A) introduced in Section 8.2.2.1 and differs from it in that it also contains all non-terminals that can start a sentential form derived from A (whereas FIRST(A) contains terminals only). LASTALL(A) contains all terminals and non-terminals that can end a sentential form of A. Their construction is similar to that given in Section 8.2.2.1 for the FIRST set. Figure 9.11 shows the pertinent sets for our grammar.

| FIRSTALL | LASTALL |
|---|---|
| FIRSTALL(S) = {#} | LASTALL(S) = {#} |
| FIRSTALL(E) = {E, T, F, n, (} | LASTALL(E) = {T, F, n, )} |
| FIRSTALL(T) = {T, F, n, (} | LASTALL(T) = {F, n, )} |
| FIRSTALL(F) = {n, (} | LASTALL(F) = {n, )} |

Figure 9.11 FIRSTALL and LASTALL for the grammar of Figure 9.2

A simple-precedence table is now constructed as follows: For each two juxtaposed symbols X and Y in a right-hand side we have:

X=˙ Y; this keeps X and Y together in the handle;

if X is a non-terminal: for each symbol s in LASTALL(X) and each terminal t in FIRST(Y) (or Y itself if Y is a terminal) we have s·>t; this allows X to be reduced completely when the first sign of Y appears in the input; note that we have FIRST(Y) here rather than FIRSTALL(Y);

if Y is a non-terminal: for each symbol s in FIRSTALL(Y) we have X <·s; this protects X while Y is being recognized.
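These three rules translate directly into code. The Python sketch below assumes our own string encoding of the grammar of Figure 9.2, with one character per symbol. It computes FIRSTALL and LASTALL by fixed-point iteration, which is safe because no right-hand side is empty, and takes FIRST(A) to be the terminals in FIRSTALL(A). Every relation is collected per symbol pair, so conflicts show up as pairs carrying more than one relation. The function names are hypothetical.

```python
# Grammar of Figure 9.2 (hypothetical encoding, one character per symbol).
GRAMMAR = {'S': ['#E#'], 'E': ['E+T', 'T'], 'T': ['T×F', 'F'], 'F': ['n', '(E)']}


def closure_sets(grammar, end):
    """FIRSTALL (end=0) or LASTALL (end=-1) for each non-terminal; assumes no empty rules."""
    sets = {a: set() for a in grammar}
    changed = True
    while changed:
        changed = False
        for a, rhss in grammar.items():
            for rhs in rhss:
                x = rhs[end]
                new = {x} | sets.get(x, set())    # terminals contribute only themselves
                if not new <= sets[a]:
                    sets[a] |= new
                    changed = True
    return sets


def simple_precedence_table(grammar):
    """Return {(X, Y): set of relations} over terminals and non-terminals alike."""
    firstall, lastall = closure_sets(grammar, 0), closure_sets(grammar, -1)
    first = {a: {s for s in firstall[a] if s not in grammar} for a in grammar}
    table = {}

    def add(x, rel, y):
        table.setdefault((x, y), set()).add(rel)

    for rhss in grammar.values():
        for rhs in rhss:
            for x, y in zip(rhs, rhs[1:]):
                add(x, '=', y)                             # keeps X and Y together
                if x in grammar:                           # reduce X when Y starts
                    for s in lastall[x]:
                        for t in (first[y] if y in grammar else {y}):
                            add(s, '>', t)
                if y in grammar:                           # protect X while Y is built
                    for s in firstall[y]:
                        add(x, '<', s)
    return table


table = simple_precedence_table(GRAMMAR)
conflicts = sorted(pair for pair, rels in table.items() if len(rels) > 1)
print(conflicts)   # [('#', 'E'), ('(', 'E'), ('+', 'T')]
```

The three conflicting pairs are the <·/=˙ conflicts discussed for Figure 9.12.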

| | # | E | T | F | n | + | × | ( | ) |
|---|---|---|---|---|---|---|---|---|---|
| # | | <·/=˙ | <· | <· | <· | | | <· | |
| E | =˙ | | | | | =˙ | | | =˙ |
| T | ·> | | | | | ·> | =˙ | | ·> |
| F | ·> | | | | | ·> | ·> | | ·> |
| n | ·> | | | | | ·> | ·> | | ·> |
| + | | | <·/=˙ | <· | <· | | | <· | |
| × | | | | =˙ | <· | | | <· | |
| ( | | <·/=˙ | <· | <· | <· | | | <· | |
| ) | ·> | | | | | ·> | ·> | | ·> |

Figure 9.12 Simple-precedence table to Figure 9.2, with conflicts


Simple precedence is not the answer to all our problems, as is evident from Figure 9.12, which displays the results of an attempt to construct the precedence table for the operator-precedence grammar of Figure 9.2. Not even this simple grammar is simple-precedence, witness the conflicts for # <·/=˙ E, ( <·/=˙ E and + <·/=˙ T.

SS -> # E' #

E' -> E

E -> E + T'

E -> T'

T' -> T

T -> T × F

T -> F

F -> n

F -> ( E )

| FIRSTALL | LASTALL |
|---|---|
| FIRSTALL(E') = {E, T', T, F, n, (} | LASTALL(E') = {T', T, F, n, )} |
| FIRSTALL(E) = {E, T', T, F, n, (} | LASTALL(E) = {T, F, n, )} |
| FIRSTALL(T') = {T, F, n, (} | LASTALL(T') = {F, n, )} |
| FIRSTALL(T) = {T, F, n, (} | LASTALL(T) = {F, n, )} |
| FIRSTALL(F) = {n, (} | LASTALL(F) = {n, )} |

| | # | E' | E | T' | T | F | n | + | × | ( | ) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| # | | =˙ | <· | <· | <· | <· | <· | | | <· | |
| E' | =˙ | | | | | | | | | | |
| E | ·> | | | | | | | =˙ | | | =˙ |
| T' | ·> | | | | | | | ·> | | | ·> |
| T | ·> | | | | | | | ·> | =˙ | | ·> |
| F | ·> | | | | | | | ·> | ·> | | ·> |
| n | ·> | | | | | | | ·> | ·> | | ·> |
| + | | | | =˙ | <· | <· | <· | | | <· | |
| × | | | | | | =˙ | <· | | | <· | |
| ( | | | =˙ | <· | <· | <· | <· | | | <· | |
| ) | ·> | | | | | | | ·> | ·> | | ·> |

Figure 9.13 A modified grammar with its simple-precedence table, without conflicts

There are two ways to remedy this. We can adapt the grammar by inserting extra levels around the troublesome non-terminals. This is done in Figure 9.13 and works in this case; it brings us, however, farther away from our goal of producing a correct parse tree, since we now produce a parse tree for a different grammar. Or we can adapt the parsing method, as explained in the next section.

9.2.4 Weak-precedence parsing

It turns out that most of the simple-precedence conflicts are <·/=˙ conflicts. Now the difference between <· and =˙ is in a sense less important than that between either of them and ·>. Both <· and =˙ result in a shift and only ·> asks for a reduce. Only when a reduce is found will the difference between <· and =˙ become significant for finding the head of the handle. Now suppose we drop the difference between <· and =˙ and combine them into ≤·; then we need a different means of identifying the handle and the proper right-hand side. This can be done by requiring not only that all right-hand sides be different, but also that no right-hand side be equal to the tail of another right-hand side. A grammar that conforms to this and has a conflict-free ≤·/·> precedence table is called weak precedence. Figure 9.14 gives the (conflict-free) weak-precedence table for the grammar of Figure 9.2. It is of course possible to retain the difference between <· and =˙ where it exists; this will improve the error detection capability of the parser.

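| | # | E | T | F | n | + | × | ( | ) |
|---|---|---|---|---|---|---|---|---|---|
| # | | ≤· | <· | <· | <· | | | <· | |
| E | =˙ | | | | | =˙ | | | =˙ |
| T | ·> | | | | | ·> | =˙ | | ·> |
| F | ·> | | | | | ·> | ·> | | ·> |
| n | ·> | | | | | ·> | ·> | | ·> |
| + | | | ≤· | <· | <· | | | <· | |
| × | | | | =˙ | <· | | | <· | |
| ( | | ≤· | <· | <· | <· | | | <· | |
| ) | ·> | | | | | ·> | ·> | | ·> |

Figure 9.14 Weak-precedence table to the grammar of Figure 9.2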

The rule that no right-hand side should be equal to the tail of another right-hand side is more restrictive than is necessary. More lenient rules exist in several variants, which, however, all require more work in identifying the reduction rule. See, for instance, Ichbiah and Morse [Precedence 1970] or Sekimoto [Precedence 1972].

Weak precedence is a useful method that applies to a relatively large group of grammars. Especially if parsing is used to roughly structure an input stream, as in the first pass or scan of a complicated system, weak precedence can be of service.

9.2.5 Extended precedence and mixed-strategy precedence

The above methods determine the precedence relations by looking at 1 symbol on the stack and 1 token in the input. Once this has been said, the idea suggests itself to replace the 1’s by m and n respectively, and to determine the precedence relations from the topmost m symbols on the stack and the first n tokens in the input. This is called (m,n)-extended precedence.

We can use the same technique to find the left end of the handle on the stack when using weak precedence: use k symbols on the left and l on the right to answer the question whether this is the head of the handle. This is called (k,l)(m,n)-extended [weak] precedence.

By increasing its parameters, extended precedence can be made reasonably powerful. Yet the huge tables required (2 × 300 × 300 × 300 = 54 million entries for (1,2)(2,1) extended precedence with 300 symbols) severely limit its applicability. Moreover, even with large values of k, l, m and n it is still inferior to LR(1), which we treat in Section 9.5.


If a grammar is (k,l)(m,n)-extended precedence, it is not always necessary to test the full k, l, m and n symbols. Indeed it is almost never necessary and large parts of the grammar can almost always be handled by (normal) weak-precedence methods; the full (k,l)(m,n)-extended precedence power is needed only in a small number of spots in the grammar. This phenomenon has led to techniques in which the (normal) weak-precedence table has a (small) number of exception entries that refer to further, more powerful tables. This technique is called mixed-strategy precedence. Mixed-strategy precedence has been investigated by McKeeman [Books 1970].

9.2.6 Actually finding the correct right-hand side

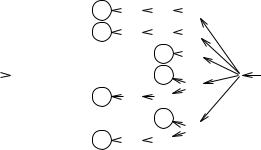

All the above methods identify only the bounds of the handle; the actual right-hand side is still to be determined. It may seem that a search through all right-hand sides is necessary for each reduction, but this is not so. The right-hand sides can be arranged in a tree structure with their right-most symbols forming the root of the tree, as in Figure 9.15. When we have found a ·> relation, we start walking down the stack looking for a <· and at the same time we follow the corresponding path through the tree; when we find the <· we should be at the beginning of a rule in the tree, or we have found an error in the input; see Figure 9.15. The tree can be constructed by sorting the grammar rules on their symbols in backward order and combining equal tails. As an example, the path followed for <· T =˙ × =˙ F ·> has been indicated by a dotted line.

[Figure: the right-hand sides of S -> # E #, F -> ( E ), F -> n, T -> F, T -> T × F, E -> T and E -> E + T drawn as a tree whose root branches on the rightmost symbols #, ), n, F and T; T -> F and T -> T × F share the node F, E -> T and E -> E + T share the node T, and the path for <· T =˙ × =˙ F ·> is dotted.]

Figure 9.15 Tree structure for efficiently finding right-hand sides
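Such a tree is easy to sketch in Python. This sketch assumes one-character symbols and assumes that the precedence relations have already located the <·; the index `start` and the names `build_tree` and `find_rule` are hypothetical. Each right-hand side is inserted in reverse, so rules with equal tails share a path, and the lookup follows the stack from the top down.

```python
# Right-hand sides of the grammar of Figure 9.2, one character per symbol.
RULES = [('S', '#E#'), ('F', '(E)'), ('F', 'n'), ('T', 'F'),
         ('T', 'T×F'), ('E', 'T'), ('E', 'E+T')]
END = None   # key under which a node records the rule whose right-hand side ends there


def build_tree(rules):
    """Insert every right-hand side backwards, so rules with equal tails share a path."""
    root = {}
    for lhs, rhs in rules:
        node = root
        for sym in reversed(rhs):
            node = node.setdefault(sym, {})
        node[END] = (lhs, rhs)
    return root


def find_rule(tree, stack, start):
    """Walk from the top of the stack down to index `start` (the first symbol after
    the <·), following the tree; return (lhs, rhs), or None if no rule matches."""
    node = tree
    for sym in reversed(stack[start:]):
        node = node.get(sym)
        if node is None:
            return None
    return node.get(END)


TREE = build_tree(RULES)
print(find_rule(TREE, '#E+T×F', 3))   # ('T', 'T×F')
```

If the walk ends at a node that marks no rule, for instance after reading only × F, no right-hand side matches and the input contains an error.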

For several methods to improve upon this, see the literature (Section 13.8).

9.3 BOUNDED-CONTEXT PARSING

There is a different way to solve the annoying problem of the identification of the right-hand side: let the identity of the rule be part of the precedence relation. A grammar is (m,n) bounded-context (BC(m,n)) if (and only if) for each combination of m symbols on the stack and n tokens in the input there is a unique parsing decision which is either “shift” (≤·) or “reduce using rule X” (·>X), as obtained by a variant of the rules for extended precedence. Figure 9.16 gives the BC(2,1) tables for the grammar of Figure 9.2. Note that the rows correspond to stack symbol pairs; the entry Accept means that the input has been parsed and Error means that a syntax error has been found. Blank entries will never be accessed; all-blank rows have been left out. See, for instance, Loeckx [Precedence 1970] for the construction of such tables.

Bounded-context (especially BC(2,1)) was once very popular but has been superseded almost completely by LALR(1) (Section 9.6). Recently, interest in bounded-context grammars has been revived, since it has turned out that such grammars have some excellent error recovery properties; see Section 10.8. This is not completely surprising if we consider that bounded-context grammars have the property that a small number of symbols in the sentential form suffice to determine completely what is going on.

| | # | + | × | n | ( | ) |
|---|---|---|---|---|---|---|
| #S | Accept | | | | | |
| #E | ·>S->E | ≤· | | | | Error |
| #T | ·>E->T | ·>E->T | ≤· | | | Error |
| #F | ·>T->F | ·>T->F | ·>T->F | Error | Error | Error |
| #n | ·>F->n | ·>F->n | ·>F->n | Error | Error | Error |
| #( | Error | Error | Error | ≤· | ≤· | Error |
| E+ | Error | Error | Error | ≤· | ≤· | Error |
| E) | ·>F->(E) | ·>F->(E) | ·>F->(E) | Error | Error | ·>F->(E) |
| T× | Error | Error | Error | ≤· | ≤· | Error |
| +T | ·>E->E+T | ·>E->E+T | ≤· | | | ·>E->E+T |
| +F | ·>T->F | ·>T->F | ·>T->F | | | ·>T->F |
| +n | ·>F->n | ·>F->n | ·>F->n | Error | Error | ·>F->n |
| +( | Error | Error | Error | ≤· | ≤· | Error |
| ×F | ·>T->T×F | ·>T->T×F | ·>T->T×F | | | ·>T->T×F |
| ×n | ·>F->n | ·>F->n | ·>F->n | Error | Error | ·>F->n |
| ×( | Error | Error | Error | ≤· | ≤· | Error |
| (E | Error | ≤· | | | | ≤· |
| (T | Error | ·>E->T | ≤· | | | ·>E->T |
| (F | Error | ·>T->F | ·>T->F | | | ·>T->F |
| (n | Error | ·>F->n | ·>F->n | Error | Error | ·>F->n |
| (( | Error | Error | Error | ≤· | ≤· | Error |

Figure 9.16 BC(2,1) tables for the grammar of Figure 9.2
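A driver for such a table is tiny. The sketch below transcribes Figure 9.16 as we read it. The encoding, the names `BC_TABLE` and `bc_parse`, and the choice to shift the first token unconditionally (the table has no row for a stack that holds only #) are our assumptions. The driver returns the sequence of rules used, i.e., the reductions of a rightmost derivation in reverse, or None on an error.

```python
# Figure 9.16 as transcribed here; columns are the next input token.
# Entries: 'shift' (≤·), 'accept', 'error', None (blank), or a rule 'A->rhs' (·>A->rhs).
COLUMNS = ['#', '+', '×', 'n', '(', ')']
SH, ERR = 'shift', 'error'
BC_TABLE = {
    '#S': ['accept', None, None, None, None, None],
    '#E': ['S->E', SH, None, None, None, ERR],
    '#T': ['E->T', 'E->T', SH, None, None, ERR],
    '#F': ['T->F', 'T->F', 'T->F', ERR, ERR, ERR],
    '#n': ['F->n', 'F->n', 'F->n', ERR, ERR, ERR],
    '#(': [ERR, ERR, ERR, SH, SH, ERR],
    'E+': [ERR, ERR, ERR, SH, SH, ERR],
    'E)': ['F->(E)', 'F->(E)', 'F->(E)', ERR, ERR, 'F->(E)'],
    'T×': [ERR, ERR, ERR, SH, SH, ERR],
    '+T': ['E->E+T', 'E->E+T', SH, None, None, 'E->E+T'],
    '+F': ['T->F', 'T->F', 'T->F', None, None, 'T->F'],
    '+n': ['F->n', 'F->n', 'F->n', ERR, ERR, 'F->n'],
    '+(': [ERR, ERR, ERR, SH, SH, ERR],
    '×F': ['T->T×F', 'T->T×F', 'T->T×F', None, None, 'T->T×F'],
    '×n': ['F->n', 'F->n', 'F->n', ERR, ERR, 'F->n'],
    '×(': [ERR, ERR, ERR, SH, SH, ERR],
    '(E': [ERR, SH, None, None, None, SH],
    '(T': [ERR, 'E->T', SH, None, None, 'E->T'],
    '(F': [ERR, 'T->F', 'T->F', None, None, 'T->F'],
    '(n': [ERR, 'F->n', 'F->n', ERR, ERR, 'F->n'],
    '((': [ERR, ERR, ERR, SH, SH, ERR],
}


def bc_parse(text):
    """Parse e.g. 'n+n×n'; return the list of rules used for reductions, or None."""
    stack, inp, i, used = ['#'], list(text) + ['#'], 0, []
    while True:
        look = inp[i]
        if len(stack) == 1:
            # Assumption: no row exists for a lone '#', so the first token is shifted.
            if look == '#':
                return None
            stack.append(look)
            i += 1
            continue
        row = BC_TABLE.get(stack[-2] + stack[-1])
        entry = row[COLUMNS.index(look)] if row and look in COLUMNS else None
        if entry == 'accept':
            return used
        if entry == SH:
            stack.append(look)
            i += 1
        elif entry not in (None, ERR):          # ·>: reduce by the rule in the entry
            lhs, rhs = entry.split('->')
            if ''.join(stack[-len(rhs):]) != rhs:
                return None
            del stack[-len(rhs):]
            stack.append(lhs)
            used.append(entry)
        else:                                   # Error, or a blank entry hit by bad input
            return None


print(bc_parse('n+n×n')[-2:])   # ['E->E+T', 'S->E']
```

With this table, `bc_parse('n)')` stops immediately at the Error entry for #n followed by ).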

9.3.1 Floyd productions

Bounded-context parsing steps can be summarized conveniently by using Floyd productions. Floyd productions are rules for rewriting a string that contains a marker, Δ, on which the rules focus. A Floyd production has the form αΔβ => γΔδ and means that if the marker in the string is preceded by α and is followed by β, the construction must be replaced by γΔδ. The rules are tried in order starting from the top and the first one to match is applied; processing then resumes on the resulting string, starting from the top of the list, and the process is repeated until no rule matches.

Although Floyd productions were not primarily designed as a parsing tool but rather as a general string manipulation language, the identification of the Δ in the string with the gap in a bottom-up parser suggests itself and was already made in Floyd’s original article [Misc 1961]. Floyd productions for the grammar of Figure 9.2 are given in Figure 9.17. The parser is started with the Δ at the left of the input.

The apparent convenience and conciseness of Floyd productions make it very tempting to write parsers in them by hand, but Floyd productions are very sensitive to the order in which the rules are listed and a small inaccuracy in the order can have a devastating effect.

Δn => nΔ

Δ( => (Δ

nΔ => FΔ

TΔ× => T×Δ

T×FΔ => TΔ

FΔ => TΔ

E+TΔ => EΔ

TΔ => EΔ

(E)Δ => FΔ

Δ+ => +Δ

Δ) => )Δ

Δ# => #Δ

#E#Δ => SΔ

Figure 9.17 Floyd productions for the grammar of Figure 9.2
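The order-dependent rewriting is easy to simulate. In the minimal Python sketch below, each production αΔβ => γΔδ is stored as a tuple (α, β, γ, δ), and the string is held as the two parts to the left and right of the marker. The productions for the grammar of Figure 9.2 are listed in the order of Figure 9.17 as reconstructed here, and the input is accepted when it has been rewritten to SΔ. The names `RULES` and `run_floyd` are our own.

```python
MARK = 'Δ'
# (alpha, beta, gamma, delta) encodes alpha Δ beta => gamma Δ delta; order matters.
RULES = [
    ('', 'n', 'n', ''),       # Δn   => nΔ
    ('', '(', '(', ''),       # Δ(   => (Δ
    ('n', '', 'F', ''),       # nΔ   => FΔ
    ('T', '×', 'T×', ''),     # TΔ×  => T×Δ
    ('T×F', '', 'T', ''),     # T×FΔ => TΔ
    ('F', '', 'T', ''),       # FΔ   => TΔ
    ('E+T', '', 'E', ''),     # E+TΔ => EΔ
    ('T', '', 'E', ''),       # TΔ   => EΔ
    ('(E)', '', 'F', ''),     # (E)Δ => FΔ
    ('', '+', '+', ''),       # Δ+   => +Δ
    ('', ')', ')', ''),       # Δ)   => )Δ
    ('', '#', '#', ''),       # Δ#   => #Δ
    ('#E#', '', 'S', ''),     # #E#Δ => SΔ
]


def run_floyd(rules, text):
    """Start with the marker left of `text`; repeatedly apply the first matching
    production (trying from the top each time) until none matches."""
    left, right = '', text
    while True:
        for alpha, beta, gamma, delta in rules:
            if left.endswith(alpha) and right.startswith(beta):
                left = left[:len(left) - len(alpha)] + gamma
                right = delta + right[len(beta):]
                break
        else:                      # no production matches: stop
            return left + MARK + right


print(run_floyd(RULES, '#n+n×n#'))   # SΔ
```

Moving FΔ => TΔ above T×FΔ => TΔ is enough to break this parser: for #n×n# the F after × is turned into a T too early, and the rewriting gets stuck at #T×E#Δ.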

9.4 LR METHODS

The LR methods are based on the combination of two ideas that have already been touched upon in previous sections. To reiterate, the problem is to find the handle in a sentential form as efficiently as possible, for as large a class of grammars as possible. Such a handle is searched for from left to right. Now, from Section 5.3.4 we recall that a very efficient way to find a string in a left-to-right search is by constructing a finite-state automaton. Just doing this is, however, not good enough. It is quite easy to construct an FS automaton that would recognize any of the right-hand sides in the grammar efficiently, but it would just find the left-most reducible substring in the sentential form. This substring is, however, often not the handle.

The idea can be made practical by applying the same trick that was used in Earley’s parser to drastically reduce the fan-out of the breadth-first search (see Section 7.2): start the automaton with the start rule of the grammar and only consider, in any position, right-hand sides that could be derived from the start symbol. This top-down restriction device served in the Earley parser to reduce the cost to O(n³); here we require the grammar to be such that it reduces the cost to O(n). The resulting automaton is started in its initial state at the left end of the sentential form and allowed to run to the right; it has the property that it stops at the right end of the handle and that its accepting state tells us how to reduce the handle. How this is done will be explained in the next section.

Since practical FS automata easily get so big that their states cannot be displayed on a single page of a book, we shall use the grammar of Figure 9.18 for our examples. It is a simplified version of that of Figure 9.2, in which only one binary operator is left, for which we have chosen the - rather than the +. Although this is not essential, it serves to remind us that the proper parse tree must be derived, since (a-b)-c is not the same as a-(b-c) (whereas (a+b)+c and a+(b+c) are). The # indicates the end of the input.