
British Journal of Mathematical and Statistical Psychology (2002), 55, 109–124

© 2002 The British Psychological Society

www.bps.org.uk

Measuring change in controlled longitudinal studies

John E. Overall* and Scott Tonidandel

University of Texas, Health Science Center at Houston, USA

This paper examines the implications of the correlational structure of repeated measurements for three indices of change that can be used to evaluate treatment effects in longitudinal studies with scheduled assessment times and fixed total duration. The generalized least squares (GLS) regression of repeated measurements on time, which is usually reserved for complex mixed model solutions, takes the correlational structure of the repeated measurements into account, whereas simple gain scores and ordinary least squares (OLS) regression calculations do not. Nevertheless, the GLS solution is equivalent to OLS under conditions of compound symmetry and is equivalent to the analysis of simple gain scores in the presence of an autoregressive (order 1) correlational structure. The understanding of these relationships is important with regard to the frequently heard criticisms of the simpler definitions of treatment response in repeated measurement designs.

1. Introduction

Controlled longitudinal studies in psychology, neuropsychopharmacology and other clinical research domains tend to employ a standard parallel groups design. Individuals are randomized between treatment groups, evaluated at baseline, and then evaluated at fixed intervals across a treatment period of specified total duration. The hypothesis of primary interest in intervention trials concerns the difference between treatment groups in patterns or magnitudes of change from the baseline. In this paper, we examine the similarities and differences between three definitions of change in relation to different patterns of correlation among the repeated measurements. All three methods for testing the ‘equal change hypothesis’ can be accomplished in two stages. At stage 1, a composite index or coefficient of change across the repeated measurements is calculated for each individual, and at stage 2 the difference between group means for the derived change measure is subjected to a parametric test of significance. It is also common to employ baseline scores and other relevant factors as covariates in the model for the stage 2 analysis. Similar ANCOVA corrections can be employed at stage 2 for the analysis of any of the measures of change examined here. Conceptually, all are random effects models that differ only in the way that change is defined (Laird & Ware, 1982), and general linear random effects models can always be represented as two-stage analyses under proper assumptions (Vonesh & Chinchilli, 1997, p. 256).

* Requests for reprints should be addressed to John E. Overall, Department of Psychiatry and Behavioral Sciences, University of Texas Medical School, Houston, TX 77225, USA.

The ‘end-point analysis’ calculated using simple gain scores to measure change has long been considered a primary means of evaluating differences in treatment outcomes in controlled clinical drug trials. The difference between the baseline and the final available observation is the measure of change calculated at stage 1 to serve as the dependent variable for stage 2 hypothesis testing. The end-point analysis does not require the same number of observations or the same duration of treatment for each subject, so it has been perceived as an effective way to deal with missing data for dropouts in longitudinal studies. Admittedly, the analysis of simple gain scores has not been without its critics. Lord (1956, 1963) and Cronbach and Furby (1970) were among earlier authors to warn educational and psychological researchers of potential problems in the analysis of unreliable difference scores, although Overall and Woodward (1975) reminded critics that the power of tests on difference-score means can actually increase as subtraction reduces the ‘reliability’ of the change scores by removing consistent individuals’ differences.

We also consider here a two-stage random regression model in which subject-specific slope coefficients b_i are the measures of change calculated across all of the available repeated measurements using ordinary least squares (OLS) regression methods at stage 1 of the analysis.¹ Although OLS regression calculations and simple gain scores do not take the complete covariance structure of the repeated measurements into account, an appropriate representation of the covariance or correlation structure is critical for the more complex mixed model and generalized linear regression solutions. To illustrate this, we will also consider another two-stage random regression model that employs a generalized least squares (GLS) solution for subject-specific slope coefficients at stage 1, and then enters these slope coefficients as the dependent variable for a stage 2 test of significance for a difference between mean rates of change in two treatment groups. The GLS slope calculations take all of the available repeated measurements into account in modelling the covariance or correlation structure, but the extent to which intermediate data points actually enter into the measure of change that is analysed at stage 2 is minimal in the presence of commonly observed autoregressive structures.

Davidian and Giltinan (1995) have elaborated on a complete solution to the general linear mixed model equation in which random effects and within-subjects covariance structures are modelled separately. Liang and Zeger (1986) employ a marginal or population-averaged model of the covariance structure in their generalized estimating equation solution, and Wu and colleagues (Wu & Carroll, 1988; Wu & Bailey, 1989) generalize the two-stage random coefficients model using either the marginal distribution of the repeated measurements or the within-subjects error distribution to define the covariance structure. Other authors have suggested modelling the covariance structure in terms of the random effects only (Jones, 1990), or as a combination of the subject-specific random effects b_i and within-subjects error (Chi & Reinsel, 1989; Diggle, 1988; Jones & Boadi-Boateng, 1991), with Jones (1990) and Chi and Reinsel (1989) asserting that the combination of random effects and within-subjects error variances can have a joint AR(1) correlation structure. Recognizing this diversity, we are not concerned here with how the statistical model for a uniform or autoregressive correlation structure has originated. The primary aim of this paper is to illustrate how complex regression models effectively reduce to simple pre–post difference score analyses in the presence of commonly observed autoregressive correlation structures of repeated measurements and reduce to the analysis of OLS slope coefficients under compound symmetry.

¹ For intent-to-treat analyses that include dropouts with shortened treatment exposure, we have proposed that OLS slope coefficients calculated using only the available measurements should be weighted by the total duration of treatment exposure in order to maintain desired test size and power (Overall & Shivakumar, 1999). Without adjusting for shortened duration of treatment for early dropouts, stage 2 tests of significance on means of the subject-specific OLS slope coefficients suffer seriously deficient power (Overall, 1997; Overall, Shobaki & Fiori, 1996). Other authors have also recognized the reduced power associated with dropouts and have proposed weighting subject-specific OLS slope coefficients by variance estimates calculated across the number of available repeated measurements in the individual dropout data patterns (Palta & Cook, 1987). In this paper, we will not dwell further on the problems created by dropouts because calculation of the different change measures is the same as for completers, except for being based on fewer measurements.

2. Linearly weighted composite change scores

To appreciate the similarities and differences among commonly used measures of change in controlled longitudinal studies, it is useful to conceive of ‘slope coefficients’ as time-weighted linear combinations of the repeated measurements. Whereas difference scores have traditionally been conceptualized as measurement variables with issues like reliability and validity taken into consideration, slope coefficients have traditionally been viewed from the perspective of a regression model in which errors of prediction or estimation are the focus and geometric interpretation is common. However, to examine the relationship between difference scores and regression coefficients, it is helpful to place them within the same conceptual frame, in terms of either a regression or measurement model. We believe that the subsequent use of the coefficients as dependent variables for evaluating the significance of treatment effects is more readily viewed from a measurement perspective. In that vein, the linear slope coefficient can be recognized as a repeated measurements generalization of the simple end-point difference score. Both are weighted combinations of the repeated measurements, which weights we relate here to a unified model for regression on time.

Without loss of generality, we take time to be represented in mean-deviation form so that the coded values sum to zero. Although it is not necessary, we will further assume that the actual assessment points are equally spaced across the time domain so that the mean-corrected, equally spaced time codes are proportional to familiar linear orthogonal polynomial coefficients. The same familiar formula for calculating OLS slope coefficients can be used to calculate composite change measures in the form of simple difference scores or as complex GLS slope coefficients by giving different weights to the assessment times on which the repeated measurements are regressed. This notion relies on an integration of measurement and regression models through linear or nonlinear transformations of the time-scale coding. The computation, which is of an OLS regression slope, results in a composite variable b_i in which the repeated measurements are weighted by a linear or nonlinear function of time,

$$
b_i = \frac{\sum xy}{\sum x^2}, \tag{1}
$$


where the xs are weighting coefficients representing the mean-corrected and perhaps transformed time codes, and the ys are mean-corrected repeated measurements for the ith subject. Consistent with the usual assumption of independence, no general covariance structure is specified in this familiar equation for OLS regression coefficients. For clarifying relationships between definitions of change employed in the simple and the more complex GLS regression models, a more general form of the b_i slope coefficients is provided by the following matrix equation in which covariance structures other than independence or compound symmetry can also be accommodated:

$$
b_i = \frac{\mathbf{x}'\mathbf{y}}{\sigma^2\,\mathbf{x}'\mathbf{C}\mathbf{x}}, \tag{2}
$$

where C is the within-groups correlation matrix (or model thereof) for the repeated measurements, and σ² is the within-groups variance, which is assumed to be constant across assessment points. For any given set of repeated measurements, the three different measures of change can be calculated as ‘slope coefficients’ using this equation with different specifications of the time coding in vector x′. In the special case of circularity or compound symmetry in C, equations (1) and (2) are proportional and lead to equivalent tests of the ‘equal slopes hypothesis’. Otherwise, (2) is required, with the x′ vector dependent on the particular correlation structure. It should be noted that the denominators of (1) and (2) are scaling constants that do not depend on the individual subject’s responses, and multiplication of a variable by a scaling constant does not affect parametric tests of significance on means of that variable. Thus, we will assume that σ² is 1 in what follows.

The simple difference scores used for end-point analyses give non-zero weight to only the baseline and the last available observation. For example, consider the x′ vector which is illustrated below for an eight-week controlled clinical trial involving a baseline plus eight subsequent equally spaced follow-up measurements. The end-point difference score is proportional to the slope of a line defined by the two extreme data points on the time scale, with the intermediate measurements given zero weight. For the regression calculations, the y-values are also corrected to the mean of the baseline and final observation, so that those values sum to zero. The absolute values of the two non-zero elements in x′ are arbitrary as long as they sum to zero because the x′ vector is normalized when divided by x′x or x′Cx in (1) or (2):

$$
\mathbf{x}' = [\,-1 \;\; 0 \;\; 0 \;\; 0 \;\; 0 \;\; 0 \;\; 0 \;\; 0 \;\; 1\,]. \tag{3}
$$

The OLS definition of the slope coefficient, representing regression of the complete set of available repeated measurements on their associated time points, is obtained by applying mean-corrected, linearly increasing time coefficients to repeated measurements that are also corrected to their mean across the available time points (equation (1)). For the OLS slope calculations using (2), the matrix C is assumed to be an identity matrix or to have uniform elements satisfying the symmetry assumption. In the case of equally spaced measurements, the time coefficients in x′ increase (or decrease) in equal steps. Again, the absolute magnitudes of values assigned to the linearly increasing elements of the x′ time scale are arbitrary because normalization is provided by x′x or x′Cx in the calculation of OLS slope coefficients using (1) or (2):

$$
\mathbf{x}' = [\,-4 \;\; -3 \;\; -2 \;\; -1 \;\; 0 \;\; 1 \;\; 2 \;\; 3 \;\; 4\,]. \tag{4}
$$
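As a minimal sketch of how equations (3) and (4) define two different composites through the same formula (1), the following Python fragment applies both weight vectors to one subject's scores. The function name `composite_change` and the scores in `y` are hypothetical illustrations, not data or code from the paper.

```python
def composite_change(x, y):
    """Equation (1): weighted composite b = sum(x * y) / sum(x ** 2).

    x holds zero-sum weights (time codes); y is mean-corrected before weighting.
    """
    mean_y = sum(y) / len(y)
    numerator = sum(xi * (yi - mean_y) for xi, yi in zip(x, y))
    return numerator / sum(xi * xi for xi in x)


# Weight vectors taken from equations (3) and (4).
endpoint_x = [-1, 0, 0, 0, 0, 0, 0, 0, 1]
ols_x = [-4, -3, -2, -1, 0, 1, 2, 3, 4]

# Hypothetical scores for one subject: baseline plus eight follow-ups.
y = [50, 46, 45, 41, 40, 38, 35, 33, 30]

endpoint_b = composite_change(endpoint_x, y)  # proportional to y[8] - y[0]
ols_b = composite_change(ols_x, y)            # OLS slope per assessment interval

print(endpoint_b, ols_b)
```

Because the weights sum to zero, subtracting any constant from the scores leaves the numerator unchanged, so the choice of mean correction for y does not alter either composite.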

Finally, the GLS solution for the mixed model equation Y = Xβ + Zb + ε can be obtained using OLS regression of repeated measurements on time codes that have been adjusted to account for serial dependencies among the repeated measurements. The correlations among repeated measurements, or among the residualized within-subjects errors (Davidian & Giltinan, 1995), are frequently modelled by an autoregressive (order 1) matrix in which the magnitude of correlation decreases as a simple exponential function of the temporal separation of the successive measurements, r = g^t, where g is the correlation between adjacent measurements and t is the temporal separation between the ith and jth measurements. If one specifies an AR(1) model for the error structure in a general linear mixed model or generalized estimating equation solution, elements of the matrix model used in calculating tests of significance will conform exactly to that simple exponential equation, regardless of how well it does or does not fit the actual correlation structure of the data. This emphasizes the relevance of examining here how a specified AR(1) model for the correlation structure modulates the weight given successive repeated measurements in defining change. It would be possible to use the observed within-groups covariance matrix for a GLS analysis, in which case one should consider how closely it approximates a true AR(1) or compound symmetry (CS) model in order to understand how the individual repeated measurements are being weighted in defining change. As will be considered later, the AR(1) structure is a special case of a more general parametric family of correlation structures.

The GLS solution for the subject-specific slope coefficients can be obtained using an equation similar in form to those found in Davidian and Giltinan (1995, p. 78), Littell, Milliken, Stroup and Wolfinger (1996, p. 499), or Vonesh and Chinchilli (1997, p. 261),

$$
b_i = (\mathbf{z}'\mathbf{C}^{-1}\mathbf{z})^{-1}\,\mathbf{z}'\mathbf{C}^{-1}\mathbf{y}, \tag{5}
$$

where, for present purposes, we take C to be a matrix model of the within-groups covariance structure of the repeated measurements.² Assuming equal variances, an appropriate model of the pooled within-groups correlation matrix can be used for C in the GLS calculation of subject-specific slope coefficients. For the complete general linear mixed model calculations, the design matrix Z′ is ordinarily structured to include an intercept column, a time-code column, and perhaps columns representing other random effect parameters. However, we are concerned here only with the subject-specific slope coefficients, and the intercept parameter is implicit in the specified mean correction of the x′ and y vectors. For simplicity, define z′ to be a mean-corrected vector of equally spaced time codes (e.g., linear orthogonal polynomial coefficients), and again z′C⁻¹z is a simple scaling constant that can be absorbed into the normalizing denominator of the OLS slope equation. Thus, x′ in (2) for the GLS solution can be represented by:

$$
\mathbf{x}' = \mathbf{z}'\mathbf{C}^{-1}, \tag{6}
$$

where C⁻¹ is the inverse of a matrix model of the within-groups correlations among the repeated measurements and z′ = [−4 −3 −2 −1 0 1 2 3 4]. We use z′ here to distinguish it from the transformed x′ that is the basis for the GLS solution when entered into (2). Equation (5) reduces to (2) with x′ = z′C⁻¹ and the identity CC⁻¹ inserted into the denominator. We suggest modelling the correlation structure as an autoregressive function of the repeated measurements rather than estimating the large number of individual variance/covariance parameters from limited sample data. Davidian and Giltinan (1995), Vonesh and Chinchilli (1997) and others have described complicated iterative methods for refining separate estimates of the within-subjects and between-subjects variance/covariance parameters, but again we are not concerned here with how the correlation structure of the random effects and/or error has been calculated or modelled: it is the implications of that structure for the alternative definitions of change that are the focus of this paper. For understanding the relationships between simple end-point difference scores, OLS and GLS definitions of treatment effects, it is important to appreciate how the linear z′ weights are transformed by (6) into a nonlinear x′ for entry into (2) in response to the autoregressive structure of sequentially obtained repeated measurements. On the other hand, if the elements in C⁻¹ are uniform, then x′ will be proportional to z′.

² A more precise definition of the mixed model error structure would also adjust C for relation to any covariates that are to be included in the stage 2 analysis. The marginal C matrix is thus conceptually similar to the residual error matrix for a fixed effects MANOVA, and it contains both random effect and within-subject error components. The random coefficients model defines b_i as the deviation of the subject-specific slope coefficients about the complete fixed effects model b_i = (Z′C⁻¹Z)⁻¹Z′C⁻¹(y − X′β). However, in considering the case where β = E(b_i), the derivation is trivial.

3. Implications of the correlation structure

Although some degree of serial dependence is usually observed among sequentially obtained measurements, the two most commonly assumed patterns of correlation are ‘autoregressive (order 1)’ and ‘compound symmetry’ (see Littell et al., 1996, p. 92). In reality, these are but two extremes of a theoretical family of correlation patterns defined by the dampened exponential equation r = g^(t^v), where g is the correlation between adjacent measurements, t is the lag separation between the ith and jth measurements, and v reflects the degree of serial dependence (Munoz, Carey, Schouten, Segal and Rosner, 1992). Compound symmetry is modelled by setting v = 0, and autoregressive (order 1) by setting v = 1. We illustrate here the implications of those two theoretically important correlation structures for relationships between end-point gain scores, OLS regression and GLS regression definitions of change in repeated measurements designs.
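The dampened exponential family can be sketched in a few lines of Python. This is an illustrative fragment under the assumption of integer-coded, equally spaced assessments; the function name `damped_exponential_corr` is hypothetical and the value g = 0.917 is the one used in the paper's worked example.

```python
def damped_exponential_corr(g, v, m):
    """m x m correlation matrix whose off-diagonal element is g ** (lag ** v)."""
    return [
        [1.0 if i == j else g ** (abs(i - j) ** v) for j in range(m)]
        for i in range(m)
    ]


g = 0.917  # correlation between adjacent assessments
m = 9      # baseline plus eight follow-ups

compound_symmetry = damped_exponential_corr(g, 0, m)  # v = 0: every lag gives g
ar1 = damped_exponential_corr(g, 1, m)                # v = 1: lag k gives g ** k

print([round(r, 3) for r in compound_symmetry[0]])
print([round(r, 3) for r in ar1[0]])
```

Intermediate values of v between 0 and 1 give correlation patterns that decay with lag more slowly than AR(1) but are not uniform.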

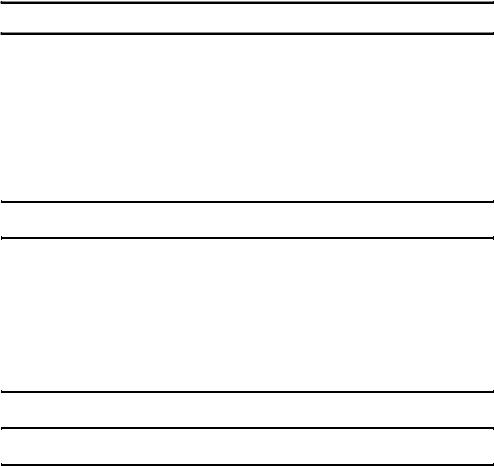

Table 1 shows the autoregressive (order 1) matrix of correlations that is produced by setting v = 1 in the dampened exponential equation r = g^(t^v) to model serial dependencies for a study involving baseline and eight weekly follow-up evaluations.³ The inverse of the correlation matrix is shown in the second section of Table 1. The transformed x′ vector, shown in the lower section of the table, was calculated using (6) for the GLS solution with z′ consisting of equally spaced linear contrast coefficients illustrated in (4). These are the x′ weights that are applied to the (mean-corrected) repeated measurements to define subject-specific GLS ‘slope coefficients’ using (2). The simple difference scores for the end-point analysis and the OLS linear weights are not transformed by the pattern of correlations in defining subject-specific slope coefficients at stage 1 of a two-stage analysis that has the aim of testing the equal change hypothesis; however, the GLS ‘slope coefficients’ do depend on the correlation structure. As shown in Table 1, the inverse of a true autoregressive AR(1) correlation matrix has an elegantly simple quasi-diagonal form. The linear contrast coefficients of the OLS model (equation (4)) are effectively transformed into a simple end-point contrast (3) when z′ is multiplied by autoregressive C⁻¹ of Table 1 to obtain the transformed time scale x′ (equation (6)) for calculation of the GLS ‘slope coefficients’ using (2). The elements of C⁻¹ are uniform under conditions of compound symmetry, so the elements in x′ = z′C⁻¹ of the GLS solution are proportional to the original equally spaced assessment times in that case.

³ The value of g = 0.917 was chosen for this example in order to define an AR(1) matrix in which the baseline–end-point correlation is 0.500, which approximates the commonly observed end-point correlation in clinical trials of antipsychotic drugs in the experience of the present author (JEO). The correlation between adjacent time points g = 0.917 approximates the test–retest reliability of the Brief Psychiatric Rating Scale total score.

Table 1. Change defined by generalized least squares solution for autoregressive data

Autoregressive (order 1) correlation matrix

| Week | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 1.000 | 0.917 | 0.841 | 0.771 | 0.707 | 0.648 | 0.595 | 0.545 | 0.500 |
| 1 | 0.917 | 1.000 | 0.917 | 0.841 | 0.771 | 0.707 | 0.648 | 0.595 | 0.545 |
| 2 | 0.841 | 0.917 | 1.000 | 0.917 | 0.841 | 0.771 | 0.707 | 0.648 | 0.595 |
| 3 | 0.771 | 0.841 | 0.917 | 1.000 | 0.917 | 0.841 | 0.771 | 0.707 | 0.648 |
| 4 | 0.707 | 0.771 | 0.841 | 0.917 | 1.000 | 0.917 | 0.841 | 0.771 | 0.707 |
| 5 | 0.648 | 0.707 | 0.771 | 0.841 | 0.917 | 1.000 | 0.917 | 0.841 | 0.771 |
| 6 | 0.595 | 0.648 | 0.707 | 0.771 | 0.841 | 0.917 | 1.000 | 0.917 | 0.841 |
| 7 | 0.545 | 0.595 | 0.648 | 0.707 | 0.771 | 0.841 | 0.917 | 1.000 | 0.917 |
| 8 | 0.500 | 0.545 | 0.595 | 0.648 | 0.707 | 0.771 | 0.841 | 0.917 | 1.000 |

Inverse autoregressive (order 1) correlation matrix

| Week | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| 0 | 6.285 | −5.764 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| 1 | −5.764 | 11.570 | −5.764 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| 2 | 0.000 | −5.764 | 11.570 | −5.764 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 |
| 3 | 0.000 | 0.000 | −5.764 | 11.570 | −5.764 | 0.000 | 0.000 | 0.000 | 0.000 |
| 4 | 0.000 | 0.000 | 0.000 | −5.764 | 11.570 | −5.764 | 0.000 | 0.000 | 0.000 |
| 5 | 0.000 | 0.000 | 0.000 | 0.000 | −5.764 | 11.570 | −5.764 | 0.000 | 0.000 |
| 6 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | −5.764 | 11.570 | −5.764 | 0.000 |
| 7 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | −5.764 | 11.570 | −5.764 |
| 8 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | 0.000 | −5.764 | 6.285 |

Linear contrast function: x′ = z′C⁻¹

| Week | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|
| x′ | −7.850 | −0.130 | −0.087 | −0.043 | 0.000 | 0.043 | 0.087 | 0.130 | 7.850 |
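The entries of Table 1 can be checked numerically. The sketch below, in plain Python, inverts the AR(1) matrix with a small Gauss-Jordan routine and forms x′ = z′C⁻¹ as in equation (6); it then repeats the product for a compound symmetry matrix. All function names are hypothetical helpers written for this illustration, and the elimination routine is a generic textbook method rather than anything specified by the authors.

```python
def invert(a):
    """Gauss-Jordan inverse of a square matrix with partial pivoting."""
    n = len(a)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def row_times_matrix(z, a):
    """Row vector z' multiplied by matrix a."""
    return [sum(z[i] * a[i][j] for i in range(len(z))) for j in range(len(a[0]))]


g = 0.917                      # adjacent correlation used in Table 1
z = [-4, -3, -2, -1, 0, 1, 2, 3, 4]
m = len(z)

ar1 = [[g ** abs(i - j) for j in range(m)] for i in range(m)]
cs = [[1.0 if i == j else g for j in range(m)] for i in range(m)]

c_inv = invert(ar1)
x_ar1 = row_times_matrix(z, c_inv)      # equation (6) under AR(1)
x_cs = row_times_matrix(z, invert(cs))  # equation (6) under compound symmetry

print([round(v, 3) for v in x_ar1])
print([round(v / x_cs[-1] * 4, 3) for v in x_cs])  # rescaled to the z' metric
```

Under AR(1) almost all weight falls on the first and last assessments, whereas under compound symmetry the rescaled weights reproduce the linear codes of equation (4).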

This illustrates how the GLS solution approaches the simple end-point difference-score analysis as the correlation structure of the repeated measurements approaches or is modelled to have a true AR(1) pattern and why the GLS solution approaches the OLS definition of change when the correlation structure approaches or is modelled as compound symmetry. There is no implication that the actual correlation structure of repeated measurements is likely to correspond precisely to either of these mathematically unique patterns, but understanding the general relationships is important. It is generally expected that some degree of serial correlation will be evident in sequentially obtained repeated measurements, and to that extent the end-point analysis accomplished on simple difference scores will approximate the more complex GLS solution if the observed error covariance matrix is used. When an AR(1) mathematical model of the correlation structure is substituted, as is common in mixed model or generalized estimating equation computations, the subject-specific GLS regression slopes and end-point difference scores will correlate perfectly and provide precisely equivalent tests of the ‘equal change hypothesis’.

A reader of an earlier draft of this paper commented that the high level of correlation in the particular AR(1) matrix that was used for the example in Table 1 is responsible for the almost complete zeroing of weights assigned to the intermediate time points in the vector x′ = z′C⁻¹. The conclusion is correct that an AR(1) matrix generated using a smaller initial value of r produces a product x′ = z′C⁻¹ that is not as clearly a simple difference as that illustrated in Table 1. The general inverse transformation for any true AR(1) correlation matrix is of the following form. Only here do we use the symbol r to represent the specific correlation between adjacent time periods in order for the illustration to be recognized at a glance as a correlation matrix. Throughout the rest of this paper, g is used for the specific correlation between adjacent time periods, and r = g^t is the general element of the autoregressive correlation matrix which varies as a function of t. Elements in the product x′ = z′C⁻¹ depend on the quadratic 1 − 2r + r², which increases towards 1 as r decreases toward zero and decreases toward zero as r increases towards 1:

$$
\begin{bmatrix}
1 & r & r^2 & \cdots & r^{m-1} \\
r & 1 & r & \cdots & r^{m-2} \\
r^2 & r & 1 & \cdots & \vdots \\
\vdots & \vdots & \vdots & \ddots & r \\
r^{m-1} & r^{m-2} & \cdots & r & 1
\end{bmatrix}^{-1}
=
\frac{1}{1 - r^2}
\begin{bmatrix}
1 & -r & 0 & \cdots & 0 \\
-r & 1 + r^2 & -r & \cdots & 0 \\
0 & -r & 1 + r^2 & \ddots & \vdots \\
\vdots & \vdots & \ddots & \ddots & -r \\
0 & 0 & \cdots & -r & 1
\end{bmatrix}.
$$

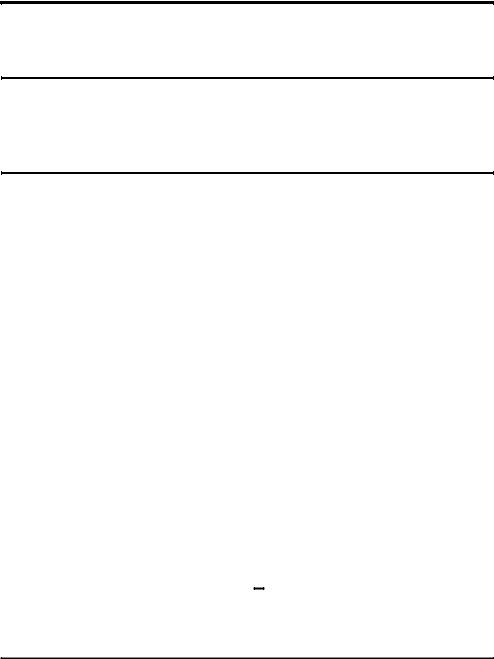
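The tridiagonal form of the AR(1) inverse, and the role of the quadratic 1 − 2r + r², can be confirmed with a short Python sketch. The helper names are hypothetical, and r = 0.6 is an arbitrary illustrative value rather than one taken from the paper.

```python
def ar1_corr(r, m):
    """AR(1) correlation matrix with element r ** |i - j|."""
    return [[r ** abs(i - j) for j in range(m)] for i in range(m)]


def ar1_inverse_closed_form(r, m):
    """Tridiagonal inverse: 1/(1 - r^2) times [1 or 1 + r^2 on diagonal, -r beside it]."""
    scale = 1.0 / (1.0 - r * r)
    inv = [[0.0] * m for _ in range(m)]
    for i in range(m):
        inv[i][i] = scale * (1.0 if i in (0, m - 1) else 1.0 + r * r)
        if i + 1 < m:
            inv[i][i + 1] = inv[i + 1][i] = -r * scale
    return inv


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


r, m = 0.6, 9
inv = ar1_inverse_closed_form(r, m)

# C times its closed-form inverse should be the identity matrix.
product = matmul(ar1_corr(r, m), inv)
max_error = max(abs(product[i][j] - (1.0 if i == j else 0.0))
                for i in range(m) for j in range(m))

# Interior elements of x' = z'C^{-1} equal z_j (1 - 2r + r^2) / (1 - r^2).
z = list(range(-4, 5))
x = [sum(z[i] * inv[i][j] for i in range(m)) for j in range(m)]
interior_factor = (1 - 2 * r + r * r) / (1 - r * r)

print(max_error)
print([round(v, 4) for v in x], round(interior_factor, 4))
```

For equally spaced z′, the two neighbours of each interior time code average to that code, which is why the interior weights collapse to a multiple of 1 − 2r + r² while the end points do not.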

Our concern throughout this paper is with what we consider to be the bottom line for valid statistical inference: Type I error and power. We have previously computed Type I error rates for the two-stage analyses considered here and found them to be quite adequate, so those results need not be reproduced in detail here (Overall, Ahn, Shivakumar & Kalburgi, 1999; Ahn, Tonidandel & Overall, 2000). In view of concerns about the implications of the average level of correlation, we examine the power provided by the transformed x′ = z′C⁻¹ contrast coefficients for autoregressive r = g^t conditions with values of g = 0.95 down to 0.75, assuming the same parallel groups design considered above. For ready comparison with the usual end-point difference scores, each set of resulting x′ coefficients was ‘normalized’ by dividing through by the baseline value to obtain rescaled vectors of contrast coefficients beginning and ending in ±1.0. Table 2 shows the normalized x′ = z′C⁻¹ contrast coefficients that were obtained for AR(1) matrices generated separately for g values in the 0.95–0.75 range. It is apparent that, as the level of correlation decreases in magnitude, the vectors of contrast coefficients do appear less obviously to be simple baseline–end-point differences.

A problem with the integer coding of time in constructing an AR(1) model for lower average levels of correlation in a study of several weeks’ duration is that the correlation between the most separated time points tends to decrease to values below those seen in most actual controlled clinical trials. To allow examination, the baseline–end-point correlation is shown on the right of Table 2 for AR(1) matrices generated using integer coding of assessment times and g values shown on the left.⁴ We believe that it is unlikely that any useful repeated measurements will have baseline–end-point correlations as small as the smaller of those shown here. If we were to observe such, we would probably consider the data to be too unreliable to merit serious analysis. In spite of this, we will next examine the power of alternative tests of the equal change hypothesis under the full range of correlation conditions shown in Table 2 to determine whether the departure of the x′ = z′C⁻¹ contrast coefficients from a clean baseline–end-point difference materially affects the calculated power. Only if power differs appreciably should different inferences follow from end-point analysis and from the complete GLS regression tests of the equal change hypothesis.

Table 2. Normalized contrast coefficients for different autoregressive AR(1) correlation structures

| Adjacent times, g | Normalized contrast coefficients | Baseline–end-point correlation, r = g^t |
|---|---|---|
| 0.95 | (−1.000, −0.007, −0.004, 0.000, 0.000, 0.000, 0.004, 0.007, 1.000) | 0.66 |
| 0.90 | (−1.000, −0.023, −0.015, −0.008, 0.000, 0.008, 0.015, 0.023, 1.000) | 0.43 |
| 0.85 | (−1.000, −0.047, −0.031, −0.016, 0.000, 0.016, 0.031, 0.047, 1.000) | 0.27 |
| 0.80 | (−1.000, −0.075, −0.050, −0.025, 0.000, 0.025, 0.050, 0.075, 1.000) | 0.17 |
| 0.75 | (−1.000, −0.107, −0.072, −0.036, 0.000, 0.036, 0.072, 0.107, 1.000) | 0.10 |
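The normalization described for Table 2 can be sketched as follows. This Python fragment assumes integer-coded weekly assessments and uses the tridiagonal form of the AR(1) inverse to obtain x′ = z′C⁻¹ directly; the function names are hypothetical and the code is an illustration, not the authors' program.

```python
def gls_weights_ar1(z, g):
    """x' = z'C^{-1} for an AR(1) matrix C, using its tridiagonal inverse."""
    m = len(z)
    scale = 1.0 / (1.0 - g * g)
    x = []
    for j in range(m):
        diagonal = 1.0 if j in (0, m - 1) else 1.0 + g * g
        total = diagonal * z[j]
        if j > 0:
            total -= g * z[j - 1]
        if j < m - 1:
            total -= g * z[j + 1]
        x.append(scale * total)
    return x


def normalize(x):
    """Divide through by the magnitude of the baseline weight."""
    return [xi / abs(x[0]) for xi in x]


z = list(range(-4, 5))  # linear contrast coefficients of equation (4)
normalized = {g: normalize(gls_weights_ar1(z, g))
              for g in (0.95, 0.90, 0.85, 0.80, 0.75)}

for g, coefficients in normalized.items():
    endpoint_corr = g ** (len(z) - 1)  # baseline-end-point correlation
    print(g, [round(c, 3) for c in coefficients], round(endpoint_corr, 2))
```

The intermediate weights grow as g falls, while the baseline–end-point correlation printed in the last column drops quickly under integer time coding.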

4. Power

4.1. Power calculations

The power of a test of signiŽcance for the difference between means of a normally distributed response measure in two treatment groups can be obtained as the area that lies beyond a critical Zb value in the unit normal curve. Power is equal to the larger area under the curve if Zb is positive and equal to the smaller area if Zb is negative. ‘Power’ is reduced to the Type I error rate when D = 0 and Zb is accordinglynegative. Overall and Doyle (1994) present equations for calculating Zb for tests of the ‘equal change hypothesis’ using simple end-point change or OLS regression slopes as dependent variables. An equation for calculating power for tests on GLS regression slopes results from substituting x ¢ = z¢C± 1 from (6) into calculation of effect size D in any of the equations presented by those authors. The general power equation is of the form

$$Z_\beta = \Delta\sqrt{\frac{n}{2}} - Z_\alpha \tag{7}$$

where Z_β is the critical z-score delineating an area under the unit normal curve equal to power, Z_α is the critical value corresponding to the desired one-sided or two-sided alpha level, n is the sample size per group, and Δ is the effect size, which depends on the particular definition of change. In order to examine the similarities and differences among simple and more complex definitions of change, a general form of effect size Δ for use in power calculation is given by:

4 There is no requirement that t be integer-coded, as illustrated here, to model the correlation structure ρ = γ^t. If one desires the autoregressive model to fit both the correlation between adjacent measurements and the correlation between the most temporally separated measurements, an appropriate rescaling of the time coding may be required. Take ρ to be the required end-point correlation and γ the correlation between adjacent measurements, and solve ρ = γ^t for the end-point time, t = ln ρ / ln γ. In this case, interpretation of ‘slope coefficients’ as change per unit of actual time is sacrificed, but usefulness as an index or coefficient of relative change for comparison of treatment effects in longitudinal studies may be enhanced. This procedure can be used where the experimental design involves unequal assessment intervals. In that case, elements in the vector z′ should reflect the same unequal spacing of measurements, as should elements in the x′ vector for OLS regression.

$$\Delta = \frac{|x'\delta|}{\sigma\sqrt{x'Cx}}, \tag{8}$$

where x′ is defined as in (3), (4) or (6), δ is the vector of differences between group means, C is here taken as simply the within-groups correlation matrix (or a model thereof) for the repeated measurements, and σ is the within-groups standard deviation, which is assumed to be constant across the repeated measurements. In other mixed model representations, the matrix C is a matrix model of the random effects and/or error covariance structure. Again, we are concerned here with the implications of the specified covariance structure, not with how it evolved.
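As a worked illustration of (7) and (8), consider the simple end-point contrast, whose only non-zero coefficients are −1 at baseline and +1 at the final assessment. The subscripts 0 and T for the baseline and final assessments are notation introduced here. The numerical values (a final mean difference of 0.5σ with zero difference at baseline, a baseline–end-point correlation of 0.43, n = 50 per group, and a one-sided α of 0.05) are chosen purely for illustration.

```latex
\[
x' = (-1, 0, \dots, 0, 1)
\quad\Rightarrow\quad
x'\delta = \delta_T - \delta_0, \qquad
x'Cx = 2\,(1 - \rho_{0T}),
\]
\[
\Delta_{\mathrm{end}}
= \frac{|\delta_T - \delta_0|}{\sigma\sqrt{2\,(1 - \rho_{0T})}}
= \frac{0.5}{\sqrt{2\,(1 - 0.43)}} \approx 0.468,
\]
\[
Z_\beta = \Delta_{\mathrm{end}}\sqrt{\frac{n}{2}} - Z_\alpha
\approx 0.468 \times 5 - 1.645 \approx 0.70 .
\]
```

Because Z_β is positive in this illustration, the corresponding power is the larger area of the unit normal curve, that is, the area lying below Z_β.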

4.2. Power comparisons

If the x′ contrast coefficients are identical for two definitions of change, then the results from stage 2 tests of significance accomplished on the subject-specific rate-of-change parameters should also be identical. Examination of the coefficients shown in Table 2 confirms that the GLS contrast x′ = z′C⁻¹ less clearly defines a simple end-point difference score as the general level of correlation decreases. At issue here is the practical consequence of the apparent differences, which we evaluate by comparing the calculated power for the respective tests of the ‘equal change hypothesis’. A randomized parallel groups design with baseline plus eight weekly follow-up measurements on n = 50 subjects in each of two groups was considered to have either the AR(1) or CS correlational structure. Power was calculated as described in Section 4.1. A treatment mean difference that increased from zero at baseline to 0.5σ_w units in eight equal steps defined the vector δ′ = [0.00 0.063 0.125 0.188 0.251 0.313 0.376 0.438 0.500], with σ_w = 1.0 used for the power calculations. Note that the departure of GLS regression from the OLS regression model for testing the ‘equal change hypothesis’ depends only on the inverse of the error covariance matrix, C⁻¹, not on a particular pattern of monotonically increasing mean differences δ′.

The AR(1) matrices used for these power calculations were generated for correlations between adjacent measurements from γ = 0.95 to 0.75, and the CS matrices had uniform correlations that ranged from ρ = 0.70 to 0.30. This range of power values allows the influence of the different correlation structures on the relative power of the end-point, OLS and GLS tests of the ‘equal change hypothesis’ to be appreciated by comparison. Table 3 shows the power of tests of the equal change hypothesis in the presence of these autoregressive AR(1) and CS correlation structures.
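The design described above can be sketched in a few lines to compare the end-point and OLS contrasts under one AR(1) and one CS structure. The sketch applies (7) and (8) directly; the two-sided α = 0.05, the centred integer coding of the OLS contrast, the particular values γ = 0.90 and ρ = 0.50, and all function names are illustrative assumptions, so the printed values are not claimed to reproduce the tabled ones.

```python
from statistics import NormalDist

def effect_size(x, delta, corr, sigma=1.0):
    """Equation (8): |x'delta| / (sigma * sqrt(x'Cx))."""
    k = len(x)
    num = abs(sum(xi * di for xi, di in zip(x, delta)))
    quad = sum(x[i] * corr[i][j] * x[j] for i in range(k) for j in range(k))
    return num / (sigma * quad ** 0.5)

def power(effect, n, alpha=0.05, two_sided=True):
    """Equation (7): Z_beta = effect * sqrt(n / 2) - Z_alpha; power = Phi(Z_beta)."""
    tail = alpha / 2 if two_sided else alpha  # assumed alpha convention
    z_alpha = NormalDist().inv_cdf(1 - tail)
    return NormalDist().cdf(effect * (n / 2) ** 0.5 - z_alpha)

def ar1(gamma, k=9):
    """AR(1) correlation matrix with entries gamma ** |i - j|."""
    return [[gamma ** abs(i - j) for j in range(k)] for i in range(k)]

def cs(rho, k=9):
    """Compound symmetry: 1 on the diagonal, rho everywhere else."""
    return [[1.0 if i == j else rho for j in range(k)] for i in range(k)]

k, n = 9, 50
delta = [0.00, 0.063, 0.125, 0.188, 0.251, 0.313, 0.376, 0.438, 0.500]
contrasts = {
    "end-point": [-1.0] + [0.0] * (k - 2) + [1.0],
    "OLS": [t - (k - 1) / 2 for t in range(k)],  # centred time codes
}
structures = {"AR(1)": ar1(0.90), "CS": cs(0.50)}

results = {}
for s_name, corr in structures.items():
    results[s_name] = {
        c_name: power(effect_size(x, delta, corr), n)
        for c_name, x in contrasts.items()
    }
    for c_name, p in results[s_name].items():
        print(f"{s_name:6s} {c_name:10s} power = {p:.3f}")
```

Only the ordering of the two contrasts within each structure is of interest here; changing the assumed α shifts all of the power values but not that ordering, because the ordering is determined by Δ alone.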

The primary motivation for presenting results from the power calculations is to illustrate how closely the more complex GLS regression solution approaches a simple end-point analysis under a realistic range of autoregressive conditions. Given a monotonically increasing treatment effect across time, the analysis of simple baseline–end-point difference scores produces power for tests of the equal change hypothesis that is greater than power for the complete OLS regression solution in the presence of AR(1) correlation structure. The OLS regression solution produces greater power than the simple end-point difference-score analysis under conditions of compound symmetry. The GLS solution is equivalent to OLS under conditions of a uniform CS correlation