- Contents
- Preface
- 1 Spread spectrum signals and systems
  - 1.1 Basic definition
  - 1.2 Historical sketch
- 2 Classical reception problems and signal design
  - 2.1 Gaussian channel, general reception problem and optimal decision rules
  - 2.2 Binary data transmission (deterministic signals)
  - 2.3 M-ary data transmission: deterministic signals
  - 2.4 Complex envelope of a bandpass signal
  - 2.5 M-ary data transmission: noncoherent signals
  - 2.6 Trade-off between orthogonal-coding gain and bandwidth
  - 2.7 Examples of orthogonal signal sets
    - 2.7.1 Time-shift coding
    - 2.7.2 Frequency-shift coding
    - 2.7.3 Spread spectrum orthogonal coding
  - 2.8 Signal parameter estimation
    - 2.8.1 Problem statement and estimation rule
    - 2.8.2 Estimation accuracy
  - 2.9 Amplitude estimation
  - 2.10 Phase estimation
  - 2.11 Autocorrelation function and matched filter response
  - 2.12 Estimation of the bandpass signal time delay
    - 2.12.1 Estimation algorithm
    - 2.12.2 Estimation accuracy
  - 2.13 Estimation of carrier frequency
  - 2.14 Simultaneous estimation of time delay and frequency
  - 2.15 Signal resolution
  - 2.16 Summary
  - Problems
  - Matlab-based problems
- 3 Merits of spread spectrum
  - 3.1 Jamming immunity
    - 3.1.1 Narrowband jammer
    - 3.1.2 Barrage jammer
  - 3.2 Low probability of detection
  - 3.3 Signal structure secrecy
  - 3.4 Electromagnetic compatibility
  - 3.5 Propagation effects in wireless systems
    - 3.5.1 Free-space propagation
    - 3.5.2 Shadowing
    - 3.5.3 Multipath fading
    - 3.5.4 Performance analysis
  - 3.6 Diversity
    - 3.6.1 Combining modes
    - 3.6.2 Arranging diversity branches
  - 3.7 Multipath diversity and RAKE receiver
  - Problems
  - Matlab-based problems
- 4 Multiuser environment: code division multiple access
  - 4.1 Multiuser systems and the multiple access problem
  - 4.2 Frequency division multiple access
  - 4.3 Time division multiple access
  - 4.4 Synchronous code division multiple access
  - 4.5 Asynchronous CDMA
  - 4.6 Asynchronous CDMA in the cellular networks
    - 4.6.1 The resource reuse problem and cellular systems
    - 4.6.2 Number of users per cell in asynchronous CDMA
  - Problems
  - Matlab-based problems
- 5 Discrete spread spectrum signals
  - 5.1 Spread spectrum modulation
  - 5.2 General model and categorization of discrete signals
  - 5.3 Correlation functions of APSK signals
  - 5.4 Calculating correlation functions of code sequences
  - 5.5 Correlation functions of FSK signals
  - 5.6 Processing gain of discrete signals
  - Problems
  - Matlab-based problems
- 6 Spread spectrum signals for time measurement, synchronization and time-resolution
  - 6.1 Demands on ACF: revisited
  - 6.2 Signals with continuous frequency modulation
  - 6.3 Criterion of good aperiodic ACF of APSK signals
  - 6.4 Optimization of aperiodic PSK signals
  - 6.5 Perfect periodic ACF: minimax binary sequences
  - 6.6 Initial knowledge on finite fields and linear sequences
    - 6.6.1 Definition of a finite field
    - 6.6.2 Linear sequences over finite fields
    - 6.6.3 m-sequences
  - 6.7 Periodic ACF of m-sequences
  - 6.8 More about finite fields
  - 6.9 Legendre sequences
  - 6.10 Binary codes with good aperiodic ACF: revisited
  - 6.11 Sequences with perfect periodic ACF
    - 6.11.1 Binary non-antipodal sequences
    - 6.11.2 Polyphase codes
    - 6.11.3 Ternary sequences
  - 6.12 Suppression of sidelobes along the delay axis
    - 6.12.1 Sidelobe suppression filter
    - 6.12.2 SNR loss calculation
  - 6.13 FSK signals with optimal aperiodic ACF
  - Problems
  - Matlab-based problems
- 7 Spread spectrum signature ensembles for CDMA applications
  - 7.1 Data transmission via spread spectrum
    - 7.1.1 Direct sequence spreading: BPSK data modulation and binary signatures
    - 7.1.2 DS spreading: general case
    - 7.1.3 Frequency hopping spreading
  - 7.2 Designing signature ensembles for synchronous DS CDMA
    - 7.2.1 Problem formulation
    - 7.2.2 Optimizing signature sets in minimum distance
    - 7.2.3 Welch-bound sequences
  - 7.3 Approaches to designing signature ensembles for asynchronous DS CDMA
  - 7.4 Time-offset signatures for asynchronous CDMA
  - 7.5 Examples of minimax signature ensembles
    - 7.5.1 Frequency-offset binary m-sequences
    - 7.5.2 Gold sets
    - 7.5.3 Kasami sets and their extensions
    - 7.5.4 Kamaletdinov ensembles
  - Problems
  - Matlab-based problems
- 8 DS spread spectrum signal acquisition and tracking
  - 8.1 Acquisition and tracking procedures
  - 8.2 Serial search
    - 8.2.1 Algorithm model
    - 8.2.2 Probability of correct acquisition and average number of steps
    - 8.2.3 Minimizing average acquisition time
  - 8.3 Acquisition acceleration techniques
    - 8.3.1 Problem statement
    - 8.3.2 Sequential cell examining
    - 8.3.3 Serial-parallel search
    - 8.3.4 Rapid acquisition sequences
  - 8.4 Code tracking
    - 8.4.1 Delay estimation by tracking
    - 8.4.2 Early–late DLL discriminators
    - 8.4.3 DLL noise performance
  - Problems
  - Matlab-based problems
- 9 Channel coding in spread spectrum systems
  - 9.1 Preliminary notes and terminology
  - 9.2 Error-detecting block codes
    - 9.2.1 Binary block codes and detection capability
    - 9.2.2 Linear codes and their polynomial representation
    - 9.2.3 Syndrome calculation and error detection
    - 9.2.4 Choice of generator polynomials for CRC
  - 9.3 Convolutional codes
    - 9.3.1 Convolutional encoder
    - 9.3.2 Trellis diagram, free distance and asymptotic coding gain
    - 9.3.3 The Viterbi decoding algorithm
    - 9.3.4 Applications
  - 9.4 Turbo codes
    - 9.4.1 Turbo encoders
    - 9.4.2 Iterative decoding
    - 9.4.3 Performance
    - 9.4.4 Applications
  - 9.5 Channel interleaving
  - Problems
  - Matlab-based problems
- 10 Some advancements in spread spectrum systems development
  - 10.1 Multiuser reception and suppressing MAI
    - 10.1.1 Optimal (ML) multiuser rule for synchronous CDMA
    - 10.1.2 Decorrelating algorithm
    - 10.1.3 Minimum mean-square error detection
    - 10.1.4 Blind MMSE detector
    - 10.1.5 Interference cancellation
    - 10.1.6 Asynchronous multiuser detectors
  - 10.2 Multicarrier modulation and OFDM
    - 10.2.1 Multicarrier DS CDMA
    - 10.2.2 Conventional MC transmission and OFDM
    - 10.2.3 Multicarrier CDMA
    - 10.2.4 Applications
  - 10.3 Transmit diversity and space–time coding in CDMA systems
    - 10.3.1 Transmit diversity and the space–time coding problem
    - 10.3.2 Efficiency of transmit diversity
    - 10.3.3 Time-switched space–time code
    - 10.3.4 Alamouti space–time code
    - 10.3.5 Transmit diversity in spread spectrum applications
  - Problems
  - Matlab-based problems
- 11 Examples of operational wireless spread spectrum systems
  - 11.1 Preliminary remarks
  - 11.2 Global positioning system
    - 11.2.1 General system principles and architecture
    - 11.2.2 GPS ranging signals
    - 11.2.3 Signal processing
    - 11.2.4 Accuracy
    - 11.2.5 GLONASS and GNSS
    - 11.2.6 Applications
  - 11.3 Air interfaces cdmaOne (IS-95) and cdma2000
    - 11.3.1 Introductory remarks
    - 11.3.2 Spreading codes of IS-95
    - 11.3.3 Forward link channels of IS-95
      - 11.3.3.1 Pilot channel
      - 11.3.3.2 Synchronization channel
      - 11.3.3.3 Paging channels
      - 11.3.3.4 Traffic channels
      - 11.3.3.5 Forward link modulation
      - 11.3.3.6 MS processing of forward link signal
    - 11.3.4 Reverse link of IS-95
      - 11.3.4.1 Reverse link traffic channel
      - 11.3.4.2 Access channel
      - 11.3.4.3 Reverse link modulation
    - 11.3.5 Evolution of air interface cdmaOne to cdma2000
  - 11.4 Air interface UMTS
    - 11.4.1 Preliminaries
    - 11.4.2 Types of UMTS channels
    - 11.4.3 Dedicated physical uplink channels
    - 11.4.4 Common physical uplink channels
    - 11.4.5 Uplink channelization codes
    - 11.4.6 Uplink scrambling
    - 11.4.7 Mapping downlink transport channels to physical channels
    - 11.4.8 Downlink physical channels format
    - 11.4.9 Downlink channelization codes
    - 11.4.10 Downlink scrambling codes
    - 11.4.11 Synchronization channel
      - 11.4.11.1 General structure
      - 11.4.11.2 Primary synchronization code
      - 11.4.11.3 Secondary synchronization code
- References
- Index

292 |

Spread Spectrum and CDMA |


code rate $R_c$ being measured in bits per symbol. For the code of Example 9.3.1, $R_c = 1/2$ and $d_f = 5$, so that $G_a = 2.5$, or about 4 dB. We recall once again that $G_a$ is derived for the AWGN channel (not the BSC!), in other words, for the case of soft decoding. Hard decoding degrades this figure by 2–3 dB depending on code parameters and symbol SNR [31,33].
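The arithmetic can be checked in a few lines. This is a minimal sketch assuming the relation $G_a = R_c d_f$, which is what the quoted numbers imply (the defining equation precedes this excerpt); the function name `asymptotic_coding_gain` is illustrative only.

```python
import math


def asymptotic_coding_gain(code_rate, free_distance):
    """G_a = R_c * d_f, valid for soft-decision ML decoding on the AWGN channel."""
    return code_rate * free_distance


ga = asymptotic_coding_gain(0.5, 5)   # code of Example 9.3.1: R_c = 1/2, d_f = 5
ga_db = 10 * math.log10(ga)           # convert the power ratio to decibels
print(f"G_a = {ga} ({ga_db:.2f} dB)")  # G_a = 2.5 (3.98 dB)
```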

9.3.3 The Viterbi decoding algorithm

As was mentioned, among the reasons for the great popularity of convolutional codes, the existence of a feasible decoding algorithm is of special importance. Let us begin with the following statement.

Proposition 9.3.1. The ML hard error-correction decoding of a binary code is equivalent to the minimum Hamming distance rule:

$$d_H(\hat{u}, y) = \min_{u \in U} d_H(u, y) \;\Rightarrow\; \hat{u} \text{ is declared the received word} \qquad (9.9)$$

As one can see, the rule is very similar to (2.3) with a single change: in the case of the BSC the Hamming distance replaces the Euclidean one, which is adequate for the AWGN channel. To prove (9.9), it is enough to note that (9.1), rewritten as

$$p(y|u) = p^{d_H(u,y)}(1-p)^{n-d_H(u,y)} = (1-p)^n \left(\frac{p}{1-p}\right)^{d_H(u,y)}$$

is nothing but the BSC transition probability, i.e. the probability that the sent code vector u of length n is transformed by the BSC into the binary observation y. Since the crossover probability of the BSC satisfies p < 0.5, the ratio p/(1 − p) is less than one, so the transition probability p(y|u) is a decreasing function of the Hamming distance from the observation y at the BSC output to the code vector u. Therefore, the ML codeword $\hat{u}$ is the one closest to y in Hamming distance.

A direct realization of (9.9) for an arbitrary code requires comparing M Hamming distances from y to all codewords. Since M is typically quite large, such a solution might appear infeasible. By contrast, thanks to the beneficial structure of convolutional codes, ML decoding is not a big technological challenge, at least if the constraint length is moderate.
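For a toy code the brute-force realization of (9.9) takes only a few lines, and it also lets us check Proposition 9.3.1 numerically: maximizing the BSC likelihood and minimizing the Hamming distance select the same codeword for every possible observation. The four-word (5,2) code `TOY_CODE` and the crossover probability p = 0.1 are hypothetical choices made purely for illustration.

```python
from itertools import product

# Hypothetical (5,2) linear code, used only for illustration.
TOY_CODE = [(0, 0, 0, 0, 0), (1, 1, 1, 0, 0), (0, 0, 1, 1, 1), (1, 1, 0, 1, 1)]


def hamming(a, b):
    """Number of positions in which two binary words differ."""
    return sum(x != y for x, y in zip(a, b))


def bsc_likelihood(y, u, p):
    """p(y|u) = p^d (1-p)^(n-d) for a BSC with crossover probability p."""
    d, n = hamming(u, y), len(u)
    return p ** d * (1 - p) ** (n - d)


def ml_decode(y, code, p):
    """Brute-force ML decision: the codeword maximizing the BSC likelihood."""
    return max(code, key=lambda u: bsc_likelihood(y, u, p))


def min_distance_decode(y, code):
    """Rule (9.9): the codeword at minimum Hamming distance from y."""
    return min(code, key=lambda u: hamming(u, y))


# Compare both rules over all 2^5 possible BSC outputs (M = 4 comparisons each).
agree = all(
    ml_decode(y, TOY_CODE, 0.1) == min_distance_decode(y, TOY_CODE)
    for y in product((0, 1), repeat=5)
)
print(agree)  # True
```

The cost of each decision grows linearly with M, which is exactly what becomes prohibitive for long codes.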

The Viterbi decoding procedure implements the ML strategy in a recurrent, step-by-step form, searching for the path on the trellis diagram closest to the binary observation y. Every new decoding step starts with the arrival of the next group of n observation symbols. At the ith step the decoder calculates the distance of the n incoming observation symbols from every branch of the trellis diagram and increments the distances of all paths calculated over the $i-1$ previous steps. One could compute the distances for an arbitrary code in the same way as new observation symbols arrive, but it is the recurrent nature of a convolutional code that makes this routine computationally economical, since many paths can be discarded immediately at each step.

Channel coding in spread spectrum systems |


293 |

[Figure 9.8 labels: nodes B and C at step i – 1, node A at step i; steps i – 1, i, i + 1]

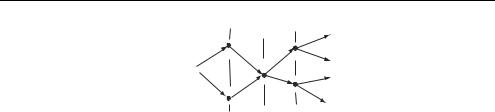

Figure 9.8 Paths going through node A at the ith step

Consider all paths passing through a fixed node A at the ith step, as shown in Figure 9.8. The continuation of any path after the ith step does not depend on the route by which it arrived at A, so different paths reaching A may continue in exactly the same way afterwards. But this means that, of all the paths going through A and continuing the same way after the ith step, the one having minimum distance from y up to the ith step will remain closest to y forever, since a common continuation adds an equal contribution to all distances! Why, then, go on computing distances for the rest as soon as it is clear that they have no chance of finally appearing closest to the observation? Instead we discard them, retaining only the one reaching node A with minimum distance. The latter is called a survivor, and for the time being we assume that there is only a single survivor for every node of the trellis diagram at the ith step (see the comments below). The current distance of the survivor of node A from y, i.e. the distance calculated over all symbols observed up to the ith step, is called the node metric of A.

Now recall that only two branches enter any node; Figure 9.8 shows this for node A. The two branches enter it from two nodes B and C of the previous step and, therefore, continue the survivors of B and C. We can calculate the distances of the two paths reaching A simply by measuring the branch metrics, i.e. the distances of the branches from the arriving group of n observed symbols, and adding them to the node metrics of B and C. The path with the smaller distance is declared the survivor of A and is recorded in memory along with its distance (node metric), while the other is discarded. Having performed these operations for all nodes of the trellis diagram, the decoder proceeds to the next step.

To summarize briefly, the Viterbi decoder calculates branch metrics at every step, adds them to all the previously accumulated node metrics and then sifts out the more distant of the two paths leading to every node. Since there are $2^{c-1}$ nodes (i.e. register states) altogether, the complexity of the decoder is determined only by the constraint length c and remains fixed regardless of the theoretically unlimited number of codewords (paths).
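The add-compare-select recursion just summarized can be sketched as a small hard-decision decoder. The sketch assumes generator taps (1, 1, 1) and (1, 0, 1), i.e. the octal (7,5) code; this is consistent with $c = 3$, $d_f = 5$ and with the codeword 111011 . . . that Example 9.3.4 associates with data 100 . . ., but the taps of Example 9.3.1 are not restated in this excerpt, so treat them as an assumption. Ties are resolved deterministically (the first candidate examined wins) rather than by a coin flip, and the full survivor history is stored, which a practical decoder would truncate.

```python
# Hard-decision Viterbi decoder sketch for a rate-1/2 convolutional code.
# Assumption: taps (1,1,1) and (1,0,1), i.e. the octal (7,5) code, c = 3.
C = 3                          # constraint length c
N_STATES = 2 ** (C - 1)        # trellis nodes per step
TAPS = ((1, 1, 1), (1, 0, 1))  # one tap vector per output symbol
INF = float("inf")


def branch(state, bit):
    """Code symbols on the branch leaving `state` for input `bit`, and the next state.

    A state packs the two previous data bits as (u[t-1] << 1) | u[t-2].
    """
    reg = (bit, state >> 1, state & 1)  # register contents u[t], u[t-1], u[t-2]
    symbols = tuple(sum(t * r for t, r in zip(taps, reg)) % 2 for taps in TAPS)
    return symbols, (bit << 1) | (state >> 1)


def encode(bits):
    state, out = 0, []
    for b in bits:
        symbols, state = branch(state, b)
        out.extend(symbols)
    return out


def viterbi_decode(y):
    """Return (data bits, Hamming distance) of the trellis path closest to y."""
    metric = [0] + [INF] * (N_STATES - 1)  # encoder starts in the zero state
    paths = [[] for _ in range(N_STATES)]  # survivor data bits for each node
    for i in range(0, len(y), 2):          # one group of n = 2 symbols per step
        group = y[i:i + 2]
        new_metric = [INF] * N_STATES
        new_paths = [None] * N_STATES
        for s in range(N_STATES):
            if metric[s] == INF:           # node not reachable yet (transient)
                continue
            for b in (0, 1):
                symbols, nxt = branch(s, b)
                bm = sum(a != r for a, r in zip(symbols, group))  # branch metric
                cand = metric[s] + bm                              # add
                if cand < new_metric[nxt]:                         # compare, select
                    new_metric[nxt] = cand
                    new_paths[nxt] = paths[s] + [b]
        metric, paths = new_metric, new_paths
    best = min(range(N_STATES), key=metric.__getitem__)
    return paths[best], metric[best]


y = [int(ch) for ch in "01001100000000"]  # observation of Example 9.3.4, 7 groups
bits, dist = viterbi_decode(y)
print(bits, dist)  # [1, 0, 0, 0, 0, 0, 0] 2
```

Only `N_STATES` metrics and survivors are updated per step, however long the observation is, which is the complexity argument made above.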

Coming back to our assumption of a unique survivor for every node, note that since the Hamming distance is an integer, there is always a chance that two paths leading to the same node have equal current distances from y. Different strategies are possible to resolve an ambiguity of this sort. One of them is simply a random choice: assign tails of a fair coin to one of the paths and declare this one the survivor if the coin really lands tails. This certainly violates decoding optimality, although the accompanying energy loss is typically insignificant. Alternatively, both competing paths may be declared survivors and kept in memory until further steps remove the ambiguity. The latter option preserves decoding optimality at the cost of extra memory.
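Both tie-resolution strategies can be written as selection rules over the candidates entering one node. In this sketch each candidate is a hypothetical `(metric, path)` pair, where `metric` is the predecessor's node metric plus the branch metric, and Python's `random.Random.choice` stands in for the fair coin; the function names are illustrative.

```python
import random


def select_coin_flip(candidates, rng):
    """Keep a single survivor; equal metrics are settled by a fair random choice."""
    best = min(metric for metric, _ in candidates)
    tied = [c for c in candidates if c[0] == best]
    return rng.choice(tied)


def select_keep_all(candidates):
    """Keep every candidate with the minimum metric (more memory, no coin)."""
    best = min(metric for metric, _ in candidates)
    return [c for c in candidates if c[0] == best]


rng = random.Random(1)
via_b, via_c = (3, "via B"), (3, "via C")  # two paths reaching A with equal metric
print(select_coin_flip([via_b, via_c], rng) in (via_b, via_c))  # True
print(select_keep_all([via_b, via_c]))  # [(3, 'via B'), (3, 'via C')]
```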


Example 9.3.4. Consider decoding the observation y = 0100110000 . . . for the code of Example 9.3.1. Figure 9.9 illustrates the process: node metrics are placed immediately beside the nodes, and frames contain the pairs of observation symbols arriving at the current step. The decoder starts the process assuming the zero (i.e. (00)) initial state of the encoder register. The first $c - 1 = 2$ steps correspond to the transient behaviour of the encoder register, when only one branch enters every state (see Figure 9.6) and so all paths are survivors. At the first step the decoder compares the first group of $n = 2$ observation symbols with the two branches emanating from the state (00). Since both are at Hamming distance 1 from the observed symbol group 01, the solid and the dashed branch both obtain metric 1, shown near the branches. Consequently, the node metrics of the two nodes at which the branches arrive both become equal to one. At the next step the distance is measured between the second group of observation symbols 00 and the two pairs of branches emanating from nodes (00) and (10), resulting in the metrics labelling the branches. Added to the node metrics of the previous step, they update the metrics of nodes (00) and (10) and produce the metrics of two more nodes, (01) and (11). Starting with the third step, two branches enter every node of the trellis diagram of Figure 9.6, meaning that the decoder must decide which of them is the survivor. To avoid clutter, Figure 9.9 does not show branch metrics from this step on. As can be seen, two paths lead to the node (00) at the third step. Their distances from the observed symbols 010011 are 3 and 2, respectively. The first is not a survivor and the decoder discards it along with its metric, so it is crossed out and absent from the plot of the next step. The second is a survivor and is recorded in memory with its metric until the next step. In the same way, the decoder finds the survivors for the rest of the nodes. The decoding proceeds similarly at further steps, storing in memory only $2^{c-1} = 4$ survivors, and every plot of Figure 9.9 depicts only the paths declared survivors at the previous step.

[Figure 9.9 panels: trellis states (00), (10), (01), (11) over decoding steps 1 to 9, with the arriving observation pairs framed and node metrics beside the nodes]

Figure 9.9 Dynamics of decoding for the convolutional code of Example 9.3.1
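The node metrics quoted for the first three steps of Example 9.3.4 can be reproduced by tracking metrics alone. The sketch again assumes the (7,5) taps (1, 1, 1) and (1, 0, 1), which are not restated in this excerpt, and labels states as in the text, (u[t-1] u[t-2]), so that the branch leaving (00) with data bit 1 arrives at (10). The name `node_metrics_per_step` is hypothetical.

```python
TAPS = ((1, 1, 1), (1, 0, 1))  # assumed (7,5) code, constraint length c = 3
INF = float("inf")


def branch(state, bit):
    """Branch symbols and next state; state = (u[t-1] << 1) | u[t-2]."""
    reg = (bit, state >> 1, state & 1)
    symbols = tuple(sum(t * r for t, r in zip(taps, reg)) % 2 for taps in TAPS)
    return symbols, (bit << 1) | (state >> 1)


def node_metrics_per_step(y):
    """Node metrics after every step, keyed by labels such as '(10)'."""
    metric = {0: 0}                      # only the zero state at the start
    history = []
    for i in range(0, len(y), 2):
        group = y[i:i + 2]
        new = {}
        for s, m in metric.items():
            for b in (0, 1):
                symbols, nxt = branch(s, b)
                cand = m + sum(a != r for a, r in zip(symbols, group))
                if cand < new.get(nxt, INF):   # keep the survivor's metric only
                    new[nxt] = cand
        metric = new
        history.append({f"({s >> 1}{s & 1})": m for s, m in sorted(metric.items())})
    return history


for step, metrics in enumerate(node_metrics_per_step([int(ch) for ch in "010011"]), 1):
    print(step, metrics)
# 1 {'(00)': 1, '(10)': 1}
# 2 {'(00)': 1, '(01)': 2, '(10)': 3, '(11)': 2}
# 3 {'(00)': 2, '(01)': 3, '(10)': 1, '(11)': 3}
```

At step 3 the node (00) receives candidates 1 + 2 = 3 (from (00)) and 2 + 0 = 2 (from (01)), matching the distances 3 and 2 given in the text.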

At step 7 the decoder first runs into the problem of ambiguity: two paths arrive at the node (01) with equal distances, and the same occurs with the node (11). The choice of survivors illustrated by the figure reflects one particular outcome of flipping a fair coin. The same events happen at steps 8 and 9. The reader is encouraged (Problem 9.19) to verify that any alternative resolution of the ambiguity does not change the final result of decoding, except for the step number at which the decoded bits are first released (see below).

The situation after the ninth step is very important: all paths merge with each other up to the seventh symbol group. Whatever happens afterwards, this part of all the merging paths will remain common forever, meaning that the data bits corresponding to it may be released right away as decoded. Hence, the decoder outputs the decoded data 1000000. Comparing the corresponding codeword u = 11101100000000 . . . with the observation y = 0100110000 . . . , we note that the Hamming distance between them is 2; so if the transmitted word really was the one declared by the decoder, two errors were corrected, in full agreement with the free distance $d_f = 5$, which allows correcting up to $\lfloor (d_f - 1)/2 \rfloor = 2$ errors. Similar situations will arise at further steps, allowing the decoder to release decoded bits while it processes the observation symbol stream.
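Releasing the merged part amounts to taking the longest common prefix of all survivors' data bits. A minimal sketch follows; the four survivor histories in the usage lines are hypothetical, chosen only so that they merge over the first seven bits as in the example, and are not read from Figure 9.9.

```python
def merged_prefix(survivors):
    """Leading data bits shared by every survivor; these can be released as decoded."""
    prefix = []
    for column in zip(*survivors):       # walk all survivors bit by bit
        if any(bit != column[0] for bit in column):
            break                        # first position where survivors disagree
        prefix.append(column[0])
    return prefix


# Hypothetical survivors of the four nodes after nine steps.
survivors = [
    [1, 0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0, 0, 0, 1, 0],
    [1, 0, 0, 0, 0, 0, 0, 0, 1],
    [1, 0, 0, 0, 0, 0, 0, 1, 1],
]
print(merged_prefix(survivors))  # [1, 0, 0, 0, 0, 0, 0]
```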

Certainly, outputting the decoded data at the random moments when survivors merge, as in the example above, looks impractical, and a more regularly arranged procedure is desirable. It has been repeatedly verified by experiments and simulation that at the ith decoding step the merged part of all survivors almost always extends at least up to data bit number $i - 5c$, so the decision on every bit may be output regularly with a delay of $5c$ steps [94].
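A fixed-delay variant replaces waiting for a merge: after step i the decoder emits data bit number i − D of the best current survivor, and flushes the remaining bits when the observation ends. The sketch assumes the (7,5) code again, $D = 5c = 15$, a terminated 20-bit hypothetical data block and two deliberately flipped channel symbols; for simplicity it stores full survivor histories, whereas a practical decoder would keep only the last D bits of each.

```python
# Fixed-delay hard-decision Viterbi decoding (sketch).
# Assumption: rate-1/2 (7,5) code, taps (1,1,1) and (1,0,1), c = 3.
C = 3
N_STATES = 2 ** (C - 1)
TAPS = ((1, 1, 1), (1, 0, 1))
INF = float("inf")


def branch(state, bit):
    """Branch symbols and next state; state = (u[t-1] << 1) | u[t-2]."""
    reg = (bit, state >> 1, state & 1)
    symbols = tuple(sum(t * r for t, r in zip(taps, reg)) % 2 for taps in TAPS)
    return symbols, (bit << 1) | (state >> 1)


def encode(bits):
    state, out = 0, []
    for b in bits:
        symbols, state = branch(state, b)
        out.extend(symbols)
    return out


def viterbi_fixed_delay(y, delay):
    """Emit data bit number i - delay after step i, then flush the rest at the end."""
    metric = [0] + [INF] * (N_STATES - 1)
    paths = [[] for _ in range(N_STATES)]
    decided = []
    for step, i in enumerate(range(0, len(y), 2), start=1):
        group = y[i:i + 2]
        new_metric, new_paths = [INF] * N_STATES, [None] * N_STATES
        for s in range(N_STATES):
            if metric[s] == INF:
                continue
            for b in (0, 1):
                symbols, nxt = branch(s, b)
                cand = metric[s] + sum(a != r for a, r in zip(symbols, group))
                if cand < new_metric[nxt]:
                    new_metric[nxt], new_paths[nxt] = cand, paths[s] + [b]
        metric, paths = new_metric, new_paths
        if step > delay:                  # best survivor decides an old bit
            best = min(range(N_STATES), key=metric.__getitem__)
            decided.append(paths[best][step - 1 - delay])
    best = min(range(N_STATES), key=metric.__getitem__)
    decided.extend(paths[best][len(decided):])  # flush the final `delay` bits
    return decided


data = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 1, 0, 0]  # last two: tail
rx = encode(data)
rx[2] ^= 1    # two channel errors, well separated
rx[20] ^= 1
print(viterbi_fixed_delay(rx, 5 * C) == data)  # True
```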

A very significant feature of convolutional codes that makes them even more attractive is the comparative simplicity of implementing soft decoding. In the general case of a block code with M words, soft decoding means direct calculation of M Euclidean distances or correlations, since no algebraic tricks like syndrome decoding are available. With a