- •Getting Started

- •Product Description

- •Key Features

- •Configuration Notes

- •Related Products

- •Compilability

- •Image Import and Export

- •Introduction

- •Step 1: Read and Display an Image

- •Step 2: Check How the Image Appears in the Workspace

- •Step 3: Improve Image Contrast

- •Step 4: Write the Image to a Disk File

- •Step 5: Check the Contents of the Newly Written File

- •Image Enhancement and Analysis

- •Introduction

- •Step 1: Read Image

- •Step 2: Use Morphological Opening to Estimate the Background

- •Step 3: View the Background Approximation as a Surface

- •Step 4: Subtract the Background Image from the Original Image

- •Step 5: Increase the Image Contrast

- •Step 6: Threshold the Image

- •Step 7: Identify Objects in the Image

- •Step 8: Examine One Object

- •Step 9: View All Objects

- •Step 10: Compute Area of Each Object

- •Step 11: Compute Area-based Statistics

- •Step 12: Create Histogram of the Area

- •Getting Help

- •Product Documentation

- •Image Processing Examples

- •MATLAB Newsgroup

- •Acknowledgments

- •Introduction

- •Images in MATLAB

- •Expressing Image Locations

- •Pixel Indices

- •Spatial Coordinates

- •Intrinsic Coordinates

- •World Coordinates

- •Image Types in the Toolbox

- •Overview of Image Types

- •Binary Images

- •Indexed Images

- •Grayscale Images

- •Truecolor Images

- •Converting Between Image Types

- •Converting Between Image Classes

- •Overview of Image Class Conversions

- •Losing Information in Conversions

- •Converting Indexed Images

- •Working with Image Sequences

- •Overview of Toolbox Functions That Work with Image Sequences

- •Process Image Sequences

- •Process Multi-Frame Image Arrays

- •Image Arithmetic

- •Overview of Image Arithmetic Functions

- •Image Arithmetic Saturation Rules

- •Nesting Calls to Image Arithmetic Functions

- •Getting Information About a Graphics File

- •Reading Image Data

- •Writing Image Data to a File

- •Overview

- •Specifying Format-Specific Parameters

- •Reading and Writing Binary Images in 1-Bit Format

- •Determining the Storage Class of the Output File

- •Converting Between Graphics File Formats

- •Working with DICOM Files

- •Overview of DICOM Support

- •Reading Metadata from a DICOM File

- •Handling Private Metadata

- •Creating Your Own Copy of the DICOM Dictionary

- •Reading Image Data from a DICOM File

- •Viewing Images from DICOM Files

- •Writing Image Data or Metadata to a DICOM File

- •Writing Metadata with the Image Data

- •Understanding Explicit Versus Implicit VR Attributes

- •Removing Confidential Information from a DICOM File

- •Example: Creating a New DICOM Series

- •Working with Mayo Analyze 7.5 Files

- •Working with Interfile Files

- •Working with High Dynamic Range Images

- •Understanding Dynamic Range

- •Reading a High Dynamic Range Image

- •Creating a High Dynamic Range Image

- •Viewing a High Dynamic Range Image

- •Writing a High Dynamic Range Image to a File

- •Image Display and Exploration Overview

- •Displaying Images Using the imshow Function

- •Overview

- •Specifying the Initial Image Magnification

- •Controlling the Appearance of the Figure

- •Displaying Each Image in a Separate Figure

- •Displaying Multiple Images in the Same Figure

- •Dividing a Figure Window into Multiple Display Regions

- •Using the subimage Function to Display Multiple Images

- •Using the Image Tool to Explore Images

- •Image Tool Overview

- •Opening the Image Tool

- •Specifying the Initial Image Magnification

- •Specifying the Colormap

- •Importing Image Data from the Workspace

- •Exporting Image Data to the Workspace

- •Using the getimage Function to Export Image Data

- •Saving the Image Data Displayed in the Image Tool

- •Closing the Image Tool

- •Printing the Image in the Image Tool

- •Exploring Very Large Images

- •Overview

- •Creating an R-Set File

- •Opening an R-Set File

- •Using Image Tool Navigation Aids

- •Navigating an Image Using the Overview Tool

- •Starting the Overview Tool

- •Moving the Detail Rectangle to Change the Image View

- •Specifying the Color of the Detail Rectangle

- •Getting the Position and Size of the Detail Rectangle

- •Printing the View of the Image in the Overview Tool

- •Panning the Image Displayed in the Image Tool

- •Zooming In and Out on an Image in the Image Tool

- •Specifying the Magnification of the Image

- •Getting Information about the Pixels in an Image

- •Determining the Value of Individual Pixels

- •Saving the Pixel Value and Location Information

- •Determining the Values of a Group of Pixels

- •Selecting a Region

- •Customizing the View

- •Determining the Location of the Pixel Region Rectangle

- •Printing the View of the Image in the Pixel Region Tool

- •Determining the Display Range of an Image

- •Measuring the Distance Between Two Pixels

- •Using the Distance Tool

- •Exporting Endpoint and Distance Data

- •Customizing the Appearance of the Distance Tool

- •Adjusting Image Contrast Using the Adjust Contrast Tool

- •Understanding Contrast Adjustment

- •Starting the Adjust Contrast Tool

- •Using the Histogram Window to Adjust Image Contrast

- •Using the Window/Level Tool to Adjust Image Contrast

- •Example: Adjusting Contrast with the Window/Level Tool

- •Modifying Image Data

- •Saving the Modified Image Data

- •Cropping an Image Using the Crop Image Tool

- •Viewing Image Sequences

- •Overview

- •Viewing Image Sequences in the Movie Player

- •Example: Viewing a Sequence of MRI Images

- •Configuring the Movie Player

- •Specifying the Frame Rate

- •Specifying the Color Map

- •Getting Information about the Image Frame

- •Viewing Image Sequences as a Montage

- •Converting a Multiframe Image to a Movie

- •Displaying Different Image Types

- •Displaying Indexed Images

- •Displaying Grayscale Images

- •Displaying Grayscale Images That Have Unconventional Ranges

- •Displaying Binary Images

- •Changing the Display Colors of a Binary Image

- •Displaying Truecolor Images

- •Adding a Colorbar to a Displayed Image

- •Printing Images

- •Printing and Handle Graphics Object Properties

- •Setting Toolbox Preferences

- •Retrieving the Values of Toolbox Preferences Programmatically

- •Setting the Values of Toolbox Preferences Programmatically

- •Overview

- •Displaying the Target Image

- •Creating the Modular Tools

- •Overview

- •Associating Modular Tools with a Particular Image

- •Getting the Handle of the Target Image

- •Specifying the Parent of a Modular Tool

- •Tools With Separate Creation Functions

- •Example: Embedding the Pixel Region Tool in an Existing Figure

- •Positioning the Modular Tools in a GUI

- •Specifying the Position with a Position Vector

- •Build a Pixel Information GUI

- •Adding Navigation Aids to a GUI

- •Understanding Scroll Panels

- •Example: Building a Navigation GUI for Large Images

- •Customizing Modular Tool Interactivity

- •Overview

- •Build Image Comparison Tool

- •Creating Your Own Modular Tools

- •Overview

- •Create Angle Measurement Tool

- •Spatial Transformations

- •Resizing an Image

- •Overview

- •Specifying the Interpolation Method

- •Preventing Aliasing by Using Filters

- •Rotating an Image

- •Cropping an Image

- •Perform General 2-D Spatial Transformations

- •Spatial Transformation Procedure

- •Translate Image Using maketform and imtransform

- •Step 1: Import the Image to Be Transformed

- •Step 2: Define the Spatial Transformation

- •Step 3: Create the TFORM Structure

- •Step 4: Perform the Transformation

- •Step 5: View the Output Image

- •Defining the Transformation Data

- •Using a Transformation Matrix

- •Using Sets of Points

- •Creating TFORM Structures

- •Performing the Spatial Transformation

- •Specifying Fill Values

- •Performing N-Dimensional Spatial Transformations

- •Register Image Using XData and YData Parameters

- •Step 1: Read in Base and Unregistered Images

- •Step 2: Display the Unregistered Image

- •Step 3: Create a TFORM Structure

- •Step 4: Transform the Unregistered Image

- •Step 5: Overlay Base Image Over Registered Image

- •Step 6: Using XData and YData Input Parameters

- •Step 7: Using xdata and ydata Output Values

- •Image Registration

- •Image Registration Techniques

- •Control Point Registration

- •Using cpselect in a Script

- •Example: Registering to a Digital Orthophoto

- •Step 1: Read the Images

- •Step 2: Choose Control Points in the Images

- •Step 3: Save the Control Point Pairs to the MATLAB Workspace

- •Step 4: Fine-Tune the Control Point Pair Placement (Optional)

- •Step 6: Transform the Unregistered Image

- •Geometric Transformation Types

- •Selecting Control Points

- •Specifying Control Points Using the Control Point Selection Tool

- •Starting the Control Point Selection Tool

- •Using Navigation Tools to Explore the Images

- •Using Scroll Bars to View Other Parts of an Image

- •Using the Detail Rectangle to Change the View

- •Panning the Image Displayed in the Detail Window

- •Zooming In and Out on an Image

- •Specifying the Magnification of the Images

- •Locking the Relative Magnification of the Input and Base Images

- •Specifying Matching Control Point Pairs

- •Picking Control Point Pairs Manually

- •Using Control Point Prediction

- •Moving Control Points

- •Deleting Control Points

- •Exporting Control Points to the Workspace

- •Saving Your Control Point Selection Session

- •Using Correlation to Improve Control Points

- •Intensity-Based Automatic Image Registration

- •Registering Multimodal MRI Images

- •Step 1: Load Images

- •Step 2: Set up the Initial Registration

- •Step 3: Improve the Registration

- •Step 4: Improve the Speed of Registration

- •Step 5: Further Refinement

- •Step 6: Deciding When Enough is Enough

- •Step 7: Alternate Visualizations

- •Designing and Implementing 2-D Linear Filters for Image Data

- •Overview

- •Convolution

- •Correlation

- •Performing Linear Filtering of Images Using imfilter

- •Data Types

- •Correlation and Convolution Options

- •Boundary Padding Options

- •Multidimensional Filtering

- •Relationship to Other Filtering Functions

- •Filtering an Image with Predefined Filter Types

- •Designing Linear Filters in the Frequency Domain

- •FIR Filters

- •Frequency Transformation Method

- •Frequency Sampling Method

- •Windowing Method

- •Creating the Desired Frequency Response Matrix

- •Computing the Frequency Response of a Filter

- •Transforms

- •Fourier Transform

- •Definition of Fourier Transform

- •Visualizing the Fourier Transform

- •Discrete Fourier Transform

- •Relationship to the Fourier Transform

- •Visualizing the Discrete Fourier Transform

- •Applications of the Fourier Transform

- •Frequency Response of Linear Filters

- •Fast Convolution

- •Locating Image Features

- •Discrete Cosine Transform

- •DCT Definition

- •The DCT Transform Matrix

- •DCT and Image Compression

- •Radon Transform

- •Radon Transformation Definition

- •Plotting the Radon Transform

- •Viewing the Radon Transform as an Image

- •Detecting Lines Using the Radon Transform

- •The Inverse Radon Transformation

- •Inverse Radon Transform Definition

- •Improving the Results

- •Reconstruct Image from Parallel Projection Data

- •Fan-Beam Projection Data

- •Fan-Beam Projection Data Definition

- •Computing Fan-Beam Projection Data

- •Image Reconstruction Using Fan-Beam Projection Data

- •Reconstruct Image From Fanbeam Projections

- •Morphological Operations

- •Morphology Fundamentals: Dilation and Erosion

- •Understanding Dilation and Erosion

- •Processing Pixels at Image Borders (Padding Behavior)

- •Understanding Structuring Elements

- •The Origin of a Structuring Element

- •Creating a Structuring Element

- •Structuring Element Decomposition

- •Dilating an Image

- •Eroding an Image

- •Combining Dilation and Erosion

- •Morphological Opening

- •Skeletonization

- •Perimeter Determination

- •Morphological Reconstruction

- •Understanding Morphological Reconstruction

- •Understanding the Marker and Mask

- •Pixel Connectivity

- •Defining Connectivity in an Image

- •Choosing a Connectivity

- •Specifying Custom Connectivities

- •Flood-Fill Operations

- •Specifying Connectivity

- •Specifying the Starting Point

- •Filling Holes

- •Finding Peaks and Valleys

- •Terminology

- •Understanding the Maxima and Minima Functions

- •Finding Areas of High or Low Intensity

- •Suppressing Minima and Maxima

- •Imposing a Minimum

- •Creating a Marker Image

- •Applying the Marker Image to the Mask

- •Distance Transform

- •Labeling and Measuring Objects in a Binary Image

- •Understanding Connected-Component Labeling

- •Remarks

- •Selecting Objects in a Binary Image

- •Finding the Area of the Foreground of a Binary Image

- •Finding the Euler Number of a Binary Image

- •Lookup Table Operations

- •Creating a Lookup Table

- •Using a Lookup Table

- •Getting Image Pixel Values Using impixel

- •Creating an Intensity Profile of an Image Using improfile

- •Displaying a Contour Plot of Image Data

- •Creating an Image Histogram Using imhist

- •Getting Summary Statistics About an Image

- •Computing Properties for Image Regions

- •Analyzing Images

- •Detecting Edges Using the edge Function

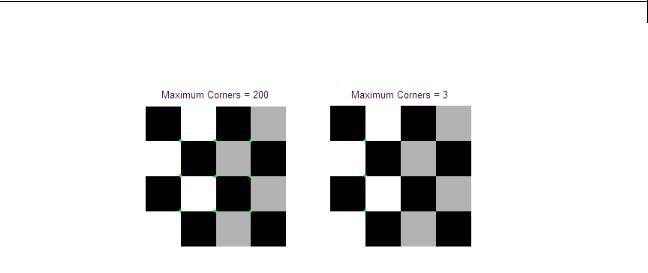

- •Detecting Corners Using the corner Function

- •Tracing Object Boundaries in an Image

- •Choosing the First Step and Direction for Boundary Tracing

- •Detecting Lines Using the Hough Transform

- •Analyzing Image Homogeneity Using Quadtree Decomposition

- •Example: Performing Quadtree Decomposition

- •Analyzing the Texture of an Image

- •Understanding Texture Analysis

- •Using Texture Filter Functions

- •Understanding the Texture Filter Functions

- •Example: Using the Texture Functions

- •Gray-Level Co-Occurrence Matrix (GLCM)

- •Create a Gray-Level Co-Occurrence Matrix

- •Specifying the Offsets

- •Derive Statistics from a GLCM and Plot Correlation

- •Adjusting Pixel Intensity Values

- •Understanding Intensity Adjustment

- •Adjusting Intensity Values to a Specified Range

- •Specifying the Adjustment Limits

- •Setting the Adjustment Limits Automatically

- •Gamma Correction

- •Adjusting Intensity Values Using Histogram Equalization

- •Enhancing Color Separation Using Decorrelation Stretching

- •Simple Decorrelation Stretching

- •Adding a Linear Contrast Stretch

- •Removing Noise from Images

- •Understanding Sources of Noise in Digital Images

- •Removing Noise By Linear Filtering

- •Removing Noise By Median Filtering

- •Removing Noise By Adaptive Filtering

- •ROI-Based Processing

- •Specifying a Region of Interest (ROI)

- •Overview of ROI Processing

- •Using Binary Images as a Mask

- •Creating a Binary Mask

- •Creating an ROI Without an Associated Image

- •Creating an ROI Based on Color Values

- •Filtering an ROI

- •Overview of ROI Filtering

- •Filtering a Region in an Image

- •Specifying the Filtering Operation

- •Filling an ROI

- •Image Deblurring

- •Understanding Deblurring

- •Causes of Blurring

- •Deblurring Model

- •Importance of the PSF

- •Deblurring Functions

- •Deblurring with the Wiener Filter

- •Refining the Result

- •Deblurring with a Regularized Filter

- •Refining the Result

- •Deblurring with the Lucy-Richardson Algorithm

- •Overview

- •Reducing the Effect of Noise Amplification

- •Accounting for Nonuniform Image Quality

- •Handling Camera Read-Out Noise

- •Handling Undersampled Images

- •Example: Using the deconvlucy Function to Deblur an Image

- •Refining the Result

- •Deblurring with the Blind Deconvolution Algorithm

- •Example: Using the deconvblind Function to Deblur an Image

- •Refining the Result

- •Creating Your Own Deblurring Functions

- •Avoiding Ringing in Deblurred Images

- •Color

- •Displaying Colors

- •Reducing the Number of Colors in an Image

- •Reducing Colors Using Color Approximation

- •Quantization

- •Colormap Mapping

- •Reducing Colors Using imapprox

- •Dithering

- •Converting Color Data Between Color Spaces

- •Understanding Color Spaces and Color Space Conversion

- •Converting Between Device-Independent Color Spaces

- •Supported Conversions

- •Example: Performing a Color Space Conversion

- •Color Space Data Encodings

- •Performing Profile-Based Color Space Conversions

- •Understanding Device Profiles

- •Reading ICC Profiles

- •Writing Profile Information to a File

- •Example: Performing a Profile-Based Conversion

- •Specifying the Rendering Intent

- •Converting Between Device-Dependent Color Spaces

- •YIQ Color Space

- •YCbCr Color Space

- •HSV Color Space

- •Neighborhood or Block Processing: An Overview

- •Performing Sliding Neighborhood Operations

- •Understanding Sliding Neighborhood Processing

- •Determining the Center Pixel

- •General Algorithm of Sliding Neighborhood Operations

- •Padding Borders in Sliding Neighborhood Operations

- •Performing Distinct Block Operations

- •Understanding Distinct Block Processing

- •Implementing Block Processing Using the blockproc Function

- •Applying Padding

- •Block Size and Performance

- •TIFF Image Characteristics

- •Choosing Block Size

- •Using Parallel Block Processing on large Image Files

- •What is Parallel Block Processing?

- •When to Use Parallel Block Processing

- •How to Use Parallel Block Processing

- •Working with Data in Unsupported Formats

- •Learning More About the LAN File Format

- •Parsing the Header

- •Reading the File

- •Examining the LanAdapter Class

- •Using the LanAdapter Class with blockproc

- •Understanding Columnwise Processing

- •Using Column Processing with Sliding Neighborhood Operations

- •Using Column Processing with Distinct Block Operations

- •Restrictions

- •Code Generation for Image Processing Toolbox Functions

- •Supported Functions

- •Examples

- •Introductory Examples

- •Image Sequences

- •Image Representation and Storage

- •Image Display and Visualization

- •Zooming and Panning Images

- •Pixel Values

- •Image Measurement

- •Image Enhancement

- •Brightness and Contrast Adjustment

- •Cropping Images

- •GUI Application Development

- •Edge Detection

- •Regions of Interest (ROI)

- •Resizing Images

- •Image Registration and Alignment

- •Image Filtering

- •Fourier Transform

- •Image Transforms

- •Feature Detection

- •Discrete Cosine Transform

- •Image Compression

- •Radon Transform

- •Image Reconstruction

- •Fan-beam Transform

- •Morphological Operations

- •Binary Images

- •Image Histogram

- •Image Analysis

- •Corner Detection

- •Hough Transform

- •Image Texture

- •Image Statistics

- •Color Adjustment

- •Noise Reduction

- •Filling Images

- •Deblurring Images

- •Image Color

- •Color Space Conversion

- •Block Processing

- •Index

- •Summary of Modular Tools

- •Rules for Dilation and Erosion

- •Rules for Padding Images

- •Supported Connectivities

- •Distance Metrics

- •File Header Content

Analyzing Images

Tracing Object Boundaries in an Image

The toolbox includes two functions you can use to find the boundaries of objects in a binary image:

•bwtraceboundary

•bwboundaries

The bwtraceboundary function returns the row and column coordinates of all the pixels on the border of an object in an image. You must specify the location of a border pixel on the object as the starting point for the trace.

The bwboundaries function returns the row and column coordinates of border pixels of all the objects in an image.

For both functions, the nonzero pixels in the binary image belong to an object, and pixels with the value 0 (zero) constitute the background.

The following example uses bwtraceboundary to trace the border of an object in a binary image. Then, using bwboundaries, the example traces the borders of all the objects in the image:

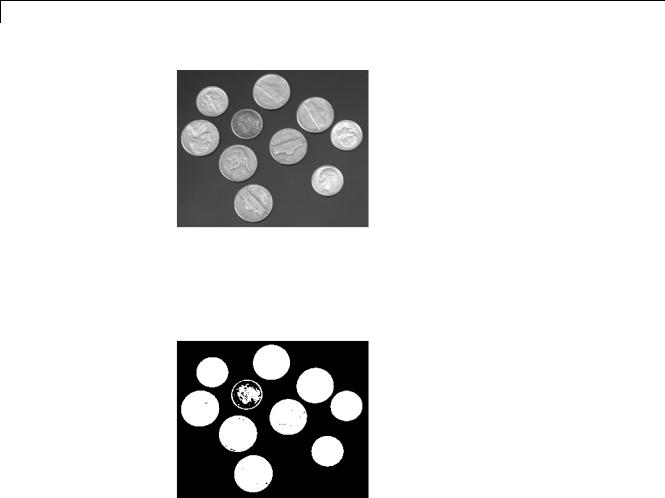

1Read image and display it.

I = imread('coins.png'); imshow(I)

11-15

11 Analyzing and Enhancing Images

2Convert the image to a binary image. bwtraceboundary and bwboundaries only work with binary images.

BW = im2bw(I); imshow(BW)

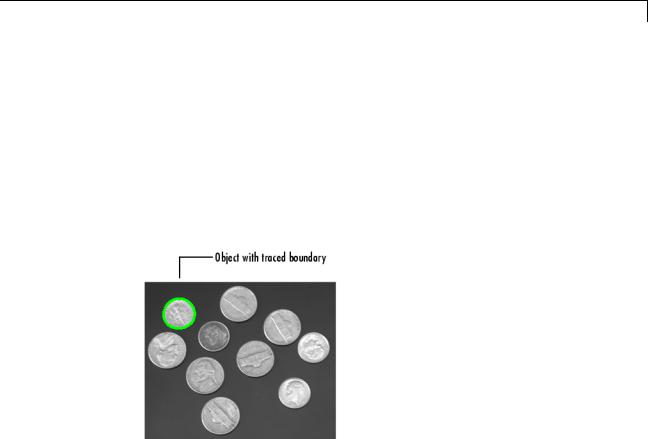

3Determine the row and column coordinates of a pixel on the border of the object you want to trace. bwboundary uses this point as the starting location for the boundary tracing.

dim = size(BW)

col = round(dim(2)/2)-90; row = min(find(BW(:,col)))

4Call bwtraceboundary to trace the boundary from the specified point. As required arguments, you must specify a binary image, the row and column coordinates of the starting point, and the direction of the first step. The example specifies north ('N'). For information about this parameter, see

11-16

Analyzing Images

“Choosing the First Step and Direction for Boundary Tracing” on page 11-18.

boundary = bwtraceboundary(BW,[row, col],'N');

5Display the original grayscale image and use the coordinates returned by bwtraceboundary to plot the border on the image.

imshow(I) hold on;

plot(boundary(:,2),boundary(:,1),'g','LineWidth',3);

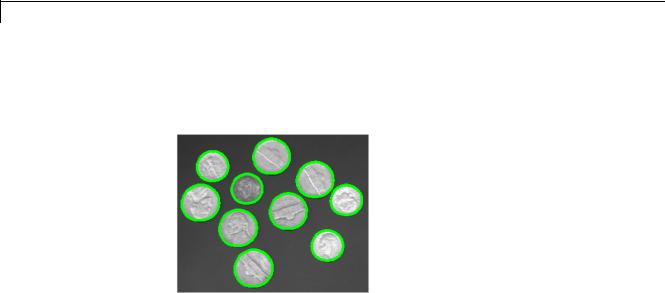

6To trace the boundaries of all the coins in the image, use the bwboundaries function. By default, bwboundaries finds the boundaries of all objects in an image, including objects inside other objects. In the binary image used in this example, some of the coins contain black areas that bwboundaries interprets as separate objects. To ensure that bwboundaries only traces the coins, use imfill to fill the area inside each coin.

BW_filled = imfill(BW,'holes'); boundaries = bwboundaries(BW_filled);

bwboundaries returns a cell array, where each cell contains the row/column coordinates for an object in the image.

7Plot the borders of all the coins on the original grayscale image using the coordinates returned by bwboundaries.

for k=1:10

11-17

11 Analyzing and Enhancing Images

b = boundaries{k}; plot(b(:,2),b(:,1),'g','LineWidth',3);

end

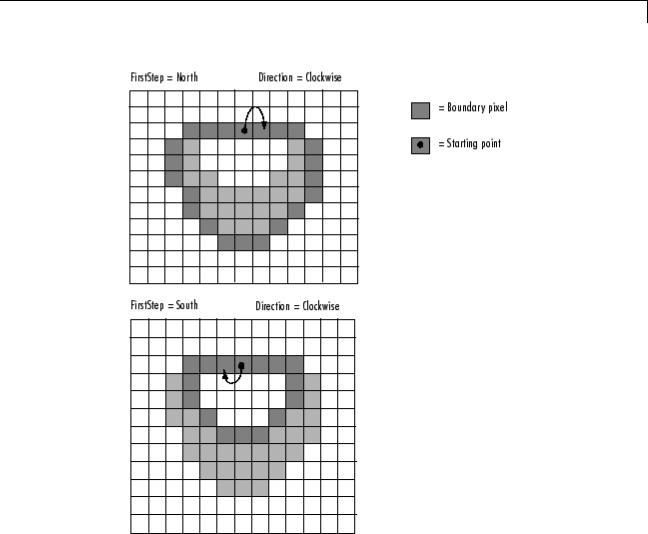

Choosing the First Step and Direction for Boundary Tracing

For certain objects, you must take care when selecting the border pixel you choose as the starting point and the direction you choose for the first step parameter (north, south, etc.).

For example, if an object contains a hole and you select a pixel on a thin part of the object as the starting pixel, you can trace the outside border of the object or the inside border of the hole, depending on the direction you choose for the first step. For filled objects, the direction you select for the first step parameter is not as important.

To illustrate, this figure shows the pixels traced when the starting pixel is on a thin part of the object and the first step is set to north and south. The connectivity is set to 8 (the default).

11-18

Analyzing Images

Impact of First Step and Direction Parameters on Boundary Tracing

11-19