- •Contents

- •Series Preface

- •Acknowledgments

- •RATIONALES UNDERLYING NEPSY AND NEPSY-II

- •NEPSY DEVELOPMENT

- •NEPSY-II REVISION: GOALS AND DEVELOPMENT

- •COMPREHENSIVE REFERENCES

- •CONCLUDING REMARKS

- •APPROPRIATE TESTING CONDITIONS

- •TYPES OF ASSESSMENTS

- •ASSESSING CHILDREN WITH SPECIAL NEEDS

- •OTHER ADMINISTRATION CONSIDERATIONS

- •SUBTEST-BY-SUBTEST RULES OF ADMINISTRATION

- •COMPUTER SCORING

- •PREPARATORY TO SCORING

- •ORDER OF SCORING

- •STEP-BY-STEP SCORING

- •TESTS WITH COMPLEX RECORDING AND/OR SCORING

- •QUICK-SCORING: DESIGN COPY GENERAL (DCG)

- •DESIGN COPYING PROCESS (DCP) SCORING

- •OVERVIEW OF SUBTEST SCORES

- •SUMMARIZING NEPSY-II SCORES

- •CONCLUDING REMARKS

- •GOALS OF INTERPRETATION AND IMPLEMENTATION OF GOALS

- •STEP-BY-STEP INTERPRETATION OF NEPSY-II PERFORMANCE

- •INTRODUCTION

- •TEST DEVELOPMENT

- •STANDARDIZATION

- •PSYCHOMETRIC PROPERTIES

- •ADMINISTRATION AND SCORING

- •INTERPRETATION

- •OVERVIEW OF STRENGTHS AND WEAKNESSES

- •THE NEPSY-II REFERRAL BATTERIES

- •DEVELOPMENTAL DISORDERS AND NEPSY-II

- •EVIDENCE OF RELIABILITY IN NEPSY-II

- •CONVENTIONS FOR REPORTING RESULTS

- •RELIABILITY PROCEDURES IN NEPSY-II

- •CONCLUDING REMARKS

- •CASE STUDY #1: GENERAL REFERRAL BATTERY

- •CLINICAL IMPRESSIONS AND SUMMARY

- •PRELIMINARY DIAGNOSIS

- •RECOMMENDATIONS

- •DIAGNOSIS

- •Appendix: NEPSY-II Data Worksheet

- •References

- •Annotated Bibliography

- •About the Authors

- •Index

CLINICAL APPLICATIONS OF THE NEPSY-II 327

Narrative Memory, which relies strongly on the child being able to encode receptive language and recall salient story details, approached significance and showed moderate to large effect sizes.

EVIDENCE OF RELIABILITY IN NEPSY-II

Test reliability is any indication of the degree with which a test provides a precise and stable measure of the underlying construct it is intended to measure. Classical test theory posits that a test score is an approximation of an individual’s true score (i.e., the score he or she would receive if the test was perfectly reliable) and measurement error (i.e., the difference between an individual’s true score and the individual’s obtained score). A reliable test will have a relatively small measurement error and produce consistent results across administrations. The reliability of the test refers to the accuracy, consistency, and stability of test scores across situations (Anastasi & Urbina, 1997). The reliability of a test should be considered in the interpretation of obtained scores on one occasion and differences between scores obtained on multiple occasions.

The NEPSY-II Clinical and Interpretive Manual (Table 4.1, pp. 54–56) provides reliability coefficients for all primary and process scaled scores (Korkman et al., 2007). They were calculated for each age level separately and then averaged across four age bands (i.e., ages 3–4, 5–6, 7–12, 13–16). This was done because different patterns of tests make up the domains for the two different age groups.

CONVENTIONS FOR REPORTING RESULTS

Several conventions for reporting results were used for the NEPSY-II:

1.For those analyses that serve to evaluate the difference between two mean scores (e.g., test-retest reliability and counterbalanced validity studies and matched controls studies), mean scores in the tables are reported to one place. However, the standard difference, t values and probability ( p) values, were calculated from grouped innings to two decimal places. Calculations of those values with reported means may vary slightly from those reported in the tables due to the rounding error.

2.All analyses use traditional values for significance level (alpha =.05).

3.Along with statistical significance and p values, effect sizes are reported as evidence of reliability and validity. The term standard difference refers to Cohen’s d. The values described in the text follow Cohen’s (1988) suggestions for effect size interpretation.

328 ESSENTIALS OF NEPSY-II ASSESSMENT

The use of descriptors should not replace review of the actual p values and effect sizes reported in the tables of the Clinical and Interpretation Manual. For example, although p equals .07 is not statistically significant, it would be evaluated differently if the effect size were .90 rather than .10. Similarly, a p value of .04 is a significant result, but when paired with an effect size of .03, the effect is probably too small to be meaningful. Moreover, depending on the situation the importance associated with a particular effect size might be very different from those suggested by Cohen’s guidelines. In certain clinical situations, a small effect size might still represent an important finding. The reader is encouraged to evaluate the specifics of a given result when interpreting significance and effect size magnitudes.

(See Rapid Reference 6.12 for a summary of the conventions for reporting results.)

Rapid Reference 6.12

Rapid Reference 6.12

Conventions for Reporting Results

Several conventions for reporting results were used for NEPSY-II.

1.For analyses that evaluate the difference between two mean scores (e.g., test-retest reliability, counterbalanced validity studies, and matched controls studies), mean scores in the tables were reported to one place. However, the standard difference, t values, and probability ( p) values were calculated from grouped innings to two decimal places. Therefore, calculations of those values with reported means may vary slightly from those in the tables due to the rounding error.

2.All analyses use traditional values for significance level (alpha =.05).

3.Along with statistical significance and p values, effect sizes are reported as evidence of reliability and validity. The term standard difference refers to Cohen’s d. The values described in the text follow Cohen’s (1988) suggestions for effect size interpretation.

The use of descriptors should not replace review of the actual p values and effect sizes reported in the tables of the Clinical and Interpretation Manual.

•Example: Although p equals .07 is not statistically significant, it would be evaluated differently if the effect size were .90 rather than .10. Similarly, a p value of .04 is a significant result, but when paired with an effect size of .03, the effect is probably too small to be meaningful.

•Depending on the situation, the importance associated with a particular effect size might be very different from those suggested by Cohen’s guidelines. In certain clinical situations a small effect size might still represent an important finding. The reader is encouraged to evaluate the specifics of a given result when interpreting significance and effect size magnitudes.

CLINICAL APPLICATIONS OF THE NEPSY-II 329

RELIABILITY PROCEDURES IN NEPSY-II

The NEPSY-II subtests vary widely across and within domains in terms of the stimulus presentation, administration procedures used, and the type of responses elicited. Some subtests have baseline conditions that provide a means of identifying the contribution of more basic cognitive skills from performance on the higher-level cognitive tasks (e.g., Inhibition). Other subtests have two or more conditions that measure different aspects of a domain (e.g., Phonological Processing). These factors vary across subtests and have a bearing on the nature and outcome of the reliability analyses performed. For example, internal consistency measures for Inhibition will be unaffected because the earlier conditions have lower cognitive demands than the later items. See Strauss, Sherman, and Spreen (2006) for a review of reliability and validity in neuropsychological instruments.

For many process measures and some primary measures of the NEPSY-II, reliability is influenced by a limited variability in normal samples (e.g., error scores and simple cognitive processes), particularly as a majority of individuals at a given age attain the skills needed to perform a task. In samples of typicallydeveloping (nonclinical) children, some process scores yield a small range of raw scores (e.g., error scores), which hinders the psychometric properties of these variables in the normative sample due to limited ranges or skewed distributions. For example, in typically developing children, the ability to perceive another’s point of view as measured by the theory of mind task is well developed by age 7. Therefore, the full range of scores is seen on the Theory of Mind subtest in children under age 7 where children are highly variable in the attainment of social perspective. After age 7, the distribution is highly skewed because a majority of the children successfully perform the task. The reliability of the score is much higher in younger age groups than in older age groups, where the skewed score distribution and range restriction is reflected in the change from the scaled score to a percentile.

Despite the limitations in obtaining reliability estimates for the scores in typically developing children, children with certain clinical diagnoses, such as Autistic Disorder or Reading Disorder, may exhibit test performances that are best captured by these process measures. Therefore, the clinical utility of the scores is high; the findings from the special group samples highlight the clinical utility of these measures and the importance of including them in an assessment. In addition, the psychometric properties of these variables may differ if the reliability is analyzed within clinical populations, due to the greater range and variability of scores obtained in clinical groups. The NEPSY-II process scores that often yield restricted distributions in normally functioning children and higher ranges and distributions in clinical populations include error rates, contrast scores, and

330 ESSENTIALS OF NEPSY-II ASSESSMENT

scores in which perfect scores are expected in typically developing individuals (e.g., Theory of Mind after age 7) (Korkman et al., 2007, pp. 51–52).

The reliability procedures used in NEPSY-II vary among the subtests based on the properties of the subtest.

Reliability coefficients were obtained utilizing the split-half and alpha methods. The split-half of reliability coefficient of a subtest is the correlation between the total scores of the two half-tests, corrected for length of the test using the SpearmanBrown formula (Crocker & Algina, 1986; Li, Rosenthal, & Rubin, 1996). The internal consistency reliability coefficients were calculated with the formula recommended by Nunnally and Bernstein (1994). The average reliability coefficients were calculated using Fisher’s z transformation (Silver & Dunlap, 1987; Strube, 1988).

Stability coefficients and decision-consistency procedures were used when the aforementioned methods were not appropriate.

Stability Coefficients

Test-retest stability is reported on those subtests for which parallel forms could not be created because the subtests’ scores are based on item-level scores that are not strictly independent, due either to an allowed latency time within which the child can respond and receive credit for an item (e.g., Auditory Attention and Response Set) or to the use of speed of performance as a scoring criterion (e.g., Fingertip Tapping, Speeded Naming). The stability coefficients used as reliability estimates are the correlation of scores on the first and second testing, corrected for variability of the normative sample (Allen & Yen, 1979; Magnusson, 1967).

Decision-Consistency

Several subtests have highly skewed score distributions and are not scaled, but rather are presented as percentile rank scores. In addition, the combined and contrast scores demonstrate the same restriction of score range. For these scores, a test-retest correlation coefficient would be artificially depressed due to restricted score ranges; therefore, a decision-consistency methodology was used to demonstrate reliability. A cutoff score is used to create two categories and the consistency of the classification (i.e., percent agreement) from test to retest is assessed. The decision-consistency reliability indicates the concordance of the decisions in terms of percent of classification. The following cutoff scores were used.

•For percentile ranks: 10th percentile

•For scaled scores: scaled score of 6

■Percentiles classified into less than 10th percentile, equal to 10th percentile, and greater than 10th percentile

■Scaled scores classified into less than 6, equal to 6, and greater than 6

CLINICAL APPLICATIONS OF THE NEPSY-II 331

Along with statistical significance and p values, effect sizes are reported as evidence of reliability and validity. Most NEPSY-II subtests have adequate to high internal consistency or stability.

Effect Size Ranges

•Small effect sizes: .20–.49

•Moderate effect sizes: .50–.79

•Large effect sizes: .80 and greater are reported as large effect sizes

Reliability coefficients are provided in the NEPSY-II Clinical and Interpretative Manual (Korkman et al., 2007, pp. 54–59) for all subtest primary and process scores. Consistent with other neuropsychological instruments, verbal tests have higher reliabilities, and executive functions tend to have modest reliability (Strauss et al., 2006).

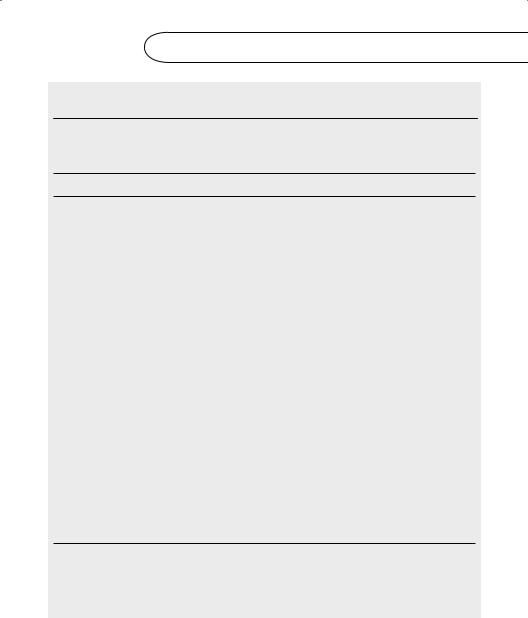

The highest reliability coefficients are seen in Rapid Reference 6.13 across four age bands.

Rapid Reference 6.13

Rapid Reference 6.13

Reliability Procedures Used in NEPSY-II

The reliability procedures used in NEPSY-II vary among the subtests, based on the properties of the subtest.

Reliability coefficients were obtained utilizing the split-half and alpha methods

•The split-half reliability coefficient of a subtest is the correlation between the total scores of the two half-tests, corrected for length of the test using the Spearman-Brown formula.

•The internal consistency reliability coefficients were calculated with the formula recommended by Nunnally and Bernstein.

•The average reliability coefficients were calculated using Fisher’s z transformation.

Stability Coefficients and decision-consistency procedures were used if the above methods were not appropriate

•Test-retest stability is reported on those subtests for which parallel forms could not be created because the subtests’ scores are based on item-level scores that are not strictly independent:

■Due either to an allowed latency time within which the child can respond and get credit for an item or to the use of speed of performance as a scoring criterion.

(continued )

332 ESSENTIALS OF NEPSY-II ASSESSMENT

(continued )

•The stability coefficients used as reliability estimates are the correlation of scores on the first and second testing, corrected for variability of the normative sample.

Decision-Consistency

•Several subtests have highly skewed score distributions and are not scaled, but rather are presented as percentile rank scores.

•The combined and contrast scores demonstrate the same restriction of score range.

For these scores, a test-retest correlation coefficient would be artificially depressed due to restricted score ranges; therefore, a decision-consistency methodology was used to demonstrate reliability.

•A cutoff score is used to create two categories and the consistency of the classification (i.e., percent agreement) from test to retest is assessed.

•The decision consistency reliability indicates the concordance of the decisions in terms of percent of classification. The following cutoff scores were used:

■For percentile ranks: 10th percentile

■For scaled scores: scaled score of 6

■Percentiles classified into less than 10th percentile, equal to 10th percentile, and greater than 10th percentile

■Scaled scores classified into less than 6, equal to 6, and greater than 6

Effect Size Ranges: Along with statistical significance and p values, effect sizes are reported as evidence of reliability and validity. Most NEPSY-II subtests have adequate to high internal consistency or stability.

•Small effect sizes: .20–.49

•Moderate effect sizes: .50–.79

•Large effect sizes: .80 and greater are reported as large effect sizes

Overall, the lowest reliabilities for NEPSY-II subtests coefficients are those calculated with test-retest reliability: Response Set Total Correct, Inhibition Total Errors, Memory for Designs Spatial and Total Scores, Memory for Designs Delayed Total Scores. Lower reliability on the error scores was likely a result of practice effects and range-restriction on the test-retest reliability, as errors tend to decline with experience on a task and small changes in performance result in a large change in classification. The reliability coefficients for scores in the Memory and Learning domain are consistent with findings that memory subtests tend to produce lower reliability on test-retest due to the heavy influence of practice effects on memory tasks. (See Rapid Reference 6.14 for the highest reliability coefficients.)

CLINICAL APPLICATIONS OF THE NEPSY-II 333

Rapid Reference 6.14

Rapid Reference 6.14

The Highest Reliability Coefficients of NEPSY-II Scaled Scores for Normative Sample by Age

(Average r2)

Subtests |

Ages 3-4 |

Ages 5-6 |

Ages 7-12 |

Ages 13-16 |

Comp. of Instructions Total |

.86 |

.82 |

.75 |

.62 |

Design Copying Process |

.88 |

.85 |

.78 |

.82 |

Total |

|

|

|

|

FT Dominant Hand Combined |

— |

.87 |

.90 |

.75 |

FT Nondominant Hand |

— |

.84 |

.94 |

.83 |

Combined |

|

|

|

|

FT Repetitions Combined |

— |

.94 |

.92 |

.83 |

FT Sequences Combined |

— |

.84 |

.98 |

.92 |

Imitating Hand Positions |

.89 |

.82a |

.82a |

— |

Total |

|

|

|

|

List Memory and LM Total |

— |

— |

.91 |

— |

Correct |

|

|

|

|

Memory for Names and MND |

— |

.89 |

.89 |

.79 |

Total |

|

|

|

|

Phonological Processing Total |

.88 |

.92 |

.86 |

.66 |

Picture Puzzles Total |

.85 |

.81 |

— |

— |

Sentence Repetition Total |

.89 |

.87 |

— |

— |

Note: Internal consistency (alpha or split-half reliability coeffi cients) are reported unless otherwise noted.

a) Average reliability coeffi cients were calculated for ages 5–12.

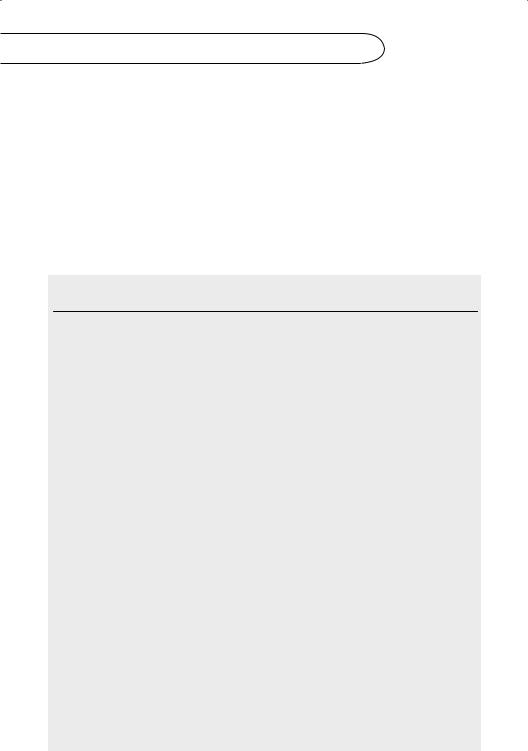

Reliabilities in the Special Groups

The reliability information for the special groups supports the generalizability of the instrument. The evidence of internal consistency for the special groups was obtained by the same methods as those used with the normative sample. Detailed demographic information can be found in Chapter 5 of the NEPSY-II

334 ESSENTIALS OF NEPSY-II ASSESSMENT

Clinical and Interpretive Manual, and descriptions of inclusion criteria can be found in Appendix F of that same volume. Rapid Reference 6.15 shows the highest reliability coefficients of selected primary and process scores for the special groups averaged across two age bands (5–6 and 7–12). As predicted, reliability coefficients calculated with the clinical sample were higher than those in the normative group. These results suggest that NEPSY-II is a reliable tool for the use of assessment of children with clinical diagnoses (Korkman et al., p. 56).

Rapid Reference 6.15

Rapid Reference 6.15

Reliability Coefficients of Selected NEPSY-II Primary and Process Scaled Scores for Special Groups

|

Average r |

|

|

by Age Bands |

|

|

|

|

Subtest Scores by Domain |

5-6 yrs. |

7-12 yrs. |

|

|

|

Attention and Executive Functioning |

|

|

|

|

|

Clocks (CL) Total Score |

– |

.88 |

|

|

|

Inhibition (IN) Naming Total Completion Time |

.94 |

.84 |

|

|

|

IN Inhibition Total Completion Time |

.80 |

.80 |

|

|

|

IN Switching Total Completion Time |

– |

.86 |

|

|

|

Language |

|

|

|

|

|

Comprehension of Instructions (CI) Total |

.83 |

.80 |

|

|

|

Phonological Processing ( pH) Total Score |

.92 |

.90 |

|

|

|

Memory and Learning |

|

|

|

|

|

Memory for Designs (MD) Content Score |

.77 |

.86 |

|

|

|

Memory for Designs (MD) Spatial Score |

.96 |

.88 |

|

|

|

MD Total Score |

.95 |

.93 |

|

|

|

Sentence Repetition Total Score |

.96 |

– |

|

|

|

Word Interference (WI) Repetition Score |

– |

.80 |

|

|

|

WI Recall Total Score |

– |

.67 |

|

|

|

Subtest Scores by Domain |

5-6 yrs. |

7-12 yrs. |

|

|

|

CLINICAL APPLICATIONS OF THE NEPSY-II 335

|

|

|

|

|

|

|

Average r |

|

|

|

|

by Age Bands |

|

|

|

Subtest Scores by Domain |

5-6 yrs. |

7-12 yrs. |

|

|

Social Perception |

|

|

|

|

Affect Recognition Total Score |

.90 |

.88 |

|

|

Theory of Mind Total Score |

.85 |

– |

|

|

Visuospatial Processing |

|

|

|

|

Arrows (AW) Total Score |

.92 |

.92 |

|

|

Block Construction (BC) Total Score |

.94 |

.85 |

|

|

Design Copying (DCP) Motor Score |

.89 |

.74 |

|

|

DCP Global Score |

.78 |

.73 |

|

|

DCP Local |

.77 |

.74 |

|

|

DCP Total |

.91 |

.88 |

|

|

Geometric Puzzle (GP) Total Score |

– |

.82 |

|

|

Picture Puzzle ( pP) Total Score |

– |

.89 |

|

|

|

|

|

|

Evidence of Inter-Rater Agreement

All NEPSY-II protocols were double-scored by two independent scorers, and evidence of interscorer agreement was obtained using all cases entered for scoring, including clinical and normative cases. For subtests where the criteria were simple and objective, inter-rater agreement was very high (.98–.99). Clocks and Design Copying require detailed and interpretive scoring based on established criteria. Memory for Names and Theory of Mind require a small degree of interpretation in scoring unusual responses. Word Generation requires the application of a series of rules to determine whether words are credible, and Visuomotor Precision requires judgment about the number of segments containing errors. To determine the degree to which trained raters were consistent in scoring these subtests during standardization, interrater reliability was calculated as percent agreement rates between trained scorers. Agreement rates ranged from 93–99% (Clocks 97%, Design Copying 94–95% across scores, Memory for Names 99%, Theory of Mind 99%, Word Generation 93%, and Visuomotor Precision 95%). The results show that although these subtests require some judgment in scoring, they can be scored with a very high degree of reliability between raters. (Rapid Reference 6.16 summarizes inter-rater reliability for NEPSY-II.)