Intelligent Information Systems, 4th year, Popov / Koltsova A.A., Lab Work 8

Ministry of Science and Higher Education of the Russian Federation

Federal State Budgetary Educational

Institution of Higher Education

"Sochi State University"

Department of Information Technology

REPORT

on Laboratory Work No. 8

"Image Style Transfer"

Completed by: student of group 20-PI

Koltsova Adriana Andreevna

Date: 30.12.2023

Checked by: Prof. D.I. Popov, Doctor of Technical Sciences

Date: _______________

Remarks:

______________________________________________________________________________________________________________________________________________________________________________________________________

Sochi 2023

Solution
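Both versions of the code below minimize the same objective. Read off from gram_matrix(), get_content_loss(), get_style_loss() and compute_loss(), it can be written roughly as follows. The symbols are notation introduced here for illustration and do not appear in the code: x is the image being optimized, p the content image, a the style image, F^l the feature map of VGG19 layer l with size H_l × W_l × C_l, and S the five style layers block1_conv1, block2_conv1, block3_conv1, block4_conv1, block5_conv1. α and β are the content_weight and style_weight arguments of run_style_transfer().

```latex
G^{l}_{cd}(x) = \frac{1}{H_l W_l} \sum_{i=1}^{H_l} \sum_{j=1}^{W_l} F^{l}_{ijc}(x)\, F^{l}_{ijd}(x)

\mathcal{L}_{content}(x) = \frac{1}{H W C} \sum_{i,j,c} \big( F_{ijc}(x) - F_{ijc}(p) \big)^{2}, \quad F = F^{\mathrm{block5\_conv2}}

\mathcal{L}_{style}(x) = \beta \sum_{l \in S} \frac{1}{C_l^{2}} \sum_{c=1}^{C_l} \sum_{d=1}^{C_l} \big( G^{l}_{cd}(x) - G^{l}_{cd}(a) \big)^{2}

\mathcal{L}_{total}(x) = \alpha\, \mathcal{L}_{content}(x) + \mathcal{L}_{style}(x), \qquad \alpha = 10^{3}, \; \beta = 10^{-2}
```

With a batch of one image, the tf.reduce_mean calls in the code reduce to the 1/(HWC) and 1/C_l² factors above.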

First version of the code

Program code:

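import os

# Hide TensorFlow's C++ INFO and WARNING messages (set before importing TensorFlow so that it takes effect)
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import tensorflow as tf
import logging

# Print the logging.info() progress messages (the default logging level is WARNING)
logging.basicConfig(level=logging.INFO)

# Plot display settings
plt.rcParams['figure.figsize'] = (10, 8)
plt.rcParams['axes.grid'] = False

# Data preparation
temp_dir = 'temp_images'
os.makedirs(temp_dir, exist_ok=True)
content_path = os.path.join('C:\\Users', 'adriana', 'ss', '4.jpg')
style_path = os.path.join('C:\\Users', 'adriana', 'ss', 'aa.jpg')

# Environment setup: switch TensorFlow to graph (symbolic) mode.
# In this mode base_image.numpy() and the Python check `if loss < best_loss`
# in run_style_transfer() raise errors, because both require eager tensors.
tf.compat.v1.disable_eager_execution()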

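# Image loading and preprocessing
def load_and_preprocess_image(image_path):
    # Force 3-channel RGB (grayscale or RGBA files would not fit VGG19's input)
    img = Image.open(image_path).convert('RGB')
    img = tf.keras.preprocessing.image.img_to_array(img)
    img = tf.image.resize(img, [512, 512])
    # VGG19 preprocessing: RGB -> BGR and subtraction of the ImageNet channel means
    img = tf.keras.applications.vgg19.preprocess_input(img)
    # Add the batch dimension: (1, 512, 512, 3)
    return img[tf.newaxis, :]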

content_image = load_and_preprocess_image(content_path)

style_image = load_and_preprocess_image(style_path)

# Display the source images
def imshow(image, title=None):
    if tf.executing_eagerly():
        # In eager mode, take the value with numpy()
        arr = tf.squeeze(image).numpy()
    else:
        # In graph (symbolic) mode, evaluate the tensor with an explicit session
        sess = tf.compat.v1.keras.backend.get_session()
        arr = tf.squeeze(image).eval(session=sess)
    # Undo VGG19 preprocessing: add the BGR channel means back and convert BGR -> RGB
    arr = arr + np.array([103.939, 116.779, 123.68])
    arr = np.clip(arr[..., ::-1], 0, 255).astype('uint8')
    plt.imshow(arr)
    if title:
        plt.title(title)
    plt.show()

# Show the content and style images:

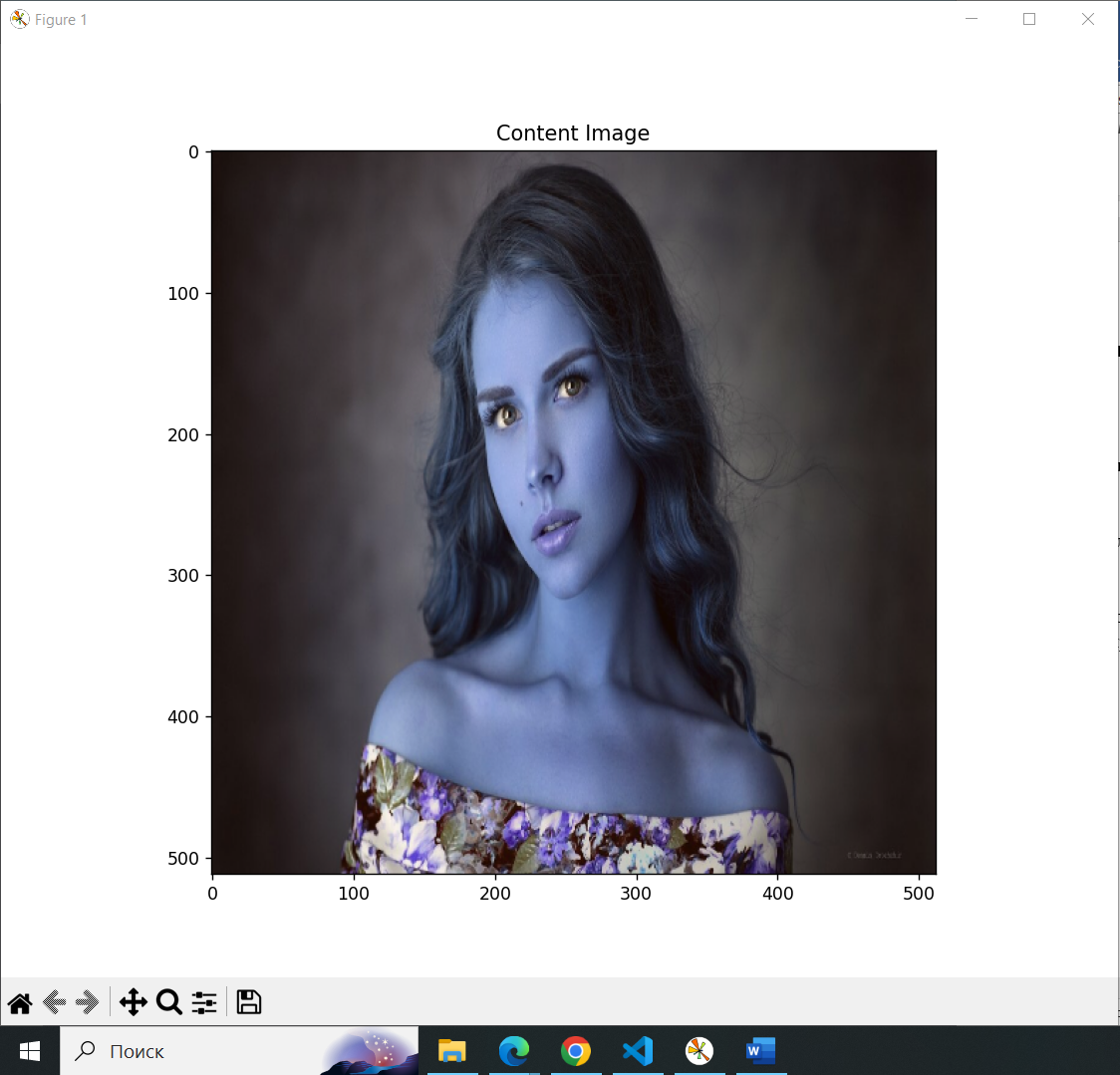

imshow(content_image, 'Content Image')

imshow(style_image, 'Style Image')

# Gram matrix: channel-to-channel correlations averaged over all spatial positions
def gram_matrix(input_tensor):
    result = tf.linalg.einsum('bijc,bijd->bcd', input_tensor, input_tensor)
    input_shape = tf.shape(input_tensor)
    num_locations = tf.cast(input_shape[1] * input_shape[2], tf.float32)
    return result / num_locations

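# Model that returns the content and style representations
def get_model():
    # VGG19 pretrained on ImageNet, without the classifier head; weights are frozen
    vgg = tf.keras.applications.VGG19(include_top=False, weights='imagenet')
    vgg.trainable = False
    # Style is taken from the first convolution of each block, content from block5_conv2
    style_layers = ['block1_conv1', 'block2_conv1', 'block3_conv1', 'block4_conv1', 'block5_conv1']
    content_outputs = [vgg.get_layer('block5_conv2').output]
    style_outputs = [vgg.get_layer(layer).output for layer in style_layers]
    # Output 0 is the content feature map, outputs 1..5 are the style feature maps
    return tf.keras.models.Model(inputs=vgg.input, outputs=content_outputs + style_outputs)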

# Content and style losses
def get_content_loss(base_content, target):
    # Mean squared difference between feature maps
    return tf.reduce_mean(tf.square(base_content - target))

def get_style_loss(style, gram_target):
    # Mean squared difference between Gram matrices
    return tf.reduce_mean(tf.square(gram_matrix(style) - gram_target))

# Total weighted loss (the gradient is taken in compute_grads())
def compute_loss(model, base_image, style_targets, content_targets, content_weight, style_weight):
    model_outputs = model(base_image)
    # Output 0 is the content layer, the remaining outputs are the style layers
    content_output, style_outputs = model_outputs[0], model_outputs[1:]
    content_loss = get_content_loss(content_output, content_targets)
    style_loss = 0
    for i, style_output in enumerate(style_outputs):
        style_loss += get_style_loss(style_output, style_targets[i])
    style_loss *= style_weight
    total_loss = content_weight * content_loss + style_loss
    return total_loss, content_loss, style_loss

# Style transfer process
def run_style_transfer(content_path, style_path, num_iterations=1000, content_weight=1e3, style_weight=1e-2):
    model = get_model()
    for layer in model.layers:
        layer.trainable = False

    content_image = load_and_preprocess_image(content_path)
    style_image = load_and_preprocess_image(style_path)

    # Targets: Gram matrices of the style image and the content feature map
    style_targets = [gram_matrix(style_layer) for style_layer in model(style_image)[1:]]
    content_targets = model(content_image)[0]

    # The optimized image starts as a copy of the content image
    base_image = tf.Variable(content_image)
    opt = tf.optimizers.Adam(learning_rate=5, beta_1=0.99, epsilon=1e-1)
    best_loss, best_img = float('inf'), None

    cfg = {
        'model': model,
        'base_image': base_image,
        'style_targets': style_targets,
        'content_targets': content_targets,
        'content_weight': content_weight,
        'style_weight': style_weight
    }

    # Valid range of preprocessed pixels per BGR channel: [0 - mean, 255 - mean]
    norm_means = np.array([103.939, 116.779, 123.68], dtype=np.float32)
    min_vals = -norm_means
    max_vals = 255 - norm_means

    def compute_grads(cfg):
        with tf.GradientTape() as tape:
            all_loss = compute_loss(**cfg)
        total_loss = all_loss[0]
        grads = tape.gradient(total_loss, cfg['base_image'])
        return grads, all_loss

    for i in range(num_iterations):
        grads, all_loss = compute_grads(cfg)
        loss, content_loss, style_loss = all_loss
        # Remember the image that produced this loss (before it is updated)
        if loss < best_loss:
            best_loss = loss
            best_img = base_image.numpy()
        opt.apply_gradients([(grads, base_image)])
        # Keep pixel values inside the valid range after each step
        base_image.assign(tf.clip_by_value(base_image, min_vals, max_vals))
        if i % 100 == 0:
            logging.info(f"Iteration {i}: Total Loss: {loss}, Content Loss: {content_loss}, Style Loss: {style_loss}")

    return best_img

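# Run the algorithm and save the final image
result_image = run_style_transfer(content_path, style_path)
result_path = os.path.join('C:\\Users', 'adriana', 'ss', 'aa11.jpg')
result_image = np.squeeze(result_image, axis=0)
# Undo VGG19 preprocessing: add the channel means back and convert BGR -> RGB
result_image = result_image + np.array([103.939, 116.779, 123.68])
result_image = np.clip(result_image[..., ::-1], 0, 255).astype('uint8')
result_image = Image.fromarray(result_image)
result_image.save(result_path)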

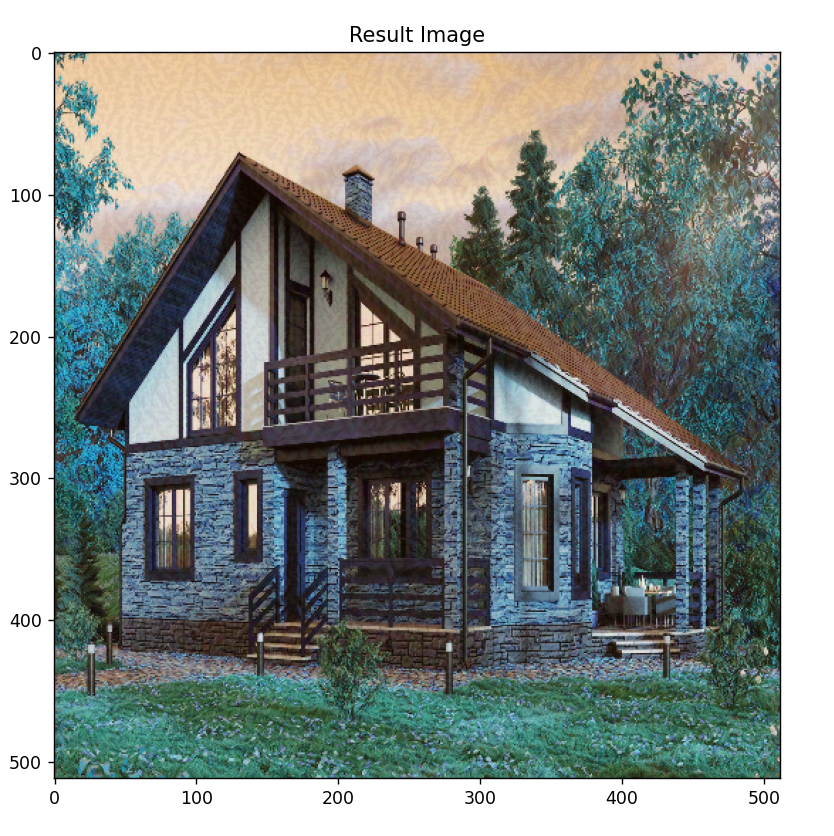

# Display the final image

plt.imshow(result_image)

plt.title('Result Image')

plt.show()
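The einsum subscripts 'bijc,bijd->bcd' in gram_matrix() sum the product of channels c and d over all spatial positions (i, j). A minimal NumPy sketch of the same loss computation makes this concrete. It runs on a hypothetical 2 × 2 feature map with 2 channels instead of real VGG19 activations; the *_np functions are illustrative stand-ins written for this example, not part of the lab code, and the weights 1e3 and 1e-2 are the defaults of run_style_transfer().

```python
import numpy as np


def gram_matrix_np(features):
    # features: (batch, H, W, C); G[b, c, d] = mean over (i, j) of F[b,i,j,c] * F[b,i,j,d]
    gram = np.einsum('bijc,bijd->bcd', features, features)
    num_locations = features.shape[1] * features.shape[2]
    return gram / num_locations


def content_loss_np(base, target):
    # Mean squared difference of feature maps, as in get_content_loss()
    return np.mean(np.square(base - target))


def style_loss_np(style_features, gram_target):
    # Mean squared difference of Gram matrices, as in get_style_loss()
    return np.mean(np.square(gram_matrix_np(style_features) - gram_target))


def total_loss_np(content_out, content_target, style_outs, style_targets,
                  content_weight=1e3, style_weight=1e-2):
    # Same weighting as compute_loss(): style terms are summed, then scaled once
    content_loss = content_loss_np(content_out, content_target)
    style_loss = sum(style_loss_np(s, g) for s, g in zip(style_outs, style_targets))
    style_loss *= style_weight
    return content_weight * content_loss + style_loss, content_loss, style_loss


# Toy input: batch 1, 2x2 positions, 2 channels
features = np.array([[[[1.0, 0.0], [0.0, 1.0]],
                      [[1.0, 1.0], [2.0, 0.0]]]])
print(gram_matrix_np(features))  # G = [[1.5, 0.25], [0.25, 0.5]]
```

For this input each entry is the average of F_c · F_d over the four positions, for example G[0, 0] = (1 + 0 + 1 + 4) / 4 = 1.5.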

Results of running the commands:

Conclusion:

Second version of the code:

import os
import numpy as np
import matplotlib.pyplot as plt
from PIL import Image
import tensorflow as tf
import logging

# Print the logging.info() progress messages (the default logging level is WARNING)
logging.basicConfig(level=logging.INFO)

# Run tf.function-decorated code eagerly; eager execution itself is already the default in TensorFlow 2.x
tf.config.run_functions_eagerly(True)

# Set display parameters
plt.rcParams['figure.figsize'] = (10, 8)
plt.rcParams['axes.grid'] = False

# Prepare data
temp_dir = 'temp_images'
os.makedirs(temp_dir, exist_ok=True)
content_path = os.path.join('C:\\Users', 'adriana', 'ss', '4.jpg')
style_path = os.path.join('C:\\Users', 'adriana', 'ss', 'aa.jpg')

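# Load and preprocess images
def load_and_preprocess_image(image_path):
    # Force 3-channel RGB (grayscale or RGBA files would not fit VGG19's input)
    img = Image.open(image_path).convert('RGB')
    img = tf.keras.preprocessing.image.img_to_array(img)
    img = tf.image.resize(img, [512, 512])
    # VGG19 preprocessing: RGB -> BGR and subtraction of the ImageNet channel means
    img = tf.keras.applications.vgg19.preprocess_input(img)
    # Add the batch dimension: (1, 512, 512, 3)
    return img[tf.newaxis, :]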

content_image = load_and_preprocess_image(content_path)

style_image = load_and_preprocess_image(style_path)

# Display the source images
def imshow(image, title=None):
    if tf.executing_eagerly():  # Changed to tf.executing_eagerly()
        # In eager mode, take the value with numpy()
        arr = tf.squeeze(image).numpy()
    else:
        # In graph (symbolic) mode, evaluate the tensor with an explicit session
        sess = tf.compat.v1.keras.backend.get_session()
        arr = tf.squeeze(image).eval(session=sess)
    # Undo VGG19 preprocessing: add the BGR channel means back and convert BGR -> RGB
    arr = arr + np.array([103.939, 116.779, 123.68])
    arr = np.clip(arr[..., ::-1], 0, 255).astype('uint8')
    plt.imshow(arr)
    if title:
        plt.title(title)
    plt.show()

imshow(content_image, 'Content Image')

imshow(style_image, 'Style Image')

# Gram matrix: channel-to-channel correlations averaged over all spatial positions
def gram_matrix(input_tensor):
    result = tf.linalg.einsum('bijc,bijd->bcd', input_tensor, input_tensor)
    input_shape = tf.shape(input_tensor)
    num_locations = tf.cast(input_shape[1] * input_shape[2], tf.float32)
    return result / num_locations

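# Get style and content representations from the model
def get_model():
    # VGG19 pretrained on ImageNet, without the classifier head; weights are frozen
    vgg = tf.keras.applications.VGG19(include_top=False, weights='imagenet')
    vgg.trainable = False
    # Style is taken from the first convolution of each block, content from block5_conv2
    style_layers = ['block1_conv1', 'block2_conv1', 'block3_conv1', 'block4_conv1', 'block5_conv1']
    content_outputs = [vgg.get_layer('block5_conv2').output]
    style_outputs = [vgg.get_layer(layer).output for layer in style_layers]
    # Output 0 is the content feature map, outputs 1..5 are the style feature maps
    return tf.keras.models.Model(inputs=vgg.input, outputs=content_outputs + style_outputs)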

# Calculate content and style losses
def get_content_loss(base_content, target):
    # Mean squared difference between feature maps
    return tf.reduce_mean(tf.square(base_content - target))

def get_style_loss(style, gram_target):
    # Mean squared difference between Gram matrices
    return tf.reduce_mean(tf.square(gram_matrix(style) - gram_target))

# Calculate the total weighted loss (the gradient is taken in compute_grads())
def compute_loss(model, base_image, style_targets, content_targets, content_weight, style_weight):
    model_outputs = model(base_image)
    # Output 0 is the content layer, the remaining outputs are the style layers
    content_output, style_outputs = model_outputs[0], model_outputs[1:]
    content_loss = get_content_loss(content_output, content_targets)
    style_loss = 0
    for i, style_output in enumerate(style_outputs):
        style_loss += get_style_loss(style_output, style_targets[i])
    style_loss *= style_weight
    total_loss = content_weight * content_loss + style_loss
    return total_loss, content_loss, style_loss

# Run style transfer process
def run_style_transfer(content_path, style_path, num_iterations=1000, content_weight=1e3, style_weight=1e-2):
    model = get_model()
    for layer in model.layers:
        layer.trainable = False

    content_image = load_and_preprocess_image(content_path)
    style_image = load_and_preprocess_image(style_path)

    # Targets: Gram matrices of the style image and the content feature map
    style_targets = [gram_matrix(style_layer) for style_layer in model(style_image)[1:]]
    content_targets = model(content_image)[0]

    # The optimized image starts as a copy of the content image
    base_image = tf.Variable(content_image)
    opt = tf.optimizers.Adam(learning_rate=5, beta_1=0.99, epsilon=1e-1)
    best_loss, best_img = float('inf'), None

    cfg = {
        'model': model,
        'base_image': base_image,
        'style_targets': style_targets,
        'content_targets': content_targets,
        'content_weight': content_weight,
        'style_weight': style_weight
    }

    # Valid range of preprocessed pixels per BGR channel: [0 - mean, 255 - mean]
    norm_means = np.array([103.939, 116.779, 123.68], dtype=np.float32)
    min_vals = -norm_means
    max_vals = 255 - norm_means

    def compute_grads(cfg):
        with tf.GradientTape() as tape:
            all_loss = compute_loss(**cfg)
        total_loss = all_loss[0]
        grads = tape.gradient(total_loss, cfg['base_image'])
        return grads, all_loss

    for i in range(num_iterations):
        grads, all_loss = compute_grads(cfg)
        loss, content_loss, style_loss = all_loss
        # Remember the image that produced this loss (before it is updated)
        if tf.math.less(loss, best_loss):
            best_loss = loss
            best_img = cfg['base_image'].numpy()
        opt.apply_gradients([(grads, cfg['base_image'])])
        # Keep pixel values inside the valid range after each step
        clipped_base = tf.clip_by_value(cfg['base_image'], min_vals, max_vals)
        cfg['base_image'].assign(clipped_base)
        if i % 100 == 0:
            logging.info(f"Iteration {i}: Total Loss: {loss}, Content Loss: {content_loss}, Style Loss: {style_loss}")

    return best_img

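# Run the algorithm and save the resulting image
result_image = run_style_transfer(content_path, style_path)
result_path = os.path.join('C:\\Users', 'adriana', 'ss', 'aa11.jpg')
result_image = np.squeeze(result_image, axis=0)
# Undo VGG19 preprocessing: add the channel means back and convert BGR -> RGB
result_image = result_image + np.array([103.939, 116.779, 123.68])
result_image = np.clip(result_image[..., ::-1], 0, 255).astype('uint8')
result_image = Image.fromarray(result_image)
result_image.save(result_path)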

# Display the resulting image

plt.imshow(result_image)

plt.title('Result Image')

plt.show()
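run_style_transfer() returns the optimized image in VGG19-preprocessed form (BGR channel order, ImageNet channel means subtracted), so it has to be converted back before it is viewed or saved. A minimal NumPy sketch of that inverse follows, assuming the "caffe"-style preprocessing that tf.keras.applications.vgg19.preprocess_input applies; preprocess_np and deprocess_np are hypothetical helpers written only for this illustration. It also shows that the clipping bounds min_vals and max_vals used in the loop cover every preprocessed pixel.

```python
import numpy as np

# ImageNet channel means in BGR order, as subtracted by VGG19 preprocessing ("caffe" mode)
VGG_MEANS_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def preprocess_np(rgb):
    # RGB -> BGR, then subtract the per-channel means
    return rgb[..., ::-1].astype(np.float32) - VGG_MEANS_BGR


def deprocess_np(x):
    # Inverse: add the means back, BGR -> RGB, round, clip to [0, 255], cast to uint8
    x = x.astype(np.float32) + VGG_MEANS_BGR
    x = x[..., ::-1]
    return np.clip(np.rint(x), 0, 255).astype(np.uint8)


# Clipping bounds as in run_style_transfer(): [0 - mean, 255 - mean] per channel
min_vals = -VGG_MEANS_BGR
max_vals = 255 - VGG_MEANS_BGR

# A 1x2 RGB test image
rgb = np.array([[[255, 0, 10], [12, 200, 34]]], dtype=np.uint8)
x = preprocess_np(rgb)
restored = deprocess_np(x)
print(restored.tolist())  # [[[255, 0, 10], [12, 200, 34]]]
```

Rounding with np.rint before the uint8 cast avoids off-by-one values caused by float32 round-off when the means are subtracted and added back.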